1. Introduction

With recent developments in technology and the growing number of available information sources, time series are among the most common types of collected data [1]. These time series can often be high dimensional, possibly correlated, and, even if they measure similar phenomena, they may differ in length [2,3,4]. Additionally, they are usually of considerable length, since data are collected regularly and often at high frequency. Such data arise in a variety of scientific fields, such as meteorology [5], medicine [6], finance [7], and epidemiology [8], which underscores the importance of analyzing them.

Over the years, time series data have been analyzed with a variety of approaches and techniques. These include, among others, curve smoothing and fitting [9,10], the identification of patterns like long-term trends, cycles [11] or seasonal variation [12], forecasting [13,14], and change point or outlier detection [15,16]. Additionally, time series data are used for intervention analysis, where the effects of significant events on the studied phenomena are identified, and for clustering, which identifies categories of data within a time series, or groups of time series, with similar characteristics [17].

Through the clustering of the available spatiotemporal data, which is the main interest of this paper, we aim to identify groups of time series, i.e., spatial units, that exhibit similar temporal patterns, rather than to model dependencies or forecast future values. This distinction is critical, as clustering prioritizes exploratory analysis of inherent structures in the data, such as seasonal or trend similarities, without requiring explicit parametric assumptions about time-dependent processes. With this approach, important patterns and/or anomalies can be discovered in the structure of the data, and valuable information can be extracted [18]. Clustering is considered an unsupervised data-mining technique that organizes data objects into groups based on their similarity. The objective is that these groups (i.e., clusters) are as dissimilar as possible, while the objects located in the same cluster are as similar as possible [19]. The identified clusters may represent groups of data points/objects, or time series observations, as in the present paper, collected by different sensors or in various locations, exhibiting similar temporal patterns and dominant features, irrespective of spatial proximity.

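In the latter case, $n$ different univariate time series, $x_1, \dots, x_n$ (or $\mathbf{x}_1, \dots, \mathbf{x}_n$ for multivariate), of possibly different lengths, i.e., with possibly different numbers of observations, are grouped in $K$ different clusters, namely, $C_1$, …, $C_K$, according to a similarity criterion. Considering that $S$ is the set of the $n$ time series, i.e., $S = \{x_1, \dots, x_n\}$, these clusters, under a hard clustering algorithm, should satisfy the following relationships:

$$C_k \neq \emptyset, \quad k = 1, \dots, K; \qquad \bigcup_{k=1}^{K} C_k = S; \qquad C_k \cap C_l = \emptyset, \quad k \neq l.$$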

Over the years, numerous applications of time series clustering have been presented in a diversity of domains, for instance, in medicine to detect brain activity based on image time series [20] or for diabetes prediction [21], and in biology and psychology to identify related genes [22] or for a deeper analysis of human behavior [23]. Concerning the climate and the environment, time series clustering has been employed to discover climate indices [24] and weather patterns [25], or to analyze the low-frequency variability of climate as in [26].

Regarding clustering algorithms, several techniques are available, although more of them target static data than time series data. More specifically, the literature on static data offers a variety of algorithm types, which can be summed up into five major categories: partitioning methods, hierarchical methods, density-based methods, grid-based methods, and model-based methods [27].

Briefly, the partitioning methods attempt to partition the objects into k distinct groups. The general idea is that a relocation method iteratively reassigns data points across clusters to optimize the cluster structure. This optimization is guided by a specific criterion, the most common choice being the within-cluster sum of squares (WCSS). An alternative way to derive the partitions is the so-called Graph-Theoretic Clustering [28]. Hierarchical methods, in contrast, define a tree-like structure that depicts the hierarchical relationship between objects [29]. More specifically, they can be divisive or agglomerative depending on the direction in which the tree is built (i.e., top–down splitting or bottom–up merging). In density-based clustering, clusters are formed based on the idea that a cluster in a data space is a contiguous region of high point density. These clusters are separated from one another by contiguous regions of low point density [30]. Grid-based algorithms divide the data space into a number of cells so that a grid structure is formed, and afterward, clusters are formed from these cells [31]. Lastly, model-based clustering is a more statistical approach. It requires a probabilistic model because data are assumed to have been generated from that model. To obtain the clustering, the parameters and components of the distribution have to be determined [32,33,34].

In contrast, time series clustering methods are scarce and, in most cases, rely on methods developed for static data. According to [19], time series clustering methods can be divided into those that are raw data based, those that are feature based, and those that are model based. Methods belonging to the first category employ the raw time series along with an appropriate distance measure. These methods can be considered analogous to those employed for static data, such as hierarchical clustering. The other two categories involve the conversion of the raw time series into a feature vector of lower dimension or into model parameters, respectively. Afterward, conventional clustering algorithms (e.g., hierarchical, partitioning, and grid based) are applied to the extracted feature vectors or model parameters.

Recently, advanced multivariate time series (MTS) clustering approaches have emerged, including LSTM-DTW hybrid models [35], as well as shape-based clustering and graph-based methods [36] that capture complex temporal dependencies. Similarly, MTS imputation has seen the development of powerful frameworks such as GAIN [37], BRITS [38], and temporal graph-based models, which address missing data by leveraging deep learning architectures and temporal graph representations. An extended review of time series clustering is presented in [18] as well as in [39], while a review of deep time series clustering is given in [40]. Recent additions to the literature on multivariate time series clustering are [41,42,43], while multivariate time series imputation techniques, a critical step since missing values are common, are discussed in [44,45].

It is also worth mentioning that most clustering algorithms rely on a properly selected distance measure. As a result, it is no surprise that the selection of a distance measure plays a profound role in time series clustering as well. According to [18], deciding on the optimal measure is a controversial issue among researchers because it generally depends on the structure of the time series, their length, and the clustering method that is employed. It also depends on the aim of clustering and, more precisely, on whether it aims to find similarity in time, shape, or change [46]. Similarity in time means that the time series vary in a similar way at each time point. Clustering based on similarity in shape aims to group time series that share common shape features, while in the third case, time series are clustered based on the manner in which they vary from time point to time point.

In the present work, a two-level clustering algorithm for time series, relying on univariate but also multivariate measurements, is proposed. The algorithm aims to identify similar patterns in different time series that may be different in length but share a common seasonal time period. More precisely, in the first level of the clustering procedure, the data of all the available time series are employed to identify groups of time series with similar seasonal and trend, if present, patterns. The raw data of each time series in each cluster identified in the first level are then used to further separate the available data. In this step, imputation methods are also employed to handle the missing values in each characteristic of the available time series [47]. These characteristics could be, for instance, measurements of precipitation levels, mean temperature levels, etc.

The proposed two-level algorithm is particularly designed for clustering time series with missing data and seasonal patterns. Unlike modeling techniques that focus on error-term dynamics (such as ARIMA or SARIMA), our approach emphasizes the extraction of dominant temporal features (seasonality and trends) and employs distance metrics specifically designed to handle time series misalignments (e.g., Dynamic Time Warping). This approach ensures that the clusters reflect temporal coherence rather than relying on spatial intuition or parametric relationships.

The rest of the paper is organized as follows. Section 2 presents the motivation behind this work along with its importance. In Section 3, the proposed methodology is presented in detail for both scenarios of univariate or multivariate time series. In Section 4, the proposed algorithm is applied, in the first place, to the precipitation data of Greece and to the mean temperature data. Afterwards, it is applied to both precipitation and mean temperature data from the same region, in order to cover the scenario of multivariate time series. The resulting clusters are derived purely from temporal features, enabling data-driven identification of meteorological regions without relying on predefined spatial or parametric assumptions. Finally, some concluding remarks are presented in Section 5.

2. Motivation

Identifying regions that share common meteorological features is of great socioeconomic and ecological importance for decision and policy making. Additionally, regions with similar meteorological characteristics enable better management of available resources like water, energy, or agricultural productivity, allowing the adoption of more efficient and sustainable policies and planning. Meteorological data, such as wind and temperature, play an essential role in renewable resources such as water. As mentioned in [13], more than 75% of global greenhouse gas emissions and almost 90% of all carbon dioxide emissions are caused by human activities, leading to a constantly changing climate environment. Thus, with the aid of renewable sources of energy, these percentages can be decreased in an attempt to stabilize climate change as much as possible and prevent its further effects on the Earth.

Under global climate change and the constantly increasing human pressures on aquatic ecosystems in terms of water quantity and quality, studying and modeling freshwater resources plays a key role in sustainable water and energy management and in efficient decision-making [48,49,50]. A key step is to recognize regions with similar meteorological characteristics, since dealing with individual weather stations is not only time-consuming but also prone to more variation than dealing with a group of homogeneous stations [51,52,53]. Individual stations are also often insufficient because of their poor coverage of an area and the missing data in their records.

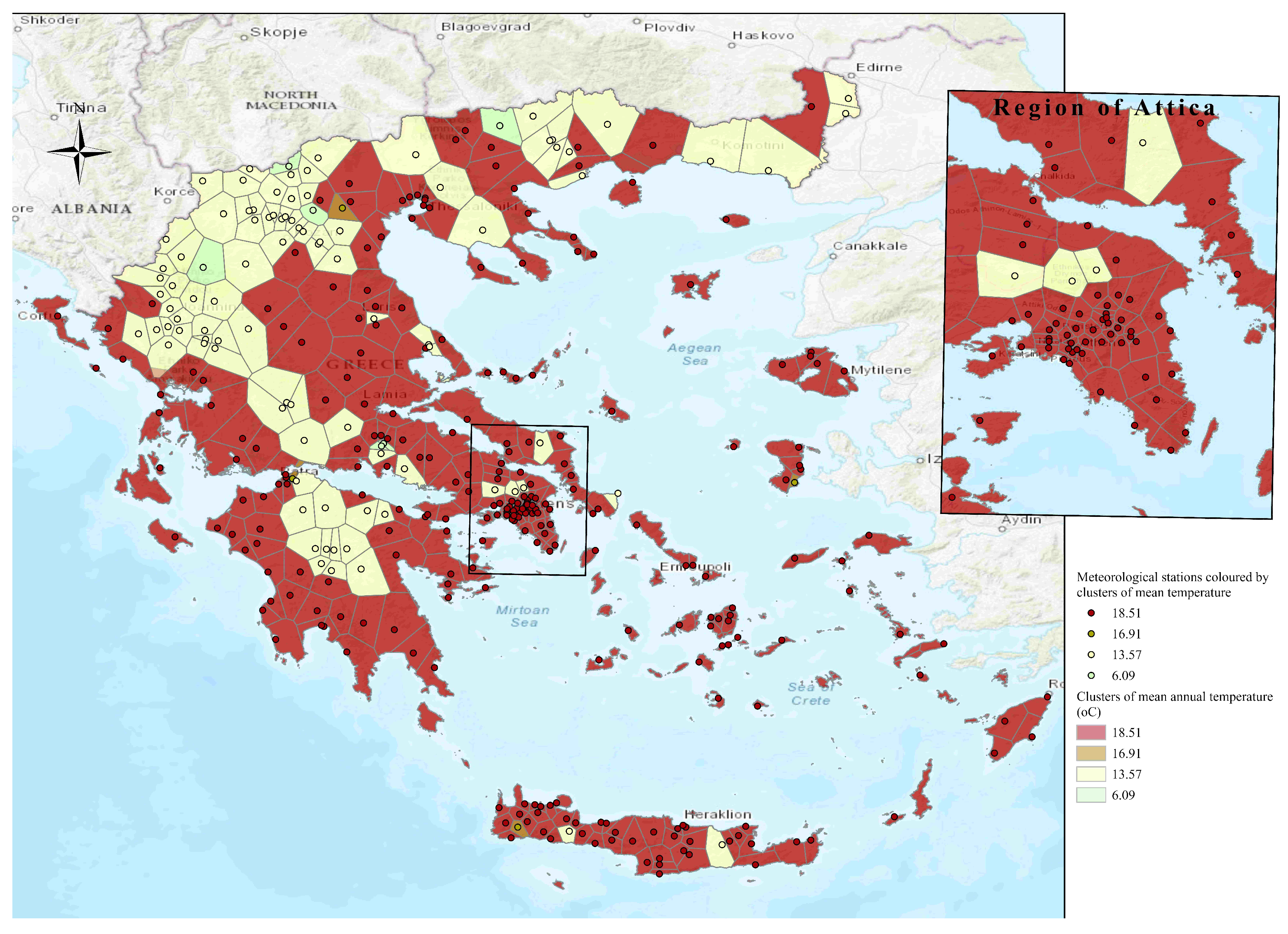

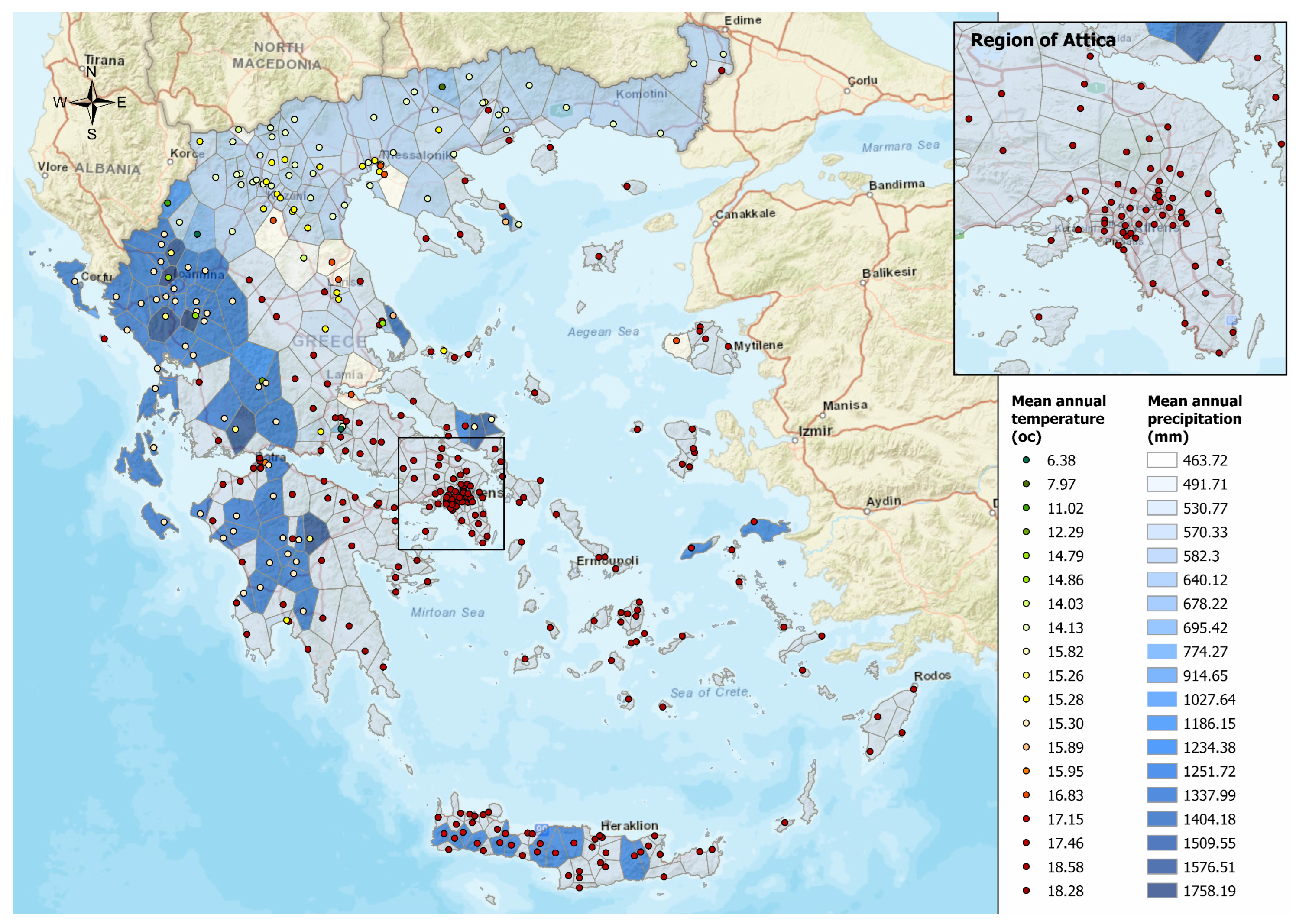

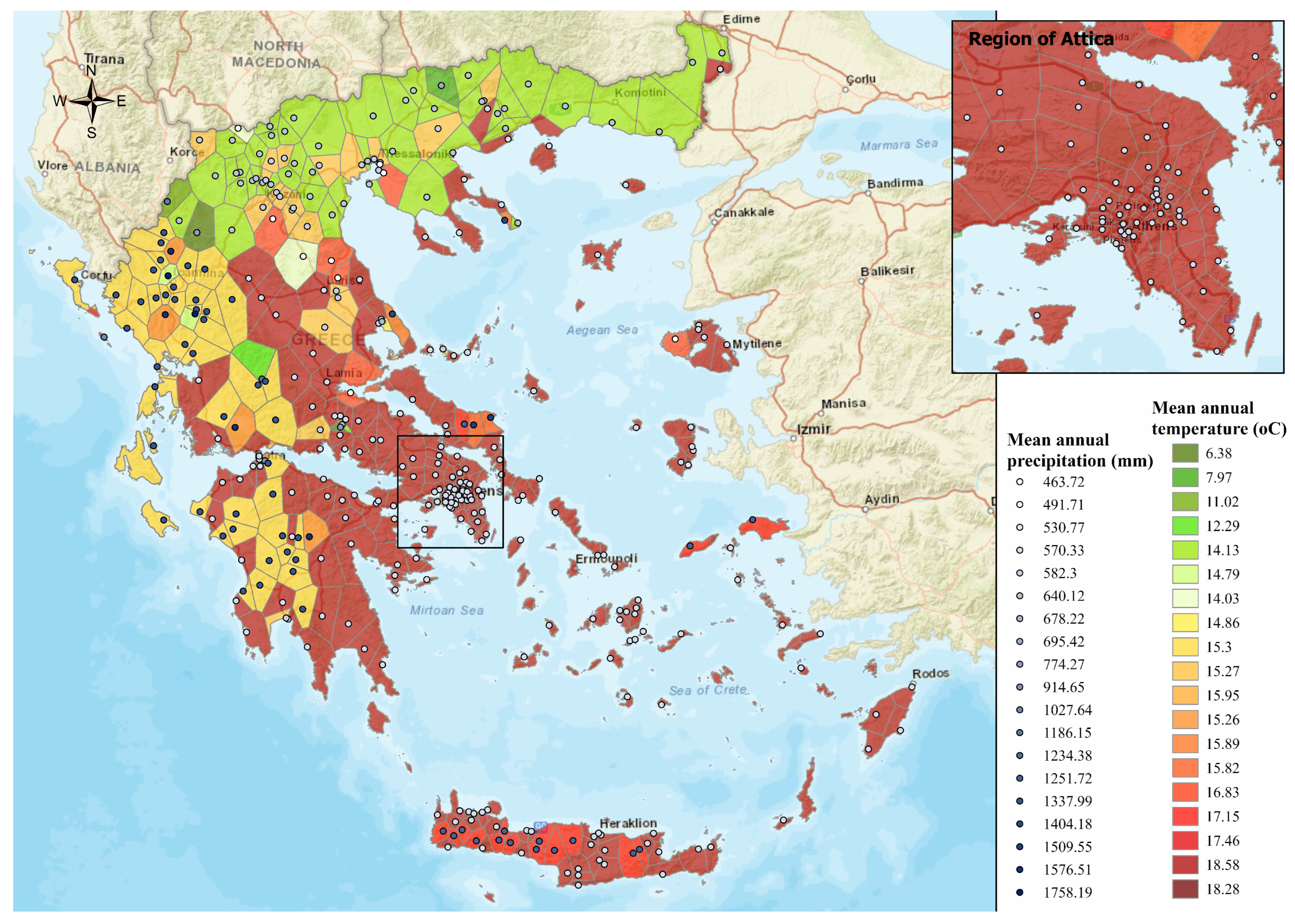

The limited coverage of meteorological stations in certain areas, along with the frequent occurrence of missing data, presents challenges that are difficult to overcome, particularly in remote regions or those with complex topography. For example, Greece’s varied and complicated topography, its long and intricate coastline, and its remarkably extensive island complex create a demanding environment to cover extensively. At the same time, the same topographic characteristics play a considerable role in the spatial distribution of precipitation and other meteorological characteristics across the country, making the adequate and reliable description of the climate and weather conditions a demanding task. Due to all these particular hydroclimatic characteristics, the division of Greece into climatologically homogeneous regions [54] (with similar precipitation and temperature characteristics), each comprising a number of meteorological stations and based on the similarity of the measured monthly precipitation or other relevant meteorological time series, is of great interest. The high altitude of several remote mountainous areas, i.e., areas with limited accessibility, and the extensive island complex result in insufficient, totally absent, or inaccurate (i.e., with many missing observations) data for many areas.

3. Methodology

In this section, a two-stage clustering technique is proposed to identify clusters of (multivariate) time series with similar patterns that share a common seasonal time period (e.g., yearly) but may be different in length (i.e., number of observations) and/or may contain different percentages of missing values. The first stage relies on the dominant features, i.e., the trend, if present, and the seasonality, of the time series while the second makes use of the raw data and the imputation of any missing value to further enhance the clustering process, providing deeper insights into the internal structure and characteristics of the data.

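In terms of notation, let $X_1, \dots, X_m$ denote the $m$ available ℓ-attribute time series (with a common period $d$ for seasonality). For each $X_i$, $i = 1, \dots, m$, there are $n_i$ available observations, a part of which may be missing. As a result, at each time point $t = 1, \dots, n_i$ for the $i$th time series, the following ℓ-dimensional observation vector is available:

$$\mathbf{x}_{i,t} = \left(x_{i,t}^{(1)}, x_{i,t}^{(2)}, \dots, x_{i,t}^{(\ell)}\right),$$

where $x_{i,t}^{(j)}$ is the $t$th value (possibly missing) of the $j$th attribute of the $i$th time series.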

It should be mentioned that the employed clustering methods were selected primarily for their interpretability and computational efficiency. The decision not to include advanced approaches for clustering or imputation was driven mainly by the computational demands of such models, which are often resource intensive and require extensive hyperparameter tuning. Additionally, a key priority of the present study was to maintain interpretability and methodological transparency, especially in the context of environmental data analysis.

3.1. Dominant Features Clustering

The first stage of clustering relies on the dominant features of the time series, i.e., the trend and the seasonal variation. The trend represents the long-term change in the values of a time series, while seasonal variation reflects regular cycles of the phenomena. In the present study, it is assumed, as already mentioned, that the available time series present a seasonal variation with a common period. On the other hand, the trend, if present, is assumed to be adequately described by a family of functions that is capable of capturing a long-term, gradual change. Such families may include polynomial, exponential, and logistic functions.

3.1.1. Extracting Dominant Features

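The trend, if present, is assumed to follow a common functional form fitted across all available time series. The estimated coefficients are stored for subsequent analysis. For instance, if the trend follows an S-shaped curve, the Pearl–Reed logistic model,

$$T_t = \frac{a}{1 + b\, e^{-c t}},$$

is used, and parameters $(a_{ij}, b_{ij}, c_{ij})$ are estimated for $i = 1, \dots, m$ and $j = 1, \dots, \ell$, i.e., separately for every attribute of every time series.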

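Several other models capture different growth dynamics. The Gompertz curve, originally developed for human mortality [55], is widely used for growth data and belongs to the Richards family of three-parameter sigmoidal models [56]:

$$T_t = a \exp\left(-b\, e^{-c t}\right),$$

where $a$ is the asymptotic upper bound, $b$ is a scaling parameter, and $c$ is a growth-rate coefficient. A more general form is the Richards Growth Model [57], which in one common parametrization can be written as

$$T_t = \frac{a}{\left(1 + b\, e^{-c t}\right)^{1/\nu}},$$

where the additional shape parameter $\nu > 0$ controls the asymmetry of the curve ($\nu = 1$ recovers the logistic model). It extends logistic and Gompertz models to accommodate more flexible growth patterns [58].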

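Simpler trends include the Linear Trend Model, based on linear regression:

$$x_t = \beta_0 + \beta_1 t + \varepsilon_t,$$

where $x_t$ is the observed value at time $t$ and $\varepsilon_t$ is a random error term, and Polynomial Trend Models, which introduce higher-order terms to capture acceleration or deceleration:

$$x_t = \beta_0 + \beta_1 t + \beta_2 t^2 + \dots + \beta_g t^g + \varepsilon_t.$$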

While applying a common trend model across all series may seem restrictive, these models provide considerable flexibility in capturing asymmetric growth, decay, and both linear and nonlinear behaviors. Moreover, long-term environmental trends are primarily influenced by climate change, which tends to exert a similar regional impact. Consequently, adopting a common family of functions may be a reasonable and effective approach.
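As an illustration of how trend coefficients might be extracted and stored for a single attribute of a single series, the following is a minimal sketch of the simplest case, the linear trend fitted by ordinary least squares. The function name `fit_linear_trend` and the convention of marking missing observations with `None` or NaN are assumptions made for this example, not part of the original procedure.

```python
import math

def fit_linear_trend(series):
    """Least-squares fit of x_t = b0 + b1 * t, skipping missing values (None/NaN)."""
    # Time is indexed 1, 2, ..., n; missing observations are dropped before fitting.
    pts = [(t, y) for t, y in enumerate(series, start=1)
           if y is not None and not math.isnan(y)]
    n = len(pts)
    if n < 2:
        raise ValueError("need at least two observed values")
    t_mean = sum(t for t, _ in pts) / n
    y_mean = sum(y for _, y in pts) / n
    sxx = sum((t - t_mean) ** 2 for t, _ in pts)
    sxy = sum((t - t_mean) * (y - y_mean) for t, y in pts)
    b1 = sxy / sxx              # slope
    b0 = y_mean - b1 * t_mean   # intercept
    return b0, b1

# Toy series following x_t = 1 + 2t with a gap at t = 3.
series = [3.0, 5.0, None, 9.0, 11.0]
b0, b1 = fit_linear_trend(series)
```

The pair `(b0, b1)` plays the role of the stored trend coefficients; nonlinear families such as the logistic or Gompertz curves would require an iterative least-squares routine instead of this closed form.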

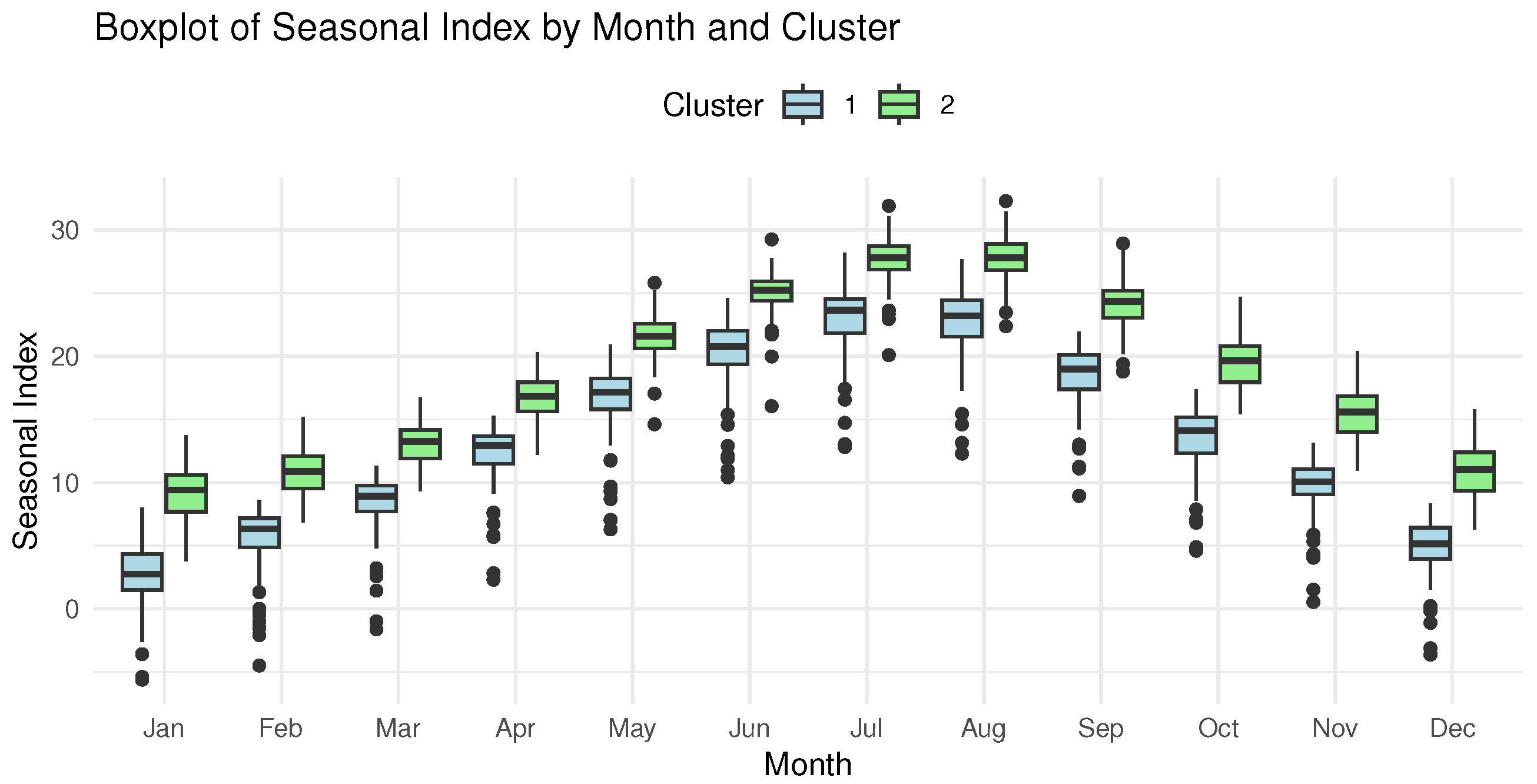

For the seasonal variation, the seasonal indices $s_{ij}^{(u)}$ for the $j$th attribute of the $i$th time series can be computed, for example, by averaging over all of the available values for the specific time period. For monthly recorded data, for instance, these seasonal indices, assuming a 12-month period, can be simply calculated by averaging over all the available values for that particular month, i.e., by calculating the following quantities:

$$s_{ij}^{(u)} = \frac{1}{\left|\mathcal{T}_{ij}^{(u)}\right|} \sum_{t \in \mathcal{T}_{ij}^{(u)}} x_{i,t}^{(j)},$$

for $u = 1, \dots, 12$, where $x_{i,t}^{(j)}$ is the value of the $j$th attribute of the $i$th time series at time $t$ and $\mathcal{T}_{ij}^{(u)}$ denotes the set of time points falling in month $u$ at which this value is observed. Each index represents one of the 12 months (i.e., $s_{ij}^{(1)}$ is the seasonal index for the measurements taken in January, and $s_{ij}^{(2)}$ in February). This procedure aggregates all available data for each month to capture recurring seasonal patterns, even if the time series has missing values or varying lengths. Again, these indices are stored and, along with the coefficients of the trend analysis, if present, are used in the following steps of the procedure.
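The averaging described above can be sketched in a few lines. This is only an illustrative sketch: the function name `seasonal_indices` is hypothetical, missing values are assumed to be encoded as `None` or NaN, and the series is assumed to start at the first seasonal position (e.g., January).

```python
import math

def seasonal_indices(series, period=12):
    """Average the observed values at each seasonal position (e.g., each month)."""
    sums = [0.0] * period
    counts = [0] * period
    for t, value in enumerate(series):
        if value is None or math.isnan(value):
            continue  # missing observations are simply skipped
        sums[t % period] += value
        counts[t % period] += 1
    # A position with no observed value at all has no index (None).
    return [s / c if c else None for s, c in zip(sums, counts)]

# Toy series with period 4, one missing value, and an incomplete last cycle.
toy = [10.0, 20.0, 30.0, 40.0,
       12.0, None, 34.0, 44.0,
       14.0, 24.0]
idx = seasonal_indices(toy, period=4)   # [12.0, 22.0, 32.0, 42.0]
```

Because each index is an average over whatever observations exist for that position, series of different lengths and with different amounts of missing data all yield a vector of the same length `period`, which is what makes them comparable in the next step.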

3.1.2. Dimensionality Reduction

The coefficients of the trend analysis, if present, and the seasonal indices of the attributes can be used to determine the distance matrix between all the available k-attribute time series. These distances can then be fed, for example, to the K-means clustering algorithm to identify time series with similar dominant features. However, applying the K-means algorithm to large, high-dimensional datasets can be challenging and less efficient than applying it to lower-dimensional data. To overcome this problem, the high-dimensional data are often first projected into a low-dimensional space, and the K-means algorithm is then applied in the reduced space [59,60,61]. Two of the most frequently used dimensionality reduction methods are principal component analysis (PCA) and Classical Multidimensional Scaling (CMDS), also known as principal coordinates analysis [62]. These two methods are closely related and are differentiated by the type of input they take.

PCA is a statistical method that transforms a set of observations of possibly correlated variables into a smaller set of linearly uncorrelated variables without losing a large amount of information. More specifically, from the $p$ original standardized variables, denoted for example as $Z_1, \dots, Z_p$ (in our case, the standardized trend coefficients, if present, and the seasonal indices extracted in the previous step), PCA creates $p$ new variables, the so-called principal components (PCs), denoted as $Y_1, \dots, Y_p$, which are linearly uncorrelated and may be written as linear combinations of the original variables. Specifically, the $j$th PC can be written in the following form:

$$Y_j = a_{j1} Z_1 + a_{j2} Z_2 + \dots + a_{jp} Z_p,$$

where $a_{ju}$ ($u = 1, \dots, p$) are appropriate weights that quantify the contribution of the $u$th original variable to the $j$th PC. For more details on PCA and its use in various applications, the reader is referred, among others, to [63,64,65].

It is of note that the principal components are constructed in such a manner that the first principal component accounts for the largest possible variance in the dataset (i.e., as much information as possible), the second principal component accounts for the next highest variance under the constraint that it is orthogonal to the preceding one, etc. As a result, a smaller number of components, compared with the original number of variables, can be retained while preserving as much information as possible. The number of principal components that are retained is usually decided by keeping those that explain a high percentage of the variance of the initial data, for example, at least 85%.
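The retention rule can be made concrete with a small sketch. It assumes that the variances of the principal components (the eigenvalues of the correlation matrix) have already been computed; the function name `n_components_to_keep` and the example values are hypothetical.

```python
def n_components_to_keep(eigenvalues, threshold=0.85):
    """Smallest number of leading PCs whose cumulative variance share >= threshold."""
    ordered = sorted(eigenvalues, reverse=True)  # PCs are ranked by variance
    total = sum(ordered)
    cumulative = 0.0
    for k, value in enumerate(ordered, start=1):
        cumulative += value
        if cumulative / total >= threshold:
            return k
    return len(ordered)

eigs = [4.0, 3.0, 2.0, 1.0]          # hypothetical PC variances
kept = n_components_to_keep(eigs)    # cumulative shares: 0.4, 0.7, 0.9 -> keep 3
```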

Classical Multidimensional Scaling is a member of the family of Multidimensional Scaling methods [66] that aims to discover the underlying structures based on distance measures between objects or cases. The input for an MDS algorithm is estimated item–item similarity (or, equivalently, dissimilarity) information, measured by the pairwise distances between every pair of points [67], while the output is a reduced-dimensional space such that the distances among the points in the new space reflect the proximities in the original data. For this reason, MDS is frequently used as a 2D or 3D data visualization technique but can also be interpreted as a dimensionality reduction technique. However, it should be noted that MDS relies on the similarities or the dissimilarities of the data points, while PCA relies on the data points themselves.

More particularly, Classical MDS attempts to find an isometry between points distributed in a higher-dimensional space and in a low-dimensional space. In short, it creates projections of the $p$-dimensional points in an $r$-dimensional linear space, with $r < p$, arranging the projections so that the Euclidean distances between pairs of them resemble the dissimilarities between the high-dimensional points. More extensively, MDS starts with a table of dissimilarities or distances, which is converted into a proximity matrix. It then creates a centered Gram matrix $G$ and computes a spectral decomposition of $G$. Finally, the appropriate number of dimensions is decided. Further information on the procedure of CMDS (and generally on MDS) can be found in the corresponding sections of [68,69,70] as well as in [71]. As noted and proven by [70], there is a duality between principal components analysis and principal coordinates analysis, i.e., classical MDS where the dissimilarities are given by the Euclidean distance.
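To make the centering step tangible, the sketch below builds the Gram matrix from a distance matrix by double centering the squared distances, $G = -\tfrac{1}{2} J D^{(2)} J$ with $J = I - \tfrac{1}{n}\mathbf{1}\mathbf{1}^{\top}$, which is the standard construction in classical MDS. The function name `gram_from_distances` is hypothetical, and the subsequent spectral decomposition of $G$ is deliberately left out, since it would normally be delegated to a linear algebra library.

```python
def gram_from_distances(dist):
    """Double-center squared distances: G = -1/2 * J * D^2 * J."""
    n = len(dist)
    sq = [[dist[i][j] ** 2 for j in range(n)] for i in range(n)]
    row_mean = [sum(row) / n for row in sq]
    col_mean = [sum(sq[i][j] for i in range(n)) / n for j in range(n)]
    grand_mean = sum(row_mean) / n
    return [[-0.5 * (sq[i][j] - row_mean[i] - col_mean[j] + grand_mean)
             for j in range(n)] for i in range(n)]

# Three points on a line at 0, 3 and 6; distances are absolute differences.
points = [0.0, 3.0, 6.0]
dist = [[abs(a - b) for b in points] for a in points]
G = gram_from_distances(dist)
```

For Euclidean input distances, `G` equals the matrix of inner products of the mean-centered points (here the centered coordinates are -3, 0 and 3), which is the link behind the duality with PCA mentioned above.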

Thus, the Euclidean distance can be applied to the dominant features of the available time series to calculate the distance matrix required as input and discover similar underlying structures. For example, if only seasonal indices are extracted from the time series, the distance between the $r$-th ($k$-attribute) seasonal index and the corresponding $q$-th one is defined as follows:

$$D(r, q) = \sqrt{\sum_{j=1}^{k} \sum_{u=1}^{d} \left(s_{rj}^{(u)} - s_{qj}^{(u)}\right)^2},$$

where $s_{rj}^{(u)}$ is the seasonal index of the $j$th attribute of the $r$th time series at seasonal position $u$, with $k$ defining the number of available attributes and $d$ the total number of distinct time points over a time period (for example, 12 for monthly measurements with yearly seasonality).
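A direct transcription of this distance is sketched below. The data layout (one list of $d$ seasonal indices per attribute) and the function name `seasonal_distance` are assumptions chosen for the illustration, and the toy values use $d = 3$ seasonal positions for brevity.

```python
import math

def seasonal_distance(profile_r, profile_q):
    """Euclidean distance over all attributes and seasonal positions.

    Each profile is a list of k lists (one per attribute), each of length d.
    """
    total = 0.0
    for attr_r, attr_q in zip(profile_r, profile_q):
        for s_r, s_q in zip(attr_r, attr_q):
            total += (s_r - s_q) ** 2
    return math.sqrt(total)

# Two hypothetical stations with k = 2 attributes and d = 3 seasonal positions.
station_r = [[1.0, 2.0, 3.0], [10.0, 10.0, 10.0]]
station_q = [[1.0, 2.0, 6.0], [10.0, 14.0, 10.0]]
d_rq = seasonal_distance(station_r, station_q)   # sqrt(3^2 + 4^2) = 5.0
```

In practice the attributes would typically be standardized first, since otherwise an attribute measured on a larger scale dominates the sum.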

Regarding the choice between the two aforementioned dimensionality reduction techniques, it is of note that the PCA can be adopted for the case that single-attribute time series and their dominant features are under study. This is because PCA projects data points on the most advantageous subspace, retaining the majority of the information in the first PCs. On the other hand, MDS can be used when a larger number of attributes are available for each time series in order to maintain, as much as possible, the relative distances between the points and take into account the similarities or the dissimilarities for each attribute themselves and not in projected space.

3.1.3. Clustering Algorithm

The final step of the first level of the proposed algorithm is to perform the cluster analysis. The K-means clustering algorithm was selected for that reason. It is considered one of the most common clustering algorithms (also applicable to time series data), which aims to create clusters of the original data by splitting them into groups. It is a centroid-based clustering algorithm that has its origins in signal processing. In K-means, clusters are represented by their center (the so-called centroid), which corresponds to the arithmetic mean of data points assigned to the cluster, meaning that it is not necessarily a member of the dataset. Every observation of the dataset is assigned to a cluster by reducing the within-cluster sum of squares [19].

In this work, the K-means clustering algorithm is performed by using the principal components selected as mentioned (i.e., selecting those explaining at least 85% of the variance of the initial data) concerning the univariate case, or the matrix with w columns whose rows give the coordinates of the points chosen to represent the dissimilarities (multivariate case). This procedure reveals the (first level) clusters based on the dominant features of the time series, i.e., the trend, if present, and the seasonal variation.
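For readers less familiar with K-means, the following is a minimal sketch of the Lloyd iterations applied to low-dimensional coordinates such as retained principal components or CMDS coordinates. It is not the implementation used in this work: the starting centroids are passed in explicitly to keep the example deterministic, and the names `kmeans` and `wcss` are hypothetical.

```python
def kmeans(points, centroids, max_iter=100):
    """Plain Lloyd iterations from the given starting centroids."""
    centroids = [list(c) for c in centroids]
    labels = None
    for _ in range(max_iter):
        # Assignment step: nearest centroid in squared Euclidean distance.
        new_labels = [
            min(range(len(centroids)),
                key=lambda c: sum((x - m) ** 2 for x, m in zip(p, centroids[c])))
            for p in points
        ]
        if new_labels == labels:
            break  # assignments are stable, so the centroids are stable too
        labels = new_labels
        # Update step: each centroid becomes the mean of its members.
        for c in range(len(centroids)):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # an empty cluster keeps its previous centroid
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels, centroids

def wcss(points, labels, centroids):
    """Within-cluster sum of squares of a given partition."""
    return sum(sum((x - m) ** 2 for x, m in zip(p, centroids[lab]))
               for p, lab in zip(points, labels))

# Two well-separated groups of 2-D coordinates (hypothetical reduced-space points).
pts = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (10.0, 10.0), (10.0, 11.0), (11.0, 10.0)]
labels, centers = kmeans(pts, centroids=[pts[0], pts[3]])
total_wcss = wcss(pts, labels, centers)
```

Note that the centroids returned here are means of the members and therefore need not coincide with any observed point, as stated above.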

The $w$ columns forming the matrix that is used as input to the K-means algorithm are derived by first finding the appropriate number of dimensions of the lower-dimensional subspace. The optimal number of dimensions can be derived from the stress values. The stress function measures the discrepancy between the original distances and the distances in the reduced space. According to [72], it is reasonable to choose a number of dimensions that makes the stress acceptably small and for which a further increase in the number of dimensions does not significantly reduce the stress. It is also mentioned that a stress value of 0.05 can be considered a good fit and a value of 0.025 an excellent one. Another frequently used approach is the examination of the scree plot, which depicts the eigenvalues against the number of dimensions considered. Then, the well-known “elbow” criterion is used to find the appropriate number of dimensions. Usually, the lower-dimensional space ends up being 2-dimensional or 3-dimensional, and thus a matrix with the coordinates in the reduced space (dimension 1 in column 1, dimension 2 in column 2, etc.) is formed.

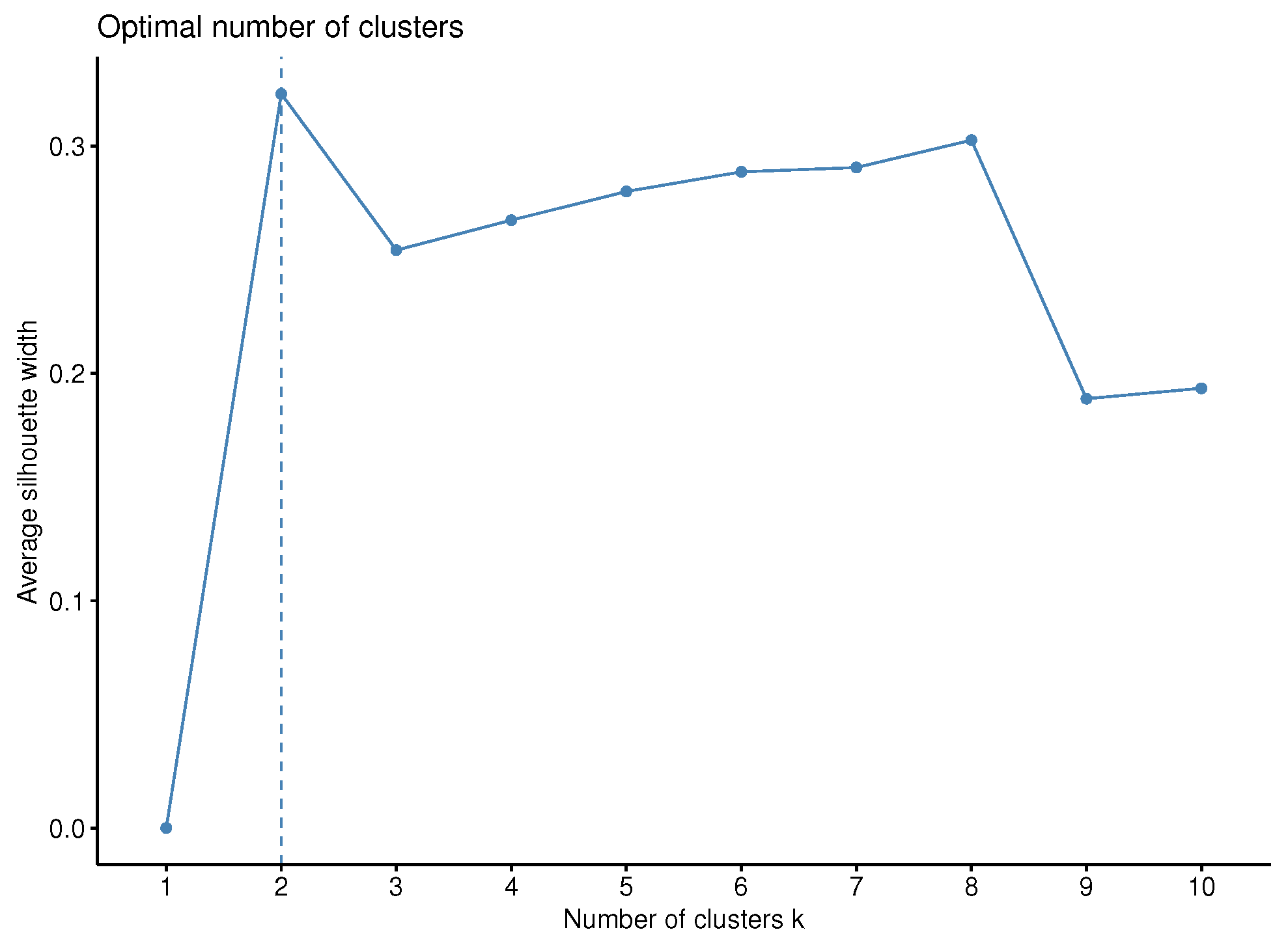

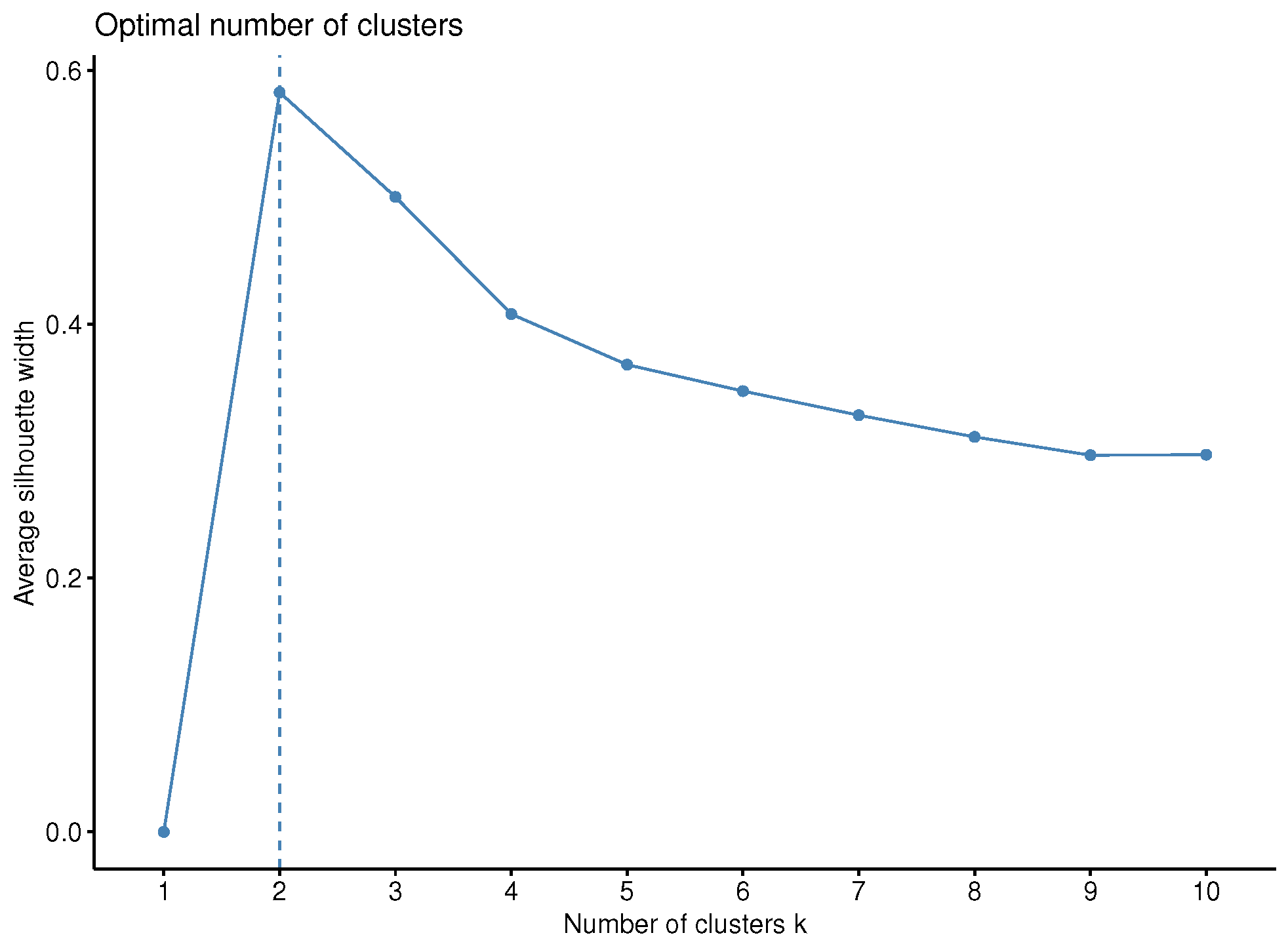

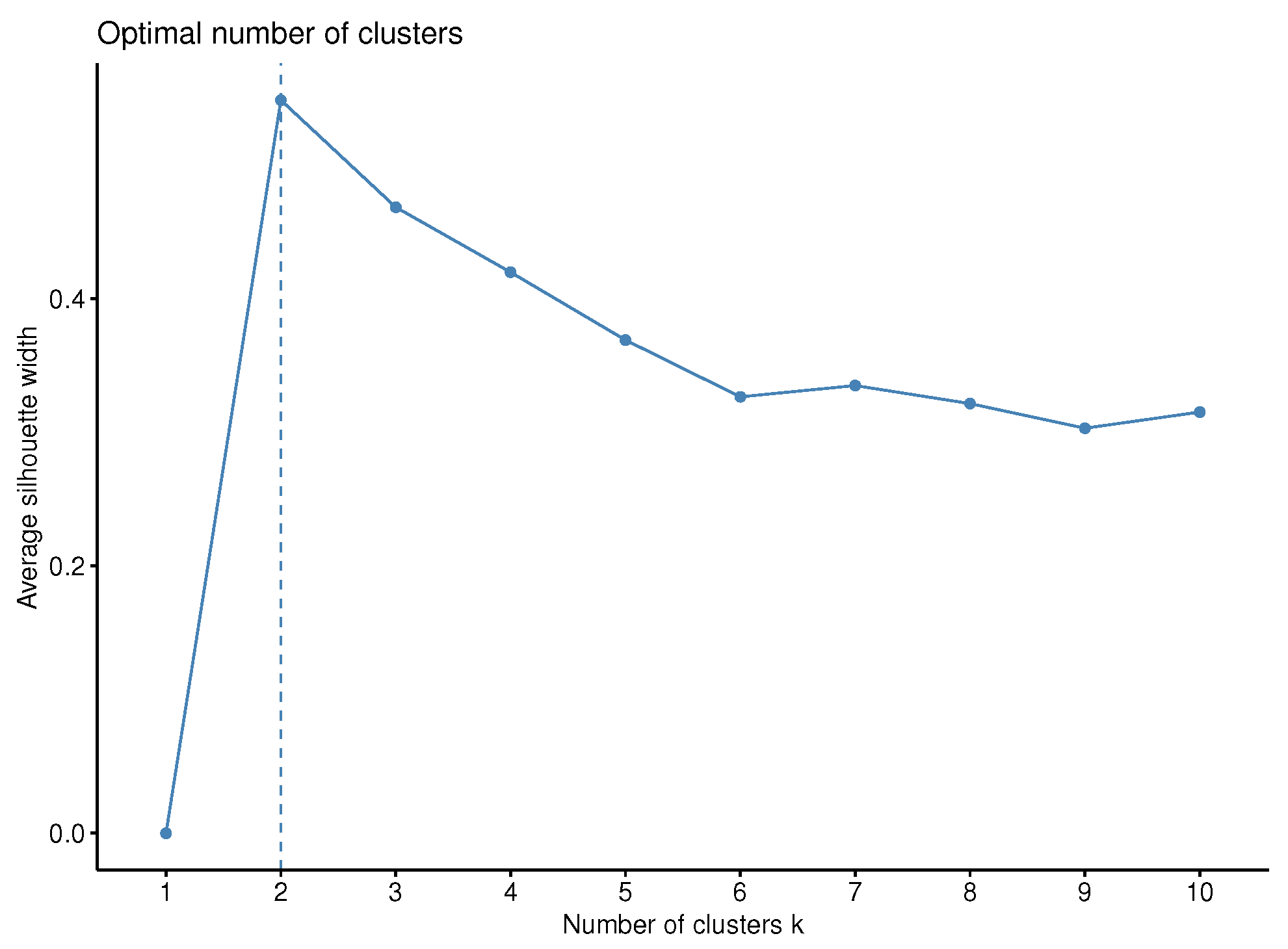

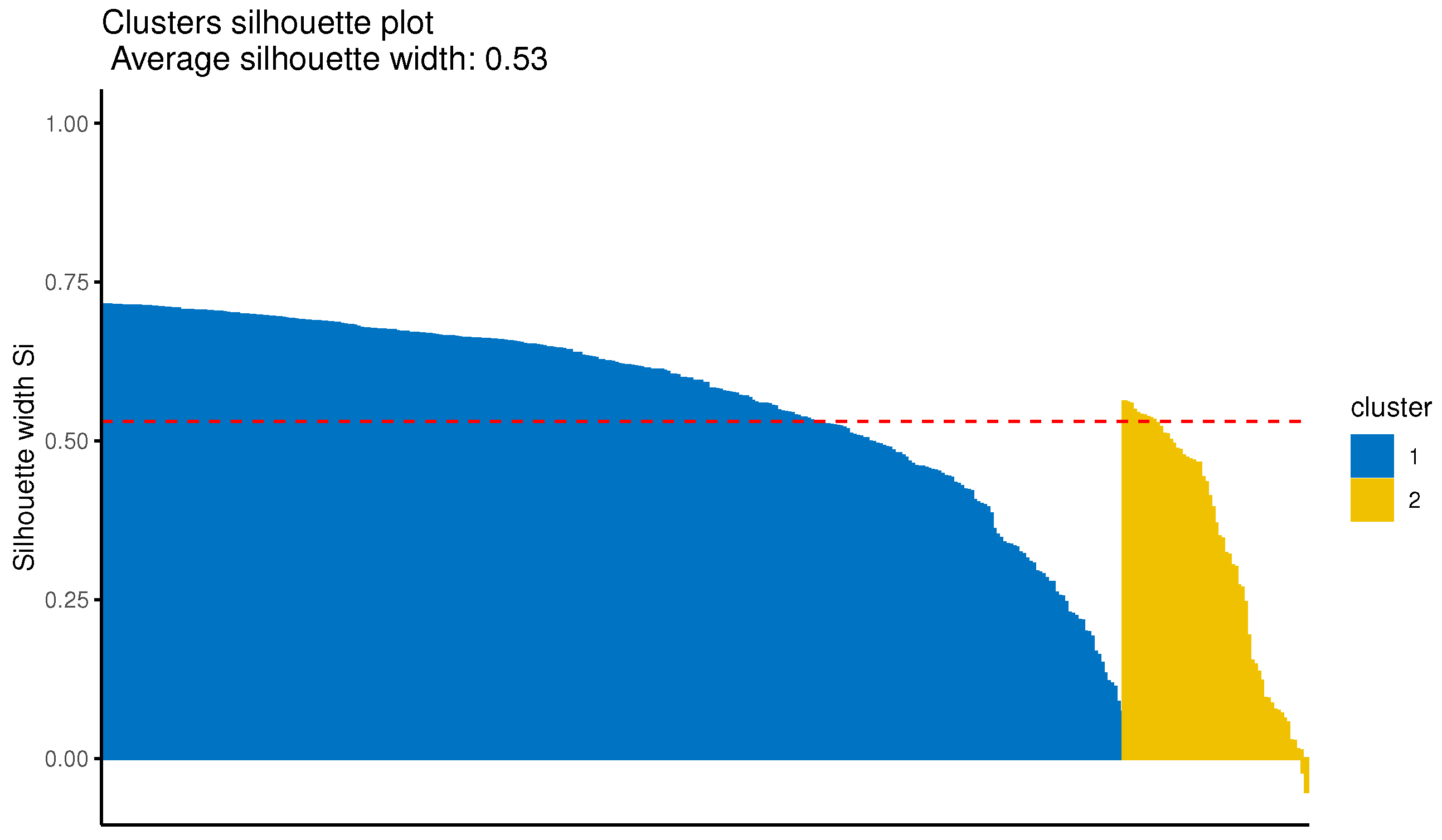

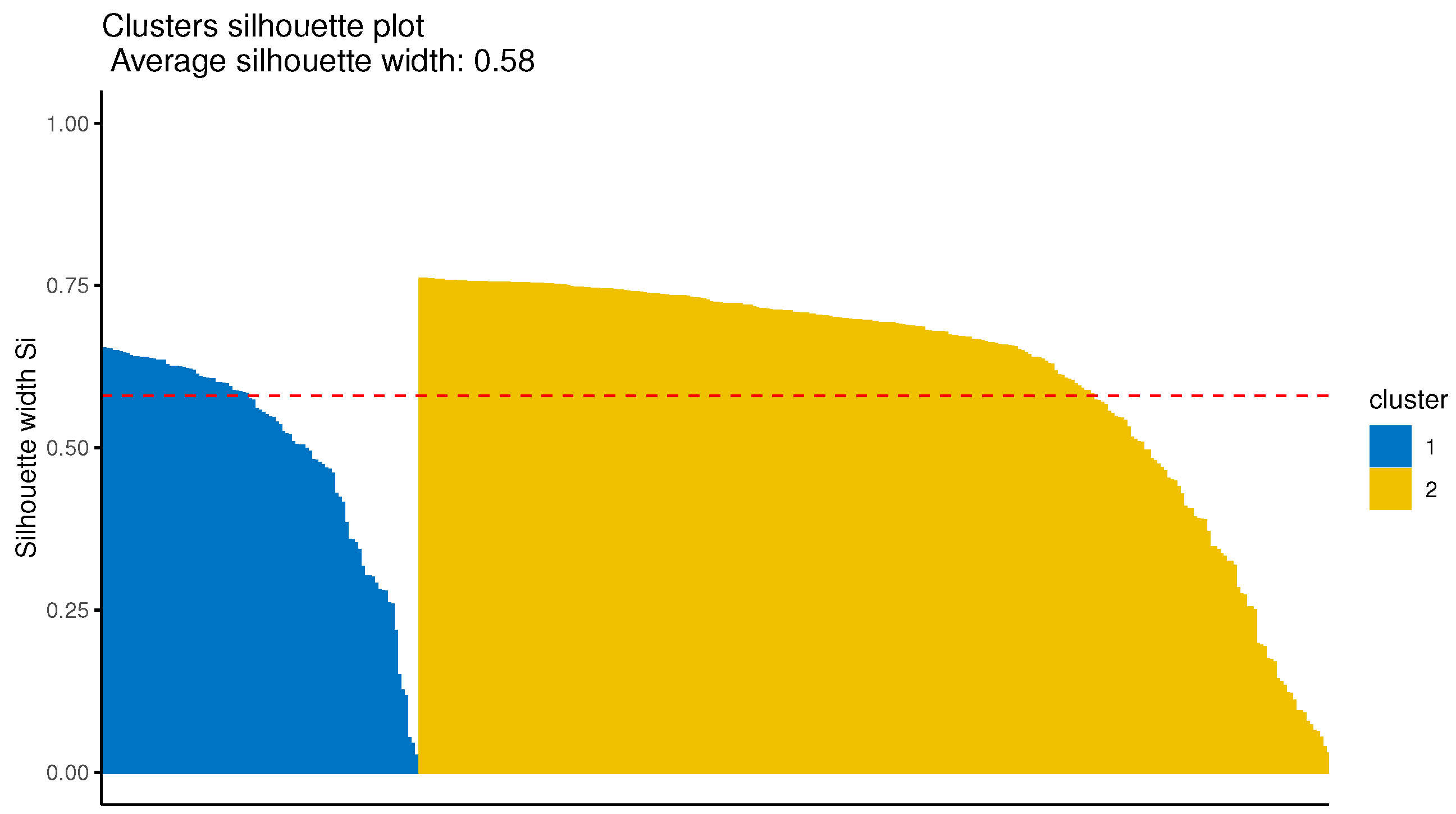

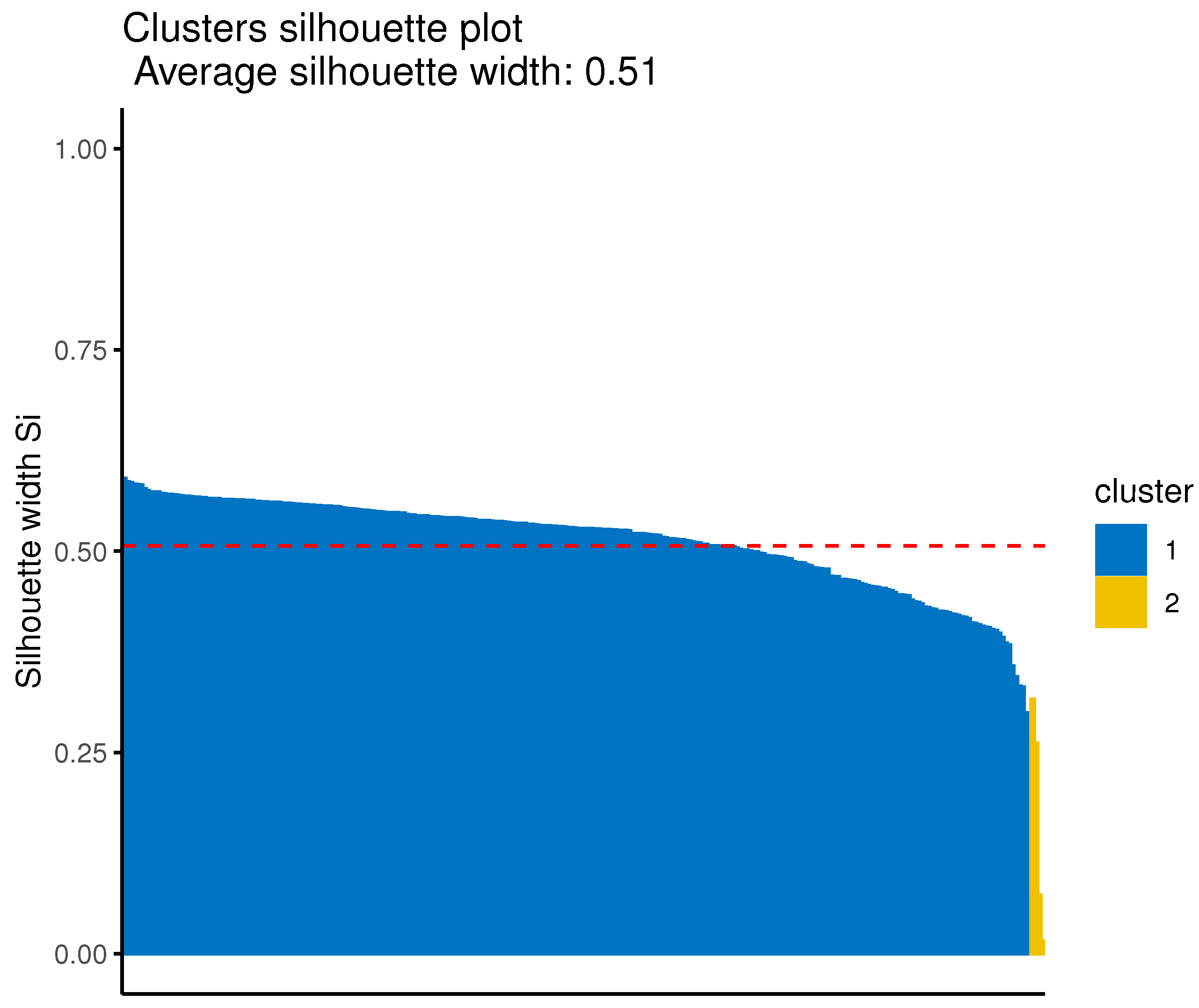

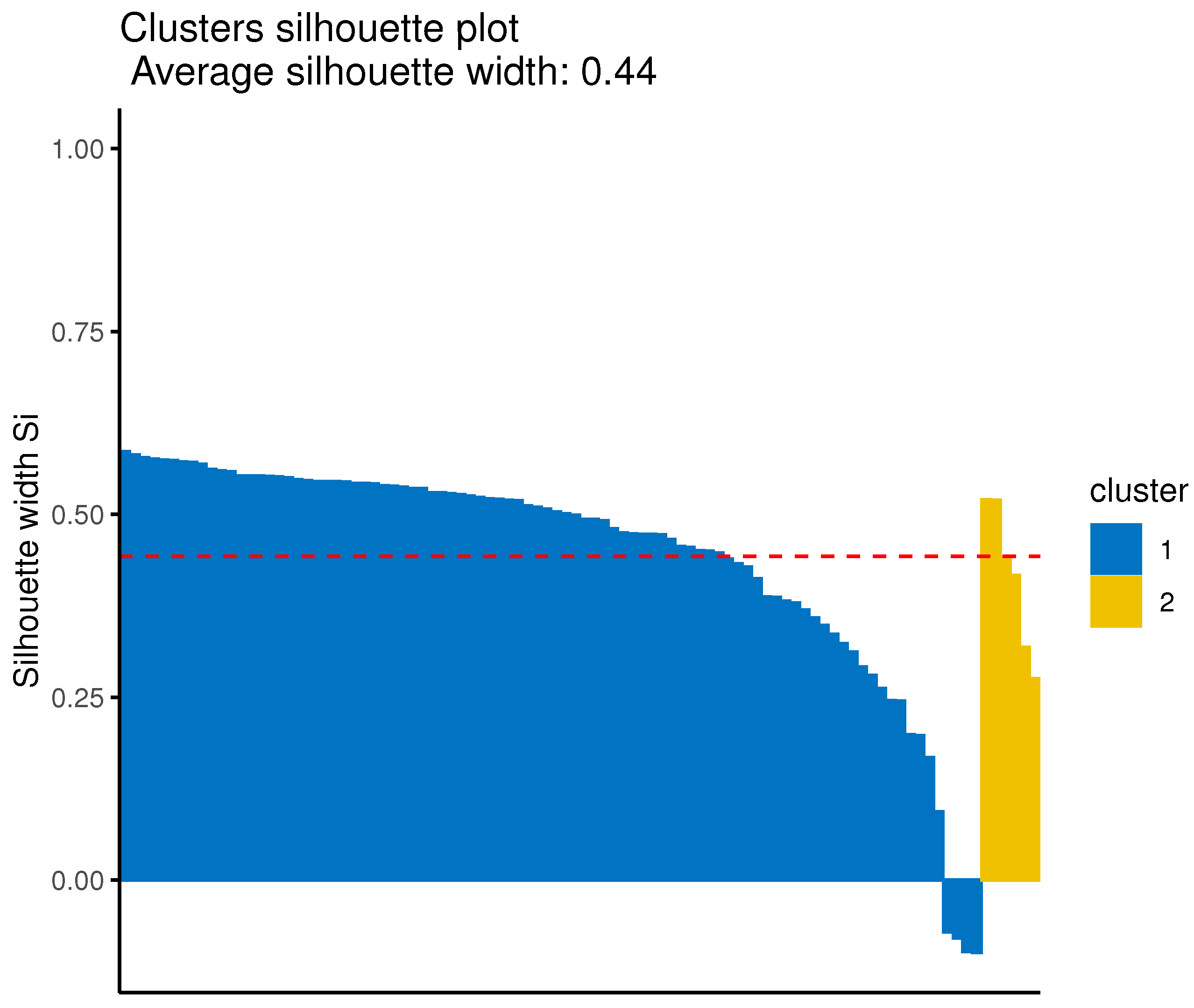

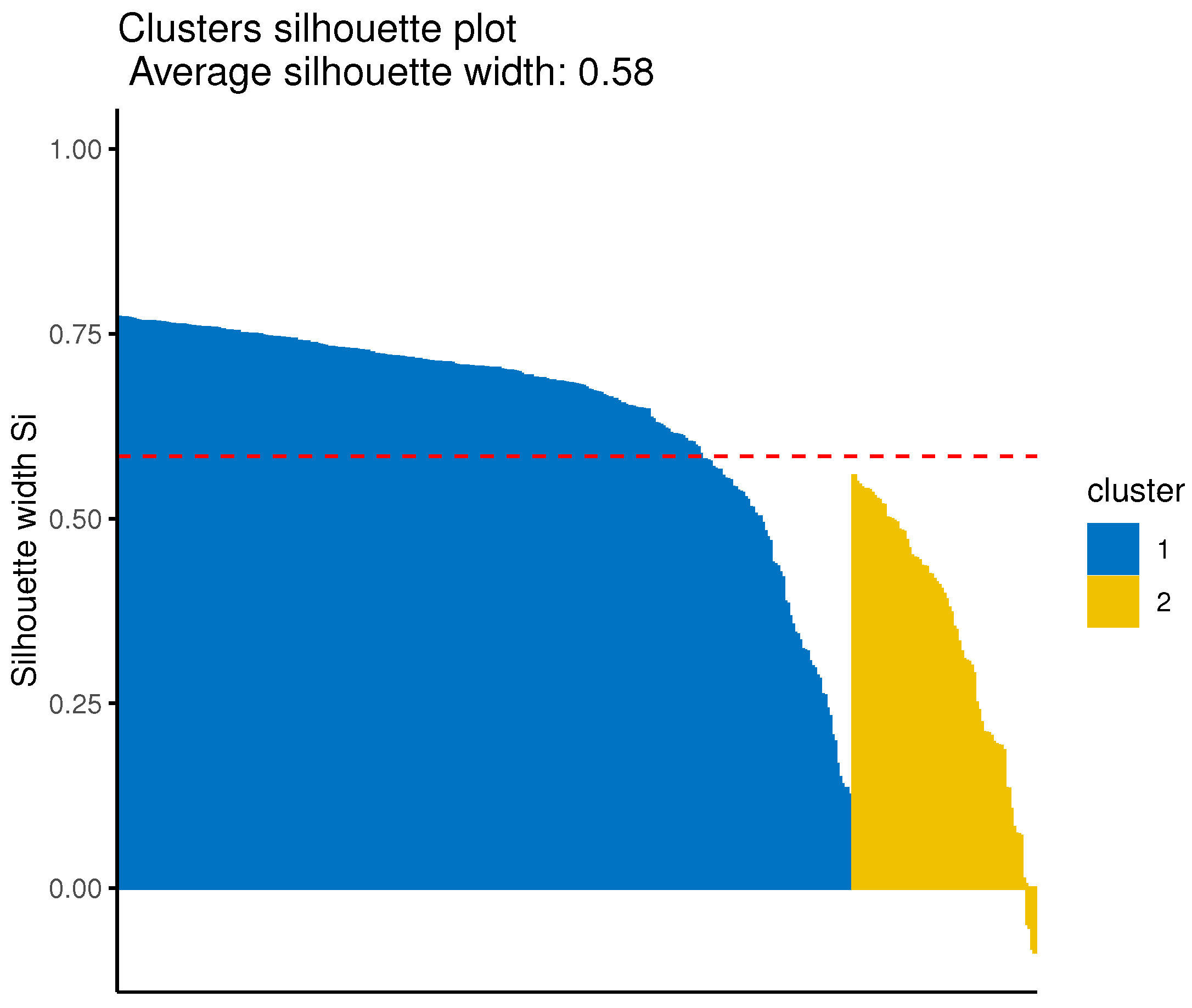

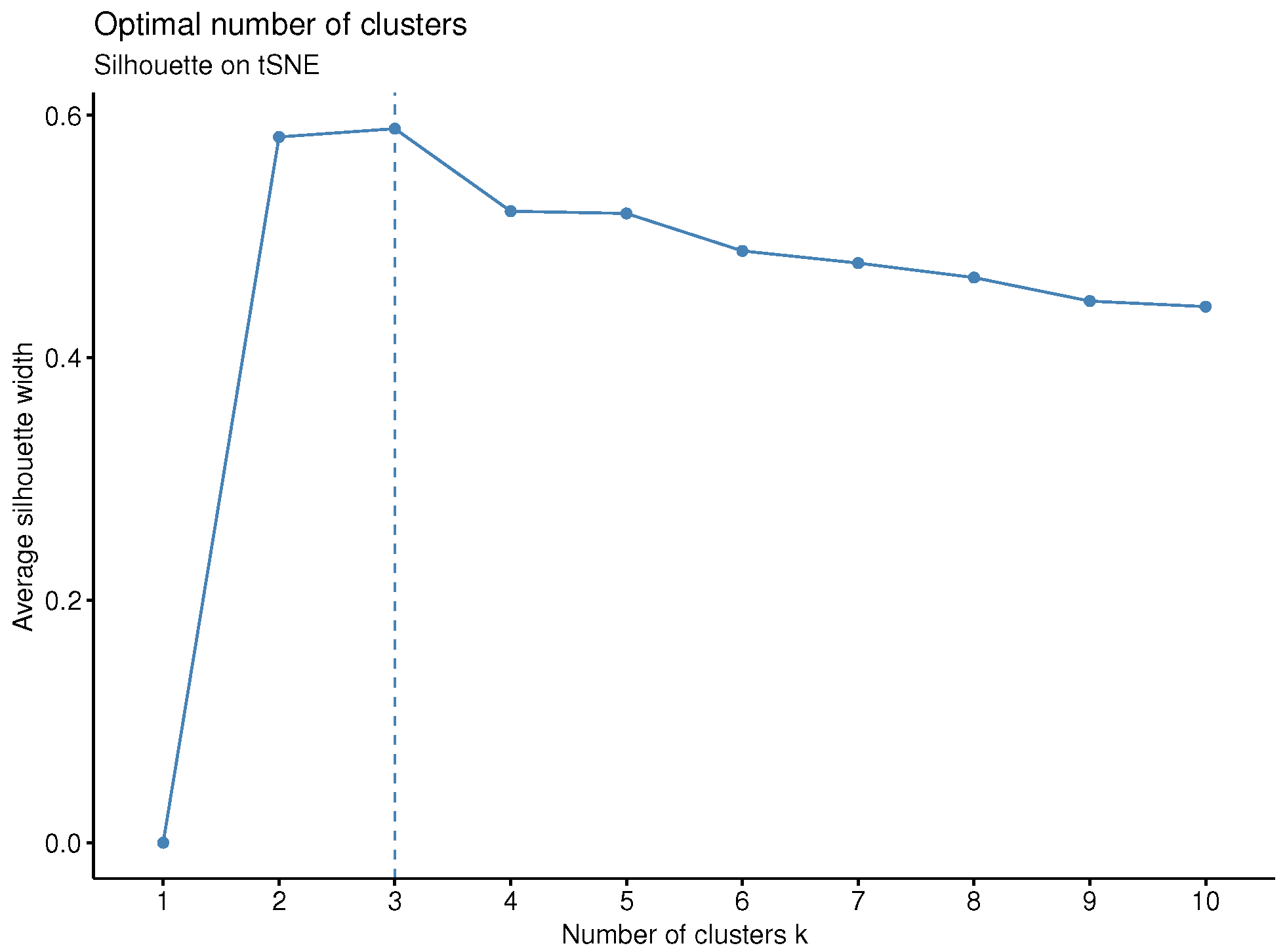

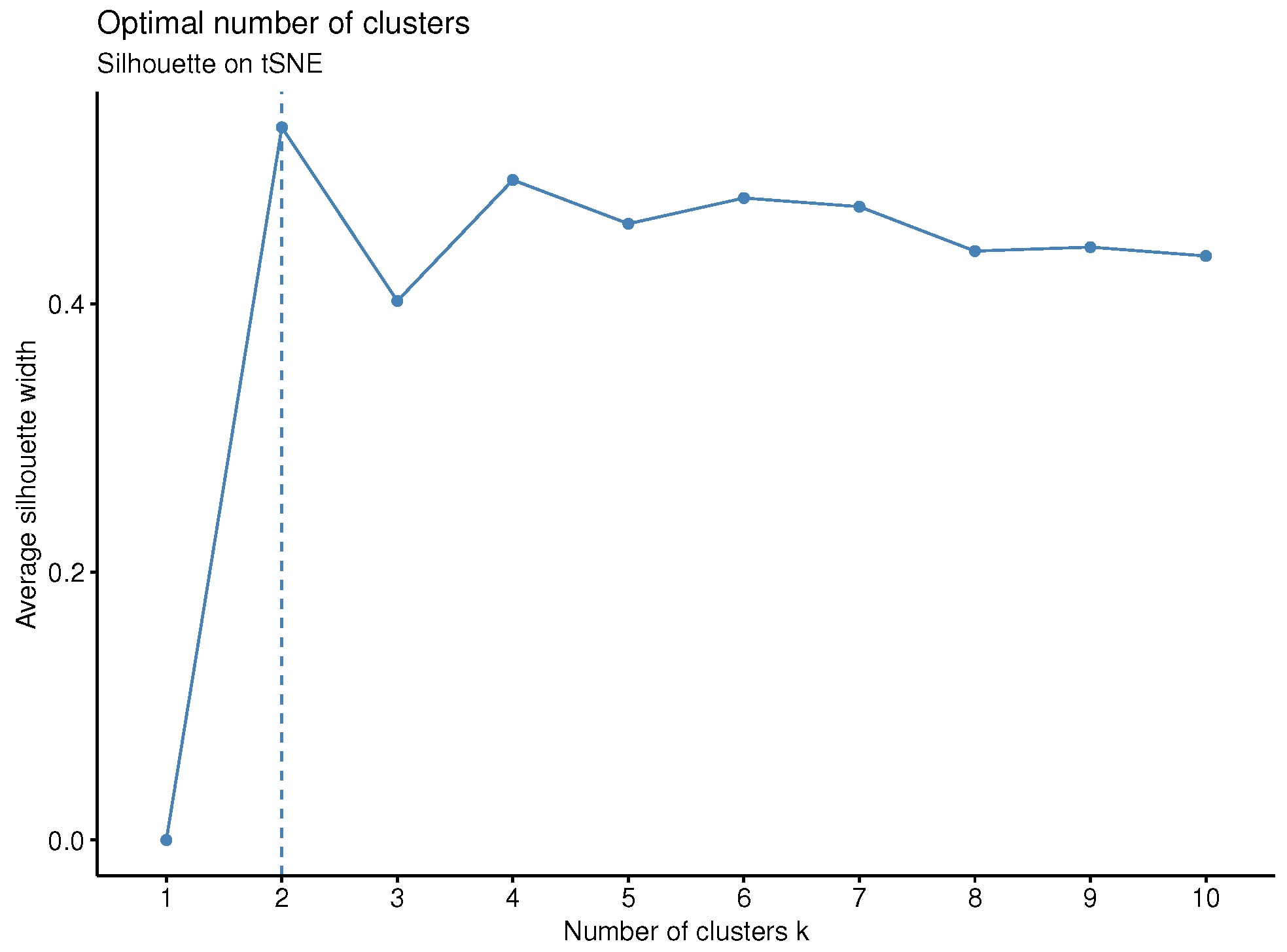

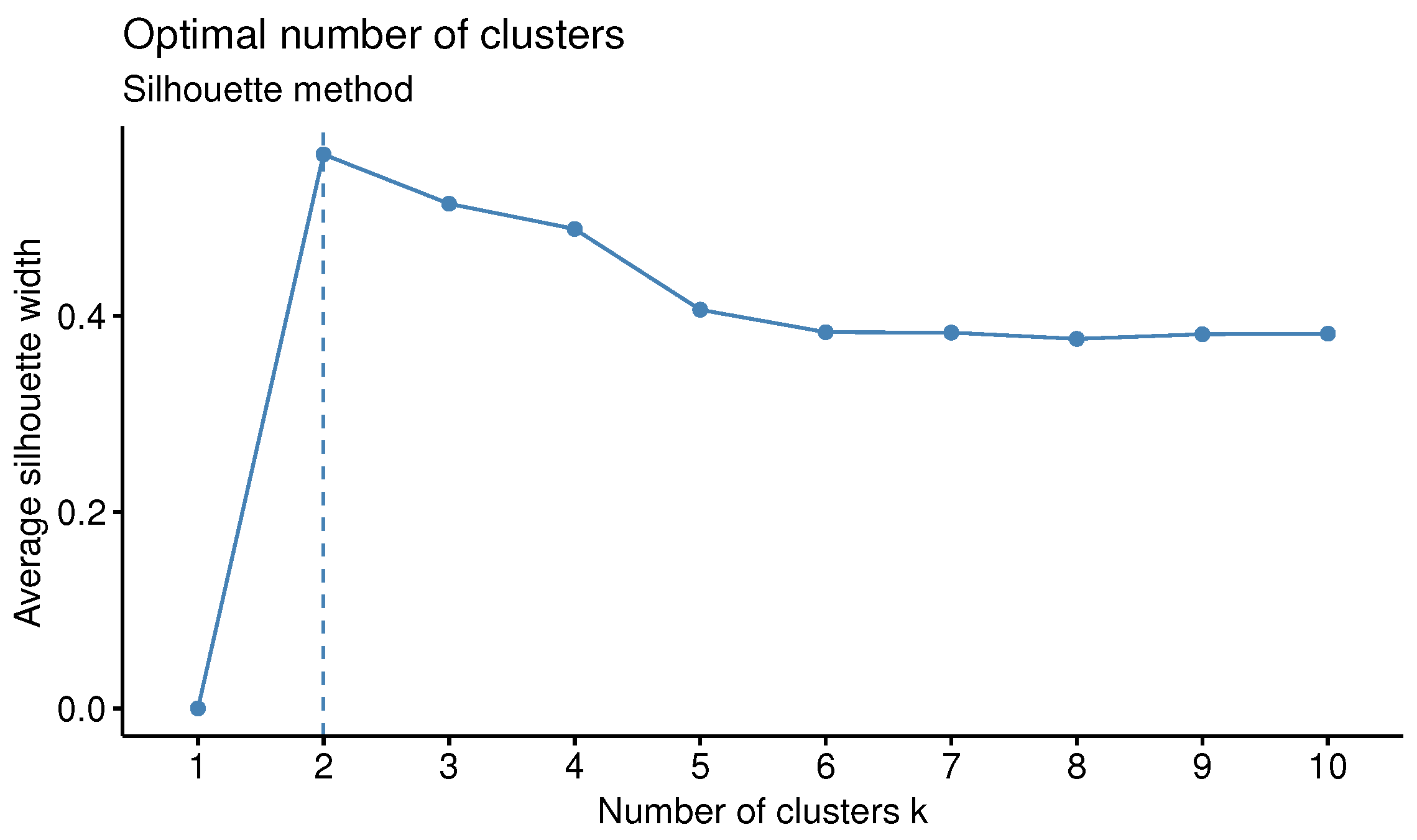

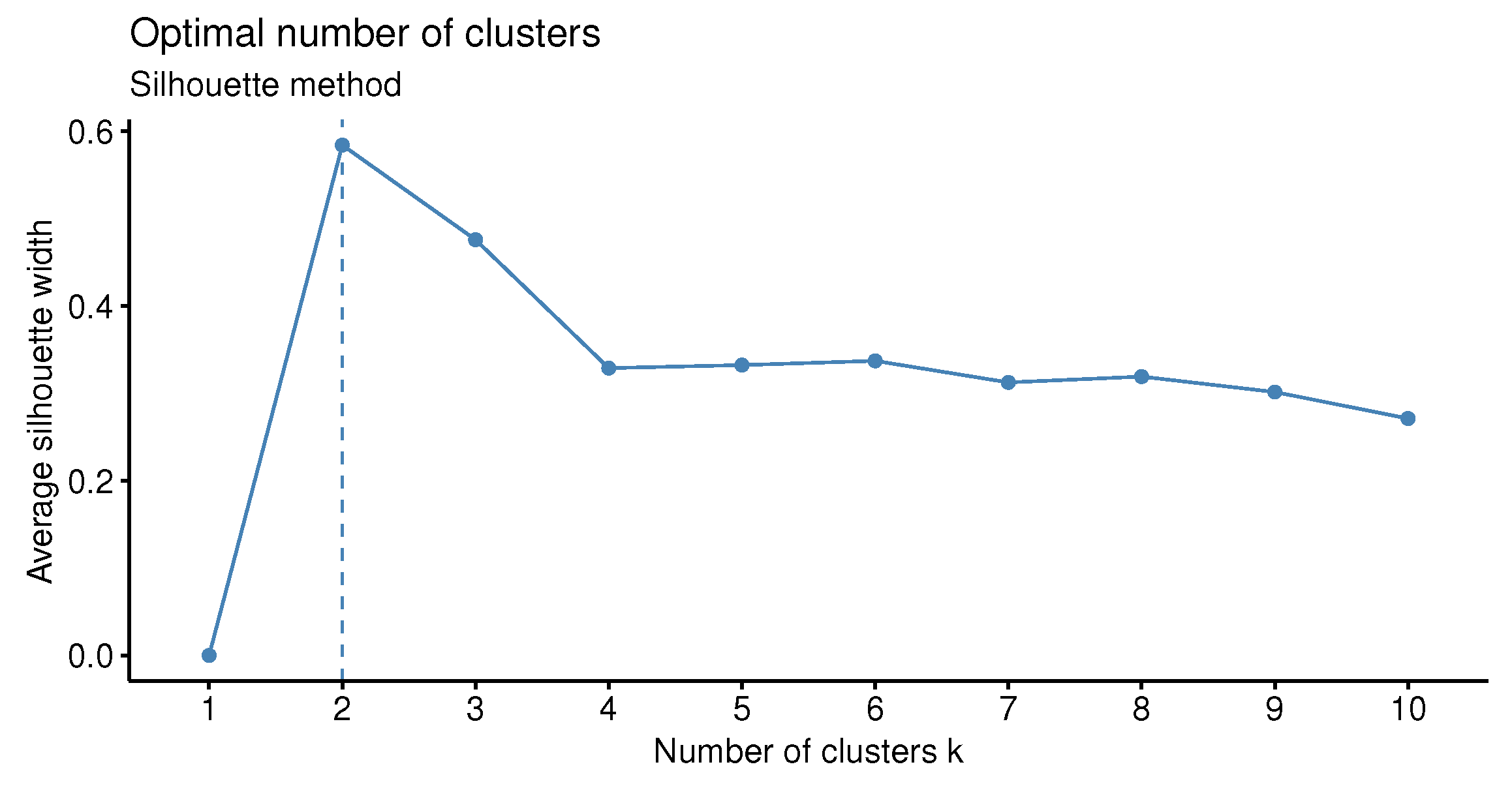

Lastly, the optimal number of clusters is determined by the average silhouette criterion [73] in both the univariate and the multivariate case. To make the choice more robust, the Davies–Bouldin index was computed as well. The gap statistic [74] or the total within-cluster sum of squares could also be adopted as alternatives.
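The average silhouette criterion can be sketched as follows for points in a Euclidean space. This is an illustrative sketch only: the function name `average_silhouette` is hypothetical, at least two clusters are assumed, and points in singleton clusters are given a silhouette of 0, which is one common convention.

```python
import math

def average_silhouette(points, labels):
    """Mean silhouette width; points in singleton clusters score 0."""
    def dist(a, b):
        return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

    clusters = sorted(set(labels))
    scores = []
    for i, p in enumerate(points):
        own = [q for j, q in enumerate(points) if labels[j] == labels[i] and j != i]
        if not own:
            scores.append(0.0)
            continue
        # a: mean distance to the other members of the point's own cluster.
        a = sum(dist(p, q) for q in own) / len(own)
        # b: smallest mean distance to the members of any other cluster.
        b = min(
            sum(dist(p, q) for q, lab in zip(points, labels) if lab == c)
            / labels.count(c)
            for c in clusters if c != labels[i]
        )
        scores.append((b - a) / max(a, b))
    return sum(scores) / len(scores)

pts = [(0.0,), (1.0,), (10.0,), (11.0,)]
good = average_silhouette(pts, [0, 0, 1, 1])   # natural grouping, close to 1
bad = average_silhouette(pts, [0, 1, 0, 1])    # mixed grouping, negative
```

Selecting the number of clusters then amounts to running the clustering for several candidate values and keeping the one with the largest average silhouette width.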

3.2. Secondary Features Clustering

The second level of clustering consists of two steps and relies on the use of the available raw data. Initially, an imputation technique is applied to fill in the missing values in the raw data, and afterwards, one more level of clustering is applied within each of the clusters resulting from the first level. In the following subsections, details of these two steps are provided.

3.2.1. Imputation Technique

While no special handling or imputation of missing values is applied during the first stage of clustering, this does not hold for the second level. Missing-value imputation is crucial for the second-level clustering because Dynamic Time Warping, which is employed at that level, does not inherently handle missing data. For this purpose, the “Seasonally Splitted Missing Value Imputation” (SSMVI) method is suggested for imputing the missing values in the raw time series. This method is included in the imputeTS package on CRAN, a package that specializes in time series imputation and includes several algorithms [75].

The SSMVI method takes into account the seasonality of the time series during imputation of the missing values. In particular, SSMVI initially divides the time series into seasons, which can be considered a preprocessing step, and then performs imputation separately for each of the resulting time series datasets. The missing values within each season are estimated using interpolation, i.e., by estimating the missing values based on their surrounding points. In this way, the unique characteristics of each season are preserved.
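The idea of splitting by season before interpolating can be sketched as below. This is a simplified illustration of the principle and not the imputeTS implementation: the function names are hypothetical, missing values are assumed to be encoded as `None` or NaN, linear interpolation is used within each seasonal sub-series, and gaps at the edges simply copy the nearest observed value.

```python
import math

def interpolate_linear(values):
    """Fill None/NaN by linear interpolation; edges copy the nearest observed value."""
    known = [(i, v) for i, v in enumerate(values)
             if v is not None and not math.isnan(v)]
    if not known:
        return list(values)  # nothing to interpolate from
    filled = list(values)
    for i in range(len(values)):
        if filled[i] is not None and not math.isnan(filled[i]):
            continue
        left = [(j, v) for j, v in known if j < i]
        right = [(j, v) for j, v in known if j > i]
        if left and right:
            (j0, v0), (j1, v1) = left[-1], right[0]
            filled[i] = v0 + (v1 - v0) * (i - j0) / (j1 - j0)
        else:
            filled[i] = (left[-1] if left else right[0])[1]
    return filled

def seasonal_split_impute(series, period):
    """Impute each seasonal position (e.g., all Januaries) separately."""
    result = list(series)
    for pos in range(period):
        sub = series[pos::period]            # sub-series of one season
        result[pos::period] = interpolate_linear(sub)
    return result

# Period 4: the missing value sits at seasonal position 1 of the second cycle.
toy = [10.0, 20.0, 30.0, 40.0,
       12.0, None, 34.0, 44.0,
       14.0, 28.0, 38.0, 48.0]
imputed = seasonal_split_impute(toy, period=4)   # the gap is filled with 24.0
```

The gap is filled from the values at the same seasonal position in the neighboring cycles (20 and 28) rather than from its immediate neighbors in time (12 and 34), which is how the seasonal level is preserved.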

3.2.2. Clustering Algorithm

Following the first level of clustering and the imputation, the resulting time series objects are grouped according to the clusters identified at the first level. Then, agglomerative hierarchical cluster analysis is performed on the time series objects of every first-level cluster, which constitutes the second stage of the method. The linkage criterion employed is average linkage.

Hierarchical clustering is another example of an unsupervised technique. It can be either agglomerative or divisive. The agglomerative variant treats each data point (in our case, each time series) as a separate cluster and iteratively merges clusters until all of them are merged into one. In contrast, divisive hierarchical clustering begins with all data points in a single cluster and iteratively divides it until each data point forms its own cluster [29]. The resulting hierarchy can be represented in a dendrogram.
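A naive version of the agglomerative procedure with average linkage, operating on a precomputed dissimilarity matrix, is sketched below. The function name `average_linkage` and the toy dissimilarities are hypothetical, and the cubic-time search is chosen for readability rather than efficiency.

```python
def average_linkage(dist, n_clusters):
    """Merge the two closest clusters (average linkage) until n_clusters remain."""
    clusters = [[i] for i in range(len(dist))]   # every object starts alone
    merges = []                                   # merge history (the dendrogram)
    while len(clusters) > n_clusters:
        best = None
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                # Average of all pairwise distances between the two clusters.
                d = sum(dist[i][j] for i in clusters[a] for j in clusters[b])
                d /= len(clusters[a]) * len(clusters[b])
                if best is None or d < best[0]:
                    best = (d, a, b)
        d, a, b = best
        merges.append((sorted(clusters[a]), sorted(clusters[b]), d))
        clusters[a] = clusters[a] + clusters[b]
        del clusters[b]
    return [sorted(c) for c in clusters], merges

# Hypothetical pairwise dissimilarities between four series.
dist = [
    [0.0, 1.0, 6.0, 7.0],
    [1.0, 0.0, 5.0, 6.0],
    [6.0, 5.0, 0.0, 2.0],
    [7.0, 6.0, 2.0, 0.0],
]
groups, merges = average_linkage(dist, n_clusters=2)
```

The list `merges` records which clusters were joined and at what height, which is exactly the information a dendrogram displays; stopping at `n_clusters` corresponds to cutting the dendrogram at a given level.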

One of the main challenges in clustering time series is dealing with time shifts and distortions. Euclidean distance, while simple and computationally efficient, does not account for such misalignments, meaning that similar time series with slight delays would be considered distant. Dynamic Time Warping (DTW) overcomes this issue, ensuring that patterns occurring at different time points can still be recognized as similar. For this reason, DTW was employed in the univariate scenario, as it provides robustness against time shifts and distortions, improving clustering accuracy in cases where the Euclidean distance may fail. Dynamic Time Warping is a commonly accepted technique for finding an optimal alignment between two given (time-dependent) sequences under certain restrictions [76]. As can be inferred from the name of this technique, the sequences, let $X = (x_1, \dots, x_N)$ and $Y = (y_1, \dots, y_M)$, $N, M \in \mathbb{N}$, are warped to match each other. The optimal alignment is the warping path $p$ between $X$ and $Y$ with minimal overall cost. This total cost is the sum of the local cost measures between the pairs of elements of the two sequences that the path aligns. A warping path is a sequence, let $p = (p_1, \dots, p_L)$, with $p_l = (n_l, m_l) \in \{1, \dots, N\} \times \{1, \dots, M\}$ for $l = 1, \dots, L$, that satisfies certain conditions (boundary, monotonicity, and step-size conditions).
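The minimal total cost can be computed by dynamic programming over an accumulated cost matrix. The sketch below is a textbook version for univariate sequences and not the TSclust implementation: the function name `dtw_distance` is hypothetical, the absolute difference is used as local cost (which coincides with the Euclidean distance in one dimension), and no windowing constraint is applied.

```python
def dtw_distance(x, y):
    """Total cost of the optimal warping path between sequences x and y."""
    n, m = len(x), len(y)
    inf = float("inf")
    # acc[i][j]: minimal accumulated cost of aligning x[:i] with y[:j].
    acc = [[inf] * (m + 1) for _ in range(n + 1)]
    acc[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = abs(x[i - 1] - y[j - 1])  # local cost between two elements
            acc[i][j] = local + min(acc[i - 1][j],      # advance in x only
                                    acc[i][j - 1],      # advance in y only
                                    acc[i - 1][j - 1])  # advance in both
    return acc[n][m]

a = [0.0, 1.0, 2.0, 1.0, 0.0]
b = [0.0, 0.0, 1.0, 2.0, 1.0, 0.0]   # same shape, delayed by one step
shifted = dtw_distance(a, b)          # the delay is absorbed by the warping
```

The two toy sequences have different lengths and a one-step delay, yet their DTW cost is zero, which illustrates why the measure suits series of unequal length with small time shifts. The nested loops also show the quadratic cost in the sequence lengths.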

A key limitation of standard DTW is its computational cost, making it inefficient for long sequences. In this work, DTW with Euclidean distance as the local distance function is applied, using the “DTWARP” method from the TSclust package on CRAN. The function applies standard DTW for alignment and, by default, employs Euclidean distance as the local cost function. However, alternative modifications exist (see, for example, [77,78,79]) which can reduce the computational burden and improve alignment in certain cases. While these alternatives are not implemented in this study, future research could explore their potential benefits.

In the multivariate case, the choice of distance metric becomes more complex because multiple attributes (e.g., precipitation and temperature) must be compared simultaneously. Multivariate Dynamic Time Warping (mDTW) is employed, as it compares multivariate time series by aligning all dimensions simultaneously. However, it comes with a higher computational cost, making it less practical for large datasets. If computational constraints allow, mDTW can be implemented using the dtwclust package on CRAN, which extends the functionality of proxy::dist by providing custom distance functions, including DTW for time series. Applying DTW independently to each attribute and summing the resulting costs is a possible alternative; however, this approach may lose inter-attribute dependencies while still incurring the computational complexity of DTW. Lastly, the Euclidean timed and spaced method is selected in the work of [80], so this could also be an alternative.
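
The difference between aligning all attributes jointly and aligning each attribute independently can be made concrete with a small sketch. It assumes that mDTW refers to the variant in which one warping path is shared by all attributes and the local cost is the Euclidean distance between attribute vectors. The function names and the toy two-attribute series are hypothetical, and the code is an illustration rather than the dtwclust implementation.

```python
import math


def _dtw(n, m, local_cost):
    """Generic DTW recursion; local_cost(i, j) gives the cost of matching i with j."""
    acc = [[math.inf] * (m + 1) for _ in range(n + 1)]
    acc[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            acc[i][j] = local_cost(i - 1, j - 1) + min(
                acc[i - 1][j], acc[i][j - 1], acc[i - 1][j - 1]
            )
    return acc[n][m]


def dtw_dependent(x, y):
    """One warping path shared by all attributes (joint alignment)."""
    return _dtw(len(x), len(y), lambda i, j: math.dist(x[i], y[j]))


def dtw_independent(x, y):
    """A separate warping path per attribute; per-attribute costs are summed."""
    total = 0.0
    for d in range(len(x[0])):
        total += _dtw(len(x), len(y), lambda i, j, d=d: abs(x[i][d] - y[j][d]))
    return total


# Toy series of (attribute 1, attribute 2) pairs (assumed data).
# In x the peak of attribute 1 precedes the peak of attribute 2; in y the order is reversed.
x = [(0, 0), (1, 0), (0, 1), (0, 0)]
y = [(0, 0), (0, 1), (1, 0), (0, 0)]

print("independent:", dtw_independent(x, y))
print("dependent:", dtw_dependent(x, y))
```

Here the independent variant returns zero because each attribute can be warped on its own, which hides the fact that the two series disagree on the order of the peaks. The joint variant returns a positive cost, since no single warping path can match both peaks at once.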

As the final step of clustering, it is necessary to find an appropriate cutting point of the produced dendrogram that will reveal the number of clusters. The average silhouette width method is again proposed for identifying the optimal number of (sub-)clusters in every first-level cluster. The resulting sub-clusters of each first-level cluster are the final groups identified by the proposed method.
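
The selection of the cutting point can be sketched as follows. The example computes the average silhouette width from a precomputed distance matrix and picks the candidate partition with the largest value. The candidate partitions are hand-made stand-ins for dendrogram cuts at two and three clusters, and the one-dimensional toy data, function name, and variable names are all hypothetical.

```python
def average_silhouette_width(dist, labels):
    """Mean silhouette over all items, given a symmetric distance matrix."""
    clusters = {}
    for idx, lab in enumerate(labels):
        clusters.setdefault(lab, []).append(idx)
    if len(clusters) < 2:
        raise ValueError("the silhouette needs at least two clusters")
    total = 0.0
    for i, lab in enumerate(labels):
        own = clusters[lab]
        if len(own) == 1:
            continue  # singleton clusters contribute a silhouette of 0
        # a: mean distance to the other members of the item's own cluster
        a = sum(dist[i][j] for j in own if j != i) / (len(own) - 1)
        # b: smallest mean distance to the members of any other cluster
        b = min(
            sum(dist[i][j] for j in members) / len(members)
            for other, members in clusters.items()
            if other != lab
        )
        if max(a, b) > 0:
            total += (b - a) / max(a, b)
    return total / len(labels)


# Toy data (assumed): six items on a line, forming three tight pairs.
positions = [0, 1, 10, 11, 20, 21]
dist = [[abs(p - q) for q in positions] for p in positions]

# Stand-ins for cutting a dendrogram at k = 2 and k = 3 clusters.
candidates = {
    2: [0, 0, 0, 0, 1, 1],
    3: [0, 0, 1, 1, 2, 2],
}
scores = {k: average_silhouette_width(dist, labs) for k, labs in candidates.items()}
best_k = max(scores, key=scores.get)
print(scores, "-> chosen number of clusters:", best_k)
```

In practice the distance matrix would hold the DTW distances between the series of one first-level cluster, and the candidate labelings would come from cutting the dendrogram at successive heights.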

5. Conclusions

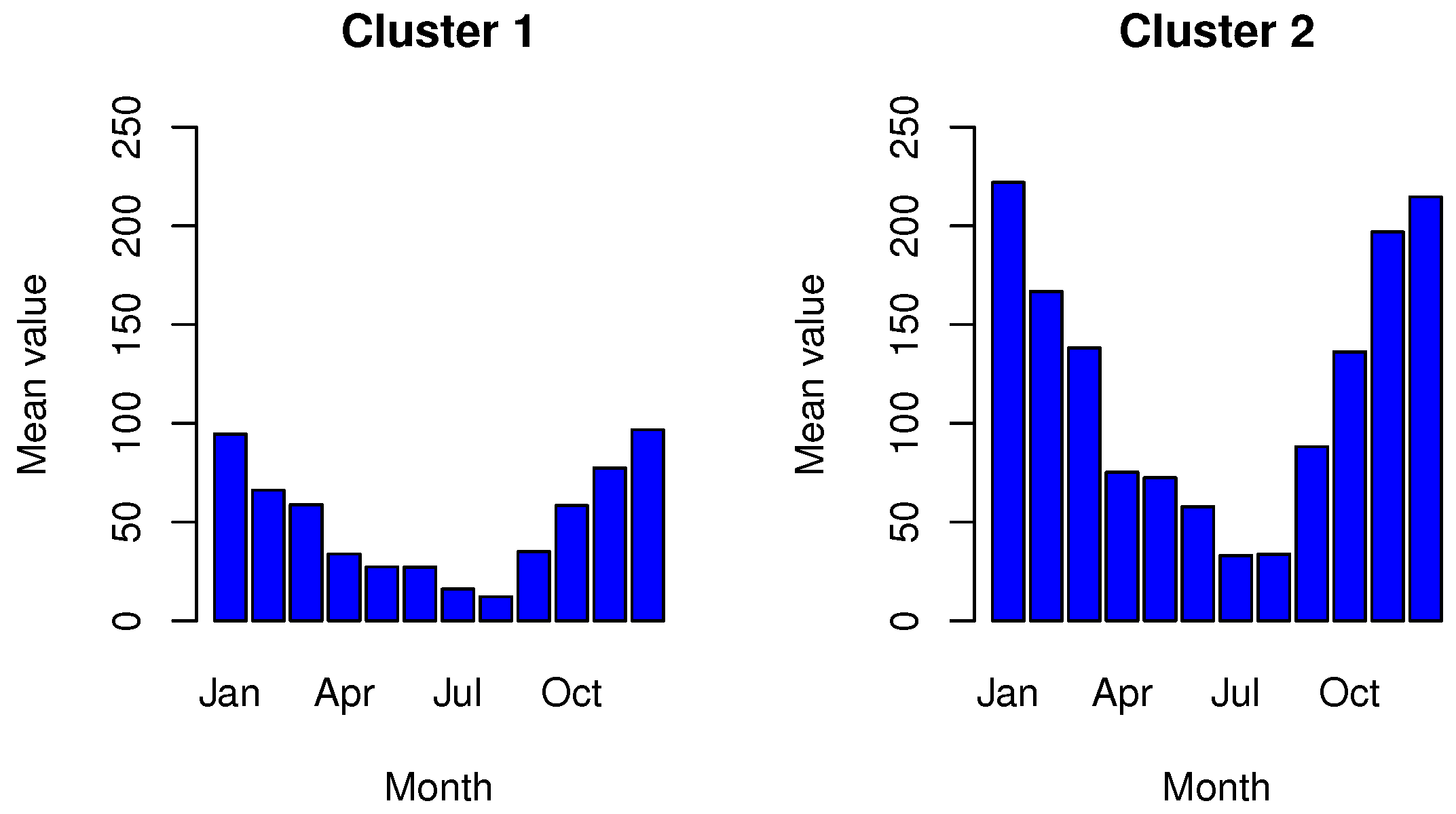

In the first univariate case, that of the precipitation time series, the proposed method identified four regions of Greece with similar precipitation characteristics and patterns. These clusters could result from the division of Greece by the Pindos mountain range, where regions located along the range (i.e., the most mountainous) receive higher precipitation levels. A similar result was derived from the mean temperature time series, where, once again, four regions of Greece were identified as having similar mean temperatures. Regions with higher mean altitudes consistently maintained lower temperatures compared to those at lower altitudes or near the sea.

In the multivariate scenario, the proposed method identified 19 distinct sub-clusters based entirely on temporal patterns in precipitation and temperature. While some clusters align with known geographical features (e.g., the Pindos mountain range), their formation was strictly data-driven through seasonal indices and DTW-aligned raw series, prioritizing temporal patterns over spatial intuition. This approach proved particularly effective in Greece’s complex topography, where proximate areas often exhibit markedly different meteorological behaviors. The method revealed meaningful, data-driven subgroups that transcend geography (e.g., high-altitude stations in Crete clustering with northern mountainous areas), demonstrating that temporal patterns can capture climatic relationships that spatial correlations alone might miss. While spatial information could enhance interpretability in some contexts, our results show that temporal features can effectively reveal Greece’s diverse climatic regions.

Finally, it should be noted that there are parts of Greece where the coverage of automated weather stations is insufficient (e.g., East Peloponnese, and North or Central Greece). For that reason, and given the complex topography that controls both temperature and precipitation, any conclusions should be drawn with caution.

The proposed two-level clustering methodology, however, has both theoretical and practical implications. Theoretically, it contributes to the study of time series clustering by addressing challenges associated with missing data, unequal series lengths, and seasonal structures, particularly in spatiotemporal environmental data. Practically, the framework provides a data-driven approach to identify meteorologically homogeneous regions. This can support national and regional stakeholders (such as environmental agencies, water resource planners, and climate policy advisors) in identifying climate-sensitive zones for more effective infrastructure planning, risk assessment, and resource allocation. Furthermore, the general structure of the methodology allows it to be applied to other regions or climatic variables, making it a versatile tool for broader geospatial applications under varying environmental contexts.

As in any methodology, limitations exist; they are presented here together with possible remedies that could also be part of future work. The method assumes a common seasonal period across all time series, which may not hold for geographically diverse meteorological stations with varying local climates. Where common seasonality does not exist, adaptive seasonal decomposition techniques (e.g., STL or seasonal autoencoder models) could be an alternative. Extensive or non-random missingness can, in general, cause imputation to distort the original temporal patterns. In this case, however, the original temporal and seasonal patterns are derived from the first-level clustering, where no imputation is performed. Model-based or probabilistic imputation techniques, such as Gaussian Process imputation, could be an option.

As already mentioned, the clustering methods in this work were initially selected for their interpretability, computational efficiency, and widespread use in environmental time series clustering, in order to establish a robust baseline for future method comparisons. A point of interest, however, would be a comparison of the proposed methodology with more flexible clustering techniques (e.g., DBSCAN) or deep learning-based clustering, options that capture complex relationships. Other comparisons could involve basic imputation approaches (KNN imputation, pattern DTW matching) or other state-of-the-art methods such as GAIN, DTW LSTM, etc. A promising direction for future research is to utilize temporal modeling techniques, such as HMMs or RNNs, that might provide a richer representation of the dynamic evolution of weather patterns. It should be noted that the focus of the clustering on dominant seasonal patterns was driven by the data’s high proportion of missing values and the prominent seasonal dynamics of the meteorological data. Incorporating clustering based on extreme or recurrent events would nevertheless add important insights.

Further, an interesting direction for future work is to apply nonlinear dimensionality reduction techniques (e.g., t-SNE and UMAP) and compare the results. Our preliminary applications of t-SNE (see Appendix C) produced clusters inconsistent with the known climatic regions of Greece. This discrepancy is likely due to the sensitivity of these methods to high dimensionality and parameter tuning, and is thus suggested for further study. In addition to the above-mentioned points, it is acknowledged that the present study has been conducted using a meteorological dataset from Greece, and that its generalizability to other geographical regions and climatic conditions has yet to be empirically demonstrated. Nevertheless, the proposed methodology has been developed within a broadly applicable framework, designed to be easily transferable to datasets from other locations. Future work is therefore intended to extend it to other climatic variables, regions, or climatic zones of global datasets. To enhance usability, an R-Shiny dashboard is planned for future work, which would allow users to easily explore the clustering results, upload new data, or dynamically view seasonal and spatial patterns.