Data Cloning Estimation and Identification of a Medium-Scale DSGE Model

Abstract

1. Introduction

2. Estimation and Identification of DSGE Models

2.1. Estimation

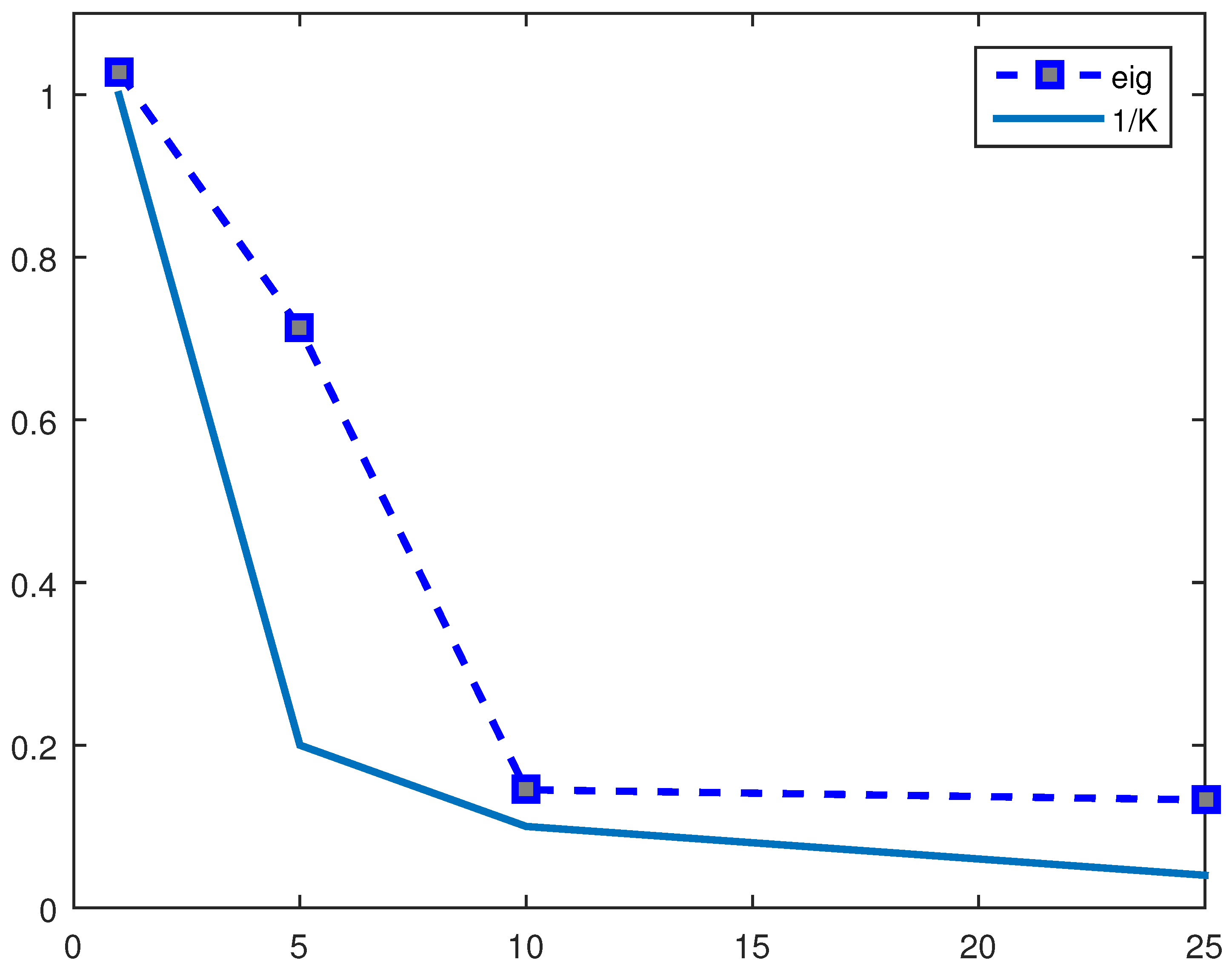

2.2. Identification

3. The Data Cloning Method

4. Model and Estimation Details

5. Results

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Parameter | Definition | Prior Density | Prior Mean | Prior Std | Optimization Mode | Single-Sample (MCMC) Mean | Single-Sample (MCMC) Std | 5 Clones Mean | 5 Clones Std | 10 Clones Mean | 10 Clones Std | 25 Clones Mean | 25 Clones Std | Benchmark Mean |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | Investment adj. cost | N | 4.00 | 1.50 | 5.49 | 5.588 | 2.241 | 6.851 | 1.091 | 6.004 | 0.694 | 5.974 | 1.044 | 5.74 |
| | Inv. elast. intert. subst. | N | 1.50 | 0.38 | 1.47 | 1.435 | 0.328 | 1.401 | 0.327 | 1.406 | 0.243 | 1.468 | 0.061 | 1.38 |
| h | Consump. habit | B | 0.70 | 0.10 | 0.70 | 0.705 | 0.102 | 0.794 | 0.071 | 0.771 | 0.032 | 0.759 | 0.054 | 0.71 |
| | Calvo wage | B | 0.50 | 0.10 | 0.73 | 0.692 | 0.163 | 0.896 | 0.024 | 0.855 | 0.067 | 0.838 | 0.121 | 0.71 |
| | Elast. labour supply | N | 2.00 | 0.75 | 1.67 | 1.655 | 1.259 | 3.791 | 0.433 | 3.267 | 0.731 | 3.398 | 1.852 | 1.83 |
| | Calvo price | B | 0.50 | 0.10 | 0.68 | 0.676 | 0.125 | 0.784 | 0.048 | 0.737 | 0.039 | 0.695 | 0.076 | 0.66 |
| | Index. of wages | B | 0.50 | 0.15 | 0.56 | 0.543 | 0.277 | 0.476 | 0.145 | 0.495 | 0.141 | 0.582 | 0.037 | 0.58 |
| | Index. of prices | B | 0.50 | 0.15 | 0.24 | 0.26 | 0.202 | 0.273 | 0.092 | 0.276 | 0.073 | 0.265 | 0.039 | 0.24 |
| | Capital utilization | B | 0.50 | 0.15 | 0.40 | 0.411 | 0.211 | 0.171 | 0.122 | 0.199 | 0.102 | 0.226 | 0.388 | 0.54 |
| | Fixed cost | N | 1.25 | 0.12 | 1.65 | 1.643 | 0.171 | 1.581 | 0.095 | 1.591 | 0.065 | 1.575 | 0.078 | 1.6 |
| | Response to inflation | N | 1.50 | 0.25 | 1.98 | 2.015 | 0.375 | 2.131 | 0.316 | 1.936 | 0.072 | 1.949 | 0.193 | 2.04 |
| | Interest rate smooth. | N | 0.75 | 0.10 | 0.82 | 0.82 | 0.052 | 0.872 | 0.016 | 0.854 | 0.018 | 0.851 | 0.021 | 0.81 |
| | Response to output | N | 0.13 | 0.05 | 0.09 | 0.095 | 0.052 | 0.138 | 0.069 | 0.123 | 0.029 | 0.118 | 0.007 | 0.08 |
| | Response to output gap | N | 0.13 | 0.05 | 0.22 | 0.222 | 0.064 | 0.191 | 0.013 | 0.185 | 0.027 | 0.192 | 0.047 | 0.22 |
| | Steady state inflation | G | 0.63 | 0.10 | 0.67 | 0.679 | 0.157 | 0.534 | 0.121 | 0.604 | 0.107 | 0.604 | 0.065 | 0.78 |
| 100 | Discount factor | G | 0.25 | 0.10 | 0.21 | 0.241 | 0.203 | 0.232 | 0.167 | 0.186 | 0.041 | 0.195 | 0.038 | 0.16 |
| | Steady state hours worked | N | 0.00 | 2.00 | 0.40 | 0.296 | 2.249 | 2.351 | 1.921 | 0.876 | 1.135 | 1.038 | 1.222 | 0.53 |
| 100 | Trend growth | N | 0.40 | 0.10 | 0.44 | 0.435 | 0.035 | 0.451 | 0.011 | 0.456 | 0.025 | 0.455 | 0.011 | 0.43 |
| | Share of capital | N | 0.30 | 0.05 | 0.32 | 0.314 | 0.092 | 0.354 | 0.106 | 0.371 | 0.031 | 0.356 | 0.067 | 0.19 |
| | Depreciation rate | n.a. | 0.025 | n.a. | n.a. | n.a. | n.a. | n.a. | n.a. | n.a. | n.a. | n.a. | n.a. | n.a. |
| | Government/Output ratio | n.a. | 0.18 | n.a. | n.a. | n.a. | n.a. | n.a. | n.a. | n.a. | n.a. | n.a. | n.a. | n.a. |
| | Wage mark-up | n.a. | 1.5 | n.a. | n.a. | n.a. | n.a. | n.a. | n.a. | n.a. | n.a. | n.a. | n.a. | n.a. |
| | Kimball (wage) | n.a. | 10 | n.a. | n.a. | n.a. | n.a. | n.a. | n.a. | n.a. | n.a. | n.a. | n.a. | n.a. |
| | Kimball (price) | n.a. | 10 | n.a. | n.a. | n.a. | n.a. | n.a. | n.a. | n.a. | n.a. | n.a. | n.a. | n.a. |
| | Tech. shock to gov. spending | N | 0.50 | 0.25 | 0.60 | 0.587 | 0.204 | 0.553 | 0.097 | 0.644 | 0.116 | 0.625 | 0.036 | 0.52 |
| | AR nonspecific technology | B | 0.50 | 0.20 | 0.95 | 0.948 | 0.0354 | 0.974 | 0.027 | 0.968 | 0.014 | 0.967 | 0.005 | 0.95 |
| | AR risk premium | B | 0.50 | 0.20 | 0.17 | 0.212 | 0.1957 | 0.219 | 0.183 | 0.217 | 0.034 | 0.209 | 0.014 | 0.22 |
| | AR government spending | B | 0.50 | 0.20 | 0.97 | 0.973 | 0.0196 | 0.997 | 0.001 | 0.996 | 0.016 | 0.995 | 0.019 | 0.97 |
| | AR investment | B | 0.50 | 0.20 | 0.74 | 0.747 | 0.134 | 0.647 | 0.083 | 0.671 | 0.101 | 0.681 | 0.045 | 0.71 |
| | AR monetary policy | B | 0.50 | 0.20 | 0.12 | 0.142 | 0.1294 | 0.042 | 0.049 | 0.121 | 0.095 | 0.121 | 0.031 | 0.15 |
| | AR price mark-up | B | 0.50 | 0.20 | 0.90 | 0.879 | 0.1326 | 0.999 | 0.001 | 0.999 | 0.075 | 0.999 | 0.016 | 0.89 |
| | AR wage mark-up | B | 0.50 | 0.20 | 0.97 | 0.959 | 0.0366 | 0.972 | 0.029 | 0.969 | 0.004 | 0.967 | 0.011 | 0.96 |
| | MA price mark-up | B | 0.50 | 0.20 | 0.77 | 0.723 | 0.2217 | 0.966 | 0.013 | 0.952 | 0.115 | 0.927 | 0.081 | 0.69 |
| | MA wage mark-up | B | 0.50 | 0.20 | 0.87 | 0.809 | 0.1694 | 0.945 | 0.048 | 0.914 | 0.026 | 0.904 | 0.029 | 0.84 |
| | Std. technology shock | IG | 0.10 | 2.00 | 0.43 | 0.434 | 0.0506 | 0.472 | 0.029 | 0.445 | 0.011 | 0.446 | 0.026 | 0.45 |
| | Std. risk premium shock | IG | 0.10 | 2.00 | 0.24 | 0.237 | 0.0606 | 0.243 | 0.052 | 0.241 | 0.038 | 0.243 | 0.003 | 0.23 |
| | Std. government spending | IG | 0.10 | 2.00 | 0.51 | 0.516 | 0.0519 | 0.52 | 0.016 | 0.504 | 0.061 | 0.512 | 0.011 | 0.53 |
| | Std. investment shock | IG | 0.10 | 2.00 | 0.43 | 0.435 | 0.0688 | 0.469 | 0.079 | 0.458 | 0.085 | 0.461 | 0.011 | 0.56 |
| | Std. monetary policy | IG | 0.10 | 2.00 | 0.24 | 0.244 | 0.1014 | 0.231 | 0.011 | 0.233 | 0.006 | 0.228 | 0.009 | 0.24 |
| | Std. price mark-up shock | IG | 0.10 | 2.00 | 0.14 | 0.141 | 0.0328 | 0.145 | 0.016 | 0.139 | 0.014 | 0.133 | 0.004 | 0.14 |
| | Std. wage mark-up shock | IG | 0.10 | 2.00 | 0.24 | 0.235 | 0.0359 | 0.231 | 0.024 | 0.217 | 0.008 | 0.224 | 0.028 | 0.24 |
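
One way to read the posterior standard deviations in the table is through a standard data cloning diagnostic: for an identifiable parameter, the posterior variance obtained with K clones is expected to shrink roughly in proportion to 1/K, while a ratio that stalls or grows suggests weak identification. The sketch below is only illustrative. It treats the Single-Sample (MCMC) column as the K = 1 case, which is an assumption, copies four rows from the table, and uses hypothetical helper names (`variance_ratios`, `report`) that do not come from the article.

```python
# Posterior standard deviations copied from the table above,
# ordered by number of clones K = 1, 5, 10, 25.
# Assumption: the Single-Sample (MCMC) column is treated as K = 1.
CLONES = [1, 5, 10, 25]
POSTERIOR_STD = {
    "Investment adj. cost": [2.241, 1.091, 0.694, 1.044],
    "Consump. habit": [0.102, 0.071, 0.032, 0.054],
    "AR nonspecific technology": [0.0354, 0.027, 0.014, 0.005],
    "Std. price mark-up shock": [0.0328, 0.016, 0.014, 0.004],
}


def variance_ratios(stds):
    """Return each posterior variance divided by the K = 1 variance."""
    base = stds[0] ** 2
    return [(s ** 2) / base for s in stds]


def report(table=POSTERIOR_STD, clones=CLONES):
    """Format the observed variance ratios next to the 1/K reference rate."""
    lines = []
    for name, stds in table.items():
        ratios = variance_ratios(stds)
        cells = ", ".join(
            f"K={k}: {r:.3f} (1/K={1 / k:.3f})" for k, r in zip(clones, ratios)
        )
        lines.append(f"{name}: {cells}")
    return lines


if __name__ == "__main__":
    print("\n".join(report()))
```

Rows whose ratios track 1/K behave as the theory predicts for identifiable parameters; rows whose ratio rises again at K = 25 deserve a closer look before drawing conclusions, since MCMC noise can also produce such patterns.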

| Parameters | | | | | | |
|---|---|---|---|---|---|---|
| | 1.000 | 0.389 | 0.373 | 0.582 | 0.445 | 1.841 |
| | 1.000 | 0.441 | 0.348 | 0.344 | 0.33 | 1.471 |
| | 1.000 | 0.178 | 0.142 | 0.081 | 0.132 | 1.004 |
| | 1.000 | 0.406 | 0.434 | 0.685 | 0.328 | 0.799 |
| | 1.000 | 0.176 | 0.174 | 0.151 | 0.318 | 0.738 |
| | 1.000 | 0.428 | 0.545 | 1.149 | 0.495 | 0.733 |
| | 1.000 | 0.338 | 0.361 | 0.207 | 0.515 | 0.733 |
| | 1.000 | 0.339 | 0.343 | 0.382 | 0.409 | 0.61 |
| | 1.000 | 0.621 | 0.575 | 0.853 | 1.046 | 0.543 |
| h | 1.000 | 0.383 | 0.294 | 0.7 | 0.127 | 0.534 |
| | 1.000 | 0.284 | 0.533 | 0.588 | 0.792 | 0.519 |
| | 1.000 | 0.352 | 0.571 | 0.843 | 0.225 | 0.516 |
| | 1.000 | 0.429 | 0.423 | 0.486 | 0.206 | 0.466 |
| | 1.000 | 0.488 | 0.611 | 0.556 | 0.441 | 0.456 |
| | 1.000 | 0.529 | 0.375 | 0.763 | 1.059 | 0.413 |
| | 1.000 | 0.299 | 0.329 | 0.316 | 0.193 | 0.397 |
| | 1.000 | 0.097 | 0.086 | 0.059 | 0.087 | 0.362 |
| | 1.000 | 0.426 | 0.452 | 0.621 | 0.555 | 0.341 |
| 100 | 1.000 | 0.314 | 0.257 | 0.311 | 0.226 | 0.311 |
| | 1.000 | 0.265 | 0.295 | 0.803 | 0.532 | 0.306 |
| | 1.000 | 0.326 | 0.333 | 0.383 | 0.912 | 0.238 |
| | 1.000 | 0.425 | 0.483 | 0.312 | 0.676 | 0.204 |
| | 1.000 | 0.485 | 0.288 | 0.455 | 0.433 | 0.194 |
| | 1.000 | 0.425 | 0.465 | 0.999 | 0.536 | 0.187 |
| 100 | 1.000 | 0.705 | 0.525 | 0.825 | 0.226 | 0.186 |
| | 1.000 | 0.357 | 0.497 | 0.475 | 0.328 | 0.178 |
| | 1.000 | 0.108 | 0.109 | 0.285 | 0.324 | 0.171 |
| | 1.000 | 0.302 | 0.773 | 1.156 | 1.315 | 0.175 |
| | 1.000 | 0.209 | 0.333 | 0.779 | 0.581 | 0.163 |
| | 1.000 | 0.316 | 0.728 | 1.324 | 0.277 | 0.148 |
| | 1.000 | 0.503 | 0.384 | 0.523 | 0.628 | 0.135 |
| | 1.000 | 0.307 | 0.371 | 0.496 | 0.368 | 0.128 |
| | 1.000 | 0.027 | 0.017 | 0.007 | 0.002 | 0.122 |
| | 1.000 | 0.359 | 0.116 | 0.116 | 0.146 | 0.088 |
| | 1.000 | 0.392 | 0.386 | 0.937 | 0.325 | 0.072 |
| | 1.000 | 0.402 | 0.419 | 0.871 | 0.712 | 0.061 |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chaim, P.; Laurini, M.P. Data Cloning Estimation and Identification of a Medium-Scale DSGE Model. Stats 2023, 6, 17-29. https://doi.org/10.3390/stats6010002
