Credibility of Causal Estimates from Regression Discontinuity Designs with Multiple Assignment Variables

Abstract

1. Introduction

- the cut-off scores determining treatment assignment are exogenously set;

- potential outcomes are continuous functions of the assignment scores at the cut-off scores; and

- the functional form of the model is correctly specified.

Related Work

1. This is the first time that the MRDD has been applied in the South African context to quantify the causal effect of household income and matric points on the probability of eligibility for NSFAS funding and of eligibility to study for a bachelor’s degree. Quantifying these effects allows policy makers in South Africa to make informed decisions on funding for students who qualify to study for a bachelor’s degree.

2. This paper provides the first evidence on whether meeting a matric points threshold and a household income threshold increases the chance of eligibility for NSFAS funding.

3. The paper adds to the literature by combining causal estimation using the MRDD with some of the supplementary analyses proposed by [6]. Those authors noted that assessing the validity of the assumptions required to interpret regression discontinuity estimates as causal effects is still lagging behind. The paper therefore extends the assumption checks of the univariate RDD to the MRDD using supplementary analyses. These analyses test for discontinuities in average covariate values at the threshold and assess the credibility of the design, in particular by testing for evidence of manipulation of the forcing variable. This is crucial in practice, because causal estimates that are not credible are not useful.

4. We demonstrate that one can use simulated data that closely mimic real-world (original) data and still obtain significant and credible causal effect estimates.

2. Literature Review

2.1. Multivariate Regression Discontinuity Design

1. Treatment 1: students score at least 25 matric points and family income is greater than R350,000 (Region 1).

2. Treatment 2: students score fewer than 25 matric points and family income is greater than R350,000 (Region 2).

3. Treatment 3: students score fewer than 25 matric points and family income is at most R350,000 (Region 3).

4. Treatment 4: students score at least 25 matric points and family income is at most R350,000 (Region 4).
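The four regions follow mechanically from the two thresholds stated above. A minimal sketch of that assignment rule; the function and constant names are hypothetical, and only the cut-offs (25 matric points, R350,000) come from the text:

```python
# Thresholds stated in the text; the names are hypothetical.
MATRIC_CUTOFF = 25        # matric points
INCOME_CUTOFF = 350_000   # household income in Rand


def assign_region(matric_points: float, household_income: float) -> int:
    """Return the treatment region (1-4) for one student."""
    high_points = matric_points >= MATRIC_CUTOFF    # "at least 25 matric points"
    high_income = household_income > INCOME_CUTOFF  # "greater than R350,000"
    if high_points and high_income:
        return 1
    if high_income:           # fewer than 25 points, income above R350,000
        return 2
    if not high_points:       # fewer than 25 points, income at most R350,000
        return 3
    return 4                  # at least 25 points, income at most R350,000


print(assign_region(30, 200_000))  # prints 4
```

Written this way, R3 vs. R4 and R1 vs. R2 differ only in matric points, while R2 vs. R3 and R1 vs. R4 differ only in household income, which is what makes each pair a single frontier comparison.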

2.2. Multiple Assignment Variables: Estimation Strategies

- (i) the specification of the function f; and

- (ii) the domain D of observations used in estimating the model.
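To make choices (i) and (ii) concrete, here is a minimal sketch under stated assumptions: f is taken to be a local linear function fitted separately on each side of the cut-off with triangular kernel weights (a common choice, not necessarily the authors' exact specification), and D is the set of observations on one frontier that lie within a left bandwidth `h_left` and a right bandwidth `h_right` of the cut-off, mirroring the asymmetric bandwidth pairs reported in the results tables. For R3 vs. R4, for example, D would hold students with household income at most R350,000 and the running variable would be matric points minus 25. The name `local_linear_rd` is hypothetical.

```python
import numpy as np


def local_linear_rd(y, x, cutoff=0.0, h_left=1.0, h_right=1.0):
    """Sharp RD point estimate: jump in local linear fits at `cutoff`.

    y, x     : outcome and running variable for observations in the domain D
    h_left   : bandwidth used below the cut-off
    h_right  : bandwidth used at or above the cut-off
    """
    y = np.asarray(y, dtype=float)
    x = np.asarray(x, dtype=float) - cutoff  # centre the running variable

    def intercept_at_cutoff(mask, h):
        xs, ys = x[mask], y[mask]
        w = np.clip(1.0 - np.abs(xs) / h, 0.0, None)  # triangular kernel weights
        keep = w > 0
        design = np.column_stack([np.ones(keep.sum()), xs[keep]])
        root_w = np.sqrt(w[keep])
        # Weighted least squares: scale each row by the square root of its weight.
        beta, *_ = np.linalg.lstsq(design * root_w[:, None], ys[keep] * root_w, rcond=None)
        return beta[0]  # fitted value at x = 0, i.e. at the cut-off

    below = (x < 0) & (x > -h_left)
    above = (x >= 0) & (x < h_right)
    return intercept_at_cutoff(above, h_right) - intercept_at_cutoff(below, h_left)


rng = np.random.default_rng(1)
x = rng.uniform(-1, 1, 20_000)                              # simulated, centred running variable
y = 0.5 * x + 0.3 * (x >= 0) + rng.normal(0, 0.1, x.size)  # true jump = 0.3
print(local_linear_rd(y, x, h_left=0.1307, h_right=0.1248))
```

The sketch returns only the point estimate. Packages such as rdrobust also select the bandwidths and report bias-corrected robust standard errors, which this sketch does not attempt.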

3. Materials and Methods

3.1. Data

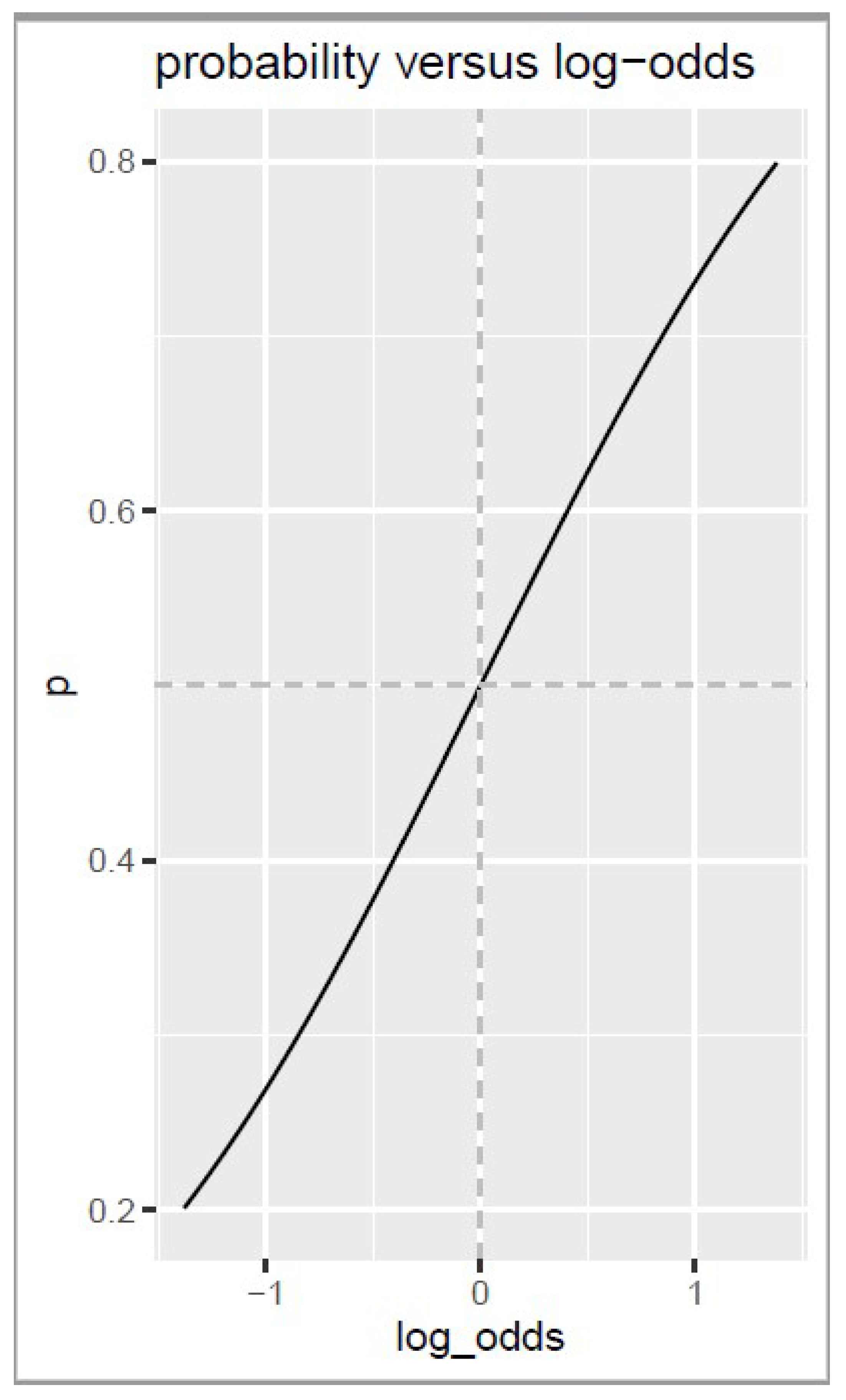

3.2. Estimating Causal Effects Using the Frontier Regression Discontinuity Design (FRDD)

4. Experiments

5. Results and Analysis

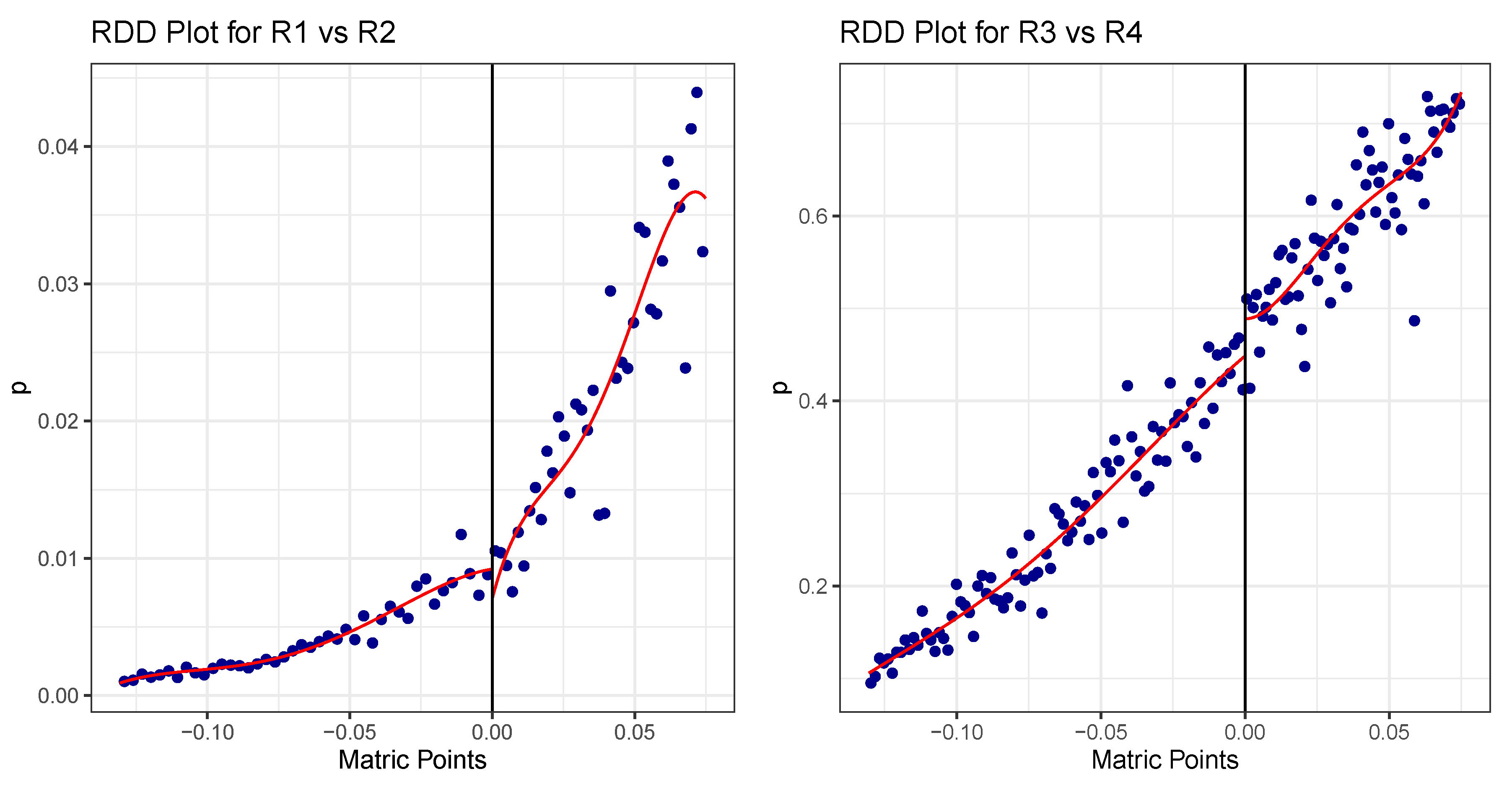

5.1. Estimation of the Causal Effects

5.2. Supplementary Analysis

5.2.1. Checking for Continuity of the Conditional Expectation of Exogenous Variables around the Cut-Off/Threshold Value

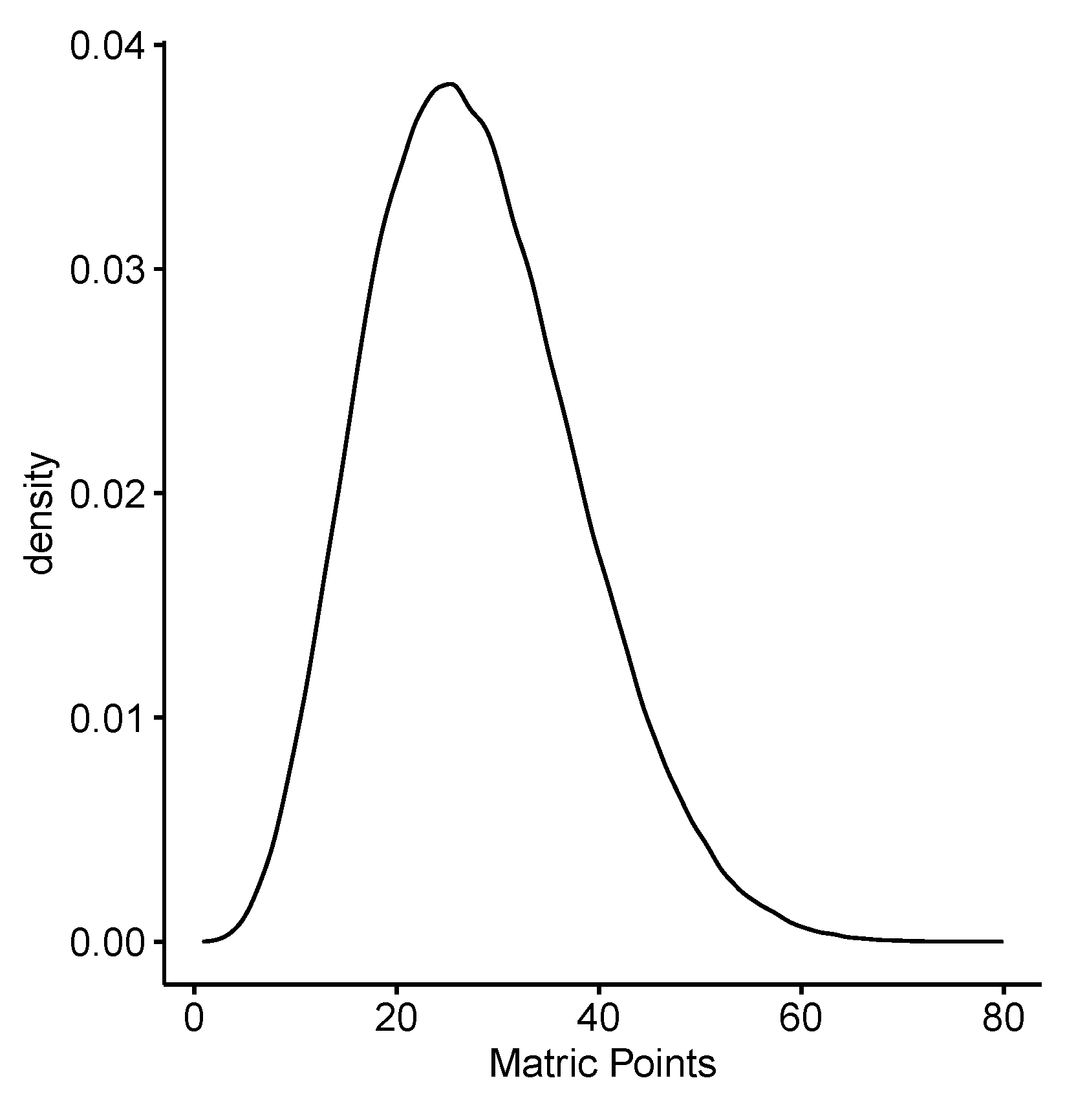

5.2.2. Manipulation Testing Using Local Polynomial Density Estimation

5.2.3. Sensitivity to Optimal Bandwidth Selection

6. Case Study

6.1. Application of the MRDD to the Graduate Admissions Data Set

6.2. Estimation of the Causal Effects of CGPA and GRE

1. Causal Effect 1: < 0 vs. for

2. Causal Effect 2: < 0 vs. for < 0

3. Causal Effect 3: < 0 vs. for

4. Causal Effect 4: <0 vs. for < 0

7. Discussion and Conclusions

7.1. Discussion

7.2. Limitations

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Wong, V.C.; Steiner, P.M.; Cook, T.D. Analyzing regression-discontinuity designs with multiple assignment variables: A comparative study of four estimation methods. J. Educ. Behav. Stat. 2013, 38, 107–141.

2. Lee, D.S.; Lemieux, T. Regression discontinuity designs in economics. J. Econ. Lit. 2010, 48, 281–355.

3. Cheng, Y.A. Regression Discontinuity Designs with Multiple Assignment Variables. 2016. Available online: https://www.econ.berkeley.edu/sites/default/files/EconHonorsThesis_YizhuangAldenCheng.pdf (accessed on 2 April 2021).

4. Papay, J.P.; Willett, J.B.; Murnane, R.J. Extending the regression-discontinuity approach to multiple assignment variables. J. Econom. 2011, 161, 203–207.

5. Reardon, S.F.; Robinson, J.P. Regression discontinuity designs with multiple rating-score variables. J. Res. Educ. Eff. 2012, 5, 83–104.

6. Athey, S.; Imbens, G.W. The state of applied econometrics: Causality and policy evaluation. J. Econ. Perspect. 2017, 31, 3–32.

7. McCrary, J. Manipulation of the running variable in the regression discontinuity design: A density test. J. Econom. 2008, 142, 698–714.

8. Garrod, N.; Wildschut, A. How large is the missing middle and what would it cost to fund? Dev. South. Afr. 2021, 38, 484–491.

9. Cattaneo, M.D.; Titiunik, R.; Vazquez-Bare, G.; Keele, L. Interpreting regression discontinuity designs with multiple cutoffs. J. Politics 2016, 78, 1229–1248.

10. Matsudaira, J.D. Mandatory summer school and student achievement. J. Econom. 2008, 142, 829–850.

11. Keele, L.J.; Titiunik, R. Geographic boundaries as regression discontinuities. Political Anal. 2015, 23, 127–155.

12. Kane, T.J. A Quasi-Experimental Estimate of the Impact of Financial Aid on College-Going; Technical Report; National Bureau of Economic Research: Cambridge, MA, USA, 2003.

13. Imbens, G.W.; Wooldridge, J.M. Recent developments in the econometrics of program evaluation. J. Econ. Lit. 2009, 47, 5–86.

14. Papay, J.P.; Murnane, R.J.; Willett, J.B. High-school exit examinations and the schooling decisions of teenagers: Evidence from regression-discontinuity approaches. J. Res. Educ. Eff. 2014, 7, 1–27.

15. Porter, K.E.; Reardon, S.F.; Unlu, F.; Bloom, H.S.; Cimpian, J.R. Estimating causal effects of education interventions using a two-rating regression discontinuity design: Lessons from a simulation study and an application. J. Res. Educ. Eff. 2017, 10, 138–167.

16. Herlands, W.; McFowland, E., III; Wilson, A.G.; Neill, D.B. Automated local regression discontinuity design discovery. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, London, UK, 19–23 August 2018; pp. 1512–1520.

17. Van der Berg, S. Current poverty and income distribution in the context of South African history. Econ. Hist. Dev. Reg. 2011, 26, 120–140.

18. Sala-i-Martin, X. The World Distribution of Income (Estimated from Individual Country Distributions). 2002. Available online: https://www.nber.org/papers/w8933 (accessed on 13 March 2021).

19. Arthurs, N.; Stenhaug, B.; Karayev, S.; Piech, C. Grades Are Not Normal: Improving Exam Score Models Using the Logit-Normal Distribution. In Proceedings of the 12th International Conference on Educational Data Mining (EDM), Montreal, QC, Canada, 2–5 July 2019; Available online: https://eric.ed.gov/?id=ED599204 (accessed on 17 August 2021).

20. Von Hippel, P. Linear vs. logistic probability models: Which is better, and when. Stat. Horizons 2015. Available online: https://statisticalhorizons.com/linear-vs-logistic (accessed on 15 September 2021).

21. Angrist, J.D.; Pischke, J.S. Mostly Harmless Econometrics: An Empiricist’s Companion; Princeton University Press: Princeton, NJ, USA, 2008.

22. Deke, J. Using the Linear Probability Model to Estimate Impacts on Binary Outcomes in Randomized Controlled Trials; Technical Report, Mathematica Policy Research; Department of Health and Human Services, Administration on Children, Youth and Families, Office of Adolescent Health: Washington, DC, USA, 2014.

23. Cattaneo, M.D.; Jansson, M.; Ma, X. Simple local polynomial density estimators. J. Am. Stat. Assoc. 2020, 115, 1449–1455.

24. Calonico, S.; Cattaneo, M.D.; Farrell, M.H.; Titiunik, R. rdrobust: Software for regression-discontinuity designs. Stata J. 2017, 17, 372–404.

25. Cattaneo, M.D.; Jansson, M.; Ma, X. Manipulation testing based on density discontinuity. Stata J. 2018, 18, 234–261.

26. Cattaneo, M.D.; Idrobo, N.; Titiunik, R. A Practical Introduction to Regression Discontinuity Designs: Foundations; Cambridge University Press: Cambridge, UK, 2020.

27. Cattaneo, M.D.; Titiunik, R.; Vazquez-Bare, G. rdlocrand: Local Randomization Methods for RD Designs. R Package Version 0.3. 2018. Available online: https://CRAN.R-project.org/package=rdlocrand (accessed on 15 September 2021).

28. Goodman, J.; Melkers, J.; Pallais, A. Can online delivery increase access to education? J. Labor Econ. 2019, 37, 1–34.

29. Acharya, M.S.; Armaan, A.; Antony, A.S. A comparison of regression models for prediction of graduate admissions. In Proceedings of the 2019 International Conference on Computational Intelligence in Data Science (ICCIDS), Chennai, India, 6–7 September 2019; pp. 1–5. Available online: https://ieeexplore.ieee.org/document/8862140 (accessed on 25 August 2021).

30. Raghunathan, K. Demystifying the American Graduate Admissions Process. 2010. Available online: StudyMode.com (accessed on 25 August 2021).

31. Cattaneo, M.D.; Frandsen, B.R.; Titiunik, R. Randomization inference in the regression discontinuity design: An application to party advantages in the US Senate. J. Causal Inference 2015, 3, 1–24.

32. Hill, R.C.; Fomby, T.B.; Escanciano, J.C.; Hillebrand, E.; Jeliazkov, I. Regression Discontinuity Designs: Theory and Applications; Emerald Group Publishing: Bingley, UK; West Yorkshire, UK, 2017.

| Population Group of Household | Average Income (R) | % Households | Number of Households |
|---|---|---|---|
| Black African | 92,983 | 80.42 | 18,800 |
| Coloured | 172,765 | 7.23 | 1690 |
| Indian/Asian | 271,621 | 2.31 | 540 |
| White | 444,446 | 10.04 | 2347 |
| Total | 981,815 | | 23,377 |
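The paper works with simulated data intended to mimic real data. One hypothetical way to generate household incomes consistent with the group averages and household shares in the table above is a log-normal mixture: draw a population group with the reported shares, then draw income from a log-normal whose mean equals that group's average. The log-normal form and the dispersion `sigma = 0.8` are assumptions for illustration only, not the paper's documented procedure, and `simulate_incomes` is a hypothetical name.

```python
import numpy as np

# (average income, % of households) per population group, from the table above.
GROUPS = {
    "Black African": (92_983, 80.42),
    "Coloured": (172_765, 7.23),
    "Indian/Asian": (271_621, 2.31),
    "White": (444_446, 10.04),
}


def simulate_incomes(n, sigma=0.8, seed=0):
    """Draw n household incomes from a log-normal mixture (sigma is hypothetical)."""
    rng = np.random.default_rng(seed)
    names = np.array(list(GROUPS))
    means = np.array([v[0] for v in GROUPS.values()], dtype=float)
    shares = np.array([v[1] for v in GROUPS.values()], dtype=float)
    shares /= shares.sum()  # turn percentages into probabilities
    group = rng.choice(len(names), size=n, p=shares)
    # A log-normal with parameters (mu, sigma) has mean exp(mu + sigma**2 / 2),
    # so this mu reproduces each group's average income.
    mu = np.log(means) - sigma**2 / 2
    income = rng.lognormal(mean=mu[group], sigma=sigma)
    return names[group], income


groups, income = simulate_incomes(100_000)
print(f"share at or below R350,000: {(income <= 350_000).mean():.3f}")
```

Matching only the group means leaves the within-group spread unconstrained, so in practice `sigma` would need to be calibrated against a second moment or a published quantile.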

| Level | Final Mark (%) | Achievement |
|---|---|---|
| 7 | 80–100% | Outstanding |
| 6 | 70–79% | Meritorious |
| 5 | 60–69% | Substantial |
| 4 | 50–59% | Moderate |
| 3 | 40–49% | Adequate |
| 2 | 30–39% | Elementary |
| 1 | 0–29% | Not Achieved-Fail |
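The achievement-level table maps a final mark to a level from 1 to 7. A minimal sketch of that mapping follows. The `matric_points` helper rests on a hypothetical rule, namely that matric points are the sum of subject levels over the subjects supplied; South African admission point scores are commonly computed this way over six subjects, but the paper's exact rule is not restated here.

```python
# (lower bound of final mark in %, achievement level), from the table above.
LEVEL_BOUNDS = [(80, 7), (70, 6), (60, 5), (50, 4), (40, 3), (30, 2), (0, 1)]


def nsc_level(mark: float) -> int:
    """Map a final mark (0-100%) to its achievement level (1-7)."""
    if not 0 <= mark <= 100:
        raise ValueError("final mark must lie between 0 and 100")
    # Bands are checked from the top; e.g. 79.5 falls below 80 and maps to level 6.
    return next(level for lower, level in LEVEL_BOUNDS if mark >= lower)


def matric_points(marks) -> int:
    """Hypothetical rule: matric points = sum of achievement levels over subjects."""
    return sum(nsc_level(m) for m in marks)


print(matric_points([85, 72, 65, 55, 45, 60]))  # 7+6+5+4+3+5 = 30
```

Under this rule the example student clears the 25-point threshold used to define the treatment regions.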

| R3 vs. R4 | N | Bandwidth (left) | Bandwidth (right) | Estimate | s.e. | p-Value |
|---|---|---|---|---|---|---|
| = 0.00 | 5000 | −0.1307 | 0.1248 | 0.0388 | 0.0196 | 0.1607 |
| | 10,000 | −0.1307 | 0.1248 | 0.0371 | 0.0139 | 0.0718 |
| | 20,000 | −0.1307 | 0.1248 | 0.0371 | 0.0098 | 0.0119 |
| = 0.05 | 5000 | −0.1307 | 0.1248 | 0.0293 | 0.0186 | 0.2276 |
| | 10,000 | −0.1307 | 0.1248 | 0.0295 | 0.0131 | 0.1157 |
| | 20,000 | −0.1307 | 0.1248 | 0.0293 | 0.0093 | 0.0349 |
| = 0.10 | 5000 | −0.1307 | 0.1248 | 0.0169 | 0.0165 | 0.3484 |
| | 10,000 | −0.1307 | 0.1248 | 0.0164 | 0.0117 | 0.2728 |
| | 20,000 | −0.1307 | 0.1248 | 0.0161 | 0.0082 | 0.1631 |
| = 0.15 | 5000 | −0.1307 | 0.1248 | 0.0086 | 0.0146 | 0.4283 |
| | 10,000 | −0.1307 | 0.1248 | 0.0080 | 0.0103 | 0.4161 |
| | 20,000 | −0.1307 | 0.1248 | 0.0082 | 0.0073 | 0.3301 |
| R1 vs. R2 | | | | | | |
| = 0.00 | 5000 | −0.1922 | 0.2859 | −0.0763 | 0.0407 | 0.1061 |
| | 10,000 | −0.1922 | 0.2859 | −0.0790 | 0.0287 | 0.0171 |
| | 20,000 | −0.1922 | 0.2859 | −0.0786 | 0.0203 | 0.0008 |
| = 0.05 | 5000 | −0.1922 | 0.2859 | −0.0680 | 0.0393 | 0.1388 |
| | 10,000 | −0.1922 | 0.2859 | −0.0689 | 0.0276 | 0.0334 |
| | 20,000 | −0.1922 | 0.2859 | −0.0685 | 0.0194 | 0.0023 |
| = 0.10 | 5000 | −0.1922 | 0.2859 | −0.0474 | 0.0373 | 0.2668 |
| | 10,000 | −0.1922 | 0.2859 | −0.0475 | 0.0258 | 0.1139 |
| | 20,000 | −0.1922 | 0.2859 | −0.0468 | 0.0180 | 0.0274 |
| = 0.15 | 5000 | −0.1922 | 0.2859 | −0.0272 | 0.0346 | 0.4550 |
| | 10,000 | −0.1922 | 0.2859 | −0.0275 | 0.0243 | 0.3304 |
| | 20,000 | −0.1922 | 0.2859 | −0.0278 | 0.0171 | 0.1718 |

| R2 vs. R3 | N | Bandwidth (left) | Bandwidth (right) | Estimate | s.e. | p-Value |
|---|---|---|---|---|---|---|
| = 0.00 | 5000 | −0.5097 | 0.5114 | −0.0003 | 0.0040 | 0.6029 |
| | 10,000 | −0.5097 | 0.5114 | −0.0003 | 0.0028 | 0.6055 |
| | 20,000 | −0.5097 | 0.5114 | −0.0004 | 0.0019 | 0.5977 |
| = 0.05 | 5000 | −0.5097 | 0.5114 | −0.0004 | 0.0055 | 0.5924 |
| | 10,000 | −0.5097 | 0.5114 | −0.0004 | 0.0038 | 0.5874 |
| | 20,000 | −0.5097 | 0.5114 | −0.0004 | 0.0027 | 0.5802 |
| = 0.10 | 5000 | −0.5097 | 0.5114 | −0.0007 | 0.0098 | 0.5685 |
| | 10,000 | −0.5097 | 0.5114 | −0.0004 | 0.0068 | 0.5667 |
| | 20,000 | −0.5097 | 0.5114 | −0.0004 | 0.0048 | 0.5695 |
| = 0.15 | 5000 | −0.5097 | 0.5114 | −0.0002 | 0.0156 | 0.5554 |
| | 10,000 | −0.5097 | 0.5114 | −0.0003 | 0.0108 | 0.5637 |
| | 20,000 | −0.5097 | 0.5114 | −0.0003 | 0.0076 | 0.5615 |
| R1 vs. R4 | | | | | | |
| = 0.00 | 5000 | −0.4200 | 0.2970 | −0.0014 | 0.1425 | 0.5046 |
| | 10,000 | −0.4200 | 0.2970 | −0.0017 | 0.0996 | 0.4979 |
| | 20,000 | −0.4200 | 0.2970 | −0.0007 | 0.0699 | 0.4798 |
| = 0.05 | 5000 | −0.4200 | 0.2970 | −0.0033 | 0.1365 | 0.4917 |
| | 10,000 | −0.4200 | 0.2970 | −0.0008 | 0.0960 | 0.4957 |
| | 20,000 | −0.4200 | 0.2970 | 0.0007 | 0.0675 | 0.4890 |
| = 0.10 | 5000 | −0.4200 | 0.2970 | 0.0041 | 0.1261 | 0.4851 |
| | 10,000 | −0.4200 | 0.2970 | −0.0014 | 0.0884 | 0.4938 |
| | 20,000 | −0.4200 | 0.2970 | 0.0044 | 0.0620 | 0.4953 |
| = 0.15 | 5000 | −0.4200 | 0.2970 | 0.0019 | 0.1134 | 0.4928 |
| | 10,000 | −0.4200 | 0.2970 | −0.0005 | 0.0792 | 0.4970 |
| | 20,000 | −0.4200 | 0.2970 | 0.0003 | 0.0558 | 0.4791 |

| R3 vs. R4 | N | Bandwidth (left) | Bandwidth (right) | Estimate | s.e. | p-Value |
|---|---|---|---|---|---|---|
| = 0.00 | 5000 | −0.1307 | 0.1248 | 0.0375 | 0.0067 | 0.0001 |
| | 10,000 | −0.1307 | 0.1248 | 0.0374 | 0.0047 | 0.0000 |
| | 20,000 | −0.1307 | 0.1248 | 0.0371 | 0.0033 | 0.0000 |
| = 0.05 | 5000 | −0.1307 | 0.1248 | 0.0296 | 0.0056 | 0.0002 |
| | 10,000 | −0.1307 | 0.1248 | 0.0292 | 0.0039 | 0.0000 |
| | 20,000 | −0.1307 | 0.1248 | 0.0291 | 0.0028 | 0.0000 |
| = 0.10 | 5000 | −0.1307 | 0.1248 | 0.0163 | 0.0037 | 0.0019 |
| | 10,000 | −0.1307 | 0.1248 | 0.0162 | 0.0026 | 0.0000 |
| | 20,000 | −0.1307 | 0.1248 | 0.0161 | 0.0018 | 0.0000 |
| = 0.15 | 5000 | −0.1307 | 0.1248 | 0.0080 | 0.0022 | 0.0084 |
| | 10,000 | −0.1307 | 0.1248 | 0.0080 | 0.0015 | 0.0001 |
| | 20,000 | −0.1307 | 0.1248 | 0.0080 | 0.0011 | 0.0000 |
| R1 vs. R2 | | | | | | |
| = 0.00 | 5000 | −0.1922 | 0.2859 | −0.0778 | 0.0362 | 0.0843 |
| | 10,000 | −0.1922 | 0.2859 | −0.0796 | 0.0256 | 0.0111 |
| | 20,000 | −0.1922 | 0.2859 | −0.0782 | 0.0181 | 0.0003 |
| = 0.05 | 5000 | −0.1922 | 0.2859 | −0.0682 | 0.0341 | 0.1078 |
| | 10,000 | −0.1922 | 0.2859 | −0.0692 | 0.0241 | 0.0200 |
| | 20,000 | −0.1922 | 0.2859 | −0.0685 | 0.0169 | 0.0012 |
| = 0.10 | 5000 | −0.1922 | 0.2859 | −0.0469 | 0.0298 | 0.1961 |
| | 10,000 | −0.1922 | 0.2859 | −0.0473 | 0.0210 | 0.0675 |
| | 20,000 | −0.1922 | 0.2859 | −0.0468 | 0.0147 | 0.0101 |
| = 0.15 | 5000 | −0.1922 | 0.2859 | −0.0273 | 0.0249 | 0.3434 |
| | 10,000 | −0.1922 | 0.2859 | −0.0275 | 0.0176 | 0.2041 |
| | 20,000 | −0.1922 | 0.2859 | −0.0278 | 0.0126 | 0.0746 |

| R2 vs. R3 | N | Bandwidth (left) | Bandwidth (right) | Estimate | s.e. | p-Value |
|---|---|---|---|---|---|---|
| = 0.00 | 5000 | −0.5097 | 0.5114 | −0.0004 | 0.0031 | 0.5879 |
| | 10,000 | −0.5097 | 0.5114 | −0.0004 | 0.0021 | 0.6032 |
| | 20,000 | −0.5097 | 0.5114 | −0.0004 | 0.0015 | 0.5758 |
| = 0.05 | 5000 | −0.5097 | 0.5114 | −0.0005 | 0.0041 | 0.5817 |
| | 10,000 | −0.5097 | 0.5114 | −0.0004 | 0.0029 | 0.5836 |
| | 20,000 | −0.5097 | 0.5114 | −0.0004 | 0.0020 | 0.5763 |
| = 0.10 | 5000 | −0.5097 | 0.5114 | −0.0004 | 0.0067 | 0.5835 |
| | 10,000 | −0.5097 | 0.5114 | −0.0005 | 0.0046 | 0.5589 |
| | 20,000 | −0.5097 | 0.5114 | −0.0005 | 0.0033 | 0.5558 |
| = 0.15 | 5000 | −0.5097 | 0.5114 | −0.0006 | 0.0093 | 0.5558 |
| | 10,000 | −0.5097 | 0.5114 | −0.0008 | 0.0065 | 0.5368 |
| | 20,000 | −0.5097 | 0.5114 | −0.0001 | 0.0045 | 0.5427 |
| R1 vs. R4 | | | | | | |
| = 0.00 | 5000 | −0.4200 | 0.2970 | −0.0014 | 0.0842 | 0.5050 |
| | 10,000 | −0.4200 | 0.2970 | −0.0016 | 0.0591 | 0.4956 |
| | 20,000 | −0.4200 | 0.2970 | −0.0007 | 0.0414 | 0.5179 |
| = 0.05 | 5000 | −0.4200 | 0.2970 | −0.0009 | 0.0787 | 0.4877 |
| | 10,000 | −0.4200 | 0.2970 | −0.0003 | 0.0552 | 0.5116 |
| | 20,000 | −0.4200 | 0.2970 | −0.0006 | 0.0389 | 0.5114 |
| = 0.10 | 5000 | −0.4200 | 0.2970 | 0.0011 | 0.0668 | 0.4903 |
| | 10,000 | −0.4200 | 0.2970 | 0.0005 | 0.0470 | 0.4963 |
| | 20,000 | −0.4200 | 0.2970 | 0.0035 | 0.0330 | 0.5022 |
| = 0.15 | 5000 | −0.4200 | 0.2970 | 0.0037 | 0.0537 | 0.5147 |
| | 10,000 | −0.4200 | 0.2970 | −0.0005 | 0.0377 | 0.4982 |
| | 20,000 | −0.4200 | 0.2970 | 0.0011 | 0.0265 | 0.4886 |

| Causal Effect | Bandwidth | Test Statistic | p-Value |
|---|---|---|---|
| R3 vs. R4 | (−0.1307, 0.1248) | 0.4809 | 0.6306 |
| R1 vs. R2 | (−0.1922, 0.2859) | 0.2300 | 0.8181 |
| R2 vs. R3 | (−0.5097, 0.5114) | 0.9122 | 0.3617 |
| R1 vs. R4 | (−0.4200, 0.2970) | 0.3571 | 0.721 |
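The p-values in the preceding four-row table appear consistent with two-sided p-values from a standard-normal reference distribution, p = 2(1 − Φ(|T|)). A minimal check of that reading, which is an assumption on our part rather than a statement of the authors' procedure; `two_sided_p` is a hypothetical name:

```python
import math


def two_sided_p(t: float) -> float:
    """Two-sided p-value for a statistic assumed to be standard normal under H0."""
    # erfc(|t| / sqrt(2)) equals 2 * (1 - Phi(|t|)), Phi being the standard normal CDF.
    return math.erfc(abs(t) / math.sqrt(2))


reported = {  # statistic and p-value as printed in the table
    "R3 vs. R4": (0.4809, 0.6306),
    "R1 vs. R2": (0.2300, 0.8181),
    "R2 vs. R3": (0.9122, 0.3617),
    "R1 vs. R4": (0.3571, 0.721),
}
for name, (t, p) in reported.items():
    print(f"{name}: computed p = {two_sided_p(t):.4f}, reported p = {p}")
```

All four p-values are well above conventional significance levels, so under this reading none of the comparisons shows evidence against the null hypothesis being tested.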

| Treatment Region | Window |
|---|---|
| R3 vs. R4 | (−0.0037, 0.0029) |
| R1 vs. R2 | (−0.0455, 0.0541) |
| R2 vs. R3 | (−0.095, 0.1336) |
| R1 vs. R4 | (−0.0973, 0.1237) |

| Treatment Region | DM Test Statistic (T) | p-Value |
|---|---|---|
| R3 vs. R4 | 0.383 | 0.000 |
| R1 vs. R2 | 0.138 | 0.000 |
| R2 vs. R3 | −0.003 | 0.103 |
| R1 vs. R4 | −0.078 | 0.132 |
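The window and DM tables point to local randomization inference, the approach implemented in the rdlocrand package cited in the references, where "DM" presumably denotes a difference-in-means statistic. A minimal sketch of the underlying idea, assuming treatment is as-if randomly assigned inside the window: compute the difference in means, then compare it with its distribution under random re-shuffling of the treatment labels. This is a Fisherian permutation test written from scratch, not rdlocrand's implementation, and `diff_in_means_perm_test` is a hypothetical name.

```python
import numpy as np


def diff_in_means_perm_test(y, treated, n_perm=2000, seed=0):
    """Difference in means and its randomization p-value within a window.

    Treatment labels are re-shuffled as if assigned completely at random
    inside the window (the local randomization assumption).
    """
    y = np.asarray(y, dtype=float)
    treated = np.asarray(treated, dtype=bool)
    observed = y[treated].mean() - y[~treated].mean()
    rng = np.random.default_rng(seed)
    count = 0
    for _ in range(n_perm):
        perm = rng.permutation(treated)  # same number of treated units, new labels
        stat = y[perm].mean() - y[~perm].mean()
        # Small tolerance so floating-point noise does not break exact ties.
        if abs(stat) >= abs(observed) - 1e-12:
            count += 1
    # Add-one correction keeps the Monte Carlo p-value strictly positive.
    p_value = (count + 1) / (n_perm + 1)
    return observed, p_value


# Hypothetical outcomes inside a window: 1 = eligible, 0 = not eligible.
y = [1, 1, 0, 1, 1, 1, 0, 0, 0, 1, 0, 0]
treated = [True] * 6 + [False] * 6
print(diff_in_means_perm_test(y, treated))
```

Because the p-value comes from re-randomizing labels rather than from a large-sample approximation, this style of test remains usable in the very narrow windows reported above, where few observations fall on each side.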

| Causal Effect | Bandwidth (left) | Bandwidth (right) | Estimate | s.e. | p-Value |
|---|---|---|---|---|---|
| Causal Effect 1 | −0.400 | 0.550 | 0.403 | 0.104 | 0.000 |
| Causal Effect 2 | −0.200 | 0.480 | −0.035 | 0.016 | 0.025 |
| Causal Effect 3 | −24.0 | 18.0 | 0.607 | 0.028 | 0.000 |
| Causal Effect 4 | −23.0 | 13.0 | 0.118 | 0.018 | 0.000 |

| Causal Effect | Bandwidth (left) | Bandwidth (right) | Test Statistic | p-Value |
|---|---|---|---|---|
| Causal Effect 1 | −0.400 | 0.550 | −1.110 | 0.267 |
| Causal Effect 2 | −0.200 | 0.480 | −0.701 | 0.483 |
| Causal Effect 3 | −24.0 | 18.0 | 0.921 | 0.357 |
| Causal Effect 4 | −23.0 | 13.0 | 1.505 | 0.133 |

| Causal Effect | Minimum Window (Bandwidth) | DM Test Statistic (T) | p-Value |
|---|---|---|---|
| Causal Effect 1 | (−0.4, 0.42) | 0.347 | 0.001 |
| Causal Effect 2 | (−0.2, 0.19) | 0.005 | 0.035 |
| Causal Effect 3 | (−2, 1) | 0.665 | 0.000 |
| Causal Effect 4 | (−4, 5) | 0.310 | 0.000 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Whata, A.; Chimedza, C. Credibility of Causal Estimates from Regression Discontinuity Designs with Multiple Assignment Variables. Stats 2021, 4, 893-915. https://doi.org/10.3390/stats4040052
