1. Introduction

Judicial prediction is the ability to predict what a judge will decide on a given case. Is it possible to develop efficient predictive models to automate such predictions? This question has long driven initiatives at the crossroads of Artificial Intelligence and Law, in particular through the development of predictive models that align computable features of the case available to the judge prior to the judgment with computable features of the judge’s decision on the case. In this line of work, this paper presents a study towards the development of such predictive models, taking advantage of Machine Learning and Natural Language Processing techniques. Since legal vocabulary is notoriously ambiguous, we first detail important concepts that will be used thereafter.

A case begins with a complaint requesting a remedy for harm suffered due to the wrongdoing of the defendant. The features of the case are the circumstances existing prior to the filing of the complaint, that is, a set of facts sufficient to justify a right to file a complaint.

A claim is a request made by a plaintiff against a defendant, seeking legal remedy. Claims can be grouped into different categories, depending on the rule applicable and the type of remedy sought (e.g., injunctive relief, cease and desist order, damages).

A judgment summarizes, in a single document, the different rulings made by a judge about a certain case. Judgments therefore contain many features that can be extracted (e.g., type of court, names of the parties, claims made by the parties, judges’ decisions on the claims). A complaint can contain many different claims, seeking different types of remedy. Therefore, in general, a judgment concerns different types of claims.

The decision is a ruling made on a particular claim. We further consider that the judge’s decision on a claim is either to accept or to reject it. Note that a judgment must be distinguished from the judge’s decision on a specific claim.

In recent years, the methodology of judicial prediction has relied mostly on neural networks, which may be seen as the most flexible models for the classification and prediction of legal decisions when large datasets are available. Chalkidis and Androutsopoulos [1] use a Bi-LSTM network operating on words for the task of extracting contractual clauses. Wei et al. [2] have shown the superiority of convolutional networks over Support Vector Machines for the classification of texts on large specific datasets. The use of a Bi-GRU has become a standard approach, see [3]. Performance of 92% was obtained on the identification of criminal charges and on judicial outcomes from Chinese criminal decisions [4]. These types of approaches can also be used successfully on judgments in civil matters [5]. Bi-LSTM networks coupled with a representation of the judgment in the form of a tensor achieve performance around 93% on a corpus of 1.8 million Chinese criminal judgments [6]. This work has been successfully replicated on a body of judgments of the European Court of Human Rights in English, with F-measure performance of 80% for Bi-GRU networks with attention and Hierarchical BERT [7]. On the same corpus, the development of a specific lexical embedding, ECHR2Vec, makes it possible to reach performance around 86% [8]. Similar performance of 79% is obtained with TF-IDF (Term Frequency-Inverse Document Frequency) in the Portuguese language [9]. Although neural networks achieve very good performance, we defend in this paper the use of compression machine learning models based on word representations such as TF-IDF, with different variants corresponding to different weighting schemes. These approaches are particularly suited to small- to medium-size annotated datasets.

As we stressed, claims can be grouped into specific categories depending on their nature, e.g., several claims may refer to the notion of child care; such categories are defined a priori by jurists for the analysis of a corpus of judgments of interest. In addition, a judgment most of the time contains only a single claim of a given category (a corpus description and a descriptive analysis are provided in the next section). In this context, we are interested in the definition of predictive models able to predict the judge’s decision expressed in a judgment for a specific category of claims. Stated otherwise, knowing that a judgment contains a single claim of a given category, the model has to answer the following question by analyzing the judgment (a textual document): has the claim been accepted or rejected? Developing efficient predictors of the outcome of specific categories of claims is of major interest for the analysis of large corpora of judgments. For instance, it paves the way for large-scale statistical analyses of correlations between aspects of the case (e.g., parties, location of the court) and outcomes for specific categories of claims. Such analyses are important for theoretical studies on law enforcement and for the future development of models able to predict the outcome of cases. Note that traditional text classification techniques obtain good performance in predicting whether a judgment contains a claim of a specific category, see [10]. Obtaining relevant statistics about judges’ decisions on a given category of claim would therefore be based on (i) applying the aforementioned model to identify judgments containing a claim of the category of interest, and (ii) applying the type of models studied in this paper to determine the outcome of the previously identified judgments.

The methodology of judicial prediction therefore depends on the ability of a model to predict the judge’s decision on a claim belonging to a given category, without knowing the precise location of the statement of the judge’s decision inside a judgment. In this context, extracting the result of a claim can be formulated as a binary text classification task. To tackle this task, we consider in this paper the supervised machine learning paradigm, assuming that a set of annotated judgments, i.e., a labeled dataset, is provided for each category of claims of interest. We therefore aim to use the labeled dataset to train an algorithm to recognize whether the request has been rejected or accepted. In this setting, the paper presents various models and empirically compares them on a corpus of French judgments. We propose a statistical analysis of the impact of the various technical aspects generally involved in text classification, namely the combination of a representation of the judgments with a classification algorithm. This analysis sheds light on certain configurations that make it possible to determine judges’ decisions on a claim. We also propose the generalized Gini-PLS algorithm, which is an extension of the simple Gini-PLS model [11]. This generalized Gini-PLS adds a regularization parameter that makes it possible to better adapt the regression to the information in the distribution tails while attenuating, as in the simple Gini-PLS, the influence exerted by outliers. We also propose a new regression (LOGIT-Gini-PLS), which is better suited to explaining a target variable when the latter is binary. These two models have never been applied to text classification.

The paper is organized as follows:

Section 2 presents characteristics of the corpus used for this study and motivates the modeling of the task adopted in this paper (i.e., decision outcome prediction as a binary text classification).

Section 3 presents the different TF-IDF vectorizations of the judgments.

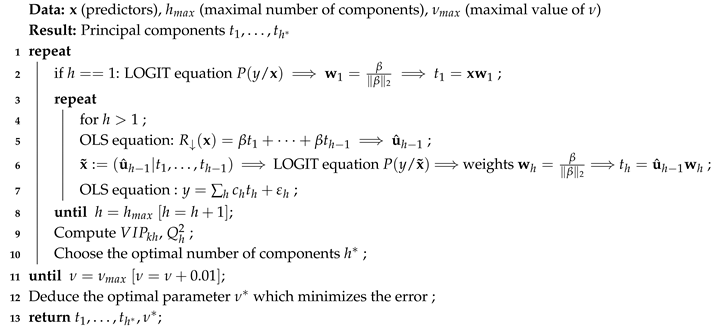

Section 4 presents the proposed generalized (LOGIT) Gini-PLS algorithms for text classification.

Section 5 presents our experiments and results.

Section 6 concludes our study.

2. Datasets and Modeling Motivations

We assume in this paper that predicting judges’ decisions may be studied through the lens of binary text classification models. This positioning is based on discussions with jurists and motivated by analyses performed on labeled datasets of French judgments. Six datasets built from a corpus of French judgments are considered in our study, one for each of the six categories of claims introduced in Table 1.

A judgment belongs to a category when it contains a claim of that category, e.g., all the judgments in the ACPA category contain a claim related to civil fine for abuse of process.

Table 2 presents sections of a judgment of that category [ACPA]. The sections refer to the mentions of the claim and to the corresponding decision, respectively.

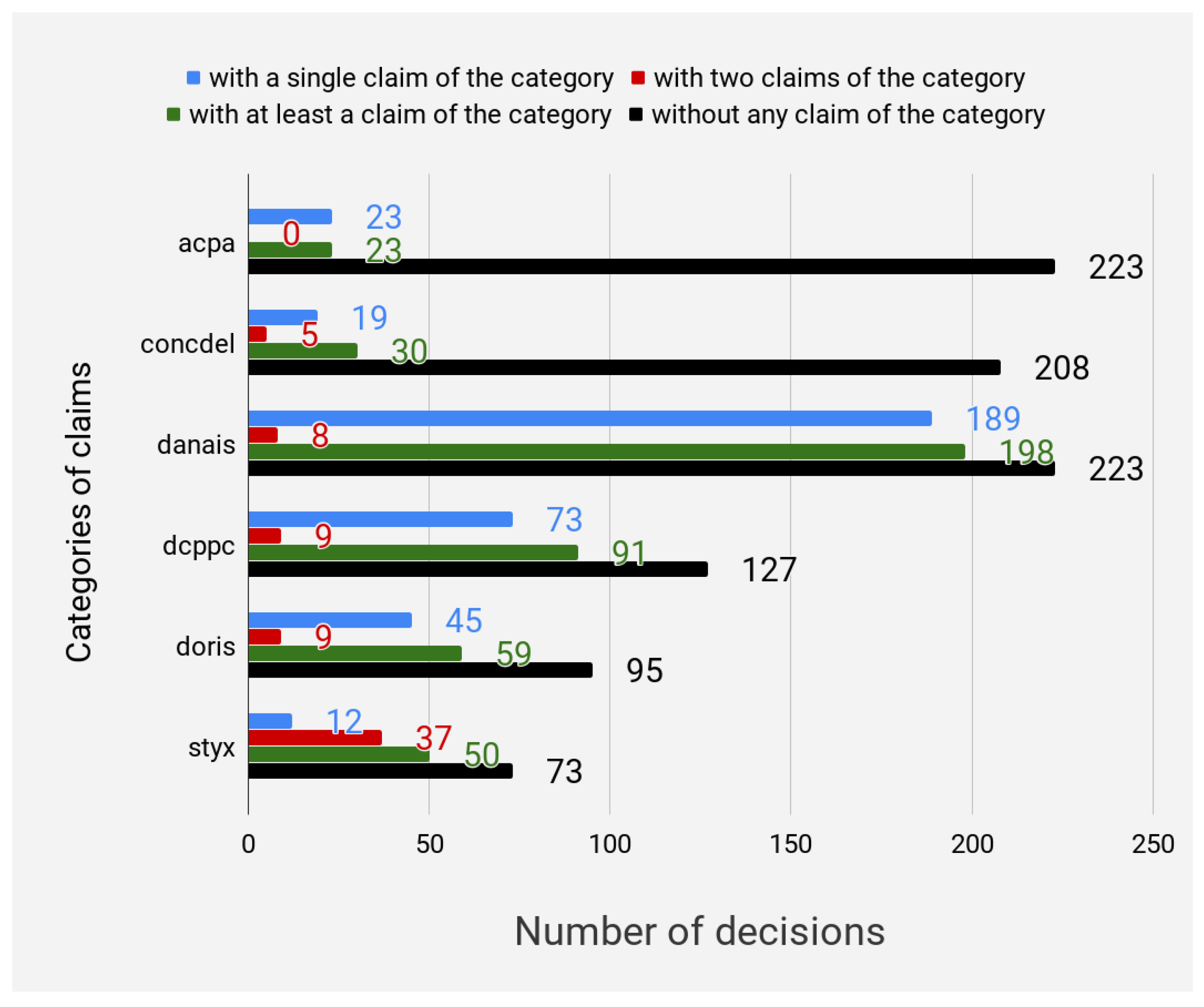

Figure 1 presents additional details about the datasets, in particular the number of claims of a category found in the judgments.

Observation 1. Judgments most often contain only a single claim of a specific category.

On the one hand, the statistics on the labeled data show that the judgments contain for the most part a single claim of a category (or at least one claim of the category). The percentage of judgments having only one claim of a category is, respectively, 100% for ACPA, 63.33% for CONCDEL, 95.45% for DANAIS, 80.22% for DCPPC, and 76.21% for DORIS. The STYX category (damages on article 700 CPC) is an exception: most of its judgments contain two claims instead. This exception can be explained by the fact that each party generally makes this type of request, because it relates to the reimbursement of legal costs.

On the other hand, few judgments contain two or more claims. When they do, classifying any single claim becomes difficult, since the judgment may contain vocabulary and sentences related to the other claims (even though they belong to the same category). This may show up as noise or outliers in the dataset of each claim category. Gini estimators are therefore well suited to handling such outlying observations.

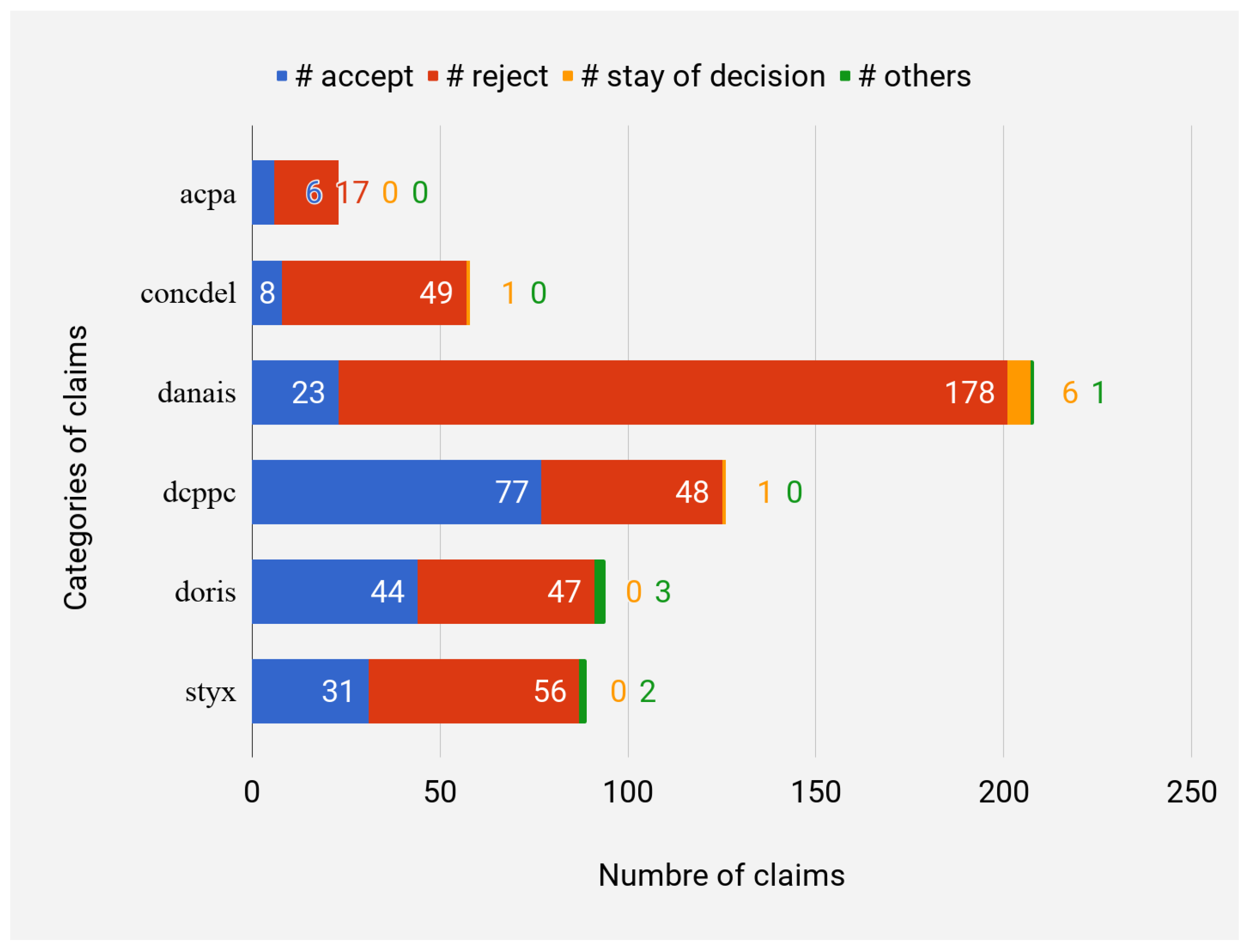

Observation 2. Most judges’ decisions are binary: accept or reject.

Figure 2 highlights the fact that the outcome of a given claim is most often accept or reject, and that other forms of results are very rare. These observations motivate the development of a binary classifier for predicting the outcome of a claim belonging to a specific category.

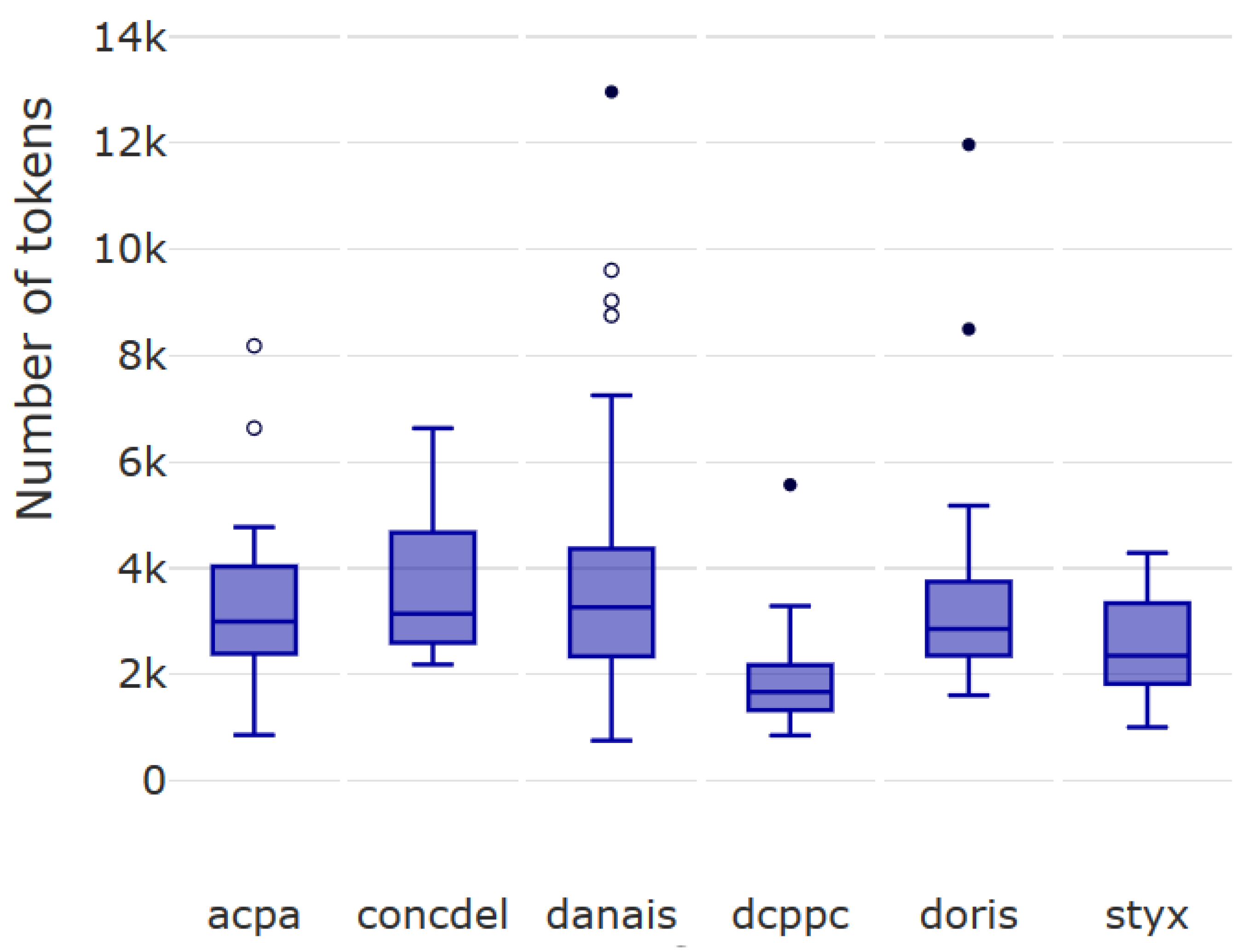

Observation 3. The algorithm must be able to deal with judgments containing a large number of tokens.

Figure 3 illustrates the distribution of the judgments’ lengths (number of tokens, i.e., words). We note that the texts are long in comparison to those usually considered by state-of-the-art text classification approaches. As we will discuss later, this particularity will hamper the use of some efficient existing approaches such as PLS algorithms for compression.

Observation 4. In some claim categories, a strong imbalance may exist between the outcomes accept/reject.

Table 3 presents the final statistics of the dataset used for both training and evaluating the predictive models considered in this study. As can be seen, four claim categories out of six exhibit strongly imbalanced decisions.

3. Texts Classification

Text classification allows judgments to be organized in predefined groups. This technique has long received wide attention. Two technical choices mainly influence the performance of the classification: the representation of the texts and the choice of the classification algorithm. In the following, the predicted variable is denoted y, the predictors are denoted x, the learning base comprises the observations of the sample, and C represents claim categories.

Considering a vocabulary $V = \{t_1, \dots, t_{|V|}\}$, we further assume that every judgment $d$ is represented as a TF-IDF (Term Frequency-Inverse Document Frequency) vector embedding [12] $\vec{d} = (w(t_1, d), \dots, w(t_{|V|}, d))$, where each dimension $i$ refers to word $t_i$ and $w(t_i, d)$ is the weight of $t_i$ in $d$, defined as the normalized product of a global weight $g(t_i)$ depending on the training corpus and a local weight $l(t_i, d)$ stressing the importance of $t_i$ in judgment $d$:

$$w(t_i, d) = l(t_i, d) \times g(t_i) \times nf(d),$$

with $nf(d)$ a normalization factor.

Table 4 summarizes the notations used in the paper. The global weight is computed following one of the methods presented in Table 5. The local weight is computed from the frequency of occurrences of the word in the judgment using one of the methods of Table 6.
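The weighting described above can be illustrated with a minimal sketch. It assumes one particular choice among the possible schemes, namely raw term frequency as the local weight, a smoothed inverse document frequency as the global weight, and the inverse Euclidean norm as the normalization factor; the function name `tfidf_vectors`, the variable names `g` and `nf`, and the toy corpus are hypothetical and do not come from the paper or its tables.

```python
import math
from collections import Counter


def tfidf_vectors(corpus):
    """Return the vocabulary and one normalized weight vector per tokenized judgment.

    Assumed weighting choices (illustrative only): raw term frequency as the
    local weight, smoothed IDF as the global weight, and the inverse Euclidean
    norm as the normalization factor.
    """
    vocabulary = sorted({token for doc in corpus for token in doc})
    n_docs = len(corpus)
    # Global weight: depends only on the training corpus.
    doc_freq = Counter(token for doc in corpus for token in set(doc))
    g = {t: math.log((1 + n_docs) / (1 + doc_freq[t])) + 1.0 for t in vocabulary}

    vectors = []
    for doc in corpus:
        tf = Counter(doc)  # local weight: frequency of the word in this judgment
        raw = [tf[t] * g[t] for t in vocabulary]
        norm = math.sqrt(sum(v * v for v in raw))
        nf = 1.0 / norm if norm > 0 else 0.0  # normalization factor
        vectors.append([v * nf for v in raw])
    return vocabulary, vectors


# Hypothetical toy corpus of tokenized judgments (not taken from the datasets).
corpus = [
    ["demande", "rejette", "amende"],
    ["demande", "condamne", "amende", "amende"],
]
vocabulary, vectors = tfidf_vectors(corpus)
```

Each judgment is mapped to a vector with one coordinate per vocabulary word, and words absent from a judgment receive a zero weight, which is why such representations are sparse for long vocabularies.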

The vector representation of texts generally results in high-dimensional vectors whose coordinates are mostly zero. Consequently, dimension reduction (compression) techniques, such as PLS regressions, make it possible to obtain vectors more relevant to classification tasks.
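To make the idea of compression concrete, the following sketch computes the first component of a standard univariate PLS regression, whose weight vector is proportional to the covariance between each centered predictor and the centered target. It is not the Gini-PLS algorithm proposed in this paper; the function name `first_pls_component` and the toy data are hypothetical.

```python
import math


def first_pls_component(X, y):
    """Compute the first PLS1 weight vector and score vector.

    X is a list of rows (documents x terms), y a list of numeric targets.
    Both are centered first; the weight vector is proportional to X^T y.
    """
    n, p = len(X), len(X[0])
    col_means = [sum(row[j] for row in X) / n for j in range(p)]
    y_mean = sum(y) / n
    Xc = [[row[j] - col_means[j] for j in range(p)] for row in X]
    yc = [v - y_mean for v in y]

    # Covariance direction between each predictor and the target.
    w = [sum(Xc[i][j] * yc[i] for i in range(n)) for j in range(p)]
    norm = math.sqrt(sum(v * v for v in w))
    if norm == 0:
        raise ValueError("predictors are uncorrelated with the target")
    w = [v / norm for v in w]

    # Scores: one number per document, i.e., the compressed representation.
    t = [sum(Xc[i][j] * w[j] for j in range(p)) for i in range(n)]
    return w, t


# Hypothetical 4 x 3 document-term matrix and binary outcomes (1 = accept).
X = [[1.0, 0.0, 2.0], [0.0, 1.0, 2.0], [1.0, 0.0, 2.0], [0.0, 1.0, 2.0]]
y = [1, 0, 1, 0]
w, t = first_pls_component(X, y)
```

In this toy example, the third term is constant across documents and receives a zero weight, so the three-dimensional vectors are summarized by a single score that separates the two outcomes.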

5. Experiments and Results

We discuss the performance of various popular algorithms and the impact of data quantity and imbalance, heuristics, and explicit restriction of judgments to sections (regions) related to the claim category, as well as their ability to ignore other requests in the judgment. These experiments also aim to compare the effectiveness of LOGIT-Gini-PLS with other machine learning techniques. As in Im et al. [33], we compare different combinations of classification algorithms and term weighting methods (used for text representation). The Python code of the Gini-PLS algorithms is available at https://github.com/tagnyngompe/taj-ginipls. These combinations represent 600 experimental configurations including:

12 classification algorithms: Naive Bayes (NB), Support Vector Machine (SVM), K-nearest neighbors (KNN), linear and quadratic discriminant analysis (LDA/QDA), Tree, fastText, Naive Bayes SVM (NBSVM), generalized Gini-PLS (Gini-PLS), LOGIT-PLS [34], generalized LOGIT-Gini-PLS (LogitGiniPLS), and the usual PLS algorithm (StandardPLS);

11 global weighting schemes (cf. Table 5), including the mean of the global metrics;

6 local weighting schemes (cf. Table 6), including the mean of the local metrics.

5.1. Assessment Protocol

Two assessment metrics are used: precision and $F_1$-measure. To take into account the imbalance between the classes, the macro-average is preferred (as suggested by a reviewer, the Matthews correlation coefficient (MCC) could also be used to handle data imbalance). It is the aggregation of the individual contribution of each class. It is calculated from the macro-averages of the precision ($P$) and of the recall ($R$), which are in turn calculated from the average numbers of true positives ($TP$), false positives ($FP$), and false negatives ($FN$), following [35].
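As an illustration of macro-averaging, the sketch below uses one common convention, which is an assumption here and may differ in detail from the definitions of [35]: precision and recall are computed per class, averaged with equal class weights, and then combined by the harmonic mean. The function name `macro_scores` and the confusion counts are hypothetical and are not results from this study.

```python
def macro_scores(counts):
    """Macro-averaged precision, recall and F-measure.

    `counts` maps each class label to a (TP, FP, FN) triple. Per-class
    precision and recall are averaged with equal class weights, then combined
    into an F-measure by the harmonic mean.
    """
    def ratio(num, den):
        return num / den if den else 0.0  # convention: 0 when undefined

    precisions = [ratio(tp, tp + fp) for tp, fp, fn in counts.values()]
    recalls = [ratio(tp, tp + fn) for tp, fp, fn in counts.values()]
    p_macro = sum(precisions) / len(counts)
    r_macro = sum(recalls) / len(counts)
    f_macro = ratio(2 * p_macro * r_macro, p_macro + r_macro)
    return p_macro, r_macro, f_macro


# Hypothetical confusion counts for the two outcomes (not results from the paper).
counts = {"accept": (8, 2, 4), "reject": (36, 4, 2)}
p_macro, r_macro, f_macro = macro_scores(counts)
```

Because each class contributes equally to the averages, a poor score on the minority outcome lowers the macro-average even when the majority outcome is predicted well.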

The efficiency of algorithms often depends on hyper-parameters for which optimal values must be determined. The scikit-learn [36] library implements two strategies for finding these values: RandomSearch and GridSearch. Although the RandomSearch method is fast, it is non-deterministic, and the values it finds give a less accurate prediction than the default values. The same holds for the GridSearch method, which is moreover very slow and therefore impractical in view of the large number of configurations to be evaluated. Consequently, the values used for the experiments are the default values (Table 7).
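The difference between the two search strategies can be sketched in plain Python. This is not the scikit-learn implementation (there, the corresponding classes are `GridSearchCV` and `RandomizedSearchCV`); the grid, the scoring function `toy_score`, and the helper names are hypothetical stand-ins for a cross-validated evaluation.

```python
import itertools
import random


def grid_search(score, grid):
    """Evaluate every combination of the grid (exhaustive, deterministic)."""
    names = list(grid)
    candidates = [dict(zip(names, values))
                  for values in itertools.product(*(grid[n] for n in names))]
    return max(candidates, key=score), len(candidates)


def random_search(score, grid, n_iter, seed):
    """Evaluate n_iter randomly drawn combinations; the seed fixes the draw."""
    rng = random.Random(seed)
    candidates = [{name: rng.choice(values) for name, values in grid.items()}
                  for _ in range(n_iter)]
    return max(candidates, key=score), len(candidates)


# Hypothetical hyper-parameter grid and toy scoring function (a stand-in for a
# cross-validated F-measure); neither comes from the paper.
grid = {"C": [0.01, 0.1, 1.0, 10.0], "max_depth": [2, 4, 8, 16]}


def toy_score(params):
    return -abs(params["C"] - 1.0) - abs(params["max_depth"] - 8) / 8


best_grid, n_grid = grid_search(toy_score, grid)
best_random, n_random = random_search(toy_score, grid, n_iter=5, seed=0)
```

The exhaustive search evaluates all 16 combinations of this grid, whereas the random search evaluates only 5 and may miss the best one; the cost of the exhaustive search grows multiplicatively with each additional hyper-parameter, which is what makes it slow when many configurations must be assessed.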

5.2. Classification on the Basis of the Whole Judgment

By representing the entire judgment using various vector representations, the algorithms are compared with the representations that are optimal for them. We note from the results of Table 8 that the trees are on average better for all the categories, even if on average the $F_1$-measure is limited to 0.668. The results of the PLS extensions are not very far from those of the trees, with differences in $F_1$-measure around 0.1 (if we choose the right representation scheme).

The average $F_1$ scores of the NBSVM and fastText algorithms generally do not exceed 0.5, despite these algorithms being specially designed for texts. They are very sensitive to the imbalance of the data between the outcomes (more rejections than acceptances). Furthermore, it is more difficult to detect the acceptance of the requests. Indeed, these algorithms classify all the test data with the majority label, i.e., rejection, and therefore hardly detect any accepted request. The case of the categories doris and dcppc for the NBSVM tends to demonstrate the strong sensitivity of these algorithms to negative cases, since the $F_1$-measure of “reject” is always higher than that of “accept” (Table 9).
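A small worked example shows why a classifier that always outputs the majority label cannot exceed an average score of 0.5 over the two outcomes. The test set below is hypothetical (90 rejections and 10 acceptances, not the proportions of any dataset in this study), and `per_class_f_measure` is an illustrative helper.

```python
def per_class_f_measure(y_true, y_pred, label):
    """F-measure of one class, computed from paired label lists."""
    tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
    fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
    fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
    if tp == 0:
        return 0.0  # no correct prediction for this class
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Hypothetical imbalanced test set: 90 rejections, 10 acceptances.
y_true = ["reject"] * 90 + ["accept"] * 10
# A degenerate classifier that always outputs the majority label.
y_pred = ["reject"] * 100

f_reject = per_class_f_measure(y_true, y_pred, "reject")
f_accept = per_class_f_measure(y_true, y_pred, "accept")
mean_f = (f_reject + f_accept) / 2
```

The score of “reject” is high because every rejection is recovered, while the score of “accept” is zero because no acceptance is ever predicted; since a per-class score cannot exceed 1, the mean over the two outcomes stays below 0.5.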

PLS algorithms systematically exceed the performance ($F_1$-measure) of fastText and NBSVM by 10 to 20 points. This tends to demonstrate the effectiveness of PLS techniques in their role of dimension reduction. Gini-PLS algorithms do not perform any better than conventional PLS algorithms. Presumably, the dimension reduction still retains too much noise in the data. This is confirmed by the results of the trees, which remain very mixed: their $F_1$-measure (0.668) barely exceeds that of Logit-PLS (0.648). It therefore seems necessary to proceed with zoning in the judgment to better identify relevant information and thereby reduce the noise.

5.3. Classification Based on Sections of Judgments Including the Vocabulary of the Category

Since the judgments are related to several categories of claim, we experiment with restrictions of the textual content based on different types of regions in judgments. The first types of regions are sections of the judgment: the description of facts and proceedings (Facts and Proceedings), the judges’ reasoning to justify their decisions (Opinion), and the summary of the judges’ decisions (Holding). The sections are identified using a text labeling method [37]. Other types of regions are statements extracted from the sections related to the category of claim. They express either a claim, a result, a previous result (result_a), or the judges’ reasoning about the category (context). These statements are extracted using regular expressions and are used in the restriction only if they contain a key-phrase of the category. The region-vector representation-algorithm combinations are compared in Table 10. The accuracy rate increases significantly with the reduction of the judgment to regions, except for the doris category. The best restriction combines regions including the vocabulary of the category in the Facts and Proceedings section (request and previous result), in the Opinion section (context), and in the Holding section (result).
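The restriction of a judgment to statements containing a key-phrase of the category can be sketched as follows. The key-phrases, the sentence-splitting rule, and the excerpt are hypothetical simplifications written for this example; they are not the regular expressions or the lexicon used in the experiments.

```python
import re

# Hypothetical key-phrases for a claim category (illustrative, not the authors' lexicon).
KEY_PHRASES = ["amende civile", "procédure abusive"]

# Split on sentence-ending punctuation followed by whitespace (a simplification).
SENTENCE_SPLIT = re.compile(r"(?<=[.;!?])\s+")
KEY_PATTERN = re.compile("|".join(re.escape(p) for p in KEY_PHRASES), re.IGNORECASE)


def restrict_to_category(text):
    """Keep only the statements that mention a key-phrase of the category."""
    statements = [s.strip() for s in SENTENCE_SPLIT.split(text) if s.strip()]
    return [s for s in statements if KEY_PATTERN.search(s)]


# Hypothetical excerpt written for this example.
judgment = (
    "Le demandeur sollicite la condamnation de la défenderesse à une amende civile. "
    "Il sollicite également des dommages et intérêts. "
    "La cour rejette la demande fondée sur la procédure abusive."
)
kept = restrict_to_category(judgment)
```

Only the first and third statements are kept, so the vocabulary of the other request (damages) no longer enters the vector representation of the judgment.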

It is noteworthy that restricting the training of the model to the Facts and Proceedings section corresponds to the prediction of the judge’s outcome. When additional information, such as the Opinion and Holding sections, is employed to train the model, the task reduces to an identification or an extraction of the judge’s outcome.

After reducing the size of the judgment, the trees provide excellent results, followed very closely by our GiniPLS and LogitGiniPLS algorithms. For example, in the dcppc category (see Table 5), Tree performance (0.985) slightly exceeds that of the LogitPLS (0.94) and standard PLS (0.934) algorithms. In the concdel category, Tree performance (0.798) is still closely followed by the LogitGiniPLS (0.703) and standard PLS (0.657) algorithms.

The most interesting case concerns neighborhood disturbances (doris category). These judgments often involve multiple pieces of information that are sometimes difficult to synthesize, even for humans. The argumentation presented in doris relates to several issues (problems of views, sunshine, trees, etc.), so that the factual elements conditioning the identification of the judicial outcomes are sometimes complex. This information can be either under-represented or over-represented depending on the vectorization scheme. Our GiniPLS algorithm (like our LogitGiniPLS) seems to be particularly suitable for this category of request. The $F_1$-measures obtained in this category amount to 0.806 (for GiniPLS and LogitGiniPLS) and 0.772 for StandardPLS, while decision trees are neither among the relevant algorithms for this category of request nor among the three best algorithms. This result reinforces the idea that our GiniPLS algorithms can sometimes compete with decision trees, which act as a benchmark in the literature dealing with small datasets. It would make it possible in the future to consider including our GiniPLS algorithms in ensemble methods, in order to broaden the spectrum of algorithms that are robust to outliers and at the same time perform data compression.