Bayesian Prediction of Order Statistics Based on k-Record Values from a Generalized Exponential Distribution

Abstract

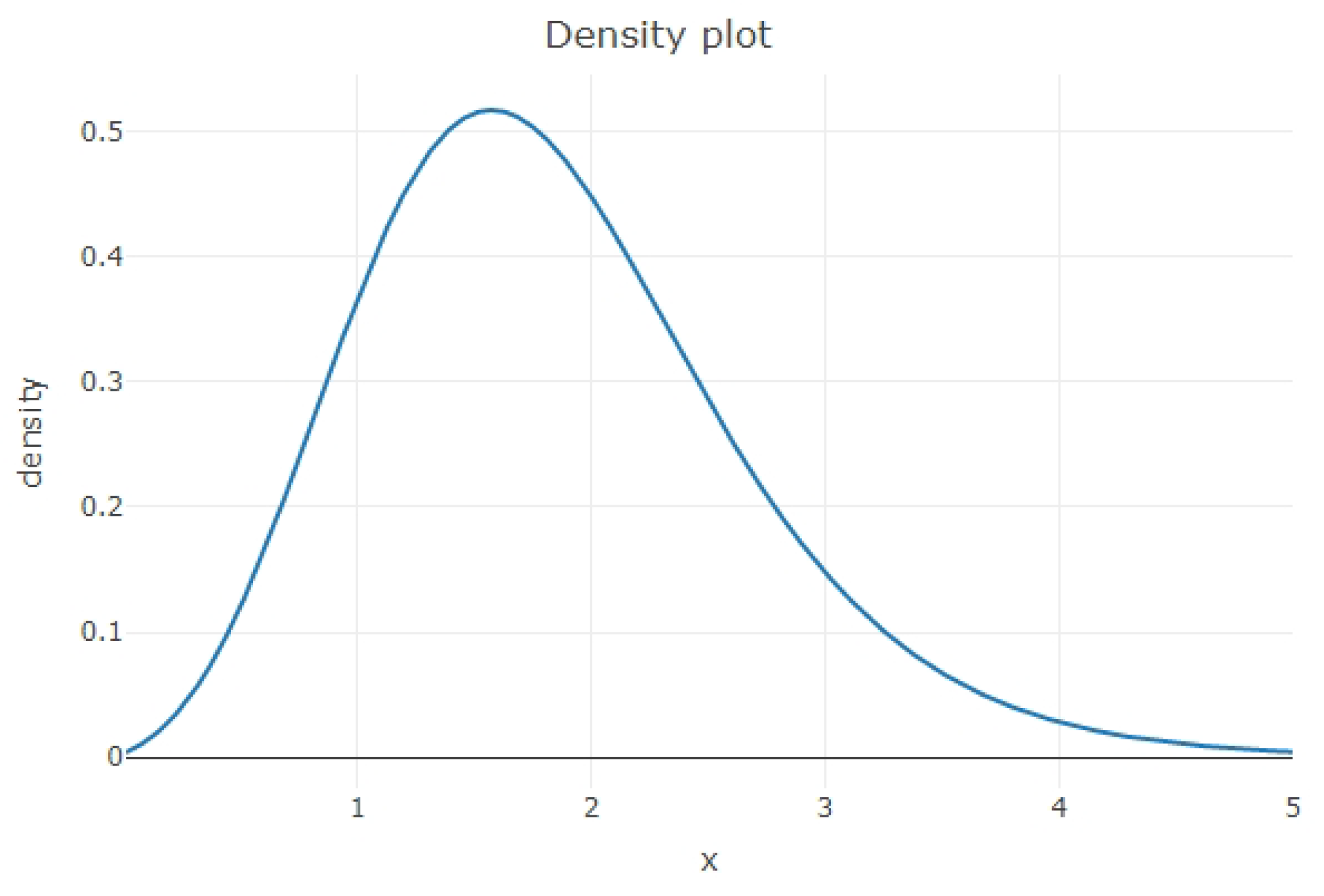

1. Introduction

2. Prior Information and Predictive Distributions

3. Prediction Intervals of Order Statistics

4. Point Predictors of Order Statistics

5. An Illustrative Example

| i | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|
| | 0.9672 | 0.7750 | 0.6944 | | | | |
| | 1.0726 | 0.9672 | 0.8246 | 0.775 | | | |
| | 1.0726 | 1.0416 | 1.0292 | 0.9672 | 0.8246 | | |
| | 5.2824 | 3.4658 | 2.7404 | 1.0726 | 1.0416 | 1.0292 | 0.9672 |

6. Conclusions

Funding

Acknowledgments

Conflicts of Interest


| m | j | Equi-Tailed (L) | HPD (L) | Point Predictor | |
|---|---|---|---|---|---|
| 5 | 1 | (0.0002, 0.8441)(0.8439) | (0, 0.4078)(0.4078) | 0.1979 | 0.3539 |
| 5 | 4 | (0.1518, 2.9883)(2.9883) | (0.0071, 2.5511)(2.544) | 1.2169 | 1.0381 |
| 5 | 5 | (0.4213, 5.2704)(4.8491) | (0.1649, 4.6581)(4.4933) | 2.1937 | 2.1237 |
| 10 | 1 | (0.0001, 0.4914)(0.4913) | (0, 0.152)(0.152) | 0.1059 | 0.2134 |
| 10 | 4 | (0.0229, 1.2191)(1.1962) | (0.0114, 0.6015)(0.5901) | 0.4488 | 0.4485 |
| 10 | 9 | (0.5101, 3.7272)(3.2171) | (0.3605, 3.4754)(3.1149) | 1.843 | 1.1235 |
| 10 | 10 | (0.8827, 5.9623)(5.0795) | (0.6198, 5.4447)(4.825) | 2.831 | 2.1681 |
| 20 | 1 | (0, 0.3007)(0.3007) | (1.0004, 1.9989)(0.9985) | 0.0592 | 0.1422 |
| 20 | 4 | (0.0035, 0.6687)(0.6652) | (0.72, 2.0887)(1.3688) | 0.1095 | 0.2697 |
| 20 | 10 | (0.0849, 1.3732)(1.2883) | (0.0194, 1.2548)(1.2354) | 0.6277 | 0.4575 |
| 20 | 18 | (0.7677, 3.5322)(2.7645) | (0.6768, 3.4091)(2.7322) | 2.0063 | 0.9099 |
| 20 | 20 | (1.4513, 6.6548)(5.2035) | (1.1921, 6.1853)(4.9932) | 3.4943 | 2.1922 |
| 5 | 1 | (0.0004, 0.7382)(0.738) | (0, 0.6065)(0.6065) | 0.1664 | 0.9503 |
| 5 | 4 | (0.1544, 2.8678)(2.7134) | (0.021, 2.508)(2.487) | 1.1481 | 4.3966 |
| 5 | 5 | (0.4156, 5.1632)(4.7475) | (0.1711, 4.5431)(4.3719) | 2.1188 | 7.3214 |
| 10 | 1 | (0.0004, 0.4006)(0.4005) | (0, 0.1592)(0.1592) | 0.0833 | 0.5601 |
| 10 | 4 | (0.0265, 1.0913)(1.0648) | (0.004, 0.5882)(0.5842) | 0.4021 | 1.8838 |
| 10 | 9 | (0.5153, 3.605)(3.0897) | (0.367, 3.3418)(2.9748) | 1.7666 | 5.9481 |
| 10 | 10 | (0.8751, 5.8549)(4.9798) | (0.6159, 5.3185)(4.7026) | 2.7516 | 8.4322 |
| 20 | 1 | (0, 0.2271)(0.2271) | (0, 0.0171)(0.0171) | 0.043 | 0.4344 |
| 20 | 4 | (0.0048, 0.5561)(0.5513) | (0, 0.5804)(0.5804) | 0.1776 | 1.001 |
| 20 | 10 | (0.0966, 1.2327)(1.1362) | (0, 1.831)(1.831) | 0.5684 | 2.52 |
| 20 | 18 | (0.782, 3.3997)(2.6178) | (0.686, 3.2627)(2.5767) | 1.9287 | 6.0765 |
| 20 | 20 | (1.4425, 6.5473)(5.1048) | (1.1834, 6.0553)(4.8719) | 3.4133 | 9.5048 |
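Each interval cell in the table above has the form `(lower, upper)(L)`. The following is a minimal sketch for sanity-checking such cells, assuming that `L` denotes the interval length `upper - lower` rounded to four decimals. The helper names `parse_cell` and `length_matches` are hypothetical and not part of the paper.

```python
import re

# Pattern for a cell such as "(0.0002, 0.8441)(0.8439)".
CELL = re.compile(r"\(\s*([\d.]+)\s*,\s*([\d.]+)\s*\)\s*\(\s*([\d.]+)\s*\)")


def parse_cell(cell):
    """Split an interval cell into (lower, upper, reported_length)."""
    match = CELL.fullmatch(cell.strip())
    if match is None:
        raise ValueError(f"unrecognised interval cell: {cell!r}")
    lower, upper, length = (float(g) for g in match.groups())
    return lower, upper, length


def length_matches(cell, tol=5e-4):
    """Check that the reported length agrees with upper - lower.

    Assumption: L is the interval length; tol absorbs four-decimal rounding.
    """
    lower, upper, length = parse_cell(cell)
    return abs((upper - lower) - length) <= tol


# Two cells copied from the table above.
print(length_matches("(0.0002, 0.8441)(0.8439)"))  # consistent cell
print(length_matches("(0.1518, 2.9883)(2.9883)"))  # reported length repeats the upper limit
```

Under this assumption the second cell fails the check, since 2.9883 − 0.1518 = 2.8365, so the value reported there is worth comparing against the published table.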

| m | j | Equi-Tailed (L) | HPD (L) | Point Predictor | |
|---|---|---|---|---|---|
| 5 | 1 | (0.0002, 0.8366)(0.8364) | (0, 0.4079)(0.4079) | 0.1587 | 0.4678 |
| 5 | 4 | (0.1642, 2.9848)(2.8206) | (0.0177, 2.6214)(2.6037) | 1.2222 | 1.3447 |
| 5 | 5 | (0.4395, 5.2719)(4.8324) | (0.1854, 4.6633)(4.4779) | 2.2024 | 2.4408 |
| 10 | 1 | (0.0001, 0.4832)(0.4831) | (0, 0.1464)(0.1464) | 0.1053 | 0.2956 |
| 10 | 4 | (0.0274, 1.2083)(1.181) | (0.0073, 0.657)(0.6497) | 0.4552 | 0.6576 |
| 10 | 9 | (0.5335, 3.7237)(3.1902) | (0.3848, 3.472)(3.0872) | 1.8502 | 1.4587 |
| 10 | 10 | (0.9072, 5.9637)(5.0565) | (0.6453, 5.4435)(4.7982) | 2.8392 | 2.5194 |
| 20 | 1 | (0, 0.2931)(0.2931) | (0, 0.0271)(0.0271) | 0.0579 | 0.1985 |
| 20 | 4 | (0.0047, 0.6569)(0.6522) | (0, 0.491)(0.491) | 0.2146 | 0.4057 |
| 20 | 10 | (0.0966, 1.3597)(1.2631) | (0, 0.9271)(0.9271) | 0.6309 | 0.694 |
| 20 | 18 | (0.7977, 3.5255)(2.7278) | (0.706, 3.4001)(2.6941) | 2.0138 | 1.2505 |
| 20 | 20 | (1.4795, 6.6562)(5.1767) | (1.2203, 6.1813)(4.961) | 3.5031 | 2.5501 |
| 5 | 1 | (0.0003, 0.7386)(0.7383) | (0, 0.3248)(0.3248) | 0.1682 | 0.9574 |
| 5 | 4 | (0.1633, 2.8722)(2.7089) | (0.03, 2.5178)(2.4878) | 1.1558 | 4.3035 |
| 5 | 5 | (0.429, 5.1705)(4.7415) | (0.1853, 4.553)(4.3677) | 2.1285 | 6.8663 |
| 10 | 1 | (0.0001, 0.3996)(0.3994) | (0, 0.0837)(0.0837) | 0.0841 | 0.5643 |
| 10 | 4 | (0.0299, 1.0902)(1.0603) | (0.0021, 0.6624)(0.6603) | 0.4062 | 1.8799 |
| 10 | 9 | (0.532, 3.6094)(3.0774) | (0.3844, 3.3467)(2.9623) | 1.7762 | 5.6689 |
| 10 | 10 | (0.8932, 5.8623)(4.9691) | (0.6347, 5.3249)(4.6903) | 2.7622 | 7.7939 |
| 20 | 1 | (0.0001, 0.2256)(0.2255) | (0, 0.0085)(0.0085) | 0.0434 | 0.3443 |
| 20 | 4 | (0.0058, 0.5532)(0.5475) | (0, 0.3071)(0.3071) | 0.1796 | 1.0142 |
| 20 | 10 | (0.1053, 1.2299)(1.1246) | (0, 0.9707)(0.9707) | 0.574 | 2.4084 |
| 20 | 18 | (0.8032, 3.4023)(2.599) | (0.707, 3.2642)(2.5571) | 1.9387 | 5.6668 |
| 20 | 20 | (1.4634, 6.5547)(5.0913) | (1.2044, 6.06)(4.8556) | 3.4244 | 9.0046 |

| m | j | Equi-Tailed (L) | HPD (L) | Point Predictor | |
|---|---|---|---|---|---|
| 5 | 1 | (0.0004, 0.8245)(0.8241) | (0, 0.4076)(0.4076) | 0.1983 | 0.5943 |
| 5 | 4 | (0.1848, 2.9793)(2.7945) | (0.0426, 2.6312)(2.5887) | 1.2306 | 1.6946 |
| 5 | 5 | (0.4685, 5.2742)(4.8057) | (0.2178, 4.6699)(4.452) | 2.2139 | 2.8466 |
| 10 | 1 | (0.0004, 0.4698)(0.4697) | (0, 0.137)(0.137) | 0.1048 | 0.3808 |
| 10 | 4 | (0.0356, 1.1906)(1.1551) | (0.0032, 0.7704)(0.7672) | 0.4582 | 0.8932 |
| 10 | 9 | (0.5709, 3.7181)(3.1472) | (0.4236, 3.4657)(3.0422) | 1.8608 | 1.8736 |
| 10 | 10 | (0.9457, 5.9661)(5.0203) | (0.6855, 5.4411)(4.7556) | 2.8521 | 2.9438 |
| 20 | 1 | (0.0001, 0.2805)(0.2804) | (0, 0.0218)(0.0218) | 0.0572 | 0.2559 |
| 20 | 4 | (0.0072, 0.6373)(0.6302) | (0, 0.4761)(0.4601) | 0.2146 | 0.5685 |
| 20 | 10 | (0.1172, 1.3372)(1.22) | (0, 0.9402)(0.9402) | 0.6358 | 0.9711 |
| 20 | 18 | (0.8455, 3.5147)(2.6692) | (0.7525, 3.3851)(2.6327) | 2.0257 | 1.6483 |
| 20 | 20 | (1.5235, 6.6586)(5.1351) | (1.2645, 6.1749)(4.9103) | 3.5167 | 3.0199 |
| 5 | 1 | (0.0004, 0.7391)(0.7387) | (0, 0.3311)(0.3311) | 0.1712 | 0.9406 |
| 5 | 4 | (0.1789, 2.8797)(2.7008) | (0.0457, 2.5329)(2.4873) | 1.169 | 3.9559 |
| 5 | 5 | (0.4517, 5.183)(4.7312) | (0.2092, 4.569)(4.3599) | 2.1449 | 6.3233 |
| 10 | 1 | (0.0001, 0.3976)(0.3975) | (0, 0.0825)(0.0825) | 0.0856 | 0.5619 |
| 10 | 4 | (0.0362, 1.0882)(1.052) | (0.0003, 0.784)(0.7836) | 0.4133 | 1.8005 |
| 10 | 9 | (0.5605, 3.6169)(3.0564) | (0.4138, 3.3547)(2.9409) | 1.7923 | 5.1354 |
| 10 | 10 | (0.9235, 5.8747)(4.9512) | (0.6661, 5.3355)(4.6694) | 2.78 | 7.1274 |
| 20 | 1 | (0.0001, 0.2228)(0.2227) | (0, 0.0076)(0.0076) | 0.044 | 0.3505 |
| 20 | 4 | (0.0078, 0.5481)(0.5403) | (0, 0.306)(0.306) | 0.1831 | 1.001 |
| 20 | 10 | (0.1211, 1.2248)(1.1037) | (0, 0.9725)(0.9725) | 0.5834 | 2.3601 |
| 20 | 18 | (0.8392, 3.4065)(2.5673) | (0.7426, 3.2664)(2.5238) | 1.9556 | 5.3681 |
| 20 | 20 | (1.4982, 6.5671)(5.069) | (1.2396, 6.0678)(4.8282) | 3.4431 | 8.1053 |

| m | j | Equi-Tailed (L) | HPD (L) | Point Predictor | |
|---|---|---|---|---|---|
| 5 | 1 | (0.0001, 0.8527)(0.8526) | (0, 0.4075)(0.4075) | 0.1977 | 0.4131 |
| 5 | 4 | (0.1376, 2.9923)(2.8548) | (0.0097, 2.4327)(2.423) | 1.2106 | 0.9424 |
| 5 | 5 | (0.3998, 5.2686)(4.8689) | (0.1401, 4.6506)(4.5105) | 2.1864 | 1.9324 |
| 10 | 1 | (0.0001, 0.501)(0.5009) | (0, 0.1583)(0.1583) | 0.1064 | 0.3042 |
| 10 | 4 | (0.0184, 1.2316)(1.2132) | (0.0174, 0.5405)(0.5231) | 0.4512 | 0.4798 |
| 10 | 9 | (0.4826, 3.7313)(3.2488) | (0.3315, 3.4788)(3.1473) | 1.8341 | 1.0576 |
| 10 | 10 | (0.8534, 5.9605)(5.1071) | (0.5891, 5.4459)(4.8568) | 2.8212 | 1.9971 |
| 20 | 1 | (0, 0.3096)(0.3096) | (0, 0.0349)(0.0349) | 0.0595 | 0.1999 |
| 20 | 4 | (0.0025, 0.6823)(0.6798) | (0, 0.5055)(0.5055) | 0.2141 | 0.2011 |
| 20 | 10 | (0.0723, 1.3888)(1.3165) | (0, 0.9072)(0.9072) | 0.6243 | 0.2287 |
| 20 | 18 | (0.7322, 3.54)(2.8078) | (0.6422, 3.4193)(2.7771) | 1.9972 | 0.8487 |
| 20 | 20 | (1.4174, 6.653)(5.2356) | (1.1582, 6.1899)(5.0317) | 3.4839 | 1.9905 |
| 5 | 1 | (0.0001, 0.7377)(0.7376) | (0, 0.317)(0.317) | 0.1645 | 1.092 |
| 5 | 4 | (0.1445, 2.8628)(2.7183) | (0.0105, 2.4955)(2.485) | 1.1392 | 4.5601 |
| 5 | 5 | (0.4006, 5.1548)(4.7542) | (0.1552, 4.5314)(4.3762) | 2.1077 | 7.4088 |
| 10 | 1 | (0.0001, 0.4018)(0.4017) | (0, 0.0849)(0.0849) | 0.0823 | 0.5972 |
| 10 | 4 | (0.023, 1.0925)(1.0694) | (0.0051, 0.5453)(0.5402) | 0.3975 | 1.9586 |
| 10 | 9 | (0.4964, 3.5999)(3.1035) | (0.3474, 3.336)(2.9886) | 1.7557 | 6.4913 |
| 10 | 10 | (0.8546, 5.8465)(4.9919) | (0.5947, 5.3111)(4.7165) | 2.7395 | 8.9099 |
| 20 | 1 | (0, 0.2288)(0.2288) | (0, 0.0097)(0.0097) | 0.0426 | 0.3672 |
| 20 | 4 | (0.0038, 0.5591)(0.5553) | (0, 0.3072)(0.3072) | 0.1754 | 1.5641 |
| 20 | 10 | (0.0873, 1.2357)(1.1485) | (0, 0.9673)(0.9673) | 0.5622 | 2.5221 |
| 20 | 18 | (0.7579, 3.3968)(2.6389) | (0.6621, 3.2609)(2.5987) | 1.9173 | 6.318 |
| 20 | 20 | (1.4187, 6.5389)(5.1202) | (1.1594, 6.0499)(4.8905) | 3.4007 | 10.2724 |

© 2019 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Vidović, Z. Bayesian Prediction of Order Statistics Based on k-Record Values from a Generalized Exponential Distribution. Stats 2019, 2, 447-456. https://doi.org/10.3390/stats2040031
