Simulation-Based Evaluation of Incident Commander (IC) Competencies: A Multivariate Analysis of Certification Outcomes in South Korea

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants and Exam Procedure

- Goshiwon Fire: A small dormitory characterized by narrow, crowded spaces, complicating both rescue operations and hose deployment. Originally, Goshiwon facilities provided tiny, private rooms intended for students studying intensively for national exams. However, recently, these dormitories have evolved into affordable housing options primarily for low-income individuals. Dense smoke quickly accumulated due to poor ventilation, significantly increasing firefighting difficulty.

- Karaoke Room Fire: A layout of multiple compartmentalized rooms presented challenges in navigation, search, and rescue. Flammable interior materials produced thick toxic smoke, intensifying suppression difficulties.

- Residential Villa Fire: A multi-story building scenario with fire initially on a lower floor and quickly spreading upward via internal stairways, cutting off escape routes and complicating victim extraction and suppression tactics.

- Apartment Fire: A high-density residential building where a mid-level fire rapidly spread to adjacent apartments, creating multi-room fires that complicated evacuation, rescue operations, and firefighting strategy. Stairwell congestion and high fuel loads posed additional operational challenges.

- Construction Site Fire: A partially constructed building introducing hazards such as structural instability, scaffolding obstructions, limited access, and absence of fixed water supply points. The presence of flammable construction materials and the dynamic nature of the scenario required rapid tactical adaptation.

2.2. Performance Measures and Scoring

2.3. Statistical Analysis

2.3.1. Group Comparisons (Pass vs. Fail)

2.3.2. Competency–Outcome Correlation Analysis

2.3.3. Logistic Regression Model

2.3.4. Random Forest Classification and Feature Importance

2.3.5. Principal Component Analysis (PCA)

2.4. Scenario Impact Analysis

3. Results

3.1. Sample & Overall Pass Rates

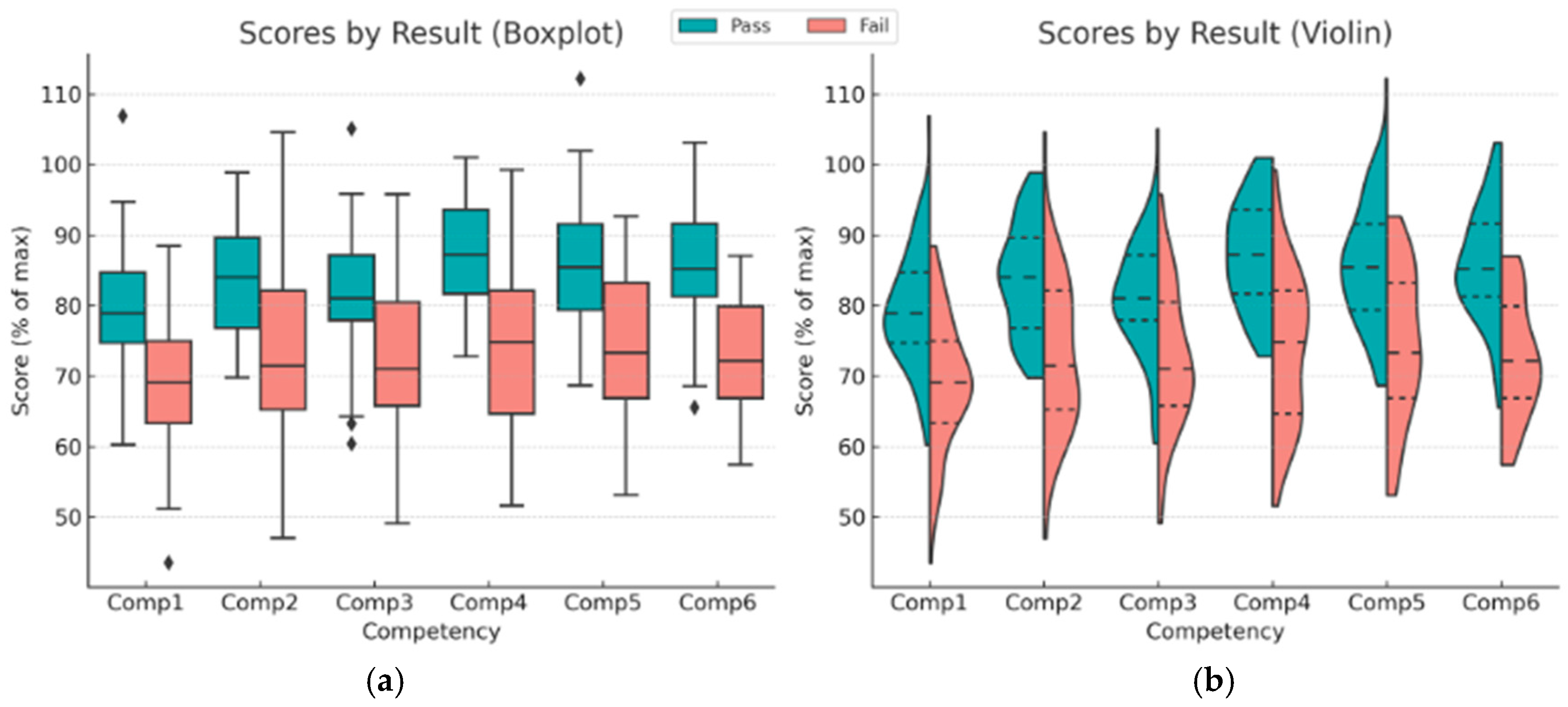

3.2. Competency Score Differences (Pass vs. Fail)

3.2.1. Higher-Order Competencies

- Crisis Management & Leadership (Competency 6): Successful candidates averaged about 85.4% of the available points in this area versus 71.2% for unsuccessful candidates, a gap of ~14.2 percentage points (95% CI [9%, 19%]). In raw-score terms, successful candidates scored ~5.7 points higher (out of 40) on average. This was the largest gap observed among all competencies and corresponds to a large effect size (Cohen’s d ≈ 1.3), underlining the practical significance of the difference.

- Progress Management (Competency 4): Successful candidates averaged ~86.8% versus ~72.7% for unsuccessful candidates (a difference of ~14.0 percentage points, or ~4.9 raw points out of 35).
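
The arithmetic behind these figures can be checked with a short Python sketch. It converts the reported percentage-point gaps into raw points using the category maxima quoted above (40 and 35) and shows one common way to compute Cohen's d, using the pooled standard deviation. The function names and the small score lists are hypothetical, since individual candidate scores are not reproduced here, and the pooled-SD variant is an assumption about how the effect size was obtained.

```python
from math import sqrt
from statistics import mean, variance


def raw_point_gap(pct_gap: float, max_points: float) -> float:
    """Convert a percentage-point gap into raw points for one category."""
    return pct_gap / 100.0 * max_points


def cohens_d(group_a: list, group_b: list) -> float:
    """Cohen's d with the pooled sample standard deviation (assumed variant)."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_var = (
        (n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)
    ) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / sqrt(pooled_var)


# Reported gaps and category maxima taken from the text above.
crisis_gap = raw_point_gap(14.2, 40)    # Competency 6, about 5.7 raw points
progress_gap = raw_point_gap(14.0, 35)  # Competency 4, about 4.9 raw points

# If d = gap / pooled SD, the reported values imply this pooled SD
# (in percentage points). This is a back-of-envelope assumption.
implied_sd = 14.2 / 1.3

# Hypothetical percentage scores, for illustration only (not study data).
passed = [90.0, 85.0, 80.0, 88.0]
failed = [75.0, 70.0, 65.0, 72.0]
d_example = cohens_d(passed, failed)

print(round(crisis_gap, 2), round(progress_gap, 2), round(implied_sd, 1))
```

Under the same pooled-SD assumption, a 14.2-point gap with d ≈ 1.3 would correspond to a pooled standard deviation of roughly 11 percentage points.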

3.2.2. Sub-Competency Differences

- Several Crisis Management & Leadership sub-competencies showed very large differences. For instance, on sub-competency “6-3” (a crisis leadership task, e.g., related to operational execution as a leader), successful candidates scored about 19.7 percentage points higher on average than unsuccessful candidates. Similarly, “6-1” (responding to sudden crisis situations) showed a gap of ~19.3%. These are among the largest disparities in the entire exam, indicating that strong leadership under crisis conditions is a key hallmark of those who passed.

- Multiple Progress Management sub-competencies also exhibited large gaps in favor of passing candidates. For example, “4-3” (related to prioritization and reporting of actions) showed a difference of ~17.3%, “4-5” (managing tactical priorities) ~16.6%, and “4-2” (implementing measures to improve/worsen situations) ~16.4%. These results suggest that the ability to manage the progress of the incident (e.g., adjusting tactics and priorities appropriately) clearly separated those who passed from those who failed.

- Other notable gaps were observed in Communication sub-competency “5-5”, which appears to correspond to efficient communication, where successful candidates scored ~14.5% higher on average, and in Response Activities sub-competency “3-8” (possibly documenting the tactical situation board), which showed a gap of about 15.5%.

3.3. Key Predictors of Success

3.3.1. Correlation Analysis

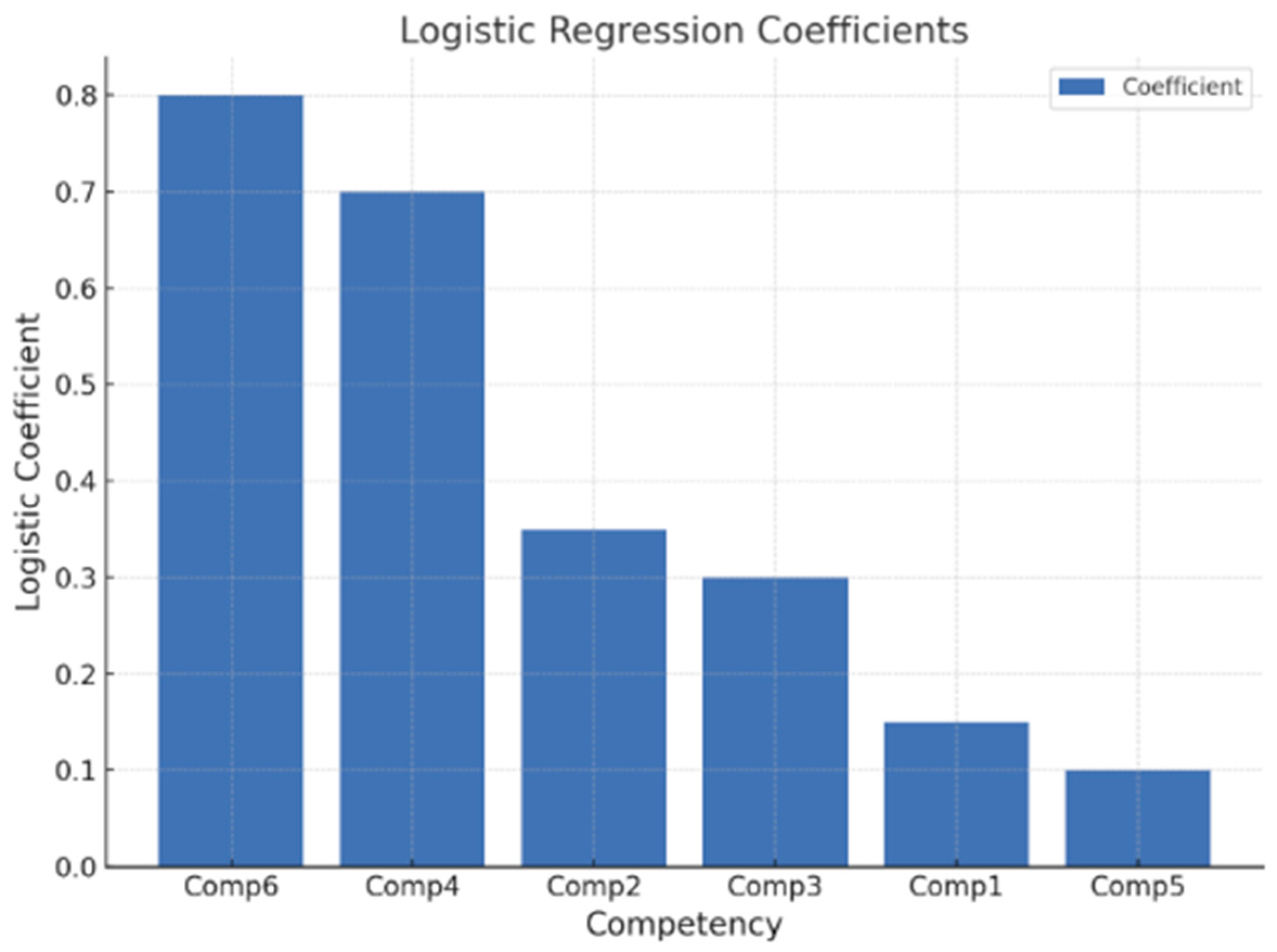

3.3.2. Logistic Regression Analysis

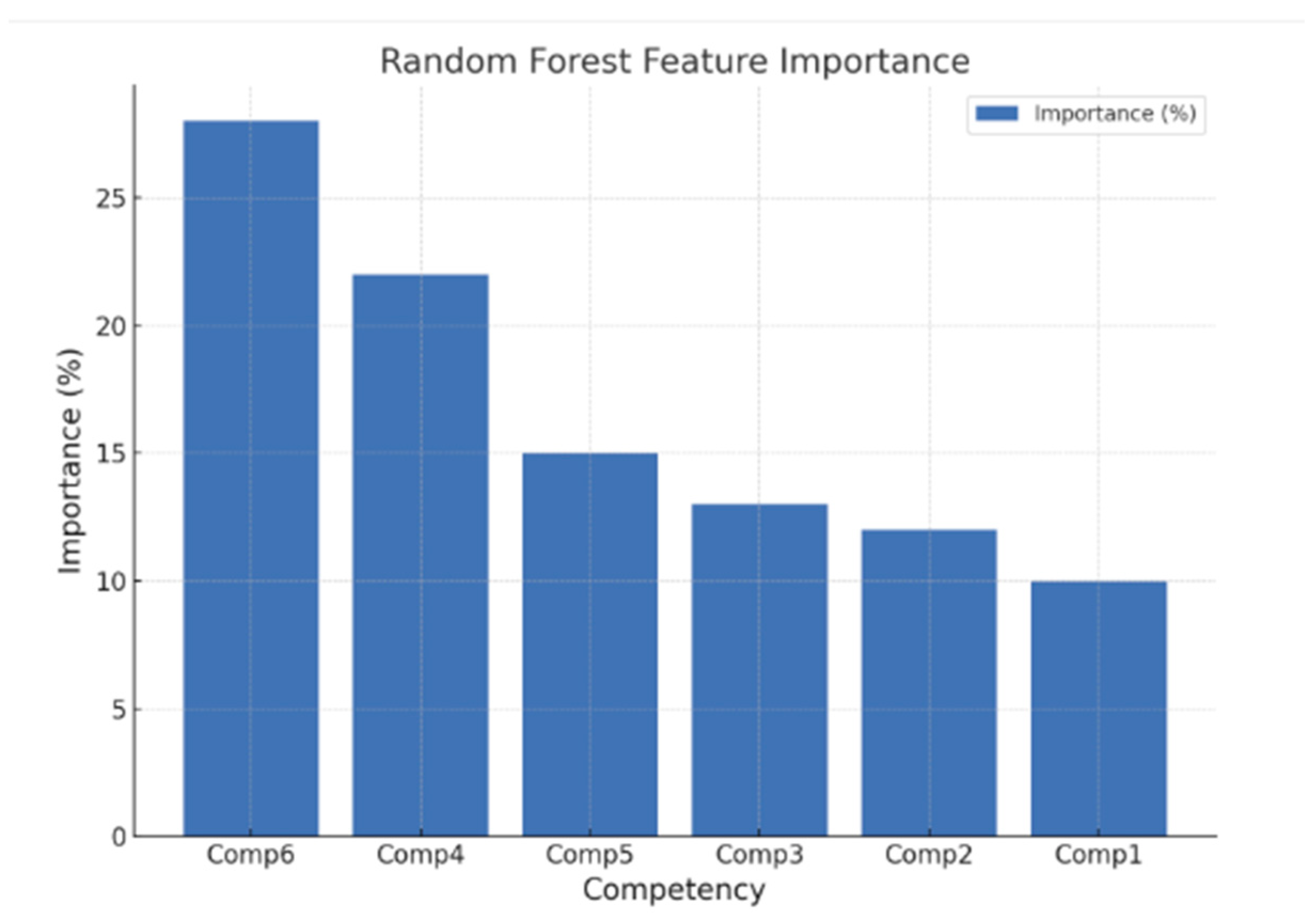

3.3.3. Random Forest Analysis

3.3.4. Pattern Discovery via PCA

3.3.5. Impact of the Disaster Scenario Environment

4. Discussion

4.1. Implications of Pass Rates Across Cohorts and Scenarios

4.2. Competency Performance Gaps Between Successful and Unsuccessful Candidates

4.3. Key Factors in Certification Success

5. Conclusions

5.1. Theoretical Contribution

5.2. Practical Implications

5.3. Limitations and Future Research Directions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Assessment Indicators for Intermediate Incident Commanders

Assessment Indicators: 6 Evaluation Categories and 35 Behavioral Indicators

- Practical: Must score 160 or more out of 200, and must not receive a ‘Low’ rating from two or more evaluators on any of the 7 key behavioral indicators.

- Interview: Combined average of internal and external evaluators; only those with ‘Low’ ratings in 20% or less of the criteria are considere

| Evaluation Category (6) | Points (200) | Behavioral Indicators |
|---|---|---|
| 1. Situation Assessment | 28 pts | Collection of dispatch information (3); Instruction by first-arriving unit leader (5); Declaration of command by IC (5); Initial situation assessment (10); Identification of key information (5) |
| 2. Determination of Command Post Location | 28 pts | Assessing appropriateness of first-arriving unit’s response (3); Assessing site hazards (5); Designating personnel entry/traffic control points (5); Identifying and adjusting site access route (10); Establishing rescue base location (5) |
| 3. Response Activities | 28 pts | Resource allocation and coordination (10); Setting and controlling fire operation zones (5); Establishing fire hydrant supply system (5); Organizing situation by operational stages (5); Coordination with unit commanders at site (5); Issuing urgent orders (5); Recording operational situation (5); Operation of emergency response plans (3) |
| 4. Tactical Situation Management | 40 pts | Identification of situation developments (10); Monitoring fire expansion and implementing containment (5); Submitting mid-operation reports (5); Disseminating situation updates to internal units (5); Recording situation reports (5); Directing personnel evacuation (10) |
| 5. Communication and Coordination | 28 pts | Maintaining cooperative systems (5); Maintaining reporting structure (5); Presiding over tactical meeting and summarizing judgment (5); Coordinating with related agencies (5); Effective exchange of command intent (8) |
| 6. Crisis Management and Leadership | 40 pts | Response to unexpected crisis situations (10); Stress management (5); Motivating personnel and demonstrating leadership (5); Achieving search & rescue objectives (10); Ensuring safety management during operations (10); Determining termination and demobilization plans (5) |
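
One reading of the practical pass rule stated in this appendix can be sketched in Python. The sketch assumes a candidate fails when the total is below 160 out of 200, or when two or more evaluators rate the same key behavioral indicator ‘Low’. The indicator labels (`key_1` to `key_7`) and the function name are hypothetical placeholders, because the 7 key indicators are not named here, and the interview criterion is left out.

```python
from typing import Dict, List

PASS_SCORE = 160        # minimum total out of 200, as stated in the appendix
LOW_RATER_LIMIT = 2     # assumed: two or more 'Low' ratings on one key indicator fails


def passes_practical(total_score: float, key_ratings: Dict[str, List[str]]) -> bool:
    """Return True if a candidate meets the practical criteria (assumed reading).

    key_ratings maps each key indicator to the ratings given by the
    individual evaluators, each one of 'High', 'Medium', or 'Low'.
    """
    if total_score < PASS_SCORE:
        return False
    for ratings in key_ratings.values():
        low_count = sum(1 for rating in ratings if rating == "Low")
        if low_count >= LOW_RATER_LIMIT:
            return False
    return True


# Hypothetical candidate: three evaluators, one isolated 'Low' per indicator.
single_low = {f"key_{i}": ["High", "Medium", "Low"] for i in range(1, 8)}

# Same candidate, but two evaluators rate key_3 as 'Low'.
double_low = dict(single_low)
double_low["key_3"] = ["Low", "Medium", "Low"]

print(passes_practical(165, single_low))  # meets both conditions
print(passes_practical(165, double_low))  # fails the key-indicator condition
print(passes_practical(159, single_low))  # fails the score condition
```

Keeping the score threshold and the key-indicator check as separate conditions mirrors the wording of the rule, where a high total cannot compensate for a repeated ‘Low’ rating on a key indicator.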

Appendix B. Certification Evaluation (Practical & Interview)

General Information

| Item | Details |
|---|---|
| Eligibility | Applicants must have passed the Level 2 Integrated Training Program (ICs with at least one year of field experience are eligible to apply). ※ If the individual is promoted to a senior rank after completing the Level 2 training, they must apply for certification immediately. |
| Evaluation Method | Simulated disaster scenarios are used to evaluate ICs’ capabilities. ※ Fire, explosion, and hazardous material accidents are simulated; scenarios may be adjusted to match the candidate’s real-world experience and rank. 1. Grant situational authority and responsibilities based on the scenario. 2. Assess the individual’s abilities through interviews on the commander’s decisions and response strategies. 3. Draw one of the five simulated disaster environments by lot before the evaluation begins. |
| Evaluation Panel | At least 3 evaluators for the practical exam and 3 or more for the interview. ※ Must include both internal evaluators and at least one external evaluator. 1. A firefighter of the same or higher rank as the candidate. 2. A firefighter who holds a fire command qualification at the same or higher level. 3. A university professor with relevant research or teaching experience in fire science or command. |
| Evaluation Criteria | Behavioral indicators are assessed based on High–Medium–Low ratings. |
| Adjustable Evaluation Items | Excluding the 7 core behavioral indicators, up to 20% of other indicators may be adjusted based on real-world applicability. ※ Adjustments may include deletion, reduction, or redistribution of scores across non-core items. |
| Scenario Examples |  |

Appendix C. Operation Committee

Composition of the Operation Committee

- The committee consists of the Operation Committee, Expert Committees, and the Working-level Council.

- Operation Committee: Includes the Director of 119 Operations, the Head of the Disaster Response Division, and key section chiefs from each operating institution.

- Expert Committees: Includes both the Competency Development Expert Committee and the Competency Evaluation Expert Committee.

- Working-level Council: Composed of the Disaster Response Division (lead department) and staff from operating institutions.

Functions and Deliberation Methods of the Operation Committee

- Function: The committee decides on key matters to ensure consistency in on-site commander competency training and certification assessment, and to maintain appropriateness, fairness, objectivity, and transparency in procedures and content.

- Meeting Convening: As needed—convened by the committee chair if significant matters arise.

- Deliberation Method: A majority vote of present members determines decisions.

| Chairman: Director of 119 Operations; Members: Head of Disaster Response Division, Key Section Chiefs | | |
|---|---|---|
| Competency Development Expert Committee | Competency Evaluation Expert Committee | Working-Level Council |

Appendix D. Summary of Scenario Structure and Difficulty Parameters

Summary of Scenario Structure and Difficulty Parameters

| Scenario | Dispatch Trigger | Initial Conditions | Victim Distribution | Fire Locations | Fire Spread | Challenges | Hazard Notes |
|---|---|---|---|---|---|---|---|
| 1. Goshiwon Fire | Fire reported on 2nd and 3rd floors | Upon arrival, one person is hanging from a 2F window requesting rescue. Deployment of an air rescue cushion is possible; use of an aerial ladder truck is not feasible. | 6 people total; 2F: 1 hanging from window, 1 in hallway, 2 inside rooms 218 and 227; 3F: 1 trapped on floor; Roof: 1 person taking refuge. | 2 (two ignition points on 2F and 3F) | Fire extends upward from 2F to 3F | Delayed arrival due to heavy traffic congestion | City gas supply not shut off (explosion risk); one victim fell from 2F window during escape; Room 227 door locked (entry delayed); thick smoke causing near-zero visibility. |
| 2. Karaoke Room Fire | Fire outbreak on 2nd floor | Upon arrival, heavy smoke is billowing from the 2F windows. One person is visible at a 3F window calling for help. | 5 people total; 3F: 1 hanging from window, 1 in corridor; 2F: 1 collapsed in hallway, 2 trapped inside karaoke rooms. | 1 (ignition on 2F) | Fire spreads upward from 2F to 3F | Delayed response due to illegally parked cars | Maze-like interior layout hinders search; flammable soundproofing materials produce dense toxic smoke; insufficient emergency exits make egress difficult. |
| 3. Residential Villa Fire | Fire outbreak on 3rd floor | Upon arrival, a resident is found on a 4F balcony awaiting rescue. Fire and smoke are spreading toward the 4th floor. | 5 people total; 4F: 1 on balcony; 3F: 1 trapped in the burning unit, 1 in stairwell; 2F: 1 semi-conscious from smoke; Roof: 1 person who fled upward. | 1 (ignition in 3F unit) | Fire spreads from 3F up to 4F | None | Rapid smoke spread through open stairwell; no sprinkler system in building; small LPG gas cylinder in use (potential explosion hazard). |
| 4. Apartment Building Fire | Fire reported on 8th floor | Upon arrival, flames are venting out of an 8F apartment. A resident is spotted on a 9F balcony shouting for help. | 5 people total; 9F: 1 on balcony, 1 in apartment above fire; 8F: 1 in burning apartment, 1 collapsed in hallway; 7F: 1 overcome by smoke on the floor below. | 1 (ignition in 8F apartment) | Fire spreads from 8F up to 9F | None | One resident jumped from 8F before rescue (fatal injuries); broken windows create backdraft risk; high-rise height complicates evacuation and firefighting operations. |
| 5. Construction Site Fire | Fire outbreak in building under construction | Upon arrival, the unfinished structure is engulfed in flames on one side. Debris and construction materials litter the scene. | 4 people total; 3 at site: 1 worker on upper scaffolding, 1 trapped under debris, 1 incapacitated at ground level; 1 missing (unaccounted for amid the chaos). | 1 (ignition in scaffold/structure) | Fire spreads through scaffolding and materials on site | None | Multiple fuel and gas cylinders on site (explosion hazard); structural integrity compromised (collapse risk); lack of on-site water source slows firefighting. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Park, J.-c.; Suh, J.-h.; Chae, J.-m. Simulation-Based Evaluation of Incident Commander (IC) Competencies: A Multivariate Analysis of Certification Outcomes in South Korea. Fire 2025, 8, 340. https://doi.org/10.3390/fire8090340
