Abstract

As high-specification structures, civil aircraft hangars face significant fire risks, including rapid fire propagation and difficult rescue operations. Under high temperatures, the structural integrity of a hangar can be compromised, potentially leading to collapse and making aircraft parking and maintenance infeasible. Given these severe consequences, effective fire detection is essential for mitigating risk and enhancing flight safety. However, conventional fire detectors often suffer from false alarms and missed detections, failing to meet the fire safety demands of large buildings. In addition, many existing fire detection models are computationally intensive and large, which hinders their deployment in resource-limited environments. To address these issues, this paper proposes AH-YOLO, an improved lightweight YOLOv8-based model for fire detection in aircraft hangars. A custom infrared fire dataset was collected through controlled burn experiments in a real aircraft hangar using infrared thermal imaging cameras, chosen for their long-range detection, high accuracy, and robustness to lighting conditions. The model integrates the MobileOne module to reduce network complexity and improve computational efficiency, the CBAM attention mechanism to enhance the detection of fine targets, and an improved Dynamic Head to strengthen target perception. Experimental results show that AH-YOLO achieves 93.8% mAP@0.5 on this custom dataset, a 3.6% improvement over YOLOv8n, while reducing parameters by 15.6% and increasing frames per second (FPS) by 19.0%.

1. Introduction

Amid rapid economic growth and continuous advances in construction technology, aircraft hangars have emerged as particularly high-risk structures owing to their specialized operational demands and unique fire hazards [1]. Hangars store high-value assets and often contain significant quantities of aviation fuel and composite materials, which can cause rapid fire spread and extreme temperatures, posing severe threats to life safety and property [2]. Because of this combination, hangars require stricter fire protection standards than general industrial buildings [3].

The rapid detection of fire and smoke is critical in aircraft hangars to minimize damage and protect lives. Hangars currently rely mainly on conventional smoke and heat sensors coupled with automatic foam suppression systems [4]. Such sensor-based methods, which include thermal, gas, flame, and smoke detection, are widely deployed in residential and public spaces [5,6]. However, they have significant limitations in large, open spaces such as hangars, where smoke distribution is uneven and incipient fires are often small or indistinct. Thermal and smoke sensors, for instance, are prone to high false alarm rates and delayed responses, especially in the early stages of a fire [7]. Gas sensors, while highly sensitive, require stable operating conditions and can be costly [8]. Flame sensors, which rely on visible and infrared radiation, are limited by distance and can be obstructed by smoke or heat reflection [9]. As a result, these sensors often fail to raise timely alarms in large spaces, which limits their effectiveness in such critical environments.

To address these limitations, vision-based fire detection methods using visible-light cameras and classical image processing techniques have been explored. These typically follow a three-step pipeline: fire region localization, handcrafted feature extraction (e.g., color, shape, and motion), and classification via traditional machine learning. Among them, color-based approaches utilizing RGB [10], HSV [11], YUV [12], YCbCr [13], and LUV [14] color spaces are widely used to distinguish flames based on chromatic signatures. Grayscale and motion-based analyses have also been applied to detect dynamic smoke patterns [15]. While these conventional methods offer real-time performance and are easy to implement, they are highly sensitive to lighting variations and complex backgrounds, often resulting in false alarms or missed detections. Moreover, their limited feature representation hinders generalization across diverse scenarios.

As the demand for robust fire detection grows, convolutional neural networks (CNNs) have become a powerful alternative to traditional image processing methods. CNN-based object detection models eliminate the need for handcrafted features while offering superior representation learning and generalization across diverse environments. Deep learning-based fire detection approaches are commonly categorized into two types: single-stage detectors, such as YOLO (You Only Look Once) [16], SSD [17], and RetinaNet [18], which prioritize real-time performance, and two-stage detectors like Faster R-CNN [19], Cascade R-CNN [20], and Mask R-CNN [21], which typically offer higher accuracy, but incur greater computational complexity. Given the real-time monitoring demands of fire scenarios, particularly in expansive spaces like aircraft hangars, single-stage detectors, such as YOLO, have become widely adopted. YOLO is especially valued for its compact structure, end-to-end detection capability, and speed, making it ideal for real-time fire detection in complex environments.

Building on this trend, several studies have introduced YOLO-based models tailored for constrained environments. For instance, Abdusalomov et al. [22] adapted YOLOv3 for edge deployment, achieving accurate fire detection under low-light and long-distance conditions. Yar et al. [23] introduced a lightweight YOLOv5s variant with reduced complexity and improved robustness for detecting fires of varying scales. Xu et al. [24] optimized YOLOv7 with ConvNeXtV2 and attention mechanisms to enhance detection accuracy in complex forest environments. Despite these advances, most CNN-based models rely on visible-light inputs and remain vulnerable to low illumination, dense smoke, and partial flame occlusion, conditions that are especially problematic in aircraft hangars.

To overcome these limitations, infrared thermal imaging has emerged as a valuable tool for fire detection. Unlike visible-light imaging, which requires clear flame visibility, infrared technology captures heat signatures, allowing flames to be detected even when obscured by smoke or under low-light conditions [25]. This capability makes infrared imaging particularly advantageous in large, complex environments, where conventional methods often fail due to smoke dispersion, variable lighting, or structural occlusions. However, existing research has focused primarily on forest fires [26], with limited exploration of infrared-based fire detection in indoor or aviation-specific scenarios, highlighting the need for solutions tailored to these challenging environments.

We hypothesize that integrating a lightweight architecture with infrared thermal data can significantly enhance fire detection accuracy and robustness in aircraft hangar environments. To test this, we collected a custom infrared fire dataset through controlled combustion experiments in an aircraft hangar, covering various combustion conditions and fuel types. This dataset was used to evaluate AH-YOLO against state-of-the-art lightweight models, such as RepViT [27], EfficientNetV2 [28], MobileNetV3 [29], YOLOv9 [30], YOLOv10 [31], and YOLOv11 [32]. Unlike our previous work [33], which used visible-light images and introduced limited architectural enhancements targeting both flame and smoke detection, this study focuses exclusively on flame detection using infrared thermal imagery. AH-YOLO adopts a new lightweight architecture that integrates MobileOne [34], a Convolutional Block Attention Module (CBAM) [35], and a Dynamic Head (DyHead) [36], enabling improved feature extraction and perception in the thermal domain. It also achieves higher detection accuracy (93.8% mAP@0.5) and supports longer-range monitoring in low-light, smoke-filled conditions, making it more suitable for real-time fire detection in large aircraft hangars.

This study makes several key contributions:

- YOLO-based aircraft hangar fire safety detection: this paper demonstrates the use of YOLO for real-time fire detection in aircraft hangars, addressing challenges specific to these high-risk environments.

- Lightweight, high-performance detection models: the AH-YOLO model incorporates MobileOne, CBAM, and a DyHead, improving both the accuracy and efficiency.

- Advancement of deep learning for fire detection under resource constraints: the lightweight design of AH-YOLO makes it suitable for real-time fire detection in large buildings with limited computational resources.

- Specialized infrared fire dataset for aircraft hangars: the dataset developed for this study aids future advancements in fire detection technology, particularly for aviation safety.

In summary, this research highlights the potential of optimized YOLO-based models for enhancing fire detection in aircraft hangars. As the aviation industry increasingly adopts AI-driven safety technologies, the insights from this work can inform the development of more effective fire alarm systems for high-risk environments.

2. Methodology

2.1. YOLOv8

YOLOv8 [37], developed by Ultralytics, is a next-generation single-stage object detection algorithm that builds on the YOLOv5 series. It offers notable improvements in speed, accuracy, and versatility, making it a widely adopted baseline for real-time detection tasks. Its modular design comprises an input stage, a backbone for multi-scale feature extraction, a neck for feature fusion, and a decoupled detection head.

The backbone includes key modules such as CBS (Conv + Batch Normalization (BN) + Sigmoid Linear Unit (SiLU)), C2f, and Spatial Pyramid Pooling-Fast (SPPF). CBS provides efficient feature learning, while C2f enhances gradient flow and enables multi-branch representation. SPPF fuses multi-scale spatial information, improving robustness to the size variations of fire targets.
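The multi-scale pooling in SPPF comes from applying the same 5 × 5 max pooling three times in series: because stacked stride-1 max pools compose into larger windows, the three outputs match parallel 5 × 5, 9 × 9, and 13 × 13 pools (the SPP design) at lower cost. The single-channel sketch below, with hypothetical helper names, checks this equivalence on a random grid; in the real module the four maps are concatenated along the channel axis and mixed by convolutions.

```python
import random


def max_pool_same(x, k):
    """Stride-1 k x k max pooling; out-of-range cells are ignored (like -inf padding)."""
    h, w, p = len(x), len(x[0]), k // 2
    return [[max(x[r][c]
                 for r in range(max(0, i - p), min(h, i + p + 1))
                 for c in range(max(0, j - p), min(w, j + p + 1)))
             for j in range(w)]
            for i in range(h)]


def sppf_branches(x, k=5):
    """SPPF style: the same k x k pool applied three times in series."""
    y1 = max_pool_same(x, k)
    y2 = max_pool_same(y1, k)
    y3 = max_pool_same(y2, k)
    return [x, y1, y2, y3]


def spp_branches(x, kernels=(5, 9, 13)):
    """SPP style: parallel pools with growing kernel sizes."""
    return [x] + [max_pool_same(x, k) for k in kernels]


random.seed(0)
grid = [[random.random() for _ in range(12)] for _ in range(12)]
print("serial 5x5 pools equal parallel 5/9/13 pools:",
      sppf_branches(grid) == spp_branches(grid))
```

The serial form reuses each intermediate result, which is why it is cheaper than running three independent large-kernel pools.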

YOLOv8’s neck adopts a Path Aggregation Feature Pyramid Network (PAFPN) structure to strengthen multi-scale feature fusion. The decoupled head separates classification and regression tasks to boost the detection precision. Furthermore, it supports anchor-free detection and uses advanced data augmentation strategies (e.g., Mosaic, MixUp) to improve generalization.
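As a minimal sketch of what anchor-free prediction means in practice: at each grid point, the head predicts, for each of the four distances from that point to the box edges (left, top, right, bottom), a discrete distribution over reg_max bins, trained with distribution focal loss; the expected bin index gives the distance in units of the feature-map stride. The code assumes reg_max = 16 and anchor points at cell centres; the function names are hypothetical, and this illustrates the idea rather than reproducing the Ultralytics implementation.

```python
import math


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def dfl_expectation(logits):
    """Expected distance (in stride units) from a distribution over bins 0..reg_max-1."""
    return sum(i * p for i, p in enumerate(softmax(logits)))


def decode_cell(gx, gy, stride, side_logits):
    """Decode one prediction at grid cell (gx, gy) into an (x1, y1, x2, y2) box in pixels.

    side_logits: four lists (left, top, right, bottom) of reg_max logits each.
    """
    cx, cy = gx + 0.5, gy + 0.5  # anchor point at the cell centre, in grid units
    left, top, right, bottom = (dfl_expectation(lg) for lg in side_logits)
    return ((cx - left) * stride, (cy - top) * stride,
            (cx + right) * stride, (cy + bottom) * stride)


# Usage: a sharply peaked distribution at bin 3 on every side, stride-8 feature map.
REG_MAX = 16
peaked = [20.0 if i == 3 else 0.0 for i in range(REG_MAX)]
print(decode_cell(10, 5, 8, [peaked] * 4))  # close to (60, 20, 108, 68)
```

Predicting a distribution rather than a single number lets the head express uncertainty about blurry edges, which is common for flames with soft thermal boundaries.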

In this study, YOLOv8n is selected as the base framework for fire detection. However, its parameter count and limited ability to adapt to complex visual scenes still restrict its effectiveness in this setting.

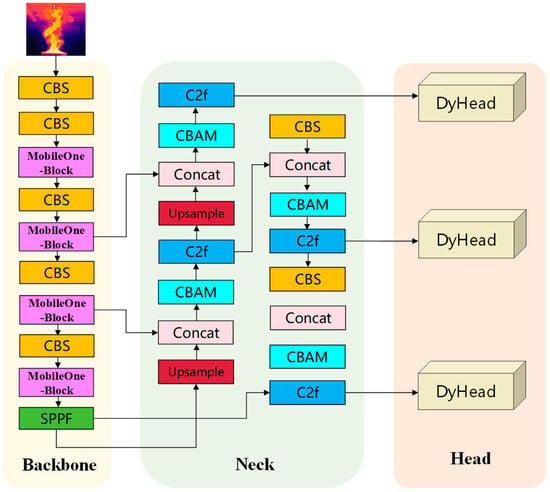

2.2. AH-YOLO: Model Overview

Although YOLOv8n provides a strong foundation for real-time object detection, its relatively high parameter count and limited adaptability to infrared data reduce its effectiveness in visually complex and resource-constrained environments. To address these challenges, we propose AH-YOLO, a lightweight, high-performance model optimized for thermal imagery. As shown in Figure 1, AH-YOLO is built on the YOLOv8 framework and integrates three architectural modules: MobileOne, CBAM, and DyHead. These components were selected to balance model efficiency, feature discrimination, and robust detection performance.

Figure 1.

AH-YOLO network structure.

Specifically, to reduce the computational load while retaining strong feature extraction capabilities, MobileOne replaces the original C2f modules in the YOLOv8 backbone. In the neck, CBAM is inserted to enhance channel- and spatial-level attention, improving the model’s sensitivity to fire-related features in infrared images with cluttered or low-contrast backgrounds. Meanwhile, the detection head is upgraded with DyHead, which applies attention across feature levels, spatial positions, and channels to enhance scale awareness, spatial perception, and task-specific adaptability, all of which are critical for detecting flames of varying shapes and distances.

Together, these modules were chosen to adapt the model to infrared thermal imagery, where pixel intensities and heat-based contrast differ significantly from those of visible-light images. The overall design of AH-YOLO aims to provide real-time inference, strong generalization, and accurate fire localization in large-scale aviation environments.

We hypothesize that this lightweight architecture, when applied to thermal data, can significantly improve the accuracy and robustness of fire detection in aircraft hangars. This hypothesis is validated through experiments under various combustion conditions, as described in Section 3.

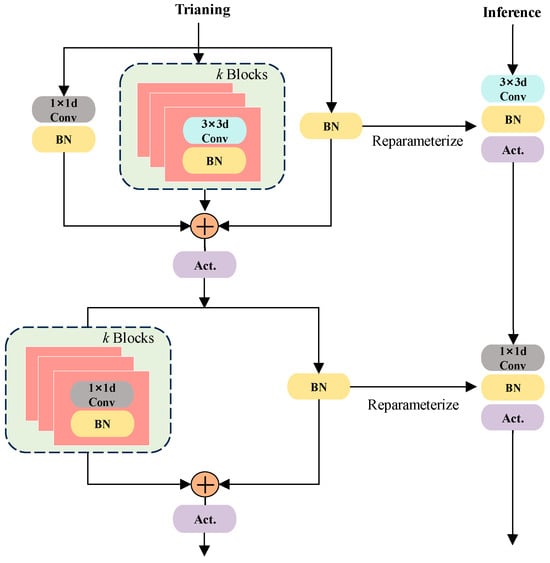

2.2.1. MobileOne

To reduce the computational load of YOLOv8 while preserving the feature extraction capability, the MobileOne module is introduced into the backbone of AH-YOLO. Originally proposed by Vasu et al. [34], MobileOne is a reparameterizable architecture designed for fast deployment, particularly in mobile and edge scenarios.

As illustrated in Figure 2, MobileOne adopts a multi-branch structure during training that includes both depthwise (3 × 3) and pointwise (1 × 1) convolutions. At inference, these branches are fused into a single-path structure via reparameterization, eliminating redundancy and improving runtime efficiency. Each block combines convolutional layers, BN, and an activation (e.g., ReLU or SiLU), but applies the activation only after the branch outputs are summed, so that the fused single-path block remains mathematically equivalent to the multi-branch one.

Figure 2.

MobileOne module structure diagram.

During training, the depthwise stage (convolution grouped by input channel) and the pointwise stage each use several parallel branches to enhance feature separability and representation. After reparameterization, these branches, including the BN-only skip connections, are merged into a single convolution per stage, yielding a compact, branch-free architecture that reduces memory access cost and computation while maintaining high accuracy.
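The equivalence behind this reparameterization can be checked numerically. The stdlib sketch below models one channel of a MobileOne-style depthwise stage with two 3 × 3 conv-BN branches, one 1 × 1 conv-BN branch, and a BN-only skip branch (branch counts and all weights are hypothetical). It folds each BN into its convolution, places the 1 × 1 and identity kernels at the centre of a 3 × 3 kernel, and sums everything into one kernel and bias. Because ReLU is applied only after the branches are summed, the single-path output should match the multi-branch output up to floating-point error. This is an illustration of the technique, not the authors’ code.

```python
import math
import random

EPS = 1e-5  # BN epsilon


def conv2d_same(img, kernel, bias=0.0):
    """Single-channel stride-1 cross-correlation with zero padding ('same' size)."""
    h, w, k = len(img), len(img[0]), len(kernel)
    p = k // 2
    out = [[bias] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            for u in range(k):
                for v in range(k):
                    y, x = i + u - p, j + v - p
                    if 0 <= y < h and 0 <= x < w:
                        out[i][j] += kernel[u][v] * img[y][x]
    return out


def apply_bn(x, bn):
    gamma, beta, mean, var = bn  # inference-time BN statistics
    return [[gamma * (v - mean) / math.sqrt(var + EPS) + beta for v in row] for row in x]


def fuse_conv_bn(kernel, bn):
    """Fold BN into the preceding conv: W' = W * s, b' = beta - mean * s."""
    gamma, beta, mean, var = bn
    s = gamma / math.sqrt(var + EPS)
    return [[w * s for w in row] for row in kernel], beta - mean * s


def center_pad(value):
    """A 1x1 kernel (or the identity, value=1) embedded at the centre of a 3x3 kernel."""
    return [[0.0, 0.0, 0.0], [0.0, value, 0.0], [0.0, 0.0, 0.0]]


def relu(x):
    return [[max(0.0, v) for v in row] for row in x]


def add(a, b):
    return [[u + v for u, v in zip(ra, rb)] for ra, rb in zip(a, b)]


def train_time_forward(img, k3_branches, k1_branch, skip_bn):
    """Multi-branch block: sum of conv-BN branches and a BN-only skip, then ReLU."""
    out = apply_bn(img, skip_bn)
    for kernel, bn in k3_branches:
        out = add(out, apply_bn(conv2d_same(img, kernel), bn))
    k1, bn1 = k1_branch
    out = add(out, apply_bn(conv2d_same(img, [[k1]]), bn1))
    return relu(out)


def reparameterize(k3_branches, k1_branch, skip_bn):
    """Merge every branch into one 3x3 kernel and one bias."""
    parts = [fuse_conv_bn(k, bn) for k, bn in k3_branches]
    parts.append(fuse_conv_bn(center_pad(k1_branch[0]), k1_branch[1]))
    parts.append(fuse_conv_bn(center_pad(1.0), skip_bn))  # identity branch
    kernel = [[sum(k[i][j] for k, _ in parts) for j in range(3)] for i in range(3)]
    return kernel, sum(b for _, b in parts)


random.seed(0)
rand = lambda: random.uniform(-1.0, 1.0)
rand_bn = lambda: (random.uniform(0.5, 1.5), rand(), rand(), random.uniform(0.5, 2.0))
img = [[rand() for _ in range(5)] for _ in range(5)]
k3 = [([[rand() for _ in range(3)] for _ in range(3)], rand_bn()) for _ in range(2)]
k1 = (rand(), rand_bn())
skip = rand_bn()

y_train = train_time_forward(img, k3, k1, skip)
fused_kernel, fused_bias = reparameterize(k3, k1, skip)
y_infer = relu(conv2d_same(img, fused_kernel, fused_bias))
max_err = max(abs(a - b) for ra, rb in zip(y_train, y_infer) for a, b in zip(ra, rb))
print(f"max |multi-branch - single-path| = {max_err:.2e}")
```

The same folding applies per channel across a full depthwise layer, and the pointwise stage is merged the same way with 1 × 1 kernels.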

This replacement significantly reduces the model complexity and accelerates inference, which is critical for real-time detection under resource constraints and thermal imaging conditions.

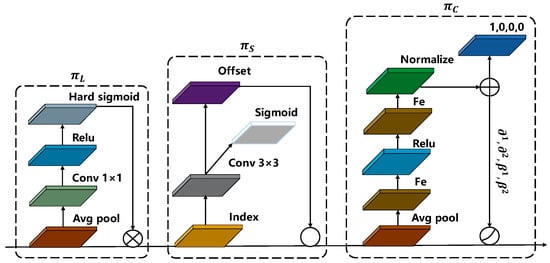

2.2.2. CBAM

Infrared fire imagery often presents cluttered backgrounds, partial occlusions, or subtle thermal gradients, which may hinder accurate flame localization. To address these issues, this study introduces CBAM into the YOLOv8 neck to improve feature discrimination through lightweight attention refinement.

As illustrated in Figure 3, CBAM sequentially applies channel attention and spatial attention. The channel attention module compresses the feature maps via global average pooling and global max pooling, then passes both descriptors through a shared multilayer perceptron (MLP) with a dimensionality-reduction bottleneck (weights $W_0 \in \mathbb{R}^{C/r \times C}$ and $W_1 \in \mathbb{R}^{C \times C/r}$, where $r$ is the reduction ratio). This highlights the feature channels most relevant to flame-related thermal patterns. The output is then refined by spatial attention, which applies a 7 × 7 convolution to the concatenated channel-wise average- and max-pooled maps to emphasize local thermal hotspots and suppress irrelevant noise.
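A minimal pure-Python sketch of the CBAM forward pass, following the formulation of Woo et al. [35]: channel attention $M_c(F) = \sigma(W_1\,\mathrm{ReLU}(W_0 F^c_{avg}) + W_1\,\mathrm{ReLU}(W_0 F^c_{max}))$, followed by spatial attention $M_s(F) = \sigma(f^{7\times7}([F^s_{avg}; F^s_{max}]))$. Biases are omitted, tensors are nested lists of shape C × H × W, and all weights are random placeholders; a real model would use trained tensors in a deep learning framework.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(W, v):
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def channel_attention(F, W0, W1):
    """Mc(F): one weight in (0, 1) per channel."""
    avg = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in F]
    mx = [max(max(row) for row in ch) for ch in F]

    def mlp(v):  # shared bottleneck MLP: C -> C/r (ReLU) -> C
        return matvec(W1, [max(0.0, h) for h in matvec(W0, v)])

    return [sigmoid(a + b) for a, b in zip(mlp(avg), mlp(mx))]


def spatial_attention(F, K):
    """Ms(F): one weight in (0, 1) per position; K is a 2 x 7 x 7 kernel."""
    C, H, W = len(F), len(F[0]), len(F[0][0])
    avg = [[sum(F[c][i][j] for c in range(C)) / C for j in range(W)] for i in range(H)]
    mx = [[max(F[c][i][j] for c in range(C)) for j in range(W)] for i in range(H)]
    maps, pad = (avg, mx), 3  # zero padding keeps the H x W size
    out = [[0.0] * W for _ in range(H)]
    for i in range(H):
        for j in range(W):
            s = 0.0
            for m in range(2):
                for u in range(7):
                    for v in range(7):
                        y, x = i + u - pad, j + v - pad
                        if 0 <= y < H and 0 <= x < W:
                            s += K[m][u][v] * maps[m][y][x]
            out[i][j] = sigmoid(s)
    return out


def cbam(F, W0, W1, K):
    """Channel attention first, then spatial attention on the reweighted features."""
    mc = channel_attention(F, W0, W1)
    F1 = [[[mc[c] * v for v in row] for row in F[c]] for c in range(len(F))]
    ms = spatial_attention(F1, K)
    return [[[ms[i][j] * F1[c][i][j] for j in range(len(F1[c][i]))]
             for i in range(len(F1[c]))] for c in range(len(F1))]


random.seed(1)
C, r, H, W = 4, 2, 6, 6  # hypothetical sizes; r is the reduction ratio
F = [[[random.uniform(-1, 1) for _ in range(W)] for _ in range(H)] for _ in range(C)]
W0 = [[random.gauss(0, 0.5) for _ in range(C)] for _ in range(C // r)]
W1 = [[random.gauss(0, 0.5) for _ in range(C // r)] for _ in range(C)]
K = [[[random.gauss(0, 0.1) for _ in range(7)] for _ in range(7)] for _ in range(2)]
out = cbam(F, W0, W1, K)
print("channel weights:", [round(m, 3) for m in channel_attention(F, W0, W1)])
```

Because both attention maps lie in (0, 1), CBAM can only rescale activations, which is what lets it suppress background clutter while keeping strong thermal responses.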

Figure 3.

Comprehensive CBAM structure for target localization.

Although CBAM is not a recent innovation, its low complexity and proven effectiveness in enhancing the small-target focus make it well-suited for resource-limited infrared detection scenarios. Furthermore, the novelty of this study lies not in the module itself, but in how CBAM is integrated into the YOLOv8 architecture, strategically embedded between feature fusion layers and detection heads, a design rarely explored in fire detection tasks.

This integration aims to improve the model’s sensitivity to subtle flame cues under low-contrast or obstructed infrared conditions, while maintaining high inference efficiency.

2.2.3. Dynamic Head

YOLOv8 adopts a decoupled detection head to reduce the interference between classification and localization tasks. However, in large-scale environments like aircraft hangars, small flames often appear at varying distances, angles, and under unstable lighting, which challenges the detection stability. To address this, DyHead introduces a multi-dimensional attention mechanism that enhances feature learning across scale, spatial, and task domains.

As illustrated in Figure 4, DyHead sequentially applies three types of attention: scale-aware attention captures multi-level features to handle flames of different sizes, spatial-aware attention utilizes deformable convolutions to refine position-related features, and task-aware attention reweights channels based on detection goals. This design improves the model adaptability in infrared scenes, where flame regions exhibit low contrast and high variability.

Figure 4.

Dynamic Head network structure.

This sequential arrangement forms a nested attention function, as given in Equation (1):

$$
W(\mathcal{F}) = \pi_C\Big(\pi_S\big(\pi_L(\mathcal{F}) \cdot \mathcal{F}\big) \cdot \mathcal{F}\Big) \cdot \mathcal{F} \tag{1}
$$

where $W(\cdot)$ represents the attention function and $\mathcal{F} \in \mathbb{R}^{L \times S \times C}$ is a three-dimensional tensor in which $L$ denotes the hierarchical level of the feature map, $S$ is the product of its width and height, and $C$ represents the number of channels. The functions $\pi_L(\cdot)$, $\pi_S(\cdot)$, and $\pi_C(\cdot)$ are distinct attention operators applied to the $L$, $S$, and $C$ dimensions, respectively.

Integrating DyHead into the detection head enhances the model’s ability to capture flame cues while suppressing background interference, thereby improving the detection robustness in large-scale environments with complex spatial layouts and thermal variability.
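To make the first stage of this nesting concrete: in the DyHead formulation of Dai et al. [36], scale-aware attention is $\pi_L(\mathcal{F}) \cdot \mathcal{F} = \sigma\big(f(\tfrac{1}{SC}\sum_{S,C}\mathcal{F})\big) \cdot \mathcal{F}$, where $f$ is a linear function (a 1 × 1 convolution in practice) and $\sigma(x) = \max(0, \min(1, (x+1)/2))$ is a hard sigmoid. The sketch below models $f$ as a scalar affine map $w x + b$, a hypothetical simplification, so that each feature level receives one weight.

```python
def hard_sigmoid(x):
    """sigma(x) = max(0, min(1, (x + 1) / 2))."""
    return max(0.0, min(1.0, (x + 1.0) / 2.0))


def scale_aware_attention(F, w, b):
    """Apply pi_L to F, given as L levels x S positions x C channels.

    f is modelled as a scalar affine map w * x + b (hypothetical stand-in for the
    1x1 convolution). Returns the reweighted tensor and the per-level weights.
    """
    weights, out = [], []
    for level in F:
        S, C = len(level), len(level[0])
        mean = sum(sum(pos) for pos in level) / (S * C)  # (1 / SC) * sum over S and C
        a = hard_sigmoid(w * mean + b)
        weights.append(a)
        out.append([[a * v for v in pos] for pos in level])
    return out, weights


# Three hypothetical levels (S = 4 positions, C = 2 channels) with constant activations.
F = [[[0.5, 0.5]] * 4, [[-2.0, -2.0]] * 4, [[3.0, 3.0]] * 4]
out, weights = scale_aware_attention(F, w=1.0, b=0.0)
print(weights)
```

Levels whose pooled response is weak are damped toward zero, while strongly responding levels pass through, which is how the head emphasizes the scale at which a flame actually appears.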

2.3. Dataset Construction and Experimental Procedure

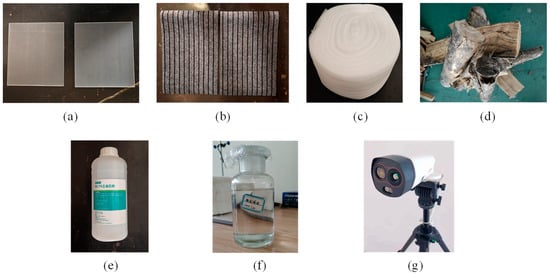

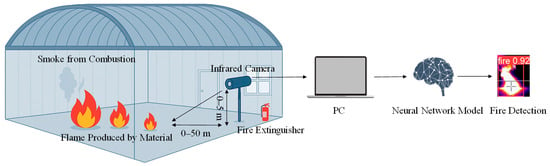

Combustion experiments were conducted in the hangar of Suining Nanba Airport (Figure 5) to build a fire detection dataset relevant to real aviation scenarios.

Figure 5.

The interior and exterior environment of an airplane hangar.

Six combustible materials were selected based on their different flammability characteristics and combustion behavior, as shown in Figure 6a–f, to ensure dataset diversity and improve model robustness. As shown in Figure 6g, a dual-lens thermal imaging device was used, which simultaneously captures both visible and infrared images from the same viewpoint. The infrared module features a 256 × 192 uncooled VOx detector, ≤50 mK thermal sensitivity, ≤25 Hz frame rate, 3.2 mm fixed-focus lens, and a 56° × 42° field of view. It supports a temperature measurement from −15 °C to 150 °C (high gain) and 50 °C to 550 °C (low gain) with an accuracy of ±2 °C or ±2% of the reading.

Figure 6.

Overview of materials and equipment used in combustion experiments. (a) Acrylic sheet, (b) aircraft carpet, (c) expanded polyethylene (EPE) foam, (d) Jujube wood, (e) ethanol, (f) aviation kerosene, (g) infrared thermal imaging camera.

As shown in Figure 7, the experiments generated a variety of fire scenarios by igniting materials with distinct combustion characteristics. For instance, aviation kerosene burned rapidly, producing large orange–yellow flames accompanied by thick black smoke, whereas jujube wood burned steadily with minimal white smoke and no visible flames. The aircraft carpet produced dark red flames with intermittent sparks and spread slowly along smoldering edges. In addition, fires were observed at three heights (1 m, 3 m, and 5 m) and three horizontal distances (10 m, 30 m, and 50 m) under controlled conditions, further diversifying the infrared flame images available for training. The infrared camera recorded the fire dynamics and transmitted the images to a PC, where the AH-YOLO model performed fire detection in real time under the various experimental conditions.
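To give a sense of scale for the far-field cases, the camera geometry listed above (256 × 192 pixels, 56° × 42° field of view) can be turned into an approximate per-pixel footprint at the three test distances. This is a simple pinhole-model estimate that ignores lens distortion and viewing angle, and the 0.5 m flame width is a hypothetical value used only for illustration.

```python
import math

H_FOV_DEG, V_FOV_DEG = 56.0, 42.0  # field of view from the camera specification
H_PIX, V_PIX = 256, 192            # detector resolution


def footprint_per_pixel(distance_m, fov_deg, pixels):
    """Approximate scene extent (m) covered by one pixel at a given distance."""
    extent = 2.0 * distance_m * math.tan(math.radians(fov_deg) / 2.0)
    return extent / pixels


def pixels_on_target(target_m, distance_m, fov_deg, pixels):
    """Approximate number of pixels spanned by a target of the given size."""
    return target_m / footprint_per_pixel(distance_m, fov_deg, pixels)


FLAME_WIDTH_M = 0.5  # hypothetical flame width, for illustration only
for d in (10, 30, 50):
    h = footprint_per_pixel(d, H_FOV_DEG, H_PIX)
    v = footprint_per_pixel(d, V_FOV_DEG, V_PIX)
    n = pixels_on_target(FLAME_WIDTH_M, d, H_FOV_DEG, H_PIX)
    print(f"{d:>2} m: {h * 100:.1f} cm x {v * 100:.1f} cm per pixel, "
          f"{FLAME_WIDTH_M} m flame spans about {n:.1f} px")
```

Under these assumptions each pixel covers roughly 4 cm at 10 m but about 21 cm at 50 m, so a half-metre flame at the farthest distance spans only two to three pixels, which illustrates why small-target sensitivity matters for the long-range cases.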

Figure 7.

Schematic diagram of the experimental platform.

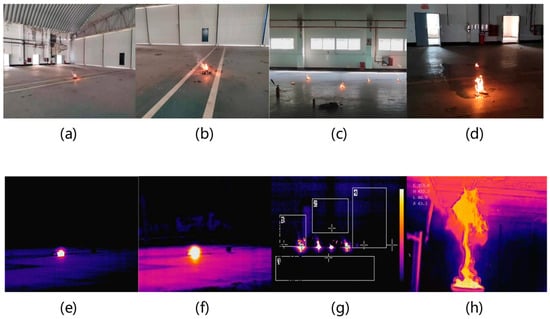

As shown in Figure 8, fire dynamics were captured using a dual-lens thermal imaging camera capable of simultaneously acquiring visible and infrared images from the same viewpoint. This setup ensures the spatial and temporal alignment of multimodal data and facilitates accurate cross-modal learning.

Figure 8.

Visible (a–d) and infrared (e–h) imaging of fire dynamics from various combustible materials at different distances.

The visible-light images (Figure 8a–d) illustrate the flame shape and intensity but are vulnerable to occlusion and lighting interference. In contrast, the infrared images (Figure 8e–h) capture the flames’ thermal signatures clearly, offering a consistent detection performance even under low-light or smoke-obscured conditions.

Additionally, the use of various combustible materials introduces diversity in the flame morphology and intensity across the dataset, enriching the feature variation and helping improve model robustness under real-world fire scenarios.

Each scenario was repeated 20 times under controlled conditions, yielding a dataset of 1500 infrared fire images. Approximately 50 infrared frames with low thermal contrast or without visible flames were retained as negative samples, which train the model to distinguish fire-related from non-fire thermal signatures and thereby improve detection accuracy.

3. Experiment and Analysis

3.1. Experimental Environment and Hyperparameter Setting

The hardware and software configurations used in this study are summarized in Table 1, including the processor, memory, GPU, and deep learning framework versions.

Table 1.

Computation system.

In addition, the specific experimental hyperparameters such as batch size, learning rate, and optimizer are listed in Table 2.

Table 2.

Experimental parameters.

3.2. Evaluation Indicators

To validate the effectiveness of the proposed detector, we used the following evaluation metrics: precision, recall, parameters, GFLOPs, FPS, mAP@0.5, and mAP@0.5–0.95. Precision is the ratio of true positives to all predicted positives (true positives plus false positives), while recall is the ratio of true positives to all actual positives (true positives plus false negatives). GFLOPs and the parameter count quantify the time and space complexity of the model, respectively. FPS is the number of images processed per second at an input resolution of 640 × 640. mAP@0.5 is the mean average precision at an IoU threshold of 0.5, and mAP@0.5–0.95 is the mean average precision averaged over IoU thresholds from 0.5 to 0.95 in steps of 0.05. These metrics are defined in Equations (2)–(6):

$$
P = \frac{TP}{TP + FP} \tag{2}
$$

$$
R = \frac{TP}{TP + FN} \tag{3}
$$

$$
AP = \int_{0}^{1} P(R)\, dR \tag{4}
$$

$$
mAP = \frac{1}{N} \sum_{i=1}^{N} AP_i \tag{5}
$$

$$
FPS = \frac{1}{T} \tag{6}
$$

Here, true positives ($TP$) denote correct detections, false positives ($FP$) denote erroneous detections, and false negatives ($FN$) denote missed detections. $AP$ is the average precision, $mAP$ is the mean average precision across all object categories, $N$ is the total number of categories, and $T$ is the time taken to process a single image.
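The definitions above can be made concrete with a short sketch for a single class (the dataset is presumably flame-only, in which case mAP equals AP). It matches detections greedily by confidence at a given IoU threshold, computes all-point interpolated AP from the precision–recall curve, and averages AP over the ten IoU thresholds for mAP@0.5–0.95. The boxes and scores are invented for illustration, and evaluation toolkits differ in details such as the interpolation scheme (e.g., 11-point, 101-point, or all-point), so values from this sketch need not match those of any specific library.

```python
def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match_detections(dets, gts, iou_thr):
    """Greedy matching in descending confidence; each ground truth is matched once.

    dets: list of (score, box); gts: list of boxes. Returns list of (score, is_tp).
    """
    used, scored = set(), []
    for score, box in sorted(dets, key=lambda d: -d[0]):
        best_j, best_iou = -1, iou_thr
        for j, gt in enumerate(gts):
            v = iou(box, gt)
            if j not in used and v >= best_iou:
                best_j, best_iou = j, v
        if best_j >= 0:
            used.add(best_j)
        scored.append((score, best_j >= 0))
    return scored


def average_precision(scored, n_gt):
    """All-point interpolated AP: area under the precision envelope (Eq. (4))."""
    if n_gt == 0:
        return 0.0
    tp = fp = 0
    recalls, precisions = [0.0], [1.0]
    for _, is_tp in sorted(scored, key=lambda s: -s[0]):
        tp, fp = tp + is_tp, fp + (not is_tp)
        recalls.append(tp / n_gt)          # Eq. (3)
        precisions.append(tp / (tp + fp))  # Eq. (2)
    for i in range(len(precisions) - 2, -1, -1):  # make precision non-increasing
        precisions[i] = max(precisions[i], precisions[i + 1])
    return sum((recalls[i] - recalls[i - 1]) * precisions[i] for i in range(1, len(recalls)))


def map_over_thresholds(dets, gts, thresholds):
    """AP averaged over IoU thresholds (one image and one class for brevity)."""
    aps = [average_precision(match_detections(dets, gts, t), len(gts)) for t in thresholds]
    return sum(aps) / len(aps)


def fps(seconds_per_image):
    return 1.0 / seconds_per_image  # Eq. (6)


IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]  # 0.50, 0.55, ..., 0.95

gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
dets = [(0.9, (0, 0, 10, 10)), (0.8, (50, 50, 60, 60)), (0.7, (20, 20, 30, 28.3))]
ap50 = map_over_thresholds(dets, gts, [0.5])
ap50_95 = map_over_thresholds(dets, gts, IOU_THRESHOLDS)
print(f"AP@0.5 = {ap50:.3f}, AP@0.5:0.95 = {ap50_95:.3f}, FPS at 5.9 ms = {fps(0.0059):.0f}")
```

The third detection overlaps its target with IoU of about 0.83, so it counts as a true positive at the looser thresholds but as a false positive at 0.85 and above, which is why mAP@0.5–0.95 is lower than mAP@0.5.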

3.3. Model Evaluation

3.3.1. Ablation Experiments

To evaluate the contribution of each architectural component, we conducted ablation experiments by progressively integrating MobileOne, CBAM, and DyHead into the YOLOv8n baseline (Table 3). Replacing the original C2f module with MobileOne improved the inference speed and reduced complexity while maintaining comparable accuracy. Adding CBAM further enhanced mAP@0.5 and precision, while DyHead provided scale adaptability and boosted the speed through the efficient decoupling of classification and localization.

Table 3.

Results of ablation experiments.

Compared with previous fire detection studies that used CBAM or DyHead alone, our model achieves a better accuracy–efficiency balance. For example, Xue et al. [38] reported a 0.9% mAP@0.5 gain with CBAM accompanied by a drop in FPS, while Hu et al. [39] found that DyHead improved mAP@0.5 by only ~0.5% but significantly reduced the inference speed. In contrast, our design achieves a 3.6% improvement in mAP@0.5 and a 19% FPS increase over the YOLOv8n baseline. We attribute this gain largely to the MobileOne backbone, which provides a lightweight yet powerful foundation that allows CBAM and DyHead to operate effectively without excessive computational overhead. These results highlight the complementary benefits of MobileOne, CBAM, and DyHead (efficient computation, refined attention, and robust multi-scale learning), making AH-YOLO well suited for deployment in resource-constrained fire safety environments.

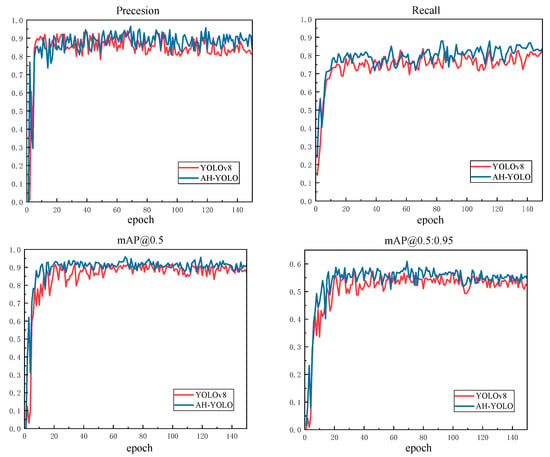

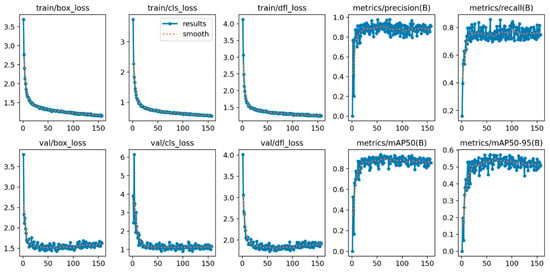

To evaluate the proposed algorithm systematically, we compared the training dynamics of AH-YOLO and the baseline YOLOv8n. Training was planned for 300 epochs, but early stopping terminated it at 150 epochs once the metrics had converged. Four key metrics were analyzed: precision, recall, mAP@0.5, and mAP@0.5:0.95. As illustrated in Figure 9, AH-YOLO consistently outperforms YOLOv8n, converging faster and reaching better final values on all four metrics.

Figure 9.

Comparative training performance curves of YOLOv8 and AH-YOLO on Precision, Recall, mAP@0.5, and mAP@0.5:0.95.

3.3.2. Comparative Experiments

Beyond validating the internal architecture, we compared AH-YOLO with state-of-the-art fire detection models to benchmark its real-world performance, as shown in Table 4. Models such as EfficientNetV2 achieve strong detection accuracy (mAP@0.5 = 0.916) but are constrained by large parameter counts and high computational cost, which limit their real-time performance. In contrast, models such as YOLOv10n and ShuffleNetV2 prioritize efficiency but often underperform in accuracy.

Table 4.

Comparison of the different models (bold data in the table indicate the best results).

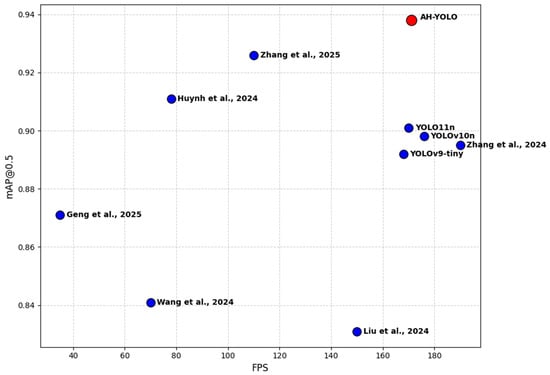

To further contextualize AH-YOLO’s effectiveness, we compared it with six recently proposed fire detection models from the literature. These models were designed for diverse settings, including aircraft cargo compartments, forests, industrial sites, and ships, and apply varied optimization strategies such as attention modules, backbone compression, and custom loss functions. Because these works use different datasets, hardware, and deployment conditions, their reported figures are not directly comparable with ours; nevertheless, they reflect the current state of fire detection research and provide a useful reference point for AH-YOLO.

As shown in Figure 10, we plotted mAP@0.5 against FPS to visualize trade-offs between the accuracy and speed across all ten models. The literature-based models include the following:

Figure 10.

Cross-model comparison of real-time fire detection performance (mAP@0.5 vs. FPS), including AH-YOLO and other representative methods [40,41,42,43,44,45].

- Zhang et al. [40] developed a fire detection model (MobileOne-YOLO) for aircraft cargo compartments by integrating FReLU and EIoU, achieving 0.926 mAP@0.5 and 110 FPS. Although its application scenario is similar, both its accuracy and its speed are lower than those of AH-YOLO.

- Huynh et al. [41] fine-tuned YOLOv10 with SE attention and SGD for factory fires, reaching 0.911 mAP but only 78 FPS.

- Wang et al. [42] enhanced YOLOv5s for forest fires using ASPP and CBAM, but reported modest results (0.841 mAP, 70 FPS) under outdoor clutter.

- Liu et al. [43] incorporated CBAM into YOLOv8 for general fire detection, achieving 0.831 mAP with 150 FPS, showing limitations in accuracy despite a decent speed.

- Geng et al. [44] constructed YOLOv9-CBM with SE and BiFPN modules, improving accuracy (0.871 mAP), but at the cost of the real-time performance (35 FPS).

- Zhang et al. [45] introduced GhostNetV2 and SCConv into YOLOv8n for shipboard detection, reaching 0.895 mAP and 190 FPS, but its reliability under complex visibility conditions remains uncertain.

In addition, three recent YOLO variants (YOLOv9-tiny, YOLOv10n, and YOLOv11n), evaluated under the same conditions as AH-YOLO, each excel in individual metrics such as FPS or parameter count. However, none of them matches AH-YOLO’s balance between accuracy and efficiency.

Positioned in the upper-right quadrant of Figure 10, AH-YOLO achieves both high accuracy (mAP@0.5 = 0.938) and real-time speed (171 FPS). This indicates a favorable accuracy–speed trade-off and strong suitability for deployment in enclosed, interference-prone scenarios such as aircraft hangars, although the literature models were evaluated on different datasets.
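The trade-off shown in Figure 10 can be summarized by Pareto dominance: a model lies on the accuracy–speed front if no other model is at least as accurate and at least as fast. The sketch below uses only the (mAP@0.5, FPS) values quoted in this section; the three YOLO baselines are omitted because their values are not restated in the text, and since the literature models were evaluated on different datasets and hardware, the result is indicative only.

```python
# (mAP@0.5, FPS) pairs as quoted in the text above
MODELS = {
    "AH-YOLO": (0.938, 171),
    "Zhang et al. [40]": (0.926, 110),
    "Huynh et al. [41]": (0.911, 78),
    "Wang et al. [42]": (0.841, 70),
    "Liu et al. [43]": (0.831, 150),
    "Geng et al. [44]": (0.871, 35),
    "Zhang et al. [45]": (0.895, 190),
}


def dominates(a, b):
    """True if a is at least as good as b on both axes and differs from b."""
    return a[0] >= b[0] and a[1] >= b[1] and a != b


def pareto_front(models):
    """Names of models not dominated by any other model."""
    return sorted(name for name, m in models.items()
                  if not any(dominates(other, m) for other in models.values()))


print(pareto_front(MODELS))
```

With these numbers, only AH-YOLO and the shipboard model of Zhang et al. [45] remain on the front: the latter is faster (190 vs. 171 FPS) but less accurate (0.895 vs. 0.938 mAP@0.5).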

Figure 11 illustrates the training dynamics of AH-YOLO, covering loss functions (box loss, classification loss, and distribution focal loss) and evaluation metrics (precision, recall, mAP@0.5, and mAP@0.5:0.95). The model exhibits fast and stable convergence, with the validation performance plateauing around epoch 60. Early stopping at 150 epochs effectively mitigated overfitting.
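Patience-based early stopping ends training once the validation metric has not improved for a fixed number of consecutive epochs. A minimal sketch follows; the validation curve and the patience value are hypothetical and are not the settings used in this study.

```python
def early_stopping_epoch(val_scores, patience, max_epochs):
    """Return (stop_epoch, best_epoch) for patience-based early stopping.

    Training stops once `patience` epochs have passed without a new best score;
    otherwise it runs until the scores or the epoch budget run out.
    """
    best, best_epoch = float("-inf"), 0
    for epoch, score in enumerate(val_scores[:max_epochs], start=1):
        if score > best:
            best, best_epoch = score, epoch
        elif epoch - best_epoch >= patience:
            return epoch, best_epoch
    return min(len(val_scores), max_epochs), best_epoch


# Hypothetical validation curve: improves until epoch 40, then plateaus.
curve = [min(e, 40) / 40 for e in range(1, 301)]
stop, best = early_stopping_epoch(curve, patience=20, max_epochs=300)
print(f"stopped at epoch {stop}, best epoch {best}")
```

The stopping epoch is therefore the last improving epoch plus the patience window, which is why training can end well after the curves visibly plateau.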

Figure 11.

AH-YOLO visualization results.

In addition to deep learning-based models, we further compared AH-YOLO with conventional fire detection technologies, as summarized in Table 5. These include Distributed Optical Fiber Heat Detectors [46], Thermal Resistance Sensors [47], and Miscellaneous Heat Detectors [48]. The comparison considers core indicators such as the detection element, working principle, response time, and detection area. While traditional systems rely on physical phenomena such as heat conduction or temperature sensing, their response times are generally slower (e.g., up to 120 s) and often limited by environmental constraints.

Table 5.

Comparison of different fire detection technologies and their characteristics.

In contrast, AH-YOLO combines infrared imaging with intelligent decision making, achieving a per-frame inference time of about 5.9 ms (roughly consistent with the reported 171 FPS) and remaining effective in low-visibility conditions such as smoke-filled hangars. This figure is model inference latency rather than an end-to-end alarm response time. Although some traditional methods offer advantages in simplicity or material-specific sensitivity, they lack the adaptability and real-time responsiveness required in dynamic scenarios. This highlights the practical advantages of AI-based approaches for time-critical fire safety applications.

3.3.3. Model Comparison and Visualization

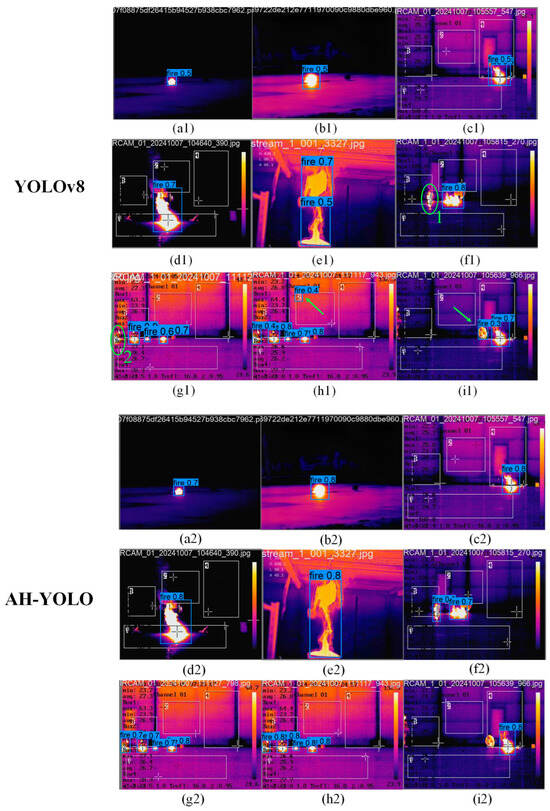

Given the practical deployment setting, we performed a side-by-side comparison of AH-YOLO and YOLOv8n using infrared fire scenarios (Figure 12). These included small fires at varying distances, multi-source fires, and scenes with human interference.

Figure 12.

Comparative performance of AH-YOLO and YOLOv8n in various hangar fire scenarios: YOLOv8n results (a1–i1); AH-YOLO results (a2–i2). Subfigures show detection performance under varying distances, multiple fire sources, complex backgrounds, and human interference.

In near-field and far-field detection (Figure 12(a1–e1) for YOLOv8n and Figure 12(a2–e2) for AH-YOLO), AH-YOLO produced consistently higher confidence scores and tighter bounding boxes. In cluttered scenes with multiple ignition points (Figure 12(f1–h1) for YOLOv8n and Figure 12(f2–h2) for AH-YOLO), YOLOv8n exhibited frequent false positives and missed detections, whereas AH-YOLO maintained accurate localization. Figure 12(i1) and Figure 12(i2) further show that AH-YOLO better distinguishes flames from surrounding interference, producing fewer misclassifications than YOLOv8n in human-initiated ignition scenes. Together with infrared imaging and data augmentation, these architectural improvements explain AH-YOLO’s consistent performance under long-distance, low-light, and multi-source interference conditions.

To quantify the recognition accuracy of the two models, we analyzed their detection errors; the results are presented in Table 6.

Table 6.

Comparison of detection errors between AH-YOLO and YOLOv8.

Notably, AH-YOLO achieved a false alarm rate of only 3.4%, reflecting its reliability in practical scenarios. To put this result in context, we compared it with three recent sensor-integrated fire detection systems, each employing modern algorithmic strategies. Park et al. [49] applied fuzzy logic to IoT-based fire signals and reported a false alarm rate of 13.7%. Dampage et al. [50] used a regression-based forest fire detection system with a 10% false alarm rate, while Baek et al. [51] reported a 7.3% false alarm rate using a KNN-based multi-sensor system. Despite differences in hardware configuration, datasets, and application domain, AH-YOLO maintains a markedly lower false alarm rate, underscoring the advantage of vision-based deep learning in reducing false positives, particularly in enclosed, interference-prone environments such as aircraft hangars.
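The definition of the false alarm rate is not restated here. The sketch below therefore assumes one common convention: the share of raised alarms that are false, FP / (TP + FP). It also computes the missed detection rate, FN / (TP + FN), and works through the gap between the 3.4% figure and the three quoted rates. The cited systems may define the false alarm rate differently, so the differences are indicative only.

```python
def false_alarm_rate(tp: int, fp: int) -> float:
    """Assumed definition: share of raised alarms that are false, FP / (TP + FP)."""
    alarms = tp + fp
    return fp / alarms if alarms else 0.0


def missed_detection_rate(tp: int, fn: int) -> float:
    """Share of real fires that go undetected, FN / (TP + FN)."""
    fires = tp + fn
    return fn / fires if fires else 0.0


def relative_reduction(baseline: float, value: float) -> float:
    """Fractional reduction of `value` with respect to `baseline`."""
    return (baseline - value) / baseline


if __name__ == "__main__":
    ah_yolo = 3.4  # false alarm rate (%) reported for AH-YOLO
    # Rates (%) quoted in the text; definitions and datasets may differ.
    reported = {"Park et al. [49]": 13.7, "Dampage et al. [50]": 10.0,
                "Baek et al. [51]": 7.3}
    for name, far in reported.items():
        print(f"{name}: {far - ah_yolo:.1f} pp lower, "
              f"{100 * relative_reduction(far, ah_yolo):.1f}% relative reduction")
```

On these numbers, AH-YOLO's rate is about 10.3, 6.6, and 3.9 percentage points lower, which corresponds to relative reductions of roughly 75%, 66%, and 53%.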

4. Conclusions and Future Work

This work proposes AH-YOLO, a lightweight fire detection model tailored to aircraft hangars that addresses their principal challenges: false alarms, limited computational resources, and the early detection of fires in large spaces. Building on YOLOv8n, AH-YOLO integrates MobileOne to improve computational efficiency, CBAM to refine feature representation, and DyHead to achieve adaptive target perception. Compared with YOLOv8n, it increases inference speed by 19.0% and reduces parameters by 15.6% while achieving 93.8% mAP@0.5. The model's effectiveness is validated on a custom infrared thermal imaging dataset collected through controlled combustion experiments with six representative materials, covering distances from 0 to 50 m and heights from 0 to 5 m to enhance generalizability.
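For readers unfamiliar with the metric, mAP@0.5 averages per-class average precision (AP), where AP is the area under the precision-recall curve and a detection counts as correct when its IoU with a ground-truth box is at least 0.5. The following Python sketch computes all-point interpolated AP for a single class from detections that have already been labeled as true or false positives. It is a generic illustration. YOLOv8's own evaluation code may use a different interpolation scheme (e.g. sampling recall at fixed points), so the resulting values can differ slightly.

```python
from typing import List, Tuple


def average_precision(dets: List[Tuple[float, bool]], num_gt: int) -> float:
    """All-point interpolated AP for one class.

    dets   -- (confidence, is_true_positive) pairs; is_true_positive is assumed
              to come from matching against ground truth at IoU >= 0.5.
    num_gt -- number of ground-truth objects of this class.
    """
    if num_gt == 0:
        return 0.0
    tp = fp = 0
    recalls, precisions = [], []
    for _conf, is_tp in sorted(dets, key=lambda d: d[0], reverse=True):
        if is_tp:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Pad the curve, then make precision non-increasing from right to left.
    r = [0.0] + recalls + [1.0]
    p = [1.0] + precisions + [0.0]
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    # Area under the stepwise curve; steps where recall is unchanged add zero.
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


def mean_ap(per_class_ap: List[float]) -> float:
    """mAP is the mean of the per-class AP values."""
    return sum(per_class_ap) / len(per_class_ap) if per_class_ap else 0.0


if __name__ == "__main__":
    # Hypothetical detections for 3 ground-truth fires, ranked TP, FP, TP.
    dets = [(0.9, True), (0.8, False), (0.7, True)]
    print(f"AP@0.5 = {average_precision(dets, num_gt=3):.3f}")  # 0.556
```

In the example, the false positive ranked second and the third, undetected fire together pull the area under the curve down to 5/9 ≈ 0.556.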

Despite the promising results, AH-YOLO still faces several limitations that warrant further exploration. The current research focuses primarily on software-level evaluation, without actual deployment on embedded or edge hardware. This limits the assessment of its real-time performance and energy efficiency in practical fire detection systems. In addition, the dataset remains relatively small and scene diversity is limited, which constrains the model’s generalization ability in complex real-world scenarios.

Future work will address these challenges by expanding the dataset to include more complex fire conditions, such as high-illumination scenes, multi-scale flames, and interference-rich environments, to enhance model robustness. Efforts will also be made to deploy the model on embedded platforms such as the NVIDIA Jetson Nano or Raspberry Pi, incorporating quantization and pruning to reduce computational cost and ensure real-time inference under hardware constraints.
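As a rough illustration of the two compression steps mentioned above, the plain-Python sketch below applies unstructured magnitude pruning and symmetric per-tensor int8 post-training quantization to a small list of hypothetical weights. It is not the authors' deployment pipeline. On embedded hardware these steps would typically be handled by a toolchain such as TensorRT (on Jetson) or ONNX Runtime, and per-channel scales or quantization-aware training may preserve accuracy better.

```python
from typing import List, Tuple


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Zero the `sparsity` fraction of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:k]:
        pruned[i] = 0.0
    return pruned


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization: w ≈ scale * q, q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Map int8 codes back to approximate float weights."""
    return [scale * v for v in q]


if __name__ == "__main__":
    w = [0.8, -0.05, 0.3, -1.27, 0.01, 0.6]  # hypothetical layer weights
    pruned = magnitude_prune(w, sparsity=0.5)
    q, scale = quantize_int8(pruned)
    print(pruned)  # [0.8, 0.0, 0.0, -1.27, 0.0, 0.6]
    print(q)       # [80, 0, 0, -127, 0, 60]
    err = max(abs(a - b) for a, b in zip(pruned, dequantize(q, scale)))
    print(f"scale={scale:.4f}, max reconstruction error={err:.5f}")
```

Symmetric scaling maps the largest-magnitude weight to ±127, so the rounding error per weight is at most half a quantization step. Pruning only helps latency if the runtime can exploit the resulting sparsity.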

Moreover, using the expanded dataset collected via dual-lens thermal imaging, future work will explore visible-infrared fusion techniques to fully exploit cross-modal information. This direction is expected to improve feature representation, enhance detection accuracy, and further reduce false positives and false negatives in complex fire scenarios.

In conclusion, these improvements position AH-YOLO as a scalable and cost-effective solution for aircraft hangar fire monitoring, delivering high accuracy, real-time performance, and enhanced adaptability.

Author Contributions

Conceptualization, L.D. and Q.L.; Methodology, L.D. and Z.W.; Software, L.D. and Z.W.; Validation, L.D. and Z.W.; Formal analysis, L.D. and Z.W.; Investigation, L.D. and Z.W.; Resources, L.D. and Q.L.; Data curation, Z.W.; Writing—original draft, L.D. and Z.W.; Writing—review and editing, L.D.; Visualization, Z.W.; Supervision, L.D. and Q.L.; Project administration, L.D.; Funding acquisition, L.D. and Q.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Civil Aviation Joint Research Fund of the National Natural Science Foundation of China (U2033206); the Key Laboratory Project of Sichuan Province, No. MZ2022JB01; the Aeronautical Science Foundation of China (ASFC-20200046117001); and the Fundamental Research Funds for the Central Universities (Grant Number 25CAFUC01007).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Tanis, F.J.J. Life Safety and Fire Analysis-Aircraft Hangar. Fire Prot. Eng. Culminating Exp. Proj. Rep. 2018. Paper 93. Available online: https://digitalcommons.calpoly.edu/fpe_rpt/93 (accessed on 8 March 2025).

- Wade, J.T.; Lohar, B.; Lippert, K.; Cloutier, R.; Walker, S.; Olanipekun, O. A probabilistic analysis of non-military hangar fire protection systems. J. Fire Sci. 2024, 42, 297–313.

- NFPA 409; Standard on Aircraft Hangars. National Fire Protection Association: Quincy, MA, USA, 2022.

- Iyaghigba, S.D.; Ayhok, C.S. Hangar fire detection alarm with algorithm for extinguisher. Glob. J. Eng. Technol. Adv. 2021, 7, 144–155.

- Yandouzi, M.; Berrahal, M.; Grari, M.; Boukabous, M.; Moussaoui, O.; Azizi, M.; Ghoumid, K.; Kerkour Elmiad, A. Semantic segmentation and thermal imaging for forest fires detection and monitoring by drones. Bull. Electr. Eng. Inform. 2024, 13, 2784–2796.

- Ding, Y.; Wang, M.; Fu, Y.; Wang, Q. Forest Smoke-Fire Net (FSF Net): A Wildfire Smoke Detection Model That Combines MODIS Remote Sensing Images with Regional Dynamic Brightness Temperature Thresholds. Forests 2024, 15, 839.

- Wang, T.; Li, P.; Fang, S.; Zhang, P.; Yang, Y.; Liu, H.; Liu, L. A Multifunctional Sensing and Heating Fabric Based on Carbon Nanotubes Conductive Film. IEEE Sens. J. 2023, 23, 17990–17999.

- Wang, Y.; Li, Q.; Zhang, J.; Yin, C.; Zhang, Q.; Shi, Y.; Men, H. A gas detection system combined with a global extension extreme learning machine for early warning of electrical fires. Sens. Actuators B Chem. 2025, 423, 136801.

- Kong, S.; Deng, J.; Yang, L.; Liu, Y. An attention-based dual-encoding network for fire flame detection using optical remote sensing. Eng. Appl. Artif. Intell. 2024, 127, 107238.

- Bhandari, A.K.; Kumar, I.V.; Srinivas, K. Cuttlefish Algorithm-Based Multilevel 3-D Otsu Function for Color Image Segmentation. IEEE Trans. Instrum. Meas. 2020, 69, 1871–1880.

- Ding, C.; Zhang, Z.; Li, F.; Zhang, J. Traffic Image Dehazing Based on HSV Color Space. In Proceedings of the 2021 33rd Chinese Control and Decision Conference (CCDC), Kunming, China, 22–24 May 2021; pp. 5442–5447.

- Chang, H.C.; Hsu, Y.L.; Hsiao, C.Y.; Chen, Y.F. Design and Implementation of an Intelligent Autonomous Surveillance System for Indoor Environments. IEEE Sens. J. 2021, 21, 17335–17349.

- Chen, X.; An, Q.; Yu, K. Fire identification based on improved multi feature fusion of YCbCr and regional growth. Expert Syst. Appl. 2024, 241, 122661.

- Pritam, D.; Dewan, J.H. Detection of fire using image processing techniques with LUV color space. In Proceedings of the 2017 2nd International Conference for Convergence in Technology (I2CT), Mumbai, India, 7–9 April 2017; pp. 1158–1162.

- Li, H.; Sun, P. Image-Based Fire Detection Using Dynamic Threshold Grayscale Segmentation and Residual Network Transfer Learning. Mathematics 2023, 11, 3940.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Proceedings of the Computer Vision – ECCV 2016, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Cham, Switzerland, 2016; pp. 21–37.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 318–327.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Cai, Z.; Vasconcelos, N. Cascade R-CNN: Delving Into High Quality Object Detection. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6154–6162.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 386–397.

- Abdusalomov, A.; Baratov, N.; Kutlimuratov, A.; Whangbo, T.K. An Improvement of the Fire Detection and Classification Method Using YOLOv3 for Surveillance Systems. Sensors 2021, 21, 6519.

- Yar, H.; Khan, Z.A.; Ullah, F.U.M.; Ullah, W.; Baik, S.W. A modified YOLOv5 architecture for efficient fire detection in smart cities. Expert Syst. Appl. 2023, 231, 120465.

- Xu, Y.; Li, J.; Zhang, L.; Liu, H.; Zhang, F. CNTCB-YOLOv7: An Effective Forest Fire Detection Model Based on ConvNeXtV2 and CBAM. Fire 2024, 7, 54.

- Tsai, P.-F.; Liao, C.-H.; Yuan, S.-M. Using Deep Learning with Thermal Imaging for Human Detection in Heavy Smoke Scenarios. Sensors 2022, 22, 5351.

- Niu, K.; Wang, C.; Xu, J.; Yang, C.; Zhou, X.; Yang, X. An Improved YOLOv5s-Seg Detection and Segmentation Model for the Accurate Identification of Forest Fires Based on UAV Infrared Image. Remote Sens. 2023, 15, 4694.

- Wang, A.; Chen, H.; Lin, Z.; Han, J.; Ding, G. RepViT: Revisiting Mobile CNN From ViT Perspective. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 15909–15920.

- Tan, M.; Le, Q. EfficientNetV2: Smaller Models and Faster Training. In Proceedings of the 38th International Conference on Machine Learning, Virtual, 18–24 July 2021; pp. 10096–10106.

- Koonce, B. MobileNetV3. In Convolutional Neural Networks with Swift for Tensorflow: Image Recognition and Dataset Categorization; Koonce, B., Ed.; Apress: Berkeley, CA, USA, 2021; pp. 125–144.

- Wang, C.-Y.; Yeh, I.H.; Mark Liao, H.-Y. YOLOv9: Learning What You Want to Learn Using Programmable Gradient Information. In Proceedings of the Computer Vision – ECCV 2024, Milan, Italy, 29 September–4 October 2024; Springer: Cham, Switzerland, 2024; pp. 1–21.

- Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J. YOLOv10: Real-Time End-to-End Object Detection. Adv. Neural Inf. Process. Syst. 2024, 37, 107984–108011.

- Khanam, R.; Hussain, M. YOLOv11: An Overview of the Key Architectural Enhancements. arXiv 2024, arXiv:2410.17725.

- Deng, L.; Wu, S.; Zhou, J.; Zou, S.; Liu, Q. LSKA-YOLOv8n-WIoU: An Enhanced YOLOv8n Method for Early Fire Detection in Airplane Hangars. Fire 2025, 8, 67.

- Vasu, P.K.A.; Gabriel, J.; Zhu, J.; Tuzel, O.; Ranjan, A. MobileOne: An Improved One millisecond Mobile Backbone. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 7907–7917.

- Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. In Proceedings of the Computer Vision – ECCV 2018, Munich, Germany, 8–14 September 2018; Springer: Cham, Switzerland, 2018; pp. 3–19.

- Dai, X.; Chen, Y.; Xiao, B.; Chen, D.; Liu, M.; Yuan, L.; Zhang, L. Dynamic Head: Unifying Object Detection Heads with Attentions. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 7369–7378.

- Reis, D.; Kupec, J.; Hong, J.; Daoudi, A. Real-Time Flying Object Detection with YOLOv8. arXiv 2023, arXiv:2305.09972.

- Xue, Q.; Lin, H.; Wang, F. FCDM: An Improved Forest Fire Classification and Detection Model Based on YOLOv5. Forests 2022, 13, 2129.

- Hu, J.; Liu, W.; Hou, T.; Zhou, C.; Zhong, H.; Li, Z. YOLO-LF: A lightweight model for fire detection. Res. Sq. 2024.

- Zhang, W.; Wang, K.; Zhou, X.J.; Shi, L.; Song, X.S. MobileOne-YOLO: An Improved Real-Time Fire Detection Algorithm for Aircraft Cargo Compartments. J. Aerosp. Inf. Syst. 2025, 1–10.

- Huynh, T.T.; Nguyen, H.T.; Phu, D.T. Enhancing Fire Detection Performance Based on Fine-Tuned YOLOv10. Comput. Mater. Contin. 2024, 81, 57954.

- Wang, J.; Wang, C.; Ding, W.; Li, C. YOLOv5s-ACE: Forest Fire Object Detection Algorithm Based on Improved YOLOv5s. Fire Technol. 2024, 60, 4023–4043.

- Liu, Z.; Zhang, R.; Zhong, H.; Sun, Y. YOLOv8 for Fire and Smoke Recognition Algorithm Integrated with the Convolutional Block Attention Module. Open J. Appl. Sci. 2024, 14, 141012.

- Geng, X.; Han, X.; Cao, X.; Su, Y.; Shu, D. YOLOV9-CBM: An Improved Fire Detection Algorithm Based on YOLOV9. IEEE Access 2025, 13, 19612–19623.

- Zhang, Z.; Tan, L.; Tiong, R.L. Ship-Fire Net: An Improved YOLOv8 Algorithm for Ship Fire Detection. Sensors 2024, 24, 727.

- Sun, M.; Tang, Y.; Yang, S.; Li, J.; Sigrist, M.W.; Dong, F. Fire Source Localization Based on Distributed Temperature Sensing by a Dual-Line Optical Fiber System. Sensors 2016, 16, 829.

- Cao, C.; Yuan, B. Thermally induced fire early warning aerogel with efficient thermal isolation and flame-retardant properties. Polym. Adv. Technol. 2021, 32, 2159–2168.

- Kushnir, A.; Kopchak, B.; Gavryliuk, A. Operational algorithm for a heat detector used in motor vehicles. East.-Eur. J. Enterp. Technol. 2021, 3, 111.

- Park, S.H.; Kim, D.H.; Kim, S.C. Recognition of IoT-based fire-detection system fire-signal patterns applying fuzzy logic. Heliyon 2023, 9, e12964.

- Dampage, U.; Bandaranayake, L.; Wanasinghe, R.; Kottahachchi, K.; Jayasanka, B. Forest fire detection system using wireless sensor networks and machine learning. Sci. Rep. 2022, 12, 46.

- Baek, J.; Alhindi, T.J.; Jeong, Y.-S.; Jeong, M.K.; Seo, S.; Kang, J.; Shim, W.; Heo, Y. Real-time fire detection system based on dynamic time warping of multichannel sensor networks. Fire Saf. J. 2021, 123, 103364.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).