1. Introduction

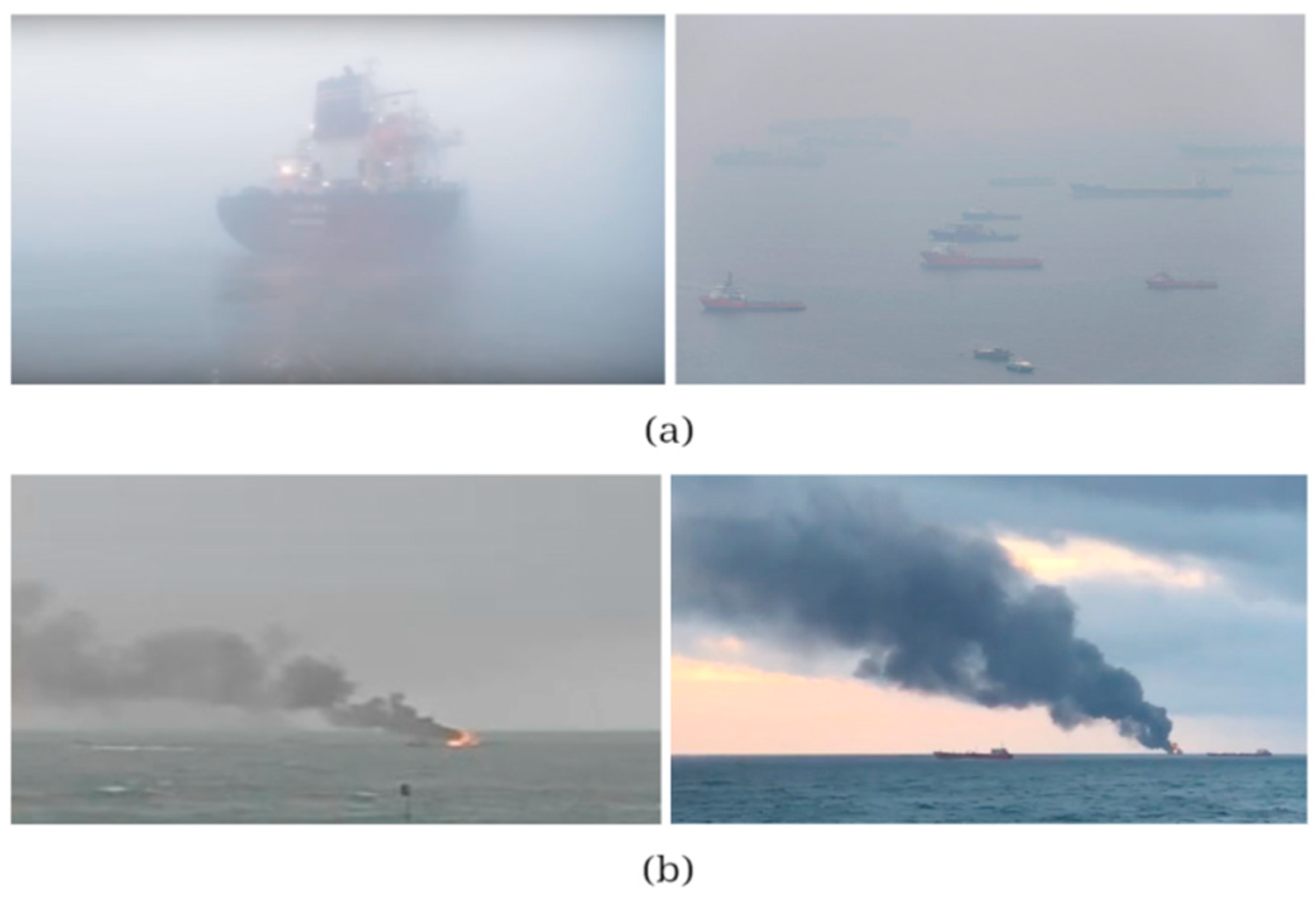

Maritime transportation is a critical component of global trade and logistics, with millions of ships navigating the world’s oceans and waterways annually. Despite the robustness of modern maritime operations, fires onboard ships remain a significant threat to vessel safety, cargo integrity, and human lives. Ship fires can originate from various sources, including engine malfunctions, electrical faults, flammable cargo, and human error. Although shipping losses have decreased by 50% over the past ten years, fires on ships remain one of the most severe safety issues in the maritime environment. In 2022, 200 fire incidents were recorded, the highest annual figure in the past ten years. Of these incidents, 43 were linked to ships carrying cargo or containers, highlighting the growing vulnerability of this segment of the maritime sector. According to a report by the World Shipping Council (WSC) [1], there has been a troubling increase in fires aboard containerships, many of which have led to fatalities and total losses. Statistical estimates indicate that a serious ship fire occurs every 60 days.

The financial and human toll of ship fires is substantial. Allianz Global Corporate & Specialty’s (AGCS) Safety and Shipping Review [2] reports that fire and explosion incidents were the third most common cause of loss for the global shipping industry in 2015 and 2019, accounting for approximately 7% of total losses. The average cost of a ship fire can range from tens of thousands to millions of dollars, depending on the severity of the fire and the value of the cargo; with a serious fire occurring every 60 days, these losses accumulate quickly. In 2018, the total cost of losses from ship fires was estimated at over USD 1 billion. Ship fires have also caused numerous fatalities and injuries, highlighting the urgent need for effective detection and prevention measures. Possible measures to prevent ship fires include strict safety regulations, regular maintenance, crew training, and advanced fire suppression systems for both onboard and outside-the-board situations.

Several researchers [3,4] have studied image-based fire detection approaches using artificial intelligence. Convolutional neural networks (CNNs) have been incorporated into image-based fire detection, leading to self-learning algorithms that autonomously extract and analyze fire-related features from images [5,6]. This integration of CNNs has transformed traditional fire detection by enabling systems to automatically learn complex patterns and characteristics associated with fire, such as flame color, shape, and dynamic behavior, without relying on manually crafted features. The use of CNNs in this context improves the accuracy and efficiency of fire detection, particularly in diverse and challenging environments. Building on these advancements, this research proposes the application of computer vision (CV) algorithms for detecting fires aboard ships. In particular, the proposed method addresses the critical need for reliable and efficient ship fire detection in the maritime domain. These algorithms can be trained to identify patterns linked to ship fires in video streams, both from onboard cameras and from cameras observing ships at a distance. This enables them to detect smoke quickly and accurately in various compartments of the ship. The main goal is to detect a fire as soon as it starts, since early detection is crucial for fire prevention and mitigation. By identifying smoke at its initial stages, before the situation escalates into a full-fledged fire, these CV systems can notify the crew or initiate automated responses. Such early intervention can make a critical difference in preventing extensive financial and physical damage or loss of life.

Furthermore, integrating CV-based smoke detection algorithms with other fire prevention measures can produce more comprehensive safety systems. For instance, these algorithms can work in conjunction with temperature sensors and gas detectors to provide a multi-faceted approach to fire detection. This synergy improves the reliability and effectiveness of the overall fire prevention strategy, helping to ensure that even the smallest signs of a potential fire are promptly addressed.
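The multi-sensor idea can be illustrated with a simple decision-level fusion rule. The sketch below assumes a hypothetical 2-out-of-3 vote over a CV smoke confidence, a temperature reading, and a carbon monoxide reading; the class name, field names, and thresholds are illustrative assumptions, not values from this study or from any regulation.

```python
from dataclasses import dataclass

# Illustrative thresholds only (hypothetical); real values depend on the
# compartment, detector type, and applicable regulations.
SMOKE_PROB_THRESHOLD = 0.6   # CV model confidence that smoke/fire is visible
TEMP_THRESHOLD_C = 60.0      # heat detector reading, degrees Celsius
CO_THRESHOLD_PPM = 50.0      # gas detector reading, carbon monoxide in ppm


@dataclass
class CompartmentReading:
    """One synchronized snapshot of three (hypothetical) sources for a compartment."""
    smoke_prob: float
    temperature_c: float
    co_ppm: float


def fused_alarm(reading: CompartmentReading, votes_needed: int = 2) -> bool:
    """Raise an alarm when at least `votes_needed` sources exceed their thresholds."""
    votes = [
        reading.smoke_prob >= SMOKE_PROB_THRESHOLD,
        reading.temperature_c >= TEMP_THRESHOLD_C,
        reading.co_ppm >= CO_THRESHOLD_PPM,
    ]
    return sum(votes) >= votes_needed


print(fused_alarm(CompartmentReading(0.85, 24.0, 70.0)))  # True: camera and gas agree
print(fused_alarm(CompartmentReading(0.85, 24.0, 5.0)))   # False: camera alone (e.g., sun glare)
```

Requiring agreement between independent sources is one way to suppress single-sensor false alarms, such as glare that fools the camera, at the cost of slightly later alarms; a real system would also need per-compartment calibration and smoothing over time.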

In addition to detection, CV algorithms can be used for continuous monitoring of fire-prone areas. By constantly analyzing video feeds, the system can provide real-time updates on the status of these areas, enabling rapid and clear responses to any changes. This continuous monitoring is particularly important in high-risk zones such as engine rooms, cargo holds, and kitchens, where fires are more likely to occur. Real-time video analysis is equally valuable outside the ship (outside-the-board analysis), for example, in port fires, where a fire originating on one ship spreads to adjacent vessels and infrastructure. Such fires can pose a significant risk to maritime operations and coastal safety. In 2020, for instance, a fire at the port of Savannah spread from one container to others [7]. This incident prompted a review of fire safety protocols and the adoption of improved fire detection systems. Overall, early detection, continuous monitoring, and coordinated response capabilities play a vital role in preventing fires and mitigating their impact. Integrating such advanced technology into traditional safety measures is essential for ensuring the well-being of the crew and protecting valuable assets at sea.

Recently, deep learning (DL) algorithms have been increasingly applied to visual data analysis, including fire identification. The combination of these algorithms with CV and image processing techniques, growing hardware computing capabilities, and the proliferation of video surveillance networks has catalyzed a significant shift towards more sophisticated fire detection technologies. CNNs, in particular, have shown exceptional progress in extracting intricate image features. The development of video surveillance systems and the increasing automation and intelligence of modern ships present a great opportunity to combine monitoring and DL technologies for fire detection.

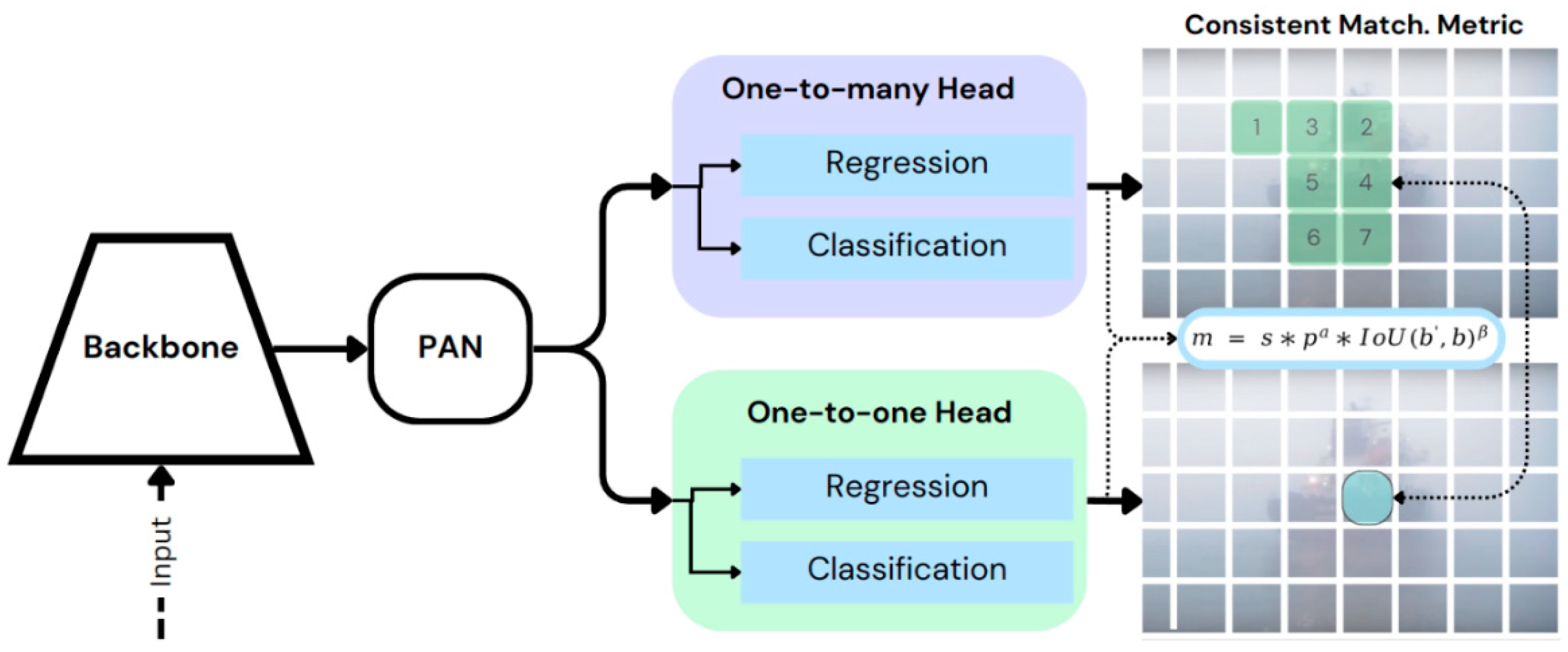

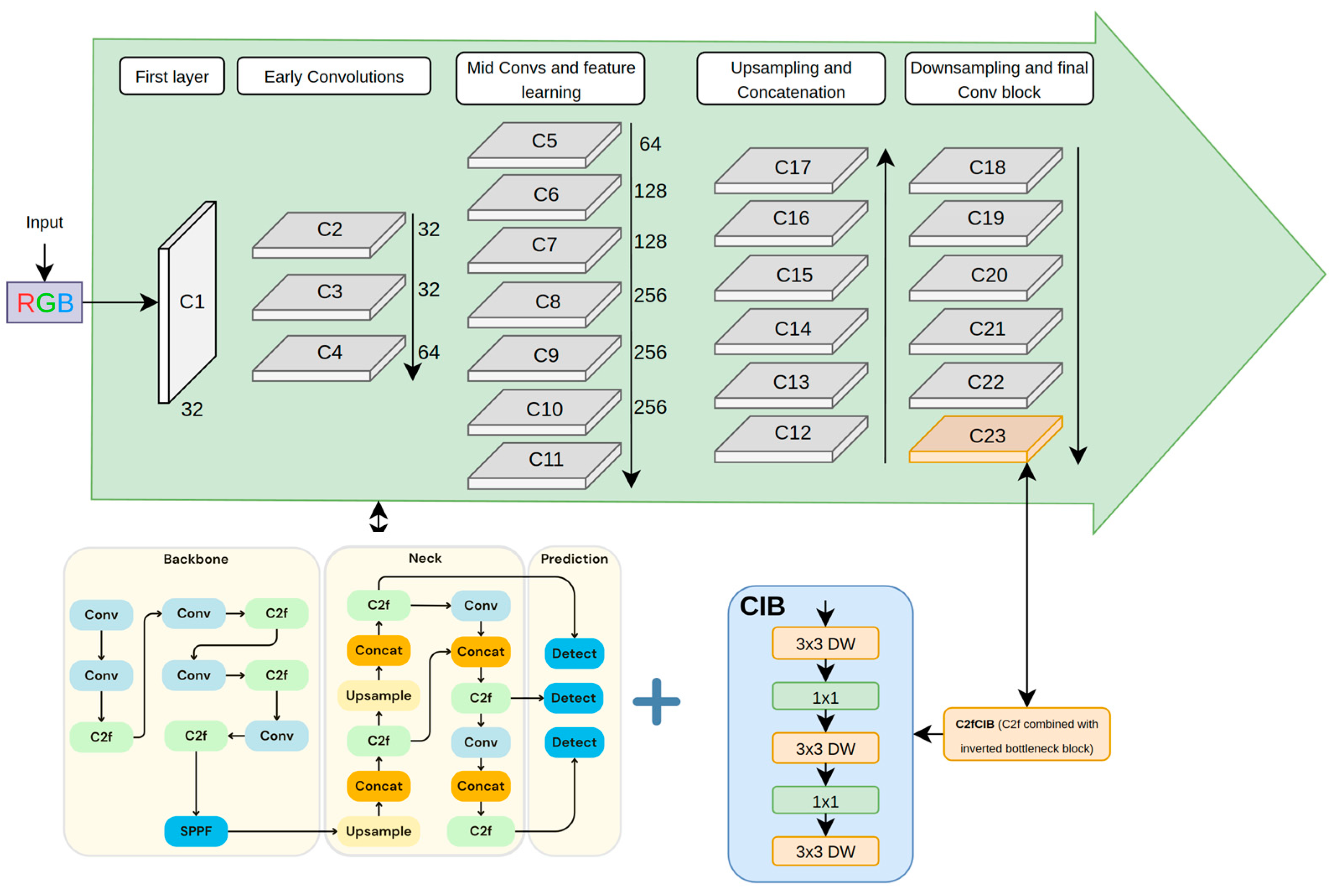

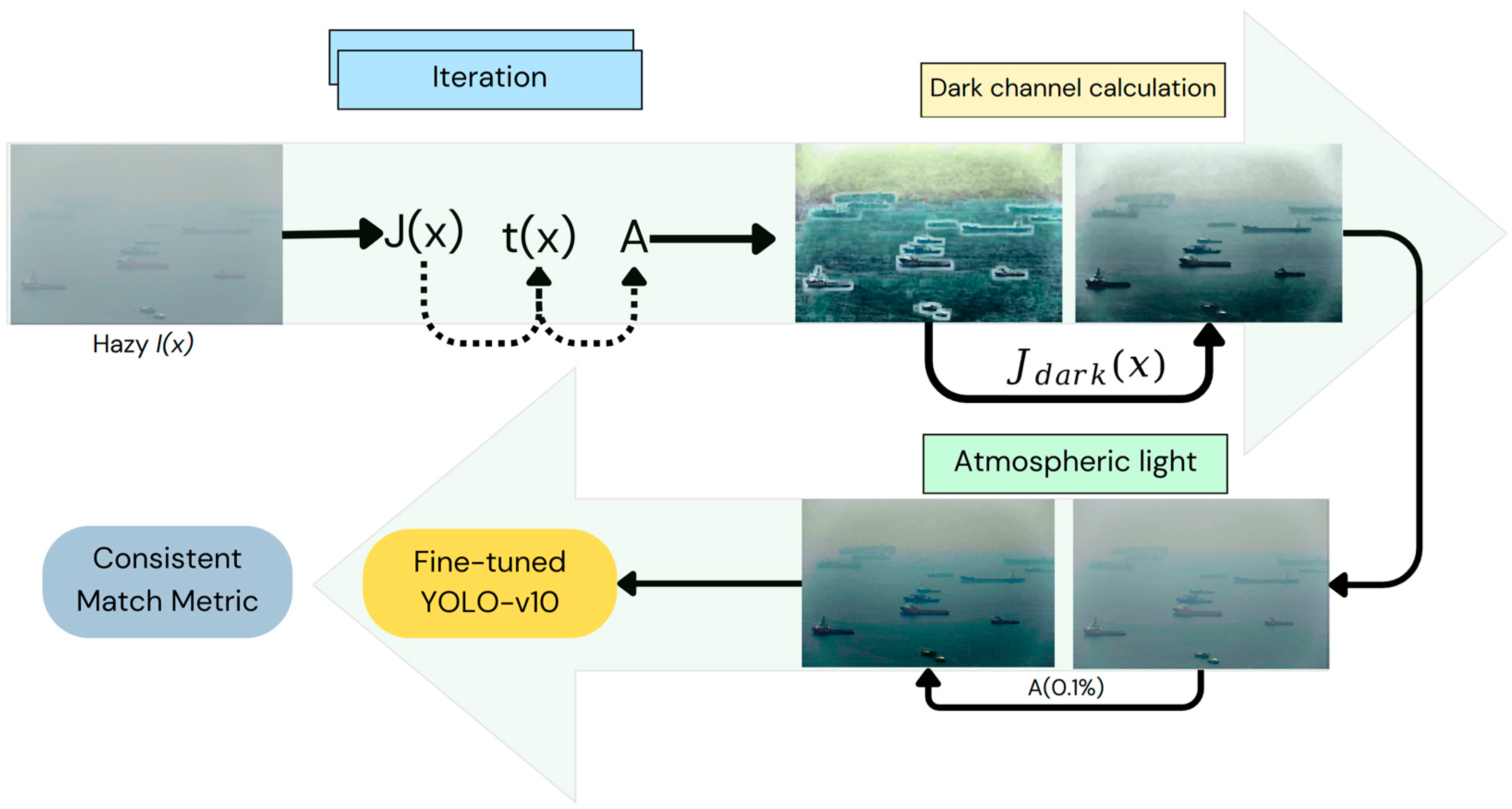

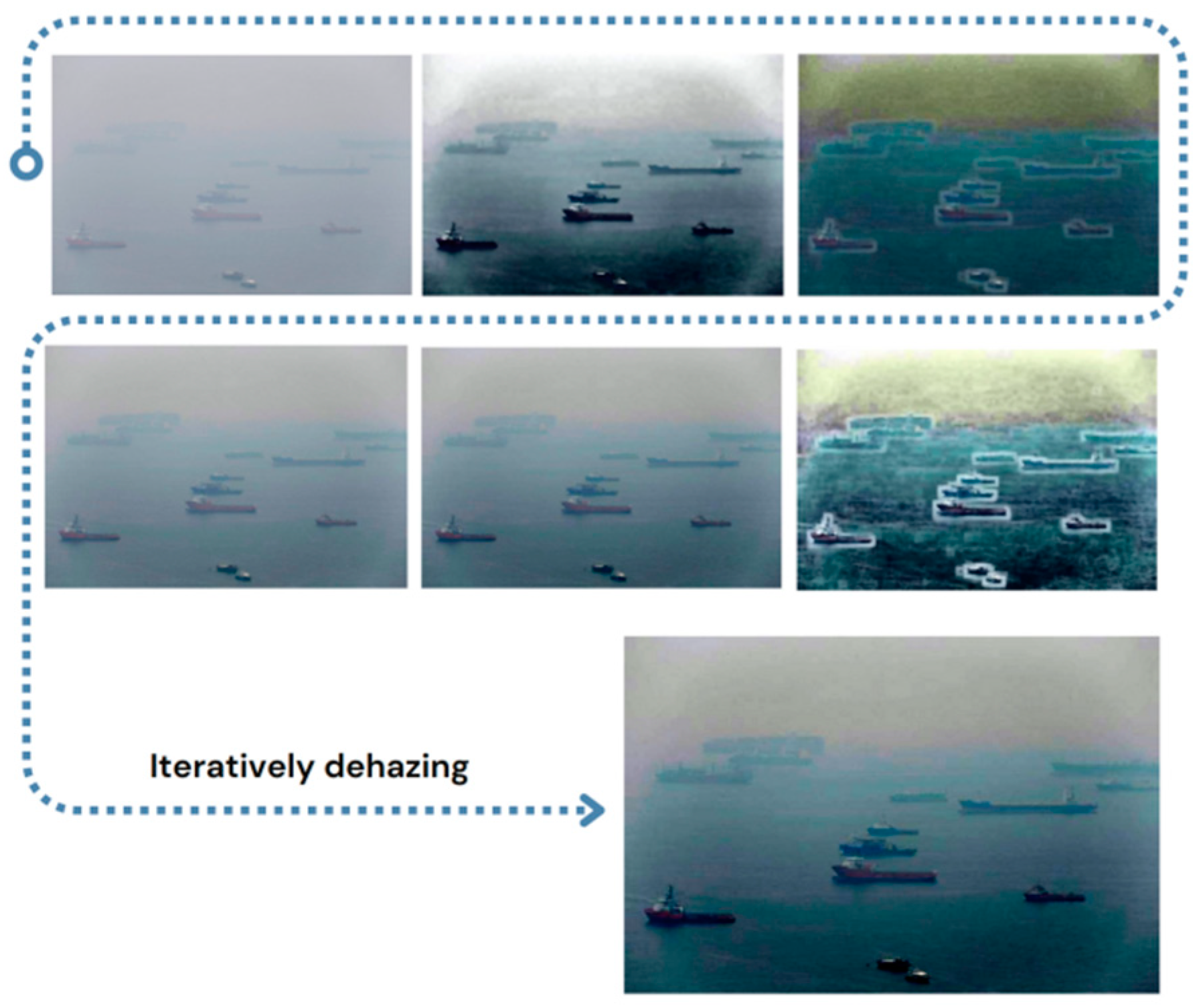

In this paper, we apply the YOLO algorithm, integrated with a dehazing technique, to ship fire detection. YOLO’s ability to perform real-time object detection with high accuracy and speed makes it an ideal candidate for this application, enabling swift and accurate identification of fire incidents and thereby improving overall safety and response efficiency. To improve visual clarity, we combine the ship fire detection process with dehazing algorithms. Dehazing algorithms improve image clarity and visibility in the presence of atmospheric haze, fog, and water vapor, which makes them useful in maritime environments where visibility can be significantly reduced by such conditions. We developed our ship fire detection model by fine-tuning the YOLO-v10m [8] model, a variant of YOLO-v10, which was introduced by researchers at Tsinghua University and improves on previous YOLO releases. The model is described in Section 3.
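The dehazing algorithm is not specified at this point, so as an illustration we sketch the dark channel prior of He et al., a widely used single-image dehazing method; it is an assumed stand-in, not necessarily the method used in this work. The method models a hazy image as I = J·t + A·(1 - t), where J is the haze-free scene, A is the atmospheric light, and t is the transmission. The pure-Python sketch below estimates A and t from the dark channel and inverts the model; the parameter names (`patch`, `omega`, `t0`) follow common conventions, the transmission refinement step (soft matting or guided filtering) is omitted, and the code favors clarity over speed.

```python
def dark_channel(img, patch=3):
    """Dark channel: min over color channels, then min over a patch x patch window."""
    h, w, r = len(img), len(img[0]), patch // 2
    per_pixel_min = [[min(img[y][x]) for x in range(w)] for y in range(h)]
    return [
        [
            min(
                per_pixel_min[yy][xx]
                for yy in range(max(0, y - r), min(h, y + r + 1))
                for xx in range(max(0, x - r), min(w, x + r + 1))
            )
            for x in range(w)
        ]
        for y in range(h)
    ]


def atmospheric_light(img, dark, top_fraction=0.001):
    """Among the brightest dark-channel pixels, pick the one with the highest intensity."""
    h, w = len(img), len(img[0])
    n = max(1, int(h * w * top_fraction))
    candidates = sorted(
        ((dark[y][x], y, x) for y in range(h) for x in range(w)), reverse=True
    )[:n]
    _, y, x = max(candidates, key=lambda c: sum(img[c[1]][c[2]]))
    return list(img[y][x])


def dehaze(img, patch=3, omega=0.95, t0=0.1):
    """Recover J = (I - A) / max(t, t0) + A, with t = 1 - omega * dark(I / A)."""
    h, w = len(img), len(img[0])
    A = atmospheric_light(img, dark_channel(img, patch))
    normalized = [
        [[img[y][x][c] / max(A[c], 1e-6) for c in range(3)] for x in range(w)]
        for y in range(h)
    ]
    dark_norm = dark_channel(normalized, patch)
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            t = max(1.0 - omega * dark_norm[y][x], t0)  # transmission, floored at t0
            row.append([min(1.0, max(0.0, (img[y][x][c] - A[c]) / t + A[c])) for c in range(3)])
        out.append(row)
    return out


hazy = [[[0.5, 0.5, 0.5], [0.9, 0.9, 0.9]]]  # 1 x 2 RGB image, values in [0, 1]
clear = dehaze(hazy)
print(clear[0][0][0], clear[0][1][0])
```

On this toy image the darker pixel is pushed away from the atmospheric light while the brighter one is unchanged, so the contrast between them roughly doubles, which is the intended effect of dehazing. In practice the same steps would be vectorized with an array library.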

This study makes three main contributions to marine safety through effective ship fire detection:

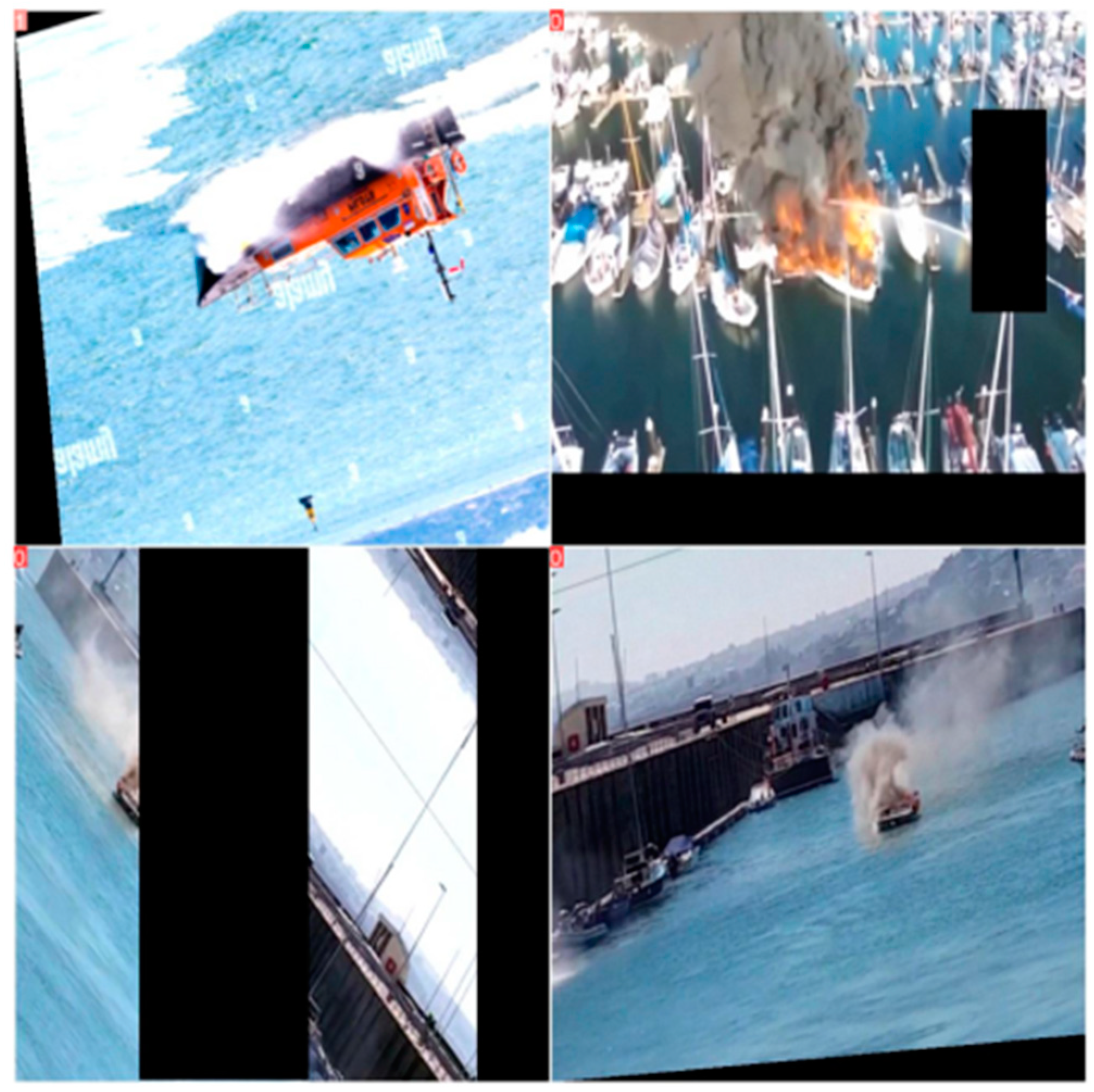

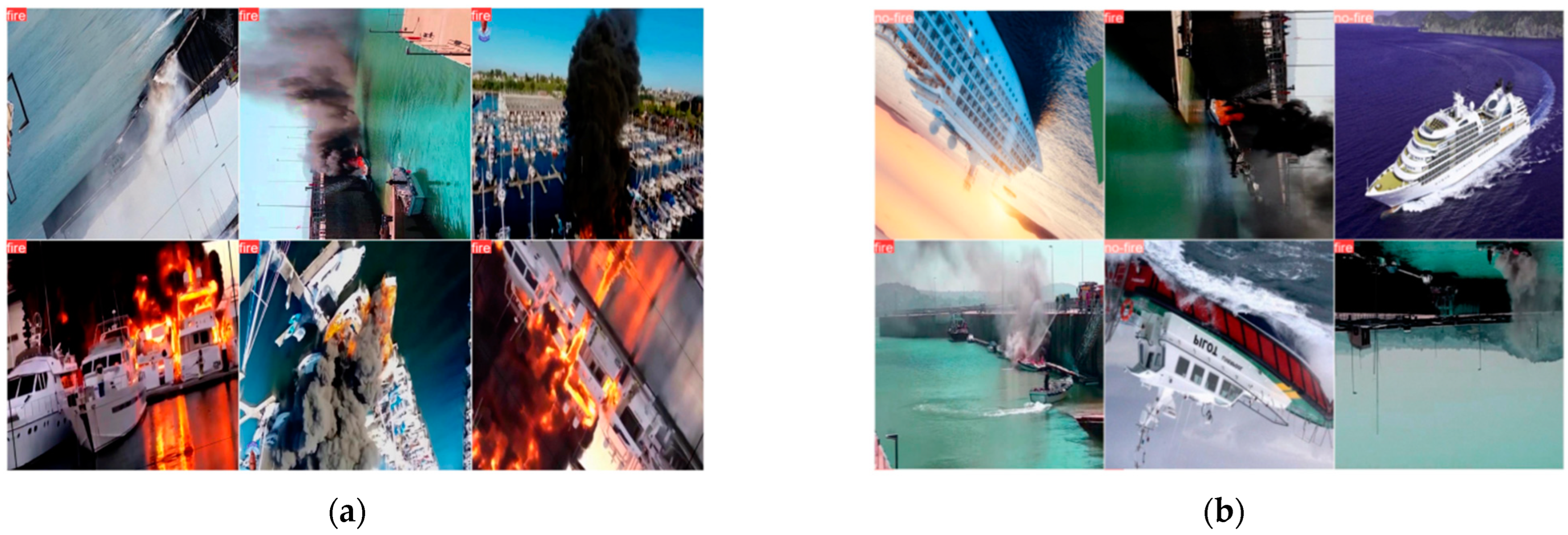

First, we created a large-scale dataset for ship fire detection.

Second, we constructed a state-of-the-art (SOTA) YOLO-v10-based ship fire detection model.

Third, we integrated dehazing-algorithm-based optimization into ship fire image classification.

This paper provides a detailed account of the dataset preparation, model training, and evaluation processes. The dataset is divided into two classes: “Fire” and “No-fire”. Fine-tuning the YOLO-v10 model on this custom dataset involved extensive training and validation to ensure the model’s robustness and accuracy in diverse marine environments. We further enhanced the detection capabilities of our model by incorporating advanced image processing techniques. These optimizations focused on making the model robust to noisy input data, such as varying lighting conditions and sunlight reflected from waves, to achieve high and reliable detection accuracy in real-world applications.
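Keeping the “Fire” and “No-fire” proportions equal in the training and validation subsets is one common way to make validation results representative of both classes. A minimal sketch of a stratified split follows, assuming the dataset is available as a list of (image path, label) pairs; the function name, the 20% validation fraction, the seed, and the file names are hypothetical and not taken from this study.

```python
import random
from collections import defaultdict


def stratified_split(samples, val_fraction=0.2, seed=0):
    """Split (image_path, label) pairs so each class keeps the same train/val ratio."""
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append((path, label))
    rng = random.Random(seed)  # fixed seed -> reproducible split
    train, val = [], []
    for label in sorted(by_label):
        items = list(by_label[label])
        rng.shuffle(items)
        n_val = round(len(items) * val_fraction)
        val.extend(items[:n_val])
        train.extend(items[n_val:])
    return train, val


# Hypothetical file names for illustration.
samples = [(f"fire_{i:03d}.jpg", "Fire") for i in range(10)] + \
          [(f"nofire_{i:03d}.jpg", "No-fire") for i in range(20)]
train, val = stratified_split(samples)
print(len(train), len(val))                        # 24 6
print(sum(1 for _, lbl in val if lbl == "Fire"))   # 2
```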

The remainder of this paper is structured as follows:

In Section 2, we describe related research, focusing on the methods, datasets, and algorithms previously employed for detecting fire and ship fires in marine environments. Specifically, we examine the strengths and limitations of existing approaches, as well as technological and methodological advances related to our study. In Section 3, we describe the contributions of this work, including a detailed account of our data collection process. We also describe data processing, augmentation, model training, and the dehazing model implementation in detail.

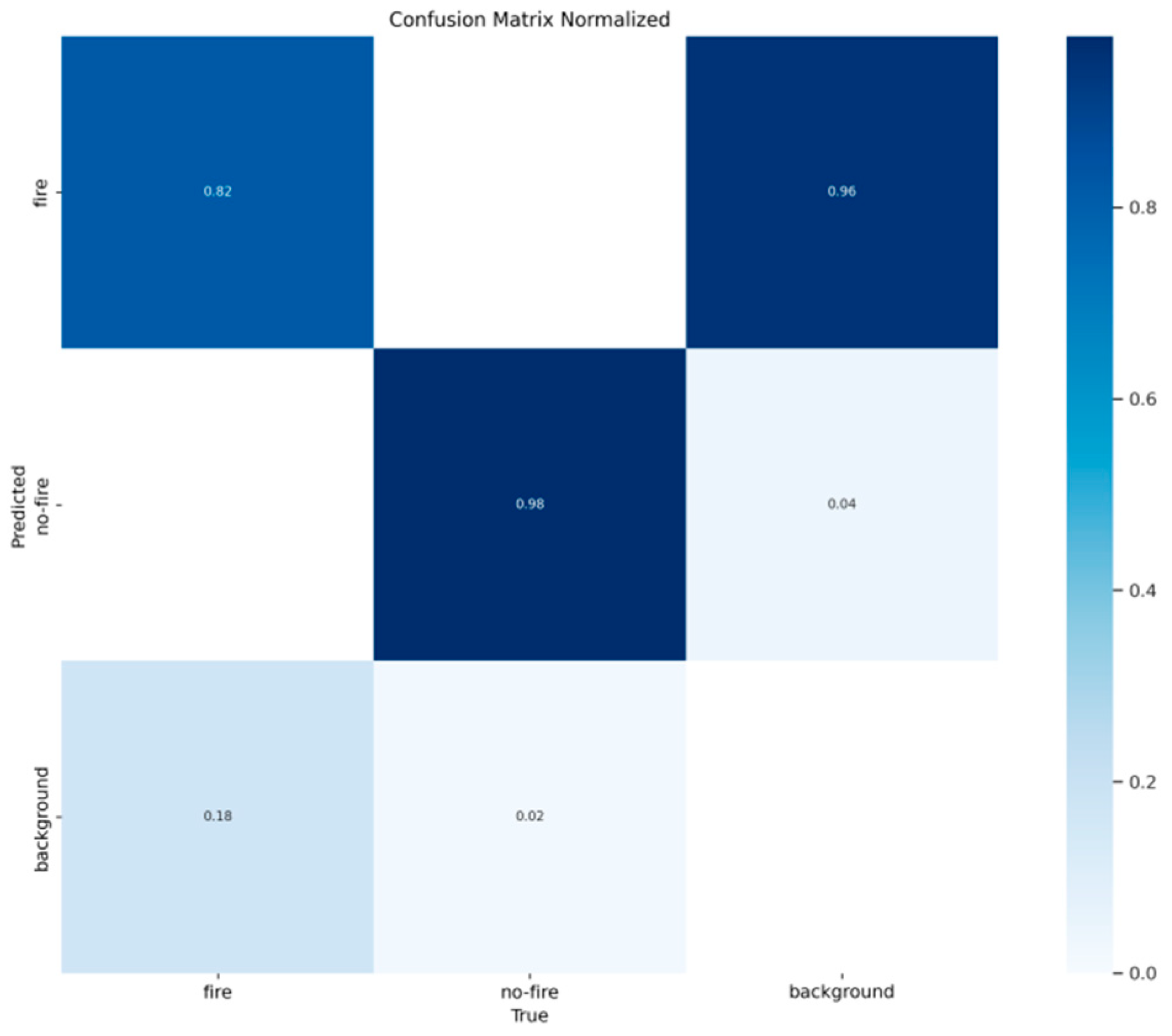

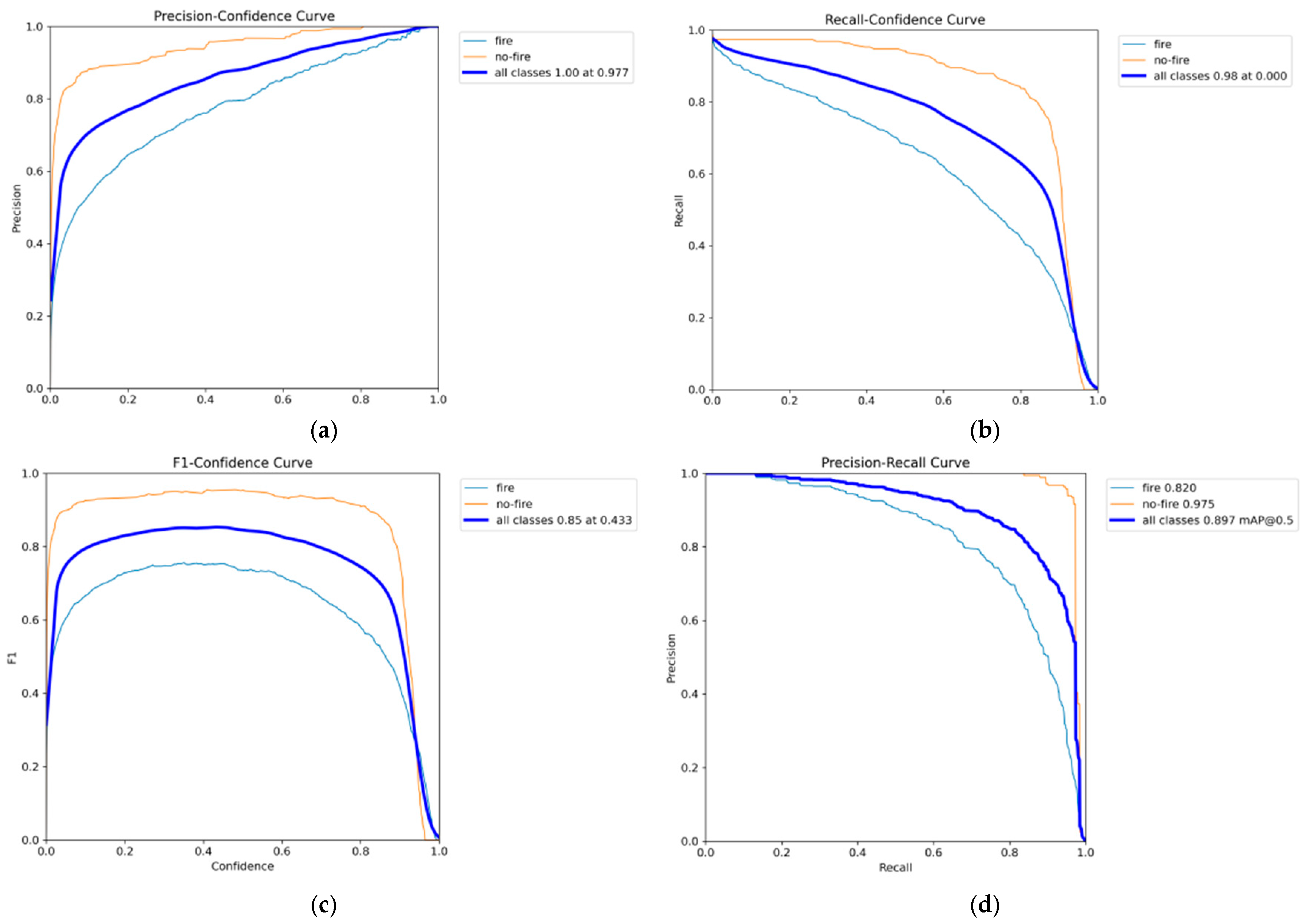

Section 4 presents the experimental results and analysis of our study. We compare our proposed method with other SOTA (state-of-the-art) models to evaluate its performance, and the results show that our method outperforms them. This section also includes quantitative metrics and visual examples that demonstrate the robustness of our approach. Finally, Section 5 summarizes our research findings and key contributions and outlines potential directions for further improving and expanding ship fire detection systems.

2. Related Work

Object detection and recognition algorithms mostly rely on deep neural networks (DNNs), particularly CNNs. These networks consist of multiple layers, each serving a distinct function. In marine safety, for example, they can be used for ocean environment analysis, feature extraction, data identification, image enhancement, dehazing, and anomaly detection to achieve accurate and precise object detection. Traditional fire detection methods, by contrast, faced challenges related to speed, accuracy, and performance degradation. Conventionally, fire detection relied on identifying and extracting both dynamic and static flame attributes, including color and shape features, and then employing machine learning (ML) algorithms to recognize these features. Notable progress in this area includes an early fire warning mechanism presented by Chen et al. [9], which uses video analysis to detect fire and smoke pixels based on chromatic and disorder measurements in the RGB color model. A common fire detection system on a ship integrates fire, smoke, and heat sensors, all connected to an alarm panel. These systems provide visual and audible alerts indicating the precise location of a fire on the vessel.

Flame texture feature extraction is a prominent technique in fire detection and identification [10,11,12,13,14]. For instance, Cui et al. [15] developed a method to analyze the texture of fire smoke by integrating two texture analysis tools, namely wavelet analysis and gray-level co-occurrence matrices (GLCMs), which allowed texture features specific to fire smoke to be extracted effectively. Similarly, Ye et al. [16] proposed a dynamic texture descriptor based on the surfacelet transform and a Hidden Markov Tree (HMT) model. Another study [17] introduced a real-time fire smoke detection approach based on GLCMs, which classifies texture features to distinguish smoke from non-smoke textures efficiently. Chino et al. [18] developed a method for fire detection in still images that combines color feature classification with texture classification within superpixel regions, improving detection accuracy. Using such texture analysis techniques, researchers have substantially improved the accuracy and reliability of fire detection systems.
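Several of the texture-based methods above ([15,17]) rely on GLCM statistics. The sketch below is a generic textbook GLCM computation (single horizontal offset, symmetric counting, and the contrast, angular second moment, and homogeneity features), not the pipeline of either cited paper; the function names, the two image patches, and the four quantization levels are hypothetical.

```python
from collections import Counter


def glcm(gray, levels, dx=1, dy=0):
    """Symmetric, normalized gray-level co-occurrence matrix for pixel offset (dx, dy).

    `gray` is a 2D list of integers already quantized to 0..levels-1.
    """
    h, w = len(gray), len(gray[0])
    counts = Counter()
    for y in range(h):
        for x in range(w):
            yy, xx = y + dy, x + dx
            if 0 <= yy < h and 0 <= xx < w:
                i, j = gray[y][x], gray[yy][xx]
                counts[(i, j)] += 1
                counts[(j, i)] += 1  # symmetric: count the pair in both directions
    total = sum(counts.values())
    if total == 0:
        raise ValueError("offset leaves no pixel pairs inside the image")
    return [[counts[(i, j)] / total for j in range(levels)] for i in range(levels)]


def texture_features(p):
    """Haralick-style statistics computed from a normalized GLCM."""
    n = len(p)
    cells = [(i, j, p[i][j]) for i in range(n) for j in range(n)]
    return {
        "contrast": sum(v * (i - j) ** 2 for i, j, v in cells),
        "asm": sum(v * v for _, _, v in cells),  # angular second moment (uniformity)
        "homogeneity": sum(v / (1 + (i - j) ** 2) for i, j, v in cells),
    }


smooth = [[1, 1, 1], [1, 1, 1], [1, 1, 2]]  # hypothetical low-variation, smoke-like patch
sharp = [[0, 3, 0], [3, 0, 3], [0, 3, 0]]   # hypothetical high-contrast patch
print(texture_features(glcm(smooth, levels=4)))
print(texture_features(glcm(sharp, levels=4)))
```

The smooth patch yields low contrast and high homogeneity, while the alternating patch yields the opposite; a classifier trained on such feature vectors is what separates smoke from non-smoke textures in GLCM-based approaches.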

Recent fire detection methods are exemplified by Foggia et al. [19], who proposed a method for analyzing surveillance camera videos that integrates color, shape, and motion information through multiple expert systems. Similarly, Premal et al. [20] studied forest fire detection using a color model. Furthermore, Wu et al. [21] introduced a dynamic fire detection algorithm for surveillance videos that incorporates radiation domain feature models. In fire detection through image segmentation, the main task is to assign each pixel in an image to a category, such as fire region or background. This task is typically addressed using semantic segmentation networks, which are trained end-to-end to generate segmentation masks directly from the original image. Frameworks such as U-Net [22] are commonly employed for this purpose. U-Net, originally designed for biomedical image segmentation, has proven effective in segmenting and classifying fire-related elements at the pixel level. Instance segmentation goes further: it not only classifies pixels into categories but also distinguishes individual instances of those categories. For example, Guan et al. [23] proposed a forest fire segmentation method designed for the early detection and segmentation of forest fires, demonstrating the application of instance segmentation to fire detection.

Research addressing critical CV issues in fire detection using UAV-captured video frames from the FLAME dataset has proposed solutions for binary image classification (fire vs. no fire) and fire instance segmentation. Segmentation methods perform well at detecting fire smoke regions in images. Approaches that combine global contextual information with the U-Net architecture can accurately identify fire smoke regions, because the global context helps the model capture meaningful details and spatial relationships that are important for effective segmentation. Zheng et al. [24] introduced an approach to the semantic segmentation of fire smoke that integrates Multi-Scale Residual Group Attention (MRGA) with the U-Net architecture. This method captures multi-scale smoke features, improving the model’s ability to discern subtle details in small-scale smoke instances. The combination of MRGA and U-Net significantly improves the model’s perceptual acuity, particularly in detecting and segmenting small-scale smoke regions.

Over the past decade, fire detection technology has undergone a significant transformation. In particular, YOLO algorithms have emerged as powerful tools for object detection, addressing many previously existing challenges. The evolution from YOLO-v1 to the more advanced YOLO-v10 reflects substantial innovations that have significantly improved detection capabilities. This development is part of a broader paradigm shift in fire detection technologies towards DL.

Color Attributes for Object Detection

Object detection remains one of the most challenging tasks in computer vision due to the substantial variability observed among images within the same object category. This variability is influenced by numerous factors, including differences in perspective, scale, and occlusion, which complicate the accurate identification and classification of objects. SOTA methodologies for object detection predominantly rely on intensity-based features, often excluding color information. This exclusion is primarily due to the significant variation in color that can arise from changes in illumination, compression artifacts, shadows, and highlights. Such variations introduce considerable complexity in achieving robust color descriptions, thereby posing additional challenges to the object detection process.

Within the domain of image classification, integrating color information with shape features has proven highly effective. Research indicates that combining these features can substantially improve classification performance, as color provides additional information that helps distinguish objects with similar shapes but different colors [25,26,27,28,29,30,31]. A related concept in CV, often used alongside object detection, is segmentation of the target object. This technique classifies each pixel in an image into a specific category, enabling precise identification of elements such as ships, smoke, fire, clouds, the sea, and other objects surrounding a ship. Semantic segmentation offers a holistic understanding of the entire image, significantly improving data interpretation and analysis. This approach is particularly advantageous for processing remote sensing images, which we plan to consider in future work. According to Chen et al. [32], this capability is important in remote sensing for environmental monitoring, where precise mapping of features is essential. However, because fire and smoke have closely similar color intensities, we decided to train the model on three main object classes: ship, fire, and smoke.

The current SOTA techniques in object recognition rely on exhaustive search strategies, which are computationally intensive and often inefficient. Selective search algorithms instead generate a smaller set of high-quality region proposals, reducing the computational burden while maintaining or improving detection accuracy. These methods hierarchically group similar regions based on color, texture, size, and shape compatibility to generate fewer, more relevant proposals, leading to more efficient and effective object detection. Advances in DL, especially CNNs, have significantly improved object detection. Networks such as Faster R-CNN, YOLO, and SSD have set new benchmarks in detection accuracy and speed by leveraging end-to-end training pipelines and innovative architectural designs. The integration of these techniques reflects progress in remote sensing, environmental monitoring, and disaster response applications, where precise and reliable detection is critical.

Color remains a crucial feature for accurately classifying fire pixels and is employed in nearly all detection methods. Traditionally, color spaces such as RGB (red, green, and blue), HSV (hue, saturation, and value), HSI (hue, saturation, and intensity), and YCbCr (luminance, blue-difference chrominance, and red-difference chrominance) have been used for fire detection [33]. The RGB color space is widely used because of its simple image representation; fire pixels typically exhibit high red and green values, which distinguishes them from other objects. However, RGB is sensitive to illumination changes, which can affect detection accuracy. HSV and HSI color spaces, which separate chromatic content from intensity information, provide a more robust approach for detecting fire. These spaces reduce the impact of lighting variations, making it easier to distinguish fire regions based on hue and saturation. For example, fire pixels usually have hues in the red-to-yellow range and moderate saturation levels. YCbCr, another commonly used color space, separates luminance from chrominance to improve fire detection in complex environments. This separation allows fire regions to be identified more effectively from chrominance values while minimizing the effects of lighting and shadow variations. Beyond traditional color spaces, modern fire detection methods increasingly incorporate ML and DL techniques to enhance color-based fire detection. By leveraging CNNs, these approaches can automatically learn relevant color features from large datasets, improving the robustness and accuracy of fire detection systems. Moreover, multi-spectral imaging and infrared (IR) sensors are being integrated into fire detection systems to complement visible color information. Multi-spectral imaging captures data across multiple wavelengths, providing additional features for fire detection, while IR sensors detect thermal signatures that indicate fire even in low-visibility conditions. Combining these technologies with traditional color spaces can offer a comprehensive approach to fire detection with higher reliability and accuracy.

The YCbCr color space, for example, can serve as a generic color model to which various detection rules can be applied. Celik et al. [34] used the YCbCr color space to separate luminance from chrominance and reported highly accurate fire detection results. Similarly, Vipin et al. [35] proposed an algorithm that uses the RGB and YCbCr color spaces and tested it on two sets of images. Khalil et al. [36] presented a fire detection method that combines the RGB and CIE L*a*b* color models with motion detection of fire objects.
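The pixel-level color rules discussed in this section can be sketched in a few lines. The example below combines three illustrative tests: an RGB chromatic rule resembling those used in early fire detection work such as Chen et al. [9] (strong red channel with R ≥ G > B), an HSV rule that checks for red-to-yellow hues, and YCbCr rules in the style of Celik et al. [34] that compare each pixel’s luminance and chrominance with one another and with the image means. The YCbCr conversion is the standard full-range BT.601 (JPEG-style) transform; all thresholds (`red_threshold`, `max_hue_deg`, `min_sat`, `min_val`, `tau`) are assumed example values rather than published parameters, and the three test pixels are synthetic.

```python
import colorsys


def rgb_to_ycbcr(r, g, b):
    """Full-range ITU-R BT.601 (JPEG-style) conversion for 8-bit R, G, B values."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr


def rgb_rule(pixel, red_threshold=120):
    """RGB chromatic test: strong red channel and R >= G > B (threshold is illustrative)."""
    r, g, b = pixel
    return r > red_threshold and r >= g > b


def hsv_rule(pixel, max_hue_deg=60, min_sat=0.4, min_val=0.5):
    """HSV test: hue in the red-to-yellow range, reasonably saturated and bright."""
    h, s, v = colorsys.rgb_to_hsv(*(c / 255 for c in pixel))
    return h * 360 <= max_hue_deg and s >= min_sat and v >= min_val


def ycbcr_mask(pixels, tau=40):
    """YCbCr rules in the style of Celik et al.; means are taken over the given pixels."""
    ycc = [rgb_to_ycbcr(*p) for p in pixels]
    n = len(ycc)
    y_mean = sum(c[0] for c in ycc) / n
    cb_mean = sum(c[1] for c in ycc) / n
    cr_mean = sum(c[2] for c in ycc) / n
    return [
        y > cb and cr > cb                                 # luminance and red chroma exceed blue chroma
        and y > y_mean and cb < cb_mean and cr > cr_mean   # compared with the image means
        and abs(cb - cr) >= tau                            # large red/blue chrominance gap
        for y, cb, cr in ycc
    ]


pixels = [(255, 180, 50), (30, 60, 120), (200, 200, 200)]  # flame-like, sea-blue, gray
print([rgb_rule(p) for p in pixels])   # [True, False, False]
print([hsv_rule(p) for p in pixels])   # [True, False, False]
print(ycbcr_mask(pixels))              # [True, False, False]
```

Fixed-threshold rules like these are cheap and interpretable, but their thresholds are sensitive to lighting, which is one reason learned detectors such as CNNs are generally preferred for the final decision.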