Abstract

Wildfires have severe consequences, including property loss, threats to human life, damage to natural resources and biodiversity, and economic impacts. Consequently, numerous wildland fire detection systems have been developed over the years to identify fires at an early stage and limit their damage to both the environment and human lives. Recently, deep learning methods have been employed for wildfire recognition, with promising results. However, numerous challenges remain, including background complexity and small wildfire and smoke areas. To address these limitations, two deep learning models, CT-Fire and DC-Fire, were adopted to recognize wildfires using both visible and infrared aerial images. Infrared images capture temperature gradients, revealing areas of high heat that indicate active flames, while RGB images provide the visual context needed to identify smoke and forest fires. Combining visible and infrared images provides diverse data for training deep learning models, and the diverse characteristics of wildfires and smoke enable these models to learn a more complete visual representation of wildland fire and smoke scenarios. Testing results on a large dataset of RGB and infrared aerial images showed that CT-Fire and DC-Fire outperformed baseline wildfire recognition methods. CT-Fire and DC-Fire also demonstrated the reliability of deep learning models in recognizing patterns and features related to wildland smoke and fires and in overcoming challenges such as background complexity (which can include vegetation, weather conditions, and diverse terrain), the detection of small wildfire areas, and the variety of wildland fires and smoke in terms of size, intensity, and shape. CT-Fire and DC-Fire also achieved fast processing speeds, enabling their use for early detection of smoke and forest fires in both day and night conditions.

1. Introduction

Wildland fires cause ecological imbalance and pose risks to human health. They also cause costly economic losses and air pollution, as they can destroy property, forests, and wildlife. For example, Europe had its worst year for wildland fires in 2022, with around 900,000 hectares burnt, and 260,000 hectares have already burned since January 2023, significantly increasing the risk of economic losses [1,2]. Moreover, in Canada, around 7300 wildfires occurred each year over the past 25 years, burning around 2.5 million hectares per year [3], and 2023 was a record year with 18 million hectares burned [4]. The cost of wildfire fighting in Canada varied between CAD 800 million and CAD 1.5 billion per year over the past decade [3]. Numerous wildland fire detection systems have been developed to reduce the impact of fires. These systems employ a variety of technologies, including heat sensors, smoke sensors, gas detectors, and flame detectors, to detect forest fires and improve fire prevention [5].

Recently, the integration of vision sensors for wildfire recognition and detection has represented a significant advance in detecting, monitoring, and preventing wildland fires [6,7,8]. As wildfires are distinguished by their color, texture, pattern, and shape, various feature-based methods (e.g., YUV color space [9], optical flow [10], dynamic texture [11], etc.) and traditional machine learning methods (e.g., SVM [12], Bayesian classifier [13], Markov model [14], etc.) have demonstrated high performance in this task.

The main challenge associated with the aforementioned methods is extracting informative and relevant features that accurately represent the wildland fire detection problem. More recently, deep learning (DL) methods have transformed computer vision, solving complex challenges in various fields such as video recognition [15,16], medical image analysis [17,18], motion estimation [19,20], image analysis [21,22], and object tracking [23,24]. These methods have shown remarkable performance compared to classical machine learning methods. Owing to this potential, DL methods have been used to detect and recognize wildfires [25,26]. However, they still face a number of limitations, including the detection of small wildfire and smoke areas, background complexity, and the variability of wildfires and smoke in size, shape, and intensity.

To address these limitations, in this paper we adopt two deep learning methods that we developed, DC-Fire [27] and CT-Fire [28], to efficiently recognize wildland fires using both infrared (IR) and RGB (visible spectrum) aerial images. IR images capture temperature gradients, highlighting zones with high heat levels that indicate active flames, even when flames and smoke are not visible. RGB aerial images provide the visual context to confirm the presence of wildland smoke and fires. DC-Fire combines two deep CNNs (Convolutional Neural Networks), DenseNet-201 [29] and EfficientNet-B5 [30]. CT-Fire integrates the CNN RegNetY-16GF [31] and the vision transformer EfficientFormer v2 [32] to extract deep features related to smoke and fires. Both models were trained and evaluated using a large aerial dataset, FLAME2 [33], which includes both visible and IR images. FLAME2 covers numerous forest fire and smoke scenarios collected during prescribed burns in Arizona, providing a rich dataset that captures various aspects of fire and smoke patterns. It has been widely used to train and test advanced fire detection models.

The three main contributions of this paper are as follows:

- Two DL methods, namely DC-Fire and CT-Fire, were adopted for recognizing smoke and fires using both IR and visible aerial images in order to improve the performance of wildland fire/smoke classification tasks.

- DC-Fire and CT-Fire showed promising performance, overcoming challenging limitations, including background complexity, the detection of small wildland fire areas, image quality, and the variability of wildfires in size, shape, and intensity, with flame lengths ranging from 0.25 to 0.75 m and occasionally reaching 5 to 10 m.

- DC-Fire and CT-Fire methods showed fast processing speeds, allowing their use for early detection of wildland smoke and fires during both day and night, which is crucial for reliable fire management strategies.

The rest of this paper is organized as follows: Section 2 reports state-of-the-art methods for wildland fire classification using deep learning methods. Section 3 presents DC-Fire and CT-Fire methods, the dataset used, evaluation metrics utilized in this paper, and implementation details. Section 4 illustrates the experimental results of DC-Fire and CT-Fire methods. Section 5 discusses the potential of the two methods for recognizing wildland fires and their limits. Section 6 summarizes this work.

2. Related Works

Many deep learning methods have been introduced in recent years to improve wildland smoke/fire classification in various application domains using visible (RGB), IR, or combined RGB and IR images, as shown in Table 1. Among these methods, Wang et al. [34] proposed a fire detection method for IR video surveillance. First, a CNN, namely IRCNN (Infrared Convolutional Neural Network), extracts IR features; it includes six convolutional layers, ReLU (Rectified Linear Unit) activation functions, four max pooling layers, three fully connected layers, and a dropout layer to avoid overfitting. Then, an SVM detects the presence of flames. Experiments were performed using two data augmentation techniques, horizontal flip and salt-and-pepper noise. Horizontal flip reverses an image horizontally to create a mirror image, while salt-and-pepper noise adds random black and white pixels to an image. The IR flame dataset used for testing comprised 5300 fire IR images and 6100 non-fire IR images. IRCNN achieved an F1-score of 98.70% and an inference time of 0.487 s per ten images, outperforming the state-of-the-art AlexNet [35] by 1.46%. Deng et al. [36] developed a CNN combined with a capsule network, namely C_CNN, to determine the presence of fire in IR images. They used the SKLFS (State Key Laboratory of Fire Science) dataset, which consists of 5000 images. C_CNN obtained a detection rate of 95.3% and a fast detection time of 0.3 s, surpassing the baseline methods AlexNet and VGG-16 by 8.6% and 1.7%, respectively.
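Both augmentations mentioned above reduce to simple pixel operations. The following Python sketch illustrates them on a grayscale image stored as a list of rows; it is not Wang et al.'s implementation, and the corruption probability `amount`, the 0/255 pixel range, and the function names are illustrative assumptions.

```python
import random

def horizontal_flip(image):
    """Mirror an image (a list of pixel rows) left to right."""
    return [list(reversed(row)) for row in image]

def salt_and_pepper(image, amount=0.05, low=0, high=255, seed=None):
    """Corrupt each pixel with probability `amount`, setting it to `low` (pepper) or `high` (salt)."""
    rng = random.Random(seed)
    noisy = [row[:] for row in image]  # copy so the input image is not modified
    for row in noisy:
        for c in range(len(row)):
            if rng.random() < amount:
                # corrupted pixels become black or white with equal probability
                row[c] = low if rng.random() < 0.5 else high
    return noisy

img = [[10, 20, 30], [40, 50, 60]]
print(horizontal_flip(img))  # [[30, 20, 10], [60, 50, 40]]
print(salt_and_pepper(img, amount=0.3, seed=0))
```

Seeding the generator makes a given augmentation reproducible, which is convenient when comparing training runs.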

Shamsoshoara et al. [37] proposed a DL method for classifying wildfires in RGB aerial images. They used the Xception model [38] to identify the presence of flames and trained it using data augmentation techniques, such as random rotation and horizontal flip, and the FLAME dataset [39], which consists of a training set of 39,375 images (25,018 fire images and 14,357 non-fire images) and a test set of 8617 images (5137 fire images and 3480 non-fire images). Xception achieved an accuracy of 76.23% on the test set. Ghali et al. [40] proposed an ensemble learning method to classify wildfires using visible aerial images. They employed DenseNet and EfficientNet models as backbones to generate relevant feature maps. This ensemble learning approach obtained an F1-score of 84.77% on the FLAME dataset.

Guo et al. [41] presented a two-channel CNN to identify the presence of forest smoke and fires in RGB images. First, two AlexNet-based CNNs, each composed of five convolutional layers and three pooling layers, simultaneously extract wildfire features from the input data. Then, an SVM analyzes the extracted feature maps to identify smoke and fires. Using a total of 14,000 images and the PCA_Jittering image enhancement method for data augmentation, the improved two-channel CNN achieved an accuracy of 98.47%, outperforming ResNet-50 and VGG-16. Wang et al. [42] developed a wildfire classification method, called Reduce-VGGNet, for identifying wildland fires in RGB images. Reduce-VGGNet is a modified VGG-16 [43] in which the three fully connected layers are replaced by two fully connected layers and a softmax function. Using the FLAME dataset (900 fire images and 1000 non-fire images), Reduce-VGGNet achieved an accuracy of 91.20%, outperforming the traditional machine learning method SVM.

Anupama et al. [44] designed a lightweight CNN, X-MobileNet, for identifying forest fires in UAV (Unmanned Aerial Vehicle) RGB images. X-MobileNet extends MobileNet v2 [45] by adding a global average pooling layer and a depth-wise separable convolution layer to the output layer. Test results were obtained using diverse data (5313 fire images and 677 non-fire images) and five data augmentation techniques (rotation, zoom, width/height shift, horizontal flip, and fill mode). X-MobileNet achieved an F1-score of 98.89%, outperforming the VGG, Inception, and ResNet-50 methods. Zhang et al. [46] introduced a Dual-channel CNN for wildfire and smoke detection using RGB ground images. Their method employs a CBAM (Convolutional Block Attention Module) to emphasize relevant features and suppress irrelevant ones. In addition, it integrates two feature fusion methods to improve feature expression and address problems related to noise interference and data sparsity. A total of 14,000 images (7000 fire images and 7000 non-fire images) [41] were used to train and evaluate this method. The Dual-channel CNN achieved an accuracy of 98.90%, surpassing VGG-16 and ResNet-50 by 3.02% and 1.12%, respectively. Islam et al. [47] designed a hybrid multi-stream DL method to classify and localize forest fires using RGB aerial and ground images. They used EfficientNet-B7 to extract deep features from input fire images, ACNet (customized Attention Connected Network) to extract high-level and low-level features, and BO (Bayesian optimization) to optimize the hyperparameters of their hybrid model. Two datasets, FLAME (30,155 fire images and 17,855 non-fire images) and DeepFire (760 images for each of the fire and non-fire classes) [48], were used to train and test this method. The hybrid DL method achieved F1-scores of 97.12% and 95.54% on the FLAME and DeepFire datasets, respectively, outperforming state-of-the-art methods.

Aral et al. [49] also proposed a lightweight, attention-based CNN method to identify wildfires using visible aerial data. They performed an extensive analysis using the FLAME dataset to train and evaluate numerous DL models, including ResNet-50, Xception, MobileNet v3, VGG-16, MobileNet v2, and EfficientNet-B0, -B2, and -B4, with an attention module and with and without transfer learning. EfficientNet-B0 with attention [50] achieved the best performance, with an accuracy of 92.02%, outperforming the other DL models and state-of-the-art methods such as those of Shamsoshoara et al. [37] and Ghali et al. [40]. Kumar et al. [51] introduced an automated fire extinguishing system based on a DL method. They studied the AlexNet model with various activation functions (sigmoid, softmax, and softplus), optimizers (Adam, Adamax, Nadam, RMSprop, and SGD), and learning rates (0.01, 0.001, and 0.0001). AlexNet with a learning rate of 0.001, the Adam optimizer, and the softmax activation function achieved the best result, with an accuracy of 89.64% on RGB data comprising 2582 fire images, 373 smoke images, and 737 non-fire images.

Chen et al. [33] benchmarked four pretrained DL methods (LeNet5, VGG-16, MobileNet v2, and ResNet-18) for recognizing wildland smoke and fires using RGB, IR, and combined IR and RGB aerial images. They collected a very large dataset, called FLAME2, consisting of 25,434 smoke/fire images, 13,700 non-smoke/non-fire images, and 14,317 fire/non-smoke images for each of the IR and visible modalities. VGG-16 achieved the highest accuracies, 99.91% and 97.29% using RGB and IR images, respectively, outperforming LeNet5, logistic regression, MobileNet v2, ResNet-18, and Xception. MobileNet v2 also showed impressive performance, with an accuracy of 99.81% using both RGB and IR images, surpassing Xception, LeNet5, and ResNet-18 by 1.21%, 1.42%, and 0.37%, respectively. Khubab et al. [52] proposed a lightweight CNN, namely FireXnet, for detecting smoke, fire, and thermal fire using both RGB and IR data. FireXnet consists of three convolutional blocks, a global average pooling layer, and two dense layers with ReLU functions. Each of the first and second convolutional blocks contains two convolutional layers and a max pooling layer. The third block comprises three convolutional layers and a max pooling layer. Each convolutional layer is followed by a ReLU activation function. The SHAP (SHapley Additive exPlanations) method was also employed as an explainable artificial intelligence (AI) method to identify the visual features that contribute to recognizing wildfires. FireXnet was trained using data augmentation techniques (rotation, width shift, height shift, and zoom) and a total of 3800 images (950 images for each of the fire, smoke, thermal fire, and non-fire classes) collected from the Kaggle [53], FLAME2, and D-Fire [54] datasets. It obtained an F1-score of 98.42%, surpassing five pretrained DL models, InceptionResNet v2, MobileNet v2, VGG-16, Inception v3, and DenseNet-201, by 2.37%, 0.26%, 3.67%, 1.58%, and 0.47%, respectively.

Ghali and Akhloufi introduced two deep learning methods, CT-Fire [28] and DC-Fire [27], for recognizing wildfires. CT-Fire combines the EfficientFormer v2 and RegNet models, while DC-Fire integrates two CNNs, EfficientNet-B5 and DenseNet-201. CT-Fire was evaluated using aerial and ground wildland fire images collected from the CorsicanFire [55], Fire [56], and DeepFire datasets. This model achieved accuracies of 99.62%, 87.77%, and 85.29% using ground, aerial, and combined ground and aerial images, respectively. Additionally, DC-Fire was evaluated using aerial infrared images and obtained an F1-score of 100%, outperforming baseline DL methods including MobileNet v2, ResNet-18, VGG-16, and LeNet5. These results demonstrate the ability of CT-Fire and DC-Fire to address wildland fire-related challenges. Ghali and Akhloufi [57] also proposed an ensemble learning method, namely BoucaNet, to recognize smoke and handle smoke-related challenges such as background complexity, the detection of small smoke zones, and the visual resemblance among the smoke, dust, haze, cloud, seaside, and land classes. BoucaNet integrates the EfficientFormer v2 and EfficientNet v2 [58] models. It was trained using the satellite dataset USTC_SmokeRS [59] and data augmentation techniques such as rotation, shear, zoom, and shift. It obtained an accuracy of 93.67%, showing the potential of BoucaNet in classifying smoke and addressing related limitations. In [60], these three ensemble learning methods were studied to assess their ability in sim-to-real detection of wildland fires. These models were trained using a synthetic dataset, called SWIFT, without data augmentation and tested using real wildfire images. BoucaNet achieved the highest performance, with an accuracy of 93.21%, precision of 93.17%, recall of 93.21%, and F1-score of 93.13%, compared to the DC-Fire, CT-Fire, RegNetY, and ResNeXt models.

Almeida et al. [61] developed a hybrid method, called EdgeFireSmoke++, to detect forest fires in real time. EdgeFireSmoke++ incorporates both an ANN (Artificial Neural Network) and a CNN. First, the ANN identifies the presence of a forest environment; then, a simple CNN detects and recognizes forest fires. Using a large aerial wildfire dataset [62] divided into four categories, Burned-area (9348 images), Fire-smoke (15,579 images), Fog-area (9762 images), and Green-area (14,763 images), EdgeFireSmoke++ achieved an accuracy of 95.41%, outperforming the VGG-16, VGG-19, MobileNet v2, Inception v3, Xception, and DenseNet121 models. Reis and Turk [63] benchmarked various DL methods, such as Inception v3, DenseNet121, ResNet50 v2, NASNetMobile, and VGG-19, as well as a hybrid model that integrates these DL models with classical machine learning methods (SVM and random forest), a GRU (Gated Recurrent Unit), and a Bi-LSTM (Bidirectional Long Short-Term Memory). Experiments on the FLAME dataset resulted in accuracies of 99.32% and 97.95% for DenseNet121 with and without transfer learning, respectively. Habbib and Khidhir [64] adopted two DL models, VGG-16 and VGG-19, to detect forest fires. They trained and tested these models with a total of 4135 images, which were randomly collected from the internet and from various zones in Mosul. Using transfer learning, VGG-16 and VGG-19 achieved accuracies of 99.27% and 99.40%, respectively.

Idroes et al. [65] proposed a DL method, named TeutongNet, for detecting and classifying wildfires. TeutongNet is a modified version of the pretrained ResNet50 v2 model that adds a global average pooling layer, a dropout layer, a dense layer, and a sigmoid function. Using the DeepFire dataset, TeutongNet achieved an accuracy of 98.68%, outperforming the pretrained ResNet50 v2 model by 3.94%. AL Duhayyim et al. [66] introduced a fusion-based deep learning method (AFFD-FDL) for automated wildfire detection. AFFD-FDL combines HOG (Histogram of Oriented Gradients) handcrafted features with two DL models, SqueezeNet and Inception v3, to extract deep forest fire features. AFFD-FDL was evaluated using five testing sets collected from various public videos. It obtained an accuracy of 98.60%, outperforming the classical machine learning methods random forest, decision tree, logistic regression, and SVM by 1.60%, 48.60%, 49.60%, and 43.60%, respectively. Guo et al. [67] proposed a two-stage method to recognize smoke. First, pooling layers were adopted to extract richer fire and smoke features from an image. Then, attention modules were used to focus on the most relevant smoke and fire features. Using a large public dataset (15,104 training images and 1881 testing images), this method achieved an accuracy of 96.1%, outperforming existing deep learning methods such as ResNet, VGG-Net, and GoogLeNet. Jonnalagadda and Hashim [68] proposed a deep learning method, namely SegNet, to detect the presence of flames in visible images. SegNet consists of three convolutional layers followed by ReLU functions, two pooling layers, and two dense layers with ReLU functions. The input to SegNet is a segmented fire image, generated by dividing an input aerial image into twelve smaller segments. SegNet achieved an accuracy of 98.18%, outperforming existing models.

In summary, both DL and hybrid DL models have shown high performance in recognizing wildland fires from visible and IR images. Nevertheless, few methods use both IR and visible images, owing to the scarcity of such data. Additionally, several challenges remain, including the detection of small wildfires, the complexity of the background, which can include vegetation and various terrains, and the variability of wildfires and smoke in intensity, size, and shape. To address these challenges, we use both IR and visible images to provide a more comprehensive view of wildland fires and smoke. IR images are effective at identifying heat and can detect wildfires through smoke, while visible images capture the visual details of fires and smoke. Moreover, we develop and adopt DL methods for identifying wildfires and smoke at an early stage using both IR and visible images, which can improve fire management strategies.

Table 1.

DL models for wildland smoke and fire recognition. IR refers to infrared data, Visible refers to RGB data, and Both refers to combined visible and IR data.


| Ref. | Methodology | Object Detected | Dataset | Image Type | Results (%) |
|---|---|---|---|---|---|
| [34] | IRCNN and SVM | Flame | Private: 5300 fire images and 6100 non-fire images | IR | F1-score = 98.70 |
| [36] | C_CNN | Flame | SKLFS: 5000 images | IR | Accuracy = 95.30 |
| [33] | VGG-16 | Smoke, flame | FLAME2: 25,434 smoke/fire images, 13,700 non-smoke/non-fire images, and 14,317 fire/non-smoke images | IR | Accuracy = 97.29 |
| [33] | MobileNet v2 | Smoke, flame | FLAME2: 50,868 smoke/fire images, 27,400 non-smoke/non-fire images, and 28,634 fire/non-smoke images | Both | Accuracy = 99.81 |
| [52] | FireXnet | Smoke, flame | Kaggle, D-Fire, and FLAME2: 950 images for each class of fire, smoke, thermal fire, and non-fire | Both | F1-score = 98.42 |
| [37] | Xception | Flame | FLAME: 30,155 fire images and 17,855 non-fire images | Visible | Accuracy = 76.23 |
| [40] | Ensemble learning | Flame | FLAME: 30,155 fire images and 17,855 non-fire images | Visible | F1-score = 84.77 |
| [33] | VGG-16 | Smoke, flame | FLAME2: 25,434 smoke/fire images, 13,700 non-smoke/non-fire images, and 14,317 fire/non-smoke images | Visible | Accuracy = 99.91 |
| [41] | Two-channel CNN | Smoke, flame | Private: 7000 fire images and 7000 non-fire images | Visible | Accuracy = 98.52 |
| [42] | Reduce-VGGNet | Flame | FLAME: 900 fire images and 1000 non-fire images | Visible | Accuracy = 91.20 |
| [44] | X-MobileNet | Flame | Private, Kaggle, and FLAME: 5313 fire images and 617 non-fire images | Visible | F1-score = 98.89 |
| [46] | Dual-channel CNN | Smoke, flame | Private: 7000 fire images and 7000 non-fire images | Visible | Accuracy = 98.90 |
| [47] | Hybrid DL | Flame | FLAME: 30,155 fire images and 17,855 non-fire images; DeepFire: 760 fire images and 760 non-fire images | Visible | F1-score = 97.12 (FLAME); F1-score = 95.54 (DeepFire) |
| [49] | EfficientNet-B0 with attention | Flame | FLAME: 30,155 fire images and 17,855 non-fire images | Visible | Accuracy = 92.02 |
| [51] | AlexNet | Flame, smoke | Private: 582 fire images, 373 smoke images, and 737 non-fire images | Visible | Accuracy = 89.64 |
| [27] | DC-Fire | Flame, smoke | FLAME2: 53,451 images | IR | F1-score = 100.00 |
| [28] | CT-Fire | Flame | FLAME: 47,992 aerial images; CorsicanFire, DeepFire, and Fire: 3900 ground images; aerial and ground combined: 51,892 images | Visible | Accuracy = 87.77 (aerial); Accuracy = 99.62 (ground); Accuracy = 85.29 (combined) |
| [57] | BoucaNet | Smoke | USTC_SmokeRS: 6225 images | Visible | Accuracy = 93.67 |
| [60] | BoucaNet | Flame | SWIFT, Fire, DeepFire, and CorsicanFire: 28,910 images | Visible | Accuracy = 93.21 |
| [61] | EdgeFireSmoke++ | Flame | Private: 49,452 images | Visible | Accuracy = 95.41 |
| [63] | DenseNet121 | Flame | FLAME: 30,155 fire images and 17,855 non-fire images | Visible | Accuracy = 99.32 |
| [65] | TeutongNet | Flame | DeepFire: 1900 images | Visible | Accuracy = 98.68 |
| [66] | AFFD-FDL | Flame | Private: 1710 images | Visible | Accuracy = 98.60 |
| [67] | Two-stage method | Smoke | Public: 9472 smoke/fire images and 9406 non-smoke/non-fire images | Visible | Accuracy = 96.10 |
| [68] | SegNet | Flame | Private: 10,242 images | Visible | Accuracy = 98.18 |

3. Materials and Methods

We first introduce, in this section, two deep learning methods, DC-Fire and CT-Fire, for recognizing wildland smoke and fires using visible and infrared images. DC-Fire is a robust ensemble learning method that combines two CNNs, DenseNet-201 and EfficientNet-B5, known for their feature extraction abilities. CT-Fire uses the strengths of both CNNs (RegNetY-16GF) and vision transformers (EfficientFormer v2) to generate rich feature maps. Next, we present the aerial training dataset, FLAME2. Then, we define five evaluation metrics (accuracy, precision, recall, F1-score, and inference time) used to evaluate these two methods. Finally, we report the implementation details used for training and testing the DC-Fire and CT-Fire methods.
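The four classification metrics listed above have standard definitions in terms of true/false positives and negatives. The sketch below assumes a binary setting with fire as the positive class (1) and uses the usual textbook formulas; the timing helper `mean_inference_time` and its `predict` argument are hypothetical and only illustrate measuring the average per-image inference time.

```python
import time

def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall, and F1-score for binary labels (1 = fire, 0 = non-fire)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

def mean_inference_time(predict, images):
    """Average wall-clock seconds per image for a (hypothetical) model callable `predict`."""
    start = time.perf_counter()
    for image in images:
        predict(image)
    return (time.perf_counter() - start) / len(images)

print(classification_metrics([1, 1, 1, 0, 0, 0], [1, 1, 0, 0, 0, 1]))
```

The zero-division guards return 0.0 when a class is never predicted or never present, which is one common convention among several.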

3.1. Proposed Methods

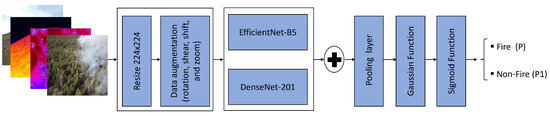

3.1.1. DC-Fire Method

We developed a deep CNN method, called DC-Fire, for detecting and recognizing smoke and forest fires using both visible and IR data. DC-Fire is an ensemble learning method combining the DenseNet-201 [29] and EfficientNet-B5 [30] models. EfficientNet-B5 is a deep CNN that employs a compound scaling method to uniformly scale network depth, width, and input resolution. It includes a 3 × 3 convolutional layer and multiple MBConv blocks with 3 × 3 and 5 × 5 kernels that use squeeze-and-excitation optimization to efficiently extract features at different levels. It also includes a 1 × 1 convolutional layer and a pooling layer to refine the extracted features. It achieved a Top-1 accuracy of 83.3% on the ImageNet dataset, outperforming popular CNN models such as ResNet, Inception v3, Xception, ResNeXt, InceptionResNet v2, SENet, PNASNet, and AmoebaNet [30]. DenseNet-201 (Dense Convolutional Network) [29] is also a deep CNN; within each dense block, every layer receives the feature maps of all preceding layers, which encourages feature reuse, reduces the number of parameters, and alleviates the vanishing gradient problem. It comprises 201 layers. It starts with a 7 × 7 convolutional layer, followed by a 3 × 3 max pooling layer. It then has four dense blocks, each containing a series of 1 × 1 and 3 × 3 convolutional layers. Between consecutive dense blocks, transition layers consisting of a 1 × 1 convolutional layer and a 2 × 2 average pooling layer reduce the feature map size. This model performed well on several competitive object classification benchmarks (CIFAR-10, CIFAR-100, Street View House Numbers, and ImageNet), comparing favorably with ResNet models [29].
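The dense connectivity described above determines how DenseNet-201 reaches its depth and output width. The sketch below assumes the standard DenseNet-BC configuration from the original DenseNet paper (growth rate 32, 64 initial channels, dense blocks of 6, 12, 48, and 32 layers, and transition layers that halve the channel count); these hyperparameters are not stated in this section.

```python
def densenet_channels(growth_rate=32, init_features=64, blocks=(6, 12, 48, 32), compression=0.5):
    """Track channel counts through DenseNet dense blocks and transition layers."""
    channels = init_features  # output of the initial 7x7 convolution and 3x3 max pooling
    history = []
    for i, num_layers in enumerate(blocks):
        # each dense layer concatenates `growth_rate` new feature maps onto all previous ones
        channels += num_layers * growth_rate
        history.append(channels)
        if i < len(blocks) - 1:
            # transition layer: 1x1 conv compresses channels; 2x2 average pooling halves H and W
            channels = int(channels * compression)
    return channels, history

def densenet_depth(blocks=(6, 12, 48, 32)):
    """Count weighted layers: stem conv + two convs per dense layer + transition convs + classifier."""
    return 1 + 2 * sum(blocks) + (len(blocks) - 1) + 1

print(densenet_channels())  # (1920, [256, 512, 1792, 1920])
print(densenet_depth())     # 201
```

Counting the stem convolution, the two convolutions (1 × 1 and 3 × 3) in each of the 98 dense layers, the three transition convolutions, and the final classifier gives 1 + 196 + 3 + 1 = 201 layers.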

Figure 1 depicts the DC-Fire architecture. To diversify the training data, we first apply data augmentation methods such as shift, rotation, shear, and zoom. Then, we simultaneously feed both the visible and IR input images and the newly generated images into the DenseNet-201 and EfficientNet-B5 models to extract rich and relevant wildland smoke- and fire-related features. Next, the feature maps generated by EfficientNet-B5 and DenseNet-201 are concatenated, combining the features extracted by both models into larger and more diverse feature maps. Subsequently, an average pooling layer reduces the spatial dimensions of the concatenated feature map. We then improve the generalization of DC-Fire by applying Gaussian dropout with a rate of 0.3, which injects multiplicative Gaussian noise into the pooled features. Finally, a sigmoid function outputs a probability between 0 and 1, and an input IR or visible image is classified as containing forest fire or smoke when this probability is greater than or equal to 0.5.
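The classification head described above can be illustrated with a small, framework-free sketch. It is not the authors' TensorFlow code: feature maps are toy lists of per-channel values, the single dense unit and its `weights`/`bias` are hypothetical (the text specifies only a sigmoid output), and Gaussian dropout follows its usual definition of multiplicative noise with mean 1 and variance rate / (1 - rate), applied only during training.

```python
import math
import random

def global_average_pool(feature_map):
    """Average each channel over its spatial positions (feature_map: list of channels, each a flat list)."""
    return [sum(channel) / len(channel) for channel in feature_map]

def gaussian_dropout(features, rate=0.3, training=True, rng=random):
    """Multiply each feature by noise drawn from N(1, rate / (1 - rate)); identity at inference."""
    if not training:
        return list(features)
    std = math.sqrt(rate / (1.0 - rate))
    return [x * rng.gauss(1.0, std) for x in features]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dc_fire_head(densenet_map, efficientnet_map, weights, bias, training=False):
    """Toy DC-Fire-style head: concatenate -> pool -> Gaussian dropout -> dense unit + sigmoid -> threshold."""
    fused = densenet_map + efficientnet_map               # channel-wise concatenation of both backbones
    pooled = global_average_pool(fused)                    # collapse the spatial dimensions
    pooled = gaussian_dropout(pooled, 0.3, training)       # regularization, active only in training
    z = sum(w * x for w, x in zip(weights, pooled)) + bias  # hypothetical single dense unit
    p_fire = sigmoid(z)
    return p_fire, int(p_fire >= 0.5)                      # 1 = fire/smoke, 0 = non-fire

# Toy maps: one "DenseNet" channel and two "EfficientNet" channels, two spatial positions each
p, label = dc_fire_head([[1.0, 3.0]], [[2.0, 2.0], [0.0, 4.0]], weights=[0.5, 0.5, -0.5], bias=-1.0)
print(p, label)  # 0.5 1
```

In a real network, the two backbone outputs must share the same spatial size before channel-wise concatenation; the toy example sidesteps this by construction.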

Figure 1.

The proposed architecture of DC-Fire. P1 and P denote the predicted probabilities that the input aerial image belongs to the non-fire and fire classes, respectively.

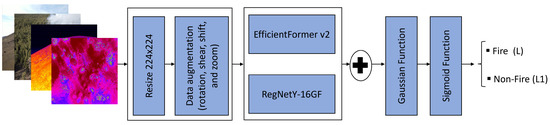

3.1.2. CT-Fire Method

In this section, we introduce a CNN-Transformer method, namely CT-Fire, to identify smoke and forest fires using visible and thermal infrared images. CT-Fire employs the deep CNN RegNetY-16GF [31] and the vision transformer EfficientFormer v2 [32] to generate rich and diverse feature maps, including deep features related to vegetation, diverse terrains, wildland fires, and smoke. RegNetY-16GF was proposed by Radosavovic et al. [31], who explored network design spaces to obtain fast and reliable DL models. This model is composed of three main blocks: the stem, the body, and the head. The stem contains two 3 × 3 convolutional layers, while the body comprises four stages (stages 1 to 4). Stage 1 includes two convolutional layers, while stages 2, 3, and 4 consist of a series of identical blocks, each comprising 1 × 1 convolutional layers and a group of 3 × 3 convolutional layers. Each of these layers is followed by batch normalization and a ReLU activation function. The model also incorporates Squeeze-and-Excitation (SE) modules in each identical block, emphasizing relevant features and suppressing less important ones. RegNetY-16GF achieved a Top-1 classification error of 22.5%, lower than that of existing CNNs such as EfficientNet, ResNeXt, and ResNet models. EfficientFormer v2 is an improved version of EfficientFormer, developed to address the higher latency of vision transformers compared to lightweight CNNs. It uses convolutional layers to capture local, low-level details and transformer blocks to capture global, high-level features. In its feed-forward network, a depth-wise convolutional layer captures local information. A modified multi-head self-attention mechanism, integrating a 3 × 3 depth-wise convolutional layer, is adopted to extract both global and local features. This model also uses a fine-grained joint search method to optimize the number of parameters and latency simultaneously. It achieved a Top-1 classification accuracy of 83.5%, outperforming popular CNN and vision transformer models such as MobileNet v2, EfficientNet, ResNet, MobileNet v3, DeiT, LeViT, CSwin-T, and NasViT-Supernet [32].
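The Squeeze-and-Excitation mechanism mentioned above can be summarized in three steps: squeeze (global average pooling per channel), excitation (a small bottleneck network producing per-channel gates), and scale. The sketch below is a generic SE module in plain Python with biases omitted; the bottleneck ratio and exact placement used inside RegNetY-16GF are not reproduced here, and the weights in the example are arbitrary.

```python
import math

def relu(x):
    return max(0.0, x)

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def squeeze_excitation(feature_map, w1, w2):
    """Generic SE module. feature_map: list of C channels, each a flat list of spatial values.

    w1: C_r x C reduction weights; w2: C x C_r expansion weights (biases omitted).
    Returns the rescaled feature map and the per-channel gates.
    """
    # Squeeze: one global-average descriptor per channel
    z = [sum(ch) / len(ch) for ch in feature_map]
    # Excitation: bottleneck FC -> ReLU -> FC -> sigmoid gives gates between 0 and 1
    hidden = [relu(sum(w * v for w, v in zip(row, z))) for row in w1]
    gates = [sigmoid(sum(w * h for w, h in zip(row, hidden))) for row in w2]
    # Scale: multiply every value of each channel by that channel's gate
    scaled = [[g * v for v in ch] for g, ch in zip(gates, feature_map)]
    return scaled, gates

out, gates = squeeze_excitation([[1.0, 3.0], [0.0, 0.0]], w1=[[1.0, 1.0]], w2=[[0.0], [-10.0]])
print(out[0], gates[0])  # [0.5, 1.5] 0.5
```

Gates close to 0 suppress a channel and gates close to 1 preserve it, which is how SE modules emphasize informative channels.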

As shown in Figure 2, we first resize the IR and visible input images to 224 × 224 pixels. Next, we apply various data augmentation techniques, including shearing, rotation, zooming, and shifting, to increase the number of training images and reduce overfitting. Subsequently, the EfficientFormer v2 and RegNetY-16GF models generate two feature maps comprising distinct features related to complex backgrounds and forest smoke and fire scenarios. These features are then combined into a larger feature vector, merging the complementary features of each model and providing a more complete representation of different wildfire scenarios. Gaussian dropout with a rate of 0.3 is then applied as a regularization method to improve CT-Fire's generalization. Finally, a dense layer with a sigmoid function produces a probability between 0 and 1, and an input image is classified as containing forest smoke or fire when this probability is greater than or equal to 0.5.
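The shear, rotation, zoom, and shift augmentations described above are all affine transforms and can be composed into a single matrix. The sketch below uses one common convention (zoom, then shear, then rotation, then shift, about the origin); the augmentation ranges actually used for CT-Fire are not given in this section, so all parameter values are illustrative.

```python
import math

def matmul3(a, b):
    """Multiply two 3x3 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]

def augmentation_matrix(angle_deg=0.0, shear_deg=0.0, zoom=(1.0, 1.0), shift=(0.0, 0.0)):
    """Compose zoom, shear, rotation, and shift (applied in that order) into one 3x3 homogeneous matrix."""
    t = math.radians(angle_deg)
    s = math.radians(shear_deg)
    rotation = [[math.cos(t), -math.sin(t), 0.0], [math.sin(t), math.cos(t), 0.0], [0.0, 0.0, 1.0]]
    shear = [[1.0, -math.sin(s), 0.0], [0.0, math.cos(s), 0.0], [0.0, 0.0, 1.0]]
    scale = [[zoom[0], 0.0, 0.0], [0.0, zoom[1], 0.0], [0.0, 0.0, 1.0]]
    translation = [[1.0, 0.0, shift[0]], [0.0, 1.0, shift[1]], [0.0, 0.0, 1.0]]
    # Matrices act right to left on column vectors (x, y, 1): scale first, translation last
    return matmul3(translation, matmul3(rotation, matmul3(shear, scale)))

def transform_point(m, x, y):
    """Apply a 3x3 affine matrix to the point (x, y)."""
    return (m[0][0] * x + m[0][1] * y + m[0][2], m[1][0] * x + m[1][1] * y + m[1][2])

m = augmentation_matrix(zoom=(2.0, 2.0), shift=(5.0, -2.0))
print(transform_point(m, 1.0, 1.0))  # (7.0, 0.0)
```

Practical implementations usually transform about the image center and map each output pixel back to the input image with the inverse matrix before interpolating, so that no output pixel is left empty.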

Figure 2.

The proposed architecture of CT-Fire. L1 and L refer to the predicted probabilities of the input aerial image belonging to the fire or non-fire class.

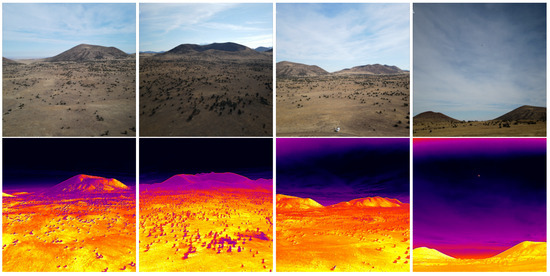

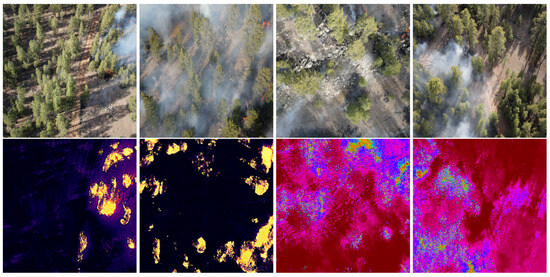

3.2. Dataset

Many visible-spectrum fire datasets are available to researchers, facilitating the benchmarking of wildfire recognition models. This is not the case for thermal IR fire data. We therefore use the public FLAME2 dataset [33] to train and test DC-Fire and CT-Fire. FLAME2 is a large aerial dataset collected during a prescribed fire in Arizona using a DJI Mavic 2 Enterprise Advanced drone. RGB and IR images were captured using a CMOS visual sensor and an uncooled vanadium oxide microbolometer sensor, respectively. Fire intensity varies across the data, with flame lengths ranging from 0.25 to 0.75 m and occasionally reaching 5 to 10 m when large fuels are consumed. FLAME2 consists of 53,451 RGB/IR image pairs, comprising 14,317 fire/non-smoke, 25,434 fire/smoke, and 13,700 non-fire/non-smoke pairs. Figure 3 and Figure 4 show IR/RGB non-fire and fire samples from the FLAME2 dataset.
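As a quick check of the class counts above, the following sketch sums the three FLAME2 categories and computes the share of the fire class. It assumes, as in the binary fire/non-fire task used for training, that the fire/non-smoke and fire/smoke categories are merged into a single positive class; the variable names are illustrative.

```python
# FLAME2 image-pair counts per category, as reported above
counts = {
    "fire/non-smoke": 14_317,
    "fire/smoke": 25_434,
    "non-fire/non-smoke": 13_700,
}

total_pairs = sum(counts.values())
# Assumption: both fire categories form the positive (fire) class
fire_pairs = counts["fire/non-smoke"] + counts["fire/smoke"]
non_fire_pairs = counts["non-fire/non-smoke"]

print(total_pairs)                         # 53451
print(fire_pairs, non_fire_pairs)          # 39751 13700
print(round(fire_pairs / total_pairs, 3))  # 0.744
```

The merged fire class accounts for roughly 74% of the pairs, so the binary task is moderately imbalanced, which is one reason to report precision and recall alongside accuracy.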

Figure 3.

FLAME2 dataset example. (Top): RGB non-fire images; (Bottom): Their corresponding IR non-fire images.

Figure 4.

FLAME2 dataset example. (Top): RGB fire images. (Bottom): Their corresponding IR fire images.

3.3. Implementation Details

CT-Fire and DC-Fire were developed using TensorFlow 2.11 [69] and trained and tested on a machine equipped with an NVIDIA GeForce RTX 2080 Ti GPU, 64 GB of RAM, and an Intel Xeon CPU.

DC-Fire and CT-Fire were trained on both RGB and IR images from FLAME2, enabling them to learn diverse smoke and wildfire situations in both modalities. The 53,451 RGB/IR image pairs (106,902 images in total) were divided into training (68,416 images), validation (17,104 images), and test (21,382 images) sets, as presented in Table 2.
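The subset sizes count RGB and IR images separately. The sketch below converts them back to image pairs and shows that they are consistent with holding out 20% of the 53,451 pairs for testing (rounding up) and then 20% of the remainder for validation, that is, roughly a 64/16/20 split. This split rule is our reading of the numbers, not a procedure stated in the text, and `ceil_percent` is a hypothetical helper.

```python
TOTAL_PAIRS = 53_451  # FLAME2 RGB/IR image pairs
# Table 2 subset sizes, counting RGB and IR images separately
reported_images = {"train": 68_416, "validation": 17_104, "test": 21_382}


def ceil_percent(n, percent):
    """Hypothetical helper: ceil(n * percent / 100) using integer arithmetic."""
    return -(-n * percent // 100)


# Each pair contributes one RGB and one IR image
reported_pairs = {name: n // 2 for name, n in reported_images.items()}

# Candidate split rule (our assumption): 20% test, then 20% of the rest for validation
test_pairs = ceil_percent(TOTAL_PAIRS, 20)
val_pairs = ceil_percent(TOTAL_PAIRS - test_pairs, 20)
train_pairs = TOTAL_PAIRS - test_pairs - val_pairs

print(sum(reported_images.values()))       # 106902
print(reported_pairs)                      # {'train': 34208, 'validation': 8552, 'test': 10691}
print(train_pairs, val_pairs, test_pairs)  # 34208 8552 10691
```

The 10,691 test pairs obtained this way match the test-set size used in Sections 4 and 5.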

Table 2.

Dataset subsets.

During training, we used IR and visible input images with a resolution of 224 × 224 pixels. Shear, rotation, zoom, and shift data augmentation were applied to improve CT-Fire and DC-Fire performance and reduce overfitting. The binary cross-entropy (BCE) loss [70], given in Equation (1), was used to penalize misclassification during learning. We used a batch size of 8, a maximum of 150 epochs, and the Adam optimizer with a learning rate of 0.001. Early stopping interrupted training if model performance did not improve for fifteen epochs, and the model with the lowest validation loss was saved.

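$$\mathrm{BCE} = -\frac{1}{n}\sum_{i=1}^{n}\left[y_i \log(\hat{y}_i) + (1 - y_i)\log(1 - \hat{y}_i)\right] \tag{1}$$

where $y_i$ is the label of sample $i$ (in our case, fire or no-fire), $\hat{y}_i$ is the predicted output, and $n$ is the total number of samples in the dataset.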
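Equation (1) can be written directly in NumPy as below. This is an illustrative sketch rather than the TensorFlow loss used in training; the clipping constant `eps` is an assumption added to keep the logarithms finite, and the example labels and predictions are hypothetical.

```python
import numpy as np


def binary_cross_entropy(y_true, y_pred, eps=1e-7):
    """Mean binary cross-entropy over n samples, following Equation (1)."""
    y_true = np.asarray(y_true, dtype=float)
    # Clip predictions away from 0 and 1 so that log() stays finite (eps is assumed)
    y_pred = np.clip(np.asarray(y_pred, dtype=float), eps, 1.0 - eps)
    per_sample = y_true * np.log(y_pred) + (1.0 - y_true) * np.log(1.0 - y_pred)
    return -per_sample.mean()


# Labels: 1 = fire, 0 = no-fire (hypothetical example values)
loss = binary_cross_entropy([1, 0, 1], [0.9, 0.2, 0.6])
print(round(loss, 4))  # 0.2798
```

Each sample contributes only one of the two logarithmic terms: $\log(\hat{y}_i)$ for fire images and $\log(1 - \hat{y}_i)$ for no-fire images, so confident wrong predictions are penalized most heavily.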

4. Experiments and Results

To evaluate the performance of DC-Fire and CT-Fire, we first analyze their accuracy, precision, recall, F1-score, and inference time, defined as the average time to detect and recognize the presence of forest smoke and fires in an input image. We compare these results with state-of-the-art methods (LeNet5, Xception, MobileNet v2, and ResNet-18) [33] and DL object recognition methods (ResNeXt-50 [71] and Swin Transformer v2 [72]). Next, we present the confusion matrices of DC-Fire and CT-Fire on the FLAME2 test set. Finally, we illustrate the predicted outputs of these models on RGB and IR images of each fire and non-fire class.
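For reference, the four classification metrics follow from the binary confusion counts (true positives TP, false positives FP, false negatives FN, true negatives TN) using their standard definitions. The sketch below treats fire as the positive class and returns 0.0 when a denominator is zero; both are assumptions, and the counts in the example are hypothetical.

```python
def classification_metrics(tp, fp, fn, tn):
    """Accuracy, precision, recall, and F1-score from binary confusion counts."""
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical counts, for illustration only (fire = positive class)
print(classification_metrics(tp=90, fp=10, fn=5, tn=95))
```

With FP = FN = 0, as in the confusion matrix reported for both models, all four metrics equal 1 (100%).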

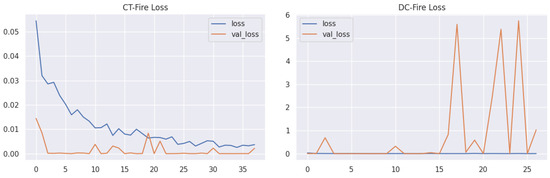

Figure 5 shows the training and validation losses of the DC-Fire and CT-Fire models. Both models improve rapidly during the first epochs, with training and validation losses decreasing quickly and converging. Although the DC-Fire validation loss later fluctuates, early stopping ensured that the best model was saved before these fluctuations occurred. This model was then tested on unseen data and achieved high performance, as shown in Table 3, indicating that it generalized well without overfitting, as did the CT-Fire model.
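The early-stopping rule from Section 3.3 (patience of fifteen epochs, at most 150 epochs, keeping the checkpoint with the lowest validation loss) explains why later fluctuations in the DC-Fire validation loss do not affect the saved model. The plain-Python sketch below reproduces that logic on a loss history; the function name `early_stopping` and the example losses are hypothetical. The text does not name the callbacks used; in Keras the same behavior is commonly obtained with `tf.keras.callbacks.EarlyStopping(monitor="val_loss", patience=15)` together with `tf.keras.callbacks.ModelCheckpoint(..., save_best_only=True)`, but that is our assumption.

```python
def early_stopping(val_losses, patience=15, max_epochs=150):
    """Return (epoch at which training stops, epoch of the saved best model).

    Training halts once the validation loss has not improved for `patience`
    consecutive epochs; the checkpoint from the best epoch is the one kept.
    """
    best_loss = float("inf")
    best_epoch = -1
    wait = 0
    for epoch, loss in enumerate(val_losses[:max_epochs]):
        if loss < best_loss:
            best_loss, best_epoch, wait = loss, epoch, 0  # save this checkpoint
        else:
            wait += 1
            if wait >= patience:
                return epoch, best_epoch
    return min(len(val_losses), max_epochs) - 1, best_epoch


# Hypothetical history: fast improvement, then fluctuations above the best loss
history = [0.9, 0.6, 0.4, 0.35, 0.3] + [0.32, 0.31] * 10
stop_epoch, best_epoch = early_stopping(history)
print(stop_epoch, best_epoch)  # 19 4
```

Training stops at epoch 19, but the model that is kept is the one from epoch 4, so the later fluctuations never reach the saved weights.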

Figure 5.

Loss curves for the proposed DC-Fire and CT-Fire during training and validation steps.

Table 3.

Comparative analysis of DC-Fire, CT-Fire, and other DL methods on the test set (both IR and RGB images).

Table 3 shows the performance results of CT-Fire, DC-Fire, and other baseline methods, including LeNet5, Xception, MobileNet v2, ResNet-18, Swin Transformer v2, and ResNeXt-50, using both IR and RGB images (10,691 images each).

DC-Fire and CT-Fire both achieved an accuracy, precision, recall, and F1-score of 100% on the test set. In other words, both models correctly recognized every fire instance and made no incorrect predictions. We attribute this result to the deep and relevant features related to background and wildfires extracted by the two deep CNNs of DC-Fire (EfficientNet-B5 and DenseNet-201) and by the vision transformer EfficientFormer v2 and the deep CNN RegNetY-16GF of CT-Fire. Combining EfficientNet-B5 and DenseNet-201 can improve the extraction of deep features related to wildland smoke and fires: EfficientNet-B5 balances network width, depth, and resolution, enabling the model to generate deep, complex features, while DenseNet-201 introduces dense connections, allowing the model to learn comprehensive patterns. Likewise, combining EfficientFormer v2 and RegNetY-16GF as a backbone produces complementary feature maps, improving the model's ability to identify complex wildfire and smoke characteristics: EfficientFormer v2 integrates convolutional layers and attention mechanisms to extract local and global features, while RegNetY-16GF generates relevant features such as fire characteristics, heat patterns, and smoke plumes. Both CT-Fire and DC-Fire outperformed the baseline models LeNet5, Xception, MobileNet v2, ResNet-18, Swin Transformer v2, and ResNeXt-50, demonstrating their potential to address challenges such as detecting small fires, background complexity, and the variability of forest fires and smoke. DC-Fire and CT-Fire also achieved short inference times of 0.013 and 0.024 s, respectively, supporting their use for early detection of wildland fires and smoke. For comparison, ResNeXt-50 and Swin Transformer v2 have inference times of 0.04 and 0.015 s, respectively.
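The inference time is defined as the average time to process one input image, but the measurement procedure is not described. A common approach, sketched below under that assumption, times single-image predictions with a monotonic clock after a few warm-up calls. `dummy_predict` and `average_inference_time` are hypothetical names, and the stand-in model is trivial, so the printed value says nothing about DC-Fire or CT-Fire themselves.

```python
import time

import numpy as np


def average_inference_time(predict_fn, images, warmup=2):
    """Mean wall-clock seconds per image for a single-image predict function."""
    for img in images[:warmup]:  # warm-up calls are excluded from the timing
        predict_fn(img)
    start = time.perf_counter()
    for img in images:
        predict_fn(img)
    return (time.perf_counter() - start) / len(images)


def dummy_predict(img):
    """Hypothetical stand-in for a trained model: mean intensity -> sigmoid score."""
    return 1.0 / (1.0 + np.exp(-img.mean()))


images = np.random.default_rng(0).random((20, 224, 224, 3))  # 20 fake 224 x 224 RGB images
t = average_inference_time(dummy_predict, images)
print(f"{t:.6f} s per image")
```

Timings of this kind depend on hardware, batch size, and framework overhead, so comparisons between models are only meaningful when they are measured on the same machine with the same procedure.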

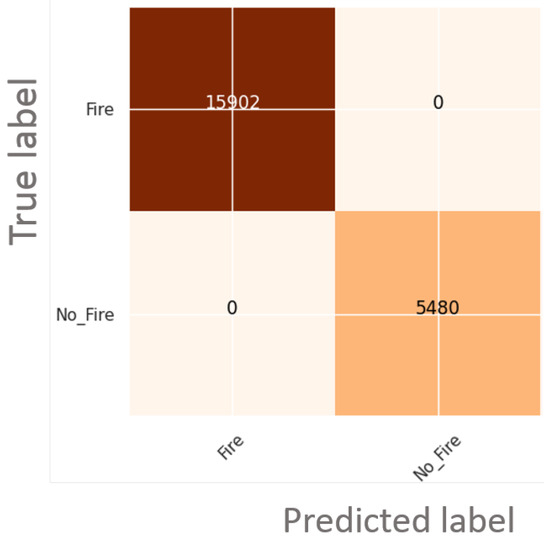

The confusion matrix is an important tool for evaluating the DC-Fire and CT-Fire models. It provides a detailed view of their classification performance, showing how well they distinguish the fire and non-fire classes. Figure 6 depicts the confusion matrix of both CT-Fire and DC-Fire on the FLAME2 test set with both IR and RGB images. All predictions lie on the diagonal (correct classifications), and the off-diagonal cells (incorrect classifications) are empty. This result demonstrates the strong ability of these models to distinguish between wildfire and non-wildfire scenarios using both IR and visible data and to overcome challenges related to this task.
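A confusion matrix such as the one in Figure 6 can be computed from the predicted probabilities and the 0.5 decision threshold used by the models. In the NumPy sketch below (the function name and example data are hypothetical), rows index the true class and columns the predicted class, so correct classifications fall on the diagonal and errors in the off-diagonal cells.

```python
import numpy as np


def confusion_matrix_2x2(y_true, probs, threshold=0.5):
    """Rows: true class (0 = non-fire, 1 = fire); columns: predicted class."""
    y_true = np.asarray(y_true, dtype=int)
    y_pred = (np.asarray(probs) >= threshold).astype(int)
    cm = np.zeros((2, 2), dtype=int)
    np.add.at(cm, (y_true, y_pred), 1)  # count each (true, predicted) pair
    return cm


cm = confusion_matrix_2x2([1, 1, 0, 0, 1], [0.99, 0.7, 0.1, 0.6, 0.3])
print(cm)
```

For these example inputs the matrix is [[1, 1], [1, 2]]: one non-fire image is misclassified as fire (probability 0.6) and one fire image is missed (probability 0.3). A matrix with zero off-diagonal entries corresponds to 100% accuracy, precision, and recall.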

Figure 6.

Confusion matrix of both DC-Fire and CT-Fire using both IR and RGB images (both models obtained the same results).

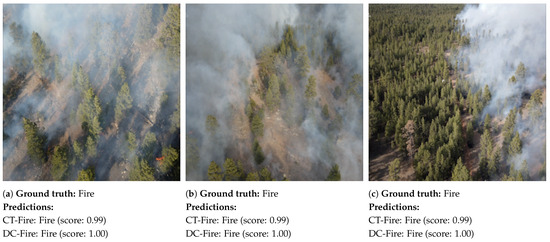

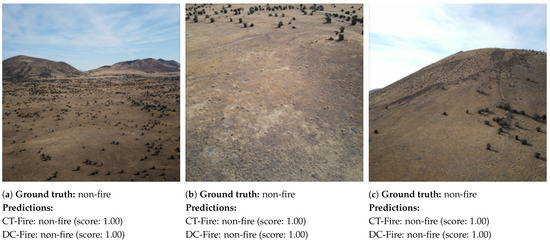

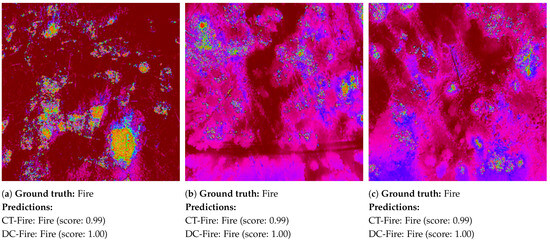

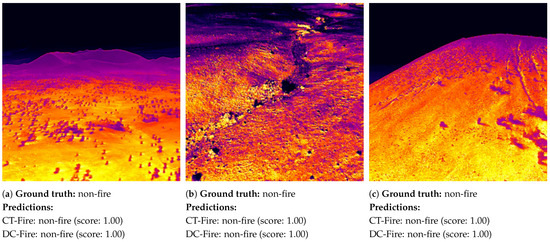

Consistent with the numerical results in Table 3, CT-Fire and DC-Fire correctly identify the presence or absence of wildfires in RGB images (Figure 7 and Figure 8) and infrared images (Figure 9 and Figure 10) of both fire and non-fire situations. For example, they classified IR and RGB aerial fire images with high confidence scores of 0.99 for CT-Fire and 1 for DC-Fire, as depicted in Figure 7c and Figure 9c.

Figure 7.

Classification results of DC-Fire and CT-Fire models using RGB fire images.

Figure 8.

Classification results of DC-Fire and CT-Fire models using RGB non-fire images.

Figure 9.

Classification results of DC-Fire and CT-Fire models using IR fire images.

Figure 10.

Classification results of DC-Fire and CT-Fire models using IR non-fire images.

To summarize, DC-Fire and CT-Fire proved to be reliable wildland fire recognition methods using both RGB and IR aerial images, even for small wildfire zones. They also showed their potential to overcome several challenges, notably the variability of wildland fire intensity, with flame lengths between 0.25 and 0.75 m (occasionally between 5 and 10 m) [33], the complexity of backgrounds, and the quality of the input image, which can be affected by factors such as the input resolution (224 × 224 pixels in our case).

5. Discussion

5.1. Results Analysis

IR images are crucial for identifying heat sources and can reveal wildfires through heavy smoke, making them essential for early detection and monitoring of wildfires, both in dark conditions and during the day. Visible images, in turn, capture the visual details of wildland fires and smoke, offering a clear representation of these events, their intensity, and their environment. Using both IR and visible images provides a comprehensive view of the diverse features of wildland fires and smoke, enabling DL models to learn a complete representation of forest fire and smoke scenarios during both day and night, and thus to detect wildland fires and smoke more reliably. For this reason, we used both IR and visible images to provide large, diversified wildland fire data for training the proposed deep learning models, DC-Fire and CT-Fire, and for addressing related wildfire challenges. These methods were developed on a large set of IR and RGB images (the 53,451 image pairs of FLAME2), and their performance was evaluated on a test set of 10,691 image pairs that the models had never seen. DC-Fire and CT-Fire demonstrated their reliability in recognizing forest fires and smoke in both IR and RGB aerial images, with an accuracy, precision, recall, and F1-score of 100% for both methods. They also outperformed the baseline methods LeNet5, Xception, MobileNet v2, ResNet-18, Swin Transformer v2, and ResNeXt-50 by 1.55%, 1.37%, 0.18%, 0.50%, 36.56%, and 33.98%, respectively, in terms of F1-score. This result was achieved by integrating two CNNs (EfficientNet-B5 and DenseNet-201) in DC-Fire to extract rich and relevant feature maps, and by combining a CNN (RegNetY-16GF) with a vision transformer (EfficientFormer v2) in CT-Fire to capture global and local features.

Combining EfficientNet-B5 and DenseNet-201 can exploit the scalability of EfficientNet-B5 and the dense connectivity of DenseNet-201, thus improving the extraction of deep features related to wildland smoke and fires. Similarly, combining EfficientFormer v2 and RegNetY-16GF as a backbone for extracting wildfire and smoke features generates diverse feature maps that contain fine details and local and global characteristics related to fire, smoke, and heat patterns. The results also show the ability of CT-Fire and DC-Fire to overcome wildland fire-related limitations, including background complexity, varying wildfire intensity with flame lengths between 0.25 and 0.75 m, the quality of input images, which can be affected by the input resolution (224 × 224 pixels in our case), and the variability of wildfires and smoke in size and shape. Additionally, DC-Fire was faster, with an inference time of 0.013 s compared to 0.024 s for CT-Fire. This suggests that these DL methods can support early wildland fire detection in real-world applications, during both night and day, when used with a monitoring system or UAV equipped with visible and IR cameras. This ability to detect fires at any time of day enables continuous monitoring and rapid response. Furthermore, early detection enables rapid intervention to reduce the damage caused by wildland fires and improves forest fire detection and management strategies. In conclusion, the fast processing speed and flexibility of DC-Fire make it suitable for real-time fire applications, improving the ability to rapidly identify fire incidents and helping to manage wildfires more efficiently.

However, the FLAME2 dataset has certain limitations. It depicts a prescribed fire in a specific region (Arizona, USA) that includes ponderosa pine forest and pinyon-juniper woodland. It was collected on a unit with particular vegetation types and topographical variations (low fuel type and tree density) and under specific weather conditions: a temperature between 14 and 15 °C, relative humidity between 18 and 20%, and a southwest wind of 4.5 m/s. These factors may limit the ability of CT-Fire and DC-Fire to generalize to zones with different ecological and climatic conditions and vegetation types, which affects their integration into real-world fire applications. Additionally, challenging wildfire-related scenarios remain, including the visual resemblance between wildfire and sunset, lighting, sunrise, etc., and the visual similarity between smoke and smoke-like objects such as clouds, dust, fog, and haze. These scenarios are not represented in FLAME2, the public dataset used to train and test DC-Fire and CT-Fire. This may further reduce their generalization, meaning that they may not perform well in real fire scenarios containing these confusing visual elements. To the best of our knowledge, FLAME2 is the only public dataset that includes both RGB and IR images, and it covers a single prescribed fire in Arizona. Organizing prescribed burns is a complex process that requires many factors to be considered before obtaining the necessary permission. Consequently, in future work, we plan to use a 3D platform to generate synthetic wildland fire images depicting these challenging scenarios, and then to evaluate the ability of sim-to-real DL methods to detect and classify wildfires and smoke and to address related challenges. We first plan to generate these synthetic data using advanced simulation tools such as Unreal Engine. These data will describe diverse wildfire scenarios by varying parameters such as weather conditions, vegetation types, and topography. We will then train the proposed deep learning models on both real and synthetic images, while testing them only on real fire images. In addition, explainable artificial intelligence methods tackle the crucial challenge of making the outputs of DL models in wildfire detection systems transparent and comprehensible. These methods help users interpret how and why a DL model reached a decision, improving trust in and the reliability of the technology. As a second direction for future work, we will therefore employ advanced explanation methods to describe the features extracted by CT-Fire and DC-Fire for identifying wildland smoke and fires. Finally, we plan to adopt foundation models, powerful pretrained deep learning models, for recognizing smoke and wildfires, using transfer learning from large datasets to improve wildland fire detection.

5.2. Ethical Issues

Drones offer many practical benefits, notably facilitating fire management and disaster response. They enhance the efficiency and safety of firefighting operations. They also provide real-time monitoring for detecting and recognizing wildfires and predicting their spread in complex forest areas using visible and thermal cameras. Recently, drone swarming technology, which coordinates numerous drones simultaneously, has been adopted to cover large monitoring zones, such as the Amazon rainforest, and to detect fire spread. Autonomous water-dropping drones have also been used to detect hotspots and drop fire retardants or water, especially in dangerous and inaccessible zones. However, drone use is subject to legal restrictions and raises ethical issues such as privacy and data protection. Firefighters need to respect the laws governing drone flights over residential and private areas, as unethical drone use can result in legal action.

Similarly, AI-based fire recognition and detection methods offer significant benefits and can improve fire management strategies. AI models can detect wildfires more reliably than traditional methods such as human observers, thanks to their ability to analyze large amounts of wildfire data from numerous cameras (thermal and visible) and sensors mounted on UAVs. However, the use of AI in fire detection raises several ethical problems, such as data privacy, since AI models analyze large amounts of data that can include private information. Bias and transparency in the decision-making processes of these models are also important issues. Explainability methods can make the results of AI models comprehensible and interpretable.

6. Conclusions

In this work, we used both IR and visible images to provide a comprehensive and detailed view of the various characteristics of forest fires and smoke, enabling deep learning methods to learn a rich visual representation of forest fire and smoke scenarios during day and night. We then adopted two ensemble learning methods, CT-Fire and DC-Fire, for recognizing wildland smoke and fires using both IR and RGB images. CT-Fire uses the deep CNN RegNetY-16GF and the vision transformer EfficientFormer v2 as its backbone, while DC-Fire combines two deep CNNs (EfficientNet-B5 and DenseNet-201) to extract deep features of forest fires and smoke. Experiments on a large aerial dataset, FLAME2, yielded an accuracy, precision, recall, and F1-score of 100% for both CT-Fire and DC-Fire, meaning that both models correctly recognized all fire instances and made no incorrect predictions on fire and non-fire instances. These models also outperformed state-of-the-art methods and achieved fast processing speeds, showing that they can be used for early fire detection. Moreover, DC-Fire and CT-Fire demonstrated their potential to overcome challenging limitations, including background complexity, the variability of wildfires and smoke in size, shape, and intensity, and image quality. These results point to the potential of DC-Fire and CT-Fire for early wildfire detection in real-world applications, day and night, when used with UAVs equipped with IR and visible cameras. This ability allows rapid intervention, reduces wildfire damage, and improves fire detection and management strategies.

Author Contributions

Conceptualization, R.G. and M.A.A.; methodology, R.G. and M.A.A.; software, R.G.; validation, R.G. and M.A.A.; formal analysis, R.G. and M.A.A.; writing—original draft preparation, R.G.; writing—review and editing, M.A.A.; funding acquisition, M.A.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research was enabled in part by support provided by the Natural Sciences and Engineering Research Council of Canada (NSERC), funding reference number RGPIN-2024-05287.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

This work used a publicly available dataset; see reference [33]. More details about this dataset are available under Section 3.2.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| AI | Artificial Intelligence |
| DL | Deep Learning |
| IR | Infrared |
| CNN | Convolutional Neural Network |
| ReLU | Rectified Linear Unit |
| SKLFS | State Key Laboratory of Fire Science |
| CBAM | Convolutional Block Attention Module |
| SHAP | SHapley Additive exPlanations |
| ACNet | Customized Attention Connected Network |
| BCE | Binary Cross-Entropy |
| GRU | Gated Recurrent Unit |
| Bi-LSTM | Bidirectional Long Short-Term Memory |
| HOG | Histogram of Oriented Gradients |
| UAV | Unmanned Aerial Vehicle |
| BO | Bayesian Optimization |
| ANN | Artificial Neural Network |
| IRCNN | Infrared Convolutional Neural Network |

References

- European Commission. 2022 Was the Second-Worst Year for Wildfires. Available online: https://ec.europa.eu/commission/presscorner/detail/en/ip_23_5951 (accessed on 20 May 2024).

- European Commission. Wildfires in the Mediterranean. Available online: https://joint-research-centre.ec.europa.eu/jrc-news-and-updates/wildfires-mediterranean-monitoring-impact-helping-response-2023-07-28_en (accessed on 20 May 2024).

- Government of Canada. Forest Fires. Available online: https://natural-resources.canada.ca/our-natural-resources/forests/wildland-fires-insects-disturbances/forest-fires/13143 (accessed on 20 May 2024).

- Shingler, B.; Bruce, G. Five Charts to Help Understand Canada’s Record Breaking Wildfire Season. Available online: https://www.cbc.ca/news/climate/wildfire-season-2023-wrap-1.6999005 (accessed on 20 May 2024).

- Anshul, G.; Abhishek, S.; Ashok, K.; Kishor, K.; Sayantani, L.; Kamal, K.; Vishal, S.; Anuj, K.; Chandra, M.S. Fire Sensing Technologies: A Review. IEEE Sensors J. 2019, 19, 3191–3202. [Google Scholar] [CrossRef]

- Ghali, R.; Akhloufi, M.A. Deep Learning Approaches for Wildland Fires Remote Sensing: Classification, Detection, and Segmentation. Remote Sens. 2023, 15, 1821. [Google Scholar] [CrossRef]

- Khan, F.; Xu, Z.; Sun, J.; Khan, F.M.; Ahmed, A.; Zhao, Y. Recent Advances in Sensors for Fire Detection. Sensors 2022, 22, 3310. [Google Scholar] [CrossRef] [PubMed]

- Ghali, R.; Akhloufi, M.A. Deep Learning Approaches for Wildland Fires Using Satellite Remote Sensing Data: Detection, Mapping, and Prediction. Fire 2023, 6, 192. [Google Scholar] [CrossRef]

- Toulouse, T.; Rossi, L.; Celik, T.; Akhloufi, M.A. Automatic Fire Pixel Detection using Image Processing: A Comparative Analysis of Rule-based and Machine Learning-based Methods. Signal Image Video Process. 2016, 10, 647–654. [Google Scholar] [CrossRef]

- Martin, M.; Peter, K.; Ivan, K.; Allen, T. Optical Flow Estimation for Flame Detection in Videos. IEEE Trans. Image Process. 2013, 22, 2786–2797. [Google Scholar] [CrossRef]

- Kosmas, D.; Panagiotis, B.; Nikos, G. Spatio-Temporal Flame Modeling and Dynamic Texture Analysis for Automatic Video-Based Fire Detection. IEEE Trans. Circuits Syst. Video Technol. 2015, 25, 339–351. [Google Scholar] [CrossRef]

- Ishag, M.M.A.; Honge, R. Forest Fire Detection and Identification Using Image Processing and SVM. J. Inf. Process. Syst. 2019, 15, 159–168. [Google Scholar] [CrossRef]

- Ko, B.; Cheong, K.H.; Nam, J.Y. Early Fire Detection Algorithm Based on Irregular Patterns of Flames and Hierarchical Bayesian Networks. Fire Saf. J. 2010, 45, 262–270. [Google Scholar] [CrossRef]

- David, V.H.; Peter, V.; Wilfried, P.; Kristof, T. Fire Detection in Color Images Using Markov Random Fields. In Proceedings of the Advanced Concepts for Intelligent Vision Systems, Sydney, Australia, 13–16 December 2010; pp. 88–97. [Google Scholar]

- Fahad, M. Deep Learning Technique for Recognition of Deep Fake Videos. In Proceedings of the IEEE IAS Global Conference on Emerging Technologies (GlobConET), London, UK, 19–21 May 2023; pp. 1–4. [Google Scholar]

- Ur Rehman, A.; Belhaouari, S.B.; Kabir, M.A.; Khan, A. On the Use of Deep Learning for Video Classification. Appl. Sci. 2023, 13, 2007. [Google Scholar] [CrossRef]

- Rana, M.; Bhushan, M. Machine Learning and Deep Learning Approach for Medical Image Analysis: Diagnosis to Detection. Multimed. Tools Appl. 2023, 82, 26731–26769. [Google Scholar] [CrossRef] [PubMed]

- Zhao, Y.; Wang, X.; Che, T.; Bao, G.; Li, S. Multi-task Deep Learning for Medical Image Computing and Analysis: A Review. Comput. Biol. Med. 2023, 153, 106496. [Google Scholar] [CrossRef] [PubMed]

- Jasdeep, S.; Subrahmanyam, M.; Raju, K.G.S. Multi Domain Learning for Motion Magnification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 13914–13923. [Google Scholar]

- Yu, C.; Bi, X.; Fan, Y. Deep Learning for Fluid Velocity Field Estimation: A Review. Ocean Eng. 2023, 271, 113693. [Google Scholar] [CrossRef]

- Harsh, R.; Lavish, B.; Kartik, S.; Tejan, K.; Varun, J.; Venkatesh, B.R. NoisyTwins: Class-Consistent and Diverse Image Generation Through StyleGANs. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 5987–5996. [Google Scholar]

- Dhar, T.; Dey, N.; Borra, S.; Sherratt, R.S. Challenges of Deep Learning in Medical Image Analysis—Improving Explainability and Trust. IEEE Trans. Technol. Soc. 2023, 4, 68–75. [Google Scholar] [CrossRef]

- Xu, T.-X.; Guo, Y.-C.; Lai, Y.-K.; Zhang, S.-H. CXTrack: Improving 3D Point Cloud Tracking With Contextual Information. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 1084–1093. [Google Scholar]

- Ibrahim, N.; Darlis, A.R.; Kusumoputro, B. Performance Analysis of YOLO-Deep SORT on Thermal Video-Based Online Multi-Objet Tracking. In Proceedings of the IEEE 13th International Conference on Consumer Electronics—Berlin (ICCE-Berlin), Berlin, Germany, 3–5 September 2023; pp. 1–6. [Google Scholar]

- Saleh, A.; Zulkifley, M.A.; Harun, H.H.; Gaudreault, F.; Davison, I.; Spraggon, M. Forest Fire Surveillance Systems: A Review of Deep Learning Methods. Heliyon 2024, 10, e23127. [Google Scholar] [CrossRef] [PubMed]

- Gonçalves, L.A.O.; Ghali, R.; Akhloufi, M.A. YOLO-Based Models for Smoke and Wildfire Detection in Ground and Aerial Images. Fire 2024, 7, 140. [Google Scholar] [CrossRef]

- Ghali, R.; Akhloufi, M.A. DC-Fire: A Deep Convolutional Neural Network for Wildland Fire Recognition on Aerial Infrared Images. In Proceedings of the fourth Quantitative Infrared Thermography Asian Conference (QIRT-Asia 2023), Abu Dhabi, United Arab Emirates, 30 October–3 November 2023; pp. 1–6. [Google Scholar]

- Ghali, R.; Akhloufi, M.A. CT-Fire: A CNN-Transformer for Wildfire Classification on Ground and Aerial images. Int. J. Remote Sens. 2023, 44, 7390–7415. [Google Scholar] [CrossRef]

- Gao, H.; Zhuang, L.; Laurens, v.d.M.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708. [Google Scholar]

- Mingxing, T.; Quoc, L. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. In Proceedings of the 36th International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114. [Google Scholar]

- Ilija, R.; Prateek, K.R.; Ross, G.; Kaiming, H.; Piotr, D. Designing Network Design Spaces. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 10428–10436. [Google Scholar]

- Yanyu, L.; Ju, H.; Yang, W.; Georgios, E.; Kamyar, S.; Yanzhi, W.; Sergey, T.; Jian, R. Rethinking Vision Transformers for MobileNet Size and Speed. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Vancouver, BC, Canada, 17–24 June 2023; pp. 16889–16900. [Google Scholar]

- Chen, X.; Hopkins, B.; Wang, H.; O’Neill, L.; Afghah, F.; Razi, A.; Fulé, P.; Coen, J.; Rowell, E.; Watts, A. Wildland Fire Detection and Monitoring Using a Drone-Collected RGB/IR Image Dataset. IEEE Access 2022, 10, 121301–121317. [Google Scholar] [CrossRef]

- Wang, K.; Zhang, Y.; Jinjun, W.; Zhang, Q.; Bing, C.; Dongcai, L. Fire Detection in Infrared Video Surveillance Based on Convolutional Neural Network and SVM. In Proceedings of the IEEE 3rd International Conference on Signal and Image Processing (ICSIP), Shenzhen, China, 13–15 July 2018; pp. 162–167. [Google Scholar]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. In Proceedings of the Advances in Neural Information Processing Systems (NIPS), Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1097–1105. [Google Scholar]

- Deng, L.; Chen, Q.; He, Y.; Sui, X.; Liu, Q.; Hu, L. Fire Detection with Infrared Images using Cascaded Neural Network. J. Algorithms Comput. Technol. 2019, 13, 1748302619895433. [Google Scholar] [CrossRef]

- Shamsoshoara, A.; Afghah, F.; Razi, A.; Zheng, L.; Fulé, P.Z.; Blasch, E. Aerial Imagery Pile Burn Detection Using Deep Learning: The FLAME Dataset. Comput. Netw. 2021, 193, 108001. [Google Scholar] [CrossRef]

- Francois, C. Xception: Deep Learning With Depthwise Separable Convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258. [Google Scholar]

- Shamsoshoara, A.; Afghah, F.; Razi, A.; Zheng, L.; Fulé, P.; Blasch, E. The FLAME Dataset: Aerial Imagery Pile Burn Detection using Drones (UAVs). IEEE Dataport 2020. [Google Scholar] [CrossRef]

- Ghali, R.; Akhloufi, M.A.; Mseddi, W.S. Deep Learning and Transformer Approaches for UAV-Based Wildfire Detection and Segmentation. Sensors 2022, 22, 1977. [Google Scholar] [CrossRef]

- Guo, Y.Q.; Chen, G.; Yi-Na, W.; Xiu-Mei, Z.; Zhao-Dong, X. Wildfire Identification Based on an Improved Two-Channel Convolutional Neural Network. Forests 2022, 13, 1302. [Google Scholar] [CrossRef]

- Wang, L.; Zhang, H.; Zhang, Y.; Hu, K.; An, K. A Deep Learning-Based Experiment on Forest Wildfire Detection in Machine Vision Course. IEEE Access 2023, 11, 32671–32681. [Google Scholar] [CrossRef]

- Karen, S.; Andrew, Z. Very Deep Convolutional Networks for Large-scale Image Recognition. In Proceedings of the 3rd International Conference on Learning Representations, ICLR, San Diego, CA, USA, 7–9 May 2015; pp. 1–14. [Google Scholar]

- Anupama, N.; Prabha, S.; Senthilkumar, M.; Sumathi, R.; Tag, E.E. Forest Fire Identification in UAV Imagery Using X-MobileNet. Electronics 2023, 12, 733. [Google Scholar] [CrossRef]

- Mark, S.; Andrew, H.; Menglong, Z.; Andrey, Z.; Liang-Chieh, C. MobileNetV2: Inverted Residuals and Linear Bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520. [Google Scholar]

- Zhang, Z.; Guo, Y.; Chen, G.; Xu, Z. Wildfire Detection via a Dual-Channel CNN with Multi-Level Feature Fusion. Forests 2023, 14, 1499. [Google Scholar] [CrossRef]

- Islam, A.M.; Binta, M.F.; Rayhan, A.M.; Jafar, A.I.; Rahmat, U.J.; Salekul, I.; Swakkhar, S.; Muzahidul, I.A.K.M. An Attention-Guided Deep-Learning-Based Network with Bayesian Optimization for Forest Fire Classification and Localization. Forests 2023, 14, 2080. [Google Scholar] [CrossRef]

- Ali, K.; Bilal, H.; Somaiya, K.; Ramsha, A.; Adnan, A. DeepFire: A Novel Dataset and Deep Transfer Learning Benchmark for Forest Fire Detection. Mob. Inf. Syst. 2022, 2022, 5358359. [Google Scholar] [CrossRef]

- Aral, R.A.; Zalluhoglu, C.; Sezer, E.A. Lightweight and Attention-based CNN Architecture for Wildfire Detection using UAV Vision Data. Int. J. Remote Sens. 2023, 44, 5768–5787.

- Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. In Proceedings of the Computer Vision—ECCV 2018, Munich, Germany, 8–14 September 2018; pp. 3–19.

- Kumar, J.S.; Khan, M.; Jilani, S.A.K.; Rodrigues, J.J.P.C. Automated Fire Extinguishing System Using a Deep Learning Based Framework. Mathematics 2023, 11, 608.

- Khubab, A.; Shahbaz, K.M.; Fawad, A.; Maha, D.; Wadii, B.; Abdulwahab, A.; Mohammad, A.; Alshehri, M.S.; Yasin, G.Y.; Jawad, A. FireXnet: An explainable AI-based Tailored Deep Learning Model for Wildfire Detection on Resource-constrained Devices. Fire Ecol. 2023, 19, 54.

- Dincer, B. Wildfire Detection Image Data. Available online: https://www.kaggle.com/datasets/brsdincer/wildfire-detection-image-data (accessed on 20 May 2024).

- Pedro, V.d.V.; Lisboa, A.C.; Barbosa, A.V. An Automatic Fire Detection System Based on Deep Convolutional Neural Networks for Low-power, Resource-constrained Devices. Neural Comput. Appl. 2022, 34, 15349–15368.

- Toulouse, T.; Rossi, L.; Campana, A.; Celik, T.; Akhloufi, M.A. Computer Vision for Wildfire Research: An Evolving Image Dataset for Processing and Analysis. Fire Saf. J. 2017, 92, 188–194.

- Saied, A. FIRE Dataset. Available online: https://www.kaggle.com/datasets/phylake1337/fire-dataset?select=fire_dataset%2C+06.11.2021 (accessed on 20 May 2024).

- Ghali, R.; Akhloufi, M.A. BoucaNet: A CNN-Transformer for Smoke Recognition on Remote Sensing Satellite Images. Fire 2023, 6, 455.

- Tan, M.; Le, Q. EfficientNetV2: Smaller Models and Faster Training. In Proceedings of the 38th International Conference on Machine Learning, Virtual, 18–24 July 2021; pp. 10096–10106.

- Ba, R.; Chen, C.; Yuan, J.; Song, W.; Lo, S. SmokeNet: Satellite Smoke Scene Detection Using Convolutional Neural Network with Spatial and Channel-Wise Attention. Remote Sens. 2019, 11, 1702.

- Fernando, L.; Ghali, R.; Akhloufi, M.A. SWIFT: Simulated Wildfire Images for Fast Training Dataset. Remote Sens. 2024, 16, 1627.

- Almeida, J.S.; Jagatheesaperumal, S.K.; Nogueira, F.G.; de Albuquerque, V.H.C. EdgeFireSmoke++: A Novel Lightweight Algorithm for Real-Time Forest Fire Detection and Visualization Using Internet of Things-Human Machine Interface. Expert Syst. Appl. 2023, 221, 119747.

- Almeida, J.S.; Huang, C.; Nogueira, F.G.; Bhatia, S.; de Albuquerque, V.H.C. EdgeFireSmoke: A Novel Lightweight CNN Model for Real-Time Video Fire–Smoke Detection. IEEE Trans. Ind. Inform. 2022, 18, 7889–7898.

- Reis, H.C.; Turk, V. Detection of Forest Fire using Deep Convolutional Neural Networks with Transfer Learning Approach. Appl. Soft Comput. 2023, 143, 110362.

- Habbib, A.M.; Khidhir, A.M. Transfer Learning Based Fire Recognition. Int. J. Tech. Phys. Probl. Eng. (IJTPE) 2023, 15, 86–92.

- Idroes, G.M.; Maulana, A.; Suhendra, R.; Lala, A.; Karma, T.; Kusumo, F.; Hewindati, Y.T.; Noviandy, T.R. TeutongNet: A Fine-Tuned Deep Learning Model for Improved Forest Fire Detection. Leuser J. Environ. Stud. 2023, 1, 1–8.

- Al Duhayyim, M.; Eltahir, M.M.; Omer Ali, O.A.; Albraikan, A.A.; Al-Wesabi, F.N.; Hilal, A.M.; Hamza, M.A.; Rizwanullah, M. Fusion-Based Deep Learning Model for Automated Forest Fire Detection. Comput. Mater. Contin. 2023, 77, 1355–1371.

- Guo, N.; Liu, J.; Di, K.; Gu, K.; Qiao, J. A Hybrid Attention Model Based on First-Order Statistical Features for Smoke Recognition. Sci. China Technol. Sci. 2024, 67, 809–822.

- Jonnalagadda, A.V.; Hashim, H.A. SegNet: A Segmented Deep Learning Based Convolutional Neural Network Approach for Drones Wildfire Detection. Remote Sens. Appl. Soc. Environ. 2024, 34, 101181.

- Singh, P.; Manure, A. Introduction to TensorFlow 2.0. In Learn TensorFlow 2.0: Implement Machine Learning and Deep Learning Models with Python; Apress: Berkeley, CA, USA, 2020; pp. 1–24.

- Al-Dabbagh, A.M.; Ilyas, M. Uni-temporal Sentinel-2 Imagery for Wildfire Detection Using Deep Learning Semantic Segmentation Models. Geomat. Nat. Hazards Risk 2023, 14, 2196370.

- Xie, S.; Girshick, R.; Dollár, P.; Tu, Z.; He, K. Aggregated Residual Transformations for Deep Neural Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1492–1500.

- Liu, Z.; Hu, H.; Lin, Y.; Yao, Z.; Xie, Z.; Wei, Y.; Ning, J.; Cao, Y.; Zhang, Z.; Dong, L.; et al. Swin Transformer V2: Scaling Up Capacity and Resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 12009–12019.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).