Moving toward Smart Manufacturing with an Autonomous Pallet Racking Inspection System Based on MobileNetV2

Abstract

1. Introduction

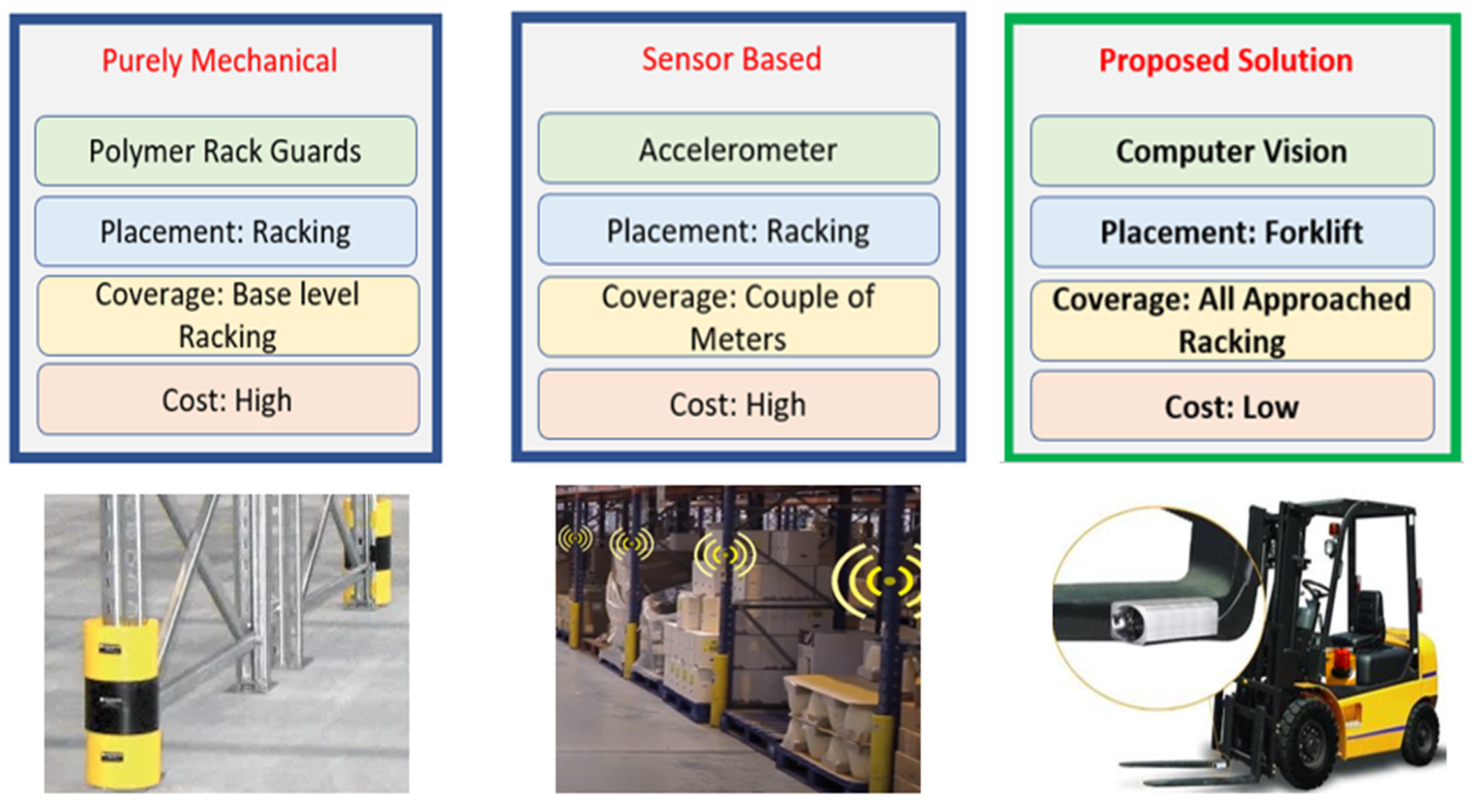

1.1. Literature Review

1.2. Paper Contribution

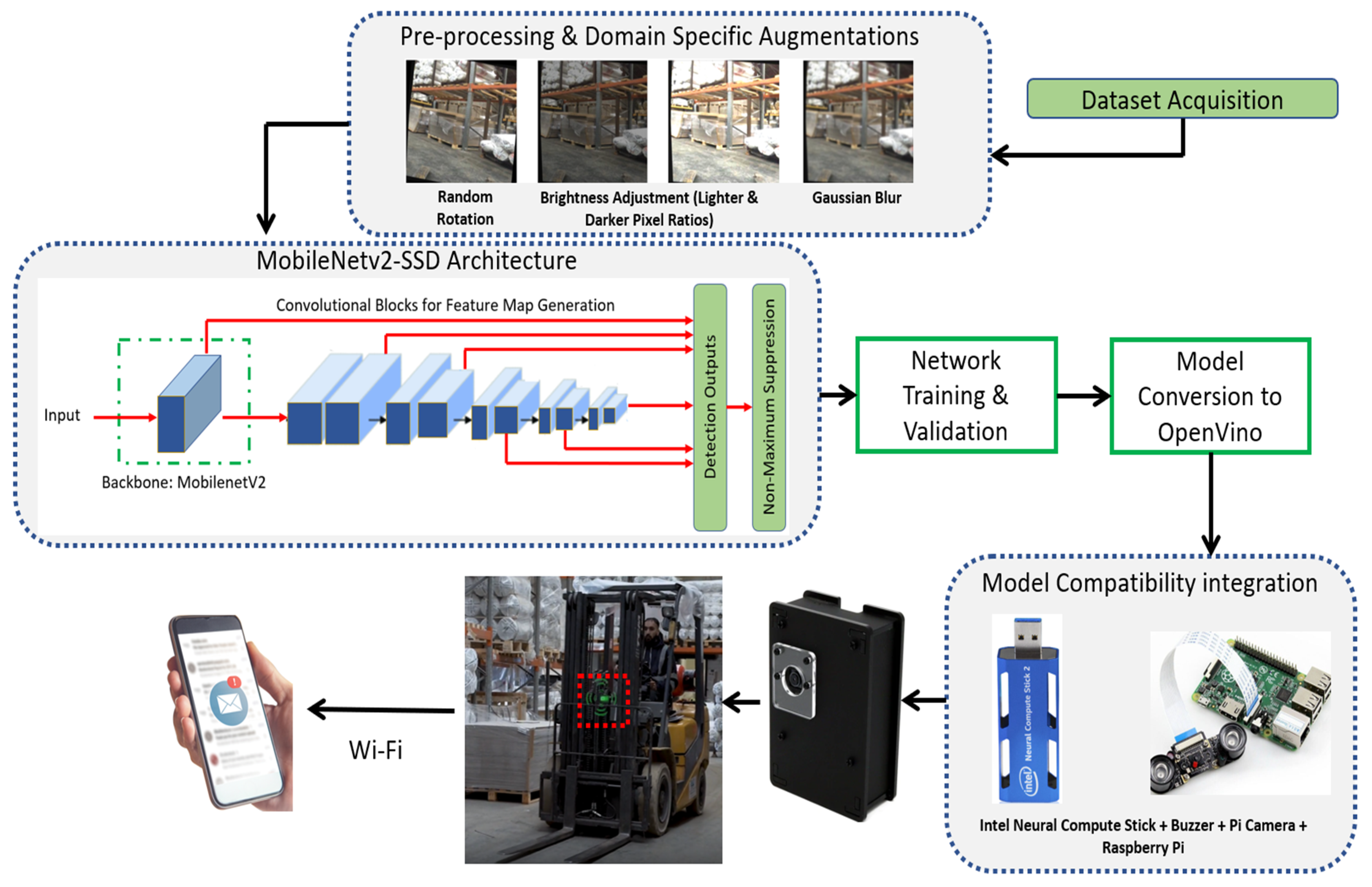

2. Methodology

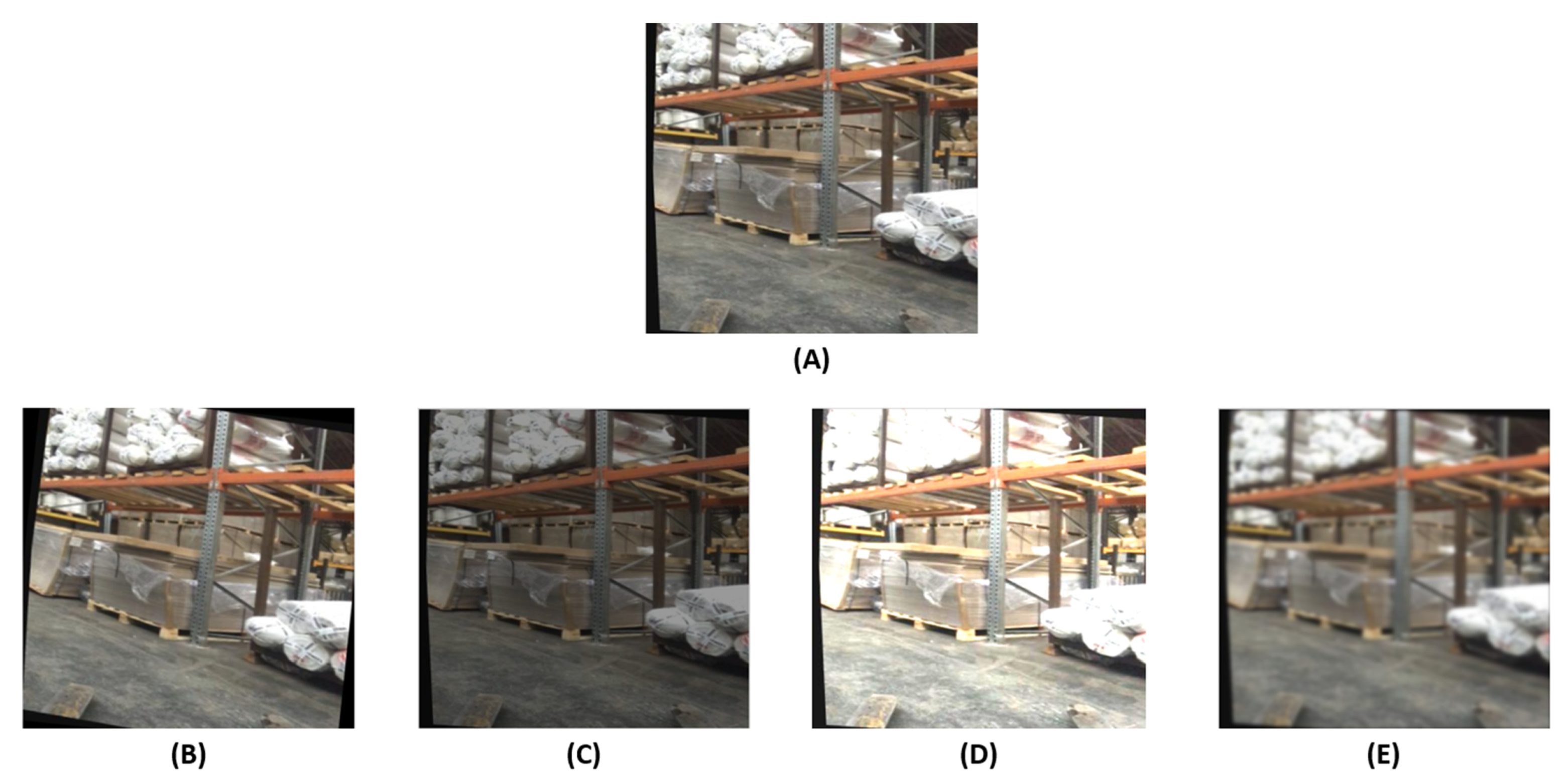

2.1. Data Procurement

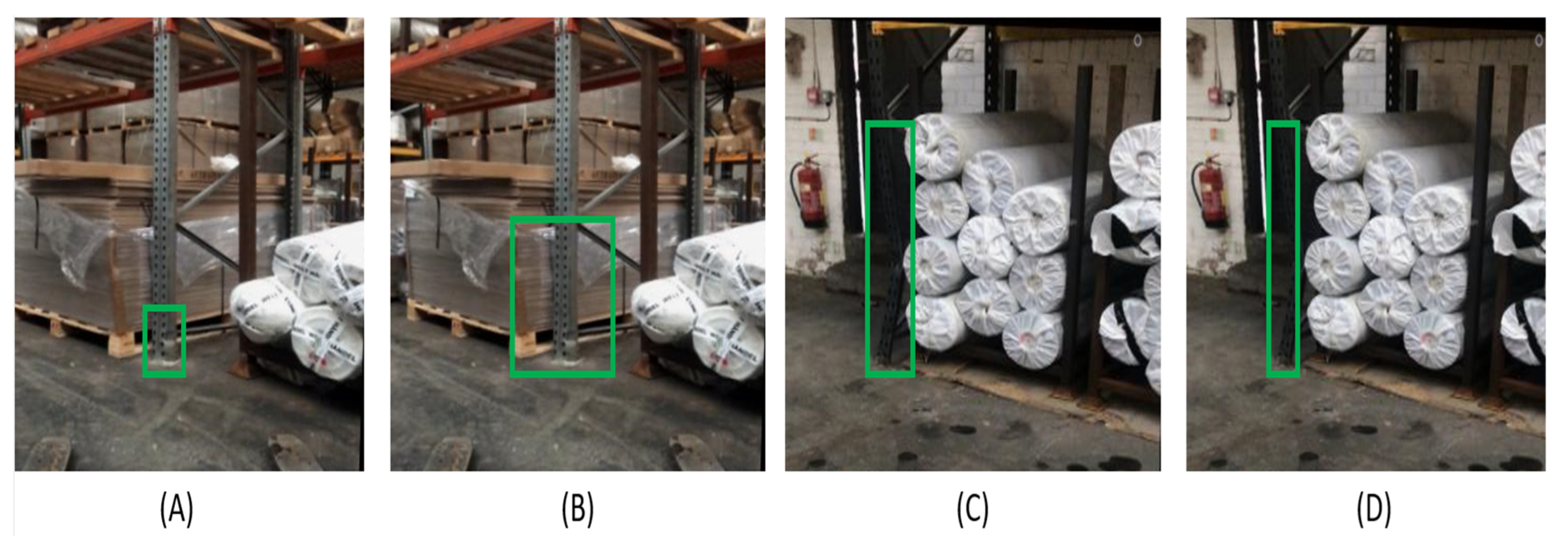

2.2. Data Pre-Processing

2.3. Data Augmentations

2.4. Architecture Selection

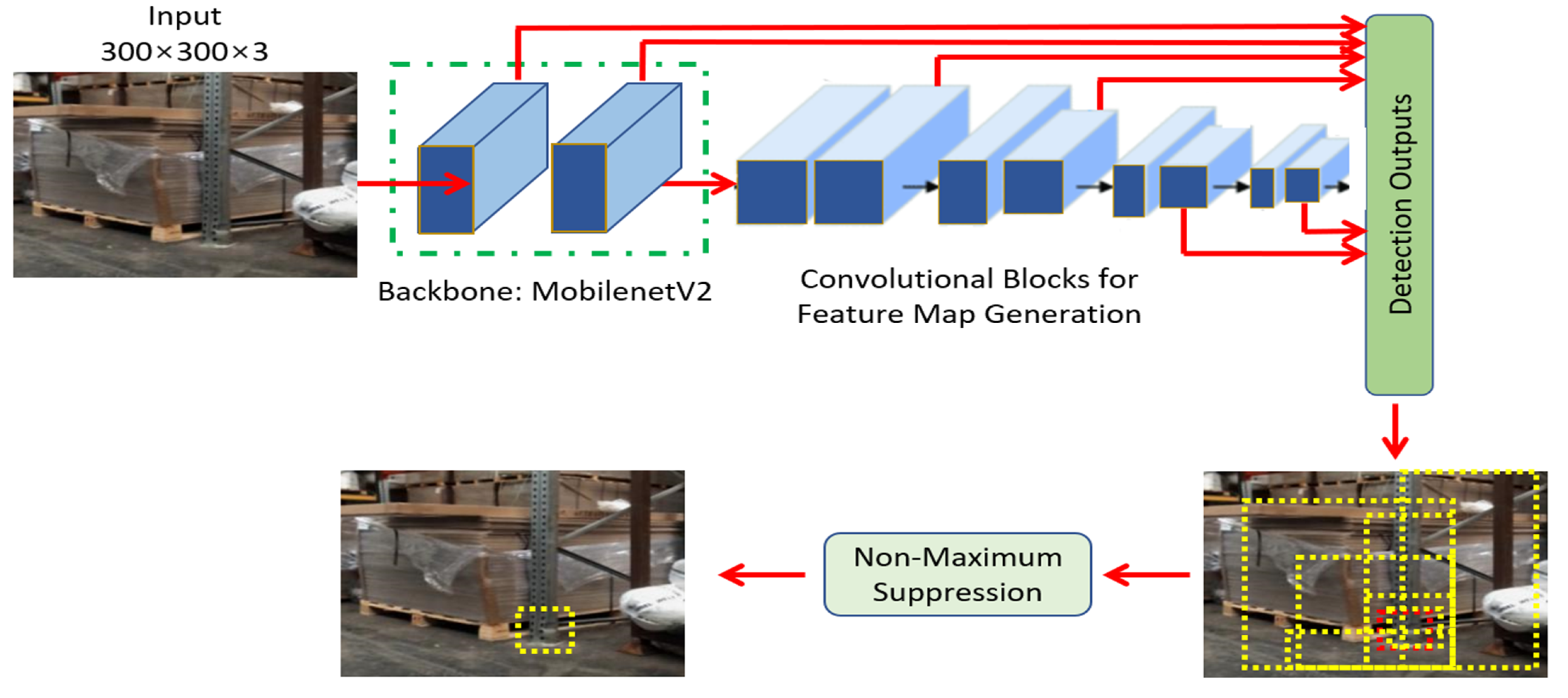

2.5. Examining the Single Shot Multibox Architecture

2.6. MobileNetV2 Coupling with SSD

2.7. System Architecture

2.8. Strategic Placement for Extended Coverage

3. Results

3.1. Hyper-Parameters

3.2. Model Evaluation

4. Discussion

4.1. Two Stage Detector Comparison

4.2. Proposed Solution vs. Similar Research Comparison

5. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Dong, C.-Z.; Catbas, F.N. A review of computer vision–based structural health monitoring at local and global levels. Struct. Health Monit. 2020, 20, 692–743.

2. Zhu, H.-H.; Dai, F.; Zhu, Z.; Guo, T.; Ye, X.-W. Smart sensing technologies and their applications in civil infrastructures 2016. J. Sens. 2016, 2016, 8352895.

3. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

4. Ran, H.; Wen, S.; Shi, K.; Huang, T. Stable and compact design of Memristive GoogLeNet neural network. Neurocomputing 2021, 441, 52–63.

5. Yang, Z. Classification of picture art style based on VGGNET. J. Phys. Conf. Ser. 2021, 1774, 012043.

6. Gajja, M. Brain tumor detection using mask R-CNN. J. Adv. Res. Dyn. Control Syst. 2020, 12, 101–108.

7. Liu, S. Pedestrian detection based on Faster R-CNN. Int. J. Perform. Eng. 2019, 15, 1792.

8. Fu, L.; Majeed, Y.; Zhang, X.; Karkee, M.; Zhang, Q. Faster R–CNN–based apple detection in dense-foliage fruiting-wall trees using RGB and depth features for robotic harvesting. Biosyst. Eng. 2020, 197, 245–256.

9. Litjens, G.; Kooi, T.; Bejnordi, B.E.; Setio, A.A.A.; Ciompi, F.; Ghafoorian, M.; Van Der Laak, J.A.; Van Ginneken, B.; Sánchez, C.I. A survey on deep learning in medical image analysis. Med. Image Anal. 2017, 42, 60–88.

10. Farinella, G.M.; Kanade, T.; Leo, M.; Medioni, G.G.; Trivedi, M. Special issue on assistive computer vision and robotics—Part I. Comput. Vis. Image Underst. 2016, 148, 1–2.

11. Hansen, L.; Siebert, M.; Diesel, J.; Heinrich, M.P. Fusing information from multiple 2D depth cameras for 3D human pose estimation in the operating room. Int. J. Comput. Assist. Radiol. Surg. 2019, 14, 1871–1879.

12. Ghosh, S.; Pal, A.; Jaiswal, S.; Santosh, K.C.; Das, N.; Nasipuri, M. SegFast-V2: Semantic image segmentation with less parameters in deep learning for autonomous driving. Int. J. Mach. Learn. Cybern. 2019, 10, 3145–3154.

13. Grigorescu, S.; Trasnea, B.; Cocias, T.; Macesanu, G. A survey of deep learning techniques for autonomous driving. J. Field Robot. 2019, 37, 362–386.

14. Grigorescu, S.; Cocias, T.; Trasnea, B.; Margheri, A.; Lombardi, F.; Aniello, L. Cloud2Edge elastic AI framework for prototyping and deployment of AI inference engines in autonomous vehicles. Sensors 2020, 20, 5450.

15. Grigorescu, S.M.; Trasnea, B.; Marina, L.; Vasilcoi, A.; Cocias, T. NeuroTrajectory: A Neuroevolutionary approach to local state trajectory learning for autonomous vehicles. IEEE Robot. Autom. Lett. 2019, 4, 3441–3448.

16. Cocias, T.; Razvant, A.; Grigorescu, S. GFPNet: A deep network for learning shape completion in generic fitted primitives. IEEE Robot. Autom. Lett. 2020, 5, 4493–4500.

17. Zubritskaya, I.A. Industry 4.0: Digital transformation of manufacturing industry of the Republic of Belarus. Digit. Transform. 2019, 3, 23–38.

18. Cao, B.; Wei, Q.; Lv, Z.; Zhao, J.; Singh, A.K. Many-objective deployment optimization of edge devices for 5G networks. IEEE Trans. Netw. Sci. Eng. 2020, 7, 2117–2125.

19. Sun, S.; Zheng, X.; Villalba-Díez, J.; Ordieres-Meré, J. Data handling in industry 4.0: Interoperability based on distributed ledger technology. Sensors 2020, 20, 3046.

20. Wang, X.; Hua, X.; Xiao, F.; Li, Y.; Hu, X.; Sun, P. Multi-object detection in traffic scenes based on improved SSD. Electronics 2018, 7, 302.

21. Hu, X.; Liu, Y.; Zhao, Z.; Liu, J.; Yang, X.; Sun, C.; Chen, S.; Li, B.; Zhou, C. Real-time detection of uneaten feed pellets in underwater images for aquaculture using an improved YOLO-V4 network. Comput. Electron. Agric. 2021, 185, 106135.

22. Neupane, D.; Kim, Y.; Seok, J.; Hong, J. CNN-based fault detection for smart manufacturing. Appl. Sci. 2021, 11, 11732.

23. Adibhatla, V.A.; Chih, H.-C.; Hsu, C.-C.; Cheng, J.; Abbod, M.F.; Shieh, J.-S. Defect detection in printed circuit boards using you-only-look-once convolutional neural networks. Electronics 2020, 9, 1547.

24. Li, Y.; Huang, H.; Xie, Q.; Yao, L.; Chen, Q. Research on a surface defect detection algorithm based on MobileNet-SSD. Appl. Sci. 2018, 8, 1678.

25. Farahnakian, F.; Koivunen, L.; Makila, T.; Heikkonen, J. Towards Autonomous Industrial Warehouse Inspection. In Proceedings of the 2021 26th International Conference on Automation and Computing (ICAC), Portsmouth, UK, 2–4 September 2021.

26. Rack Armour. The Rack Group. Available online: https://therackgroup.com/product/rack-armour/ (accessed on 25 May 2022).

27. RE RackBull®. Boplan, 25 September 2015. Available online: https://www.boplan.com/en/products/flex-impactr/rack-protection/re-rackbullr (accessed on 25 May 2022).

28. Warehouse Racking Impact Monitoring | RackEye from A-SAFE. A-SAFE. Available online: https://www.asafe.com/en-gb/products/rackeye/ (accessed on 25 May 2022).

29. Raspberry Pi 4 Model B. The Pi Hut. Available online: https://thepihut.com/collections/raspberry-pi/products/raspberry-pi-4-model-b (accessed on 25 May 2022).

| Data | Samples |
|---|---|
| Training | 19,600 |
| Validation | 78 |
| Test | 39 |

| Hyper-Parameter | Value |
|---|---|
| Batch Size | 24 |
| Steps | 10,000 |
| Learning Rate | 0.004 |
| Optimizer | RMSProp |

| Metric | Value |
|---|---|
| mAP@0.5 (IoU) | 92.7% |
| Initial Loss | 4.54 |
| Final Loss | 1.96 |
| Training Time | 1 h 54 m 41 s |
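
As a rough sanity check on the figures tabulated above, the following Python sketch recomputes the total sample count and the approximate number of passes over the training set implied by the batch size and step count. The `TrainingSetup` class and its field names are hypothetical, introduced only for illustration; the numbers are copied from the tables, and the epoch estimate assumes that every step consumes exactly one full batch of training images.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainingSetup:
    """Hypothetical container for the reported sample counts and settings."""
    train_samples: int = 19_600
    val_samples: int = 78
    test_samples: int = 39
    batch_size: int = 24
    steps: int = 10_000

    @property
    def total_samples(self) -> int:
        # Sum of the training, validation, and test splits.
        return self.train_samples + self.val_samples + self.test_samples

    @property
    def images_seen(self) -> int:
        # Assumption: each training step processes one full batch.
        return self.batch_size * self.steps

    @property
    def approx_epochs(self) -> float:
        # Number of passes over the training split implied by the settings.
        return self.images_seen / self.train_samples


setup = TrainingSetup()
print(f"total samples: {setup.total_samples}")
print(f"images seen during training: {setup.images_seen}")
print(f"approximate epochs: {setup.approx_epochs:.2f}")
```

Under these assumptions the splits sum to 19,717 images, which agrees with the dataset size quoted in the comparison with [25], and the reported settings correspond to roughly 12 passes over the training split.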

| Model | Input | GMAC (G) | Parameters (MB) |
|---|---|---|---|
| MobileNetV2-SSD | 300 × 300 | 2.88 | 22 |
| Faster-RCNN | 600 × 850 | 344 | 523 |
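
To make the gap between the two detectors concrete, the short sketch below divides the Faster-RCNN figures by the MobileNetV2-SSD figures from the table above. The dictionary layout and the `ratio` helper are illustrative assumptions rather than part of the original work.

```python
# Figures copied from the model comparison table; the structure is illustrative only.
models = {
    "MobileNetV2-SSD": {"gmac": 2.88, "size_mb": 22},
    "Faster-RCNN": {"gmac": 344, "size_mb": 523},
}


def ratio(metric: str) -> float:
    """Return how many times larger Faster-RCNN is than MobileNetV2-SSD."""
    return models["Faster-RCNN"][metric] / models["MobileNetV2-SSD"][metric]


gmac_ratio = ratio("gmac")
size_ratio = ratio("size_mb")
print(f"compute ratio: {gmac_ratio:.1f}x")
print(f"size ratio: {size_ratio:.1f}x")
```

On these numbers the two-stage detector needs roughly 119 times the multiply-accumulate operations and about 24 times the size listed under Parameters (MB). The two models are measured at different input resolutions (300 × 300 versus 600 × 850), so the ratios describe the configurations as reported, not the architectures at equal input size.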

| | Our Research | Research by [25] |
|---|---|---|
| Approach | Object Detection | Image Segmentation |
| Dataset Size | 19,717 | 75 |
| Detector | Single Shot | Two Stage |
| Architecture | MobileNetV2-SSD | Mask-RCNN-ResNet-101 |
| mAP@0.5 (IoU) | 92.7% | 93.45% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hussain, M.; Chen, T.; Hill, R. Moving toward Smart Manufacturing with an Autonomous Pallet Racking Inspection System Based on MobileNetV2. J. Manuf. Mater. Process. 2022, 6, 75. https://doi.org/10.3390/jmmp6040075
