A Feature-Aware Elite Imitation MARL for Multi-UAV Trajectory Optimization in Mountain Terrain Detection

Abstract

1. Introduction

- We formulate a joint trajectory optimization problem that simultaneously accounts for detection accuracy, UAV energy efficiency, and collision avoidance.

- We propose a generalized multi-agent reinforcement learning (MARL) framework incorporating the Elite Imitation (EI) mechanism and the Detection Quality Prediction Network (DQPN) structure, which together enhance training stability, accelerate convergence, and reduce inference latency in detection tasks.

- We propose a novel FA-EIMARL algorithm that rapidly adapts to dynamic task spaces based on extracted environmental features. In the experiments, it achieves a shorter average task completion time and a lower inference time than the MADDPG, MASAC, MATD3, and MAPPO baselines.

2. Related Works

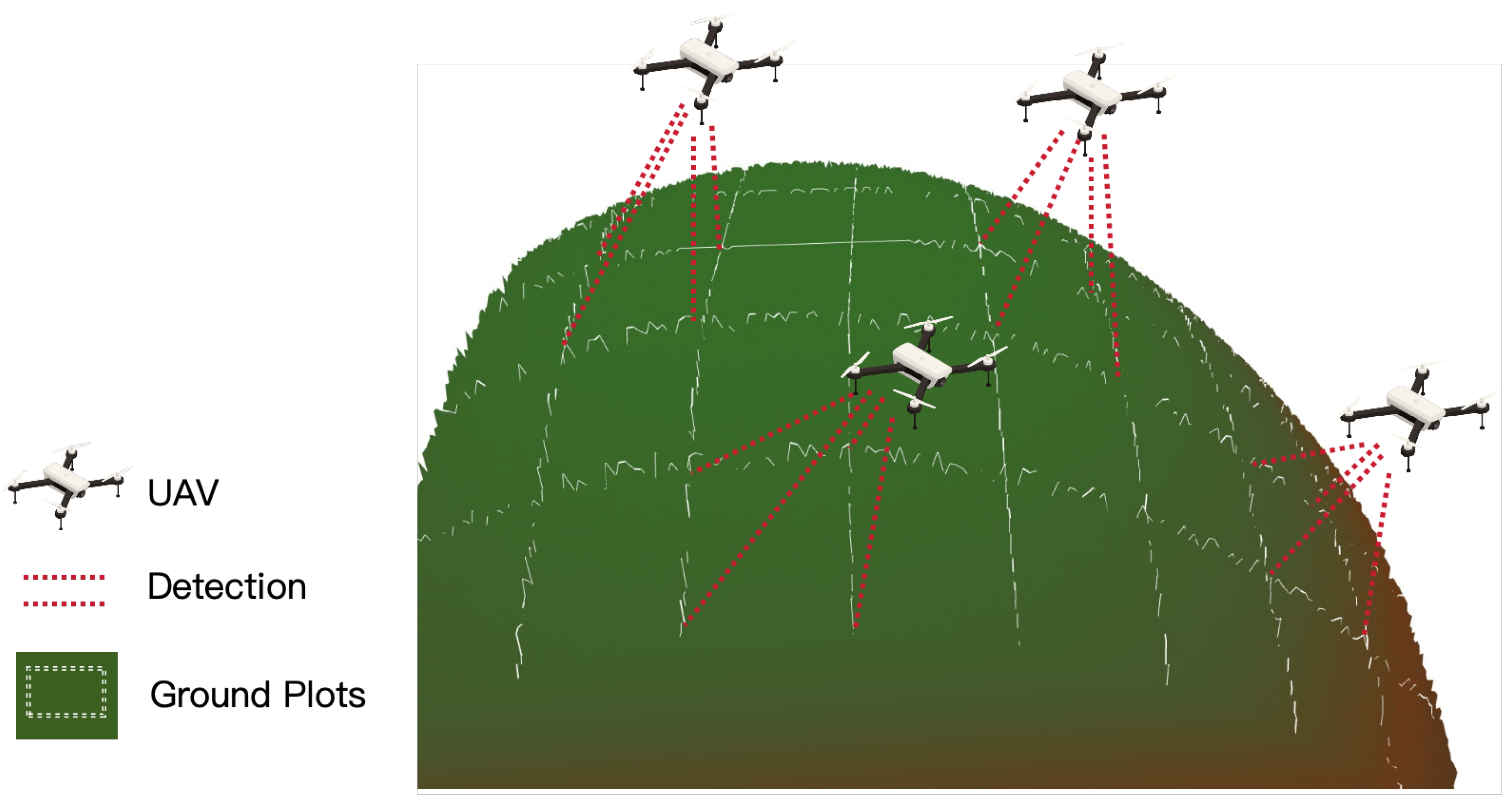

3. System Overview

3.1. Exemplary Scenario

3.2. Methodology

4. System Model

4.1. Energy Consumption

4.2. Communication

- Each UAV periodically broadcasts its current geographic position and its updated visitation map to nearby UAVs at a predefined broadcast interval, which improves mission efficiency and helps avoid collisions.

- Each UAV continuously transmits its detection data to the remote mountain base stations. Upon successful reception, the base stations return an acknowledgment message to the sending UAV. If no acknowledgment is received within the timeout period, the UAV initiates retransmission.

- If the retransmission attempts fail the maximum allowed number of times and no acknowledgment is received, the UAV sends a request to its nearest neighboring UAV. Once the neighbor confirms the request, a communication link is established between the two UAVs. The data is then relayed to the neighbor, which attempts to forward it to the base station.
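The acknowledgment, retransmission, and relay steps above can be sketched as a small control loop. This is only an illustration: the function name `deliver`, the callables `send_to_base` and `relay_via_neighbor`, and the parameter `max_retries` are hypothetical names, and the timeout handling is assumed to live inside `send_to_base`.

```python
from typing import Callable


def deliver(send_to_base: Callable[[], bool],
            relay_via_neighbor: Callable[[], bool],
            max_retries: int) -> str:
    """Return how the detection data reached the base station.

    send_to_base() models one transmission attempt and returns True when an
    acknowledgment arrives before the timeout (assumed behaviour).
    relay_via_neighbor() models handing the data to the nearest UAV, which
    then tries to forward it to the base station.
    """
    # One initial attempt plus up to max_retries retransmissions.
    for _ in range(1 + max_retries):
        if send_to_base():
            return "direct"
    # Every attempt timed out: ask the nearest UAV to relay the data.
    if relay_via_neighbor():
        return "relayed"
    return "failed"


# Example: the first two attempts time out, the third is acknowledged.
attempts = iter([False, False, True])
print(deliver(lambda: next(attempts), lambda: True, max_retries=3))
```

Keeping the relay as a fallback after the retry loop mirrors the order of the steps in the list: direct delivery is always tried first.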

4.3. UAV Detection

4.4. Problem Formulation

5. Feature-Aware Elite Imitation Multi-Agent Reinforcement Learning

5.1. Multi-Agent Markov Decision Process

- Observation of Each Agent: In the considered scenario, the observation of agent i describes UAV i and its surroundings: its current position, the position of the nearest UAV and the distance to it, the detection status of the PDs near UAV i, its remaining power, and the system coverage rate.

- Action of Each Agent: The action of agent i specifies the velocity of UAV i along the x, y, and z directions.

- Feature of the Environment: The feature space describes the scenario features that agent i obtains; it is introduced in Section 5.4.

- Reward of Each Agent: The reward is the environmental feedback that agent i receives after observing the environment, extracting features, and executing an action. Like the objective function Pro in Equation (32a), the reward guides UAV i to improve detection coverage, maintain a suitable flight altitude, reduce energy consumption, and avoid collisions. It is built from the terms given in Equations (18) to (31).

- Set of Actions: The joint action set collects the actions available to all agents at time step k.

- The Return of Each Agent: The objective of agent i’s policy is to maximize the expected cumulative reward, defined as the expected sum of rewards over time steps, with the reward at time step k weighted by the k-th power of the discount factor. The discount factor takes a value between 0 and 1 and balances immediate and future rewards. A value close to 1 gives future rewards nearly as much weight as immediate ones, which suits long-term strategies. Because this weight decreases as k increases, rewards received further in the future contribute progressively less to the return.
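The discounted return described in the last item can be illustrated with a few lines of Python. This is a sketch for a single finite episode: the function name `discounted_return` is hypothetical, and the default of 0.99 is the discount factor listed in the hyperparameter table.

```python
def discounted_return(rewards, gamma=0.99):
    """Sum of gamma**k * r_k over one finite episode (illustrative helper)."""
    total = 0.0
    for k, reward in enumerate(rewards):
        # The weight gamma**k shrinks as the time step k grows.
        total += (gamma ** k) * reward
    return total


# With gamma = 0.5 the weights are 1, 0.5, and 0.25.
print(discounted_return([1.0, 1.0, 1.0], gamma=0.5))
# With gamma = 0.99 a reward 100 steps ahead still keeps a weight of about 0.37.
print(0.99 ** 100)
```

The second print shows why a discount factor close to 1 suits long missions: distant rewards are attenuated but far from ignored.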

5.2. Detection Quality Prediction Network

Algorithm 1 Detection Quality Prediction Model Generation

5.3. Elite Imitation Mechanism

Algorithm 2 Elite Imitation Mechanism

5.4. Feature-Aware MARL

1. Initialize the parameters of the actor network, of the two critic networks, and of the two target critic networks. Initialize the replay buffer.

2. During each episode, let agent i interact with the environment and store its observations, actions, rewards, and features in the replay buffer.

3. Randomly sample one batch of transitions from the replay buffer.

4. Use the target critic networks and the current policy to compute the target value. Specifically, the target Q-values are calculated using the minimum of the two target critics, following the Clipped Double Q-learning strategy to mitigate overestimation bias.

5. Minimize the mean squared error (MSE) between the predicted Q-values and the target Q-values for the critic update. Both the primary and the secondary critic networks are updated by minimizing this loss, taken in expectation over the sampled batch.

6. Update the actor network by maximizing the Q-value estimated by the critic, so the actor loss is the negative of that estimate.

7. Softly update the target critic networks to ensure training stability. Instead of directly copying the weights, the target networks are updated using a weighted average of the current critic weights and the previous target weights, controlled by the soft update parameter.

8. Repeat steps 2–7 in every training iteration until the policy converges.
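The target computation, critic loss, and soft update in the steps above follow a common pattern, sketched below on plain Python numbers. This is a generic illustration of Clipped Double Q-learning and soft target updates, not the paper's implementation: the function names are hypothetical, scalars and lists stand in for network outputs and weights, and `tau=0.01` is the soft update parameter from the hyperparameter table.

```python
def clipped_double_q_target(reward, gamma, q1_next, q2_next):
    """Target value: reward plus the discounted minimum of two target critics."""
    return reward + gamma * min(q1_next, q2_next)


def mse_loss(predicted, targets):
    """Mean squared error between predicted and target Q-values."""
    return sum((p - t) ** 2 for p, t in zip(predicted, targets)) / len(targets)


def soft_update(target_weights, online_weights, tau=0.01):
    """New target weights: tau * online + (1 - tau) * previous target."""
    return [tau * w + (1.0 - tau) * wt
            for wt, w in zip(target_weights, online_weights)]


# Taking the minimum keeps the lower of the two estimates (2.0 instead of 4.0).
y = clipped_double_q_target(reward=1.0, gamma=0.5, q1_next=4.0, q2_next=2.0)
print(y)
print(mse_loss([1.0, 3.0], [0.0, 1.0]))
print(soft_update([0.0, 1.0], [1.0, 1.0], tau=0.25))
```

A small `tau` moves the target critics only slightly per update, which is what keeps the target values from chasing the online critics too quickly.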

Algorithm 3 Feature-Aware Elite Imitation Multi-Agent Reinforcement Learning

6. Simulation Results

6.1. Experimental Setup

- Reward (M1): Measures the average reward received per agent during one episode.

- Detection Coverage Rate (M2): Measures the overall detection quality across an episode.

- Task Completion Time (M3): Measures the overall detection mission time across an episode.

- Convergence Time (M4): Measures the number of iterations required for the algorithm to converge.

- Stability and Generalization (M5): Measures post-convergence reward fluctuations to assess robustness.

- Scalability (M6): Measures how inference time per time step changes with the number of UAVs.

- Policy Efficiency (M7): Measures the inference time of UAVs during the detection process.
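As a rough illustration of how two of these metrics could be computed from logged rewards, the sketch below takes M1 as the mean of the per-agent episode rewards and M5 as the population standard deviation of the reward curve after a given convergence point. Both definitions, the function names, and the `converged_at` index are assumptions made for this example; the text above does not give exact formulas.

```python
from statistics import mean, pstdev


def average_reward_per_agent(agent_episode_rewards):
    """M1 (assumed form): mean episode reward over all agents."""
    return mean(agent_episode_rewards)


def post_convergence_fluctuation(reward_curve, converged_at):
    """M5 (assumed form): standard deviation of rewards after convergence."""
    tail = reward_curve[converged_at:]
    return pstdev(tail)


# Hypothetical numbers, chosen only to exercise the two helpers.
print(average_reward_per_agent([2.0, 4.0]))
print(post_convergence_fluctuation([0.0, 0.0, 1.0, 3.0], converged_at=2))
```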

6.2. Training Performance

6.3. Scalability and Complexity Analysis

6.4. Ablation Study

6.5. Three-Dimensional Visualization

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Symbol | Definition | Value | Unit |
|---|---|---|---|
| | Task Space Left Edge | 0 | m |
| | Task Space Right Edge | 2000 | m |
| | Task Space Back Edge | 0 | m |
| | Task Space Front Edge | 2000 | m |
| | Task Space Bottom Edge | 50 | m |
| | Task Space Top Edge | 100 | m |
| | Maximum Mission Time | 2000 | s |
| | Detection Cycle | 10 | s |
| | Number of DPs in x Direction | 20 | - |
| | Number of DPs in y Direction | 20 | - |
| | Velocity Limit in Horizontal Direction | 10 | m/s |
| | Velocity Limit in Vertical Direction | 5 | m/s |
| | Discretized End Time | 2000 | - |
| | UAV Mass | 0.2 | kg |
| g | Gravitational Acceleration | 9.8 | m/s² |
| | Air Density | 1.225 | kg/m³ |
| | Drag Coefficient | 0.5 | - |
| | UAV Fuselage Area | 0.01 | m² |
| | Propeller Number | 4 | - |
| | Propeller Radius | 0.1 | m |
| | Mechanical Efficiency | 0.8 | - |
| | UAV Transmit Power | 17 | dBm |
| | Transmit Antenna Gain | 2 | dBi |
| | Receive Antenna Gain | 2 | dBi |
| | System Average Path Loss | 2 | - |
| | UAV Communication Frequency | 3.5 | GHz |
| | Boltzmann Constant | | - |
| | Temperature (Kelvin) | 298 | K |
| c | Speed of Light | | m/s |
| | Battery Capacity | 311,040 | J |
| | Static Power | 4 | W |
| V | Voltage | 5 | V |
| | Clock Frequency | | Hz |
| | Activity Factor | 0.5 | - |
| | Load Capacitance | 6.4 | nF |
| | Grid Number in Each Direction per DP | 100 | - |
| | Coverage Rate Threshold | 0.85 | - |
| | Distance Threshold | 150 | m |
| | FOV Threshold | 45 | ° |
| | Uniform Time Step | 1 | s |
| | UAV Number | 4, 6, 10, 12, 15 | - |

| Symbol | Definition | Value |
|---|---|---|
| | Penalty Parameter | 5 |
| | Penalty Parameter | |
| | Penalty Parameter | 50 |
| | Penalty Parameter | |
| | Penalty Parameter | 50 |
| | Weight Parameter | 20 |
| | Weight Parameter | |
| | Discount Factor | 0.99 |
| | Learning Rate for Actor | |
| | Learning Rate for Critic | |
| | DQPN Training Batch Size | 128 |
| | FEN Training Batch Size | 128 |
| | MARL Training Batch Size | 128 |
| | Replay Buffer Size | |
| | Maximum Training Epoch of DQPN | 100 |
| | Maximum Training Epoch of FEN | 1000 |
| | Maximum Training Episode of MARL | 10,000 |
| | Parameter Initial Value | 1 |
| | Parameter Initial Value | 10 |
| | Maximum Training Epoch | 100 |
| | Soft Update Parameter | 0.01 |
| | Feature Normalization Coefficient | 1 |
| | Scenario Number | 10 |
| | Position Number | 80 |

| Algorithm | Average Task Completion Time |
|---|---|
| MADDPG | 1842 |
| MASAC | 1927 |
| MATD3 | 1839 |
| MAPPO | >2000 |
| FA-EIMARL | 1236 |

| Agent Number | Inference Time |
|---|---|
| | 34.71 ms |
| | 35.36 ms |
| | 37.28 ms |
| | 37.42 ms |
| | 39.61 ms |

| Agent Number | Task Rewards |
|---|---|
| | 4541.68 |
| | 4356.57 |
| | 4069.32 |
| | 3932.35 |
| | 3614.98 |

| Approach | Operation Time |
|---|---|
| DQPN Prediction | 0.4442 ms |
| Direct Computation | 525.9 ms |

| Average Overhead | Computational Parameter | Total Extra Overhead |
|---|---|---|
| 85.09 ms | 2300 | 872.2 ms |

| Algorithm | Inference Time |
|---|---|
| MADDPG | 162.39 ms |
| MASAC | 320.21 ms |
| MATD3 | 172.74 ms |
| MAPPO | 59.79 ms |
| FA-EIMARL | 34.76 ms |
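The reported values can be put side by side with a little arithmetic. The sketch below only reuses numbers from the tables above; the variable and function names are hypothetical, MAPPO is left out of the completion-time comparison because its entry is given only as ">2000", and the completion times are treated as unitless because the table does not state a unit.

```python
completion_time = {"MADDPG": 1842, "MASAC": 1927, "MATD3": 1839, "FA-EIMARL": 1236}
inference_ms = {"MADDPG": 162.39, "MASAC": 320.21, "MATD3": 172.74,
                "MAPPO": 59.79, "FA-EIMARL": 34.76}
dqpn_ms, direct_ms = 0.4442, 525.9


def relative_reduction(baseline, proposed):
    """Fraction by which `proposed` is smaller than `baseline`."""
    return (baseline - proposed) / baseline


# Fastest baseline among those with a numeric completion time.
best_baseline_time = min(value for name, value in completion_time.items()
                         if name != "FA-EIMARL")
time_cut = relative_reduction(best_baseline_time, completion_time["FA-EIMARL"])

# How many times faster the DQPN prediction is than direct computation.
dqpn_speedup = direct_ms / dqpn_ms

# Inference time of the fastest baseline relative to FA-EIMARL.
mappo_ratio = inference_ms["MAPPO"] / inference_ms["FA-EIMARL"]

print(f"completion time reduction vs best baseline: {time_cut:.1%}")
print(f"DQPN speedup over direct computation: {dqpn_speedup:.0f}x")
print(f"MAPPO / FA-EIMARL inference time: {mappo_ratio:.2f}")
```

On these figures, FA-EIMARL finishes roughly a third sooner than the fastest numeric baseline (MATD3), the DQPN prediction is more than a thousand times faster than direct computation, and the quickest baseline at inference (MAPPO) takes about 1.7 times as long per step.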

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhou, Q.; Tao, Y.; Su, Q.; Tsukada, M. A Feature-Aware Elite Imitation MARL for Multi-UAV Trajectory Optimization in Mountain Terrain Detection. Drones 2025, 9, 645. https://doi.org/10.3390/drones9090645
