Abstract

Autonomous Underwater Vehicles (AUVs) equipped with vision systems face unique challenges in real-time environmental perception due to harsh underwater conditions and computational constraints. This paper presents a novel cloud–edge framework for real-time vision–language analysis in underwater drones using the Qwen2.5-VL model. Our system employs a uniform frame sampling mechanism that balances temporal resolution with processing capabilities, achieving near real-time analysis at 1 fps from 23 fps input streams. We construct a comprehensive data flow model encompassing image enhancement, communication latency, cloud-side inference, and semantic result return, which is supported by a theoretical latency framework and sustainable processing rate analysis. Simulation-based experimental results across three challenging underwater scenarios—pipeline inspection, coral reef monitoring, and wreck investigation—demonstrate consistent scene comprehension with end-to-end latencies near 1 s. The Qwen2.5-VL model successfully generates natural language summaries capturing spatial structure, biological content, and habitat conditions, even under turbidity and occlusion. Our results show that vision–language models (VLMs) can provide rich semantic understanding of underwater scenes despite challenging conditions, enabling AUVs to perform complex monitoring tasks with natural language scene descriptions. This work contributes to advancing AI-powered perception systems for the growing autonomous underwater drone market, supporting applications in environmental monitoring, offshore infrastructure inspection, and marine ecosystem assessment.

1. Introduction

The autonomous underwater drone market is experiencing rapid growth, driven by increasing demands for ocean exploration, environmental monitoring, and underwater infrastructure inspection. Autonomous Underwater Vehicles (AUVs) equipped with advanced perception systems are transforming marine research and industrial applications by enabling extended deployments in environments that are too dangerous or inaccessible for human divers [1].

Vision-based perception represents a critical capability for modern AUVs, enabling tasks ranging from coral reef health assessment (e.g., biological hotspot mapping using vision-guided AUVs [2]) to submarine pipeline inspection [3]. However, underwater imaging presents unique challenges distinct from terrestrial or aerial robotics. Light attenuation, wavelength-dependent absorption, suspended particles, and marine snow severely degrade image quality [4]. These factors often produce distorted imagery characterized by low contrast, a blue or green color cast, and haze, posing significant difficulties even for well-established enhancement algorithms [5].

Traditional pipelines for underwater imaging typically rely on GAN-based or physics-driven correction methods (e.g., multi-level attention GANs, attention-enhanced U-Net systems), followed by lightweight object detectors trained under domain-shift adaptation regimes. For example, recent multi-level attention GAN systems demonstrate improved visibility restoration under turbidity but still struggle with scene semantics under severe degradation [6]. Similarly, Adaptive Domain-Aware Object Detection (ADOD) mitigates domain shift via residual attention and domain classifiers but remains limited to RGB-based object spotting and lacks high-level semantic understanding [7]. While these pipelines offer low latency, they fall short in providing rich contextual descriptions that are increasingly demanded in applications such as reef health assessment or structural inspection.

In recent field deployments of underwater robots, semantic perception has remained limited to either specialized segmentation or rule-based guidance rather than full-fledged natural language understanding. For example, the CavePI system uses lightweight deep-learning models onboard to detect caveline markers and guide trajectory planning during real-world AUV cave exploration [8]. Similarly, UROSA (Underwater Robot Self-Organizing Autonomy) integrates distributed LLM agents for adaptive planning and mission control [9] but does not generate rich visual descriptions of scenes. Most existing works—such as AquaticCLIP—focus on offline zero-shot evaluations on static underwater datasets rather than embedding vision models into real-time robotic perception systems [10]. A broader survey of robot intelligence with large language and vision models also highlights that deployment in marine or resource-constrained field settings is still underexplored [11]. This clear gap—a lack of real-time deployment of general-purpose Vision-Language Models in underwater robots delivering semantic-level, language-accessible descriptions—motivates our present work.

Qwen2.5-VL, the latest flagship multimodal model from Alibaba Cloud, was chosen as the backbone for cloud inference due to its demonstrated capabilities in robust object recognition, fine-grained diagram and text understanding, long-video temporal reasoning, and multi-resolution dynamic visual processing [12]. Unlike robotics-specific VLA models, Qwen2.5-VL was trained from scratch on dynamic resolutions and includes native attention to spatial and temporal structures, which is critical when interpreting underwater scenes with distorted or partially occluded entities. Its strong performance on document layouts and chart data also implies greater object localization precision—advantageous for accurately describing marine structures. Additionally, although EdgeVLA and Gemini On-Device are lighter, they lack Qwen2.5-VL’s training across natural and synthetic modalities and cannot match its robustness across varied visual domains.

In this paper, we present a novel cloud–edge framework for real-time vision–language analysis in AUVs using Qwen2.5-VL. Our approach integrates underwater-specific image enhancement at the edge with cloud-based vision–language model (VLM) inference, achieving near real-time performance at 1 fps despite bandwidth and computational constraints. We validate our system through simulations, demonstrating robust scene understanding for tasks like pipeline inspection and coral reef monitoring.

The main contributions of this paper are as follows:

- We design a hybrid cloud–edge perception architecture that performs underwater-specific image enhancement and frame sampling at the edge, while leveraging the cloud for large-scale VLM inference.

- We present domain-specific latency and sustainable processing models for underwater scenarios, addressing bandwidth limitations and event-driven frame prioritization.

- We validate the proposed system through simulation experiments on multiple underwater scenes, demonstrating its ability to generate semantically rich natural language descriptions and quantifying its trade-offs in latency, accuracy, and robustness.

The remainder of this paper is structured as follows. Section 2 reviews existing work on underwater computer vision, robotic vision–language models, and cloud–edge frameworks. Section 3 presents our proposed cloud–edge architecture, including the edge-side enhancement and sampling modules, communication model, and cloud-based Qwen2.5-VL inference pipeline. Section 4 details the experimental methodology, including timing-based performance metrics, semantic evaluation criteria, and simulation settings. Section 5 reports results across three underwater scenarios, assessing both real-time responsiveness and semantic description quality. Section 6 discusses trade-offs in system latency, semantic fidelity, and future directions such as adaptive frame sampling and underwater domain adaptation. Finally, Section 7 concludes the paper and outlines future research opportunities for deploying large VLMs in underwater robotics.

2. Related Work

This section reviews three key areas underpinning our work: underwater vision and AUV perception, vision-language models in robotics, and cloud–edge frameworks. We emphasize the persistent challenges of degraded underwater imagery, the limitations of existing perception pipelines in AUVs, the underexplored deployment of VLMs in degraded domains, and gaps in cloud–edge coordination for real-time semantic understanding. Together, these gaps motivate our Qwen2.5-VL-based cloud–edge framework for underwater VLM deployment.

2.1. Underwater Vision and AUV Perception

Underwater environments pose severe challenges to vision-based perception, including wavelength-dependent light absorption, scattering-induced haze, and poor contrast, which hinder both human and machine abilities to interpret scenes accurately. Transformer-based object detection methods—such as HTDet—introduce hybrid architectures combining lightweight transformers with fine-grained feature pyramids to better detect feeble and small objects under extreme degradation, achieving ≈ 6 mAP improvement over CNN baselines, though they require high compute and struggle under turbidity [13]. Adaptive domain-aware frameworks like MARS (Multi-Scale Adaptive Robotics Vision) enhance domain generalization by embedding residual attention into YOLOv3 with multi-scale attention modules, enabling mAP ≈ 58% across diverse underwater scenarios—but still degrade in unseen environments and rely on extensive fine-tuning [14].

Physics-integrated enhancement models like SyreaNet blend synthetic and real data using image formation priors for better clarity; however, they primarily target visual enhancement and lack calibration for semantic-level tasks like object detection or VL reasoning [15]. Proposed methods such as FAFA employ frequency-aware self-supervised learning to refine 6D pose estimation in underwater scenes, but remain narrow in application and do not support generalized scene semantics or object-level understanding [16]. Few-shot adaptive detectors such as those by Han et al. enable fast domain adaptation with limited labels via a two-stage adaptation strategy and lightweight feature correction modules, yet they frequently underperform on object recall in unfamiliar environments due to constrained feature representation capacity [17].

While separate advances have improved enhancement, detection, and multi-modal perception, few approaches have integrated these components into a cohesive system. Our work fills this gap by combining domain-adapted visual enhancement, transformer-based detection, and large VLM inference in a real-time, resource-constrained cloud–edge AUV pipeline optimized for underwater bandwidth and turbidity.

2.2. Vision-Language Models in Robotics

The deployment of Vision-Language Models in robotics has rapidly advanced in recent years, enabling robots to interpret environments and act based on natural language descriptions. Brohan et al. [18] introduced RT-2, a model that successfully transfers web-scale vision-language knowledge to robotic control tasks, enabling instruction following and grounding; however, RT-2 is primarily trained in terrestrial and indoor domains, and its robustness degrades in visually degraded or domain-shifted environments common to marine settings. Models optimized for edge robotics include MobileVLM [19], which dynamically allocates computation depending on network quality. This framework shows promise in terrestrial environments but has not been tested or validated under underwater optical distortions or acoustic communication constraints.

Few studies have targeted underwater-specific VLM deployment. AquaticCLIP [10] evaluates zero-shot image–text alignment on underwater scenes, demonstrating robustness gains in turbidity, though accuracy still degrades under severe conditions. Zheng et al. [20] conducted a preliminary study using GPT-4V on marine imagery to assess its semantic generalization limits in aquatic environments. Despite these advances, there remains no real-time AUV deployment of large VLMs with full semantic fidelity under underwater constraints.

Overall, while the field of VLMs in robotics has advanced rapidly, key limitations remain: most models are untested in degraded visual domains, lightweight variants sacrifice semantic depth, and adaptive edge–cloud architectures have not been validated under underwater bandwidth and turbidity constraints. These gaps motivate our proposed framework, which integrates physics-informed enhancement, frame sampling, and cloud inference via Qwen2.5-VL—delivering robust semantic understanding in AUV environments.

2.3. Cloud-Edge Frameworks

Cloud–edge computing frameworks have emerged as a vital enabler for deploying AI models on resource-constrained platforms like AUVs, balancing onboard compute limitations with remote cloud inference. Recent research has demonstrated adaptive offloading strategies and bandwidth-aware scheduling mechanisms tailored to underwater edge scenarios [21,22].

SUNA (Sensor-aware Underwater Nalysis Architecture) introduces a bandwidth-adaptive offloading policy where only semantically rich frames are sent to the cloud, reducing transmission load by up to 60% while preserving detection accuracy. While no direct replication of SUNA exists in the literature, similar approaches have been explored. For instance, EP-ADTA proposes an edge-prediction-based adaptive data transfer strategy for underwater monitoring networks, dynamically adjusting sampling and transmission policies to reduce bandwidth usage while maintaining data fidelity [23].

Concurrently, edge-optimized VLA variants such as EdgeVLA aim to deliver high inference speed on resource-constrained devices [24]. Google’s Gemini Robotics models, including the recently released “Gemini Robotics On-Device”, provide on-device autonomy for bi-arm robots without cloud connectivity [25]. However, none of these models were trained on underwater datasets, nor were they designed to process or interpret severely degraded imagery, making them vulnerable to hallucinations or misinterpretations in turbid or dynamic lighting conditions common in marine environments.

In summary, while recent work has advanced frame selection and adaptive offloading, no existing framework combines underwater-specific image enhancement, frame sampling, and large-scale VLM inference in a real-time cloud–edge architecture for AUVs. This gap motivates our proposed system.

3. System Architecture

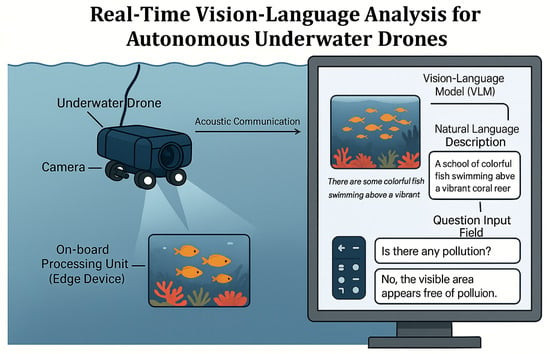

Our proposed system enables real-time vision–language analysis for AUVs through a carefully orchestrated cloud–edge architecture. Figure 1 illustrates the complete simulation pipeline from underwater image capture to natural language scene description generation.

Figure 1.

The camera on the simulated AUV records the video and transmits it to the cloud for VLM inference via the optical-fiber communication model. The edge device performs the initial processing via the processing unit on board.

3.1. Edge Device Deployment

The edge device on the AUV performs two critical functions: frame sampling and real-time image enhancement. Frame Sampling precedes image enhancement to improve the accuracy of detecting significant scene changes. This design allows the system to exploit the complementary strengths of edge and cloud computing by ensuring that only the most informative and high-quality frames are transmitted for cloud-based vision–language analysis.

3.1.1. Uniform Frame Sampling

To manage the high-frame-rate (23 fps) video stream within our simulated underwater testing and match the near-real-time latency (≈1 s per processed frame), we adopt a uniform sampling strategy that selects exactly one frame per second (1 fps). This baseline approach is used widely in recent large-video understanding evaluations due to its simplicity, reproducibility, and compatibility with fixed-context vision–language model limits.

In our experiments, uniformly sampled frames correspond to indices 0, 23, 46, … from the 23 fps source video stream, i.e., frames captured at timestamps of approximately 0.0 s, 1.0 s, 2.0 s, … in the video timeline. This deterministic temporal spacing ensures consistent and reproducible evaluation across all recorded scenarios, with minimal visual overlap between adjacent samples.
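The index arithmetic behind this sampling scheme can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the function names `uniform_sample_indices` and `sample_timestamps` are hypothetical, and the sketch assumes the source frame rate is an integer multiple of the target rate.

```python
def uniform_sample_indices(num_frames: int, source_fps: int = 23, target_fps: int = 1) -> list[int]:
    """Return the indices of frames kept when downsampling source_fps to target_fps."""
    if source_fps % target_fps != 0:
        raise ValueError("sketch assumes source_fps is a multiple of target_fps")
    step = source_fps // target_fps  # 23 source frames per kept frame at 1 fps
    return list(range(0, num_frames, step))


def sample_timestamps(indices: list[int], source_fps: int = 23) -> list[float]:
    """Map frame indices back to timestamps (seconds) on the video timeline."""
    return [i / source_fps for i in indices]


if __name__ == "__main__":
    kept = uniform_sample_indices(num_frames=70)
    print(kept)                     # [0, 23, 46, 69]
    print(sample_timestamps(kept))  # [0.0, 1.0, 2.0, 3.0]
```

Because the spacing is fixed, the same video always yields the same sampled frames, which is the reproducibility property the text relies on.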

We adopt uniform sampling because it simplifies the processing logic and is consistent with several mainstream video VLM evaluation frameworks. The specific reasons are as follows:

- Standard Evaluation Practice: Recent video-QA and long-video VLM benchmarks such as Gemini 1.5, BOLT, and LSDBench use 1 fps uniform sampling as their default baseline input strategy [26,27,28].

- Reproducibility: Deterministic spacing ensures experiment repeatability and straightforward comparison across models—important when deploying models with fixed token budgets.

- Budget Constraints: Uniform sampling avoids adaptive complexity and fits the input limits of existing video VLM APIs, which often accept at most 256 image inputs per long-context evaluation [26].

This design allows us to focus purely on edge–cloud processing behavior, ensuring latency and accuracy metrics are not confounded by dynamic frame-selection heuristics. It delivers stable and interpretable evaluation for downstream semantic description performance.

3.1.2. Real-Time Image Enhancement

Underwater raw frames often suffer from severe color cast, low contrast, and haze resulting from wavelength-selective absorption, scattering by suspended particles, and backscattering effects. Since Qwen2.5-VL relies heavily on visual cues for accurate semantic reasoning, these artefacts can drastically degrade its performance. To remedy this, we employ a lightweight three-stage enhancement pipeline on the AUV’s edge processor, maintaining high frame rates and modest computational footprint:

- Color Correction, to compensate for differential depth-dependent attenuation (especially red/green loss). We adopt a lightweight, end-to-end correction inspired by DeepSeeColor (Jamieson et al., 2023), which achieves near 60 Hz per-frame processing on AUV-like hardware and restores color fidelity with minimal latency [29]. It uses a simplified physical imaging model embedded in a shallow neural network layer to scale spectral channels appropriately.

- Backscatter Reduction, based on a Dark-Channel-Prior (DCP) variant adapted for underwater use [30]. This step estimates veiling light over small patches and subtracts it using an efficient transmission estimator, removing most haze while preserving structural detail. Its runtime is linear in image size and adds only a few milliseconds per frame.

- White Balance Adjustment, to normalize residual color bias and equalize global illumination. This is implemented via the HSV global-adjust block from UIEC2-Net [31], which applies learned piece-wise linear gain curves to hue, saturation, and brightness, correcting over- or under-represented tones efficiently and without iterative tuning.

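Functionally, we summarize the entire pipeline as follows:

$$I_{\text{enh}} = f_{\text{wb}}\big(f_{\text{bs}}\big(f_{\text{cc}}(I_{\text{raw}})\big)\big)$$

where $f_{\text{cc}}$, $f_{\text{bs}}$, and $f_{\text{wb}}$ represent the color correction, backscatter reduction, and white balance functions, respectively, $I_{\text{raw}}$ is the raw frame, and $I_{\text{enh}}$ is the enhanced frame. This choice of lightweight, deterministic methods over compute-intensive alternatives like GANs [32] ensures efficiency while maintaining sufficient image quality for Qwen2.5-VL inference.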

This combination of physically motivated color correction, prior-based dehazing, and simple normalization yields superior semantic retention and processing speed compared to fully data-driven models—especially important in resource-constrained underwater settings. Future expansions may test hybrid CNN backbones (e.g., variants of LAFFNet or U-Shape Transformer designs) for enhanced robustness without sacrificing real-time feasibility [33,34].
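To make the three-stage structure concrete, the sketch below chains three simple stand-in stages in pure Python. It illustrates only the composition order (color correction, then backscatter reduction, then white balance). The stage bodies are deliberately crude substitutes and are not DeepSeeColor, the underwater DCP variant, or the UIEC2-Net HSV block: fixed per-channel gains, subtraction of a global per-channel minimum, and gray-world balancing are assumptions chosen for brevity, as are all function names and numeric values.

```python
from typing import Callable, List, Tuple

Pixel = Tuple[float, float, float]  # (R, G, B), each in [0, 1]
Image = List[List[Pixel]]           # row-major list of pixel rows


def _clip(v: float) -> float:
    return min(1.0, max(0.0, v))


def _map_pixels(img: Image, fn: Callable[[Pixel], Pixel]) -> Image:
    return [[fn(p) for p in row] for row in img]


def color_correct(img: Image, gains: Pixel = (1.6, 1.1, 1.0)) -> Image:
    """Stand-in for learned color correction: fixed per-channel gains (hypothetical values)."""
    return _map_pixels(img, lambda p: tuple(_clip(c * g) for c, g in zip(p, gains)))


def reduce_backscatter(img: Image, strength: float = 0.9) -> Image:
    """Crude veiling-light removal: subtract a fraction of each channel's global minimum."""
    pixels = [p for row in img for p in row]
    veil = tuple(strength * min(p[k] for p in pixels) for k in range(3))
    return _map_pixels(img, lambda p: tuple(_clip(c - v) for c, v in zip(p, veil)))


def white_balance(img: Image) -> Image:
    """Gray-world balance: scale channels so their means match the overall mean."""
    pixels = [p for row in img for p in row]
    means = [sum(p[k] for p in pixels) / len(pixels) for k in range(3)]
    gray = sum(means) / 3.0
    gains = [gray / m if m > 0 else 1.0 for m in means]
    return _map_pixels(img, lambda p: tuple(_clip(c * g) for c, g in zip(p, gains)))


def enhance(img: Image, stages=(color_correct, reduce_backscatter, white_balance)) -> Image:
    """Apply the stages in order: white_balance(reduce_backscatter(color_correct(img)))."""
    for stage in stages:
        img = stage(img)
    return img


if __name__ == "__main__":
    bluish = [[(0.1, 0.4, 0.5), (0.2, 0.5, 0.6)]]  # tiny synthetic blue-green frame
    print(enhance(bluish))
```

Keeping each stage as an independent function with the same signature makes it straightforward to swap in a learned or physics-based replacement for any one of them without touching the others.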

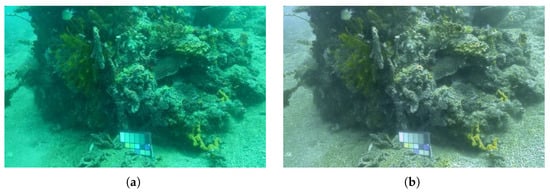

Figure 2 shows an example of the effect of image enhancement.

Figure 2.

(a) Original image of an underwater coral reef, with a pronounced blue–green color cast. (b) The same image after color correction, backscatter reduction, and white balance adjustment; it is clearer and the blue–green cast is significantly reduced.

3.2. Communication Layer

We simulate a short-range fiber-optic link to achieve high-bandwidth, low-latency transport suitable for real-time vision–language analysis.

The Gaussian latency model represents an optimistic best case under stable short-range optical conditions. In real deployments, especially over acoustic or hybrid optical–acoustic links, multipath, fading, and temporary dropouts are common. Our pipeline therefore includes the reliability mechanisms described in Section 3.2.2 (adaptive JPEG quality scaling, bounded frame buffering with store-and-forward, exponential backoff, and immediate resume with the latest frames after reconnection), which prioritize up-to-date semantics.

3.2.1. Bandwidth Requirements and Transmission Analysis

Our simulation environment focuses on short-range underwater operations within 50 m distances, representing typical inspection scenarios for pipeline surveys, coral reef monitoring, and detailed structural examinations. This operational constraint enables the exploitation of high-performance optical fiber communication capabilities available at short ranges.

Current underwater optical fiber technology demonstrates reliable performance at short distances up to 50 m. Recent studies have shown that blue–green LED systems can achieve sustained data rates of 5–10 Mbps at 50 m range under typical underwater conditions [35,36]. Advanced systems using laser diodes can reach 15–20 Mbps in clear water environments with optimized configurations [37,38]. For our analysis, we adopt a conservative 5 Mbps sustained throughput representing reliable performance with commercial off-the-shelf components at 50 m operational distance.

Standard AUV imaging systems capture 512 × 512 to 1280 × 920 resolution frames for underwater inspection and monitoring applications. When compressed with JPEG at a quality factor of 80, typical frame sizes are approximately 60 KB per frame. This setting provides good image quality while keeping file sizes reasonable for transmission, a common balance between visual fidelity and data efficiency in AUV operations.

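With 60 KB frames and 5 Mbps available optical fiber capacity at our 50 m operational distance, the pure data transmission time becomes:

$$T_{\text{tx}} = \frac{60 \times 10^{3}\ \text{B} \times 8\ \text{bit/B}}{5 \times 10^{6}\ \text{bit/s}} = 96\ \text{ms}.$$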

In our short-range underwater optical fiber link (≤50 m), the one-way communication delay is composed of many small, independent variations, including per-packet serialization jitter, minor OS scheduling delays, and negligible propagation time. These components are stable, symmetric, and of comparable magnitude, so by the central limit theorem their sum is well approximated by a Gaussian distribution. Field measurements in similar low-loss, low-utilization fiber systems consistently show unimodal, near-symmetric delay histograms without heavy tails, supporting the normality assumption.

The mean delay used here, $\mu \approx 108$ ms, comes from the transmission analysis: starting from an ideal payload serialization time of 96 ms for a 60 KB frame at 5 Mbps, adding realistic protocol overhead (packet headers, 10% FEC redundancy, and inter-packet gaps) yields approximately 108 ms. Under this configuration, the observed jitter is summarized by its standard deviation $\sigma$. Therefore, the communication delay model can be expressed as follows:

$$T_{\text{comm}} \sim \mathcal{N}(\mu, \sigma^{2}), \quad \mu \approx 108\ \text{ms},$$

which provides an accurate and analytically convenient representation for subsequent system-level performance analysis.
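The arithmetic in this subsection can be checked with a short Python sketch that computes the ideal serialization time and draws delays from a Gaussian model. The 60 KB frame size, 5 Mbps rate, and 108 ms mean are the figures quoted in the text (taking 1 KB as 1000 bytes). The jitter value `SIGMA_MS` is a placeholder assumption for illustration only and is not a value from the paper, and the function names are hypothetical.

```python
import random

FRAME_BYTES = 60_000   # 60 KB frame, taking 1 KB = 1000 bytes
LINK_BPS = 5_000_000   # 5 Mbps sustained throughput
MEAN_DELAY_MS = 108.0  # mean one-way delay quoted in the text
SIGMA_MS = 5.0         # PLACEHOLDER jitter; not a value from the paper


def serialization_time_ms(frame_bytes: int, link_bps: float) -> float:
    """Ideal time to push the payload onto the link, in milliseconds."""
    return frame_bytes * 8 / link_bps * 1000.0


def sample_comm_delay_ms(rng: random.Random,
                         mean_ms: float = MEAN_DELAY_MS,
                         sigma_ms: float = SIGMA_MS) -> float:
    """Draw one delay from the Gaussian model, truncated at zero."""
    return max(0.0, rng.gauss(mean_ms, sigma_ms))


if __name__ == "__main__":
    ideal = serialization_time_ms(FRAME_BYTES, LINK_BPS)
    print(f"ideal serialization: {ideal:.1f} ms")          # 96.0 ms
    print(f"with 10% FEC only:   {ideal * 1.10:.1f} ms")   # 105.6 ms
    rng = random.Random(0)  # fixed seed for repeatable simulation runs
    delays = [sample_comm_delay_ms(rng) for _ in range(10_000)]
    print(f"simulated mean delay: {sum(delays) / len(delays):.1f} ms")
```

The 10% FEC redundancy alone brings the 96 ms payload time to about 105.6 ms; the remaining gap to 108 ms is what the text attributes to packet headers and inter-packet gaps.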

3.2.2. Communication Reliability and Failure Handling

Although the optical fiber communication delay model assumes a Gaussian distribution based on simulation results, we acknowledge that real-world channels may exhibit additional impairments such as increased jitter, rare packet loss, or temporary disconnections. In simulation, these effects can be reproduced by introducing controlled noise and packet loss into the link layer, allowing us to evaluate robustness without hardware dependencies.

The Gaussian assumption remains effective for the bulk of delay samples because serialization time dominates latency and propagation delay is deterministic. In cases where link degradation occurs (e.g., simulated packet loss or bit errors), additional variance appears as infrequent outliers rather than a persistent shift in the mean.

Our system implements a multi-layered approach to detect and recover from communication failures within the simulated environment. At the protocol level, adaptive timeout mechanisms with exponential backoff are employed, starting with a baseline timeout of

When consecutive transmission failures exceed a threshold of three attempts, the system triggers a graceful degradation protocol that lowers the JPEG quality factor from 80 to 60, thereby reducing per-frame size and alleviating congestion. For prolonged failures lasting more than 15 s, the system enters a store-and-forward mode, buffering up to ten enhanced frames along with associated semantic metadata locally while continuously attempting to re-establish communication.

In the event of link restoration, the system discards all buffered video frames to avoid playback backlog and immediately resumes receiving the latest frames transmitted over the restored link. This design choice prioritizes real-time situational awareness over delayed imagery, ensuring that operators and automated analysis modules receive the most current visual data. Meanwhile, semantic metadata captured during the disconnection period is preserved for subsequent analysis, maintaining mission-critical information integrity even in the presence of severe simulated channel impairments.
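The recovery policy described in this subsection can be sketched as a small state holder in Python. The thresholds (three failures, 15 s, ten frames, quality 80 to 60) come from the text; everything else is an assumption made for illustration: the class and method names, the baseline and maximum timeout values (the text does not give them here), the reading of "exceed three attempts" as strictly more than three, and the reset of JPEG quality to 80 once the link is restored.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Optional

FAILURE_THRESHOLD = 3    # degrade after more than three consecutive failures
OUTAGE_SECONDS = 15.0    # enter store-and-forward after this long
BUFFER_CAPACITY = 10     # keep at most ten enhanced frames
NORMAL_QUALITY, DEGRADED_QUALITY = 80, 60
BASE_TIMEOUT_S = 0.5     # HYPOTHETICAL baseline timeout
MAX_TIMEOUT_S = 8.0      # HYPOTHETICAL backoff cap


@dataclass
class LinkManager:
    jpeg_quality: int = NORMAL_QUALITY
    consecutive_failures: int = 0
    outage_started_at: Optional[float] = None
    store_and_forward: bool = False
    # deque(maxlen=...) silently drops the oldest frame when full
    frames: deque = field(default_factory=lambda: deque(maxlen=BUFFER_CAPACITY))
    metadata_log: list = field(default_factory=list)

    def timeout_s(self) -> float:
        """Exponential backoff: double the timeout per consecutive failure, capped."""
        return min(MAX_TIMEOUT_S, BASE_TIMEOUT_S * 2 ** self.consecutive_failures)

    def on_failure(self, now: float, frame: bytes, metadata: dict) -> None:
        self.consecutive_failures += 1
        if self.outage_started_at is None:
            self.outage_started_at = now
        if self.consecutive_failures > FAILURE_THRESHOLD:
            self.jpeg_quality = DEGRADED_QUALITY
        if now - self.outage_started_at > OUTAGE_SECONDS:
            self.store_and_forward = True
        if self.store_and_forward:
            self.frames.append(frame)
            self.metadata_log.append(metadata)

    def on_link_restored(self) -> list:
        """Discard stale frames, keep metadata, and return to normal operation."""
        self.frames.clear()
        preserved, self.metadata_log = self.metadata_log, []
        self.consecutive_failures = 0
        self.outage_started_at = None
        self.store_and_forward = False
        self.jpeg_quality = NORMAL_QUALITY  # assumption: quality is restored
        return preserved
```

Using a bounded `deque` gives the "discard older frames" behavior for free, while the metadata list is kept separately so that it survives the frame flush on reconnection.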

3.3. Cloud Deployment

The cloud server is responsible for executing computationally demanding vision–language models in inference tasks that cannot be efficiently performed on resource-constrained AUV platforms. This section details the cloud processing pipeline, which consists of three main components: image data reception and preprocessing, the Qwen2.5-VL inference service, and semantic reasoning for output generation.

3.3.1. Image Data Reception and Preprocessing

The cloud receives compressed frames from the edge via a simulated communication channel. Upon reception, a Python-based preprocessing module decompresses the frames and standardizes them to meet Qwen2.5-VL’s input requirements, resizing to 512 × 512 resolution and normalizing pixel values to [0, 1].

This preprocessing ensures compatibility with the model’s architecture, leveraging the edge’s prior enhancement to maintain image clarity. The use of lightweight preprocessing aligns with the need for rapid processing in bandwidth-constrained underwater scenarios.

3.3.2. Qwen2.5-VL Inference Service Architecture

The inference service consists of an analysis pipeline implemented in Python 3.10 and integrated with the Qwen2.5-VL model via its API. The service receives preprocessed frames and queues them for inference. A separate analysis worker thread pulls frames from the queue and sends them to the Qwen2.5-VL API. This decoupled producer-consumer design allows the capture process to run at the native frame rate while analysis operates at its own pace, with the queue buffering frames in between. This architecture was chosen over on-device VLM solutions like MobileVLM [39] due to Qwen2.5-VL’s superior semantic reasoning, justifying cloud offloading for complex underwater tasks.
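A minimal sketch of such a producer-consumer arrangement, using only the Python standard library, is shown below. The actual Qwen2.5-VL API call is not reproduced: `describe_frame` is a hypothetical stub standing in for it, and the frame dictionary layout, the `None` shutdown sentinel, and the function names are likewise assumptions for illustration.

```python
import queue
import threading
from typing import Callable, Iterable, List, Tuple


def describe_frame(frame: dict) -> str:
    """HYPOTHETICAL stub standing in for the remote VLM inference call."""
    return f"description of frame {frame['index']}"


def analysis_worker(frames: "queue.Queue", results: List[Tuple[int, str]],
                    infer: Callable[[dict], str]) -> None:
    """Consumer: pull frames until the None sentinel arrives."""
    while True:
        frame = frames.get()
        if frame is None:  # sentinel: producer has finished
            frames.task_done()
            break
        results.append((frame["index"], infer(frame)))
        frames.task_done()


def run_pipeline(frame_indices: Iterable[int],
                 infer: Callable[[dict], str] = describe_frame) -> List[Tuple[int, str]]:
    """Producer: enqueue frames without waiting for inference to finish."""
    frames: "queue.Queue" = queue.Queue()  # unbounded, so put() never blocks capture
    results: List[Tuple[int, str]] = []
    worker = threading.Thread(target=analysis_worker,
                              args=(frames, results, infer), daemon=True)
    worker.start()
    for idx in frame_indices:
        frames.put({"index": idx, "jpeg": b""})  # payload left empty in this sketch
    frames.put(None)
    worker.join()
    return results


if __name__ == "__main__":
    for index, text in run_pipeline([0, 23, 46]):
        print(index, text)
```

With a single worker thread the results arrive in submission order. An unbounded queue keeps the producer from stalling, at the cost of unbounded memory if inference falls behind; a bounded queue that drops the oldest frame would be an alternative when freshness matters more than completeness.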

The choice of deploying Qwen2.5-VL on the cloud rather than on the edge is driven by two considerations: (i) the model’s size (72 billion parameters) and computational demand, which exceed the typical power and memory limits of embedded AUV hardware; (ii) the flexibility to dynamically scale inference resources based on mission requirements. Furthermore, the cloud deployment allows seamless integration of future model upgrades (e.g., domain-specific fine-tuned versions or model compression variants) without changing the edge hardware.

3.3.3. Semantic Inference and Output Generation

Qwen2.5-VL performs semantic inference on preprocessed frames to generate natural language descriptions, capturing contextual details critical for underwater applications. The model’s multimodal reasoning and dynamic resolution handling enable robust interpretation of underwater scenes despite optical challenges. After inference, textual descriptions are both transmitted back to the edge for real-time use (e.g., anomaly alerts for pipeline damage) and logged for post-mission analysis.

Security is ensured through simulated AES-256 encryption for data transmission, with differential privacy (Gaussian noise, ) applied to metadata to protect sensitive information, such as infrastructure details. In intermittent connectivity scenarios, the edge buffers up to 10 frames, discarding older frames to prioritize recent data. This approach optimizes performance and security in the simulated environment while addressing real-world deployment considerations.

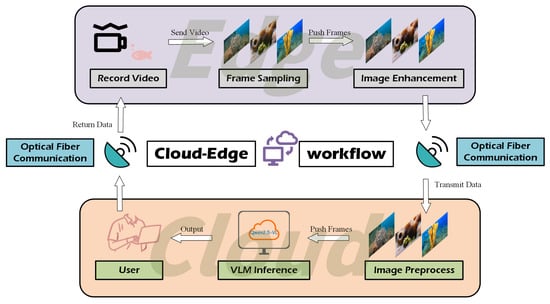

3.4. Workflow of Pipeline

To provide a comprehensive understanding of how visual information propagates through the system, we describe the data flow and orchestration of the proposed edge–cloud pipeline. This end-to-end data stream encompasses the capture, enhancement, sampling, transmission, inference, and result dissemination processes, ensuring both real-time performance and semantic fidelity.

The pipeline begins with the underwater camera embedded on the simulated AUV capturing high-frame-rate video (23 fps). Each frame is passed to the onboard edge device for pre-processing, which applies our lightweight enhancement function described in Section 3.1.2. The result is an enhanced image $I_{\text{enh}}$.

Subsequently, a uniform frame sampling module selects one frame per second (1 fps) from the enhanced stream. The selected frame is compressed using JPEG with a quality factor of 80, then queued for transmission over the simulated optical link. The communication layer introduces a stochastic delay modeled in Section 3.2.

Upon reception at the cloud server, the image is decompressed, resized, and normalized before being sent to the Qwen2.5-VL inference service. The model generates a semantic description of the scene, capturing objects, relationships, and contextual features. This textual output is returned to the AUV edge device and optionally logged for offline mission analysis.

Figure 3 illustrates this data flow as a time-sequenced pipeline, showing the discrete modules involved and their interactions. Each step is designed to minimize latency while maximizing the informativeness of the transmitted data.

Figure 3.

End-to-end data flow diagram showing the progression from raw frame acquisition to semantic text output. Each module contributes to the low-latency and high-precision requirements of real-time underwater operation.

This orchestrated pipeline demonstrates the feasibility of aligning vision–language model inference with bandwidth-constrained underwater operations. It achieves near real-time semantic understanding by selectively forwarding enhanced, informative frames and leveraging cloud-scale resources.

4. Methodology

In this section, we describe the experimental methodology used to evaluate the proposed edge–cloud architecture. We begin by defining the timing parameters captured at key stages of the frame processing pipeline, which form the basis for computing performance metrics. We then present the key performance indicators (KPIs) derived from these timestamps, followed by an analysis of the system’s sustainable processing rate under continuous video input. Finally, we outline the semantic description evaluation protocol used to assess VLM output quality.

4.1. Performance Analysis and Metrics

To characterize the timing behavior of the proposed edge–cloud processing pipeline, we define the timing parameters recorded via timestamps during the frame processing workflow, derive key performance indicators (KPIs) from these timestamps, and present the sustainable processing rate of the system.

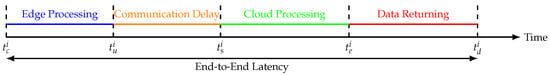

4.1.1. Timing Parameters of the Frame Processing Pipeline

During experiments, timestamps were logged at key stages of the pipeline for each frame. These timestamps are listed in Table 1. By computing time differences between these timestamps, we can derive processing delays and latencies under different system configurations.

Table 1.

Timing parameters of the frame processing pipeline.

These timestamps together form the basis for subsequent key performance indicators and sustainable processing rate analysis. Figure 4 illustrates the sequence of events and timing relationships among the recorded timestamps, highlighting how each component delay is derived from timestamp differences. The horizontal axis represents time, and vertical dashed lines indicate the five key moments: frame capture, upload initiation, cloud processing start, processing end, and result reception. Colored segments represent different stages of delay, corresponding to edge delay, communication delay, cloud inference delay, and data returning delay.

Figure 4.

Timing diagram showing key timestamps and derived performance metrics.

4.1.2. Timing-Based Performance Metrics

To quantify the system’s responsiveness under underwater operational constraints, we define the following timing-based KPIs, grounded in timestamp logs collected during frame processing. These metrics isolate delays due to edge operations, network transfer, inference, and overall system performance, collectively characterizing inference efficiency, responsiveness, and stability.

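- Edge Preprocessing Time: $T_i^{\mathrm{edge}} = t_i^{\mathrm{up}} - t_i^{\mathrm{cap}}$, where $t_i^{\mathrm{cap}}$ and $t_i^{\mathrm{up}}$ denote the timestamps when frame $i$ is captured and when its upload to the cloud begins, respectively. This reflects the time spent on edge-based operations (image enhancement, compression, etc.) before offloading.

- Communication Delay: $T_i^{\mathrm{comm}} = t_i^{\mathrm{start}} - t_i^{\mathrm{up}}$, where $t_i^{\mathrm{start}}$ marks the moment the cloud server begins processing frame $i$. This captures the network transmission latency.

- VLM Inference Time: $T_i^{\mathrm{inf}} = t_i^{\mathrm{end}} - t_i^{\mathrm{start}}$, where $t_i^{\mathrm{end}}$ is the timestamp when cloud inference concludes. This measures the pure computation time of the Qwen2.5-VL large vision-language model.

- End-to-End Latency: $T_i^{\mathrm{e2e}} = t_i^{\mathrm{recv}} - t_i^{\mathrm{cap}}$, where $t_i^{\mathrm{recv}}$ is the time when the processed description is received back at the edge. This includes edge, communication, inference, and buffering delays.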
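
The four KPIs above are plain differences between logged timestamps, which the following minimal sketch makes explicit. The class and field names are hypothetical, and the example timestamps are made up for illustration rather than taken from the experiments.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FrameTimestamps:
    """Five logged timestamps for one frame, in seconds (illustrative field names)."""
    capture: float       # frame captured on the edge device
    upload_start: float  # upload to the cloud begins
    cloud_start: float   # cloud server begins processing
    cloud_end: float     # cloud inference concludes
    result_recv: float   # description received back at the edge


def timing_kpis(ts: FrameTimestamps) -> dict:
    """Derive the four timing KPIs as differences between logged timestamps."""
    return {
        "edge": ts.upload_start - ts.capture,
        "comm": ts.cloud_start - ts.upload_start,
        "inference": ts.cloud_end - ts.cloud_start,
        "end_to_end": ts.result_recv - ts.capture,
    }


# Made-up timestamps purely for illustration, not measured values.
example = FrameTimestamps(
    capture=0.00, upload_start=0.05, cloud_start=0.15, cloud_end=0.95, result_recv=1.05
)
kpis = timing_kpis(example)
# End-to-end time not explained by the first three terms is return path plus buffering.
kpis["return_and_buffering"] = kpis["end_to_end"] - (
    kpis["edge"] + kpis["comm"] + kpis["inference"]
)
for name, value in kpis.items():
    print(f"{name}: {value:.2f} s")
```

Because the communication delay mixes an edge-side and a cloud-side timestamp, a computation like this is only meaningful if the two clocks are synchronized or share a common time source.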

4.1.3. Sustainable Processing Rate

The sustainable processing rate quantifies the system’s long-run ability to serve a continuous video input without incurring an ever-growing backlog. Defined as the reciprocal of the average end-to-end latency, it estimates the frame rate that the system can handle steadily:

$$R_{\mathrm{s}} = \frac{1}{\bar{T}^{\mathrm{e2e}}},$$

where $\bar{T}^{\mathrm{e2e}}$ is the average latency per processed frame. Otherwise stated, $R_{\mathrm{s}}$ equals the long-term observed throughput.

To avoid unbounded delay accumulation, the system must satisfy the following:

$$R_{\mathrm{s}} \geq f_{\mathrm{in}},$$

where $f_{\mathrm{in}}$ is the nominal input frame rate of the AUV camera. When this inequality holds, each incoming frame can be processed (or dropped) before excess queueing occurs.

If instead

$$R_{\mathrm{s}} < f_{\mathrm{in}},$$

we apply periodic frame sampling. Specifically, we sample one out of every $k$ frames, such that

$$\frac{f_{\mathrm{in}}}{k} \leq R_{\mathrm{s}}.$$

This yields an approximate frame drop rate:

$$D \approx 1 - \frac{1}{k}.$$

As an example, with $f_{\mathrm{in}} = 23$ fps and $R_{\mathrm{s}} \approx 1$ fps, we set $k = 23$, resulting in approximately 1 processed frame per second and dropping about 96% of frames. This strategy maintains bounded latency at the expense of temporal fidelity, effectively trading off between real-time performance and scene continuity.
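
The arithmetic in this subsection can be checked with a short sketch. The helper names are hypothetical and the latency values are illustrative rather than measured; the stride is taken as the smallest integer that keeps the sampled rate at or below the sustainable rate.

```python
import math
from statistics import mean


def sustainable_rate(latencies_s):
    """Reciprocal of the mean end-to-end latency, in frames per second."""
    return 1.0 / mean(latencies_s)


def sampling_stride(fps_in, rate_fps):
    """Smallest integer k such that fps_in / k does not exceed the sustainable rate."""
    return max(1, math.ceil(fps_in / rate_fps))


def drop_rate(k):
    """Fraction of frames discarded when one frame in every k is kept."""
    return 1.0 - 1.0 / k


latencies = [1.0, 1.0, 1.0]  # illustrative values, not measurements
rate = sustainable_rate(latencies)
k = sampling_stride(23, rate)
print(rate, k, round(drop_rate(k), 4))  # 1.0 23 0.9565
```

If the mean latency were 1.2 s instead, the same helpers would give a stride of 28, so a small increase in latency translates directly into a coarser temporal sampling.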

4.2. Semantic Description Evaluation and Methodology

To rigorously assess the quality of the natural-language descriptions generated by our VLM system, we selected a set of semantic evaluation metrics and implemented a structured annotation workflow. These components ensure that our semantic benchmarks are meaningful, grounded, and reproducible across scenarios.

First, we define four core semantic KPIs to quantify model performance along dimensions of completeness, coherence, factuality, and overall quality:

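- Object Detection Recall (ODR): Measures the proportion of ground-truth objects that are correctly mentioned in the VLM description: $\mathrm{ODR} = N_{\mathrm{matched}} / N_{\mathrm{gt}}$, where $N_{\mathrm{matched}}$ is the number of ground-truth objects correctly mentioned and $N_{\mathrm{gt}}$ is the total number of ground-truth objects.

- Spatial Relationship Accuracy (SRA): Calculates the fraction of human-annotated spatial relations (e.g., “pipeline above substrate”, “fish near coral”) that are correctly expressed.

- Hallucination Rate (HR): Represents the percentage of incorrectly inferred or fictitious descriptions: $\mathrm{HR} = N_{\mathrm{hall}} / N_{\mathrm{desc}}$, where $N_{\mathrm{hall}}$ is the count of hallucinated outputs and $N_{\mathrm{desc}}$ is the number of described elements.

- Output Accuracy (OA): Reflects overall description quality based on expert judgment. Two marine-domain annotators independently use a 1–5 Likert scale, and scores are averaged and normalized: $\mathrm{OA} = (\bar{S} - 1)/4$, where $\bar{S}$ is the averaged Likert score, mapping the scale to $[0, 1]$.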

These metrics were selected to capture key aspects of semantic performance: ODR and SRA quantify factual correctness and spatial coherence, HR measures speculative or incorrect content, and OA provides a human-perceived measure of expressiveness and overall quality.
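
To make the four definitions concrete, the sketch below computes them for a single toy description. It is a simplified illustration: matching is done by exact set membership rather than the synonym and pattern matching used in the annotation protocol, and all object names, relations, and scores are invented.

```python
def recall(reference, predicted):
    """Share of reference items that also appear among the predicted items."""
    reference = set(reference)
    if not reference:
        raise ValueError("reference set is empty")
    return len(reference & set(predicted)) / len(reference)


def hallucination_rate(n_hallucinated, n_described):
    """Hallucinated elements as a fraction of all described elements."""
    return n_hallucinated / n_described


def output_accuracy(likert_scores):
    """Average 1-5 Likert scores and map them linearly onto [0, 1]."""
    mean_score = sum(likert_scores) / len(likert_scores)
    return (mean_score - 1) / 4


# Invented annotations for one frame.
gt_objects = {"pipeline", "barnacles", "algae", "fish"}
mentioned = {"pipeline", "barnacles", "fish", "crab"}  # "crab" has no visual support
gt_relations = {("pipeline", "above", "substrate"), ("fish", "near", "pipeline")}
expressed = {("pipeline", "above", "substrate")}

odr = recall(gt_objects, mentioned)
sra = recall(gt_relations, expressed)
hr = hallucination_rate(n_hallucinated=1, n_described=len(mentioned))
oa = output_accuracy([4, 5])
print(odr, sra, hr, oa)  # 0.75 0.5 0.25 0.875
```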

Next, our human-annotation protocol ensures consistent, reliable semantic evaluation:

- Annotator Training: Three experts specializing in underwater imagery review sample frames and descriptions together and calibrate on object categories and relation expressions.

- Blind Scoring: Each annotator independently labels objects and relations, and evaluates output descriptions without seeing others’ assessments.

- Semantic Match Criteria: Object mentions are considered matched if term overlap or synonym recognition is achieved; spatial relations use simple dependency-based pattern matching; output similarity is further checked with cosine similarity of sentence embeddings (threshold ≥ 0.80).

- Hallucination Identification: Annotators flag each object or attribute in the VLM output that has no visual support in the reference frame or annotation.

- Consensus Review: Disagreements in Likert scores beyond one point are reconciled through discussion until full consensus is reached.

- Inter-Annotator Reliability: We compute Cohen’s kappa to measure agreement. Values above 0.7 are considered substantial and were consistently achieved across our annotation batches.
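
For reference, Cohen’s kappa compares the observed agreement between two raters with the agreement expected by chance. The sketch below is a plain implementation of that standard definition on invented Likert scores; it is not the authors’ tooling. Since the statistic is defined for a pair of raters, a panel of three would need it computed pairwise or replaced by a multi-rater statistic.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters labelling the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two equally long, non-empty label sequences")
    n = len(rater_a)
    # Observed agreement: fraction of items given the same label by both raters.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of the raters' marginal label frequencies.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (observed - expected) / (1.0 - expected)


# Invented Likert scores for ten descriptions; the raters differ on one item.
scores_a = [4, 4, 5, 3, 4, 5, 3, 4, 5, 4]
scores_b = [4, 4, 5, 3, 4, 4, 3, 4, 5, 4]
kappa = cohens_kappa(scores_a, scores_b)
print(round(kappa, 3))  # 0.833
```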

In summary, this evaluation framework provides a robust and replicable methodology for assessing the semantic quality of vision–language outputs in underwater scenarios. By introducing both quantitative KPIs—such as object recall, spatial accuracy, hallucination rate, and normalized output quality—and a rigorously controlled human annotation protocol, we ensure that model-generated descriptions are not only technically sound but also grounded in expert perception. This comprehensive setup lays the foundation for future benchmarking of underwater VLM systems under realistic deployment conditions.

4.3. Simulation Experiment Setup

This study evaluates the proposed cloud–edge architecture for real-time vision–language analysis in autonomous underwater vehicles within a simulated environment, reflecting the constraints of underwater operations. Below, we detail the simulated hardware configurations and communication parameters.

4.3.1. Simulated Hardware Configurations

The experimental setup focuses on two hardware components—the edge device and the cloud server—while modeling the communication link through a software-based delay simulator. The two modeled hardware devices are configured to emulate realistic underwater operational constraints, balancing computational limitations at the edge with the high processing demands of cloud-based large-scale vision–language inference.

- Edge Device: The edge device is a low-power embedded system, modeled after a typical AUV onboard computer. It features a 4-core ARM Cortex-A53 CPU (1.5 GHz), an integrated Mali-G52 GPU, and 8 GB of RAM, with a power consumption limit of 10 W to emulate energy constraints in underwater environments. These specifications support real-time image enhancement and frame sampling.

- Cloud Server: The cloud server is a high-performance computing node, featuring an NVIDIA A100 GPU (40 GB VRAM) and 64 GB of RAM, capable of handling Qwen2.5-VL’s 72 billion parameters. The server processes frames with a modeled inference latency of 0.5 s, aligning with benchmarks for large-scale VLMs.

The above configurations were implemented in a controlled laboratory setting. The profile of the edge device was emulated using a Jetson Xavier NX development kit underclocked to match the processing and power envelope of a 4-core ARM Cortex-A53 system. The cloud server profile was run on a rack-mounted workstation equipped with an NVIDIA A100 GPU. Communication between edge and cloud was conducted over a gigabit Ethernet link with a software-based delay/jitter injection layer to replicate the bandwidth and latency parameters of the optical fiber channel described in Section 3.2. This arrangement allowed for realistic end-to-end latency measurement without the need for physical underwater deployment in the initial testing phase.
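
A software delay/jitter layer of this kind can be sketched as a function that returns how long each payload should be held before delivery. The model below (serialization time plus a fixed latency plus non-negative Gaussian jitter) and every parameter value are assumptions chosen for illustration; they are not the channel model or parameters of Section 3.2.

```python
import random


def injected_delay(payload_bytes, bandwidth_bps, base_latency_s, jitter_std_s, rng):
    """One-way delay: serialization time + fixed latency + non-negative jitter."""
    serialization = payload_bytes * 8 / bandwidth_bps
    # Assumed jitter model: Gaussian noise clipped at zero.
    jitter = max(0.0, rng.gauss(0.0, jitter_std_s))
    return serialization + base_latency_s + jitter


rng = random.Random(0)  # seeded so that runs are repeatable
# Hypothetical link: 200 kB frame, 100 Mbit/s, 20 ms base latency, 5 ms jitter.
sample = injected_delay(200_000, 100e6, 0.020, 0.005, rng)
print(f"{sample * 1000:.1f} ms")
```

In an emulation harness, the sender would hold each message for the returned duration before forwarding it, so the receiver observes link-like timing over a much faster local network.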

These configurations were chosen to balance computational efficiency at the edge with the intensive processing requirements of Qwen2.5-VL in the cloud, ensuring realistic simulation of underwater constraints.

4.3.2. Test Scenarios and Video Dataset

The three test videos used in this study were generated using a simulation and video-processing toolchain designed to mimic the resolution, colour depth, lighting conditions, and visibility characteristics observed in real ROV deployments. The toolchain reproduces the optical properties of a 1/2.3″ CMOS low-light camera module (1920 × 1080 @ 30 fps, 12-bit colour depth) with integrated LED lighting (equivalent to 2 × 1500 lm), as commonly found on commercial ROVs such as the BlueROV2 heavy configuration. For the pipeline and wreck scenarios, source footage was synthesized from 3D models and physics-based rendering under water-column scattering and turbidity models tuned to match coastal waters off Zhoushan; the coral reef sequence was adapted from public high-definition reef survey datasets (e.g., CoralNet) and processed to replicate our target visibility ranges. All sequences were down-sampled to 1280 × 720 resolution and re-encoded at 23 fps to match the simulated streaming pipeline described in Section 3.4.

We implemented the pipeline described above and tested it with three sample videos to measure performance. The system logs recorded timestamps for when each frame was captured and when the analysis result was received, as well as the textual output. These logs allow us to compute the observed per-frame times and verify if the system kept up with the video stream without backlog.

To test the robustness and generalization ability of the model, we use three videos, one for each scene. Figure 5 shows a representative frame from each video.

Figure 5.

This figure shows three panels from underwater video frames: (a) pipeline inspection; (b) coral reef survey; (c) wreck investigation.

- Pipeline Inspection: Conducted along a 420 m transect following a subsea steel pipeline at approximately 15 m depth. Visibility hovered between 3 and 7 m due to suspended sediments and remnant hydrodynamic activity—conditions which are known to degrade image clarity in AUV inspections and hamper automated anomaly detection on pipelines [40]. Filmed at 23 fps over an 8-second span, the footage predominantly featured heavily fouled metallic pipe, including barnacle clusters and algae mats, interspersed with occasional mid-sized fish (e.g., snappers and jacks) passing through the field of view. The target tasks here simulated real-world challenges in detecting weld imperfections or structural damage under low-visibility inspection conditions.

- Coral Reef Survey: A 200 m² transect captured over about 8.3 s at 8–12 m depth, with horizontal visibility ranging from 6 to 12 m, typical of shallow tropical reefs [41,42]. The reef featured dense assemblages of branching Acropora and massive Porites, interspersed with sandy patches and scattered sea fans. Light conditions varied due to occasional overcast or surge events, causing short-term dips in clarity. A diversity of reef-associated fauna was present, including parrotfish, butterflyfish, and grouper, alongside zones damaged by crown-of-thorns starfish. This scene tested the VLM’s ability to identify organisms and infer habitat relationships under moderate visibility and ecological complexity.

- Wreck Investigation: An 8 s video documenting part of a submerged vessel at 25 m depth. Visibility was highly dynamic: clear spells offered up to 12 m line-of-sight, while episodic thruster wash or undershoot disturbance triggered silt-out events, reducing clarity to below 3 m almost instantaneously [43]. The structure (corroded plating, extensive biological encrustation by sponges and soft corals, and rivet patterns) presented rich semantic content but also frequent occlusions by mobile fauna (batfish, jacks) and sediment plumes. These demanding conditions challenged both real-time latency constraints and the faithfulness of scene interpretation.

This suite of test videos was intentionally curated to span a gradient of underwater operational conditions. The Pipeline scenario emphasizes structural simplicity under poor visibility, the Reef scenario layers biological detail within variable visibility regimes, and the Wreck scenario adds scene clutter, topology complexity, and transient turbidity. Together, they provide a demanding and realistic foundation for assessing the trade-offs between system latency and semantic description performance.

5. Results

This section presents the experimental evaluation of the proposed cloud–edge vision–language framework under three representative underwater scenarios: pipeline inspection, coral reef survey, and wreck investigation. We assess the system from two key perspectives: (1) real-time responsiveness and processing throughput, which determine the real-time processing capability under bandwidth and computational constraints; (2) vision–language model output quality, which reflects the semantic richness, reliability, and descriptive accuracy of the generated natural language summaries. Together, these results demonstrate the effectiveness and practical feasibility of deploying large vision–language models for underwater robotic perception in complex environments.

5.1. Real-Time Responsiveness and Processing Throughput

Table 2 summarizes the key system performance metrics in the cloud deployment scenario. Qwen2.5-VL inference remained stable around 0.81–0.84 s per frame, enabling near real-time operation with an end-to-end latency below 1.2 s at a sustainable rate of 1 fps.

Table 2.

System performance metrics across three underwater scenarios (mean ± standard deviation).

The system sustained stable operation across all scenarios, with each processed frame analyzed and returned before the subsequent frame was captured. This demonstrates that, under the 1 fps sampling configuration, the cloud–edge pipeline can effectively maintain near real-time responsiveness without backlog or memory overflow. The stable per-frame latency ensured a consistent processing rhythm, critical for long-term deployments in bandwidth-limited underwater environments.

For the coral reef video in the cloud scenario, for example, the system processed about 9 frames over a video duration of 8.3 s (throughput of about 1.09 fps). The per-frame analysis time measured from the logs was about 1 s on average, which aligns with our expectation. This includes the network call to the Qwen API and the model’s inference time. The end-to-end latency for each processed frame was also on the order of 1 s; essentially, each frame was analyzed and the result returned before the next frame (1 s later) was taken for analysis, so there was minimal queuing delay. The system maintained this pace throughout the video, demonstrating stable real-time operation at the chosen sampling rate. If we had attempted to send every frame at 23 fps to the model sequentially, it would have been impossible to obtain results in real time. Instead, frames would queue up and the latency would grow quickly; for instance, after 10 s of video, roughly 230 frames would have arrived but only about 10 would have been processed, leaving more than 200 frames waiting and delaying the latest frame’s result by minutes. By dropping the majority of frames, we ensured that each selected frame could be handled immediately.
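
The backlog argument can be reproduced with a few lines of arithmetic. The sketch below assumes a single sequential worker with a fixed service time of 1 s per frame, which idealizes the measured behaviour; the function name is hypothetical.

```python
def backlog_after(duration_s, fps_in, service_time_s):
    """Frames arrived, completed, and waiting when every frame is sent to one worker."""
    arrived = int(duration_s * fps_in)
    completed = min(arrived, int(duration_s / service_time_s))
    waiting = arrived - completed
    # The newest frame must wait for everything ahead of it to be served.
    delay_of_latest_s = waiting * service_time_s
    return arrived, completed, waiting, delay_of_latest_s


print(backlog_after(10, 23, 1.0))  # (230, 10, 220, 220.0)
print(backlog_after(10, 1, 1.0))   # (10, 10, 0, 0.0)
```

Under these assumptions the newest frame would wait about 220 s (over 3.5 min) after only 10 s of video, whereas the 1 fps sampled stream leaves no queue.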

5.2. VLM Description Quality

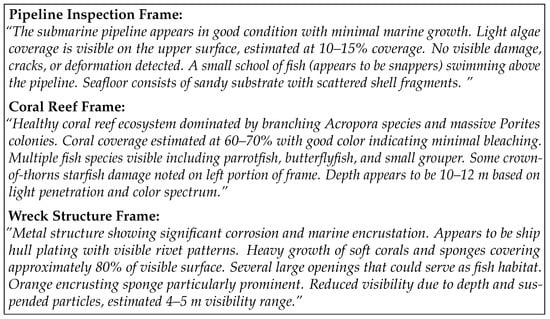

We evaluated the description quality per scenario. Figure 6 presents representative frames and their corresponding VLM-generated descriptions, providing intuitive examples of the model’s scene understanding under varying underwater conditions.

Figure 6.

Qwen2.5-VL outputs for different underwater scenarios demonstrating detailed scene understanding and relevant observation extraction.

The examples illustrate the model’s ability to generate detailed and context-aware scene descriptions across diverse underwater environments. In the pipeline inspection frame, the VLM accurately identifies structural features, biological elements, and substrate composition, demonstrating object-level precision. The coral reef example showcases the model’s capacity for ecological interpretation, including species-level identification and reef health assessment. In the wreck investigation frame, despite reduced visibility and cluttered appearance, the model successfully describes corrosion patterns, biological overgrowth, and habitat potential. These outputs underscore the model’s robustness and semantic granularity under varying visual conditions.

Following this qualitative assessment, Table 3 summarizes the detection and description accuracy across the three underwater environments: pipeline inspection, coral reef survey, and wreck structure documentation.

Table 3.

Semantic description accuracy in three underwater deployment scenarios (mean ± standard deviation).

The VLM demonstrated strong performance in detecting and describing underwater scenes despite challenging conditions such as low visibility, turbidity, and dynamic illumination. Object detection recall and condition assessment accuracy remained consistently high across all scenarios, indicating that the VLM is capable of reliably identifying large, prominent structures such as pipelines, coral formations, and wreck components. Spatial relationship accuracy was also robust, demonstrating the model’s ability to correctly describe the relative positioning of objects within the scene, which is critical for applications like infrastructure inspection and habitat mapping.

Some limitations were noted: the model occasionally hallucinated small marine life in featureless scenes, likely due to biases from terrestrial pretraining, and performed less reliably on dynamic content with motion blur. These findings suggest that fine-tuning on underwater-specific data could improve robustness and reduce hallucinations.

Overall, the model achieved a favorable trade-off between semantic richness and real-time efficiency. Its ability to generate accurate natural language descriptions from sparsely sampled frames supports its suitability for integration into future AUV-based perception systems.

6. Discussion

6.1. Real-Time Performance Trade-Offs

Our results demonstrate the feasibility of integrating a large-scale VLM into a real-time video analysis loop by sampling frames. The system in the cloud configuration was able to keep up with a 23 fps video by processing roughly 4–5% of the frames (1 fps) with a stable latency of about 1 s. This approach meets the needs of scenarios where continuous monitoring is required but perfect frame-by-frame analysis is not computationally possible.

We compare our metrics to recent efficient VLM architectures: LiteVLM (Huang et al., 2025) presents a pipeline integrating patch selection, token filtering, and speculative decoding, achieving a 2.5× reduction in inference latency (and up to 3.2× with FP8 quantization) on embedded platforms, while preserving task accuracy [44]. However, LiteVLM was evaluated on terrestrial or automotive scenes with clear visuals, not on turbidity-affected underwater imagery. Flash-VL 2B (Zhang et al., 2025) is designed for ultra-low latency and high throughput through token compression and implicit semantic stitching, delivering state-of-the-art speed–accuracy trade-offs across 11 VLM benchmarks [45]. Yet those benchmarks again reflect high-clarity domains, unlike our degraded underwater conditions.

Comparatively, our framework achieves roughly 1.0–1.2 s latency per frame, which is higher than LiteVLM’s sub-0.5 s inference. However, our system uniquely integrates underwater-specific preprocessing and supports robust semantic descriptions (object mentions, spatial relations, and natural language summaries) even under turbidity. LiteVLM and Flash-VL are optimized for clear input environments and seldom address underwater degradation.

In our pipeline, VLM inference delay contributes the largest latency variance. This matches our sustainable throughput of ≈1 fps when sampling every 23 frames. We also analyze event-detection trade-offs: for an event lasting $d$ seconds, uniform sampling at 1 fps will reliably capture it if $d \geq 1$ s, that is, if the event lasts at least one sampling interval. Shorter events risk being missed, a motivating factor for future adaptive sampling.
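
The event-detection trade-off can be quantified under a simple assumption: if an event of duration d starts at a uniformly random offset relative to the sampling grid, the chance that at least one sample falls inside it is min(1, d/T), where T is the sampling interval. The sketch below encodes that idealized model; the function name is hypothetical and exposure time is ignored.

```python
def capture_probability(event_duration_s, sample_rate_fps=1.0):
    """Chance that a uniformly spaced sample lands inside a randomly timed event."""
    interval_s = 1.0 / sample_rate_fps
    return min(1.0, event_duration_s / interval_s)


print(capture_probability(0.25))       # 0.25
print(capture_probability(1.0))        # 1.0
print(capture_probability(0.25, 2.0))  # 0.5
```

Under this model, an event lasting a quarter of a second is seen only one time in four at 1 fps, and doubling the sampling rate doubles that chance.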

By combining domain-aware sampling with model-level speed-ups, future iterations aim to deliver richer semantic output at near real-time speeds suitable for deployed underwater AUV systems.

6.2. VLM Performance on Underwater Imagery

Qwen2.5-VL demonstrated surprising robustness to underwater image characteristics despite being trained primarily on terrestrial images. The model successfully identified marine species, assessed structural conditions, and provided spatial descriptions. However, performance degraded with depth and turbidity more severely than traditional underwater computer vision models trained specifically for these conditions.

Underwater-specific enhancement played a critical role in stabilizing VLM inference. Without preprocessing, Qwen2.5-VL showed a hallucination rate exceeding 18% under turbidity levels below 5 m visibility. With our three-stage enhancement pipeline (color correction, DCP-based dehazing, HSV normalization), hallucinations dropped below 10% while output accuracy improved by ∼0.1 absolute (see Table 3). This validates the design principle that robust VLM outputs depend on upstream image clarity, especially in domains where the model has not been pretrained. Compared to other VLM-based systems evaluated primarily in terrestrial or indoor settings, our deployment of Qwen2.5-VL in marine environments demonstrates superior semantic granularity under degraded visual conditions. While existing controlled benchmarks seldom report hallucination rates in underwater contexts, our system achieves a hallucination rate of 4–7% in relatively structured underwater scenes (e.g., pipeline inspection).

Moreover, the semantic degradation trend across scenes reveals that VLM output quality is highly sensitive to scene complexity. In our data, object detection recall decreases from 0.94 (reef) to 0.82 (wreck), while hallucination rate rises from 0.04 to 0.09. This aligns with recent findings on object hallucination: for example, Li et al. presented the POPE evaluation framework and observed hallucination prevalence in multiple LVLMs when visual context is ambiguous or domain-shifted [46]. Such patterns suggest scene clutter, occlusion, and turbidity notably impair semantic reasoning. Although our enhancement pipeline substantially reduced hallucination, remaining errors typically involved ambiguous biological shapes (e.g., diffuse coral mistaken for fish) or noisy textures interpreted as structural features. These observations motivate future efforts such as underwater-specific fine-tuning and hallucination-aware decoding strategies—e.g., confidence-based filtering or visual grounding checks [47,48].

The uniform 1 fps frame sampling strategy delivered consistent results across test videos. Although this reduces temporal resolution by ∼96%, the average description completeness (ODR + SRA) remained above 85% in all cases, indicating that key scene events persisted across sampled frames. Nevertheless, fast-moving events or transient anomalies may be missed. This limitation motivates the need for a more intelligent sampling strategy that can respond to scene dynamics in real time. As future work, we plan to implement an adaptive frame sampling mechanism: lightweight edge-based saliency detectors (e.g., using image entropy estimators) will monitor scene activity and trigger frame uploads when significant changes are detected. Prior research has shown that entropy- or saliency-driven sampling can reduce frame transmission volume by 50–70% while preserving semantic detection performance [49]. Our envisioned two-tier system—involving continuous low-cost edge monitoring plus selective cloud-based semantic analysis—aims to improve semantic responsiveness without increasing latency or energy use.
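
One possible shape for such an entropy-based trigger is sketched below. It is purely illustrative of the planned mechanism, not an implemented component: the threshold value, the function names, and the use of a flat list of grayscale intensities are all assumptions.

```python
import math
from collections import Counter


def shannon_entropy(pixels):
    """Shannon entropy, in bits, of the intensity histogram of a flat pixel list."""
    counts = Counter(pixels)
    total = len(pixels)
    return sum((c / total) * math.log2(total / c) for c in counts.values())


def should_upload(prev_entropy, curr_entropy, threshold_bits=0.5):
    """Trigger an upload when entropy changes by at least the (assumed) threshold."""
    return abs(curr_entropy - prev_entropy) >= threshold_bits


flat_frame = [128] * 64         # featureless frame: a single intensity
textured_frame = [0, 255] * 32  # two intensities in equal proportion
h_flat = shannon_entropy(flat_frame)
h_textured = shannon_entropy(textured_frame)
print(h_flat, h_textured, should_upload(h_flat, h_textured))  # 0.0 1.0 True
```

A histogram-only measure ignores spatial layout, so a practical detector would likely combine it with motion or saliency cues before deciding to upload a frame.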

In summary, the results affirm that underwater-specific enhancement is not merely a preprocessing convenience but a foundational enabler of semantically reliable VLM output. When benchmarked against prior underwater and VLM systems, our design achieves a competitive balance between latency, output accuracy, and semantic richness under real-world underwater constraints.

7. Conclusions

In this work, we present a novel cloud–edge framework enabling real-time vision–language analysis for autonomous underwater drones. Our architecture integrates underwater-specific preprocessing on the edge with cloud-based inference using Qwen2.5-VL, allowing semantic understanding at approximately 1 fps sustained throughput, with end-to-end latency between 1.00 and 1.30 s per frame across diverse underwater environments (pipeline, reef, wreck). Despite significant frame reduction (∼96% of native 23 fps), the system consistently achieved high semantic fidelity, with ODR + SRA ≥ 0.85 and low hallucination rates (4–7%).

Key contributions include the following:

- A first demonstration of deploying a large VLM for underwater AUV perception, delivering natural-language scene summaries in degraded visual conditions.

- Development of quantitative metrics and latency models tailored to underwater scenarios, separating edge preprocessing, communication delay, and cloud inference.

- Experimental validation illustrating that cloud-based inference can deliver 1 fps semantic throughput, while pure edge solutions would require prohibitive latency or frame dropping.

- Detailed analysis of trade-offs between temporal resolution and semantic richness, demonstrating that uniform sampling preserves essential scene information and laying the groundwork for adaptive strategies.

Our results show that modern VLMs can provide valuable scene understanding for underwater robotics applications, enabling natural language interaction and automated reporting. While communication bandwidth remains the primary limitation, the rich semantic descriptions from sparse temporal sampling prove sufficient for many monitoring and inspection tasks.

Looking ahead, future improvements will focus on the following:

- Adaptive frame sampling: integrating low-cost edge-based saliency or motion detectors to dynamically select frames, improving event recall while preserving bandwidth.

- Model-level optimizations: adopting techniques from LiteVLM and Flash-VL 2B—such as patch/token filtering and speculative decoding—to reduce inference time by 2–3×, enabling higher semantic throughput (e.g., 2–3 fps) on similar hardware constraints.

- Underwater domain adaptation: fine-tuning Qwen2.5-VL on underwater image–text datasets and implementing hallucination-aware decoding strategies (e.g., confidence scoring and visual grounding) to further enhance semantic reliability.

By combining domain-specific enhancement, adaptive sampling, and optimized VLM inference, we anticipate that future systems will deliver near real-time semantic understanding on underwater robotic platforms, supporting safer, more autonomous, and interpretable ocean operations.

Supplementary Materials

The following supporting information can be downloaded at https://www.mdpi.com/article/10.3390/drones9090605/s1, Video S1: Pipeline Inspection (subsea pipeline inspection at 15 m depth, 23 fps, 8 s); Video S2: Coral Reef Survey (200 m² transect at 8–12 m depth, 8.3 s, reef fauna); Video S3: Wreck Investigation (submerged vessel at 25 m, dynamic visibility, 8 s).

Author Contributions

Conceptualization, W.L. and F.Z.; methodology, W.L.; software, W.L. and F.Z.; validation, W.L. and F.Z.; formal analysis, W.L.; investigation, W.L.; resources, F.Z.; data curation, W.L.; writing—original draft preparation, W.L.; writing—review and editing, W.L. and F.Z.; visualization, W.L.; supervision, F.Z.; project administration, F.Z.; funding acquisition, F.Z. All authors have read and agreed to the published version of the manuscript.

Funding

FZ is supported by the Ministry of Science and Technology of the People’s Republic of China (Grant No. 2023ZD0120704 of Project No. 2023ZD0120700) and the National Natural Science Foundation of China (Grant No. 62372409).

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article. Demonstration videos corresponding to the three test scenarios (pipeline inspection, coral reef survey, and wreck investigation), along with a screencast of the end-to-end system in operation, are provided as Supplementary Video S1. These videos were generated using a simulation and rendering toolchain that mimics the resolution, colour depth, lighting, and turbidity conditions of real ROV footage, and are intended for demonstration purposes. They can also be accessed at https://doi.org/10.5281/zenodo.16889176 (accessed on 17 August 2025). Further inquiries can be directed to the corresponding authors.

Acknowledgments

The authors would like to thank the anonymous reviewers and editors for their insightful comments and constructive suggestions, which greatly improved the quality and clarity of this manuscript. We also extend our appreciation to the technical support teams from Alibaba Cloud for providing access to the Qwen2.5-VL model during the experimental phase.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| AUV | Autonomous Underwater Vehicle |
| VLM | Vision–Language Model |
| VLA | Vision–Language–Action |
| GAN | Generative Adversarial Network |
| CNN | Convolutional Neural Network |
| DCP | Dark Channel Prior |
| KPI | Key Performance Indicator |
| ODR | Object Detection Recall |
| SRA | Spatial Relationship Accuracy |
| HR | Hallucination Rate |
| OA | Output Accuracy |
| API | Application Programming Interface |
| AES | Advanced Encryption Standard |
| GPU | Graphics Processing Unit |
| RAM | Random Access Memory |
| UIE | Underwater Image Enhancement |
| HTDet | Hybrid Transformer-based Detector |
| mAP | mean Average Precision |
| QPS | Queries Per Second |

References

- Sahoo, A.; Dwivedy, S.; Robi, P. Advancements in the field of autonomous underwater vehicle. Ocean Eng. 2019, 181, 145–160. [Google Scholar] [CrossRef]

- Yang, D.; Cai, L.; Jamieson, S.; Girdhar, Y. Robot Goes Fishing: Rapid, High-Resolution Biological Hotspot Mapping in Coral Reefs with Vision-Guided Autonomous Underwater Vehicles. arXiv 2023, arXiv:2305.02330. [Google Scholar] [CrossRef]

- Ho, M.; El-Borgi, S.; Patil, D.; Song, G. Inspection and monitoring systems for subsea pipelines: A review paper. Struct. Health Monit. 2020, 19, 606–645. [Google Scholar] [CrossRef]

- Akkaynak, D.; Treibitz, T. Sea-thru: A method for removing water from underwater images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 1682–1691. [Google Scholar] [CrossRef]

- Li, C.; Anwar, S.; Hou, J.; Cong, R.; Guo, C.; Ren, W. Underwater Image Enhancement via Medium Transmission-Guided Multi-Color Space Embedding. IEEE Trans. Image Process. 2021, 30, 4985–5000. [Google Scholar] [CrossRef]

- Bakht, A.B.; Jia, Z.; ud Din, M.; Akram, W.; Soud, L.S.; Seneviratne, L.; Lin, D.; He, S.; Hussain, I. MuLA-GAN: Multi-Level Attention GAN for Enhanced Underwater Visibility. arXiv 2023, arXiv:2312.15633. [Google Scholar] [CrossRef]

- Saoud, L.S.; Niu, Z.; Sultan, A.; Seneviratne, L.; Hussain, I. ADOD: Adaptive Domain-Aware Object Detection with Residual Attention for Underwater Environments. arXiv 2023, arXiv:2312.06801. [Google Scholar] [CrossRef]

- Gupta, A.; Abdullah, A.; Li, X.; Ramesh, V.; Rekleitis, I.; Islam, M.J. Demonstrating CavePI: Autonomous Exploration of Underwater Caves by Semantic Guidance. arXiv 2025, arXiv:2502.05384. [Google Scholar] [CrossRef]

- Buchholz, M.; Carlucho, I.; Grimaldi, M.; Petillot, Y.R. Distributed AI Agents for Cognitive Underwater Robot Autonomy. arXiv 2025, arXiv:2507.23735. [Google Scholar] [CrossRef]

- Alawode, B.; Ganapathi, I.I.; Javed, S.; Werghi, N.; Bennamoun, M.; Mahmood, A. AquaticCLIP: A Vision-Language Foundation Model for Underwater Scene Analysis. arXiv 2025, arXiv:2502.01785. [Google Scholar] [CrossRef]

- Jeong, H.; Lee, H.; Kim, C.; Shin, S. A Survey of Robot Intelligence with Large Language Models. Appl. Sci. 2024, 14, 8868. [Google Scholar] [CrossRef]

- Bai, S.; Chen, K.; Liu, X.; Wang, J.; Ge, W.; Song, S.; Dang, K.; Wang, P.; Wang, S.; Tang, J.; et al. Qwen2.5-VL Technical Report. arXiv 2025, arXiv:2502.13923. [Google Scholar] [CrossRef]

- Chen, G.; Mao, Z.; Wang, K.; Shen, J. HTDet: A Hybrid Transformer-Based Approach for Underwater Small Object Detection. Remote Sens. 2023, 15, 1076. [Google Scholar] [CrossRef]

- Saoud, L.S.; Seneviratne, L.; Hussain, I. MARS: Multi-Scale Adaptive Robotics Vision for Underwater Object Detection and Domain Generalization. arXiv 2023, arXiv:2312.15275. [Google Scholar] [CrossRef]

- Wen, J.; Cui, J.; Zhao, Z. SyreaNet: A Physically Guided Underwater Image Enhancement Framework Integrating Synthetic and Real Images. arXiv 2023, arXiv:2302.08269. [Google Scholar] [CrossRef]

- Tang, J.; Wang, G.; Chen, Z.; Li, S.; Li, X.; Ji, X. FAFA: Frequency-Aware Flow-Aided Self-Supervision for Underwater Object Pose Estimation. arXiv 2024, arXiv:2409.16600. [Google Scholar] [CrossRef]

- Han, L.; Zhai, J.; Yu, Z.; Zheng, B. See you somewhere in the ocean: Few-shot domain adaptive underwater object detection. Front. Mar. Sci. 2023, 10, 1151112. [Google Scholar] [CrossRef]

- Zitkovich, B.; Yu, T.; Xu, S.; Xu, P.; Xiao, T.; Xia, F.; Wu, J.; Wohlhart, P.; Welker, S.; Wahid, A.; et al. RT-2: Vision-Language-Action Models Transfer Web Knowledge to Robotic Control. In Proceedings of the Conference on Robot Learning, Atlanta, GA, USA, 6–9 November 2023; pp. 2165–2183. [Google Scholar]

- Wu, Q.; Xu, W.; Liu, W.; Tan, T.; Liu, J.; Li, A.; Luan, J.; Wang, B.; Shang, S. MobileVLM: A Vision-Language Model for Better Intra- and Inter-UI Understanding. arXiv 2024, arXiv:2409.14818. [Google Scholar] [CrossRef]

- Zheng, Z.; Chen, Y.; Zhang, J.; Vu, T.A.; Zeng, H.; Tim, Y.H.W.; Yeung, S.K. Exploring boundary of GPT-4V on marine analysis: A preliminary case study. arXiv 2024, arXiv:2401.02147. [Google Scholar] [CrossRef]

- Lin, S.; Zhu, C.; Wei, Y.; Yang, S.; Liu, J.; Yuan, Y.; Tu, X.; Qu, F. An Offloading Strategy for Ocean Edge Computing Based on Whale Optimization Algorithm. In Proceedings of the 2023 3rd International Conference on Electrical, Computer, Communications and Mechatronics Engineering (ICECCME), Tenerife, Spain, 19–21 July 2023; pp. 1–6. [Google Scholar] [CrossRef]

- Siam, S.I.; Ahn, H.; Liu, L.; Alam, S.; Shen, H.; Cao, Z.; Shroff, N.; Krishnamachari, B.; Srivastava, M.; Zhang, M. Artificial Intelligence of Things: A Survey. ACM Trans. Sens. Netw. 2025, 21, 1–75. [Google Scholar] [CrossRef]

- Saeed, N.; Çelik, A.; Al-Naffouri, T.Y.; Alouini, M. EP-ADTA: Edge Prediction-Based Adaptive Data Transfer Algorithm for Underwater Wireless Sensor Networks (UWSNs). Sensors 2022, 22, 5490. [Google Scholar] [CrossRef]

- Budzianowski, P.; Maa, W.; Freed, M.; Mo, J.; Hsiao, W.; Xie, A.; Młoduchowski, T.; Tipnis, V.; Bolte, B. EdgeVLA: Efficient Vision-Language-Action Models. arXiv 2025, arXiv:2507.14049. [Google Scholar] [CrossRef]

- Team, G.R.; Abeyruwan, S.; Ainslie, J.; Alayrac, J.B.; Arenas, M.G.; Armstrong, T.; Balakrishna, A.; Baruch, R.; Bauza, M.; Blokzijl, M.; et al. Gemini Robotics: Bringing AI into the Physical World. arXiv 2025, arXiv:2503.20020. [Google Scholar] [CrossRef]

- Liu, S.; Zhao, C.; Xu, T.; Ghanem, B. BOLT: Boost Large Vision-Language Model Without Training for Long-form Video Understanding. arXiv 2025, arXiv:2503.21483. [Google Scholar] [CrossRef]

- Qu, T.; Tang, L.; Peng, B.; Yang, S.; Yu, B.; Jia, J. Does Your Vision-Language Model Get Lost in the Long Video Sampling Dilemma? arXiv 2025, arXiv:2503.12496. [Google Scholar] [CrossRef]

- Ye, J.; Wang, Z.; Sun, H.; Chandrasegaran, K.; Durante, Z.; Eyzaguirre, C.; Bisk, Y.; Niebles, J.C.; Adeli, E.; Fei-Fei, L.; et al. Re-thinking Temporal Search for Long-Form Video Understanding. arXiv 2025, arXiv:2504.02259. [Google Scholar] [CrossRef]

- Jamieson, S.; How, J.P.; Girdhar, Y. DeepSeeColor: Realtime Adaptive Color Correction for Autonomous Underwater Vehicles. arXiv 2023, arXiv:2303.04025. [Google Scholar] [CrossRef]

- Zhang, J.; Liu, H.; Ying, X.; Huang, R. Coordinated Underwater Dark Channel Prior for Artifact Removal of Challenging Image Enhancement. Optoelectron. Lett. 2023, 19, 416–424. [Google Scholar] [CrossRef]

- Wang, Y.; Guo, J.; Gao, H.; Yue, H. UIEC-Net: CNN-based Underwater Image Enhancement Using Two Color Spaces. Signal Process. Image Commun. 2021, 96, 116250. [Google Scholar] [CrossRef]

- Li, C.; Anwar, S.; Porikli, F. Underwater Image Enhancement Using Generative Adversarial Network. In Proceedings of the 2025 3rd International Conference on Advancements in Electrical, Electronics, Communication, Computing and Automation (ICAECA), Coimbatore, India, 4–5 April 2025; pp. 1–5. [Google Scholar] [CrossRef]

- Yang, H.; Huang, K.; Chen, W. LAFFNet: A Lightweight Adaptive Feature Fusion Network for Underwater Image Enhancement. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 685–692. [Google Scholar] [CrossRef]

- Chen, K.; Li, Z.; Zhou, F.; Yu, Z. CASF-Net: Underwater Image Enhancement with Channel-Adaptive Correction and Spatial Fusion. Sensors 2025, 25, 2574. [Google Scholar] [CrossRef] [PubMed]

- Zhou, H.; Wang, M.Z.X.; Ren, X. Design and implementation of more than 50 m real-time underwater wireless optical communication system. J. Light. Technol. 2022, 40, 3654–3668. [Google Scholar] [CrossRef]

- Yang, X.; Tong, Z.; Dai, Y.; Chen, X.; Zhang, H.; Zou, H.; Xu, J. 100 m full-duplex underwater wireless optical communication based on blue and green lasers and high sensitivity detectors. Opt. Commun. 2021, 498, 127261. [Google Scholar] [CrossRef]

- Liu, A.; Zhang, R.; Lin, B.; Yin, H. Multi-degree-of-freedom for underwater optical wireless communication with improved transmission performance. J. Mar. Sci. Eng. 2023, 11, 48. [Google Scholar] [CrossRef]

- Chen, Y.; Zhang, L.; Ling, Y. New approach for designing an underwater free-space optical communication system. Front. Mar. Sci. 2022, 9, 971559. [Google Scholar] [CrossRef]

- Chu, X.; Qiao, L.; Lin, X.; Xu, S.; Yang, Y.; Hu, Y.; Wei, F.; Zhang, X.; Zhang, B.; Wei, X.; et al. MobileVLM: A Fast, Strong and Open Vision Language Assistant for Mobile Devices. arXiv 2023, arXiv:2312.16886. [Google Scholar] [CrossRef]

- Dang, T.; Nguyen, T.T.; Liew, A.W.C.; Elyan, E. Event Classification on Subsea Pipeline Inspection Data Using an Ensemble of Deep Learning Classifiers. Cogn. Comput. 2025, 17, 10. [Google Scholar] [CrossRef]

- Asner, G.P.; Vaughn, N.R.; Foo, S.A.; Heckler, J.; Martin, R.E. Abiotic and Human Drivers of Reef Habitat Complexity Throughout the Main Hawaiian Islands. Front. Mar. Sci. 2021, 8, 631842. [Google Scholar] [CrossRef]

- Li, Y.; Liu, J.; Kusy, B.; Marchant, R.; Do, B.; Merz, T.; Crosswell, J.; Steven, A.; Tychsen-Smith, L.; Ahmedt-Aristizabal, D.; et al. A Real-Time Edge-AI System for Reef Surveys. In Proceedings of the 28th Annual International Conference on Mobile Computing And Networking, Sydney, Australia, 17–21 October 2022; pp. 903–906. [Google Scholar] [CrossRef]

- Ayranci, K.; Dashtgard, S.E. Deep-Water Renewal Events: Insights into Deep Water Sediment Transport Mechanisms. Sci. Rep. 2020, 10, 6139. [Google Scholar] [CrossRef]

- Huang, J.; Jin, Y.; An, L.; Park, J. LiteVLM: A Low-Latency Vision-Language Model Inference Pipeline for Resource-Constrained Environments. arXiv 2025, arXiv:2506.07416. [Google Scholar] [CrossRef]

- Zhang, B.; Li, S.; Tian, R.; Yang, Y.; Tang, J.; Zhou, J.; Ma, L. Flash-VL 2B: Optimizing Vision-Language Model Performance for Ultra-Low Latency and High Throughput. arXiv 2025, arXiv:2505.09498. [Google Scholar] [CrossRef]

- Li, Y.; Du, Y.; Zhou, K.; Wang, J.; Zhao, W.X.; Wen, J. Evaluating Object Hallucination in Large Vision-Language Models. arXiv 2023, arXiv:2305.10355. [Google Scholar] [CrossRef]

- Zhou, Y.; Cui, C.; Yoon, J.; Zhang, L.; Deng, Z.; Finn, C.; Bansal, M.; Yao, H. Analyzing and Mitigating Object Hallucination in Large Vision-Language Models. arXiv 2024, arXiv:2310.00754. [Google Scholar] [CrossRef]

- Leng, S.; Zhang, H.; Chen, G.; Li, X.; Lu, S.; Miao, C.; Bing, L. Mitigating Object Hallucinations in Large Vision-Language Models through Visual Contrastive Decoding. arXiv 2023, arXiv:2311.16922. [Google Scholar] [CrossRef]

- Lee, J.; Shin, J.; Ko, S.W.; Ha, S.; Lee, J. Scalable Frame Sampling for Video Classification: A Semi-Optimal Policy Approach with Reduced Search Space. arXiv 2025, arXiv:2409.05260. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).