Mapping Orchard Trees from UAV Imagery Through One Growing Season: A Comparison Between OBIA-Based and Three CNN-Based Object Detection Methods

Abstract

1. Introduction

Previous Work and Current Objectives

- Perform object detection using three CNN methods—Mask R-CNN (a two-shot detector), YOLOv3 (a one-shot detector), and SAM (a zero-shot detector)—to extract individual tree crowns across seven dates during the 2018 growing season;

- Compare the detection results from these CNNs against a reference dataset of tree crowns for the same seven dates, segmented using a UAV-OBIA method;

- Analyze the validation results to compare the performance of the three CNN methods with one another and to assess how their accuracy varies over the growing season;

- Contribute insights to the research field on the use of automated object detection methods (specifically CNNs) to improve orchard crop monitoring and management with UAV imagery; and

- Evaluate the implementation of these CNN models in ESRI’s ArcGIS Pro.

2. Object Detection Methods

2.1. Manual Digitization

2.2. Object-Based Image Analysis (OBIA)

2.3. Convolutional Neural Networks (CNNs)

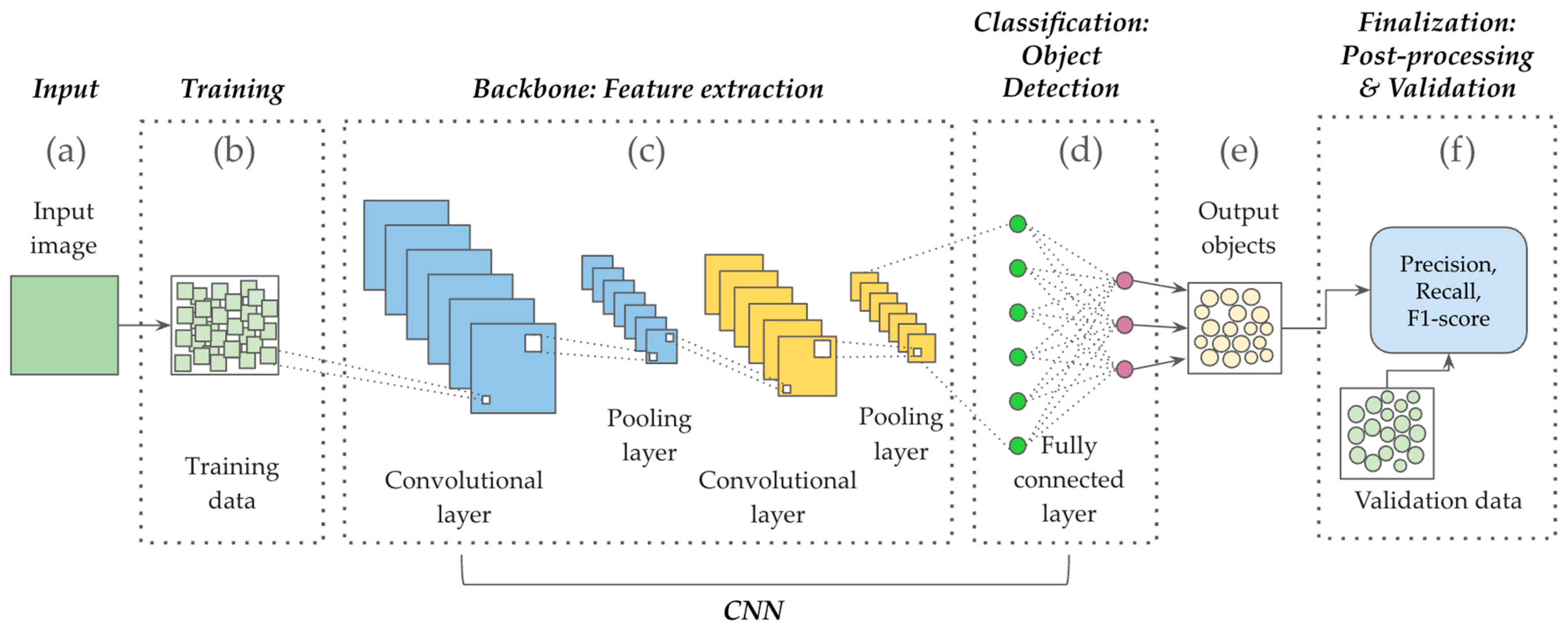

2.3.1. The CNN Workflow

2.3.2. CNN Model Types

2.4. Validation of CNN Models

| Metric | Formula |
|---|---|
| Intersection over union (IoU) or Jaccard index | area(Predicted ∩ Reference)/area(Predicted ∪ Reference) |
| Precision (P) | TP/(TP + FP) |
| Recall (R) | TP/(TP + FN) |
| F1 score (F1) | 2 × (P × R)/(P + R) |

TP, FP, and FN denote true positives, false positives, and false negatives, respectively.
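The metrics in the table above can be illustrated with a short sketch. It computes IoU, counts TP, FP, and FN by matching predicted crowns to reference crowns, and derives precision, recall, and F1. Several assumptions apply. Crowns are simplified to axis-aligned boxes `(xmin, ymin, xmax, ymax)`, whereas Mask R-CNN and SAM output polygons, which would need a polygon geometry library. The greedy one-to-one matching and the 0.5 IoU threshold are common conventions chosen for illustration. All function names are hypothetical. ArcGIS Pro's Compute Accuracy for Object Detection tool performs this step internally, and this sketch is not that tool's implementation.

```python
from typing import List, Tuple

# Hypothetical box format: (xmin, ymin, xmax, ymax) in pixel or map units.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union (Jaccard index) of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))  # overlap width
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))  # overlap height
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match_counts(pred: List[Box], ref: List[Box], threshold: float = 0.5) -> Tuple[int, int, int]:
    """Greedy one-to-one matching by descending IoU; returns (TP, FP, FN)."""
    pairs = sorted(
        ((iou(p, r), i, j) for i, p in enumerate(pred) for j, r in enumerate(ref)),
        reverse=True,
    )
    used_pred, used_ref = set(), set()
    tp = 0
    for score, i, j in pairs:
        if score < threshold:
            break  # pairs are sorted, so all remaining pairs are below threshold
        if i in used_pred or j in used_ref:
            continue  # each prediction and each reference is matched at most once
        used_pred.add(i)
        used_ref.add(j)
        tp += 1
    # Unmatched predictions are false positives; unmatched references are false negatives.
    return tp, len(pred) - tp, len(ref) - tp


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Precision, recall, and F1 as defined in the table; 0.0 when undefined."""
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * (p * r) / (p + r) if p + r else 0.0
    return p, r, f1


if __name__ == "__main__":
    predicted = [(0, 0, 10, 10), (20, 20, 30, 30), (50, 50, 52, 52)]
    reference = [(1, 1, 11, 11), (21, 20, 31, 30), (80, 80, 90, 90)]
    tp, fp, fn = match_counts(predicted, reference)
    print(tp, fp, fn)  # 2 1 1
    print(precision_recall_f1(tp, fp, fn))
```

In this toy example, two predictions overlap reference crowns with IoU above 0.5. One prediction has no counterpart (FP) and one reference crown is missed (FN), so precision, recall, and F1 all equal 2/3.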

3. Methods and Materials

3.1. Study Area

3.2. UAV Imagery Collection and Pre-Processing

3.3. Reference Dataset Creation: UAV-OBIA Method

3.4. CNN Model Runs

3.4.1. Mask R-CNN

3.4.2. YOLOv3

3.4.3. SAM

3.5. Validation

4. Results

| ESRI processing step (minutes) | Mask R-CNN | YOLOv3 | SAM |
|---|---|---|---|
| Export Training Data for Deep Learning | 1.97 | 1.80 | NA |
| Train Deep Learning Model | 249.46 | 134.33 | NA |
| Detect Objects Using Deep Learning | 1.96 | 2.03 | 0.96 |
| Compute Accuracy for Object Detection | 0.07 | 0.07 | 0.07 |
| Total compute time | 253.46 | 138.23 | 1.03 |
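Each total in the compute-time table above is the sum of the per-step times. The short sketch below re-derives those totals from the transcribed values and computes the share of each model's time spent in training. It introduces no new measurements. The dictionary keys and function names are hypothetical labels for the table's rows.

```python
# Per-step times in minutes, transcribed from the compute-time table.
# None marks the steps reported as NA for SAM, which requires no training.
STEP_TIMES = {
    "Mask R-CNN": {"export": 1.97, "train": 249.46, "detect": 1.96, "accuracy": 0.07},
    "YOLOv3": {"export": 1.80, "train": 134.33, "detect": 2.03, "accuracy": 0.07},
    "SAM": {"export": None, "train": None, "detect": 0.96, "accuracy": 0.07},
}


def total_minutes(steps: dict) -> float:
    """Sum the reported steps, skipping NA entries, rounded to the table's precision."""
    return round(sum(t for t in steps.values() if t is not None), 2)


def training_share(steps: dict) -> float:
    """Fraction of total compute time spent in Train Deep Learning Model."""
    train = steps["train"] or 0.0  # NA training counts as zero minutes
    return train / total_minutes(steps)


totals = {model: total_minutes(steps) for model, steps in STEP_TIMES.items()}

if __name__ == "__main__":
    for model, steps in STEP_TIMES.items():
        print(f"{model}: {totals[model]:.2f} min total, "
              f"{training_share(steps):.1%} spent training")
    print(f"Mask R-CNN / YOLOv3 total time: {totals['Mask R-CNN'] / totals['YOLOv3']:.2f}x")
```

With the table's values, training accounts for about 98% of the Mask R-CNN run and about 97% of the YOLOv3 run. The total Mask R-CNN time is roughly 1.8 times that of YOLOv3.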

5. Discussion

5.1. Tree Crown Objects

5.2. Illumination

5.3. OBIA and CNNs for Tree Crown Detection

5.4. Model Training and Transferability

5.5. Ease of CNN Use

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Parameter | Definition (from ArcGIS Pro Tools) | ESRI Tool | Model |
|---|---|---|---|
| Instance masks | When the image chips and tiles are created, additional chips and tiles are created that include a mask showing a labeled target. | Export Training Data for Deep Learning | Mask R-CNN |
| Metadata format | Specifies the format that will be used for the output metadata labels. | Export Training Data for Deep Learning | Mask R-CNN, YOLOv3 |
| Stride distance | The distance to move in the x direction when creating the next image chips. When stride is equal to tile size, there will be no overlap. When stride is equal to half the tile size, there will be 50 percent overlap. | Export Training Data for Deep Learning | Mask R-CNN, YOLOv3 |
| Tile size | The size of the image chips. | Export Training Data for Deep Learning; Detect Objects Using Deep Learning | Mask R-CNN, YOLOv3 |
| Backbone model | Specifies the preconfigured neural network that will be used as the architecture for training the new model. This method is known as transfer learning. | Train Deep Learning Model | Mask R-CNN, YOLOv3 |
| Batch size | The number of training samples that will be processed for training at one time. | Train Deep Learning Model; Detect Objects Using Deep Learning | Mask R-CNN, YOLOv3 |
| Chip size | The size of the image that will be used to train the model. Images will be cropped to the specified chip size. | Train Deep Learning Model | Mask R-CNN, YOLOv3 |
| Epochs | The maximum number of epochs for which the model will be trained. A maximum epoch of 1 means the dataset will be passed forward and backward through the neural network one time. The default value is 20. | Train Deep Learning Model | Mask R-CNN, YOLOv3 |
| Learning rate | The rate at which existing information will be overwritten with newly acquired information throughout the training process. If no value is specified, the optimal learning rate will be extracted from the learning curve during the training process. | Train Deep Learning Model | Mask R-CNN, YOLOv3 |
| Non-maximum suppression (duplicates removed) | Specifies whether non-maximum suppression will be performed in which duplicate objects are identified and duplicate features with lower confidence values are removed. | Detect Objects Using Deep Learning | Mask R-CNN, YOLOv3, SAM |
| Padding | The number of pixels at the border of image tiles from which predictions will be blended for adjacent tiles. To smooth the output while reducing artifacts, increase the value. The maximum value of the padding can be half the tile size value. The argument is available for all model architectures. | Detect Objects Using Deep Learning | Mask R-CNN, YOLOv3, SAM |
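Two of the parameters in the table above lend themselves to a short sketch. The first is the relationship between stride and tile size. The second is the non-maximum suppression (NMS) step, which removes lower-confidence duplicates. The example below is an illustration under stated assumptions, not ESRI's implementation, which the parameter definitions do not describe. The stride rule is applied to one axis at a time, and a trailing partial chip is dropped. NMS uses the standard greedy variant with an IoU cutoff of 0.5, chosen for illustration. Boxes are axis-aligned `(xmin, ymin, xmax, ymax)` tuples. Padding and the blending of predictions across tiles are not modeled. All function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # hypothetical (xmin, ymin, xmax, ymax)


def chip_origins(image_size: int, tile_size: int, stride: int) -> List[int]:
    """Start offsets of full chips along one axis (a trailing partial chip is dropped)."""
    return list(range(0, image_size - tile_size + 1, stride))


def overlap_fraction(tile_size: int, stride: int) -> float:
    """Share of a chip that overlaps the next chip along the same axis."""
    return max(0.0, 1.0 - stride / tile_size)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes: Sequence[Box], scores: Sequence[float],
                        iou_threshold: float = 0.5) -> List[int]:
    """Indices of boxes kept by greedy NMS, in descending confidence order."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Drop a box that overlaps an already kept, higher-confidence box too strongly.
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


if __name__ == "__main__":
    print(chip_origins(1024, 256, 128))  # [0, 128, 256, 384, 512, 640, 768]
    print(overlap_fraction(256, 256), overlap_fraction(256, 128))  # 0.0 0.5
    boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
    print(non_max_suppression(boxes, [0.9, 0.8, 0.7]))  # [0, 2]
```

The output reproduces the definitions in the table. A stride equal to the tile size gives no overlap, and a stride of half the tile size gives 50 percent overlap. Of two heavily overlapping detections of the same crown, only the higher-confidence one is kept.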


| Characteristic | Mask R-CNN | YOLOv3 | SAM |
|---|---|---|---|
| Model type | Two-shot | One-shot | Zero-shot |
| Backbone | ResNet family (e.g., ResNet-18, -50, -101, -152) | Darknet-53 | Vision Transformer (ViT) |
| Output | Polygon | Bounding box | Polygon |
| Training/validation data split | Training and validation required | Training and validation required | Only validation required |
| Input training data | Image chips | Image chips | Text prompts |
| Pre-training data | Can be pre-trained on Common Objects in Context (COCO) or other datasets, depending on the backbone | Pre-trained on Common Objects in Context (COCO) | Segment Anything 1-Billion mask dataset (SA-1B) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kelly, M.; Feirer, S.; Hogan, S.; Lyons, A.; Lin, F.; Jacygrad, E. Mapping Orchard Trees from UAV Imagery Through One Growing Season: A Comparison Between OBIA-Based and Three CNN-Based Object Detection Methods. Drones 2025, 9, 593. https://doi.org/10.3390/drones9090593