Abstract

Addressing the significant challenge of speech enhancement in ultra-low-Signal-to-Noise-Ratio (SNR) scenarios for Unmanned Aerial Vehicle (UAV) voice communication, particularly under edge deployment constraints, this study proposes the Edge-Deployed Band-Split Rotary Position Encoding Transformer (Edge-BS-RoFormer), a novel, lightweight model. While existing deep learning methods face limitations in dynamic UAV noise suppression under such constraints, including insufficient harmonic modeling and high computational complexity, the proposed Edge-BS-RoFormer combines a band-split strategy for fine-grained spectral processing, a dual-dimension Rotary Position Encoding (RoPE) mechanism for joint time–frequency modeling, and FlashAttention to optimize computational efficiency, which together underpin its lightweight design and robust ultra-low-SNR performance. Experiments on our self-constructed DroneNoise-LibriMix (DN-LM) dataset demonstrate Edge-BS-RoFormer’s superiority. Under a −15 dB SNR, it achieves Scale-Invariant Signal-to-Distortion Ratio (SI-SDR) improvements of 2.2 dB over Deep Complex U-Net (DCUNet), 25.0 dB over the Dual-Path Transformer Network (DPTNet), and 2.3 dB over HTDemucs. Correspondingly, the Perceptual Evaluation of Speech Quality (PESQ) is enhanced by 0.11, 0.18, and 0.15, respectively. Crucially, its efficacy for edge deployment is substantiated by a minimal model storage of 8.534 MB, 11.617 GFLOPs (an 89.6% reduction vs. DCUNet), a runtime memory footprint of under 500 MB, a Real-Time Factor (RTF) of 0.325 (latency: 330.830 ms), and a power consumption of 6.536 W on an NVIDIA Jetson AGX Xavier, fulfilling real-time processing demands. This study delivers a validated lightweight solution, exemplified by its minimal computational overhead and real-time edge inference capability, for effective speech enhancement in complex UAV acoustic scenarios, including dynamic noise conditions. 
Furthermore, the open-sourced dataset and model contribute to advancing research and establishing standardized evaluation frameworks in this domain.

1. Introduction

Unmanned Aerial Vehicles (UAVs), commonly known as drones, have been widely adopted in various outdoor sectors such as search and rescue, disaster monitoring, agricultural surveillance, traffic management, power line inspection, and logistics delivery owing to their high efficiency, flexibility, and cost effectiveness [1,2,3,4,5,6]. Furthermore, they exhibit significant potential in indoor intelligent service applications within large venues like airports, hotels, and exhibition centers, particularly for tasks such as navigation guidance, food delivery, and information consultation [7,8]. In these applications, speech data acquired by microphones mounted on UAVs are crucial not only for real-time communication and command reception but also, through speech-to-text technology, for automatic recording and analysis, and even as input for Multimodal Large Language Models (MLLMs) to achieve semantic understanding and complex decision-making [9,10,11]. Therefore, obtaining high-quality speech signals is paramount to fully leveraging the application value of UAVs.

However, UAV operation faces a significant challenge: the intense broadband noise generated by their own components, particularly propellers and motors [12,13]. This self-noise often reaches sound pressure levels as high as 70–90 dB under near-field conditions, so target speech signals are frequently submerged in ultra-low-Signal-to-Noise-Ratio (SNR) environments, often at −15 dB or lower [14,15]. Compounding the difficulty, this noise is highly non-stationary, with its spectral characteristics rapidly varying according to the UAV’s flight status (e.g., hovering, ascending, turning) [16,17]. Moreover, UAV noise typically contains prominent harmonic components [18], which severely overlap in frequency with crucial human speech bands (especially below 2 kHz) [17], making speech enhancement exceptionally challenging.

Traditional signal processing methods, such as spectral subtraction [19] and Wiener filtering [20], while conceptually simple, exhibit limited efficacy in handling such strong, non-stationary, low-SNR UAV noise, often introducing artifacts or residual noise [21]. In recent years, deep-learning-based approaches, particularly those employing Convolutional Neural Networks (CNNs) like the U-Net architecture [17], have achieved significant progress in the broader field of speech enhancement [22]. However, directly applying these methods to the UAV scenario remains challenging. On the one hand, existing models may still struggle to adequately model the complex harmonic structures and dynamic variations inherent in UAV noise. On the other hand, many high-performance models entail substantial computational complexity, rendering them unsuitable for the stringent on-board computational resource and power constraints of UAV platforms. Meanwhile, although advanced architectures from related fields like music source separation (MSS) (e.g., the Band-Split RoPE Transformer (BS-RoFormer) [23] and the Demucs series [24,25]) show promise in handling complex signal mixtures, effectively lightweighting these often computationally intensive models and adapting them for the edge computing limitations of UAVs remain underexplored challenges.

To address these multifaceted challenges, this paper proposes a novel, lightweight model: the Edge-Deployed Band-Split Rotary Position Encoding Transformer (Edge-BS-RoFormer). This model is specifically designed to tackle the problem of speech enhancement in ultra-low-SNR UAV environments while adhering to edge computing constraints. Edge-BS-RoFormer distinctively synergizes a band-split strategy for the fine-grained processing of different frequency components of noise and speech; incorporates a dual-dimension Rotary Position Encoding (RoPE) mechanism [26] to enhance the model’s capability for the joint modeling of complex time–frequency structures (including harmonics) in speech and the dynamic variations of noise; and leverages FlashAttention [27] technology to significantly optimize the efficiency and memory footprint of attention computations, making it suitable for resource-constrained edge platforms.

The main contributions of this paper are as follows:

- The proposal of Edge-BS-RoFormer, a novel lightweight Transformer architecture specifically designed for UAV ultra-low-SNR speech enhancement and optimized for edge deployment, which demonstrates robust performance even under dynamic noise conditions.

- Extensive experimental validation on our self-constructed DroneNoise-LibriMix (DN-LM) dataset. Under a −15 dB SNR, Edge-BS-RoFormer achieves Scale-Invariant Signal-to-Distortion Ratio (SI-SDR) improvements of 2.2 dB, 25.0 dB, and 2.3 dB, and Perceptual Evaluation of Speech Quality (PESQ) enhancements of 0.11, 0.18, and 0.15 compared to Deep Complex U-Net (DCUNet), the Dual-Path Transformer Network (DPTNet), and HTDemucs, respectively. Qualitative analysis also confirms its superior handling of dynamic noise.

- Comprehensive edge deployment validation on an NVIDIA Jetson AGX Xavier, showcasing its practical viability with only 11.617 GFLOPs, 8.534 MB model storage, a sub-500 MB runtime memory footprint, a Real-Time Factor (RTF) of 0.325 (latency: 330.830 ms), and a power consumption of 6.536 W, fulfilling real-time processing demands.

- The construction and open-sourcing of the DroneNoise-LibriMix (DN-LM) dataset and the Edge-BS-RoFormer model, providing valuable resources for advancing research and standardized evaluation in UAV speech enhancement.

2. Related Work

Deep learning methodologies have become the dominant paradigm in the field of speech enhancement [28]. The CHiME-6 Challenge dataset [22] serves as a standardized benchmark, facilitating the development and evaluation of multi-channel speech enhancement algorithms. The rapid advancement of dual-path architectures has allowed models to capture time–frequency features more effectively [29,30].

In the evolution of UAV self-noise suppression methods, spatial filtering techniques based on beamforming [12], while foundational, face challenges due to UAV self-noise directionality and platform mobility, though deep-learning-assisted beamforming shows promise [31]. Blind source separation (BSS) methods [32] offer another approach, but their performance is often limited by strong assumptions about source independence and non-Gaussianity, alongside permutation ambiguity issues, especially in low-SNR UAV contexts. In recent years, the introduction of deep learning frameworks, such as dilated CNNs [33] and Multi-Channel Time–Frequency Spatial Filtering fusion architectures [34], has significantly enhanced processing performance in non-stationary noise environments. Mukhutdinov et al. [17] systematically evaluated the performance of 12 deep learning architectures in UAV speech enhancement tasks. Among these, the DCUNet [35] model, based on a complex U-Net structure, demonstrated significant advantages, although its performance under ultra-low-SNR conditions requires further improvement [17]. Furthermore, time-domain processing models, exemplified by DPTNet [29], provide an alternative approach that avoids spectral information loss. Recent advancements also include sophisticated self-attention mechanisms within Transformers [36,37], low-latency designs crucial for real-time applications [38], and adaptation techniques like lightweight adapters to fine-tune models for specific drone noise characteristics [39].

The rapid development of MSS techniques has significantly influenced research in UAV speech enhancement. The Demucs model series [24,25] achieved breakthroughs in MSS tasks through multi-stage optimization, with its HTDemucs variant further achieving six-track separation. Enhanced Transformer architectures such as the Spectral Temporal Transformer in Transformer [40] and methods employing band-splitting like the Band-Split RNN (BSRNN) [41] improved spectral modeling precision. The BS-RoFormer [23] further enhanced spectral reconstruction accuracy by incorporating RoPE technology [26]. These successful applications in MSS, particularly techniques like band-splitting [41] and advanced positional encodings [23], serve as valuable references for speech enhancement algorithm design in challenging UAV noise scenarios.

The current research in UAV speech enhancement exhibits three critical gaps, lacking a lightweight model design suitable for on-board processing, deployment optimization under edge resource constraints, and robust time–frequency feature extraction for ultra-low-SNR scenarios dominated by dynamic UAV noise. The proposed Edge-BS-RoFormer model in this paper presents a systematic innovative solution addressing these challenges.

3. Proposed Method

In this study, we present Edge-BS-RoFormer, an edge computing framework specifically designed for real-time speech enhancement on UAVs. The Edge-BS-RoFormer system integrates a band-split strategy with a dual-dimension Transformer architecture to achieve efficient UAV noise suppression in computationally constrained environments.

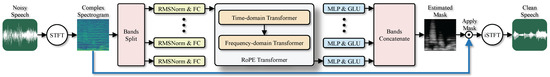

As depicted in Figure 1, the proposed system employs a complex spectral domain processing approach. The input noisy speech waveform is transformed into a time–frequency representation via Short-Time Fourier Transform (STFT), followed by spectral segmentation into non-uniform sub-bands using the band-split strategy for independent processing. The core Transformer module features a dual-dimension processing architecture, comprising a Time Transformer and a Frequency Transformer to capture temporal dependencies and inter-frequency relationships, respectively. Subsequently, Multilayer Perceptron (MLP) and Gated Linear Unit (GLU) operations are applied to each sub-band, with the outputs concatenated along the frequency axis to form complex ideal ratio masks (cIRMs). These masks are element-wise applied to the original spectrum before reconstructing enhanced speech through Inverse Short-Time Fourier Transform (ISTFT). The architecture is specifically optimized for edge computing constraints, comprising a hidden dimension of 48, three stacked Transformer layers, and a multi-head attention mechanism with six parallel attention heads, each operating in a 48-dimensional subspace.

Figure 1.

Architecture of the proposed Edge-Deployed Band-Split Rotary Position Encoding Transformer (Edge-BS-RoFormer) model. The system achieves Unmanned Aerial Vehicle (UAV) noise suppression through a band-split strategy and a dual-dimension Transformer Rotary Position Encoding (RoPE) architecture. The input noisy speech is first converted into a complex spectral representation via Short-Time Fourier Transform (STFT), followed by non-uniform sub-band division using the band-split strategy. Each sub-band undergoes RMSNorm and Linear layer processing before sequentially passing through time-domain and frequency-domain dual-dimension Transformer modules to capture temporal sequence features and band relationships, respectively. Subsequently, Multilayer Perceptron (MLP) and Gated Linear Unit (GLU) components generate complex ideal ratio masks (cIRMs) for each sub-band. The merged sub-bands are multiplied with the original spectrum and reconstructed into enhanced speech through Inverse Short-Time Fourier Transform (ISTFT).

3.1. Speech Signal Processing Fundamentals

The cornerstone of speech enhancement systems lies in the transformation between time-domain and frequency-domain representations and complex spectrum processing. Let the input noisy speech waveform be denoted as $x \in \mathbb{R}^{L}$, where L denotes the number of audio samples. The waveform is then transformed into a time–frequency representation $X \in \mathbb{C}^{T \times F}$ via STFT, where T and F represent the number of frames and frequency bins, respectively.

Edge-BS-RoFormer targets the cIRM [42], which aims to simultaneously recover both the amplitude and phase information of the speech signal. Let $f_{\theta}$ represent a neural network parameterized by learnable parameters $\theta$. The output of this network, denoted as $\hat{M}$, is expressed as $\hat{M} = f_{\theta}(X)$. The enhanced complex spectrogram, denoted as $\hat{Y}$, is obtained through element-wise multiplication (represented by ⊙) of the estimated mask and the input complex spectrogram as follows:

$$\hat{Y} = \hat{M} \odot X \tag{1}$$

Finally, to reconstruct the enhanced time-domain signal $\hat{y}$, the ISTFT is applied to transform the enhanced complex spectrogram back to the time domain. In this study, a dual-domain hybrid supervision strategy is employed to optimize the model. Building upon the original loss function of BS-RoFormer [23], a weighting coefficient $\lambda$ is introduced to precisely modulate the relative contribution of time-domain and frequency-domain losses. The composite loss function, denoted as $\mathcal{L}$, is defined as the sum of the time-domain loss and the weighted frequency-domain loss, as shown below:

$$\mathcal{L} = \mathcal{L}_{\mathrm{time}}(\hat{y}, y) + \lambda \sum_{s \in S} \mathcal{L}_{\mathrm{freq}}\left(\hat{Y}^{(s)}, Y^{(s)}\right)$$

where $y$ is the clean target waveform, $\hat{Y}^{(s)}$ and $Y^{(s)}$ are the STFTs of $\hat{y}$ and $y$ computed with window size $s$, and $S$ represents the set of multi-resolution parameters, encompassing five distinct window sizes: 4096, 2048, 1024, 512, and 256. The L1 loss for the frequency-domain complex spectrum is computed as the sum of the L1 norms of the real and imaginary components, which can be written as follows:

$$\mathcal{L}_{\mathrm{freq}}\left(\hat{Y}^{(s)}, Y^{(s)}\right) = \left\| \Re(\hat{Y}^{(s)}) - \Re(Y^{(s)}) \right\|_1 + \left\| \Im(\hat{Y}^{(s)}) - \Im(Y^{(s)}) \right\|_1$$
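The composite loss described above can be sketched in a few lines of NumPy. This is a minimal illustration rather than the authors' training code: the L1 form of the time-domain term, the hop size of a quarter window, the mean reduction, and the default value of `lam` (the weighting coefficient) are all assumptions made here for the sake of a runnable example.

```python
import numpy as np

def stft(x, n_fft, hop):
    """Complex STFT with a Hann window; trailing samples that do not fill a frame are dropped."""
    window = np.hanning(n_fft)
    n_frames = 1 + (len(x) - n_fft) // hop
    frames = np.stack([x[i * hop: i * hop + n_fft] * window for i in range(n_frames)])
    return np.fft.rfft(frames, axis=-1)  # shape: (frames, n_fft // 2 + 1)

def complex_l1(a, b):
    """L1 distance on complex spectra: real-part term plus imaginary-part term."""
    return np.mean(np.abs(a.real - b.real)) + np.mean(np.abs(a.imag - b.imag))

def composite_loss(estimate, target, lam=1.0, windows=(4096, 2048, 1024, 512, 256)):
    """Time-domain loss plus lam times the multi-resolution frequency-domain loss."""
    time_loss = np.mean(np.abs(estimate - target))  # assumed L1 time-domain term
    freq_loss = sum(
        complex_l1(stft(estimate, w, w // 4), stft(target, w, w // 4))  # assumed hop = w / 4
        for w in windows
    )
    return time_loss + lam * freq_loss

rng = np.random.default_rng(0)
target = rng.standard_normal(16000)                      # one second at 16 kHz
estimate = target + 0.1 * rng.standard_normal(16000)     # slightly corrupted estimate
loss_value = composite_loss(estimate, target)
```

Raising `lam` shifts the optimization pressure toward spectral fidelity at all five resolutions, while `lam = 0` reduces the objective to the waveform term alone.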

3.2. Band-Split Strategy

The band-split strategy is a key component of the Edge-BS-RoFormer system, enabling the model to learn specialized representations across distinct frequency bands while enhancing robustness against cross-band vagueness [23]. This approach has been previously validated in BSRNN [41] to effectively improve the performance of frequency-domain methods.

In our implementation, we adopt a band-split strategy inspired by BSRNN [41], segmenting the input complex spectrogram $X$ along the frequency axis into N non-uniform and non-overlapping sub-bands. Let $X_n \in \mathbb{C}^{T \times F_n}$ denote the input to the n-th sub-band, where $F_n$ represents the number of frequency bins within that sub-band. Collectively, all sub-bands form the complete complex spectrogram $X$, satisfying $\sum_{n=1}^{N} F_n = F$. Each sub-band is initially processed by an RMSNorm layer [43], an efficient normalization technique based on the root mean square of the activations. Subsequently, a linear transformation is applied via a learnable matrix of dimensions $F_n \times D$ and a learnable bias of dimension D, where D denotes the hidden feature dimension. The transformed sub-band output is denoted as $H_n$, with a shape of $T \times D$. All sub-band representations ($H_1, \ldots, H_N$) are stacked along the sub-band axis to form an integrated representation $H$ of shape $T \times N \times D$. This aggregated representation serves as the input to the subsequent Transformer module.
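The band-split front end can be illustrated with a short NumPy sketch. The band layout, weight initialisation, and helper names below are hypothetical. The sketch also assumes that the real and imaginary parts of each complex sub-band are concatenated before the linear layer, so its weight matrices have twice as many rows as there are frequency bins in the band; the paper's exact handling of complex values is not specified in this section.

```python
import numpy as np

def rms_norm(x, gain, eps=1e-8):
    """Scale each feature vector by the inverse of its root mean square."""
    rms = np.sqrt(np.mean(x ** 2, axis=-1, keepdims=True) + eps)
    return x / rms * gain

def band_split(spec, band_widths, weights, biases, gains):
    """spec: complex array (T, F). Returns real features of shape (T, N, D)."""
    assert sum(band_widths) == spec.shape[1], "bands must tile the frequency axis"
    outputs, start = [], 0
    for width, w, b, g in zip(band_widths, weights, biases, gains):
        band = spec[:, start:start + width]                        # (T, F_n), complex
        feats = np.concatenate([band.real, band.imag], axis=-1)    # (T, 2 * F_n), assumed layout
        outputs.append(rms_norm(feats, g) @ w + b)                 # (T, D)
        start += width
    return np.stack(outputs, axis=1)                               # stack on the sub-band axis

rng = np.random.default_rng(0)
T, D = 10, 48                        # D = 48 matches the hidden dimension stated in the paper
band_widths = [2, 2, 4, 8, 17]       # hypothetical non-uniform layout, F = 33
F = sum(band_widths)
spec = rng.standard_normal((T, F)) + 1j * rng.standard_normal((T, F))
weights = [rng.standard_normal((2 * w, D)) * 0.1 for w in band_widths]
biases = [np.zeros(D) for _ in band_widths]
gains = [np.ones(2 * w) for w in band_widths]
stacked = band_split(spec, band_widths, weights, biases, gains)
```

Narrow bands at low frequencies and wide bands at high frequencies give each sub-band its own projection, which is what lets the model specialise per frequency region.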

3.3. Transformer Architecture

The core of Edge-BS-RoFormer’s processing capability lies in its bespoke Transformer architecture, which incorporates several key mechanisms, detailed in the following subsections.

3.3.1. Rotary Position Encoding Mechanism

RoPE is the core mechanism of the Edge-BS-RoFormer system, with its theoretical foundation rooted in the RoFormer architecture [26]. Mathematically, RoPE is applied to the query matrix $Q$ and key matrix $K$ within the self-attention mechanism:

$$\tilde{Q} = \mathrm{RoPE}(Q), \qquad \tilde{K} = \mathrm{RoPE}(K)$$

where $\mathrm{RoPE}(\cdot)$ denotes the encoder that applies rotation matrices to each embedding vector. Compared with conventional absolute positional encoding, RoPE offers advantages by better preserving the norm scale, more effectively encoding relative positions, and maintaining the translation equivariance of self-attention. This characteristic proves particularly crucial for processing variable-length speech sequences.
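The two properties claimed for rotary encoding, norm preservation and dependence of attention scores on relative position only, can be checked numerically. The sketch below follows the standard RoFormer construction (pairwise rotation with geometrically spaced frequencies); the base of 10000 and the function names are assumptions for illustration, not values taken from this paper.

```python
import numpy as np

def rope(x, positions, base=10000.0):
    """Rotate consecutive feature pairs of x (seq, dim) by position-dependent angles."""
    seq_len, dim = x.shape
    assert dim % 2 == 0, "feature dimension must be even"
    inv_freq = base ** (-np.arange(0, dim, 2) / dim)       # (dim / 2,)
    angles = positions[:, None] * inv_freq[None, :]        # (seq, dim / 2)
    cos, sin = np.cos(angles), np.sin(angles)
    x_even, x_odd = x[:, 0::2], x[:, 1::2]
    out = np.empty_like(x)
    out[:, 0::2] = x_even * cos - x_odd * sin              # 2-D rotation of each pair
    out[:, 1::2] = x_even * sin + x_odd * cos
    return out

rng = np.random.default_rng(0)
q = rng.standard_normal((1, 8))
k = rng.standard_normal((1, 8))

def score(m, n):
    """Attention logit between a query at position m and a key at position n."""
    qm = rope(q, np.array([float(m)]))
    kn = rope(k, np.array([float(n)]))
    return float(qm[0] @ kn[0])
```

Because each pair is rotated rather than shifted, `score(3, 1)` and `score(10, 8)` coincide up to floating-point error: only the offset between the two positions matters.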

3.3.2. Time-Domain Transformer Module

In contrast to methods employing stacked multilayer Transformer architectures [23], Edge-BS-RoFormer incorporates only two complementary Transformer modules: a Time Transformer and a Frequency Transformer. This streamlined design reduces computational complexity while preserving the model’s feature extraction capability.

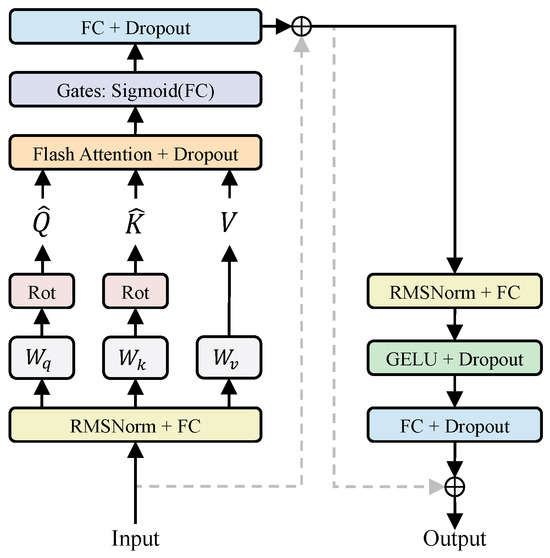

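As illustrated in Figure 2, the Time Transformer processes the input along the temporal dimension to capture long-range dependencies. Initially, the input $H$ with dimensions $B \times T \times N \times D$ is reshaped to $(B \cdot N) \times T \times D$ by merging the batch dimension B and sub-band dimension N. Following RMSNorm normalization, the query, key, and value matrices are projected as $Q = \bar{H} W_Q$, $K = \bar{H} W_K$, and $V = \bar{H} W_V$, where $\bar{H}$ is the normalized input and $W_Q$, $W_K$, and $W_V$ represent learnable weight matrices. After applying RoPE to the queries and keys to obtain $\tilde{Q}$ and $\tilde{K}$, multi-head self-attention [44] is performed with h attention heads:

$$A = \mathrm{Concat}(\mathrm{head}_1, \ldots, \mathrm{head}_h), \qquad \mathrm{head}_i = \mathrm{softmax}\left(\frac{\tilde{Q}_i \tilde{K}_i^{\top}}{\sqrt{d_k}}\right) V_i$$

where $d_k$ is the per-head dimension.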

Figure 2.

Architecture of the Time Transformer layer. This module processes input features via RMSNorm and linear projections to generate query (Q), key (K), and value (V) representations, with RoPE applied to Q and K. The attention output computed by FlashAttention is modulated through a gating mechanism and subsequently integrated with the original input via a residual connection. The resultant features are processed by a feedforward network comprising fully connected, GELU activation, and Dropout layers, forming a secondary residual connection to produce the final output.

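The Time Transformer subsequently incorporates a gating mechanism [45] using a fully connected layer with sigmoid activation to regulate information flow:

$$G = \sigma(\bar{H} W_g + b_g), \qquad A_g = G \odot A$$

where $\bar{H}$ is the normalized input, $A$ is the attention output, and $W_g$ and $b_g$ are learnable parameters. The gated attention output is projected through a fully connected layer with dropout:

$$O = \mathrm{Dropout}(A_g W_o + b_o)$$

A residual connection with the module input $H$ yields the Time Transformer’s attention module output:

$$H' = H + O$$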

The Frequency Transformer processes the features along the frequency band dimension to model inter-band relationships. The input is reshaped from $(B \cdot N) \times T \times D$ to $(B \cdot T) \times N \times D$ by merging batch and temporal dimensions, establishing the sub-band dimension as the sequence axis. Its architecture is the same as that of the Time Transformer, comprising RMSNorm, self-attention, gating mechanisms, and residual connections. After processing by the Frequency Transformer, the output is reshaped back to $B \times T \times N \times D$, producing the Transformer module’s final output $Z$.
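The alternation between the time and frequency views amounts to two axis rearrangements. The NumPy sketch below assumes a `(B, T, N, D)` memory layout for the stacked sub-band features; the actual layout in the authors' code may differ, but the merging logic is the same.

```python
import numpy as np

B, T, N, D = 2, 7, 5, 48   # batch, frames, sub-bands, hidden size (illustrative values)
h = np.arange(B * T * N * D, dtype=np.float64).reshape(B, T, N, D)

# Time Transformer view: merge batch and sub-band axes so that time is the sequence axis.
time_view = h.transpose(0, 2, 1, 3).reshape(B * N, T, D)

# Frequency Transformer view: merge batch and time axes so that sub-band is the sequence axis.
freq_view = h.reshape(B * T, N, D)

# Both views can be undone to recover the original (B, T, N, D) layout.
restored_from_time = time_view.reshape(B, N, T, D).transpose(0, 2, 1, 3)
restored_from_freq = freq_view.reshape(B, T, N, D)
```

Each row of `time_view` is the trajectory of one sub-band over time, while each row of `freq_view` is one frame seen across all sub-bands, so the same attention block can be reused for both axes.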

To enhance computational efficiency, we implement FlashAttention [27] for optimized attention computation, significantly reducing the memory footprint and processing time. This reduction is critical for real-time edge device deployment.
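For readers who want to trace the data flow of the attention module, the following NumPy sketch implements pre-normalization, multi-head self-attention, an element-wise gate, an output projection, and the residual connection. It is a reference-style illustration under several assumptions: the gate uses a sigmoid computed from the normalized input, rotary encoding and dropout are omitted for brevity, and plain softmax attention stands in for FlashAttention (which computes exact attention, so the outputs agree; only speed and memory use differ). All weight names are hypothetical.

```python
import numpy as np

def rms_norm(x, eps=1e-8):
    return x / np.sqrt(np.mean(x ** 2, axis=-1, keepdims=True) + eps)

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)   # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def gated_self_attention(x, p, heads):
    """x: (seq, dim); p: dict of weight arrays. Returns an array of shape (seq, dim)."""
    seq, _ = x.shape
    h = rms_norm(x)
    inner = p["wq"].shape[1]
    d_head = inner // heads

    def split(m):                              # (seq, inner) -> (heads, seq, d_head)
        return m.reshape(seq, heads, d_head).transpose(1, 0, 2)

    q, k, v = split(h @ p["wq"]), split(h @ p["wk"]), split(h @ p["wv"])
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)     # (heads, seq, seq)
    attn = softmax(scores) @ v                               # (heads, seq, d_head)
    merged = attn.transpose(1, 0, 2).reshape(seq, inner)     # concatenate heads
    gated = merged * sigmoid(h @ p["wg"] + p["bg"])          # assumed sigmoid gate
    return x + gated @ p["wo"]                               # residual connection

rng = np.random.default_rng(0)
seq, dim, heads, d_head = 12, 48, 6, 48   # six heads of width 48, as stated in the paper
inner = heads * d_head
params = {name: rng.standard_normal((dim, inner)) * 0.05 for name in ("wq", "wk", "wv", "wg")}
params["bg"] = np.zeros(inner)
params["wo"] = rng.standard_normal((inner, dim)) * 0.05
x = rng.standard_normal((seq, dim))
out = gated_self_attention(x, params, heads)
```

Without positional encoding this block is permutation-equivariant, which is exactly why the rotary encoding is needed to make the model aware of frame and band order.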

3.3.3. Feedforward Module Design

As illustrated in Figure 2, the architecture of the feedforward module can be mathematically described as follows:

$$\mathrm{FFN}(H') = H' + \mathrm{Dropout}\big(\mathrm{GELU}(\mathrm{RMSNorm}(H')\, W_1)\big)\, W_2$$

where $H'$ is the output of the attention module. The input first undergoes normalization by the RMSNorm layer, followed by sequential processing through the first fully connected layer $W_1$, a GELU activation function [46], Dropout regularization, and the second fully connected layer $W_2$. Finally, a residual connection (element-wise addition) integrates the original input with the transformed features. This skip connection design facilitates effective gradient propagation in deep neural networks.
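A compact NumPy sketch of such a pre-normalized feedforward block with a residual connection is given below. The GELU activation (in its common tanh approximation), the 4x expansion width, and the inverted-dropout formulation are assumptions chosen for illustration; the section does not state the hidden width used in the paper.

```python
import numpy as np

def rms_norm(x, eps=1e-8):
    return x / np.sqrt(np.mean(x ** 2, axis=-1, keepdims=True) + eps)

def gelu(x):
    """tanh approximation of the GELU activation."""
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x ** 3)))

def feed_forward(x, w1, b1, w2, b2, drop_prob=0.0, rng=None):
    """Normalize, expand, activate, optionally drop, project back, add the residual."""
    h = gelu(rms_norm(x) @ w1 + b1)
    if drop_prob > 0.0 and rng is not None:          # inverted dropout, training only
        keep = rng.random(h.shape) >= drop_prob
        h = h * keep / (1.0 - drop_prob)
    return x + (h @ w2 + b2)                         # skip connection

rng = np.random.default_rng(0)
seq, dim, hidden = 12, 48, 192                       # hidden = 4 * dim is a hypothetical choice
w1 = rng.standard_normal((dim, hidden)) * 0.05
b1 = np.zeros(hidden)
w2 = rng.standard_normal((hidden, dim)) * 0.05
b2 = np.zeros(dim)
x = rng.standard_normal((seq, dim))
y = feed_forward(x, w1, b1, w2, b2)
```

With the second projection set to zero the block reduces to the identity, which is the property that makes residual stacks easy to optimize from initialization.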

3.4. Multi-Band Mask Estimation

The multi-band mask estimation module generates complex ideal ratio masks for each frequency band. This module takes the output $Z$ from the Transformer module as input. Independent processing pipelines are then applied to each sub-band $Z_n$. As depicted in Figure 1, each sub-band is initially processed by an MLP, followed by modulation through a GLU:

$$\hat{M}_n = \mathrm{GLU}\big(\mathrm{MLP}_n(Z_n)\big)$$

Each sub-band mask $\hat{M}_n$ contains real and imaginary components for modulating the corresponding sub-band’s complex spectrum. Subsequently, all sub-band masks are concatenated along the frequency axis to form the complete cIRM $\hat{M}$. The enhanced speech spectrogram $\hat{Y}$ is obtained through element-wise multiplication of the estimated mask and original complex spectrogram, as shown in Equation (1). The time-domain signal is subsequently reconstructed via ISTFT.
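The per-band mask heads and the final masking step can be sketched as follows. The hidden width, the tanh nonlinearity inside the MLP, and the packing of real and imaginary mask parts into one output vector are assumptions for this illustration; only the overall MLP, GLU, concatenate, multiply sequence comes from the text.

```python
import numpy as np

def glu(x):
    """Gated Linear Unit: first half of the features gated by a sigmoid of the second half."""
    a, b = np.split(x, 2, axis=-1)
    return a * (1.0 / (1.0 + np.exp(-b)))

def estimate_mask(z, band_widths, mlps):
    """z: (T, N, D) Transformer output. Returns a complex mask of shape (T, F)."""
    masks = []
    for n, width in enumerate(band_widths):
        w_hidden, w_out = mlps[n]
        hidden = np.tanh(z[:, n, :] @ w_hidden)              # (T, H), assumed tanh MLP
        out = glu(hidden @ w_out)                            # (T, 2 * F_n): real | imaginary
        masks.append(out[:, :width] + 1j * out[:, width:])   # complex sub-band mask
    return np.concatenate(masks, axis=-1)                    # concatenate along frequency

rng = np.random.default_rng(0)
T, D, H = 10, 48, 96
band_widths = [2, 2, 4, 8, 17]       # hypothetical layout, F = 33
F = sum(band_widths)
z = rng.standard_normal((T, len(band_widths), D))
mlps = [
    (rng.standard_normal((D, H)) * 0.1, rng.standard_normal((H, 4 * w)) * 0.1)
    for w in band_widths
]
spec = rng.standard_normal((T, F)) + 1j * rng.standard_normal((T, F))
mask = estimate_mask(z, band_widths, mlps)
enhanced = mask * spec               # element-wise complex masking of the noisy spectrum
```

Because the mask is complex, each time-frequency bin can be both rescaled and phase-rotated, which a magnitude-only mask cannot do.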

3.5. Datasets

To enable robust evaluation under realistic UAV acoustic conditions, particularly ultra-low-SNR scenarios, the DN-LM dataset was synthesized. Its construction and key characteristics are detailed below.

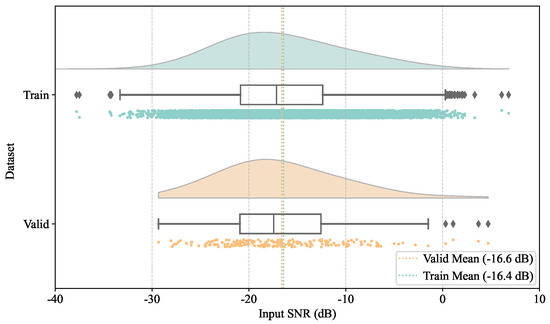

To simulate real-world UAV noise interference in speech communication, we constructed the DN-LM synthetic dataset. Figure 3 illustrates the distribution raincloud plots for the training and validation sets, clearly demonstrating the data distribution characteristics. The data synthesis process primarily involved audio pre-processing, fixed duration alignment, distance attenuation simulation, audio mixing, and SNR calculation. First, speech and UAV noise samples were randomly selected from LibriSpeech [47] and DroneAudioDataset [48], then they were uniformly converted to monophonic audio, resampled to 16 kHz, and normalized. Subsequently, all samples were processed to have a consistent duration of one second (16,000 samples).

Figure 3.

The Signal-to-Noise Ratio (SNR) distribution of the DroneNoise-LibriMix (DN-LM) dataset. This figure illustrates the input SNR distribution characteristics of the training set (top) and validation set (bottom), including density curves, box plots, and scatter plots. Both subsets exhibit similar statistical properties, with mean Signal-to-Noise Ratio (SNR) values of −16.4 dB and −16.5 dB for the training and validation sets, respectively. The data approximately follow a normal distribution, primarily concentrated within the −30 dB to 0 dB range, effectively simulating ultra-low-SNR scenarios under UAV near-field noise interference and providing a statistically consistent data foundation for model training and evaluation.

Following this, sound signal attenuation with distance was simulated based on the free-field sound propagation model [49]. The UAV noise source was positioned at a near-field distance $d_n$ relative to the microphone, while speech signals were simulated as originating from far-field sources with varying propagation distances $d_s$. The attenuation coefficient $\alpha(d)$ was defined as a function of the propagation distance $d$, yielding attenuated signals $s'(t) = \alpha(d_s)\, s(t)$ and $n'(t) = \alpha(d_n)\, n(t)$, respectively. Subsequently, the attenuated speech signal and UAV noise signal were directly summed in the time domain to obtain the noisy speech signal $x(t) = s'(t) + n'(t)$. Finally, the energy ratio between speech and noise signals in the mixed signal was calculated and converted to decibels (dB) to represent the SNR value:

$$\mathrm{SNR} = 10 \log_{10}\left(\frac{\sum_t s'(t)^2}{\sum_t n'(t)^2}\right)$$

This SNR value was recorded in metadata files for subsequent analysis. Through this pipeline, a 2 h synthetic dataset containing paired clean speech and UAV-noise-corrupted speech was constructed and partitioned into training and validation sets at a 9:1 ratio, providing high-quality data support for experimental validation.
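The mixing and SNR bookkeeping can be sketched in NumPy as shown below. The inverse-distance amplitude law (amplitude proportional to 1/d) is an assumption standing in for the paper's attenuation coefficient, chosen because it is the usual free-field pressure model; the distances and signals are synthetic placeholders.

```python
import numpy as np

def mix_with_attenuation(speech, noise, d_speech, d_noise):
    """Attenuate both sources by distance, sum them, and report the resulting SNR in dB."""
    s = speech / d_speech            # assumed inverse-distance amplitude attenuation
    n = noise / d_noise
    mixture = s + n
    snr_db = 10.0 * np.log10(np.sum(s ** 2) / np.sum(n ** 2))
    return mixture, snr_db

rng = np.random.default_rng(0)
speech = rng.standard_normal(16000)  # one second at 16 kHz
noise = speech[::-1].copy()          # same energy as the speech, different waveform

# Equal distances and equal source energy give 0 dB.
_, snr_equal = mix_with_attenuation(speech, noise, d_speech=1.0, d_noise=1.0)

# Speech ten times farther away than the noise source loses 20 dB.
mixture, snr_far = mix_with_attenuation(speech, noise, d_speech=10.0, d_noise=1.0)
```

Under this assumption every doubling of the talker's distance costs about 6 dB of SNR, which illustrates how a far-field talker combined with near-field rotor noise quickly lands in the ultra-low-SNR regime.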

4. Experiments and Results

The efficacy and performance characteristics of the proposed Edge-BS-RoFormer were validated through a series of comprehensive experiments. This section outlines the experimental design, presents comparative analyses against baselines, and includes ablation studies investigating component contributions.

4.1. Experimental Setup

Our empirical evaluation is grounded in a meticulously defined experimental framework. This includes the baseline models chosen for benchmarking, the training protocols applied for fair comparison, and the suite of metrics used for multifaceted performance assessment, all detailed herein.

4.1.1. Baseline Models

To establish performance benchmarks, three state-of-the-art methods were selected: DCUNet [35], DPTNet [29], and HTDemucs [25]. DCUNet employs a complex spectral domain processing approach based on a U-Net architecture, demonstrating notable speech enhancement efficacy in ultra-low-SNR regimes (−30 dB–0 dB). Empirical studies in UAV noise environments [17] demonstrate DCUNet’s superior performance under complex acoustic conditions. DPTNet, which ranked second in comprehensive UAV noise suppression evaluations, utilizes a dual-path Transformer architecture to effectively process both intra-chunk and inter-chunk information via self-attention mechanisms, enabling the robust modeling of temporal dependencies in speech signals contaminated by drone noise. HTDemucs, developed by Meta, implements a hybrid time–frequency domain Transformer architecture featuring a hierarchical Transformer design that integrates time-domain convolution with frequency-domain attention mechanisms, exhibiting effective modeling capabilities for MSS. These baseline selections enable systematic comparison of the proposed model with leading contemporary methods. For fair comparison, all baseline models were trained using the same training steps, learning rates, and early stopping strategies as the proposed model.

4.1.2. Training Protocol

All experiments in this study were conducted on a computational platform equipped with one RTX 2080 Ti GPU, utilizing CUDA 12.4 and PyTorch 2.6.0 + cu124. The implementation leveraged the open-source training framework from Solovyev et al. [50]. The model was trained using the AdamW optimizer with an initial learning rate of combined with an adaptive learning rate scheduling strategy that reduced the learning rate by a factor of 0.95 when the validation set SI-SDR showed no improvement for two consecutive epochs. To mitigate overfitting, a band random masking strategy (p = 0.2) and an early stopping mechanism with a 30-epoch patience threshold were implemented. Training configurations included 200 steps per epoch, a batch size of 12, FP32 precision, and an Exponential Moving Average mechanism with 0.999 momentum to enhance optimization stability. A consistent random seed was employed across all experiments to ensure reproducibility.
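The scheduling logic described above (learning-rate decay by 0.95 after two non-improving epochs, early stopping after 30, and weight averaging with 0.999 momentum) can be expressed as a small framework-independent helper. The class and function names are hypothetical, the initial learning rate used in the usage lines is a placeholder rather than the paper's value, and the patience semantics follow the literal wording of the text, which may differ slightly from a particular library's scheduler.

```python
class PlateauController:
    """Tracks validation SI-SDR; decays the learning rate on short plateaus, stops on long ones."""

    def __init__(self, lr, factor=0.95, lr_patience=2, stop_patience=30):
        self.lr = lr
        self.factor = factor
        self.lr_patience = lr_patience
        self.stop_patience = stop_patience
        self.best = float("-inf")
        self.since_lr_change = 0   # non-improving epochs since the last improvement or decay
        self.since_best = 0        # non-improving epochs since the best score

    def step(self, val_si_sdr):
        """Record one epoch; return True when training should stop."""
        if val_si_sdr > self.best:
            self.best = val_si_sdr
            self.since_lr_change = 0
            self.since_best = 0
            return False
        self.since_lr_change += 1
        self.since_best += 1
        if self.since_lr_change >= self.lr_patience:
            self.lr *= self.factor
            self.since_lr_change = 0
        return self.since_best >= self.stop_patience


def ema_update(ema_weights, weights, momentum=0.999):
    """Exponential moving average of parameters, one scalar per entry for simplicity."""
    return [momentum * e + (1.0 - momentum) * w for e, w in zip(ema_weights, weights)]


controller = PlateauController(lr=1e-3)          # 1e-3 is a placeholder initial rate
for epoch_score in [1.0, 2.0, 1.5, 1.8, 2.5, 2.4]:
    controller.step(epoch_score)
```

In the usage lines, the two non-improving epochs after the score of 2.0 trigger exactly one decay, so the rate ends at 0.95 times its initial value.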

4.1.3. Evaluation Metrics

To comprehensively evaluate the performance of the proposed speech enhancement model, this study employed metrics assessing both speech quality and computational efficiency.

Speech Quality Metrics: Three objective evaluation metrics were adopted:

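- Scale-Invariant Signal-to-Distortion Ratio (SI-SDR) [51] was utilized to assess signal reconstruction quality:

  $$\mathrm{SI\text{-}SDR} = 10 \log_{10} \frac{\| s_{\mathrm{target}} \|^2}{\| \hat{y} - s_{\mathrm{target}} \|^2}, \qquad s_{\mathrm{target}} = \frac{\langle \hat{y}, y \rangle}{\| y \|^2}\, y$$

  where $y$ is the clean reference signal and $\hat{y}$ is the enhanced signal.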

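- Short-Time Objective Intelligibility (STOI) [52] quantified speech intelligibility:

  $$\mathrm{STOI} = \frac{1}{JM} \sum_{j=1}^{J} \sum_{m=1}^{M} d_{j,m}$$

  where $d_{j,m}$ is the correlation between the clean and enhanced short-time temporal envelopes in one-third-octave band $j$ and frame $m$.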

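- Perceptual Evaluation of Speech Quality (PESQ) [53] simulated subjective human evaluation:

  $$\mathrm{PESQ} = 4.5 - 0.1\, D_{\mathrm{sym}} - 0.0309\, D_{\mathrm{asym}}$$

  where $D_{\mathrm{sym}}$ and $D_{\mathrm{asym}}$ are the average symmetric and asymmetric disturbance values.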
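Of the three speech quality metrics listed above, SI-SDR is simple enough to compute directly. The NumPy sketch below follows the standard projection-based definition; the mean removal and the small `eps` guard are common conventions assumed here, not details taken from the paper.

```python
import numpy as np

def si_sdr(estimate, target, eps=1e-12):
    """Scale-invariant signal-to-distortion ratio in dB."""
    estimate = estimate - estimate.mean()        # zero-mean both signals (common convention)
    target = target - target.mean()
    scale = np.dot(estimate, target) / (np.dot(target, target) + eps)
    projection = scale * target                  # part of the estimate explained by the target
    residual = estimate - projection             # everything else counts as distortion
    return 10.0 * np.log10((np.sum(projection ** 2) + eps) / (np.sum(residual ** 2) + eps))

# Worked example: the residual is orthogonal to the target with 1/100 of its energy.
target = np.array([1.0, -1.0, 1.0, -1.0])
residual = 0.1 * np.array([1.0, 1.0, -1.0, -1.0])
estimate = target + residual
value_db = si_sdr(estimate, target)              # energy ratio of 100, i.e. about 20 dB
```

Rescaling the estimate leaves the score unchanged, which is the point of the metric: a model cannot gain or lose decibels merely by changing output gain.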

Computational Efficiency Metrics: The following six indicators were selected for real-world deployment evaluation on the edge platform:

- Floating Point Operations (FLOPs) [54] quantified the total computational volume per inference.

- Model Storage (MB) indicated the disk space required for the model parameters.

- Peak Runtime Memory (MB) was measured during inference to assess on-device RAM requirements.

- Latency (ms) denoted the actual processing time for a standard input audio segment.

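- Real-Time Factor (RTF) [55] quantified processing speed relative to the audio duration:

  $$\mathrm{RTF} = \frac{T_{\mathrm{proc}}}{T_{\mathrm{audio}}}$$

  where $T_{\mathrm{proc}}$ is the processing time and $T_{\mathrm{audio}}$ is the duration of the input audio; an RTF below 1 indicates faster-than-real-time processing.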

- Power Consumption (W) was measured on the edge device during model inference to evaluate energy efficiency.
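As a quick arithmetic check of the Real-Time Factor definition, the snippet below plugs in the latency and RTF reported for the Jetson AGX Xavier in this paper. The function name is hypothetical, and the audio duration is derived from the two reported figures rather than stated by the authors.

```python
def real_time_factor(processing_seconds, audio_seconds):
    """Ratio of processing time to audio duration; values below 1 are faster than real time."""
    if audio_seconds <= 0:
        raise ValueError("audio duration must be positive")
    return processing_seconds / audio_seconds

latency_s = 330.830 / 1000.0                    # reported latency, converted to seconds
reported_rtf = 0.325                            # reported Real-Time Factor
implied_audio_s = latency_s / reported_rtf      # segment length implied by the two figures
is_real_time = reported_rtf < 1.0
```

The two reported numbers are mutually consistent with an input segment of roughly one second, in line with the one-second clips used to build the dataset.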

4.2. Main Results

Following the established protocol, this subsection presents the principal findings from our evaluations. These encompass quantitative comparisons of Edge-BS-RoFormer against baselines, qualitative spectrogram analyses, and ablation study outcomes.

4.2.1. Model Performance Comparison

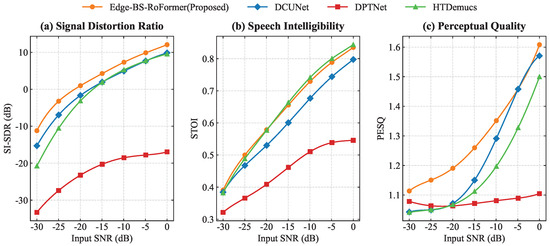

Figure 4 presents a comparative analysis of the proposed Edge-BS-RoFormer model against baseline methods across input SNR levels ranging from −30 dB to 0 dB. Detailed evaluations are conducted through three key metrics: SI-SDR, STOI, and PESQ.

Figure 4.

Performance comparison of various speech enhancement methods across input SNRs ranging from −30 dB to 0 dB: (a) Scale-Invariant Signal-to-Distortion Ratio (SI-SDR); (b) Short-Time Objective Intelligibility (STOI); (c) Perceptual Evaluation of Speech Quality (PESQ). Comparison across multiple metrics shows that Edge-BS-RoFormer (orange) significantly outperforms all baseline methods, namely, Deep Complex U-Net (DCUNet) (blue), the Dual-Path Transformer Network (DPTNet) (red), and HTDemucs (green), in terms of both speech perception and noise suppression effectiveness, demonstrating the robustness of the proposed method in complex acoustic environments.

As shown in Figure 4a, the Edge-BS-RoFormer model achieves the highest SI-SDR values across all input SNR conditions, indicating its significant advantage in signal distortion suppression. Notably, at the representative low-SNR level of −15 dB, Edge-BS-RoFormer improves the SI-SDR by 2.2 dB and 2.3 dB compared to DCUNet and HTDemucs, respectively, and by 25.0 dB compared to DPTNet. This demonstrates Edge-BS-RoFormer’s enhanced capability to recover UAV-noise-masked speech signals while minimizing algorithmic distortion.

Figure 4b illustrates the models’ performance in terms of the STOI metric. Edge-BS-RoFormer and HTDemucs demonstrate comparable performance curves, with both consistently outperforming DCUNet across the entire SNR range. Notably, Edge-BS-RoFormer exhibits superior performance over HTDemucs when the input SNR falls below −20 dB, highlighting its enhanced capability in ultra-low-SNR conditions. Furthermore, all three models significantly surpass DPTNet, which exhibits substantially lower STOI scores across all evaluated SNR conditions, indicating its limited efficacy in preserving speech intelligibility under UAV noise interference.

The PESQ results, serving as a critical indicator of perceptual speech quality, are presented in Figure 4c. Edge-BS-RoFormer achieves the highest PESQ scores across all SNR conditions, confirming its superior auditory perception quality. At the −15 dB SNR, Edge-BS-RoFormer surpasses DCUNet and HTDemucs by 0.11 and 0.15 points in PESQ, respectively, further validating its excellence in enhancing speech intelligibility and naturalness. Notably, DPTNet exhibits consistently lower PESQ values, remaining below 1.1 across virtually all input SNR levels, indicating its significant limitations in preserving perceptual speech quality under UAV noise conditions.

Comprehensive quantitative evaluations confirm that the proposed Edge-BS-RoFormer model significantly outperforms baseline methods across all input SNR levels. Under the critical −15 dB condition, Edge-BS-RoFormer achieves notable performance improvements over all baseline models: a 2.2 dB SI-SDR improvement compared to DCUNet, a 25.0 dB SI-SDR improvement compared to DPTNet, and a 2.3 dB SI-SDR improvement compared to HTDemucs. In terms of perceptual quality, Edge-BS-RoFormer surpasses DCUNet and HTDemucs by 0.11 and 0.15 points in PESQ, respectively. This performance advantage stems from the synergistic interaction between two key architectural innovations, the band-specific splitting strategy and the RoPE mechanism, which collectively enhance the model’s ability to capture crucial speech features under ultra-low-SNR conditions. Experimental evidence establishes the proposed method as a new performance benchmark for UAV speech enhancement tasks.

4.2.2. Time–Frequency Characteristics Analysis

To qualitatively assess spectral processing, this subsection analyzes the time–frequency characteristics of enhanced speech signals using spectrogram comparisons. To provide a comprehensive evaluation, samples from two representative scenarios are analyzed: (1) relatively stationary UAV noise conditions (e.g., during hovering) and (2) dynamic UAV noise conditions (e.g., simulating flight maneuvers with varying propeller speeds).
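The spectrograms discussed in this subsection are log-magnitude time–frequency representations. As a rough sketch of how such a representation may be computed, the following NumPy function applies a Hann-windowed short-time Fourier transform. The function name `log_spectrogram`, the FFT size, and the hop length are illustrative assumptions and are not taken from the experimental setup.

```python
import numpy as np

def log_spectrogram(signal, sample_rate, n_fft=512, hop=128):
    """Return (freqs_hz, times_s, log-magnitude in dB) from a Hann-windowed STFT.

    Assumes len(signal) >= n_fft; n_fft and hop are hypothetical defaults.
    """
    window = np.hanning(n_fft)
    n_frames = 1 + (len(signal) - n_fft) // hop
    frames = np.stack(
        [signal[i * hop : i * hop + n_fft] * window for i in range(n_frames)]
    )
    # One-sided spectrum per frame, transposed to (frequency bins, frames).
    magnitude = np.abs(np.fft.rfft(frames, axis=1)).T
    freqs = np.fft.rfftfreq(n_fft, d=1.0 / sample_rate)
    times = (np.arange(n_frames) * hop + n_fft / 2) / sample_rate
    return freqs, times, 20.0 * np.log10(magnitude + 1e-10)
```

Plotting the returned matrix with frequency on the vertical axis gives the kind of image in which stationary harmonics appear as horizontal stripes.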

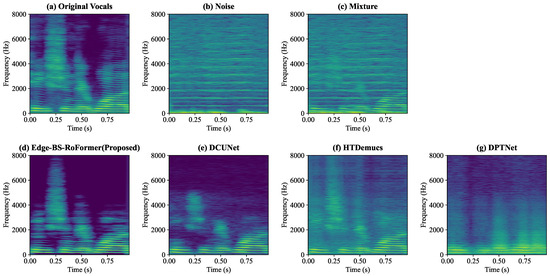

First, we examine the model performance under stationary UAV noise. To visually compare the spectral reconstruction capabilities of the models in this environment, a comparative analysis of spectrograms for clean speech, UAV noise, noisy speech, and speech enhanced by the four models was conducted, as depicted in Figure 5.

Figure 5.

Spectrogram comparison of speech enhancement methods under stationary UAV noise conditions. In these spectrograms, yellow indicates high energy and blue indicates low energy. Subplots display (a) original clean vocals; (b) stationary UAV noise, characterized by consistent harmonic structures; (c) a mixture of clean vocals and stationary UAV noise; (d) speech enhanced by the proposed Edge-BS-RoFormer (EBS-RoF); (e) speech enhanced by DCUNet; (f) speech enhanced by HTDemucs; and (g) speech enhanced by DPTNet. The figure illustrates the comparative efficacy of the models in suppressing periodic noise components while preserving essential speech features.

Figure 5a illustrates the spectrogram of the clean speech signal. Notably, the energy of the speech signal is predominantly concentrated in the low-frequency region (below 2000 Hz), exhibiting distinct harmonic structures manifested as horizontal stripes with higher energy at fundamental frequencies and their multiples. These harmonic components constitute essential elements of speech signals, playing a crucial role in speech intelligibility and timbre perception.

Figure 5b presents the spectrogram of the stationary UAV noise. In contrast to speech signals, UAV noise demonstrates broader energy distribution, spanning the entire frequency spectrum from low to high frequencies. Furthermore, UAV noise exhibits prominent “horizontal stripe” features, primarily attributed to periodic noise generated by relatively constant propeller rotation and associated harmonic components [56].

Figure 5c shows the spectrogram of the mixed speech signal. The horizontal stripe patterns of UAV noise are clearly superimposed onto the speech signal, resulting in severe masking of speech harmonic structures. This pronounced spectral overlap presents a primary challenge for speech enhancement.
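A mixture at a prescribed input SNR, such as the −15 dB condition, can be formed by scaling the noise before adding it to the speech. The sketch below shows one common way of doing so; `mix_at_snr` is a hypothetical helper and is not necessarily the procedure used to construct the DN-LM dataset.

```python
import numpy as np

def mix_at_snr(speech: np.ndarray, noise: np.ndarray, snr_db: float):
    """Scale `noise` so the speech-to-noise power ratio equals `snr_db`, then add.

    Returns the mixture and the gain applied to the noise.
    """
    speech_power = np.mean(speech ** 2)
    noise_power = np.mean(noise ** 2)
    # Solve 10*log10(speech_power / (gain**2 * noise_power)) = snr_db for gain.
    gain = np.sqrt(speech_power / (noise_power * 10.0 ** (snr_db / 10.0)))
    return speech + gain * noise, gain
```

At −15 dB the scaled noise carries roughly 31.6 times the power of the speech, which is consistent with the severe masking visible in the mixed spectrogram.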

Figure 5d demonstrates the spectrogram processed by the Edge-BS-RoFormer model. Compared with the mixed signal, Edge-BS-RoFormer achieves significant suppression of UAV noise stripes while effectively preserving speech harmonic structures. Particularly in the critical 1.25–3 kHz frequency band, Edge-BS-RoFormer recovers noise-obscured speech formant information, which is vital for improving speech intelligibility. These results indicate that Edge-BS-RoFormer effectively extracts speech components from complex time–frequency mixtures while suppressing UAV noise interference under stationary conditions.

Figure 5e–g display the spectrograms processed by the DCUNet, HTDemucs, and DPTNet baseline models, respectively. DCUNet (e) recovers speech information below approximately 5.2 kHz, although with some loss of finer detail relative to the proposed method; above 5.2 kHz, its spectrum is nearly blank and contains no useful high-frequency speech information. HTDemucs (f) restores some high-energy spectral details of the speech, but a noticeable layer of background noise persists across the entire spectrogram, degrading the overall clarity. DPTNet (g) recovers some speech information, primarily below 800 Hz; above this frequency, it tends to replicate low-frequency patterns into higher-frequency regions, thereby introducing spurious high-frequency details rather than genuine speech components.

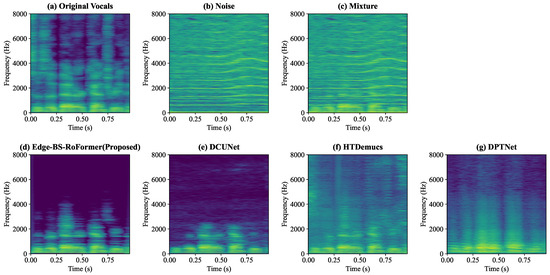

Next, to evaluate the adaptability of the models to more challenging conditions, we analyzed their performance under dynamic UAV noise, simulating scenarios such as flight maneuvers where propeller speeds vary. Figure 6 presents the spectrogram comparisons for this dynamic noise environment.

Figure 6.

Spectrogram comparison of speech enhancement methods under dynamic UAV noise conditions, simulating varying operational states such as flight maneuvers. Colors indicate energy levels (yellow: high, blue: low). Subplots display (a) original clean vocals; (b) dynamic UAV noise, characterized by time-varying harmonic structures (undulating bands); (c) a mixture of clean vocals and dynamic UAV noise; (d) speech enhanced by the proposed EBS-RoF; (e) speech enhanced by DCUNet; (f) speech enhanced by HTDemucs; and (g) speech enhanced by DPTNet. This figure highlights the models’ adaptability to non-stationary noise and their effectiveness in preserving speech integrity amidst changing noise patterns.

Figure 6a shows the clean speech, identical to the previous scenario. Figure 6b displays the spectrogram of dynamic UAV noise. While still broadband, the key characteristic here is that the “horizontal stripe” features, corresponding to the propeller harmonics, now exhibit time-varying frequencies and intensities, appearing as undulating bands. This reflects the changing operational state of the UAV and presents a more complex challenge for noise suppression algorithms. Consequently, in the mixed signal shown in Figure 6c, the speech harmonics are masked by these non-stationary noise patterns.

Figure 6d presents the output of the Edge-BS-RoFormer model under these dynamic conditions. The model effectively suppresses the time-varying noise stripes. Below approximately 4 kHz, Edge-BS-RoFormer restores a significant portion of the original speech details, including harmonic and formant structures. Notably, the proposed method demonstrates remarkable fidelity in preserving crucial speech components within the sub-4 kHz frequency band, which is essential for maintaining speech intelligibility in communication systems. Above 4 kHz, the spectrum is largely devoid of prominent speech components, indicating that, while higher-frequency details are not fully reconstructed, the model does not introduce spurious high-frequency artifacts.

Examining the baseline models in Figure 6, it can be seen that DCUNet (e) achieves good restoration of speech information below 2.5 kHz, with some details in this low-frequency range appearing comparable to, or in some instances slightly clearer than, those of the proposed method. However, above 2.5 kHz, useful speech components are not recovered, and some time-varying artifacts from the dynamic noise persist. HTDemucs (f) and DPTNet (g) exhibit more significant limitations. HTDemucs fails to restore useful information above approximately 2 kHz, and the speech restored below this frequency lacks clarity. It also introduces spurious high-frequency details by replicating lower-frequency patterns, the behavior noted for DPTNet under stationary noise. DPTNet shows some information recovery only below 800 Hz with poorly defined vocal harmonics, and similarly introduces artificial high-frequency content. Both Edge-BS-RoFormer and DCUNet significantly outperform HTDemucs and DPTNet in this dynamic scenario. The notably poorer performance of HTDemucs and DPTNet might suggest that their underlying architectures or loss functions are less suited to such highly non-stationary noise conditions.

Through comparative spectrogram analysis across both stationary and dynamic UAV noise conditions, it can be concluded that Edge-BS-RoFormer demonstrates superior overall noise suppression and time–frequency feature preservation capabilities. This robust performance, particularly its adaptability to dynamic noise, stems from its key architectural innovations: first, the band-split strategy enables refined and potentially adaptive processing tailored to the spectral characteristics within different frequency sub-bands; second, the RoPE mechanism enhances the model’s ability to capture and model the dynamic time–frequency variations of both speech and complex, non-stationary noise.
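The band-split idea referred to above can be illustrated by partitioning the frequency axis of a spectrogram into contiguous sub-bands, each of which is then processed separately. The following sketch is illustrative only: the helper `split_bands` and the band widths are hypothetical and do not reproduce the band configuration of Edge-BS-RoFormer.

```python
import numpy as np

def split_bands(spec: np.ndarray, band_widths: list) -> list:
    """Split a (freq_bins, frames) spectrogram into contiguous sub-bands."""
    if sum(band_widths) != spec.shape[0]:
        raise ValueError("band widths must cover every frequency bin exactly once")
    # Cumulative widths give the bin indices at which to cut the frequency axis.
    edges = np.cumsum(band_widths)[:-1]
    return np.split(spec, edges, axis=0)

# Hypothetical layout: narrow bands at low frequencies, wider bands higher up.
band_widths = [4] * 16 + [16] * 8 + [65]  # 64 + 128 + 65 = 257 bins (n_fft = 512)
spec = np.random.default_rng(0).standard_normal((257, 100))
bands = split_bands(spec, band_widths)
```

Using narrower bands where speech harmonics and propeller harmonics overlap most densely is one plausible design choice, since it gives the model finer resolution in exactly the region where separation is hardest.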

4.2.3. Training Dynamics Analysis

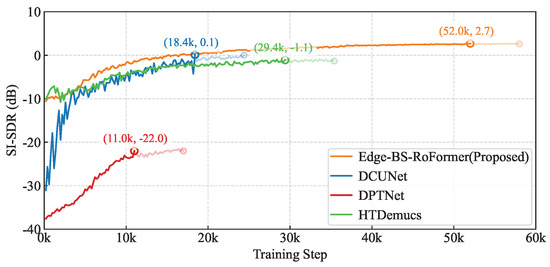

Beyond final metrics, the training process itself offers insights into model learning. This subsection thus examines training dynamics, comparing the convergence behavior and performance evolution of Edge-BS-RoFormer with baseline models.

Figure 7 illustrates the training convergence dynamics of four models, with SI-SDR serving as the early stopping criterion to mitigate overfitting. The experimental results quantitatively demonstrate that, under identical training configurations, the proposed method exhibits improved convergence characteristics. In terms of convergence efficiency, the SI-SDR achieved by the proposed method at 16.6k steps already surpasses the optimal SI-SDR values ultimately achieved by the other models. Regarding the performance ceiling, Edge-BS-RoFormer achieves its optimal performance (SI-SDR of 2.7 dB) at 52k steps, whereas DCUNet, DPTNet, and HTDemucs reach their respective optima at 18.4k, 11.0k, and 29.4k steps, with SI-SDR values of 0.1 dB, −22.0 dB, and −1.1 dB, respectively.
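The early stopping rule mentioned above can be sketched as a small bookkeeping class that tracks the best validation SI-SDR and signals when it has stopped improving. The class name `EarlyStopper`, the `patience` value, and the `min_delta` threshold are assumptions made for illustration; the exact settings of the training protocol are not restated here.

```python
from dataclasses import dataclass

@dataclass
class EarlyStopper:
    """Stop when validation SI-SDR has not improved for `patience` evaluations."""
    patience: int = 3            # hypothetical value
    min_delta: float = 0.0       # minimum gain (dB) that counts as an improvement
    best_score: float = float("-inf")
    best_step: int = -1
    stale: int = 0

    def update(self, step: int, val_si_sdr: float) -> bool:
        """Record one validation result; return True when training should stop."""
        if val_si_sdr > self.best_score + self.min_delta:
            self.best_score, self.best_step, self.stale = val_si_sdr, step, 0
        else:
            self.stale += 1
        return self.stale >= self.patience
```

In a training loop, `update` would be called after each validation pass, and the checkpoint saved at `best_step` would be the one retained for evaluation.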

Figure 7.

Comparison of training convergence for different speech enhancement models. The x-axis shows training iterations; the y-axis shows the validation SI-SDR (dB). Each curve contains two phases: a darker segment preceding the optimal value and a lighter continuation post-optimal value, with circles marking the optimal and early stopping points. The proposed Edge-BS-RoFormer model (orange) demonstrates significantly superior convergence trajectories and performance ceilings compared to DCUNet (blue), DPTNet (red), and HTDemucs (green), validating the advantage of the proposed method under identical training parameters.

The performance advantage of Edge-BS-RoFormer can be attributed to its band-split strategy and RoPE mechanism, specifically designed to address UAV noise characteristics. This structural design potentially allows the model to better capture intricate time–frequency features, thereby establishing a more favorable inductive bias within the optimization space, which holds significant implications for practical applications.

4.3. Edge Deployment Experiments

To evaluate the practical performance of the proposed Edge-BS-RoFormer in resource-constrained edge environments, experiments were conducted on the NVIDIA Jetson AGX Xavier platform, which features an ARM architecture CPU, a Volta architecture GPU, and a power consumption of less than 30 W. The selection of this platform was motivated by the need to simulate the computational constraints of devices such as UAVs, thereby ensuring that the evaluation results are practically relevant.

Table 1 presents comprehensive computational metrics for the evaluated models across multiple deployment-critical parameters. A primary indicator of computational complexity, crucial for edge deployment, is the FLOPs count, which quantifies the total arithmetic operations and offers a direct measure of the model’s computational load per inference. The proposed Edge-BS-RoFormer demonstrates remarkable efficiency, requiring only 11.617 GFLOPs. This represents an 89.6% reduction compared to DCUNet (112.136 GFLOPs) and substantial decreases of 72.2% and 76.0% relative to DPTNet (41.797 GFLOPs) and HTDemucs (48.391 GFLOPs), respectively, underscoring the model’s suitability for resource-constrained environments. Such a significant reduction in computational complexity while maintaining strong performance is primarily attributed to the model’s lightweight Transformer architecture and the efficient time–frequency modeling capabilities of its attention mechanisms, which achieve notable results with a comparatively small computational budget.
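The relative reductions quoted above follow directly from the reported GFLOPs values. The short calculation below makes the arithmetic explicit; the helper name `relative_reduction` is introduced here purely for illustration.

```python
def relative_reduction(baseline: float, proposed: float) -> float:
    """Percentage by which `proposed` is smaller than `baseline`."""
    return 100.0 * (1.0 - proposed / baseline)

# GFLOPs values as reported in the text.
baseline_gflops = {"DCUNet": 112.136, "DPTNet": 41.797, "HTDemucs": 48.391}
edge_bs_roformer_gflops = 11.617

reductions = {
    name: round(relative_reduction(value, edge_bs_roformer_gflops), 1)
    for name, value in baseline_gflops.items()
}
print(reductions)
```

The same helper applies unchanged to the storage and power figures discussed in this section.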

Table 1.

Edge deployment performance comparison.

In terms of model storage requirements, Edge-BS-RoFormer exhibits exceptional parameter efficiency (8.534 MB), a reduction of 95.4% compared to DPTNet (187.316 MB) and 94.7% compared to HTDemucs (160.331 MB). The model requires a runtime memory of 491.544 MB. While this is higher than some baselines, it reflects the memory needs of its attention mechanisms, which are integral to its enhancement capabilities in challenging acoustic environments. The model maintains real-time processing capability with an RTF of 0.325, well below the critical threshold of 1.0 required for practical deployment. Although DCUNet demonstrates lower latency (192.642 ms versus 330.830 ms for Edge-BS-RoFormer), model selection should also weigh the acoustic quality improvements established in the preceding analyses. The power consumption of Edge-BS-RoFormer (6.536 W) remains within acceptable limits for edge deployment and is comparable to that of DPTNet (6.578 W). It is higher than that of DCUNet (5.065 W) and HTDemucs (4.861 W), so the overall balance between power use, computational efficiency, and noise suppression capability is a key consideration for deployment.
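The RTF used above is the ratio of processing time to the duration of the audio processed, so values below 1.0 indicate faster-than-real-time operation. A minimal way to measure it is sketched below. The helper names `real_time_factor` and `measure_rtf`, the warm-up run, and the number of repeats are assumptions, and `enhance` stands in for any callable that maps a waveform to an enhanced waveform.

```python
import time
import numpy as np

def real_time_factor(processing_seconds: float, audio_seconds: float) -> float:
    """RTF = processing time / duration of the processed audio."""
    return processing_seconds / audio_seconds

def measure_rtf(enhance, audio: np.ndarray, sample_rate: int, repeats: int = 5) -> float:
    """Average the wall-clock time of `enhance(audio)` over several runs and return the RTF."""
    enhance(audio)  # warm-up run, excluded from timing
    start = time.perf_counter()
    for _ in range(repeats):
        enhance(audio)
    elapsed = (time.perf_counter() - start) / repeats
    return real_time_factor(elapsed, len(audio) / sample_rate)
```

Under this definition, the reported latency of 330.830 ms and RTF of 0.325 would correspond to an input segment of roughly 1.02 s, assuming both figures come from the same measurement.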

This comprehensive evaluation demonstrates that Edge-BS-RoFormer establishes a notable balance between computational resource utilization and acoustic enhancement performance—a critical consideration for UAV deployment scenarios where both power efficiency and speech intelligibility directly impact operational effectiveness.

4.4. Ablation Study

To quantify the individual contributions of each component to the overall performance, a systematic ablation study was conducted by constructing three model variants while maintaining consistency with the training protocol used in the primary experiments:

- Edge-BS-RoFormer (FlashAttention, RoPE): The proposed model, employing the FlashAttention mechanism and RoPE.

- Edge-BS-RoFormer (FlashAttention, SPE): RoPE is replaced with standard Sine Positional Encoding (SPE) in this variant.

- Edge-BS-RoFormer (LinearAttention, SPE): Based on the SPE configuration, FlashAttention is replaced with standard Linear Attention.

The performance of each variant was evaluated across all input SNR levels, and averaged results are presented in Table 2.

Table 2.

Ablation study comparison.

Experimental results (Table 2) confirm that the Edge-BS-RoFormer (FlashAttention, RoPE) configuration achieves superior acoustic quality (SI-SDR = 2.558 dB, STOI = 0.623, PESQ = 1.229). Notably, all ablated variants exhibit identical theoretical computational complexity (7.480 GFLOPs). This uniformity was anticipated; core architectural parameters (e.g., hidden dimensions, number of layers) remained constant. Mechanisms like FlashAttention primarily optimize memory I/O efficiency—by minimizing data transfers between high-bandwidth memory and on-chip SRAM—rather than altering the fundamental count of arithmetic operations. Similarly, the replacement of SPE with RoPE does not significantly alter the overall FLOP count, as both involve element-wise operations on query and key vectors, which are computationally minor compared to the main matrix multiplications within the Transformer. This controlled FLOPs baseline is crucial for isolating component-specific impacts on practical efficiency metrics such as latency and power consumption.
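The observation that FlashAttention changes memory traffic rather than the arithmetic can be illustrated with a NumPy sketch of the underlying tiling idea. The function `tiled_attention` below processes keys and values block by block with a running softmax and never forms the full score matrix, yet it returns the same output as the naive version. This is a didactic sketch under simplifying assumptions (single head, no masking, arbitrary block size) and is not the fused GPU kernel itself.

```python
import numpy as np

def naive_attention(q, k, v):
    """Reference softmax attention that materializes the full score matrix."""
    scores = q @ k.T / np.sqrt(q.shape[-1])
    weights = np.exp(scores - scores.max(axis=-1, keepdims=True))
    return (weights / weights.sum(axis=-1, keepdims=True)) @ v

def tiled_attention(q, k, v, block=16):
    """Same result, computed over key/value blocks with a running (online) softmax."""
    n, d = q.shape
    out = np.zeros((n, v.shape[-1]))
    running_max = np.full((n, 1), -np.inf)
    running_sum = np.zeros((n, 1))
    for start in range(0, k.shape[0], block):
        scores = q @ k[start:start + block].T / np.sqrt(d)
        new_max = np.maximum(running_max, scores.max(axis=-1, keepdims=True))
        correction = np.exp(running_max - new_max)  # rescales earlier partial results
        p = np.exp(scores - new_max)
        running_sum = running_sum * correction + p.sum(axis=-1, keepdims=True)
        out = out * correction + p @ v[start:start + block]
        running_max = new_max
    return out / running_sum
```

Both functions perform essentially the same matrix multiplications; the tiled version only adds a few rescaling operations per block, which is consistent with the identical FLOPs count reported for the ablated variants.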

Despite identical FLOPs, variations in latency and RTF underscore the significant influence of memory access patterns and implementation-specific optimizations on practical runtime performance. The RoPE mechanism, by contrast, primarily affects enhancement efficacy, yielding a 4.273 dB SI-SDR improvement and a 0.104 STOI increase over the SPE variant. This substantial differential validates RoPE’s enhanced capacity for spectro-temporal feature extraction, critical for processing complex UAV noise.
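To make the role of rotary position encoding concrete, the sketch below rotates consecutive feature pairs of a query or key by angles proportional to the token position. The function name `rope`, the pairing of even and odd features, and the base of 10000 follow a common formulation and are assumptions here rather than details confirmed for Edge-BS-RoFormer.

```python
import numpy as np

def rope(x: np.ndarray, positions: np.ndarray, base: float = 10000.0) -> np.ndarray:
    """Rotate consecutive feature pairs of x (seq, dim) by position-dependent angles.

    Assumes `dim` is even and `x` has a floating-point dtype.
    """
    dim = x.shape[-1]
    inv_freq = base ** (-np.arange(0, dim, 2) / dim)   # one frequency per feature pair
    angles = positions[:, None] * inv_freq[None, :]    # shape: (seq, dim / 2)
    cos, sin = np.cos(angles), np.sin(angles)
    x_even, x_odd = x[..., 0::2], x[..., 1::2]
    out = np.empty_like(x)
    out[..., 0::2] = x_even * cos - x_odd * sin
    out[..., 1::2] = x_even * sin + x_odd * cos
    return out
```

Because each pair undergoes a pure rotation, the inner product between a rotated query and a rotated key depends only on the difference of their positions, which is what lets attention scores encode relative offsets. For a dual-dimension use, the same function could in principle be applied with `positions` indexing time frames in one attention layer and sub-bands in another.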

Comparing attention mechanisms under SPE, FlashAttention provides modest computational advantages over LinearAttention (RTF: 0.159 vs. 0.166; memory: 303.437 MB vs. 321.864 MB) with comparable acoustic quality (PESQ: 1.194 vs. 1.197), suggesting that the choice of attention implementation primarily dictates computational efficiency rather than perceptual quality in this context. The deployment analysis further reveals a trade-off: the RoPE-enabled configuration incurs a higher latency (231.668 ms) than the FlashAttention–SPE variant (176.328 ms). However, this increased latency is associated with its superior speech intelligibility, a paramount concern for UAV communication. Conversely, the FlashAttention–SPE variant offers optimal energy efficiency (4.444 W), representing a 16.0% reduction compared to the RoPE variant (5.288 W).

These findings delineate a clear functional relationship: positional encoding mechanisms, such as RoPE, predominantly govern enhancement performance by improving spectro-temporal feature representation, while the specific implementation of the attention mechanism, exemplified by FlashAttention versus LinearAttention, primarily steers computational efficiency and resource utilization (e.g., latency, memory, power). The sustained performance advantage of the RoPE configuration, particularly in challenging low-SNR conditions, underscores its importance for achieving high-quality speech enhancement. Concurrently, the observed efficiency benefits of FlashAttention highlight its role in optimizing models for practical edge deployment. This ablation study thus quantifies the distinct contributions of these key architectural choices and their respective implications for developing effective and efficient UAV speech enhancement systems.

5. Conclusions

In this study, we introduced the Edge-BS-RoFormer, an edge-deployable band-split RoPE Transformer specifically designed for UAV speech enhancement. This architecture combines a band-split strategy with a dual-dimension RoPE mechanism to achieve efficient UAV noise suppression within computationally constrained platforms. Experimental results demonstrated Edge-BS-RoFormer’s superiority. Under demanding −15 dB SNR conditions, it yielded significant SI-SDR improvements of 2.2 dB over DCUNet, 25.0 dB over DPTNet, and 2.3 dB over HTDemucs, with corresponding PESQ gains of 0.11, 0.18, and 0.15. Qualitative spectrogram analysis further confirmed its enhanced capability in preserving speech integrity under both stationary and dynamic UAV noise. Concurrently, its practical applicability for edge computing was validated on an NVIDIA Jetson AGX Xavier. The model operates with a remarkably low computational load of 11.617 GFLOPs, requires only 8.534 MB of storage, maintains a runtime memory footprint of under 500 MB, achieves a real-time RTF of 0.325 (330.830 ms latency), and consumes 6.536 W of power. These metrics collectively underscore its efficiency and suitability for real-world UAV deployment. Furthermore, the open-sourcing of the DN-LM dataset and the Edge-BS-RoFormer model serves as a valuable contribution to the research community, facilitating standardized benchmarking and further advancements. While Edge-BS-RoFormer has demonstrated significant progress, its generalization to speech captured during extreme UAV maneuvering in real flight scenarios warrants further investigation in future work. Other future research directions include exploring a few-shot transfer learning framework tailored for specific UAV models, quantization-aware cross-compilation deployment strategies for low-power CPU platforms, and end-to-end joint optimization with downstream speech recognition models to further enhance system applicability in complex operational environments.

Author Contributions

Conceptualization, F.L. and M.L.; methodology, F.L. and M.L.; formal analysis, F.L. and H.G.; investigation, W.Z. and J.W.; resources, W.Z.; data curation, L.G.; writing—original draft preparation, F.L.; writing—review and editing, F.L. and M.L.; visualization, M.L. and L.G.; supervision, J.C. and W.Z.; project administration, J.C. and W.Z.; funding acquisition, J.C. and W.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The proposed DN-LM dataset and implementation code are openly available in the repository at https://github.com/LFF8888/Edge-BS-RoFormer-DroneNoise-LibriMix (accessed on 19 May 2025).

Acknowledgments

The authors would like to express sincere gratitude to Qihang Yan and Mengmeng Wang for their meticulous guidance and valuable contributions to this manuscript. Special thanks are extended to Ruyan Zhang for her crucial administrative coordination and key suggestions during the preparation of this research.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Jahani, H.; Khosravi, Y.; Kargar, B.; Ong, K.L.; Arisian, S. Exploring the role of drones and UAVs in logistics and supply chain management: A novel text-based literature review. Int. J. Prod. Res. 2025, 63, 1873–1897.

- Wu, Q.; Su, Y.; Tan, W.; Zhan, R.; Liu, J.; Jiang, L. UAV Path Planning Trends from 2000 to 2024: A Bibliometric Analysis and Visualization. Drones 2025, 9, 128.

- Shuaibu, A.S.; Mahmoud, A.S.; Sheltami, T.R. A Review of Last-Mile Delivery Optimization: Strategies, Technologies, Drone Integration, and Future Trends. Drones 2025, 9, 158.

- Bine, L.M.; Boukerche, A.; Ruiz, L.B.; Loureiro, A.A. Connecting internet of drones and urban computing: Methods, protocols and applications. Comput. Netw. 2024, 239, 110136.

- Molina, A.A.; Huang, Y.; Jiang, Y. A review of unmanned aerial vehicle applications in construction management: 2016–2021. Standards 2023, 3, 95–109.

- Gu, X.; Zhang, G. A survey on UAV-assisted wireless communications: Recent advances and future trends. Comput. Commun. 2023, 208, 44–78.

- Bevins, A.; Kunde, S.; Duncan, B.A. User-designed human-UAV interaction in a social indoor environment. In Proceedings of the 2024 ACM/IEEE International Conference on Human-Robot Interaction, Boulder, CO, USA, 11–15 March 2024; pp. 23–31.

- Marciniak, J.B.; Wiktorzak, B. Automatic Generation of Guidance for Indoor Navigation at Metro Stations. Appl. Sci. 2024, 14, 10252.

- Lyu, M.; Zhao, Y.; Huang, C.; Huang, H. Unmanned aerial vehicles for search and rescue: A survey. Remote Sens. 2023, 15, 3266.

- Choi, S.H.; Kim, Z.C.; Buu, S.J. Speech-Guided Drone Control System Based on Large Language Model. In Proceedings of the 2025 International Conference on Electronics, Information, and Communication (ICEIC), Osaka, Japan, 19–22 January 2025; pp. 1–4.

- Izquierdo, A.; Del Val, L.; Villacorta, J.J.; Zhen, W.; Scherer, S.; Fang, Z. Feasibility of discriminating UAV propellers noise from distress signals to locate people in enclosed environments using MEMS microphone arrays. Sensors 2020, 20, 597.

- Wang, L.; Cavallaro, A. Ear in the sky: Ego-noise reduction for auditory micro aerial vehicles. In Proceedings of the 2016 13th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Colorado Springs, CO, USA, 23–26 August 2016; pp. 152–158.

- Wang, L.; Cavallaro, A. Microphone-array ego-noise reduction algorithms for auditory micro aerial vehicles. IEEE Sens. J. 2017, 17, 2447–2455.

- Wang, L.; Cavallaro, A. Acoustic sensing from a multi-rotor drone. IEEE Sens. J. 2018, 18, 4570–4582.

- Manamperi, W.N.; Abhayapala, T.D.; Samarasinghe, P.N.; Zhang, J.A. Drone audition: Audio signal enhancement from drone embedded microphones using multichannel wiener filtering and gaussian-mixture based post-filtering. Appl. Acoust. 2024, 216, 109818.

- Sinibaldi, G.; Marino, L. Experimental analysis on the noise of propellers for small UAV. Appl. Acoust. 2013, 74, 79–88.

- Mukhutdinov, D.; Alex, A.; Cavallaro, A.; Wang, L. Deep learning models for single-channel speech enhancement on drones. IEEE Access 2023, 11, 22993–23007.

- Tengan, E.; Dietzen, T.; Ruiz, S.; Alkmim, M.; Cardenuto, J.; van Waterschoot, T. Speech enhancement using ego-noise references with a microphone array embedded in an unmanned aerial vehicle. arXiv 2022, arXiv:2211.02690.

- Karam, M.; Khazaal, H.F.; Aglan, H.; Cole, C. Noise removal in speech processing using spectral subtraction. J. Signal Inf. Process. 2014, 5, 32–41.

- Chen, C. Research on Single Channel Speech Noise Reduction Algorithm Based on Signal Processing. In Proceedings of the 2020 5th International Conference on Technologies in Manufacturing, Information and Computing (ICTMIC 2020), San Jose, CA, USA, 9–12 March 2020.

- Lei, Y.; Liqin, T.; Junyi, W. A Study on a Two-Stage UAV Noise Removal Method Based on Deep Residual Neural Networks. Curr. Sci. 2025, 5, 1422–1431.

- Watanabe, S.; Mandel, M.; Barker, J.; Vincent, E.; Arora, A.; Chang, X.; Khudanpur, S.; Manohar, V.; Povey, D.; Raj, D.; et al. CHiME-6 challenge: Tackling multispeaker speech recognition for unsegmented recordings. arXiv 2020, arXiv:2004.09249.

- Lu, W.T.; Wang, J.C.; Kong, Q.; Hung, Y.N. Music Source Separation With Band-Split Rope Transformer. In Proceedings of the ICASSP 2024—2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024; pp. 481–485.

- Défossez, A.; Usunier, N.; Bottou, L.; Bach, F. Music source separation in the waveform domain. arXiv 2019, arXiv:1911.13254.

- Rouard, S.; Massa, F.; Défossez, A. Hybrid transformers for music source separation. In Proceedings of the ICASSP 2023—2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–10 June 2023; pp. 1–5.

- Su, J.; Ahmed, M.; Lu, Y.; Pan, S.; Bo, W.; Liu, Y. Roformer: Enhanced transformer with rotary position embedding. Neurocomputing 2024, 568, 127063.

- Dao, T.; Fu, D.; Ermon, S.; Rudra, A.; Ré, C. Flashattention: Fast and memory-efficient exact attention with io-awareness. Adv. Neural Inf. Process. Syst. 2022, 35, 16344–16359.

- Loizou, P.C. Speech Enhancement: Theory and Practice; CRC Press: Boca Raton, FL, USA, 2007.

- Chen, J.; Mao, Q.; Liu, D. Dual-path transformer network: Direct context-aware modeling for end-to-end monaural speech separation. arXiv 2020, arXiv:2007.13975.

- Luo, Y.; Chen, Z.; Yoshioka, T. Dual-path rnn: Efficient long sequence modeling for time-domain single-channel speech separation. In Proceedings of the ICASSP 2020—2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; pp. 46–50.

- Song, Y.; Kindt, S.; Madhu, N. Drone ego-noise cancellation for improved speech capture using deep convolutional autoencoder assisted multistage beamforming. In Proceedings of the 2022 25th International Conference on Information Fusion (FUSION), Linköping, Sweden, 4–7 July 2022; pp. 1–8.

- Wang, L.; Cavallaro, A. A blind source separation framework for ego-noise reduction on multi-rotor drones. IEEE/ACM Trans. Audio Speech Lang. Process. 2020, 28, 2523–2537.

- Tan, Z.W.; Nguyen, A.H.; Khong, A.W. An efficient dilated convolutional neural network for UAV noise reduction at low input SNR. In Proceedings of the 2019 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), Lanzhou, China, 18–21 November 2019; pp. 1885–1892.

- Wang, L.; Cavallaro, A. Deep learning assisted time-frequency processing for speech enhancement on drones. IEEE Trans. Emerg. Top. Comput. Intell. 2020, 5, 871–881.

- Choi, H.S.; Kim, J.H.; Huh, J.; Kim, A.; Ha, J.W.; Lee, K. Phase-aware speech enhancement with deep complex u-net. In Proceedings of the International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018.

- Pandey, A.; Wang, D. Self-attending RNN for speech enhancement to improve cross-corpus generalization. IEEE/ACM Trans. Audio Speech Lang. Process. 2022, 30, 1374–1385.

- Wang, K.; He, B.; Zhu, W.P. TSTNN: Two-stage transformer based neural network for speech enhancement in the time domain. In Proceedings of the ICASSP 2021—2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; pp. 7098–7102.

- Sun, T.; Bohté, S. DPSNN: Spiking neural network for low-latency streaming speech enhancement. Neuromorphic Comput. Eng. 2024, 4, 044008.

- Chen, X.; Bi, H.; Lai, W.T.; Ma, F. Monaural speech enhancement on drone via Adapter based transfer learning. In Proceedings of the 2024 18th International Workshop on Acoustic Signal Enhancement (IWAENC), Aalborg, Denmark, 9–12 September 2024; pp. 85–89.

- Lu, W.T.; Wang, J.C.; Won, M.; Choi, K.; Song, X. SpecTNT: A time-frequency transformer for music audio. arXiv 2021, arXiv:2110.09127.

- Luo, Y.; Yu, J. Music source separation with band-split RNN. IEEE/ACM Trans. Audio Speech Lang. Process. 2023, 31, 1893–1901.

- Williamson, D.S.; Wang, Y.; Wang, D. Complex ratio masking for monaural speech separation. IEEE/ACM Trans. Audio Speech Lang. Process. 2015, 24, 483–492.

- Zhang, B.; Sennrich, R. Root mean square layer normalization. Adv. Neural Inf. Process. Syst. 2019, 32.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30.

- Dauphin, Y.N.; Fan, A.; Auli, M.; Grangier, D. Language modeling with gated convolutional networks. In Proceedings of the International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; pp. 933–941.

- Hendrycks, D.; Gimpel, K. Gaussian error linear units (gelus). arXiv 2016, arXiv:1606.08415.

- Panayotov, V.; Chen, G.; Povey, D.; Khudanpur, S. Librispeech: An asr corpus based on public domain audio books. In Proceedings of the 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), South Brisbane, Australia, 19–24 April 2015; pp. 5206–5210.

- Al-Emadi, S.; Al-Ali, A.; Mohammad, A.; Al-Ali, A. Audio based drone detection and identification using deep learning. In Proceedings of the 2019 15th International Wireless Communications & Mobile Computing Conference (IWCMC), Tangier, Morocco, 24–28 June 2019; pp. 459–464.

- Beranek, L.L.; Mellow, T. Acoustics: Sound Fields and Transducers; Academic Press: Cambridge, MA, USA, 2012.

- Solovyev, R.; Stempkovskiy, A.; Habruseva, T. Benchmarks and leaderboards for sound demixing tasks. arXiv 2023, arXiv:2305.07489.

- Le Roux, J.; Wisdom, S.; Erdogan, H.; Hershey, J.R. SDR–half-baked or well done? In Proceedings of the ICASSP 2019—2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 626–630.

- Taal, C.H.; Hendriks, R.C.; Heusdens, R.; Jensen, J. A short-time objective intelligibility measure for time-frequency weighted noisy speech. In Proceedings of the 2010 IEEE International Conference on Acoustics, Speech and Signal Processing, Dallas, TX, USA, 14–19 March 2010; pp. 4214–4217.

- Rix, A.W.; Beerends, J.G.; Hollier, M.P.; Hekstra, A.P. Perceptual evaluation of speech quality (PESQ)—A new method for speech quality assessment of telephone networks and codecs. In Proceedings of the 2001 IEEE International Conference on Acoustics, Speech, and Signal Processing. Proceedings (Cat. No. 01CH37221), Salt Lake City, UT, USA, 7–11 May 2001; Volume 2, pp. 749–752.

- Hunger, R. Floating Point Operations in Matrix-Vector Calculus; Technical report; Associate Institute for Signal Processing: Munich, Germany, 2005.

- Defossez, A.; Synnaeve, G.; Adi, Y. Real time speech enhancement in the waveform domain. arXiv 2020, arXiv:2006.12847.

- Schäffer, B.; Pieren, R.; Heutschi, K.; Wunderli, J.M.; Becker, S. Drone noise emission characteristics and noise effects on humans—A systematic review. Int. J. Environ. Res. Public Health 2021, 18, 5940.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).