Abstract

Multi-drone collaborative perception aims to overcome the viewpoint limitations of a single drone. Existing matching and association methods based on visual features and spatial topology rely heavily on detection, which makes it difficult to associate targets under imperfect detection conditions. To address this issue, a Graph-Based Target Association Network (GTA-Net) is proposed, which uses graph matching to associate the key objects and then applies an affine transformation to match both detected and undetected targets. The Key Object Detection Network (KODN) finds the key objects, which are more likely to be True Positives and more important for matching. The Graph Feature Network (GFN) treats the key objects as graph nodes and extracts the graph features. The Association Module uses graph matching to associate the top-k best-matching objects and an iterative affine transformation to associate all objects. The experimental results show that our method achieves a 42% improvement in association accuracy on the public dataset. The ablation experiments under simulated imperfect detection demonstrate robust performance.

1. Introduction

The task of multi-view target association is to associate a target in one view with the same target in another view. With the development of drone swarm technology, multi-view target association is emerging as a crucial field. Despite recent advances [1,2] in multi-perspective perception achieved through deep learning techniques, the emphasis has primarily been on cameras at fixed locations. Compared with fixed cameras, fast-moving drones pose greater challenges for multi-perspective perception. On the one hand, objects appear smaller and more numerous from the perspective of drones, which makes joint perception more difficult [3,4]. On the other hand, the movement of drones breaks the framework in which objects are modeled in a fixed scene from a fixed perspective [5,6,7,8].

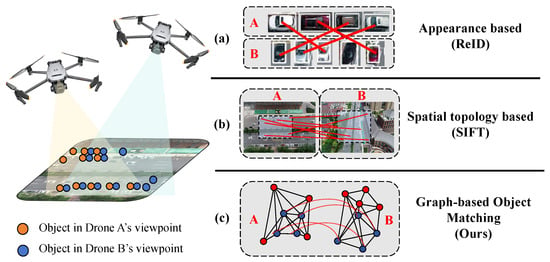

Although previous studies have proposed methods based on appearance re-identification (ReID) and on spatial topology relationships, as shown in Figure 1, these methods still face many challenges. The ReID strategy, which compares the similarity of appearance features, performs well when targets have significant appearance differences [9,10,11], but it struggles under subtle appearance variations and under imperfect detection conditions. The spatial topology strategy [12] can address imperfect detection by matching point features, but incorrectly matched feature points can make the entire association fail. Thus, a new framework that fuses appearance features and spatial topology relationships to enhance robustness is crucial for multi-drone target association.

Figure 1.

The current methods primarily leverage the appearance features of objects (a) and the spatial topology features of objects (b). Our method (c) employs graph structures to exploit both the topological structures and the appearance features of images.

Based on the aforementioned observations, we propose a Graph-Based Target Association Network (GTA-Net), which introduces a graph structure incorporating both appearance features and spatial topology features, as illustrated in Figure 1c. Unlike traditional methods that associate points using handcrafted feature points, GTA-Net employs graph matching for object association. To construct a graph structure from the detection results before graph matching, GTA-Net treats objects as nodes and the line between two objects as an edge. Because not all detected objects are helpful for graph matching, the KODN selects the key objects used to construct the graph. The Association Module uses graph matching to associate the top-k best-matching objects and an iterative affine transformation to associate all objects. Furthermore, the matching result enables joint perception through the complementation of multi-perspective information. The main contributions can be summarized as follows:

Firstly, this paper presents a novel framework, GTA-Net, which transforms the multi-drone target association problem into a graph-matching problem. To our knowledge, it is the first framework to use graph matching in the field of multi-view target association. This framework addresses issues such as target occlusion, false detections, and missed detections through the robustness of the graph feature.

Secondly, the KODN selects the more important targets as the nodes of the graph. The GFN encodes the edge features and the node features to construct the graph feature map. Each graph edge is built from the line connecting two nodes, which represents not only the target appearance but also the spatial relationships in the feature map.

Finally, experiments conducted on the MDMT dataset demonstrate that the proposed approach increases target association accuracy by 42% and decreases the missing rate by 18% relative to existing methods. The ablation experiments under imperfect detection simulation demonstrate robust performance.

2. Related Works

In this section, the key concepts and existing related methods are introduced. Section 2.1 focuses on multi-view remote sensing, which provides the foundation for multi-drone observations. Then, Section 2.2 discusses object detection, a crucial step for identifying targets in drone imagery. Additionally, Section 2.3 and Section 2.4 review re-identification and multi-camera multi-target tracking methods, as they share similarities with our multi-drone target association problem.

2.1. Multi-View Remote Sensing

Compared with normal remote sensing, multi-view remote sensing [13,14] offers a range of advantages and unique perspectives that have significantly enhanced our capabilities in observing and understanding the Earth’s surface. By combining data from multiple views, multi-view remote sensing can improve the accuracy and reliability of observations [15,16,17]. This is particularly useful in applications such as change detection, where the ability to accurately identify and quantify changes over time is crucial. Multi-view remote sensing [18,19,20] can offer higher spatial and temporal resolution than traditional methods. This allows for more detailed and frequent observations, which can be particularly useful in monitoring dynamic processes such as urbanization, deforestation, and climate change.

Multi-drone remote sensing represents an exciting extension of multi-view remote sensing, leveraging the unique capabilities of drones to further enhance our observation and understanding of the Earth’s surface. By deploying multiple drones equipped with various sensors, we can capture data from even more diverse perspectives and improve the accuracy, reliability, and resolution of our observations.

Although multi-drone remote sensing has achieved some results, the collaboration of multiple drones has not yet produced a unified cognition or emergent swarm intelligence.

2.2. Object Detection

Object detection [21,22,23,24] is the basis of drone remote sensing, and it is the first step of MDOM. In the past few years, significant progress has been made in object detection techniques with the development of convolutional neural networks and attention mechanisms [25,26,27,28,29]. The task of object detection is to classify and localize objects using bounding boxes. Two-stage methods like Faster-RCNN [25] and Mask RCNN [30] achieve high accuracy but run slowly. On the other hand, one-stage methods like the YOLO series [31,32,33,34] and SSD [35] are faster. To cope with the numerous small targets seen from the perspective of drones, many studies utilize sparse convolution [36,37] to concentrate attention on the target area.

Although current methods achieve high accuracy, detection imperfections such as missed objects are common in the real world, which causes considerable difficulty when generating a unified cognition for multiple drones. As a result, coping with imperfect detection is important for the unified cognition of multiple drones.

2.3. Re-Identification

One of the current MDOM methods is re-identification [38,39,40,41]. Re-identification can be divided into two-stage and single-stage methods based on the relationship between the target detection part and the target re-identification feature extraction part. The two-stage ReID network separates object detection and object re-identification, treating them as two independent algorithms. Among the two-stage methods, MGN [42] was designed as a slice structure that combines global and local features. CAR [43] was proposed to simplify current complex re-identification structures, which makes it more convenient to analyze the effective parts. AGW [38] utilizes a combination of a non-local attention mechanism, fine-grained feature extraction, and weighted regularization of triplet loss to achieve stable re-identification performance. On the other hand, one-stage methods like FairMOT [44] aim to detect objects and extract high-dimensional features in a single inference process. FairMOT is a single-stage network based on anchor-free boxes, achieving the best balance between algorithm speed and performance.

Although re-identification is a direct approach to MDOM, it cannot distinguish between two similar targets and cannot address the problem of imperfect detection. Any drone that misses an object will cause a missing association pair, which defeats the purpose of having multiple drones collaborate and complement each other's information.

2.4. Multi-Camera Multi-Target Tracking

Multi-camera multi-target tracking (MCMTT) is a similar task to MDOM. The main difficulty relating to this task is that it must establish correlations between images captured from different viewpoints and build a model to fuse multi-view information to improve tracking performance. Zhu et al. [45] introduced an efficient greedy matching algorithm based on likelihood functions to match trajectories. Ref. [46] reconstructed multi-target multi-camera tracking into a combinatorial structure optimization problem and proposed a hierarchical combinatorial model.

Although MCMTT is similar to MDOM, the relative positions of the cameras in MCMTT are fixed, which makes finding correlations between images comparatively easy. Drones, by contrast, move freely. To address this, MIA-Net [12] discovers the topological relationship of targets across drones. However, it still depends on prior knowledge of the first frame, which cannot be obtained in the real world.

3. Method

In this section, the components of GTA-Net are detailed. First, the overall framework, which outlines how the network processes input images to produce target association results, is presented. Then, the individual modules, including the Key Object Detection Network, the Graph Feature Network, and the Match Module, are described in detail.

3.1. Overall Framework

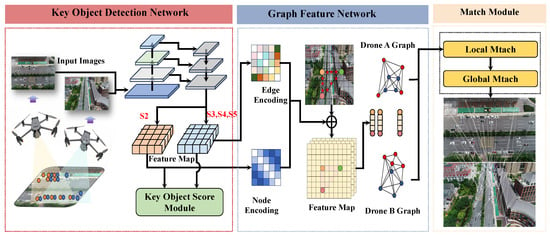

The framework of GTA-Net is shown in Figure 2. The framework takes as input pairs of images of the same region, captured concurrently by multiple freely maneuvering drones. These images exhibit arbitrary viewpoints and temporal discontinuities. The output of the framework is the position of the objects in the two images and the object-matching result. GTA-Net consists of three main modules: the Key Object Detection Network (KOD-Net), the Graph Feature Network (GF-Net), and the Match Module.

Figure 2.

The framework utilizes pairs of images of an identical region as its input. These pairs of images are simultaneously captured by several drones that can move freely. The images showcase viewpoints that are completely arbitrary and possess temporal discontinuities. The framework generates the position of the objects within the two images and the object-matching result as its output. GTA-Net is primarily composed of three key modules: the Key Object Detection Network, the Graph Feature Network, and the Match Module.

Firstly, the KOD-Net is employed to perform object detection across each drone's viewpoint and to extract key objects that facilitate the matching process. Then, to transform the target-matching problem into a graph-matching problem, each key object is treated as a graph node and the graph edge feature is captured by the GF-Net. The Graph Build Head utilizes the appearance information, background information, and relative positional relationships of the key objects simultaneously, and converts the features into the graph edge features. In the Match Module, Local Match and Global Match are used to find the best-matched subset of targets and the matching relationships of all targets, respectively. In Local Match, graph matching finds the best-matching subgraph via the affinity matrix and the Reweighted Random Walk Mechanism (RRWM). In Global Match, an affine transformation estimated from the control points is applied to the images, and all objects in the two images are then matched by distance.

3.2. Key Object Detection Network

The KOD-Net has two tasks: detecting and choosing the key objects. The detector and key object score module address these problems, respectively.

Detector: The KOD-Net can use an arbitrary convolutional detector as the base for selecting the key objects. Without loss of generality, we adopt YOLOv8 as the base detector. More specifically, apart from adding a key object score module and outputting the features of the P2 stage, the original YOLOv8 is unchanged. The input of the KOD-Net is a pair of images captured by two drones. After passing through the Backbone and Neck, the P2-stage features of the two images are mapped to a pair of feature maps, which are input into the Graph Feature Network to build the graph.

Key Object Score Module: As mentioned above, each key object is treated as a graph node. The selection of key objects is highly relevant to the matching accuracy. In matching, defining the key object is a challenging issue. Here, it is assumed that the objects that appear in two views without occlusion are the key objects. Based on the occurrence and occlusion situations in the dataset, we assign a label indicating whether each target in the dataset is important or not. Specifically, we mark key targets as 1 and non-key targets as 0. As a result, we can generate the ground truth key score map G with the slide.

Then, in each detection head, apart from the original classification map, regression map, and confidence head, we add a module for distinguishing whether the target is important or not. This module uses the features of the two images simultaneously. For each image, we obtain the global features of its pair and then concatenate them with its own features for convolution. After that, we can obtain the key score map of the targets:

$$S_{3} = \mathrm{Conv}\left(\mathrm{Concat}\left(F_{3}, \bar{F}'_{3}\right)\right)$$

where $S_{3}$ is the key score map of stage P3, $\bar{F}'_{3}$ is the global feature of its pair in stage P3, and $F_{3}$ is the feature in stage P3. $\mathrm{Conv}$ represents the convolution blocks. Stages P4 and P5 are handled in the same way.

In training, if all the data in the key score map are used for training, due to the imbalance between positive and negative samples, the model will be more inclined towards negative samples. Although some loss functions can alleviate this influence, it is a simpler approach to directly screen out the invalid negative samples without targets through confidence. For each stage in P3, P4, and P5, the loss function of this module is as follows:

where is the high-confidence position, and p is the stage.

From the output of the KOD-Net, targets whose confidence exceeds the confidence threshold and whose key score exceeds the key threshold are selected to form K, the set of key objects.
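The selection rule above can be illustrated with a minimal sketch. The `Detection` container and the `select_key_objects` helper are hypothetical names introduced only for this example, and the default thresholds of 0.5 are taken from the experimental settings reported later in the paper.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """Hypothetical container for one detector output."""
    box: tuple          # (x1, y1, x2, y2) in image pixels
    confidence: float   # detection confidence
    key_score: float    # score produced by the key object score module


def select_key_objects(detections, conf_thr=0.5, key_thr=0.5):
    """Keep detections whose confidence and key score both exceed their thresholds."""
    return [
        d for d in detections
        if d.confidence > conf_thr and d.key_score > key_thr
    ]


# Illustrative values only, not taken from the dataset.
detections = [
    Detection((10, 10, 50, 40), 0.92, 0.81),   # confident and key: kept
    Detection((60, 20, 90, 45), 0.88, 0.30),   # confident but not key: dropped
    Detection((15, 70, 40, 95), 0.42, 0.77),   # key but low confidence: dropped
]
key_objects = select_key_objects(detections)
```

Only detections that pass both thresholds become graph nodes, so a confident detection that is occluded in the paired view is excluded from the graph.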

3.3. Graph Feature Network

The main task of the proposed GF-Net is to extract graph features between objects. The challenge is in encoding a graph structure from the detection result and feature maps. The inputs are the P2 feature maps of the two images. Firstly, several convolution blocks are applied to capture the features of graph edges. Then, each key object is a node of a graph, and the line between each two nodes is an edge of a graph. To obtain the edge feature, we first calculate the centers of these key objects on the feature map. Then, we evenly select n points between the two centers for sampling, and the sampled features are taken as the edge feature of this edge.

There are k key objects from the KOD-Net, and the number of the graph nodes is k. Every two nodes have an edge with a visual feature which is mapped from the feature map by a topological relationship. As a result, the graph contains k nodes and an edge between every pair of nodes. The formula of the edge feature between two key objects p and q can be represented as follows:

where , mean the center of p, and mean the center of q.

where s is the stride of the feature compared with the input feature. is the position transform function from image to feature map. Finally, the edge feature is .
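The edge construction described above can be sketched in a few lines. This is an illustration under stated assumptions, not the authors' implementation: the function names are hypothetical, the feature map is a plain nested list indexed as `feature_map[row][col]`, the sampled points include both endpoints, and the image-to-feature-map transform is taken to be integer division by the stride.

```python
def box_center(box):
    """Center (x, y) of a box given as (x1, y1, x2, y2) in image pixels."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2.0, (y1 + y2) / 2.0)


def to_feature_coords(point, stride, width, height):
    """Map an image-space point to a feature-map cell (col, row), clamped to the map."""
    col = min(max(int(point[0] // stride), 0), width - 1)
    row = min(max(int(point[1] // stride), 0), height - 1)
    return col, row


def sample_edge_feature(feature_map, box_p, box_q, n_points, stride):
    """Concatenate features at n_points evenly spaced locations on the segment p-q."""
    height, width = len(feature_map), len(feature_map[0])
    (xp, yp), (xq, yq) = box_center(box_p), box_center(box_q)
    edge_feature = []
    for i in range(n_points):
        # Assumption: endpoints are included, so t runs from 0 to 1.
        t = i / (n_points - 1) if n_points > 1 else 0.0
        point = (xp + t * (xq - xp), yp + t * (yq - yp))
        col, row = to_feature_coords(point, stride, width, height)
        edge_feature.extend(feature_map[row][col])
    return edge_feature


# Toy 4x4 feature map with 2 channels; each cell stores [row, col].
feature_map = [[[float(r), float(c)] for c in range(4)] for r in range(4)]
box_p = (0, 0, 4, 4)        # center (2, 2)   -> cell (0, 0) at stride 4
box_q = (12, 12, 16, 16)    # center (14, 14) -> cell (3, 3) at stride 4

edge_pq = sample_edge_feature(feature_map, box_p, box_q, n_points=3, stride=4)
edge_pp = sample_edge_feature(feature_map, box_p, box_p, n_points=3, stride=4)
```

When both endpoints are the same object, every sample falls on that object's center, so the edge from a node to itself reduces to a repeated copy of the node's own feature.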

This operation can transform a feature map with a detection result into a graph. Here, every two nodes have an edge feature. Assuming that the size of K is m, the edge feature set can be represented as follows:

$$E = \left\{ e_{pq} \mid p, q \in K \right\}$$

Furthermore, $e_{pq}$ means the feature of the edge between p and q.

After obtaining the edge features, it is necessary to use a loss function to guide the network learning, in order to increase the similarity of edges corresponding to the same targets and reduce the similarity of edges between different targets in photo pairs taken at the same time and place from different angles. In this paper, triplet loss is used to guide the learning of the edge features, and cosine similarity is used as a measure of the similarity between two edges. Additionally, when the key object p is in the image and also appears in its pair image, we call the object in the pair image $p'$. In the same way, the corresponding edge feature in the pair image is called $e_{p'q'}$. The edge feature loss is defined as follows:

$$L_{edge} = \max\left(0,\ \cos\left(e_{pq}, e^{-}\right) - \cos\left(e_{pq}, e_{p'q'}\right) + \alpha\right)$$

where $e_{p'q'}$ is the edge feature with the same starting and ending points as $e_{pq}$, $e^{-}$ is an edge with different starting and ending points from $e_{pq}$, and $\alpha$ is a constant greater than 0. Thus, a feature map with rich representation ability is ultimately obtained, achieving a robust representation of graph features between objects. $\cos(\cdot, \cdot)$ represents cosine similarity:

$$\cos(u, v) = \frac{u \cdot v}{\lVert u \rVert \, \lVert v \rVert}$$
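As a rough illustration of a triplet loss driven by cosine similarity, the sketch below treats an edge in one view as the anchor, the edge with the same endpoints in the paired view as the positive, and an edge with different endpoints as the negative. The function names and the margin value of 0.3 are assumptions made for this example; the paper only states that the margin is a positive constant.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two feature vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def edge_triplet_loss(anchor, positive, negative, margin=0.3):
    """Hinge loss that is zero once the positive is more similar than the negative by `margin`."""
    return max(
        0.0,
        cosine_similarity(anchor, negative)
        - cosine_similarity(anchor, positive)
        + margin,
    )


# Well-separated triplet: positive aligned with the anchor, negative orthogonal.
easy_loss = edge_triplet_loss([1.0, 0.0], [1.0, 0.0], [0.0, 1.0])

# Violating triplet: the negative is aligned with the anchor, the positive is not.
hard_loss = edge_triplet_loss([1.0, 0.0], [0.0, 1.0], [1.0, 0.0])
```

The loss vanishes for triplets that are already separated by the margin, so training effort concentrates on edges that are still confused across views.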

3.4. Match Module

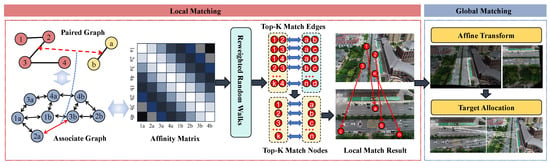

After obtaining the object detection and edge feature sets in the image, this study transformed the multi-drone collaborative object-matching problem into a graph-matching problem by considering the characteristics of input images. In this section, the Local and Global Match are applied to the input graphs and images as shown in Figure 3.

Figure 3.

The workflow of the Match Module. In the Local Match module, the two matching graphs are reshaped as associate graphs, and then the affinity matrix is calculated. Reweighted random walks are used to select the top-k matching edges and nodes. In the Global Match module, affine transforms are applied to two images according to the K matching nodes, and labels are allocated to each target.

Local Match: To establish a matching relationship between two graphs, an affinity matrix is needed to describe the similarity of the edges of the two graphs. Specifically, the key object detection results corresponding to the input images of drones A and B are represented as follows:

$$K^{A} = \left\{ k^{A}_{1}, \dots, k^{A}_{n_A} \right\}, \qquad K^{B} = \left\{ k^{B}_{1}, \dots, k^{B}_{n_B} \right\}$$

where $n_A$ and $n_B$ represent the number of key objects in each view.

Then, the affinity matrix $M$ is constructed from the two graph features, in which the elements can be represented as follows:

$$M_{(i,a),(j,b)} = \cos\left(e^{A}_{ij}, e^{B}_{ab}\right)$$

where $\cos\left(e^{A}_{ij}, e^{B}_{ab}\right)$ denotes the similarity of edges $e^{A}_{ij}$ and $e^{B}_{ab}$.

When $i = j$ and $a = b$, $M_{(i,a),(i,a)} = \cos\left(e^{A}_{ii}, e^{B}_{aa}\right)$, which is the diagonal element of the affinity matrix. The edge feature between a target point and itself can be regarded as the self-feature representation of this point according to the calculation formula. That is, it is a simple high-dimensional feature representation of the target itself, which achieves a simple target re-identification task.
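To make the construction concrete, the following sketch builds an affinity matrix from two small sets of edge features. It is a simplified illustration: `build_affinity` is a hypothetical helper, edge features are stored as `edges[i][j]` for the edge from node i to node j, and the row-major index `i * n_b + a` for a candidate correspondence is one possible convention that a graph-matching library may order differently.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two feature vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def build_affinity(edges_a, edges_b):
    """Affinity between every pair of candidate correspondences (i -> a) and (j -> b)."""
    n_a, n_b = len(edges_a), len(edges_b)
    size = n_a * n_b
    affinity = [[0.0] * size for _ in range(size)]
    for i in range(n_a):
        for a in range(n_b):
            for j in range(n_a):
                for b in range(n_b):
                    # Compare edge (i, j) of view A with edge (a, b) of view B.
                    affinity[i * n_b + a][j * n_b + b] = cosine_similarity(
                        edges_a[i][j], edges_b[a][b]
                    )
    return affinity


# Two key objects per view; edges[i][i] plays the role of the node's own feature.
edges_a = [[[1.0, 0.0], [1.0, 1.0]],
           [[1.0, 1.0], [0.0, 1.0]]]
edges_b = [[[1.0, 0.0], [1.0, 1.0]],
           [[1.0, 1.0], [0.0, 1.0]]]

affinity = build_affinity(edges_a, edges_b)
```

The diagonal entry for a correspondence (i, a) compares the self-edge of node i with the self-edge of node a, so it acts as an appearance score for that candidate pair.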

After the affinity matrix is constructed, it is processed using the classical non-learned RRWM algorithm. The RRWM algorithm achieves noise-robust matching by iteratively updating the random walk and exploiting the confidence of the candidate correspondences. After the RRWM algorithm, the corresponding assignment matrix is obtained. Each element corresponds to the degree of similarity between the corresponding points of drones A and B. The greater the value, the more likely the two points are to be successfully matched. The Hungarian matching algorithm is then employed to solve the assignment matrix, identifying the matching plan that maximizes the overall similarity. Consequently, the matching in the updated assignment matrix is more accurate. Nevertheless, in the multi-drone cooperative detection matching problem, only some targets are visible to both drones, while others are outside one drone's field of view and should not be matched. Graph matching may still assign a corresponding target to each one, leading to false matches. Because the two targets of a false match are not the same object, their appearance characteristics differ significantly. Therefore, the degree of similarity between the two targets is calculated from the affinity matrix, using the edge between a target and itself as the representation of its own features. When the corresponding value is below a specific threshold, the pair is considered a false match and is eliminated, yielding the final local matching results.
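The last two steps, solving the assignment and rejecting false matches, can be sketched as follows. This is an illustration under assumptions: the soft assignment scores are made-up numbers standing in for an RRWM output, an exhaustive search over permutations is swapped in for the Hungarian algorithm (it returns the same optimum but is only practical for a handful of nodes), and the helper names and the 0.5 threshold are hypothetical.

```python
from itertools import permutations


def best_assignment(scores):
    """One-to-one assignment with maximal total score; requires rows <= columns."""
    n_rows, n_cols = len(scores), len(scores[0])
    best_total, best_cols = float("-inf"), None
    for cols in permutations(range(n_cols), n_rows):
        total = sum(scores[r][c] for r, c in enumerate(cols))
        if total > best_total:
            best_total, best_cols = total, cols
    return list(enumerate(best_cols))


def reject_false_matches(matches, self_similarity, threshold):
    """Drop matched pairs whose self-feature similarity is below the threshold."""
    return [(r, c) for r, c in matches if self_similarity[r][c] >= threshold]


# Hypothetical soft assignment between 3 key objects of drone A and 3 of drone B.
soft_assignment = [
    [0.9, 0.1, 0.0],
    [0.2, 0.7, 0.1],
    [0.1, 0.2, 0.4],
]
# Hypothetical self-feature similarities (the diagonal affinity entries).
self_similarity = [
    [0.95, 0.20, 0.10],
    [0.15, 0.88, 0.05],
    [0.10, 0.30, 0.35],
]

matches = best_assignment(soft_assignment)
local_matches = reject_false_matches(matches, self_similarity, threshold=0.5)
```

In this toy case the third pair is assigned because every node must receive a partner, but its low self-similarity marks it as a target that is probably visible from only one drone.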

Global Match: The primary function of the Global Match module is to leverage the high-confidence target association results obtained from the Local Match module to construct a reliable mapping transformation among images captured from multiple drone perspectives. Subsequently, by analyzing the spatial relationships of the mapped objects, the module performs a correlation assessment to achieve global target association.

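To establish a mapping between images from drone A and drone B, the homography transformation matrix $H \in \mathbb{R}^{3 \times 3}$ is defined. Let $(x_A, y_A)$ and $(x_B, y_B)$ denote the coordinates of corresponding target centers in drone A and drone B, respectively. In homogeneous coordinates, the relation is expressed as follows:

$$\begin{bmatrix} x' \\ y' \\ w' \end{bmatrix} = H \begin{bmatrix} x_A \\ y_A \\ 1 \end{bmatrix}, \qquad H = \begin{bmatrix} h_{11} & h_{12} & h_{13} \\ h_{21} & h_{22} & h_{23} \\ h_{31} & h_{32} & h_{33} \end{bmatrix}$$

where $x'$ and $y'$ represent the unnormalized coordinates of the transformed point in the homogeneous coordinate system, while $w'$ is the scale factor that arises from the perspective transformation. Then, the Cartesian coordinates are recovered via

$$x_B = \frac{x'}{w'}, \qquad y_B = \frac{y'}{w'}$$

For each matching point pair $(x_A, y_A)$ and $(x_B, y_B)$, the transformation yields

$$\begin{aligned} x_B \left( h_{31} x_A + h_{32} y_A + h_{33} \right) &= h_{11} x_A + h_{12} y_A + h_{13} \\ y_B \left( h_{31} x_A + h_{32} y_A + h_{33} \right) &= h_{21} x_A + h_{22} y_A + h_{23} \end{aligned}$$

When the number of valid matching point pairs is below four, it indicates that the two drones share only a few common targets within their fields of view, primarily due to the limited overlap between their shared vision areas. Under such conditions, target association is predominantly addressed through re-identification, which can yield acceptable results.

When at least four matching point pairs are available, the minimal requirement for estimating the transformation matrix is satisfied. With at least four non-collinear point pairs, these equations form a homogeneous system $A h = 0$, where $h = \left( h_{11}, h_{12}, \dots, h_{33} \right)^{T}$. The system is solved using Singular Value Decomposition (SVD), selecting the right singular vector corresponding to the smallest singular value. Finally, the resulting $h$ is reshaped into the $3 \times 3$ matrix $H$ and normalized by setting $h_{33} = 1$.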
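The estimation step described above corresponds to the standard direct linear transform, which can be sketched with NumPy as follows. The function names and the sample points are illustrative assumptions; the sketch omits the coordinate normalization that is often added for numerical stability, and it assumes the last entry of the solution is not close to zero.

```python
import numpy as np


def estimate_homography(points_a, points_b):
    """Estimate H (3x3) mapping points_a to points_b from at least four point pairs."""
    if len(points_a) != len(points_b) or len(points_a) < 4:
        raise ValueError("need at least four corresponding point pairs")
    rows = []
    for (xa, ya), (xb, yb) in zip(points_a, points_b):
        # Each pair contributes two linear equations in the nine entries of H.
        rows.append([-xa, -ya, -1.0, 0.0, 0.0, 0.0, xb * xa, xb * ya, xb])
        rows.append([0.0, 0.0, 0.0, -xa, -ya, -1.0, yb * xa, yb * ya, yb])
    a_matrix = np.asarray(rows, dtype=float)
    # The right singular vector of the smallest singular value spans the null space.
    _, _, vt = np.linalg.svd(a_matrix)
    h = vt[-1].reshape(3, 3)
    return h / h[2, 2]  # normalize so that the bottom-right entry equals 1


def project(h, point):
    """Apply H to an (x, y) point and return Cartesian coordinates."""
    x, y, w = h @ np.array([point[0], point[1], 1.0])
    return (x / w, y / w)


# Synthetic check: view B is view A scaled by 2 and shifted by (10, -5).
h_true = np.array([[2.0, 0.0, 10.0],
                   [0.0, 2.0, -5.0],
                   [0.0, 0.0, 1.0]])
points_a = [(0, 0), (100, 0), (100, 50), (0, 50), (40, 20)]
points_b = [(10, -5), (210, -5), (210, 95), (10, 95), (90, 35)]

h_est = estimate_homography(points_a, points_b)
projected = project(h_est, (20, 30))
```

With more than four pairs the system is overdetermined, and the SVD solution is the least-squares estimate in the algebraic sense, which is why additional reliable matches from Local Match help stabilize the mapping.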

The perspective transformation is used to project the high-confidence targets detected in the view of drone A into the view of drone B. Then, the distance between the center point of each projected target and the center of each high-confidence target in the view of drone B is calculated. When the distance is smaller than the preset threshold, the matching between the corresponding points is considered successful, and these points are included in the set of matching target points from A to B. In the same way, the perspective transformation is applied in the opposite direction to obtain the set of matching target points from B to A. The matches that appear in both sets are considered to have a high confidence degree because they have been matched twice. This higher-confidence set of matching points is then used to re-estimate the perspective transformation and produce the final matching results.
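The two-way check can be sketched as follows. The sketch assumes that a forward and a backward transformation are already available as 3×3 nested lists, that each projected target is paired with its nearest neighbor within the distance threshold, and that all helper names, coordinates, and the threshold of 5 pixels are hypothetical.

```python
import math


def apply_homography(h, point):
    """Project an (x, y) point with a 3x3 matrix given as nested lists."""
    x, y = point
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)


def match_by_distance(h, sources, targets, max_distance):
    """Map each source index to its nearest target index closer than max_distance."""
    matches = {}
    for i, source in enumerate(sources):
        projected = apply_homography(h, source)
        best_j, best_d = None, max_distance
        for j, target in enumerate(targets):
            d = math.dist(projected, target)
            if d < best_d:
                best_j, best_d = j, d
        if best_j is not None:
            matches[i] = best_j
    return matches


def mutual_matches(h_ab, h_ba, centers_a, centers_b, max_distance):
    """Keep only the pairs that are matched in both directions."""
    forward = match_by_distance(h_ab, centers_a, centers_b, max_distance)
    backward = match_by_distance(h_ba, centers_b, centers_a, max_distance)
    return sorted((i, j) for i, j in forward.items() if backward.get(j) == i)


# Toy setup: view B is view A shifted by 10 pixels along x.
h_ab = [[1.0, 0.0, 10.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
h_ba = [[1.0, 0.0, -10.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
centers_a = [(0.0, 0.0), (50.0, 50.0), (200.0, 200.0)]
centers_b = [(10.0, 1.0), (61.0, 50.0), (400.0, 400.0)]

confident_pairs = mutual_matches(h_ab, h_ba, centers_a, centers_b, max_distance=5.0)
```

Requiring agreement in both directions discards pairs that are close only because of an inaccurate transformation in one direction, and the surviving pairs can serve as control points for re-estimating the transformation.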

4. Experiment

In this section, the experimental setup, including the model configuration, dataset used, evaluation metrics, and baseline methods for comparison, is described. Then, the experimental results are presented, comparing GTA-Net with other methods to demonstrate its superiority in terms of matching accuracy and efficiency. Additionally, ablation studies are performed to analyze the impact of different components of the model.

4.1. Experiment Implementation

Model and Training: We developed YOLOv8m as the base detector. The input size was . The key score threshold was 0.5, and the confidence score threshold was also 0.5. The graph matching was supported by pygmtools. Two NVIDIA RTX 4090 GPUs were used to train our model for 5000 epochs with a batch size of 32.

Dataset: A multi-drone multi-target dataset is a dataset created when multiple drones collect data simultaneously in the same scene and multiple targets within the scene are annotated. At present, research on multi-drone swarm intelligence is at the forefront of the discipline. To the best of our knowledge, the Multi-Drone Multi-Target Tracking (MDMT) dataset is the first and largest dataset for multi-drone multi-target tracking, and it has greatly promoted the study of multi-drone swarm intelligence. We conducted experiments and validated the effectiveness of the algorithm on this dataset. The dataset consists of 44 pairs of video sequences collected by two drones, with a total of 39,678 frames and a resolution of 1920 × 1080. The scenes cover most areas of daily life, such as squares, city roads, rural roads, intersections, overpasses, and parking lots. The collection time covers different weather conditions at different times of the day.

Metrics: We evaluated the algorithm proposed in this article using two types of evaluation indicators, namely, the detection evaluation indicator under the drone platform and the multi-drone target-matching evaluation indicator. The detection evaluation indicator under the drone platform is consistent with the previous description, and the multi-drone target matching is measured by the Multi-Device Target Association (MDA) score and the Multi-Device Target Misassociation (MDMA) score to evaluate the matching accuracy.

MDA focuses on the performance evaluation of ID association across multiple devices, while MDMA focuses on the proportion of missing ID associations across multiple devices. Let F be the total number of frames in the video sequence, and let k denote the device serial number among all devices. Two devices are selected each time to calculate their association score, and the scores are accumulated over all device pairs that can be selected from the available devices. The formulas for MDA and MDMA are as follows:

where i represents the i-th frame, j and k represent different capture devices, is the number of target ID pairs that are the same as the true value in the multi-device multi-target association algorithm result, is the total number of true values, that is, the number of correctly associated ID pairs, is the number of incorrectly associated multi-device ID pairs in the multi-device multi-target association algorithm result, and is the number of ID pairs that are not associated but appear in the true value in the multi-device multi-target association algorithm result. Based on the above models and evaluation indicators, we designed and implemented many experiments to verify the effectiveness of the algorithm proposed in this paper.

Frames per second (FPS) is the speed metric, and the formula is as follows:

$$\mathrm{FPS} = \frac{N_{f}}{T}$$

where $N_{f}$ is the number of processed frames and $T$ is the processing time in seconds.

Baseline: For the comparative experiments, appearance-based methods and spatial-based methods were selected as baselines. The appearance-based methods use YOLOv8 as the base detector and then use CAR [43], Circle Loss [47], SCAL [48], SONA [49], and ABDNet [50] to match the objects. For the spatial-based methods, SBS [51] and MIA-Net [12] were used to match objects.

4.2. Compared with Other Methods

The visualization target association outcomes of the proposed methodology on the MDMT dataset are depicted in Figure 4.

Figure 4.

The results of multi-drone target association on the MDMT dataset. (a,b) Target association results for daytime, (c) target association results for a dark night.

The figure illustrates the target association performance of the proposed GTA-Net on the dataset under day and night conditions. The results show that GTA-Net effectively detected vehicle objects across multi-drone viewpoints and achieved robust matching for objects within overlapping regions. Furthermore, the method demonstrated the capability to compensate for occluded objects in single viewpoints through its matching mechanism, highlighting its effectiveness in handling challenging scenarios.

Table 1 shows the final performance of multiple algorithms for multi-drone detection and target association on the MDMT dataset.

Table 1.

Target association performance comparison with other methods.

The accuracy of target association can be directly evaluated using MDMA and MDA. The value of MDMA measures matching failures, where lower values indicate better performance, while the MDA value evaluates successful matches, where higher values reflect superior performance. The experimental results show that, after unifying the detection models for drone A and drone B and accounting for detection inaccuracies, the mAP0.5 for their respective viewpoints was 53.19% and 49.90%. Under these conditions, ReID-based methods achieved MDA values between 0.11 and 0.13 and MDMA values between 0.36 and 0.41, with MEB-Net performing best, achieving an MDA of 0.13 and an MDMA of 0.36. The limited matching performance is primarily due to the high feature repetitiveness of ground objects such as vehicles in UAV remote sensing, as well as challenges such as false detections, misdetections, missed detections, and occlusions.

In contrast, methods based on spatial topology relationships showed significant improvements. The best-performing MIA-Net achieved an MDA of 0.26 and an MDMA of 0.33. This was attributed to the stronger specificity of spatial topology relationships in UAV images compared to target features, as well as the ability of these relationships to correct for missed or occluded targets. The proposed GTA-Net further enhanced target correlations through graph-based construction, significantly improving matching accuracy. It achieved an MDA of 0.37, outperforming MEB-Net by 185% and MIA-Net by 42% while reducing the MDMA to 0.27, a 25% improvement over MEB-Net and an 18% improvement over MIA-Net.
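The relative improvements quoted above follow directly from the reported scores, as the short check below shows. The helper name `relative_change` is introduced only for this illustration; the MDA and MDMA values are the ones stated in the text.

```python
def relative_change(new, old):
    """Signed relative change of `new` with respect to `old`."""
    return (new - old) / old


# Scores reported in the text for the best ReID method, the best spatial method, and GTA-Net.
mda = {"MEB-Net": 0.13, "MIA-Net": 0.26, "GTA-Net": 0.37}
mdma = {"MEB-Net": 0.36, "MIA-Net": 0.33, "GTA-Net": 0.27}

# Higher MDA is better, so positive changes are improvements.
mda_vs_meb = relative_change(mda["GTA-Net"], mda["MEB-Net"])
mda_vs_mia = relative_change(mda["GTA-Net"], mda["MIA-Net"])

# Lower MDMA is better, so negative changes are improvements.
mdma_vs_meb = relative_change(mdma["GTA-Net"], mdma["MEB-Net"])
mdma_vs_mia = relative_change(mdma["GTA-Net"], mdma["MIA-Net"])

for name, value in [("MDA vs MEB-Net", mda_vs_meb), ("MDA vs MIA-Net", mda_vs_mia),
                    ("MDMA vs MEB-Net", mdma_vs_meb), ("MDMA vs MIA-Net", mdma_vs_mia)]:
    print(f"{name}: {100 * value:+.0f}%")
```

All four percentages are relative changes with respect to the baseline score, not absolute differences in MDA or MDMA.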

Secondly, from the perspective of model efficiency, ReID-based methods are two-stage approaches that require target detection in each drone viewpoint separately, followed by matching based on the detection results. The ReID process involves traversing targets across all viewpoints, which is computationally intensive due to the typically large number of targets in UAV remote sensing scenarios. As a result, these methods exhibited low operational efficiency, with the FPS ranging between 0.72 and 2.41. Among them, the method based on Circle Loss achieved the highest efficiency, with an FPS of 2.41. In contrast, methods based on spatial topology primarily rely on global relationships between images from different drone viewpoints for matching, thereby reducing the computational cost of local target matching. This approach improved model efficiency, with the SBS method achieving an FPS of 4.81. The proposed GTA-Net uses the Key Object Detection Network (KOD-Net) to filter key targets and the Graph Feature Network (GF-Net) to transform global spatial information into a graph structure. This process reduces the computational load of target matching and yields an FPS of 4.06, slightly below SBS but well above the ReID-based methods. Thus, GTA-Net achieves a favorable balance between matching accuracy and operational efficiency. In real-world applications, target association typically runs alongside single-drone multiple-object tracking: the FPS of the target association represents the cross-view association frequency in one thread, while a single-drone multiple-object tracker follows the objects between associations in another thread.

According to the detection results in Table 1, SBS, MIA-Net, and GTA-Net use the same detector, so their baseline detection accuracy is identical. GTA-Net, however, enhances the basic detection results by leveraging the spatial topology relationships among drones. Through an iterative approach that progresses from low-confidence to high-confidence targets, GTA-Net selectively refines low-confidence targets by utilizing the target association relationships across multiple drones. This effectively supplements low-confidence targets, improving the detection results for each drone compared to its individual detection performance. The overall object detection accuracy is improved by 0.186, demonstrating that GTA-Net can use multi-view perspectives to complement single-perspective information and enhance detection performance.
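The idea of supplementing low-confidence detections with cross-view evidence can be sketched as follows. This is a simplified, hypothetical illustration rather than the authors' procedure: the `Detection` type, the 0.5 confidence threshold, and the 10-pixel support radius are all assumptions, and `projected` stands for target positions from another drone that have already been mapped into the current view.

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class Detection:
    x: float      # box centre in pixels
    y: float
    score: float  # detector confidence in [0, 1]


def refine_with_other_view(dets, projected, keep_thr=0.5, radius=10.0):
    """Keep confident detections, and also keep low-confidence ones
    that lie close to a target projected from another view."""
    kept = []
    for d in dets:
        supported = any(hypot(d.x - px, d.y - py) <= radius
                        for px, py in projected)
        if d.score >= keep_thr or supported:
            kept.append(d)
    return kept


dets = [Detection(10, 10, 0.9), Detection(50, 50, 0.3), Detection(90, 90, 0.2)]
projected = [(52.0, 49.0)]  # one target seen by a second drone (assumed)
refined = refine_with_other_view(dets, projected)
print([d.score for d in refined])  # [0.9, 0.3]
```

The second detection would be discarded by a plain confidence threshold but survives because another view supports it; the third has no such support and is dropped.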

4.3. Ablation Study

This subsection focuses on two aspects. First, we study the effect of imperfect detection on the target association performance of GTA-Net. Second, we evaluate the importance of the encoding modules in the graph structure construction.

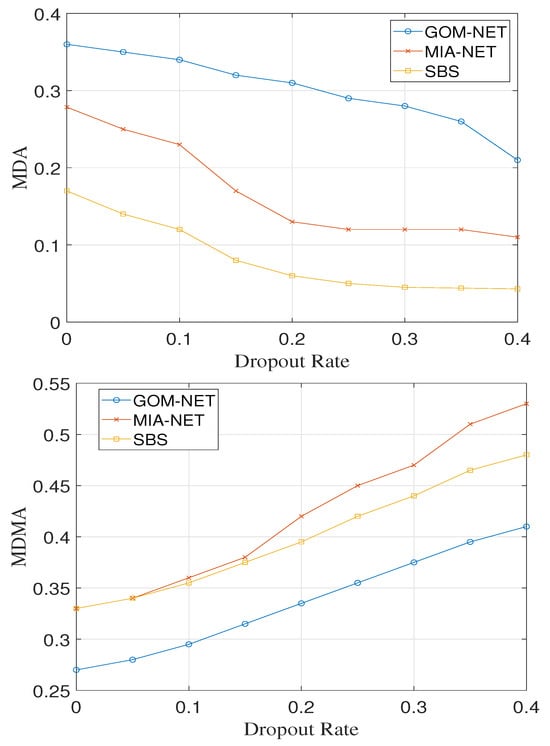

4.3.1. Ablation on Imperfect Detection

This work focuses on target association under imperfect detection conditions, a common challenge in real-world scenarios. To evaluate the robustness of GTA-Net in target association across detection models with varying performance levels, we applied random dropout to the detection outputs of the original model, with the dropout rate ranging from 0 to 0.4. This approach simulates imperfect detection scenarios and allows for quantitative control of detection performance. For comparison, the same dropout procedure was applied to SBS and MIA-Net. The experimental results are presented in Figure 5.
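A minimal sketch of such a dropout procedure is given below. It assumes that each detection is discarded independently with probability equal to the dropout rate, which is one plausible reading of the description above; the function name, the seeding, and the step of 0.1 between rates are illustrative assumptions.

```python
import random


def drop_detections(detections, dropout_rate, seed=None):
    """Independently discard each detection with probability dropout_rate."""
    if not 0.0 <= dropout_rate <= 1.0:
        raise ValueError("dropout_rate must lie in [0, 1]")
    rng = random.Random(seed)  # local generator keeps runs reproducible
    return [d for d in detections if rng.random() >= dropout_rate]


# Placeholder detections; real ones would carry boxes and scores.
detections = list(range(1000))
for rate in (0.0, 0.1, 0.2, 0.3, 0.4):
    kept = drop_detections(detections, rate, seed=0)
    print(f"dropout {rate:.1f}: kept {len(kept)} of {len(detections)}")
```

Fixing the seed lets every compared method receive the same degraded detection set, so differences in association performance are not caused by different random draws.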

Figure 5.

The performance of different methods with imperfect detection.

The experimental results demonstrate that the performance of target association is closely related to the quality of object detection. As the dropout rate increases, the MDA of all algorithms decreases, while the MDMA increases. Compared to the other algorithms, the proposed GTA-Net exhibits significantly better target association performance. In terms of MDA, when the dropout rate increases from 0 to 0.2, GTA-Net declines more slowly, decreasing only from 0.37 to 0.31, a reduction of 16%, whereas the other algorithms decline by 54% on average. This indicates that GTA-Net is robust to imperfect detection. Regarding MDMA, the trends and magnitudes of change are similar across all algorithms, but the MDMA of GTA-Net stays on average 0.05 lower than that of the other methods, demonstrating its effectiveness in controlling misassociations. Therefore, GTA-Net achieves better matching accuracy in the presence of imperfect detection.

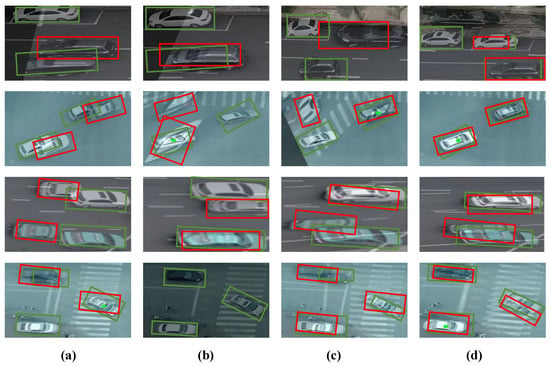

4.3.2. Ablation on Encoding Module

In this study, a graph structure construction model for target association is proposed. We designed node-encoding and edge-encoding modules to transform target information and spatial information from image inputs into node and edge elements in the graph structure. To validate the effectiveness of the two encoding modules, we conducted ablation experiments by activating and deactivating each encoding module on the original model and comparing the target association performance.
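To make the ablation switches concrete, the sketch below builds a small graph from bounding boxes with each encoding turned on or off. It is a hand-crafted stand-in, not the learned encoding modules: using box size as the node feature, using distance and bearing as the edge feature, and substituting a constant placeholder when an encoding is disabled are all assumptions made for illustration.

```python
from math import atan2, hypot


def build_graph(boxes, use_node_encoding=True, use_edge_encoding=True):
    """boxes: list of (cx, cy, w, h). Returns (nodes, edges), where
    edges maps an ordered pair (i, j) to a feature tuple."""
    nodes = []
    for _, _, w, h in boxes:
        # Node feature: box size, or a constant when the encoding is off.
        nodes.append((w, h) if use_node_encoding else (1.0,))

    edges = {}
    for i, (xi, yi, _, _) in enumerate(boxes):
        for j, (xj, yj, _, _) in enumerate(boxes):
            if i == j:
                continue
            dx, dy = xj - xi, yj - yi
            # Edge feature: distance and bearing between box centres.
            edges[(i, j)] = ((hypot(dx, dy), atan2(dy, dx))
                             if use_edge_encoding else (1.0,))
    return nodes, edges


boxes = [(0.0, 0.0, 2.0, 4.0), (3.0, 4.0, 2.0, 2.0)]
nodes, edges = build_graph(boxes)
print(nodes)            # [(2.0, 4.0), (2.0, 2.0)]
print(edges[(0, 1)][0])  # 5.0
```

Calling `build_graph(boxes, use_node_encoding=False)` or `build_graph(boxes, use_edge_encoding=False)` mirrors the single-encoding configurations of the ablation.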

Figure 6 shows the local matching results obtained after affine transformation for the four Graph Feature Network configurations. The full model, with both node and edge encoding, aligns targets most accurately, which matters for applications that require high-fidelity image registration and object alignment.

Figure 6.

Local matching performance comparison of different Graph Feature Networks: (a) GF-Net without node encoding and edge encoding, (b) GF-Net with node encoding only, (c) GF-Net with edge encoding only, (d) GF-Net in our method.
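To make the affine-transformation step mentioned above concrete, the following sketch fits an affine map from three matched key objects and uses it to project a further point into the other view. It shows a single fit only and is not the authors' iterative procedure; the function names and the example coordinates are assumptions chosen for illustration.

```python
def det3(m):
    """Determinant of a 3x3 matrix given as nested sequences."""
    (a, b, c), (d, e, f), (g, h, i) = m
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)


def solve3(m, rhs):
    """Solve a 3x3 linear system with Cramer's rule."""
    d = det3(m)
    if abs(d) < 1e-12:
        raise ValueError("matched points are collinear; affine map undefined")
    solution = []
    for col in range(3):
        mc = [list(row) for row in m]
        for r in range(3):
            mc[r][col] = rhs[r]
        solution.append(det3(mc) / d)
    return solution


def fit_affine(src, dst):
    """Affine parameters (a, b, c, d, e, f) from exactly three matched
    pairs, such that x' = a*x + b*y + c and y' = d*x + e*y + f."""
    m = [[x, y, 1.0] for x, y in src]
    a, b, c = solve3(m, [x for x, _ in dst])
    d, e, f = solve3(m, [y for _, y in dst])
    return (a, b, c, d, e, f)


def apply_affine(params, point):
    a, b, c, d, e, f = params
    x, y = point
    return (a * x + b * y + c, d * x + e * y + f)


# Three matched key objects (assumed coordinates): view A -> view B.
src = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
dst = [(2.0, 3.0), (4.0, 3.0), (2.0, 5.0)]
params = fit_affine(src, dst)
print(apply_affine(params, (1.0, 1.0)))  # (4.0, 5.0)
```

Once the map is known, targets that were detected in only one view can be projected into the other and compared with nearby candidates there.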

The experiment compared the matching performance of different Graph Feature Network configurations, using MDMA (lower is better) and MDA (higher is better) as evaluation metrics. The configurations varied the use of node encoding, the use of edge encoding, and the stage at which encoding was applied. The best performance was achieved when both node and edge encoding were used: the MDMA decreased from 0.35 to 0.27, and the MDA increased from 0.26 to 0.37. As the stage progressed, the MDMA further decreased to 0.24, but the MDA peaked at stage 2 and then gradually declined, indicating that later stages may slightly reduce overall accuracy while improving precision.

Both node encoding and edge encoding significantly improve performance, and their combination has a cumulative effect (Table 2). With node encoding alone, the MDA increased by 5 percentage points. After edge encoding was added, the total gain rose to 11 percentage points, consistent with the increase from 0.26 to 0.37 reported above. In addition, the experiment verified that the graph-matching method is significantly superior to the re-identification method, especially in complex scenarios, because it exploits the relationship information between targets.

Table 2.

The matching performance comparison of different Graph Feature Networks.

The experimental results verify the efficacy of the graph-matching method, particularly when multiple information sources such as nodes and edges are integrated. However, the decline of MDA in the later stages reveals a trade-off between reducing misassociations (lower MDMA) and preserving overall association accuracy (higher MDA). The analysis suggests that optimizing the two encoding modules is a promising way to further improve the model. One approach is to refine the graph construction strategy to better balance these two aspects. Exploring additional features or more intricate graph construction techniques might also boost matching performance, for instance by investigating semantic or dynamic features and how they interact with the existing graph construction framework. Finally, incorporating more advanced learning algorithms into the graph construction process may help uncover latent relationships between nodes and edges, further improving the graph-matching method.

5. Conclusions

In conclusion, this study presents GTA-Net, a new approach to multi-drone collaborative perception that addresses the limitations of single-drone viewpoints by leveraging graph-based target association. By identifying high-confidence targets and establishing a graph structure over them, GTA-Net enhances the robustness of target matching, especially under imperfect detection conditions such as occlusion or detection errors. On a public dataset, the proposed method improves MDA by 42% over the strongest baseline, MIA-Net, and its performance degrades more slowly than that of the compared methods when detections are randomly dropped. Furthermore, the exploration of different graph-matching sub-networks under various object detection models provides insights into the fault tolerance patterns of graph-structure-based matching networks. Overall, GTA-Net offers a promising solution for comprehensive target information acquisition within a scene.

Author Contributions

Conceptualization, Q.T. and X.Y.; methodology, Q.T. and X.Y.; data curation, Y.L.; writing—review and editing, W.L. and Z.Z.; funding acquisition, Q.T. and C.Q.; visualization, J.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Fundamental Research Funds for the Central Universities, grant number 2024JBZX010.

Data Availability Statement

The data presented in this study are openly available under: [10.1109/TMM.2023.3234822].

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Horyna, J.; Krátký, V.; Pritzl, V.; Báca, T.; Ferrante, E.; Saska, M. Fast Swarming of UAVs in GNSS-Denied Feature-Poor Environments Without Explicit Communication. IEEE Robotics Autom. Lett. 2024, 9, 5284–5291.

- Humais, M.A.; Chehadeh, M.; Azzam, R.; Boiko, I.; Zweiri, Y.H. VisTune: Auto-Tuner for UAVs Using Vision-Based Localization. IEEE Robotics Autom. Lett. 2024, 9, 9111–9118.

- Li, Q.; Yuan, H.; Fu, T.; Yu, Z.; Zheng, B.; Chen, S. Multispectral Semantic Segmentation for UAVs: A Benchmark Dataset and Baseline. IEEE Trans. Geosci. Remote. Sens. 2024, 62, 1–17.

- Zhao, H.; Ren, K.; Yue, T.; Zhang, C.; Yuan, S. TransFG: A Cross-View Geo-Localization of Satellite and UAVs Imagery Pipeline Using Transformer-Based Feature Aggregation and Gradient Guidance. IEEE Trans. Geosci. Remote. Sens. 2024, 62, 1–12.

- Xing, L.; Qu, H.; Xu, S.; Tian, Y. CLEGAN: Toward Low-Light Image Enhancement for UAVs via Self-Similarity Exploitation. IEEE Trans. Geosci. Remote. Sens. 2023, 61, 1–14.

- Zhao, Y.; Su, Y. Estimation of Micro-Doppler Parameters With Combined Null Space Pursuit Methods for the Identification of LSS UAVs. IEEE Trans. Geosci. Remote. Sens. 2023, 61, 1–11.

- Wang, X.; Huang, K.; Zhang, X.; Sun, H.; Liu, W.; Liu, H.; Li, J.; Lu, P. Path planning for air-ground robot considering modal switching point optimization. In Proceedings of the 2023 International Conference on Unmanned Aircraft Systems (ICUAS), Warsaw, Poland, 6–9 June 2023; pp. 87–94.

- Zhao, Y.; Su, Y. Sparse Recovery on Intrinsic Mode Functions for the Micro-Doppler Parameters Estimation of Small UAVs. IEEE Trans. Geosci. Remote. Sens. 2019, 57, 7182–7193.

- Liu, X.; Qi, J.; Chen, C.; Bin, K.; Zhong, P. Relation-Aware Weight Sharing in Decoupling Feature Learning Network for UAV RGB-Infrared Vehicle Re-Identification. IEEE Trans. Multim. 2024, 26, 9839–9853.

- Bouhlel, F.; Mliki, H.; Hammami, M. Person re-identification from UAVs based on Deep hybrid features: Application for intelligent video surveillance. Signal Image Video Process. 2024, 18, 8313–8326.

- Li, W.; Chen, Q.; Gu, G.; Sui, X. Object matching of visible-infrared image based on attention mechanism and feature fusion. Pattern Recognit. 2025, 158, 110972.

- Liu, Z.; Shang, Y.; Li, T.; Chen, G.; Wang, Y.; Hu, Q.; Zhu, P. Robust Multi-Drone Multi-Target Tracking to Resolve Target Occlusion: A Benchmark. IEEE Trans. Multim. 2023, 25, 1462–1476.

- Bisio, I.; Garibotto, C.; Haleem, H.; Lavagetto, F.; Sciarrone, A. Vehicular/Non-Vehicular Multi-Class Multi-Object Tracking in Drone-Based Aerial Scenes. IEEE Trans. Veh. Technol. 2024, 73, 4961–4977.

- Khan, M.U.; Dil, M.; Alam, M.Z.; Orakazi, F.A.; Almasoud, A.M.; Kaleem, Z.; Yuen, C. SafeSpace MFNet: Precise and Efficient MultiFeature Drone Detection Network. IEEE Trans. Veh. Technol. 2024, 73, 3106–3118.

- Souli, N.; Kolios, P.; Ellinas, G. Multi-Agent System for Rogue Drone Interception. IEEE Robotics Autom. Lett. 2023, 8, 2221–2228.

- Safa, A.; Verbelen, T.; Ocket, I.; Bourdoux, A.; Catthoor, F.; Gielen, G.G.E. Fail-Safe Human Detection for Drones Using a Multi-Modal Curriculum Learning Approach. IEEE Robotics Autom. Lett. 2022, 7, 303–310.

- Huang, Y.; Liu, W.; Li, Y.; Yang, L.; Jiang, H.; Li, Z.; Li, J. MFE-SSNet: Multi-Modal Fusion-Based End-to-End Steering Angle and Vehicle Speed Prediction Network. Automot. Innov. 2024, 7, 1–14.

- Wang, P.; Wang, Y.; Li, D. DroneMOT: Drone-based Multi-Object Tracking Considering Detection Difficulties and Simultaneous Moving of Drones and Objects. In Proceedings of the IEEE International Conference on Robotics and Automation, ICRA 2024, Yokohama, Japan, 13–17 May 2024; pp. 7397–7404.

- Ardö, H.; Nilsson, M.G. Multi Target Tracking from Drones by Learning from Generalized Graph Differences. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision Workshops, ICCV Workshops 2019, Seoul, Republic of Korea, 27–28 October 2019; pp. 46–54.

- Cui, J.; Du, J.; Liu, W.; Lian, Z. TextNeRF: A Novel Scene-Text Image Synthesis Method based on Neural Radiance Fields. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 22272–22281.

- Chen, L.; Liu, C.; Li, W.; Xu, Q.; Deng, H. DTSSNet: Dynamic Training Sample Selection Network for UAV Object Detection. IEEE Trans. Geosci. Remote. Sens. 2024, 62, 1–16.

- Xu, J.; Fan, X.; Jian, H.; Xu, C.; Bei, W.; Ge, Q.; Zhao, T. YoloOW: A Spatial Scale Adaptive Real-Time Object Detection Neural Network for Open Water Search and Rescue From UAV Aerial Imagery. IEEE Trans. Geosci. Remote. Sens. 2024, 62, 1–15.

- Zhang, X.; Gong, Y.; Lu, J.; Li, Z.; Li, S.; Wang, S.; Liu, W.; Wang, L.; Li, J. Oblique Convolution: A Novel Convolution Idea for Redefining Lane Detection. IEEE Trans. Intell. Veh. 2023, 9, 4025–4039.

- Shi, X.; Yin, Z.; Han, G.; Liu, W.; Qin, L.; Bi, Y.; Li, S. BSSNet: A Real-Time Semantic Segmentation Network for Road Scenes Inspired from AutoEncoder. IEEE Trans. Circuits Syst. Video Technol. 2023, 34, 3424–3438.

- Ren, S.; He, K.; Girshick, R.B.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Gong, Y.; Lu, J.; Liu, W.; Li, Z.; Jiang, X.; Gao, X.; Wu, X. Sifdrivenet: Speed and image fusion for driving behavior classification network. IEEE Trans. Comput. Soc. Syst. 2023, 11, 1244–1259.

- Liu, W.; Lu, J.; Liao, J.; Qiao, Y.; Zhang, G.; Zhu, J.; Xu, B.; Li, Z. FMDNet: Feature-Attention-Embedding-Based Multimodal-Fusion Driving-Behavior-Classification Network. IEEE Trans. Comput. Soc. Syst. 2024, 11, 6745–6758.

- Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable detr: Deformable transformers for end-to-end object detection. arXiv 2020, arXiv:2010.04159.

- Liu, W.; Gong, Y.; Zhang, G.; Lu, J.; Zhou, Y.; Liao, J. GLMDriveNet: Global–local Multimodal Fusion Driving Behavior Classification Network. Eng. Appl. Artif. Intell. 2024, 129, 107575.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R.B. Mask R-CNN. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 386–397.

- Redmon, J.; Divvala, S.K.; Girshick, R.B.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2016, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. arXiv 2016, arXiv:1612.08242.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Gong, Y.; Lu, J.; Wu, J.; Liu, W. Multi-modal fusion technology based on vehicle information: A survey. arXiv 2022, arXiv:2211.06080.

- Yan, C.; Zhang, H.; Li, X.; Yuan, D. R-SSD: Refined single shot multibox detector for pedestrian detection. Appl. Intell. 2022, 52, 10430–10447.

- Tan, Q.; Yang, X.; Qiu, C.; Jiang, Y.; He, J.; Liu, J.; Wu, Y. SCCMDet: Adaptive Sparse Convolutional Networks Based on Class Maps for Real-Time Onboard Detection in Unmanned Aerial Vehicle Remote Sensing Images. Remote Sens. 2024, 16, 1031.

- Du, B.; Huang, Y.; Chen, J.; Huang, D. Adaptive Sparse Convolutional Networks with Global Context Enhancement for Faster Object Detection on Drone Images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 13435–13444.

- Ye, M.; Shen, J.; Lin, G.; Xiang, T.; Shao, L.; Hoi, S.C. Deep learning for person re-identification: A survey and outlook. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 2872–2893.

- Ning, E.; Wang, C.; Zhang, H.; Ning, X.; Tiwari, P. Occluded person re-identification with deep learning: A survey and perspectives. Expert Syst. Appl. 2024, 239, 122419.

- Gan, Y.; Liu, W.; Gan, J.; Zhang, G. A segmentation method based on boundary fracture correction for froth scale measurement. Appl. Intell. 2024, 1–22.

- Li, Z.; Zhang, T.; Zhou, M.; Tang, D.; Zhang, P.; Liu, W.; Yang, Q.; Shen, T.; Wang, K.; Liu, H. MIPD: A Multi-sensory Interactive Perception Dataset for Embodied Intelligent Driving. arXiv 2024, arXiv:2411.05881.

- Wang, G.; Yuan, Y.; Chen, X.; Li, J.; Zhou, X. Learning Discriminative Features with Multiple Granularities for Person Re-Identification. In Proceedings of the 26th ACM international conference on Multimedia, New York, NY, USA, 22–26 October 2018.

- Luo, H.; Gu, Y.; Liao, X.; Lai, S.; Jiang, W. Bag of Tricks and A Strong Baseline for Deep Person Re-identification. arXiv 2019, arXiv:1903.07071.

- Zhang, Y.; Wang, C.; Wang, X.; Zeng, W.; Liu, W. FairMOT: On the Fairness of Detection and Re-identification in Multiple Object Tracking. Int. J. Comput. Vis. 2021, 129, 3069–3087.

- Zhu, J.; Li, Q.; Gao, C.; Ge, Y.; Xu, K. Camera-aware re-identification feature for multi-target multi-camera tracking. Image Vis. Comput. 2024, 142, 104889.

- Xu, Y.; Liu, X.; Liu, Y.; Zhu, S. Multi-view People Tracking via Hierarchical Trajectory Composition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2016, Las Vegas, NV, USA, 27–30 June 2016; pp. 4256–4265.

- Sun, Y.; Cheng, C.; Zhang, Y.; Zhang, C.; Zheng, L.; Wang, Z.; Wei, Y. Circle Loss: A Unified Perspective of Pair Similarity Optimization. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2020, Seattle, WA, USA, 13–19 June 2020; pp. 6397–6406.

- Hermans, A.; Beyer, L.; Leibe, B. In Defense of the Triplet Loss for Person Re-Identification. arXiv 2017, arXiv:1703.07737.

- Bryan, B.; Gong, Y.; Zhang, Y.; Poellabauer, C. Second-Order Non-Local Attention Networks for Person Re-Identification. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision, ICCV 2019, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 3759–3768.

- Chen, T.; Ding, S.; Xie, J.; Yuan, Y.; Chen, W.; Yang, Y.; Ren, Z.; Wang, Z. ABD-Net: Attentive but Diverse Person Re-Identification. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision, ICCV 2019, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 8350–8360.

- He, L.; Liao, X.; Liu, W.; Liu, X.; Cheng, P.; Mei, T. FastReID: A Pytorch Toolbox for General Instance Re-identification. In Proceedings of the 31st ACM International Conference on Multimedia, MM 2023, Ottawa, ON, Canada, 29 October–3 November 2023; El-Saddik, A., Mei, T., Cucchiara, R., Bertini, M., Vallejo, D.P.T., Atrey, P.K., Hossain, M.S., Eds.; ACM: New York, NY, USA, 2023; pp. 9664–9667.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).