With the continuous advancement of technology and the increasing maturity of drone technology, multi-drone swarm systems have gained considerable interest because they can offer greater stability, improved fault tolerance, and superior efficiency over individual drones. This technology has achieved remarkable results in various fields, including aerial photography [

1], reconnaissance [

2], surveillance [

3], cargo transportation [

4], and rescue operations [

5]. Currently, the control algorithms for drone swarms mainly include behavior-based [

6], virtual structure [

7], and leader–follower [

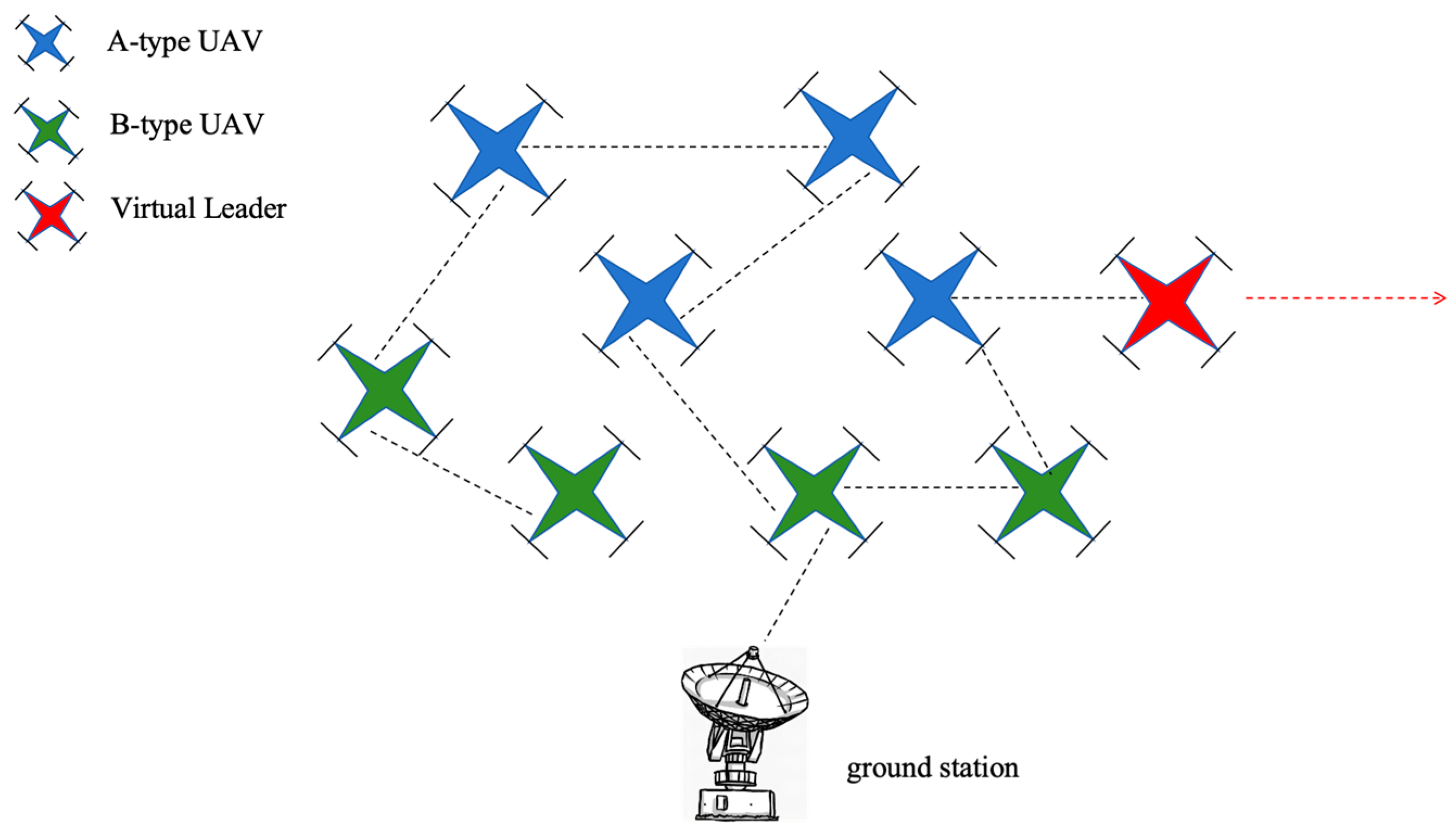

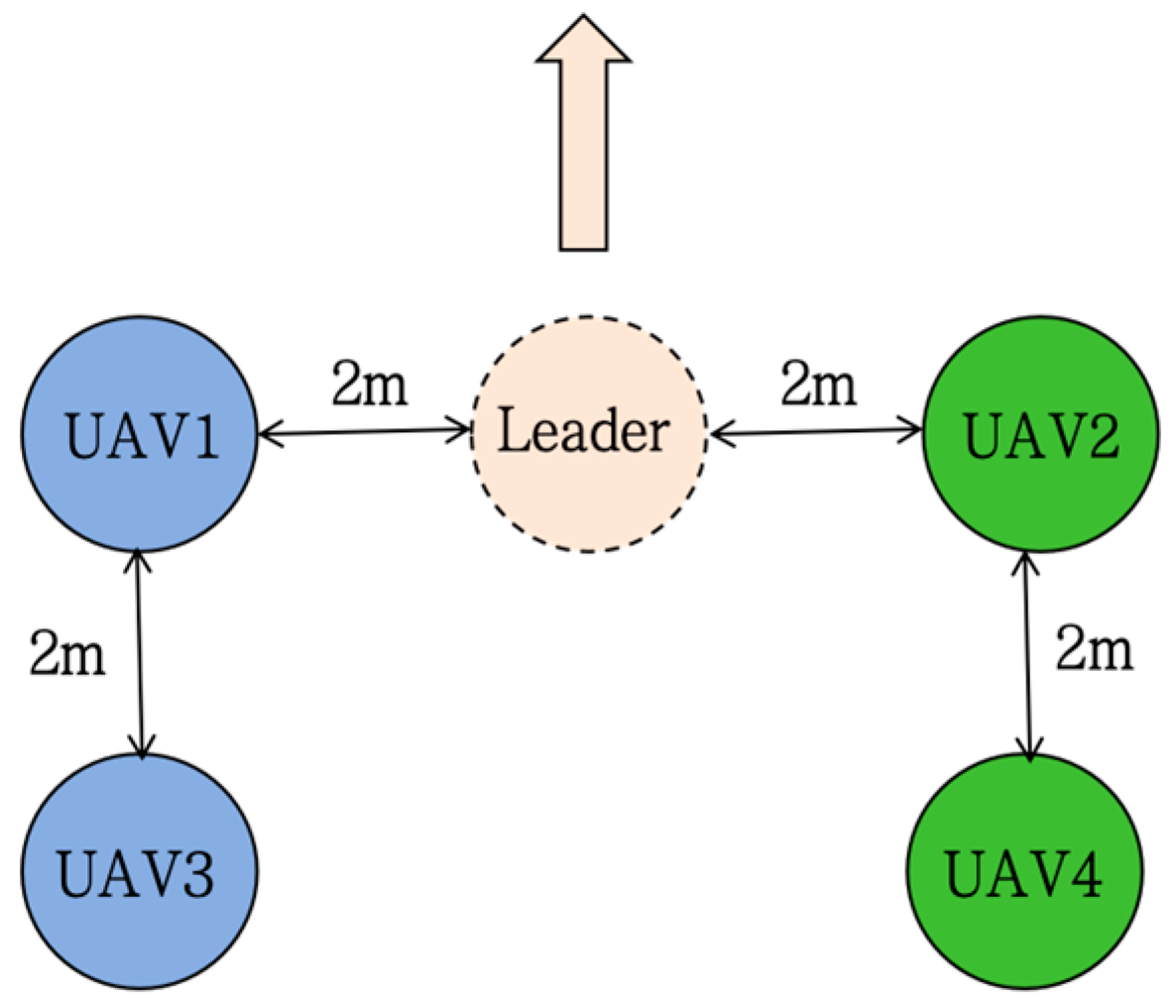

8] methods. The behavior-based approach controls the actions of each drone in the swarm independently, making it suitable for specific task scenarios. However, due to the lack of global coordination, ensuring the stability of the swarm is challenging. The virtual structure approach theoretically addresses this problem and guarantees swarm stability. However, since the formation is pre-defined and its ability to avoid obstacles in dynamic environments is limited, it lacks flexibility. In contrast, the leader–follower approach combines the benefits of both, offering a simple model, strong stability, and good adaptability, which has led to its widespread application in practice. Therefore, the formation control method adopted in this paper is the leader–follower method.
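As a minimal illustration of the leader–follower idea, the sketch below computes where each follower should be, given the leader's planar position and heading. The function name `follower_target`, the 2D simplification, and the V-formation offsets are assumptions made for illustration only; they are not taken from the cited works or from the method proposed in this paper.

```python
import math


def follower_target(leader_xy, leader_heading, offset_body):
    """Desired follower position in the world frame.

    The follower keeps a fixed offset expressed in the leader's body frame
    (x forward, y left); the offset is rotated by the leader's heading
    (radians) and added to the leader's position.
    """
    ox, oy = offset_body
    c, s = math.cos(leader_heading), math.sin(leader_heading)
    return (leader_xy[0] + c * ox - s * oy,
            leader_xy[1] + s * ox + c * oy)


# Hypothetical V-formation: two followers 2 m behind the leader,
# 1.5 m to the left and right respectively.
offsets = [(-2.0, 1.5), (-2.0, -1.5)]
targets = [follower_target((10.0, 5.0), math.pi / 2, o) for o in offsets]
```

Because the offsets are defined relative to the leader, only the leader needs a planned trajectory; each follower's reference follows from it, which is one reason the model stays simple.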

To ensure stable formation flight, the trajectory planning of multi-drone systems has been a key area of research. In an unknown environment, a drone cannot pre-plan its trajectory before movement because it has not built a model of the surrounding area in advance. Therefore, it can only continuously plan its next movement trajectory in real time based on environmental information obtained from sensors during movement. Since the planning is performed online in real time, trajectory planning and execution are closely linked. The planned path must satisfy the drone’s kinematics and dynamics during the planning process to ensure that the trajectory is tracked and executed in a timely manner. This places high demands on both the speed of trajectory generation and the quality of the trajectory. Additionally, due to the unpredictability of the environment and frequent unexpected changes, it is required that the robot can quickly alter its trajectory and perform re-planning promptly to avoid collisions with obstacles. Mainstream planning algorithms include graph-search-based methods [

9,

10], artificial potential field methods [

11,

12], metaheuristic algorithms [

13,

14], and optimal control algorithms such as model-based control [

15,

16]. John Bellingham et al. [

17], in their study of fixed-wing trajectory optimization, transformed the problem into a mixed-integer linear programming problem to optimize the shortest time path to the target point. Y. You et al. [

18] proposed a hybrid algorithm based on an improved A* algorithm and multi-objective artificial potential field (MTAPF) for leader–follower formation planning in static environments. Michael Watterson et al. [

19] proposed a method that combines a short-term receding horizon control (RHC) strategy and long-term receding horizon control strategy, based on the theory of receding horizon control. After using the Delaunay method to convexify the environment, they constructed a quadratic programming problem within it to complete the real-time trajectory generation process. L. Chang et al. [

20] addressed the challenge of real-time obstacle avoidance and maintaining formation flight in complex environments, proposing a combined leader–follower and behavior-based control algorithm using the improved dynamic window approach (DWA). G.Y. Cong et al. [

21] used an improved RRT algorithm and 4D coordination cost function to plan global paths for each drone, with a heuristic artificial potential field (HAPF) algorithm for local collision avoidance, enabling 4D collaborative path planning for all drones. Although these methods can theoretically avoid obstacles, they often fail to consider trajectory tracking performance, which may lead to drones failing to precisely follow planned paths in actual flight, potentially causing collisions or failure to maintain formation. To address this issue, simplified UAV models and state constraints are often considered during the trajectory planning phase. Daniel Mellinger and Vijay Kumar [

22] first proposed the Minimum Snap trajectory planning algorithm, which leverages the differential flatness property of rotary-wing drones. This algorithm can estimate polynomial trajectories and generate the optimal trajectory in real time. By formulating and solving a quadratic programming problem, it ensures safe passage through designated corridors while satisfying constraints on velocity, acceleration, and control inputs. Arul SH [

23] combined trajectory planning with Model Predictive Control (MPC) to propose a distributed multi-UAV trajectory planning method that satisfies dynamic constraints, enabling real-time path planning for collision avoidance. M.Q.Cheng et al. [

24] presented a state-constrained cooperative co-evolutionary algorithm (CCEA-ADVS) that improves computational efficiency, convergence speed, and path planning performance through an adaptive decision variable selection strategy and two-phase evolutionary optimization process. J. Wu et al. [

25] added simple constraints such as air resistance and thrust to a kinematic model. P. Yao et al. [

26] employed a trajectory propagation approach to iteratively solve the dynamics equations of the vehicle, considering both the planned path and the constraints of UAV dynamics. While these methods account for some dynamic constraints, they still have significant gaps with real flight constraints and fail to adequately consider controller performance, leaving doubts about accurate path tracking in practice. Moreover, applying the same constraints to all drones limits their applicability in heterogeneous UAV formations. Y. Lin et al. [

27] combined vehicle models with controllers to simulate the vehicles’ trajectories using elementary control signals. L.Y. Heng et al. [

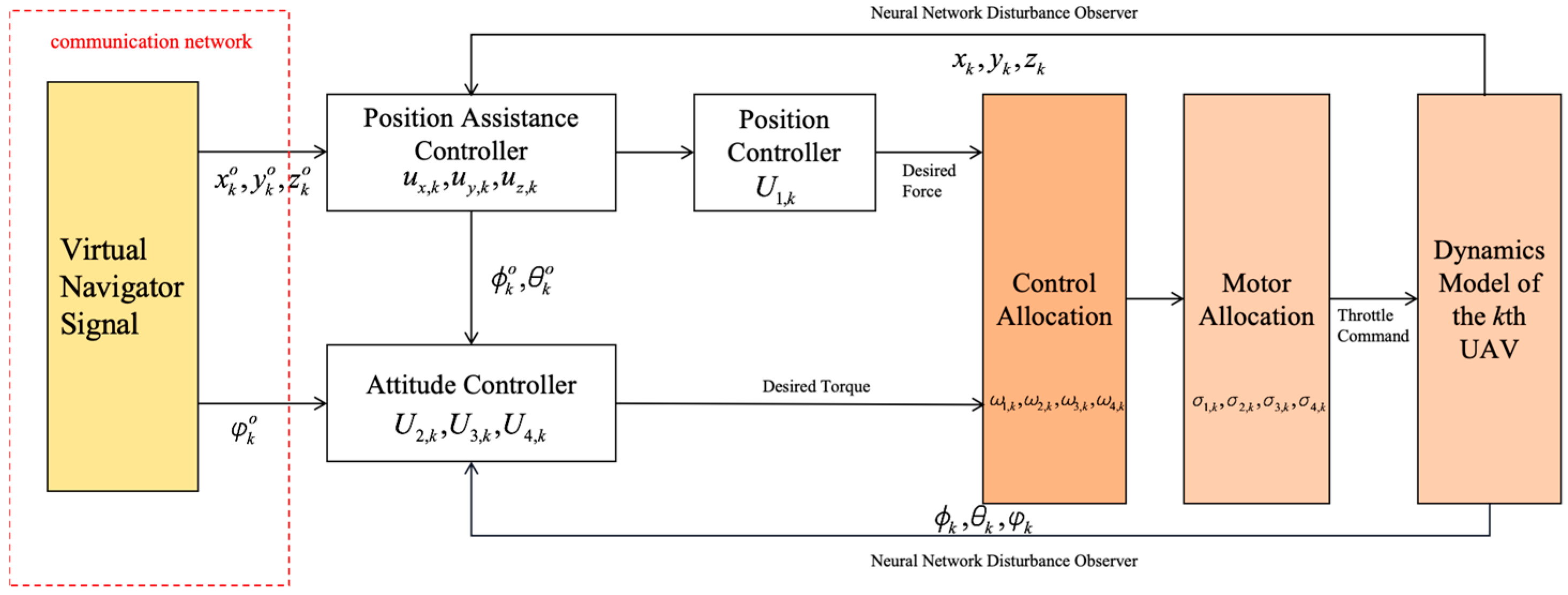

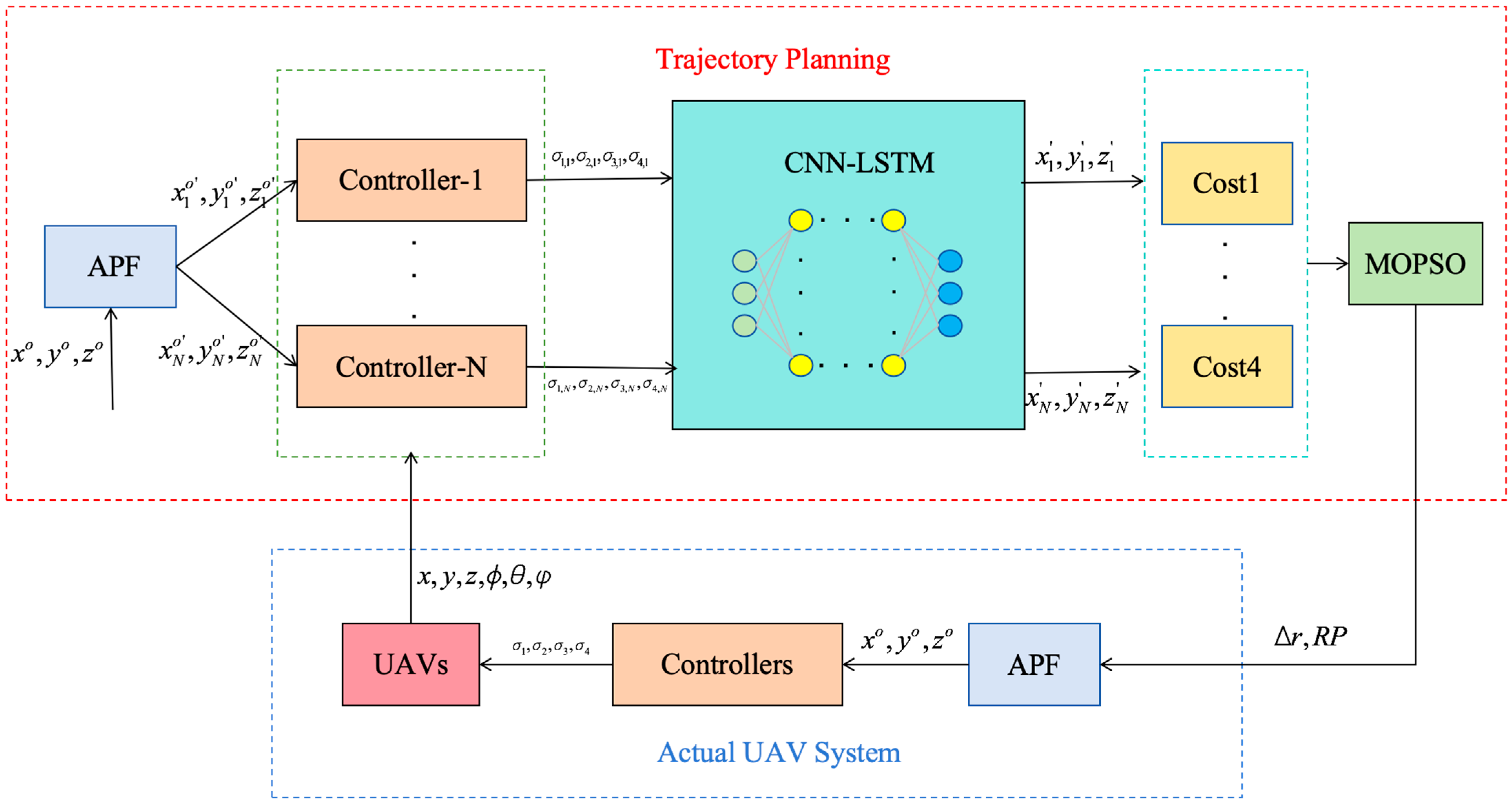

28] integrated low-level controllers with a UAV’s six-dimensional nonlinear motion model to predict flight trajectories during the planning phase. Inspired by these approaches, in the process of trajectory planning, the dynamics model of a quadcopter can be integrated with tracking control algorithms to forecast real flight paths. The artificial potential field method is computationally simple, is easy to implement, and offers good real-time performance, enabling it to quickly respond to environmental changes. It is particularly suitable for real-time navigation in dynamic environments. Furthermore, the algorithm’s parameters are relatively intuitive to adjust, allowing for flexible optimization based on different task requirements. By constructing a cost function based on the predicted actual flight trajectory and using particle swarm optimization, the optimal parameters for the artificial potential field method can be found, enabling the drone to track the planned trajectory as accurately as possible during actual flight. Additionally, for heterogeneous UAV formations, trajectory prediction can be based on the dynamics models and control algorithms of each UAV, allowing for tailored flight trajectory planning.
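To make the tunable parameters concrete, the following sketch shows the classic textbook form of an artificial potential field force together with a simple tracking cost. The names `apf_force`, `tracking_cost`, `k_att`, `k_rep`, and `d0`, the 2D setting, and the mean-squared-distance cost are illustrative assumptions, not the formulation used in this paper. A particle swarm optimizer (omitted here) would search over the gains to minimize such a cost.

```python
import math


def apf_force(pos, goal, obstacles, k_att=1.0, k_rep=2.0, d0=3.0):
    """Attractive force toward the goal plus repulsive forces from every
    obstacle closer than the influence radius d0 (classic APF form)."""
    fx = k_att * (goal[0] - pos[0])
    fy = k_att * (goal[1] - pos[1])
    for ox, oy in obstacles:
        dx, dy = pos[0] - ox, pos[1] - oy
        d = math.hypot(dx, dy)
        if 0.0 < d < d0:
            # Negative gradient of 0.5 * k_rep * (1/d - 1/d0)^2,
            # which points away from the obstacle.
            mag = k_rep * (1.0 / d - 1.0 / d0) / d ** 2
            fx += mag * dx / d
            fy += mag * dy / d
    return fx, fy


def tracking_cost(planned, predicted):
    """Mean squared distance between planned waypoints and the positions
    the vehicle is predicted to actually reach (assumed cost form)."""
    total = sum((px - qx) ** 2 + (py - qy) ** 2
                for (px, py), (qx, qy) in zip(planned, predicted))
    return total / len(planned)
```

In this sketch, `k_att`, `k_rep`, and `d0` are the kind of intuitive parameters the text refers to: raising `k_rep` or `d0` pushes the path farther from obstacles, while raising `k_att` pulls it more directly toward the goal.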

However, the quadcopter model is represented by a set of interconnected nonlinear differential equations [

29], and each step requires integration, resulting in low planning efficiency. At the same time, the accuracy of the prediction relies significantly on the precision and accuracy of the quadcopter model. Therefore, using this model for prediction is both time-consuming and difficult to ensure sufficient accuracy with. As computer technology and artificial intelligence continue to evolve, deep learning is increasingly being adopted across various industries. Significant achievements have been made in fields such as image processing, machine translation, speech recognition, and human–computer games [

30,

31,

32,

33]. Motivated by the benefits of deep learning, we investigated its potential to address the trajectory prediction challenge. In fact, drone trajectory prediction can be viewed as a mapping between control signals produced by the control system and the resulting flight path, which fundamentally represents a time series forecasting problem. Data-driven methods can not only effectively capture this mapping relationship but also significantly reduce the complexity of modeling. H. Ping et al. [

34] used LSTM to predict 4D motion trajectories. W. Ru [

35] proposed a multi-information fusion Convolutional Neural Network (MI-CNN) based on attention mechanisms to predict pedestrian trajectories. X. Gou et al. [

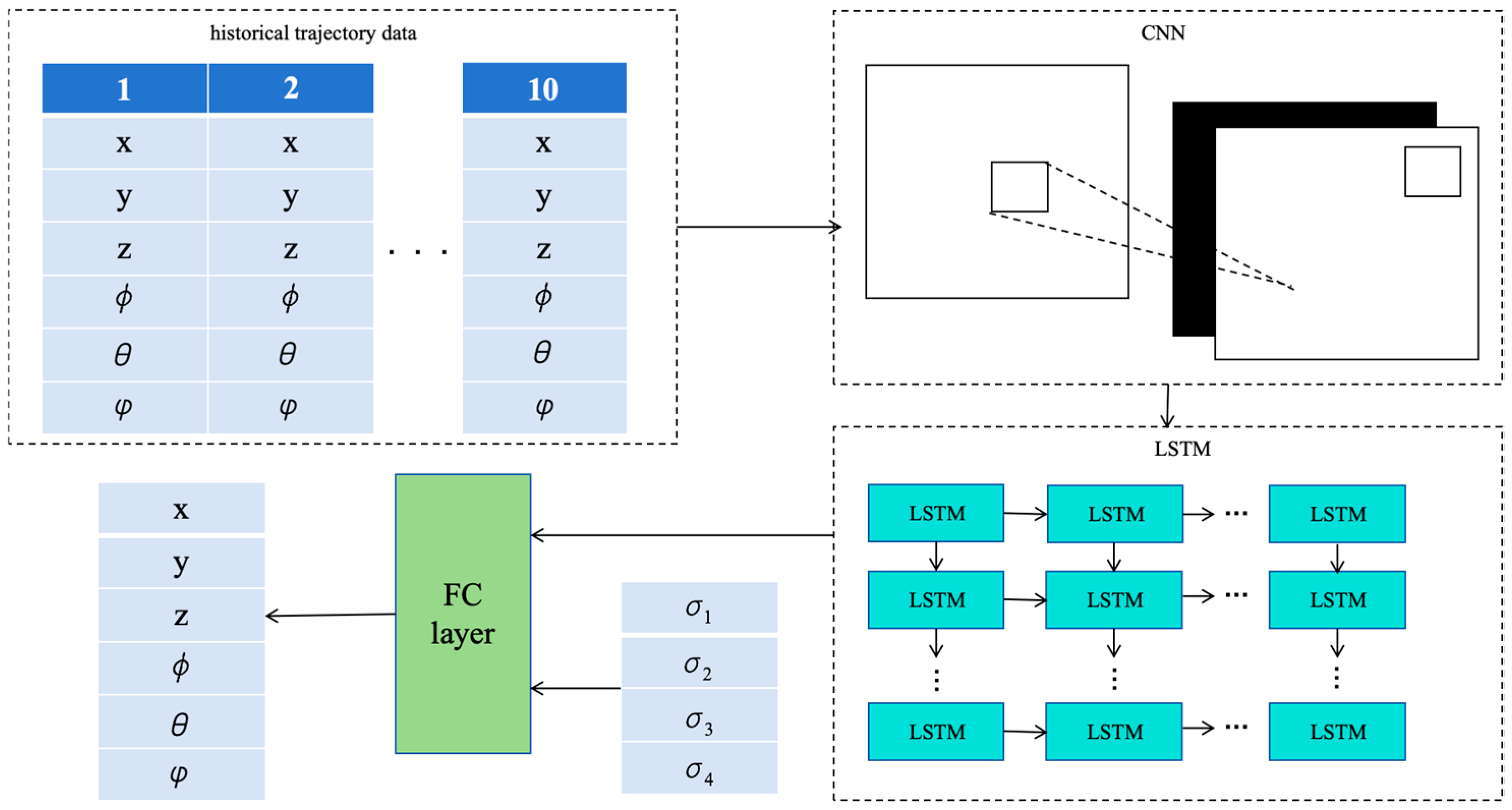

36] proposed a vehicle trajectory prediction model combining CNN and LSTM neural networks. CNN networks are adept at handling spatial information and can effectively extract spatial features from trajectory data, while LSTM networks possess long-term and short-term memory capabilities, making them suitable for processing time series data. By combining the strengths of CNNs and LSTM, the spatiotemporal features of the data can be fully leveraged.
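Framing prediction as time series forecasting means turning logged flights into supervised samples. The sketch below pairs a sliding window of past control signals with the next recorded position; the function name `make_windows`, the window length `history`, and the toy data are hypothetical and only illustrate the general idea, not the dataset construction used in this paper.

```python
def make_windows(controls, positions, history=3):
    """Build (input, target) pairs for a sequence model.

    Each input is a window of `history` consecutive control signals;
    the target is the position recorded at the step right after it.
    """
    if len(controls) != len(positions):
        raise ValueError("controls and positions must have equal length")
    samples = []
    for end in range(history, len(controls)):
        samples.append((controls[end - history:end], positions[end]))
    return samples


# Toy log: five time steps of a scalar control signal and 2D positions.
controls = [0.1, 0.2, 0.3, 0.4, 0.5]
positions = [(0.0, 0.0), (0.1, 0.0), (0.3, 0.0), (0.6, 0.0), (1.0, 0.0)]
samples = make_windows(controls, positions, history=3)
```

A network such as a CNN-LSTM would then be trained on these pairs, with the convolutional layers extracting local features from each window and the recurrent layers modeling how they evolve over time.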

Inspired by the above analysis and research, this paper proposes a real-time trajectory planning method for heterogeneous UAV formations based on a CNN-LSTM trajectory prediction network with a focus on control. The main contributions of this paper are summarized as follows: