1. Introduction

The increasing demand for sustainable agricultural practices and effective resource management has driven the development of advanced remote sensing technologies. Among these, hyperspectral imaging (HSI) stands out as a powerful tool for analyzing soil properties, crop health, and environmental conditions due to its ability to capture detailed spectral information across multiple wavelengths [1]. By integrating HSI capabilities with unmanned aerial vehicles (UAVs), researchers have unlocked new possibilities for precision agriculture and soil texture mapping, enabling high-resolution, real-time monitoring of vast agricultural landscapes [2].

UAVs have revolutionized remote sensing in agriculture by offering flexibility, efficiency, and cost-effectiveness compared to traditional satellite or manned aircraft systems. UAVs equipped with hyperspectral sensors deliver high-resolution data with rapid turnaround times, even in remote or inaccessible regions [3]. Recent advancements in UAV design, such as lightweight frames, extended flight durations, and robust navigation systems, have further enhanced their applicability in agricultural monitoring [4]. These systems can now support complex missions, including soil texture mapping, crop stress detection, and nutrient analysis, with high accuracy [5].

Soil texture plays a critical role in determining agricultural productivity, influencing water retention, nutrient availability, and crop growth patterns. Conventional methods for soil texture analysis, such as laboratory testing, are labor-intensive, time-consuming, and often fail to capture spatial variability at the field scale [6]. UAV-based hyperspectral imaging offers a transformative solution by enabling non-invasive, large-scale soil texture assessments through the analysis of spectral signatures. This approach has been validated by studies demonstrating strong correlations between hyperspectral data and soil composition parameters, such as clay, silt, and sand content [7].

The integration of artificial intelligence (AI) algorithms into UAV-based hyperspectral imaging workflows further enhances the potential of this technology. Robust AI models, including Convolutional Neural Networks (CNNs) and transformer-based architectures, have shown remarkable performance in analyzing hyperspectral datasets, identifying patterns, and predicting soil characteristics with high accuracy [8]. These models leverage advanced techniques such as spectral–spatial feature extraction and transfer learning to process vast amounts of data efficiently, even under challenging conditions, such as varying lighting or atmospheric interference [9]. For instance, state-of-the-art architectures like U-Net and DeepLabV3+ have been successfully applied to semantic segmentation tasks, facilitating precise soil texture classification and mapping [10].

Despite these advancements, several challenges remain in the deployment of UAV-based hyperspectral imaging for soil texture mapping. One key challenge lies in the calibration and preprocessing of hyperspectral data to account for environmental factors such as illumination variability and sensor noise [11]. Moreover, the integration of AI algorithms necessitates substantial computational resources and robust training datasets to ensure generalizability across diverse agricultural settings [12]. To address these challenges, recent research has focused on the development of lightweight, edge-computing solutions for real-time data processing and the creation of comprehensive spectral libraries for training AI models [13].

This paper aims to contribute to this growing body of research by developing a UAV-based hyperspectral imaging system integrated with robust AI algorithms for soil texture mapping. The system leverages advanced UAV platforms equipped with hyperspectral sensors and gamma spectrometers, coupled with machine learning frameworks for data analysis and interpretation. By focusing on vineyard and vegetable crops, this paper seeks to demonstrate the feasibility of real-time, high-resolution soil texture mapping in diverse agricultural scenarios, paving the way for more sustainable and efficient farming practices.

2. Literature Review

Remote sensing technologies have revolutionized the field of precision agriculture, offering innovative solutions for crop monitoring, stress detection, and soil analysis. The use of satellite-based multispectral imaging has been extensively studied and applied in agricultural settings. These methods provide broad coverage, enabling large-scale observation of crop health and environmental conditions. However, their utility is often constrained by low spatial resolution, atmospheric interference, and the delayed availability of data, which limit their effectiveness for real-time decision-making [14]. In response to these limitations, unmanned aerial vehicles (UAVs) have emerged as a transformative tool in precision agriculture. UAVs offer high spatial and temporal resolution, cost-effectiveness, and the flexibility to conduct targeted surveys even in inaccessible regions [15].

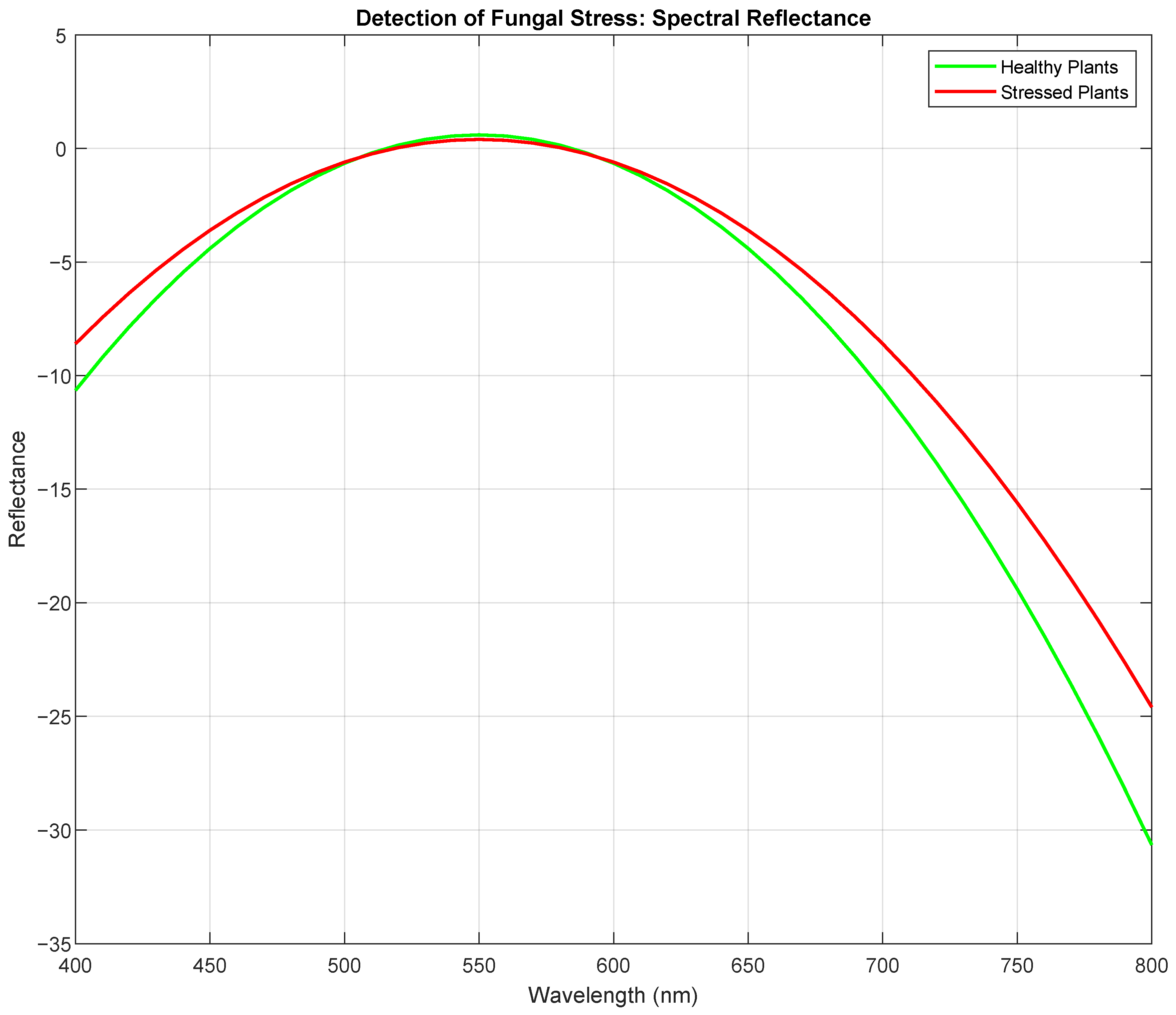

Hyperspectral imaging (HSI) has garnered significant attention for its ability to capture detailed spectral signatures across a wide range of wavelengths. This technology enables the detection of subtle physiological changes in crops, such as variations in chlorophyll content, water stress, and nutrient deficiencies. Studies have demonstrated the effectiveness of hyperspectral imaging in identifying early-stage crop stress, which is critical for timely intervention and yield optimization [16]. For instance, specific vegetation indices derived from hyperspectral data, such as the Normalized Difference Vegetation Index (NDVI) and the Photochemical Reflectance Index (PRI), have been validated as reliable indicators of crop health and productivity [17].

Thermal imaging, another critical component of modern remote sensing, has proven particularly effective in assessing plant water status and identifying heat-induced stress. By detecting variations in surface temperature, thermal cameras can pinpoint areas of reduced evapotranspiration, which often correlate with water stress or disease onset. The integration of thermal imaging with other sensing modalities enhances the comprehensiveness of agricultural monitoring systems [18]. For example, thermal data combined with hyperspectral indices provide a multi-dimensional understanding of crop and soil conditions, enabling precise interventions tailored to specific stress factors.

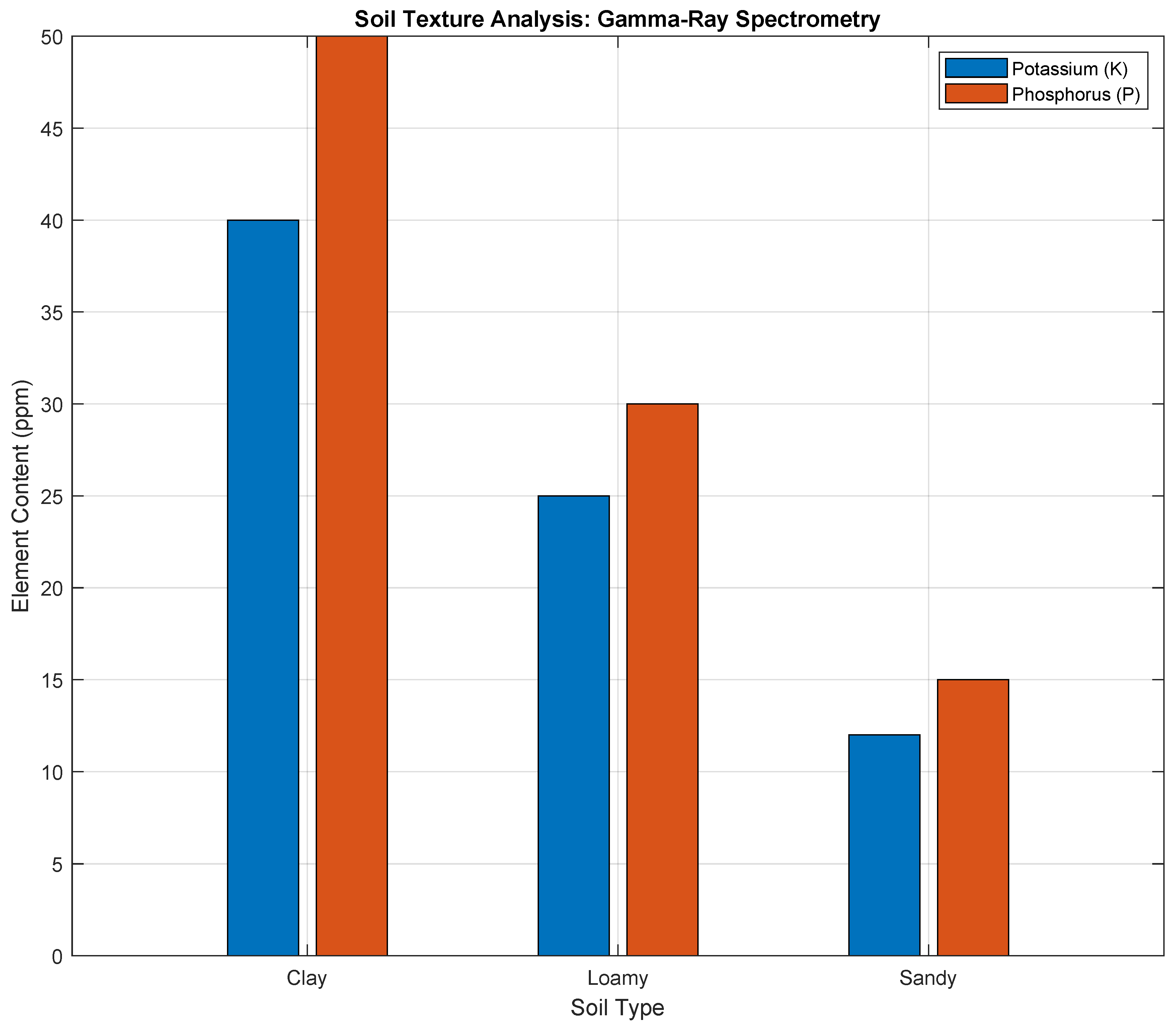

Gamma-ray spectrometry, though relatively underutilized in agricultural research, offers unique insights into soil composition and nutrient distribution. This technology measures the natural radioactivity of isotopes such as potassium, thorium, and uranium, which are indicative of soil fertility and texture. Recent studies highlight the potential of gamma-ray spectrometry to complement traditional soil analysis techniques by providing rapid, non-invasive assessments of soil properties [19]. When integrated with hyperspectral and thermal imaging, gamma-ray data contribute to a holistic understanding of the agroecosystem, addressing critical challenges in soil management and crop productivity.

Despite these advancements, existing remote sensing systems often fall short in their ability to integrate multi-modal data streams effectively. The lack of advanced data fusion and machine learning algorithms in traditional systems limits their classification accuracy and scalability. Recent developments in artificial intelligence (AI) and deep learning have opened new avenues for overcoming these limitations. Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs) have demonstrated strong performance in processing high-dimensional data, enabling the extraction of spatial, spectral, and temporal features with high precision [20]. For example, hybrid CNN-RNN models are particularly well suited for multi-modal applications, as they combine the strengths of CNNs in spatial feature extraction with the temporal analysis capabilities of RNNs. These models have shown promise in distinguishing between abiotic and biotic stressors, as well as in classifying soil texture and composition [21].

The proposed Quad Hopper system builds on these developments. By integrating hyperspectral, thermal, and gamma-ray sensors with a robust hybrid deep learning framework, the system addresses critical gaps in existing technologies. The incorporation of real-time data processing capabilities further enhances its utility, enabling on-the-fly analysis and adaptive decision-making during UAV missions. This real-time capability is particularly valuable in large-scale agricultural operations, where timely interventions can prevent significant yield losses [22].

In addition to its technical advancements, the Quad Hopper system exemplifies the trend toward multi-modal sensor integration in precision agriculture. Unlike traditional systems that rely on a single sensor type, the Quad Hopper combines complementary data streams to provide a comprehensive understanding of the agroecosystem. This approach not only improves classification accuracy but also enhances the system’s adaptability to diverse environmental conditions and crop types [10]. For instance, hyperspectral data can identify nutrient deficiencies, thermal imaging can detect water stress, and gamma-ray spectrometry can assess soil fertility, all within a single UAV mission. Such multi-faceted capabilities make the Quad Hopper a valuable tool for modern precision agriculture.

The literature underscores the critical role of advanced sensing technologies and AI algorithms in transforming agricultural practices. While significant progress has been made, challenges remain in scaling these technologies for widespread adoption. The Quad Hopper system, with its multi-sensor design and onboard processing, offers a promising response to these challenges and aims to set a benchmark for integrated remote sensing in precision agriculture.

3. Materials, Methods, and Methodology

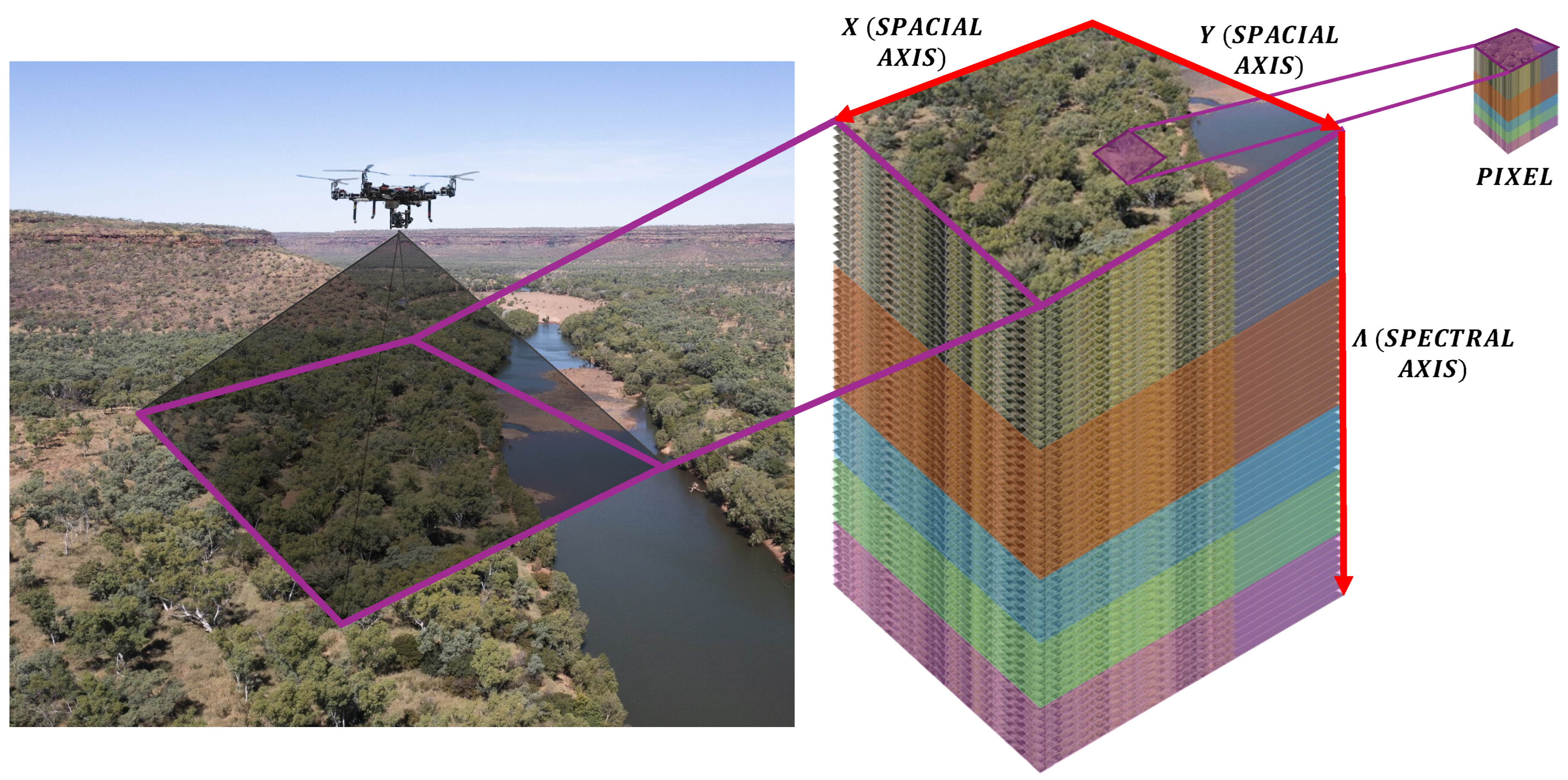

The UAV system utilized in this paper, named the Quad Hopper, represents a significant advancement in UAV-based soil texture mapping and agricultural stress detection. The system integrates multiple cutting-edge sensors and components, each tailored for precise data acquisition and analysis. These include hyperspectral and RGBD cameras, gamma spectrometry sensors, thermal cameras, and an onboard computing unit capable of real-time artificial intelligence (AI) and machine learning (ML) inference. Collectively, these components equip the system to monitor and assess soil properties, crop health, and environmental conditions with exceptional accuracy and efficiency.

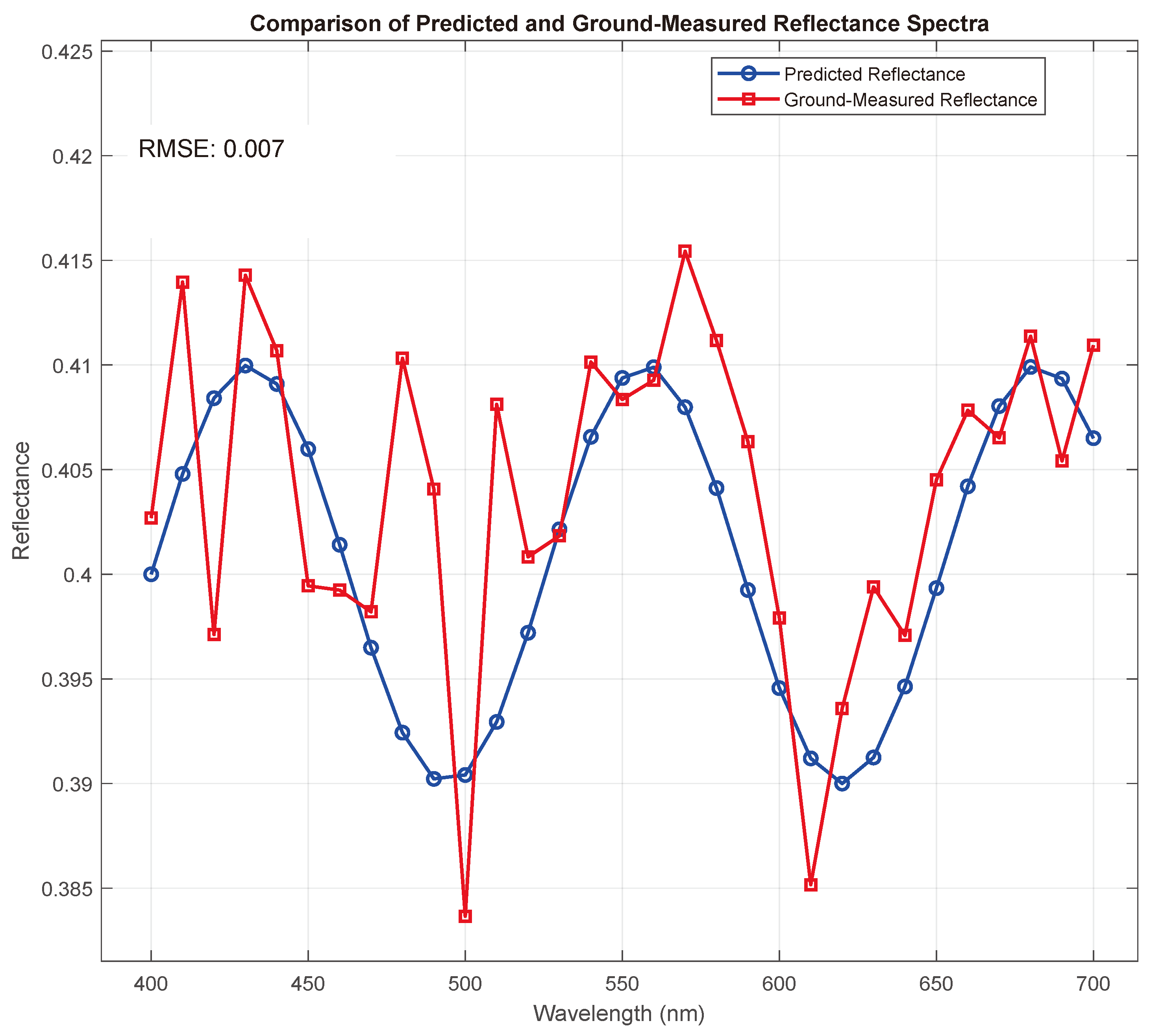

The hyperspectral camera onboard the Quad Hopper captures detailed spectral data across a wavelength range of 400–1000 nm, with a fine spectral resolution of 5 nm intervals. This capability allows for the identification of subtle variations in soil texture and vegetation characteristics, providing insights into soil composition and crop stress. Hyperspectral imaging has been extensively validated as a tool for soil analysis, with studies showing its ability to map soil properties such as clay, silt, and sand composition [23].

In parallel, the gamma spectrometry sensors measure soil radioactivity and isotopic distribution, employing Bayesian inversion algorithms to enhance the precision and reliability of these measurements [24]. The thermal cameras add another layer of functionality by detecting temperature variations with a precision of ±0.5 °C, enabling accurate assessments of soil moisture levels and the early detection of plant stress caused by drought or excessive heat [25].

A key feature of the Quad Hopper is its onboard computing unit, which facilitates real-time data processing. This unit leverages state-of-the-art AI and ML algorithms to analyze incoming data streams, enabling rapid decision-making and adaptive control during flights. Real-time processing not only reduces latency but also ensures that actionable insights are available immediately, even in remote field conditions. This feature is especially critical for large-scale agricultural operations, where timely interventions can significantly enhance productivity and sustainability [26]. By processing data directly onboard, the system also reduces the need for extensive post-flight analysis, streamlining workflows and improving efficiency.

The UAV employs flight path planning algorithms to optimize data acquisition and field coverage. The Boustrophedon path algorithm ensures systematic coverage by guiding the UAV along parallel, non-overlapping tracks flown in alternating directions, making it ideal for uniform terrains. Meanwhile, the Dubins path algorithm minimizes energy consumption and flight time by optimizing UAV turns, ensuring efficient navigation even in irregular or constrained environments [27]. By combining these algorithms, the Quad Hopper achieves a balance between comprehensive data collection and operational efficiency, addressing the demands of modern precision agriculture.
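As an illustration of the boustrophedon coverage pattern described above, the following Python sketch generates back-and-forth waypoints over a rectangular field. The function name `boustrophedon_waypoints`, the rectangular field model, and the example dimensions and swath width are assumptions made for illustration; they are not taken from the Quad Hopper flight software, and Dubins-style turn smoothing between tracks is not modelled.

```python
import math


def boustrophedon_waypoints(width_m, height_m, swath_m):
    """Return (x, y) waypoints covering a width_m x height_m rectangle.

    Tracks run parallel to the y-axis and alternate direction, so the end
    of one track lies next to the start of the following one.
    """
    if width_m <= 0 or height_m <= 0 or swath_m <= 0:
        raise ValueError("field dimensions and swath width must be positive")

    # Use enough tracks that the spacing never exceeds the sensor swath.
    n_tracks = max(1, math.ceil(width_m / swath_m))
    spacing = width_m / n_tracks

    waypoints = []
    for i in range(n_tracks):
        x = (i + 0.5) * spacing              # centre line of track i
        if i % 2 == 0:
            start_y, end_y = 0.0, height_m   # fly "up" on even tracks
        else:
            start_y, end_y = height_m, 0.0   # fly "down" on odd tracks
        waypoints.append((x, start_y))
        waypoints.append((x, end_y))
    return waypoints


# Hypothetical 30 m x 100 m plot surveyed with a 10 m wide swath.
route = boustrophedon_waypoints(width_m=30.0, height_m=100.0, swath_m=10.0)
for waypoint in route:
    print(waypoint)
```

In a full planner, the straight-line hops between the end of one track and the start of the next would be replaced by curvature-constrained turns, which is the role the text assigns to the Dubins path algorithm.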

Figure 1 showcases the Quad Hopper UAV in operation, illustrating its structural design and the integration of its various components. The robust, modular frame accommodates a diverse array of sensors and payloads, making it highly adaptable for different agricultural applications. The figure emphasizes the UAV’s capability to navigate complex environments with precision and stability, underscoring its utility as a versatile data collection platform.

To provide additional context, Figure 2 illustrates the individual sensors and equipment utilized in this paper, including the hyperspectral camera, thermal camera, and gamma spectrometer. Each device is meticulously calibrated to ensure optimal performance under diverse environmental conditions.

The hyperspectral camera, depicted in the figure, is compact yet highly sensitive, capturing detailed spectral data critical for soil texture mapping. The thermal camera is shown alongside its mounting assembly, emphasizing its role in detecting temperature variations with exceptional accuracy. Finally, the gamma spectrometer is displayed with its protective casing, highlighting its durability and precision in measuring soil radioactivity.

In addition, the onboard computing unit used in the Quad Hopper system is an NVIDIA Jetson Xavier NX, featuring a 384-core Volta GPU with 48 Tensor Cores, a 6-core ARM CPU, and 8 GB of LPDDR4x memory. This system enables efficient real-time data processing during UAV missions. The AI/ML algorithms employed include Convolutional Neural Networks (CNNs) for spatial feature extraction from hyperspectral and thermal data and Recurrent Neural Networks (RNNs) for temporal pattern recognition in gamma-ray spectrometry data. The combination of these algorithms allows for accurate classification of soil texture and crop stress conditions in real time.

Figure 1 and Figure 2 highlight the advanced technological framework of the Quad Hopper UAV system. By integrating state-of-the-art sensors with robust path planning algorithms and real-time data processing capabilities, the system establishes a new benchmark for UAV-based soil texture mapping and stress detection. The comprehensive design ensures the system is well equipped to meet the challenges of modern agriculture, empowering researchers and farmers with actionable insights for sustainable and efficient farming practices.

3.1. Study Area and Climatic Conditions

The study was conducted in Thessaloniki, Greece, as a pilot case study within the project E-SPFdigit, funded by the European Union under Grant Agreement No. 101157922. The field is characterized by a Mediterranean climate with mild winters and hot summers. The UAV missions were performed at an average flight altitude of 50 m to balance spatial resolution and coverage.

3.2. NDVI and PRI Description

The Normalized Difference Vegetation Index (NDVI) is a widely used indicator for vegetation health, calculated using the reflectance in the near-infrared (NIR) and red wavelengths. It is defined as NDVI = (NIR − Red)/(NIR + Red), where higher values typically indicate healthier vegetation [28]. On the other hand, the Photochemical Reflectance Index (PRI) is derived from the reflectance at 531 nm and 570 nm, and is primarily used to assess photosynthetic activity and stress responses in plants. Together, these indices provide valuable insights into plant health and stress detection when analyzed using hyperspectral imaging [29].
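A minimal Python sketch of the two indices follows. NDVI is implemented exactly as defined above; for PRI, the commonly used normalized-difference form (R531 − R570)/(R531 + R570) is assumed. The helper name `normalized_difference` and the sample reflectance values are illustrative only and are not measurements from this study.

```python
def normalized_difference(a, b):
    """Return (a - b) / (a + b), or None when the denominator is zero."""
    total = a + b
    if total == 0:
        return None
    return (a - b) / total


def ndvi(nir, red):
    """NDVI = (NIR - Red) / (NIR + Red)."""
    return normalized_difference(nir, red)


def pri(r531, r570):
    """PRI in its common form: (R531 - R570) / (R531 + R570)."""
    return normalized_difference(r531, r570)


# Illustrative reflectance values (fractions between 0 and 1).
vegetated_pixel = ndvi(nir=0.5, red=0.1)
bare_soil_pixel = ndvi(nir=0.3, red=0.25)
pri_example = pri(r531=0.08, r570=0.1)

print(vegetated_pixel, bare_soil_pixel, pri_example)
```

Both indices are bounded between −1 and 1 for non-negative reflectance, which makes them convenient as input features alongside raw spectral bands.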

3.3. Sensors

The multi-modal stress detection system leverages three advanced sensing technologies: hyperspectral imaging, gamma-ray spectrometry, and thermal imaging. Each of these sensors contributes uniquely to the precision and robustness of agricultural monitoring by capturing specific environmental and physiological data.

Hyperspectral imaging is a critical component of the system, enabling detailed spectral analysis across a wide range of wavelengths. This sensor provides reflectance data at 5 nm intervals across the visible, near-infrared, and shortwave infrared spectra. With a reflectance accuracy of ±2%, hyperspectral imaging is highly sensitive to variations in plant chlorophyll content, water stress, and soil properties, making it indispensable for calculating vegetation indices such as the NDVI and PRI [30].

Gamma-ray spectrometry offers insights into soil composition by detecting isotopes and measuring gamma radiation counts. This sensor combines fine energy resolution with sensitivity to radiation levels as low as 10 counts per second (cps). By employing advanced noise correction techniques, such as Bayesian inversion, gamma-ray spectrometry enables precise mapping of soil nutrient levels and the detection of abiotic stresses like salinity [31].

Thermal imaging captures surface temperature data of crops and soil, essential for assessing evapotranspiration and water stress. It offers a spatial resolution of 640 × 480 pixels; its ground sampling distance (GSD) is set by the 50 m flight altitude and the camera optics. With a temperature accuracy of ±0.5 °C and sensitivity to temperature differences as small as 0.1 °C, thermal imaging effectively identifies heat stress in crops. Collectively, these sensors form the backbone of the multi-modal stress detection system, ensuring comprehensive and reliable data acquisition [32].
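To show how ground sampling distance relates to flight altitude, the sketch below applies the standard pinhole-camera relation GSD = altitude × pixel pitch / focal length to the 640 × 480 thermal sensor at 50 m. The pixel pitch (12 µm) and focal length (13 mm) are hypothetical placeholder values chosen only to make the arithmetic concrete; they are not specifications of the camera used in this study.

```python
def ground_sampling_distance(altitude_m, pixel_pitch_m, focal_length_m):
    """Ground distance covered by one pixel for a nadir-pointing camera."""
    if altitude_m <= 0 or pixel_pitch_m <= 0 or focal_length_m <= 0:
        raise ValueError("altitude, pixel pitch and focal length must be positive")
    return altitude_m * pixel_pitch_m / focal_length_m


def image_footprint(gsd_m, width_px, height_px):
    """Ground footprint (width, height) in metres of a single frame."""
    return gsd_m * width_px, gsd_m * height_px


# ASSUMED optics, for illustration only (not taken from the paper).
ASSUMED_PIXEL_PITCH_M = 12e-6
ASSUMED_FOCAL_LENGTH_M = 13e-3

gsd = ground_sampling_distance(50.0, ASSUMED_PIXEL_PITCH_M, ASSUMED_FOCAL_LENGTH_M)
footprint_w, footprint_h = image_footprint(gsd, 640, 480)

print(f"GSD: {gsd * 100:.1f} cm/pixel")
print(f"Footprint: {footprint_w:.1f} m x {footprint_h:.1f} m")
```

Because GSD scales linearly with altitude, halving the flight height halves the pixel size on the ground but also halves the footprint of each frame, which is the resolution-versus-coverage trade-off behind the choice of flight altitude.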

3.4. UAV Platform

The Quad Hopper drone is the UAV platform utilized for multi-modal stress detection. Designed for heavy payloads and extended flight durations, the Quad Hopper integrates seamlessly with the sensor suite, ensuring efficient and precise data collection [33]. Its payload capacity allows it to carry the hyperspectral, gamma-ray, and thermal imaging sensors simultaneously. Its petrol engine-powered propulsion system provides a robust power source and extended flight autonomy [34,35].

The drone features a redundant power plant transmission system, which integrates power from its four engines and transfers it effectively to the rotor set. This design ensures operational reliability and reduces the risk of system failure during critical missions. The Quad Hopper’s onboard systems are equipped with real-time data processing capabilities, allowing the drone to analyze sensor data during flight. This capability reduces latency and facilitates timely decision-making in the field [36]. Additionally, the drone’s navigation system is enhanced with RTK GPS, providing centimeter-level positional accuracy for precise aerial scanning. Capable of covering large agricultural areas in a single mission, the Quad Hopper offers high efficiency for soil and crop monitoring tasks. The platform is further designed to withstand harsh environmental conditions, including light rain and wind. Its adaptability, combined with its payload capacity and power system, makes the Quad Hopper a suitable choice for multi-modal stress detection in precision agriculture.

3.5. Field Implementation Challenges

Deploying the Quad Hopper system in real-world agricultural settings poses several challenges. Environmental variability, including fluctuating light conditions, wind interference, and temperature extremes, can impact sensor performance. To mitigate these effects, the system employs real-time calibration techniques, such as adaptive reflectance normalization and dynamic thermal offset adjustments. Data transmission is another critical challenge, particularly in remote areas with limited connectivity. The onboard preprocessing capabilities of the Quad Hopper reduce data size, enabling efficient storage and transmission. Furthermore, the diverse agricultural environments require extensive testing across various crop types and geographic regions to ensure consistent performance. Addressing these challenges is essential for large-scale adoption and reliable stress detection in precision agriculture.

3.6. Economic Viability

The economic viability of the Quad Hopper system is a pivotal consideration for its adoption in the agricultural industry. Traditional stress detection methods often involve labor-intensive manual inspections or costly satellite imagery subscriptions, both of which can be prohibitive for smallholder farmers [37]. In contrast, the Quad Hopper offers a cost-effective alternative by combining multi-modal sensors and autonomous UAV operations. The system reduces operational costs by enabling early stress detection and targeted interventions, thereby minimizing input waste and increasing crop yield. Additionally, the modular design allows scalability, catering to both small-scale farms and large agricultural enterprises. Preliminary cost analyses indicate a significant return on investment, particularly when implemented over multiple growing seasons, highlighting the system’s potential to transform agricultural practices economically.

3.7. Ethical and Environmental Impact

The Quad Hopper system aligns with sustainable agricultural practices by promoting resource efficiency and reducing environmental impact. Early stress detection minimizes the overuse of fertilizers and pesticides, lowering the risk of chemical runoff and soil degradation [38]. By enabling precise, localized interventions, the system supports the conservation of natural resources, including water and energy. Ethical considerations include ensuring equitable access to this technology, particularly for resource-constrained farmers in developing regions. Partnerships with governmental and non-governmental organizations can facilitate affordability and accessibility. Moreover, the system’s design prioritizes minimal environmental disruption, with low-noise operations and compliance with UAV regulatory standards. The ethical and environmental benefits of the Quad Hopper reinforce its role in advancing sustainable and inclusive agriculture.

3.8. Algorithm Development

The algorithm for stress detection integrates multi-modal data from hyperspectral, thermal, and gamma-ray sensors, employing advanced data preprocessing, vegetation index computation, and machine learning models to achieve precise soil and crop condition classification. The preprocessing stage is crucial to ensure data consistency and reliability. Hyperspectral data, represented as $R(\lambda)$ (reflectance values at wavelength $\lambda$), are normalized to reduce variability using the following equation:

$$R_{\mathrm{norm}}(\lambda) = \frac{R(\lambda) - R_{\min}}{R_{\max} - R_{\min}} \tag{1}$$

where $R_{\min}$ and $R_{\max}$ denote the minimum and maximum reflectance values, respectively.
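The normalization step, which rescales reflectance using its minimum and maximum values, can be sketched in Python as follows. The function name `min_max_normalize`, the handling of a flat spectrum, and the sample values are assumptions made for illustration rather than details of the authors' implementation.

```python
def min_max_normalize(reflectance):
    """Rescale a sequence of reflectance values to the range [0, 1]."""
    if not reflectance:
        raise ValueError("reflectance must contain at least one value")
    r_min = min(reflectance)
    r_max = max(reflectance)
    span = r_max - r_min
    if span == 0:
        # A flat spectrum carries no contrast; map every band to 0.0.
        return [0.0 for _ in reflectance]
    return [(r - r_min) / span for r in reflectance]


# Illustrative reflectance samples at four wavelengths.
spectrum = [0.2, 0.4, 0.6, 1.0]
normalized = min_max_normalize(spectrum)
print(normalized)
```

After normalization, the smallest value in each spectrum maps to 0 and the largest to 1, so spectra acquired under different illumination levels become more directly comparable.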

Thermal data, representing the temperature at each pixel, are calibrated using reference blackbody temperatures to adjust for environmental factors (Equation (2)), with a correction term that accounts for ambient temperature fluctuations.

Gamma-ray spectrometry data, indicating counts at energy levels $E$, are corrected for background noise using Bayesian inversion techniques (Equation (3)).

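Following preprocessing, vegetation indices are calculated to derive critical features. The Normalized Difference Vegetation Index (NDVI), essential for assessing vegetation health, is computed as follows:

$$\mathrm{NDVI} = \frac{R_{\mathrm{NIR}} - R_{\mathrm{Red}}}{R_{\mathrm{NIR}} + R_{\mathrm{Red}}} \tag{4}$$

where $R_{\mathrm{NIR}}$ and $R_{\mathrm{Red}}$ are the reflectance values in the near-infrared and red bands, respectively.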

The Photochemical Reflectance Index (PRI), indicative of photosynthetic activity, is derived as follows:

$$\mathrm{PRI} = \frac{R_{531} - R_{570}}{R_{531} + R_{570}} \tag{5}$$

where $R_{531}$ and $R_{570}$ are reflectance values at 531 nm and 570 nm wavelengths.

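The core of the algorithm employs a hybrid deep learning framework for feature extraction and classification. Convolutional Neural Networks (CNNs) extract spatial features $F_s$ from the normalized hyperspectral data $R_{\mathrm{norm}}$ and the calibrated thermal data $T_{\mathrm{cal}}$:

$$F_s = \mathrm{CNN}(R_{\mathrm{norm}}, T_{\mathrm{cal}}) \tag{6}$$

Recurrent Neural Networks (RNNs) are then applied to capture temporal features $F_t$ from the background-corrected gamma-ray time series data $G_{\mathrm{corr}}$:

$$F_t = \mathrm{RNN}(G_{\mathrm{corr}}) \tag{7}$$

These features are concatenated into a unified feature vector $F$:

$$F = [F_s; F_t] \tag{8}$$

The classification layer maps $F$ into stress categories (e.g., abiotic or biotic stress) using a softmax activation function:

$$\hat{y} = \mathrm{softmax}(W F + b) \tag{9}$$

where $W$ and $b$ are the weights and biases of the classification layer.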
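The fusion and classification steps (concatenating spatial and temporal features, then applying a softmax layer) can be sketched in plain Python as below. The feature values, weight matrix, biases, and the function name `classify` are hypothetical and chosen only to make the example runnable; in the actual system the features would come from trained CNN and RNN branches and the weights from training.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(spatial_features, temporal_features, weights, biases, labels):
    """Concatenate both feature sets and apply a linear layer plus softmax."""
    fused = list(spatial_features) + list(temporal_features)
    if any(len(row) != len(fused) for row in weights):
        raise ValueError("each weight row must match the fused feature length")
    if not (len(weights) == len(biases) == len(labels)):
        raise ValueError("weights, biases and labels must have equal length")
    logits = [
        sum(w * f for w, f in zip(row, fused)) + b
        for row, b in zip(weights, biases)
    ]
    probabilities = softmax(logits)
    best = max(range(len(probabilities)), key=probabilities.__getitem__)
    return labels[best], probabilities


# Hypothetical features and parameters, for illustration only.
spatial = [0.8, 0.1]      # stand-in for CNN output
temporal = [0.3]          # stand-in for RNN output
W = [[1.0, 0.0, 0.5],     # row for class "abiotic"
     [0.0, 1.0, 0.5]]     # row for class "biotic"
b = [0.0, 0.0]

label, probs = classify(spatial, temporal, W, b, ["abiotic", "biotic"])
print(label, probs)
```

Subtracting the largest logit before exponentiation leaves the softmax output unchanged while avoiding overflow for large logits.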

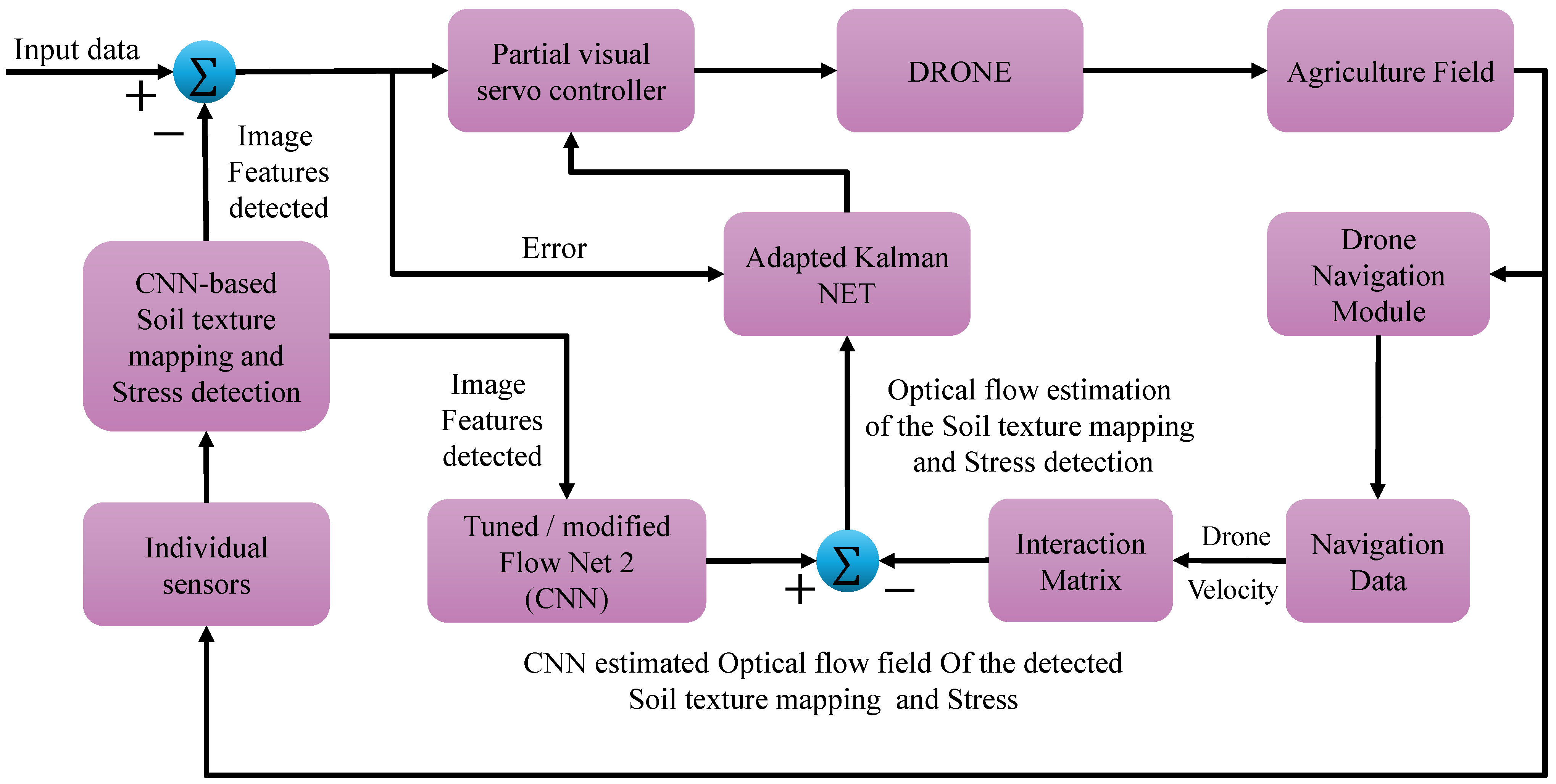

The sequence of the algorithm is described in Algorithm 1, while a flowchart depicting the complete data flow is illustrated in Figure 3.

| Algorithm 1 Multi-modal stress detection |
| --- |
| 1: Input: hyperspectral data, thermal data, gamma-ray data |
| 2: Normalize hyperspectral data using Equation (1) |
| 3: Calibrate thermal data using Equation (2) |
| 4: Apply background correction to gamma-ray data using Equation (3) |
| 5: Compute NDVI using Equation (4) |
| 6: Compute PRI using Equation (5) |
| 7: Extract spatial features using CNNs (Equation (6)) |
| 8: Extract temporal features using RNNs (Equation (7)) |
| 9: Concatenate features into a unified feature vector (Equation (8)) |
| 10: Classify the feature vector into stress categories using softmax (Equation (9)) |
| 11: Output: predicted stress class (abiotic or biotic stress) |

In addition, Figure 4 provides a detailed visualization of the methodology employed in this study. It illustrates the workflow of the proposed UAV-based hyperspectral imaging and advanced AI algorithm integration for soil texture mapping and stress detection. The figure outlines the sequence of operations, starting with UAV data acquisition using hyperspectral, thermal, and gamma-ray sensors. It then highlights the preprocessing steps, such as noise reduction and data alignment, followed by the hybrid deep learning framework for feature extraction and classification. This workflow demonstrates the seamless integration of multi-modal data and advanced computational techniques, ensuring efficient and accurate agricultural analysis.

5. Discussion

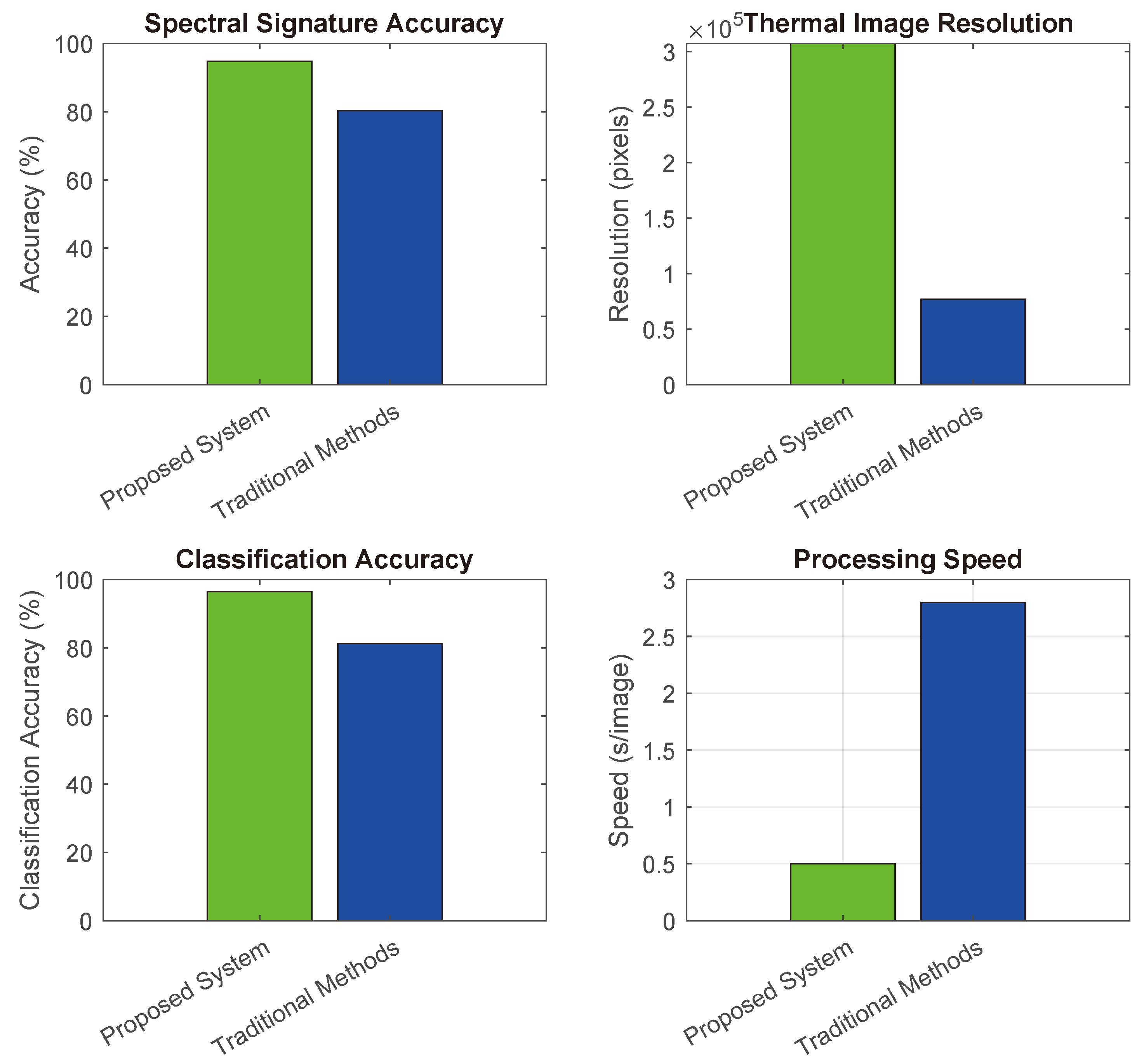

The results indicate that the integration of multi-modal sensors and advanced deep learning algorithms offers a transformative approach to stress detection and soil analysis. Compared to traditional techniques, which rely heavily on manual data collection and basic image processing, the proposed system delivers significantly higher accuracy and faster processing speeds. For instance, while conventional methods often fail to detect early-stage fungal infections, the hyperspectral imaging and thermal data in this system provide early warning signs, enabling timely intervention. Furthermore, the real-time capabilities of the UAV platform, combined with its ability to process large datasets autonomously, reduce operational costs and improve scalability. By addressing the limitations of traditional methods, this system has the potential to revolutionize agricultural monitoring, ensuring more sustainable and efficient farming practices.

Related studies [38,39] likewise emphasize the relevance of UAV-based soil texture analysis.

6. Conclusions and Future Perspectives

The proposed Quad Hopper system represents a significant advancement in UAV-based precision agriculture, combining multi-modal sensing, real-time data processing, and advanced machine learning algorithms for efficient stress detection and soil analysis. The results demonstrate exceptional accuracy and processing speed, outperforming traditional methods and addressing critical challenges in agricultural monitoring. The traditional methods referenced include laboratory-based soil texture analysis, which typically involves mechanical sieving and sedimentation techniques to separate soil fractions (sand, silt, and clay). Additionally, visual inspection and manual field sampling are commonly employed, which are time-consuming, labor-intensive, and lack spatial resolution. These conventional approaches often fail to provide real-time insights, making them less effective compared to UAV-based hyperspectral imaging integrated with advanced AI algorithms.

The integration of hyperspectral, thermal, and gamma-ray data, coupled with hybrid CNN-RNN models, enables precise classification of biotic and abiotic stress factors, as well as accurate soil texture mapping. However, challenges related to environmental variability, data transmission, and scalability must be addressed through continued research and development. Future work will focus on expanding the system’s capabilities to include additional sensors, enhancing its adaptability to diverse crops and environments, and optimizing economic feasibility for broader adoption. By fostering sustainable and efficient farming practices, the Quad Hopper has the potential to revolutionize modern agriculture and contribute to global food security.