Smart Glove: A Cost-Effective and Intuitive Interface for Advanced Drone Control

Abstract

1. Introduction

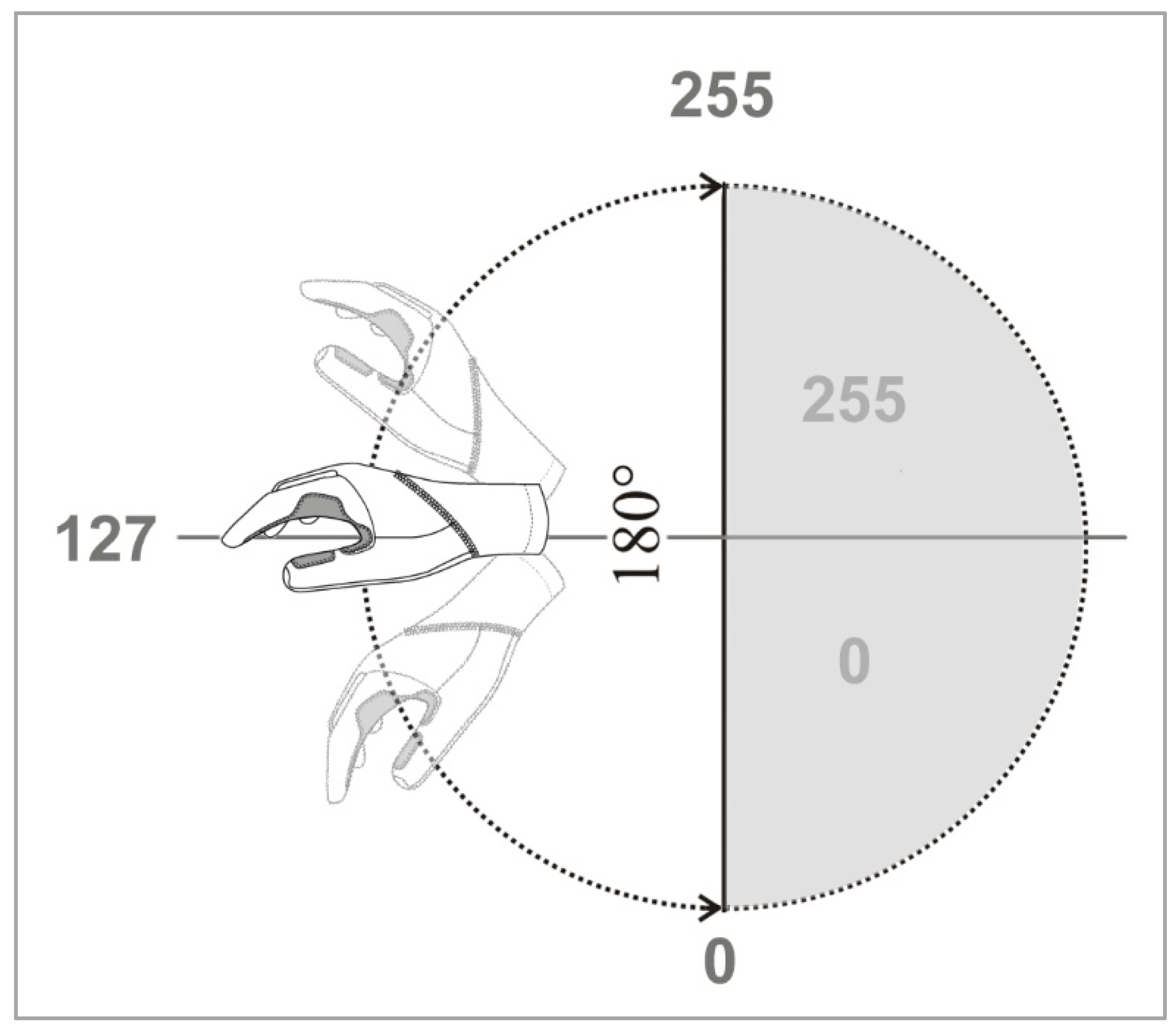

2. Methods

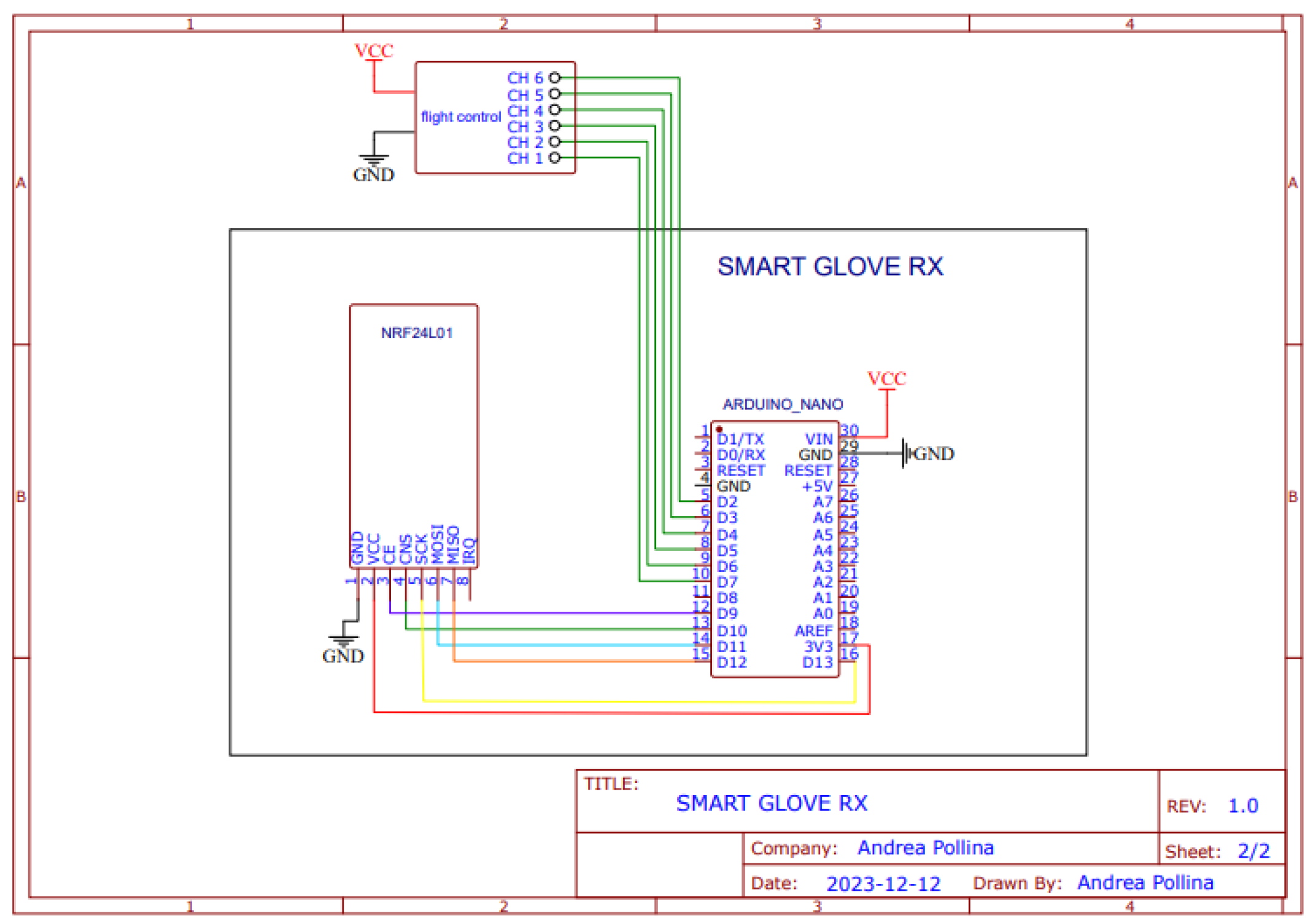

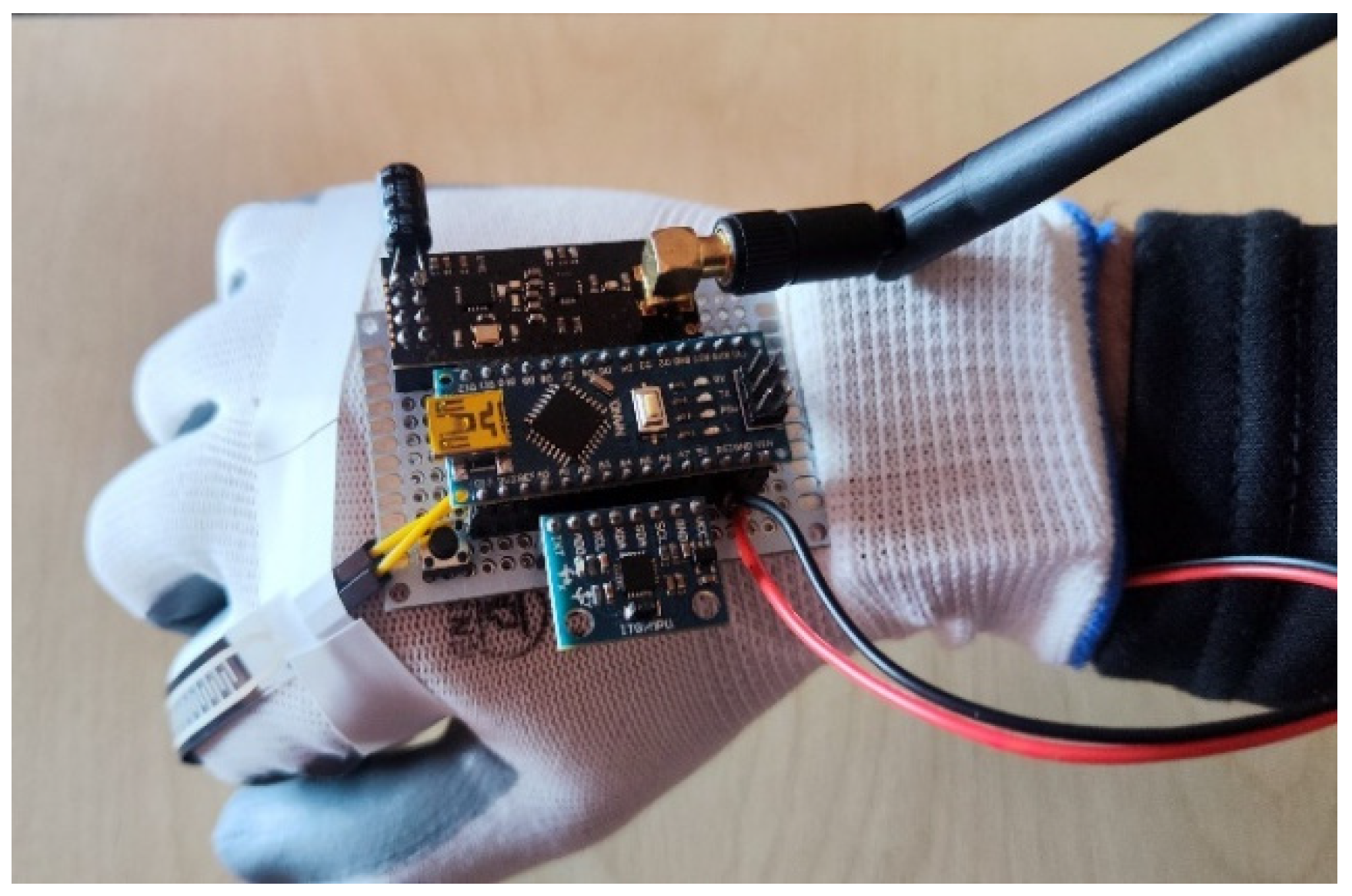

- Two Arduino Nano boards with ATmega328P processors for data management and processing, one mounted on the glove and the other on the drone.

- Two nRF24L01+PA+LNA 2.4 GHz radio transceiver modules (TX and RX) for transmitting and receiving the communication signals. This version extends the transmission range to up to 1100 m, a significant increase over the roughly 100 m of the basic nRF24L01 module typically used in prototypes of this kind. The extended range is achievable only when the SMA connector with an SMA antenna and the RFX2401C range-extender chip are present; the latter integrates the PA (power amplifier), the LNA (low-noise amplifier), and the circuitry that switches between transmit and receive.

- An MPU6050 (Motion Processing Unit), a MEMS (Micro-Electro-Mechanical System) motion-sensing device that integrates a six-degrees-of-freedom (6-DOF) sensor, namely a three-axis accelerometer and a three-axis gyroscope, in a single compact chip. We integrated this sensor into the Smart Glove v1.0 device to simultaneously measure linear acceleration and angular velocity along the x, y, and z axes. The sensor communicates with microcontrollers such as the Arduino through serial data transmission over the I2C bus.

- One flex sensor, placed on the glove’s index finger, detects the finger’s bending (flexion). A flex sensor changes its electrical resistance according to its curvature. Such sensors are typically made of a thin layer of resistive material, such as graphene or conductive ink, deposited on a flexible substrate; among piezoresistive materials, graphene-based ones are particularly noteworthy for their high conductivity and flexibility.

- One LiPo battery (1200 mAh, 7.2 V), whose capacity was chosen to ensure more than 10 h of continuous Smart Glove v1.0 operation, far exceeding the drone’s operating time of approximately 45 min.
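The jump from roughly 100 m to 1100 m quoted for the nRF24L01+PA+LNA modules can be put in perspective with the free-space path-loss (Friis) formula, FSPL = 20·log10(4πdf/c). The sketch below assumes ideal free-space propagation at an assumed mid-band frequency of 2.44 GHz and ignores antenna gains, obstacles, and Fresnel-zone clearance, so it only indicates how much extra link margin the PA, LNA, and external antenna must provide, not the real-world range.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def free_space_path_loss_db(distance_m: float, freq_hz: float) -> float:
    """Friis free-space path loss: FSPL = 20*log10(4*pi*d*f/c), in dB."""
    return 20.0 * math.log10(4.0 * math.pi * distance_m * freq_hz / SPEED_OF_LIGHT)


# The nRF24L01 works in the 2.4 GHz ISM band; 2.44 GHz is an assumed mid-band channel.
FREQ_HZ = 2.44e9

loss_100 = free_space_path_loss_db(100.0, FREQ_HZ)
loss_1100 = free_space_path_loss_db(1100.0, FREQ_HZ)
# Range grows 11x, so the loss grows by 20*log10(11), independent of frequency.
extra_margin_db = loss_1100 - loss_100

print(f"FSPL at  100 m: {loss_100:.1f} dB")
print(f"FSPL at 1100 m: {loss_1100:.1f} dB")
print(f"Extra link margin required: {extra_margin_db:.1f} dB")
```

Because free-space loss grows with 20·log10(d), an elevenfold range increase always costs about 20.8 dB, whatever the channel frequency.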
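To illustrate what the Arduino receives from the MPU6050 over I2C, the following sketch decodes the 14-byte burst commonly read starting at register ACCEL_XOUT_H (0x3B) at the default I2C address 0x68, after the device has been woken from its power-on sleep mode by writing 0 to PWR_MGMT_1 (0x6B). It assumes the power-on full-scale ranges (±2 g and ±250 °/s, i.e., 16384 LSB/g and 131 LSB per °/s per the datasheet). The function name is hypothetical, and this is an illustration in Python, not the Smart Glove v1.0 firmware.

```python
import struct

# MPU-6050 default full-scale settings (datasheet): accelerometer +/-2 g,
# gyroscope +/-250 deg/s. Change these if ACCEL_CONFIG / GYRO_CONFIG are reprogrammed.
ACCEL_LSB_PER_G = 16384.0
GYRO_LSB_PER_DPS = 131.0


def parse_mpu6050_burst(raw: bytes):
    """Decode a 14-byte burst read starting at ACCEL_XOUT_H (0x3B).

    Layout: ACCEL_X, ACCEL_Y, ACCEL_Z, TEMP, GYRO_X, GYRO_Y, GYRO_Z,
    each a big-endian signed 16-bit integer.
    Returns (accel in g, angular velocity in deg/s, temperature in deg C).
    """
    if len(raw) != 14:
        raise ValueError("expected exactly 14 bytes")
    ax, ay, az, temp_raw, gx, gy, gz = struct.unpack(">7h", raw)
    accel_g = (ax / ACCEL_LSB_PER_G, ay / ACCEL_LSB_PER_G, az / ACCEL_LSB_PER_G)
    gyro_dps = (gx / GYRO_LSB_PER_DPS, gy / GYRO_LSB_PER_DPS, gz / GYRO_LSB_PER_DPS)
    temp_c = temp_raw / 340.0 + 36.53  # temperature formula from the register map
    return accel_g, gyro_dps, temp_c


# Example: sensor lying flat (1 g on z) while rotating at 1 deg/s about x.
sample = struct.pack(">7h", 0, 0, 16384, 0, 131, 0, 0)
accel, gyro, temp = parse_mpu6050_burst(sample)
print(accel, gyro, temp)
```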
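A flex sensor is typically read through a voltage divider on one of the Arduino Nano’s 10-bit analog inputs. The sketch below assumes the flex sensor sits between the 5 V supply and the ADC pin, with a fixed resistor from the ADC pin to ground; the resistor value and the two calibration resistances (finger straight and bent by 90°) are hypothetical placeholders to be replaced by measured values, and the linear resistance-to-angle mapping is a simplification.

```python
# Hypothetical circuit values; replace them with values measured on the real glove.
VCC = 5.0            # supply and ADC reference voltage (V)
ADC_MAX = 1023       # full-scale code of the ATmega328P 10-bit ADC
R_FIXED = 47_000.0   # assumed fixed divider resistor (ohm)
R_FLAT = 25_000.0    # assumed flex resistance with the finger straight (ohm)
R_BENT = 100_000.0   # assumed flex resistance with the finger bent by 90 deg (ohm)


def flex_resistance(adc_reading: int) -> float:
    """Invert the divider: Vout = VCC * R_FIXED / (R_flex + R_FIXED),
    hence R_flex = R_FIXED * (VCC / Vout - 1)."""
    if not 0 < adc_reading <= ADC_MAX:
        raise ValueError("ADC reading out of range")
    v_out = VCC * adc_reading / ADC_MAX
    return R_FIXED * (VCC / v_out - 1.0)


def bend_angle_deg(adc_reading: int) -> float:
    """Linear interpolation between the two calibration points, clamped to 0..90 deg."""
    r = flex_resistance(adc_reading)
    angle = 90.0 * (r - R_FLAT) / (R_BENT - R_FLAT)
    return max(0.0, min(90.0, angle))


for reading in (700, 500, 341, 300):
    print(reading, round(flex_resistance(reading)), round(bend_angle_deg(reading), 1))
```

With this wiring, bending the finger raises the sensor’s resistance and therefore lowers the ADC reading.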
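As a rough check, the 10 h target translates into an average current and power budget for the glove electronics. The relations below are idealized: they ignore voltage-regulator losses, LiPo capacity derating, and the cell cut-off voltage, so the real budget is somewhat tighter.

$$
t_{\text{run}} \approx \frac{C}{I_{\text{avg}}}, \qquad t_{\text{run}} > 10\ \text{h} \iff I_{\text{avg}} < \frac{1200\ \text{mAh}}{10\ \text{h}} = 120\ \text{mA}
$$

$$
E = C \cdot V = 1.2\ \text{Ah} \times 7.2\ \text{V} = 8.64\ \text{Wh}, \qquad P_{\text{avg}} < \frac{8.64\ \text{Wh}}{10\ \text{h}} \approx 0.86\ \text{W}
$$

Ten hours also corresponds to more than 13 drone operating periods of about 45 min (10 h / 0.75 h ≈ 13.3).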

A. Sensor Calibration

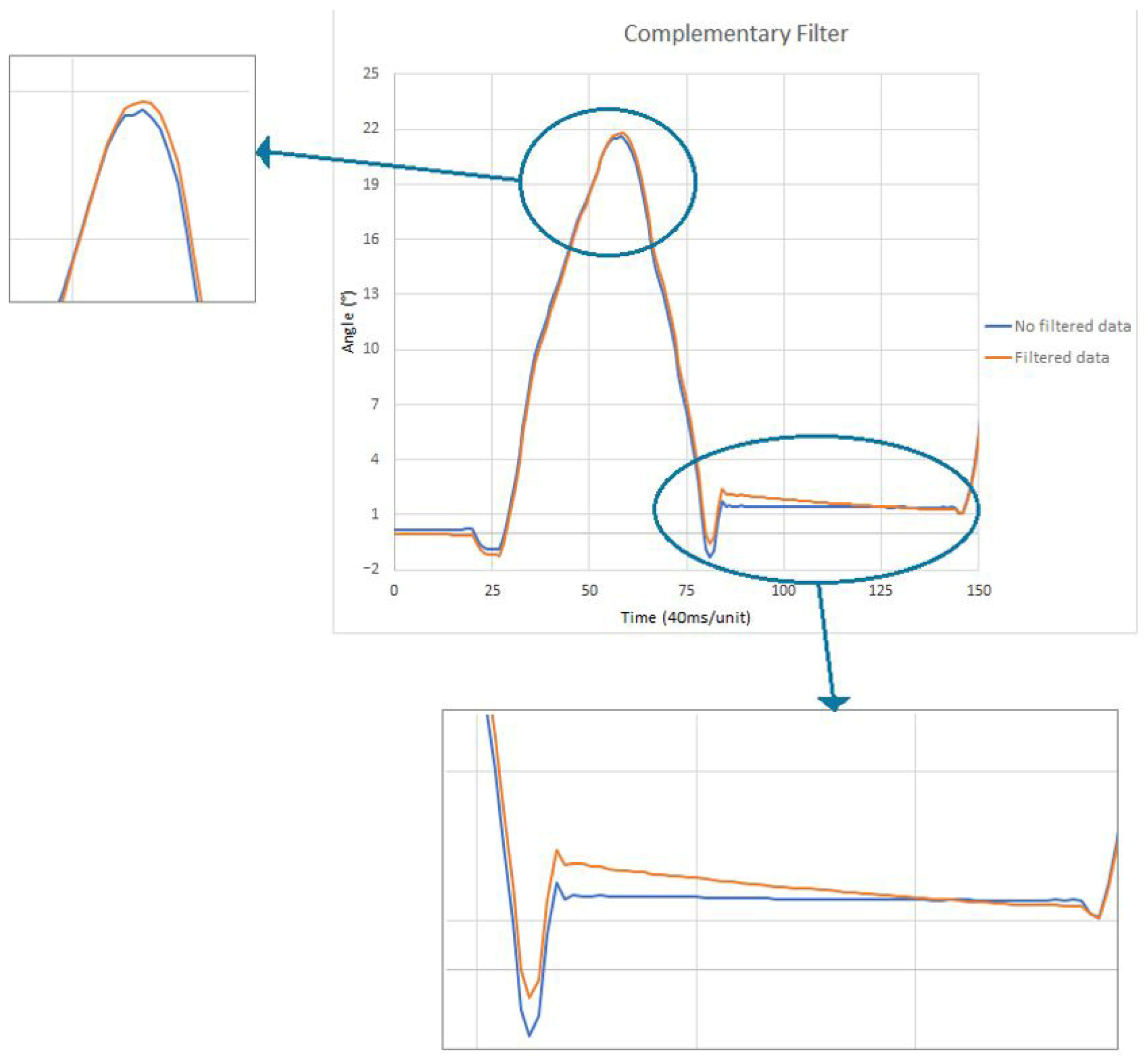
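A common static calibration for an MPU6050 averages readings taken while the glove rests flat and motionless: the mean gyroscope output is the gyroscope bias (the true angular velocity is zero), and the mean accelerometer output minus the expected (0, 0, 1 g) is the accelerometer bias. The sketch below assumes readings already converted to g and °/s with the z-axis vertical; it is a generic procedure, not necessarily the one the authors followed, and all function names are hypothetical.

```python
from statistics import fmean
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def estimate_gyro_bias(samples: Sequence[Vec3]) -> Vec3:
    """Mean gyroscope reading (deg/s) while the glove is held still.

    At rest the true angular velocity is zero, so the mean is the bias.
    """
    if not samples:
        raise ValueError("need at least one sample")
    x, y, z = (fmean(axis) for axis in zip(*samples))
    return (x, y, z)


def estimate_accel_bias(samples: Sequence[Vec3]) -> Vec3:
    """Mean accelerometer reading (g) minus the expected (0, 0, 1 g), z-axis vertical."""
    if not samples:
        raise ValueError("need at least one sample")
    x, y, z = (fmean(axis) for axis in zip(*samples))
    return (x, y, z - 1.0)


def apply_bias(reading: Vec3, bias: Vec3) -> Vec3:
    """Subtract a previously estimated bias from a raw reading."""
    return (reading[0] - bias[0], reading[1] - bias[1], reading[2] - bias[2])


# Example with two resting samples per sensor (in practice, hundreds are averaged).
gyro_bias = estimate_gyro_bias([(1.0, -2.0, 0.5), (3.0, -4.0, 0.5)])
accel_bias = estimate_accel_bias([(0.02, -0.01, 1.03), (0.0, 0.01, 1.01)])
print(gyro_bias, accel_bias, apply_bias((2.0, -3.0, 0.5), gyro_bias))
```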

B. Complementary Filter and Angle Estimation

- Total_angle represents the final Euler angle estimate produced by the filter.

- Gyro_angle denotes the rotation angle obtained by integrating the angular velocity measured by the gyroscope.

- Acc_angle signifies the rotation angle derived from the accelerometer.
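A widely used form of the complementary filter combining these three quantities is Total_angle = α·(Total_angle + Gyro_angle) + (1 − α)·Acc_angle, where Gyro_angle is the gyroscope rate multiplied by the sampling interval. The sketch below assumes this form with α = 0.98 (a typical value, not a coefficient taken from the paper) and an assumed 100 Hz loop, computes Acc_angle from the gravity vector with atan2, and simulates a glove held still at 30° of roll with an uncorrected gyroscope bias of 0.5 °/s.

```python
import math

ALPHA = 0.98  # assumed gyroscope weight (typical value, not taken from the paper)


def acc_angles_deg(ax: float, ay: float, az: float):
    """Acc_angle: roll and pitch (deg) from the gravity vector measured by the accelerometer."""
    roll = math.degrees(math.atan2(ay, az))
    pitch = math.degrees(math.atan2(-ax, math.hypot(ay, az)))
    return roll, pitch


def complementary_update(total_angle, gyro_rate_dps, acc_angle, dt, alpha=ALPHA):
    """One step: Total_angle = alpha*(Total_angle + Gyro_angle) + (1 - alpha)*Acc_angle."""
    gyro_angle = gyro_rate_dps * dt  # Gyro_angle: rotation integrated over one sample
    return alpha * (total_angle + gyro_angle) + (1.0 - alpha) * acc_angle


# Simulated usage: glove held still at 30 deg of roll, gyroscope with a 0.5 deg/s bias.
DT = 0.01          # assumed 100 Hz loop period (s)
GYRO_BIAS = 0.5    # deg/s, left uncorrected on purpose
acc_roll, _ = acc_angles_deg(0.0, math.sin(math.radians(30.0)), math.cos(math.radians(30.0)))

total_angle = 0.0
gyro_only = 0.0
for _ in range(2000):  # 20 s of samples
    total_angle = complementary_update(total_angle, GYRO_BIAS, acc_roll, DT)
    gyro_only += GYRO_BIAS * DT  # pure gyroscope integration, for comparison

print(f"filtered roll: {total_angle:.3f} deg, gyro-only drift: {gyro_only:.1f} deg")
```

The filtered estimate settles at 30 + α·b·Δt/(1 − α) ≈ 30.245°, a small constant offset, whereas pure gyroscope integration drifts by about 10° in 20 s; the residual offset is one reason to remove the gyroscope bias during calibration.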

3. Results and Discussion

A. Sensor Calibration Results

B. Complementary Filter and Angle Estimation Results

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Randieri, C.; Pollina, A.; Puglisi, A.; Napoli, C. Smart Glove: A Cost-Effective and Intuitive Interface for Advanced Drone Control. Drones 2025, 9, 109. https://doi.org/10.3390/drones9020109
