LOFF: LiDAR and Optical Flow Fusion Odometry

Abstract

1. Introduction

- We present a direction-separated data fusion approach that fuses optical flow odometry only along the degenerate direction of LiDAR SLAM, preserving the accuracy of the whole system's position estimates;

- We present a low-computation failure and recovery detector that lets our data fusion approach switch quickly between degenerate and general environments, together with an offset module that keeps the estimate smooth during switching;

- We verify the performance of our approach against state-of-the-art methods in real-world UAV experiments, as shown in the video https://youtu.be/ITytYCW8y5w (accessed on 21 July 2024).
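The first contribution is stated only at a high level. The following is a minimal sketch of the idea under stated assumptions, not the authors' implementation. It assumes the degenerate direction of the LiDAR estimate is already known as a 3D vector (for instance, the eigenvector of the smallest eigenvalue of the LiDAR registration Hessian, a common degeneracy test). Under that assumption, fusing "only in the degeneracy direction" can be read as keeping the LiDAR position in the well-constrained subspace and replacing just its component along that direction with the optical-flow estimate. The helper names `dot`, `normalize`, and `fuse_direction_separated` are hypothetical.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def normalize(v: Sequence[float]) -> Vec3:
    """Return v scaled to unit length; a zero vector has no direction."""
    n = math.sqrt(dot(v, v))
    if n == 0.0:
        raise ValueError("degenerate direction must be non-zero")
    return (v[0] / n, v[1] / n, v[2] / n)


def fuse_direction_separated(p_lidar: Sequence[float],
                             p_flow: Sequence[float],
                             degenerate_dir: Sequence[float]) -> Vec3:
    """Hypothetical direction-separated fusion of two 3D positions.

    Components orthogonal to the degenerate direction come from LiDAR;
    the component along it comes from optical flow.
    """
    d = normalize(degenerate_dir)
    # Scalar correction along d: flow projection minus LiDAR projection.
    delta = dot(p_flow, d) - dot(p_lidar, d)
    # Shift the LiDAR position only along d; the orthogonal part is untouched.
    return (p_lidar[0] + delta * d[0],
            p_lidar[1] + delta * d[1],
            p_lidar[2] + delta * d[2])
```

For a UAV whose LiDAR estimate drifts along a tunnel axis x, `fuse_direction_separated((10.0, 2.0, 1.5), (12.0, 2.3, 1.4), (1.0, 0.0, 0.0))` returns `(12.0, 2.0, 1.5)`: the along-tunnel coordinate comes from optical flow, while the lateral and vertical coordinates stay with LiDAR. This sketch ignores orientation, covariances, and the case of several degenerate directions.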
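The second contribution names a failure and recovery detector and an offset module without describing their internals here. One plausible low-cost design, offered purely as an illustrative assumption, thresholds a scalar degeneracy metric (for example, the smallest eigenvalue of the LiDAR registration Hessian) with two thresholds. The system switches to optical flow when the metric falls below `fail_threshold` and returns to LiDAR only when it rises above a higher `recover_threshold`. The gap between the two thresholds (hysteresis) prevents rapid toggling. At every switch an offset is recomputed so the output position does not jump when the active source changes. The class `SwitchingOdometry` and its parameters are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Sequence


@dataclass
class SwitchingOdometry:
    """Hypothetical LiDAR/optical-flow switch with hysteresis and an offset."""
    fail_threshold: float     # metric below this: treat LiDAR as degenerate
    recover_threshold: float  # metric above this: treat LiDAR as recovered
    use_flow: bool = field(default=False, init=False)  # start by trusting LiDAR
    offset: List[float] = field(default_factory=lambda: [0.0, 0.0, 0.0], init=False)
    last_output: Optional[List[float]] = field(default=None, init=False)

    def __post_init__(self) -> None:
        if self.recover_threshold <= self.fail_threshold:
            raise ValueError("recover_threshold must exceed fail_threshold")

    def step(self, p_lidar: Sequence[float], p_flow: Sequence[float],
             metric: float) -> List[float]:
        if self.last_output is None:
            # First sample: anchor the output to the LiDAR position.
            self.last_output = list(p_lidar)
        # Two thresholds (hysteresis) avoid toggling when the metric hovers near one value.
        failed = not self.use_flow and metric < self.fail_threshold
        recovered = self.use_flow and metric > self.recover_threshold
        if failed or recovered:
            self.use_flow = failed
            source = p_flow if self.use_flow else p_lidar
            # Offset chosen so the output at the switch equals the previous output.
            self.offset = [prev - s for prev, s in zip(self.last_output, source)]
        source = p_flow if self.use_flow else p_lidar
        self.last_output = [s + o for s, o in zip(source, self.offset)]
        return list(self.last_output)
```

This is a bumpless-transfer design. At the switching step the output holds its previous value, and afterwards it follows the increments of whichever source is active, so neither a failure nor a recovery produces a position jump.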

2. Related Work

3. Method

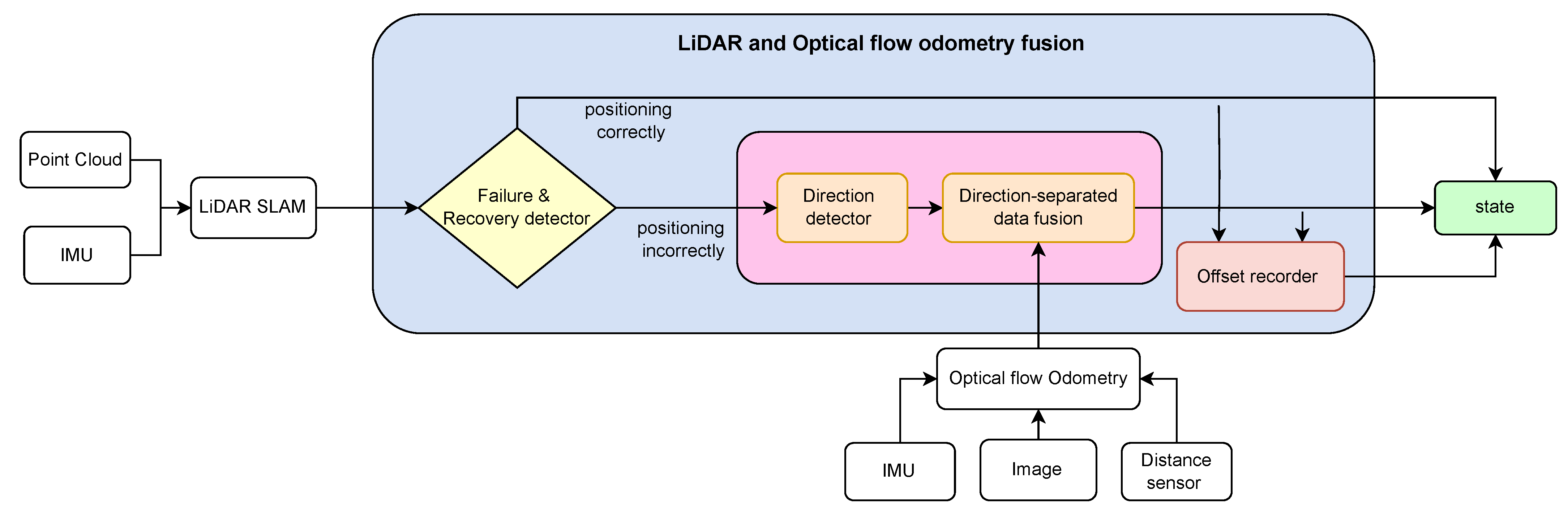

3.1. System Overview

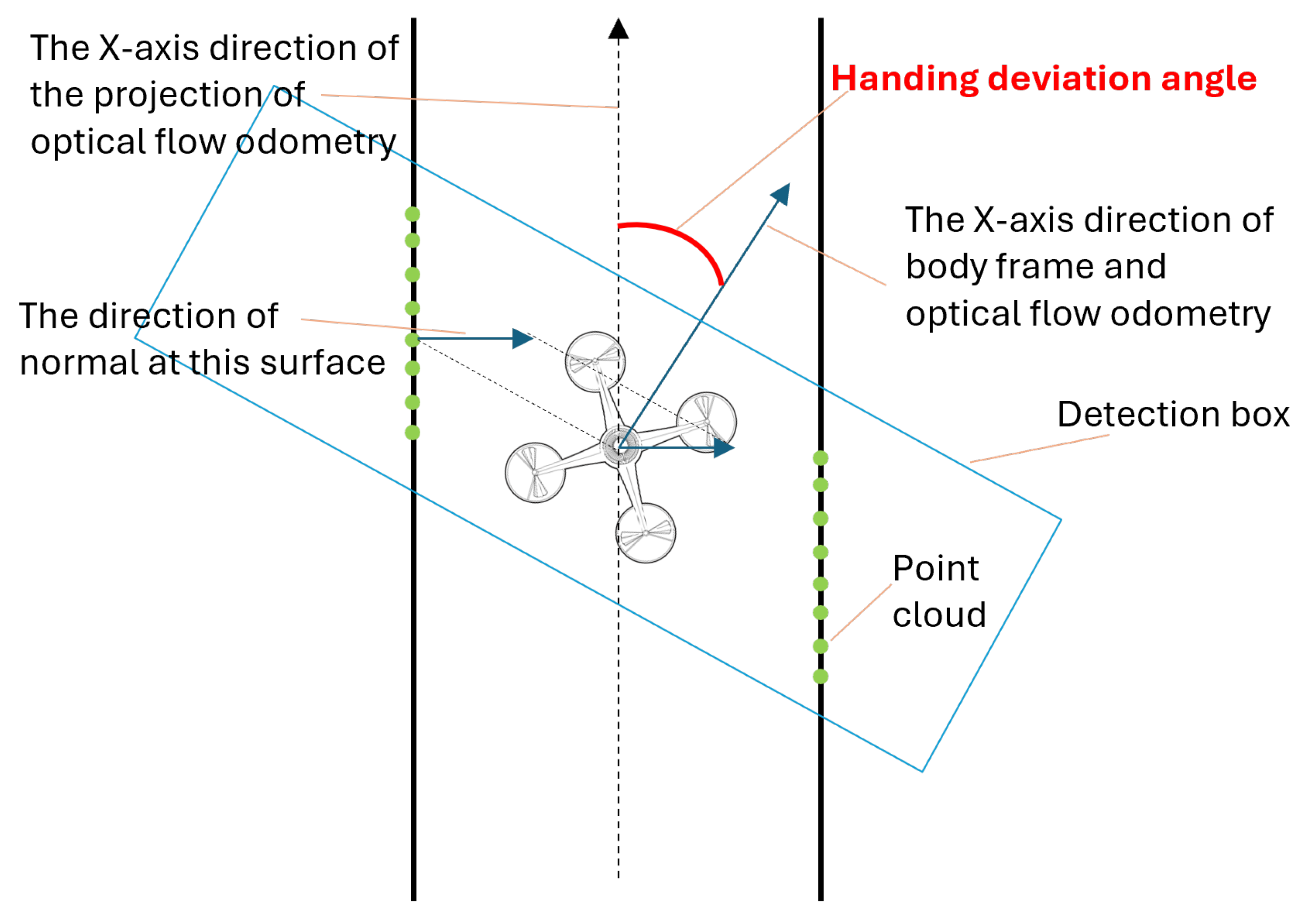

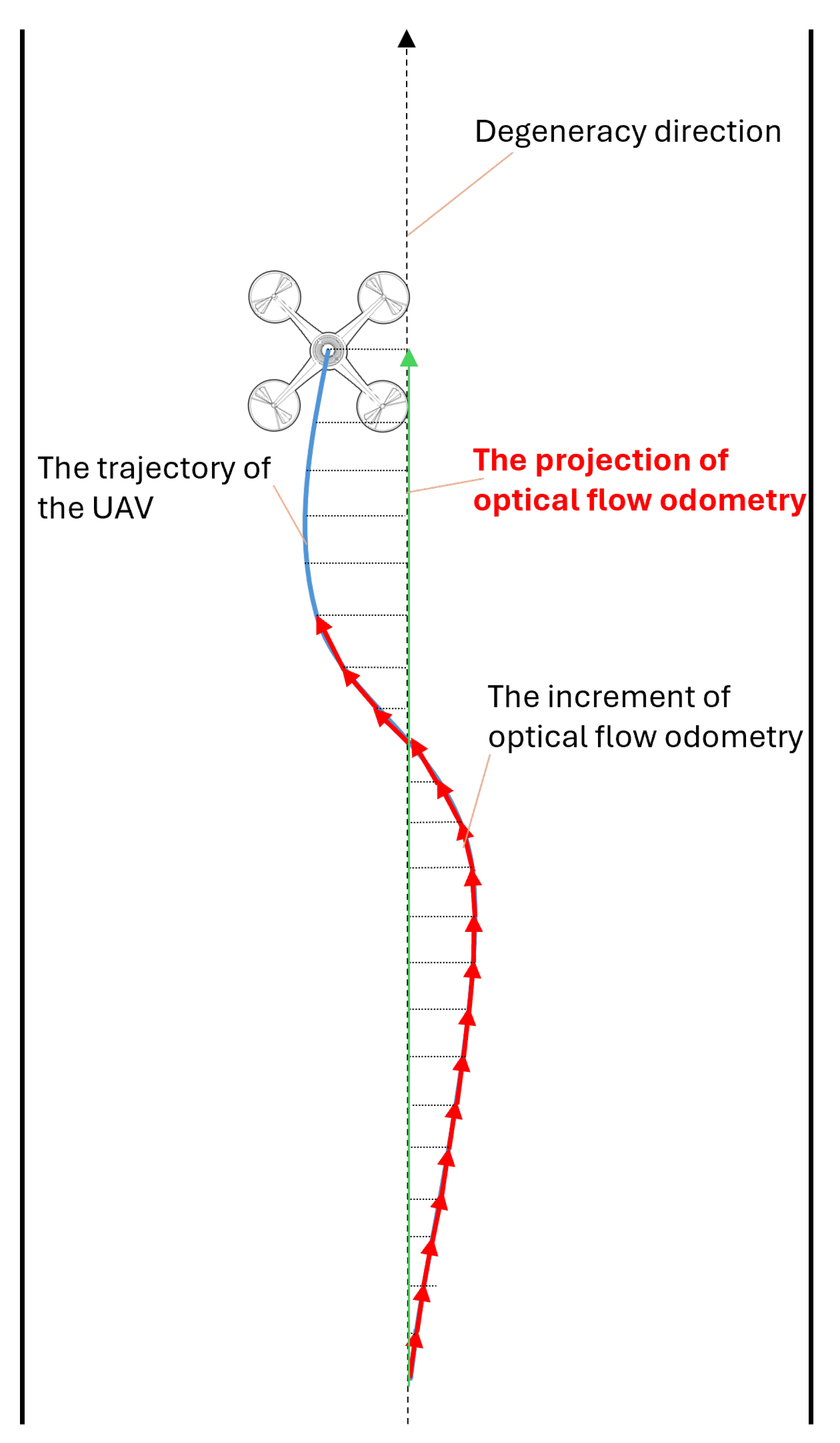

3.2. Direction-Separated Data Fusion

3.3. LiDAR Odometry Failure and Recovery Detector

Algorithm 1: LiDAR and optical flow odometry fusion approach

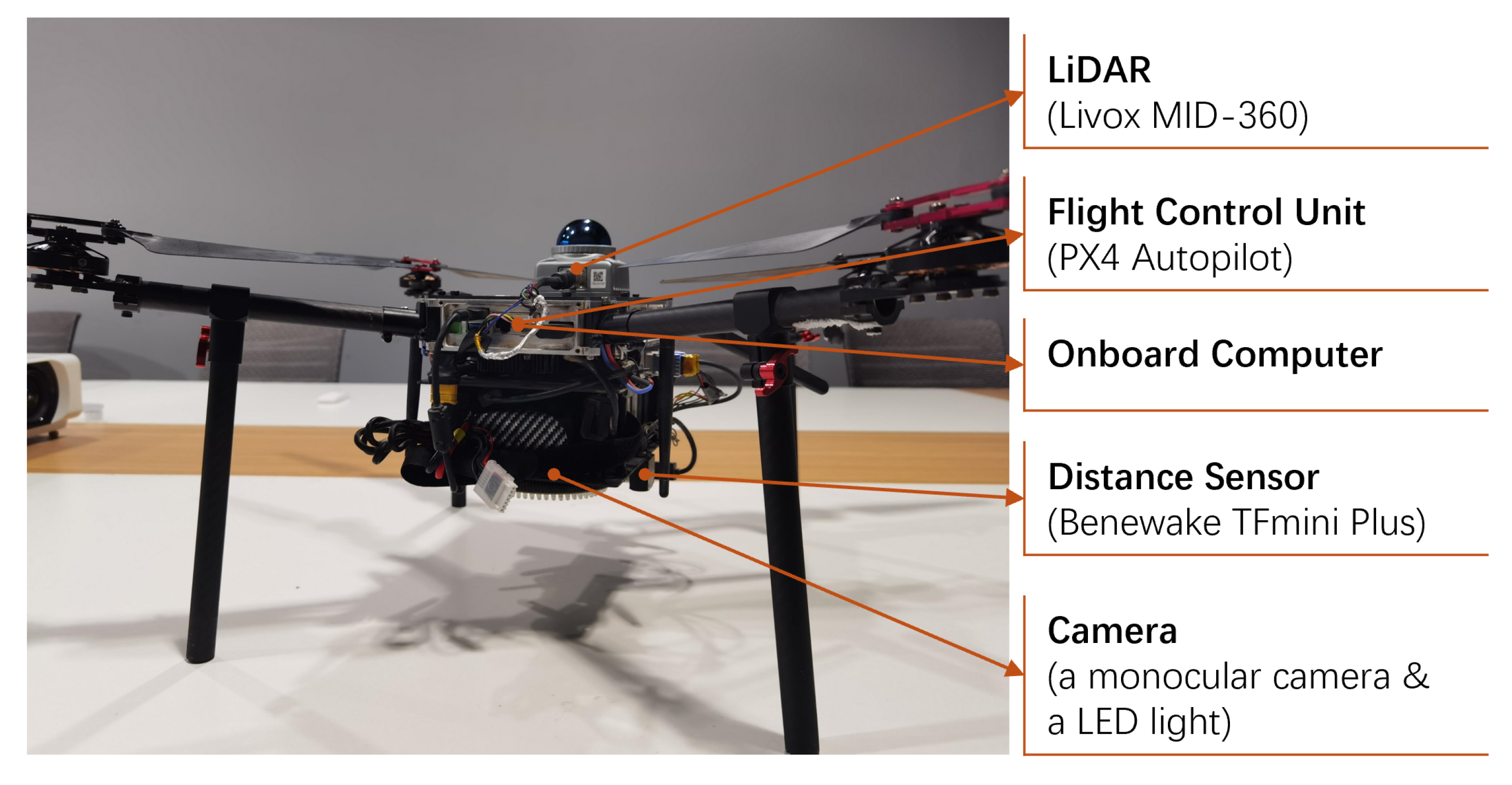

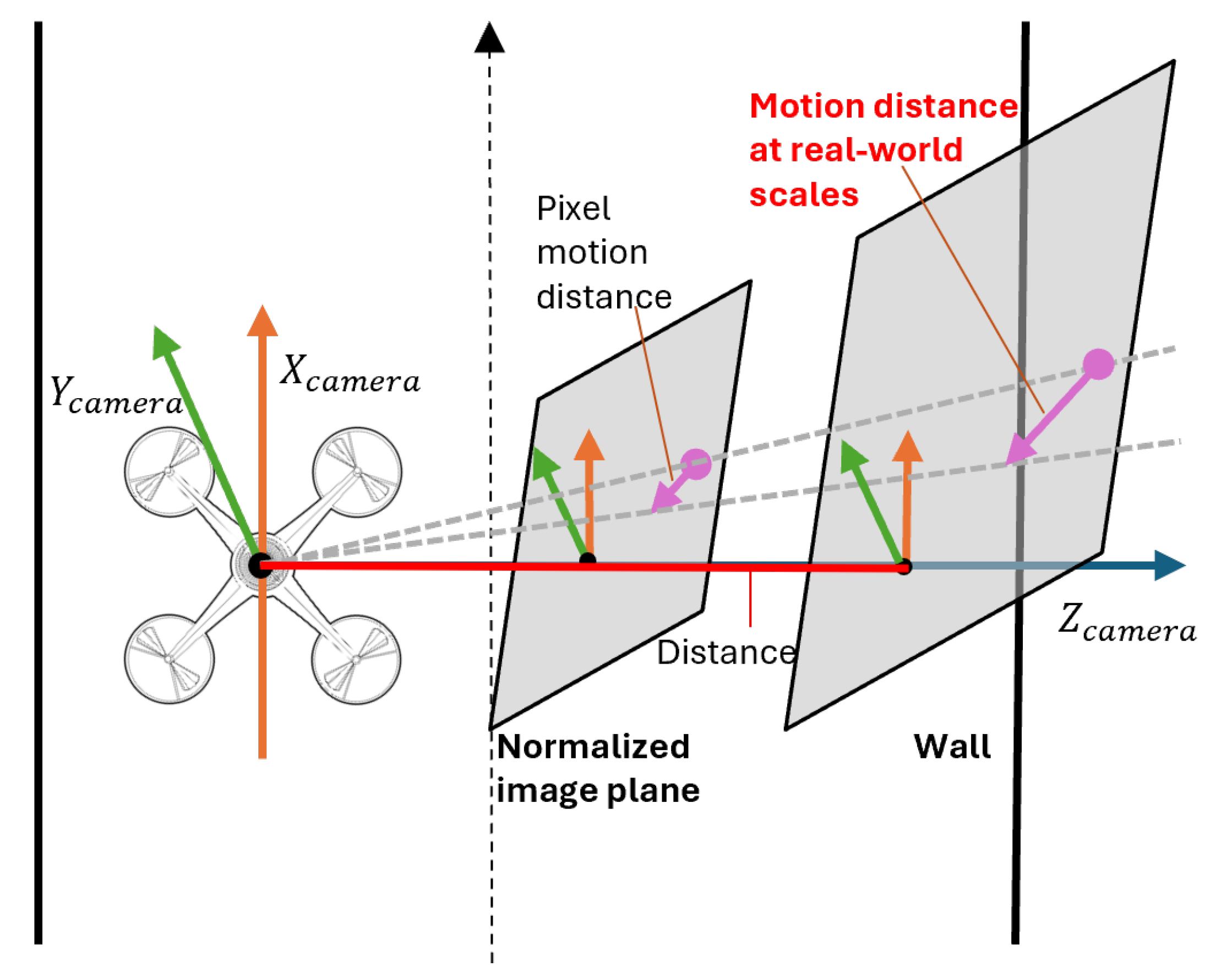

3.4. Optical Flow Odometry

4. Experiments and Results

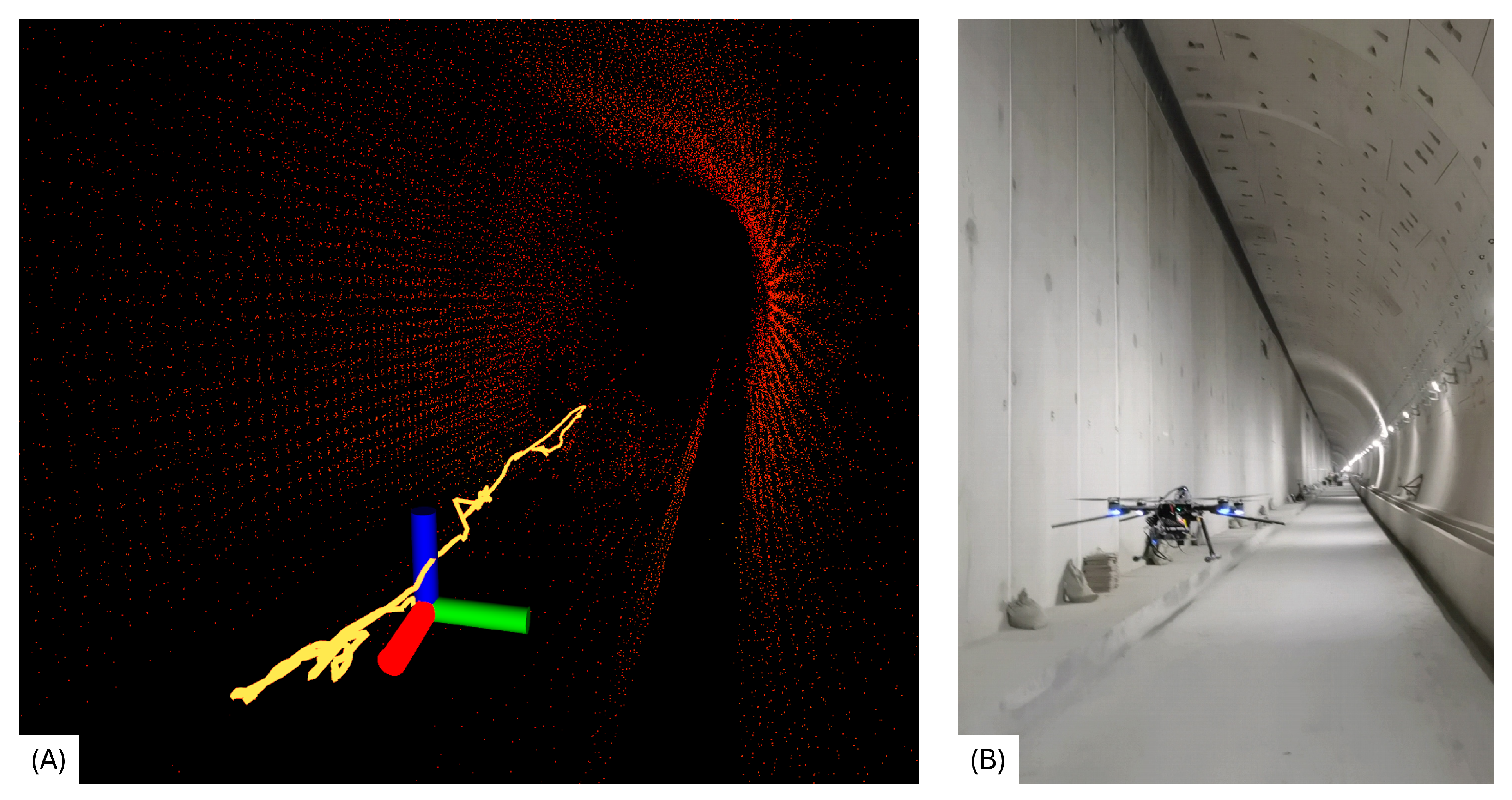

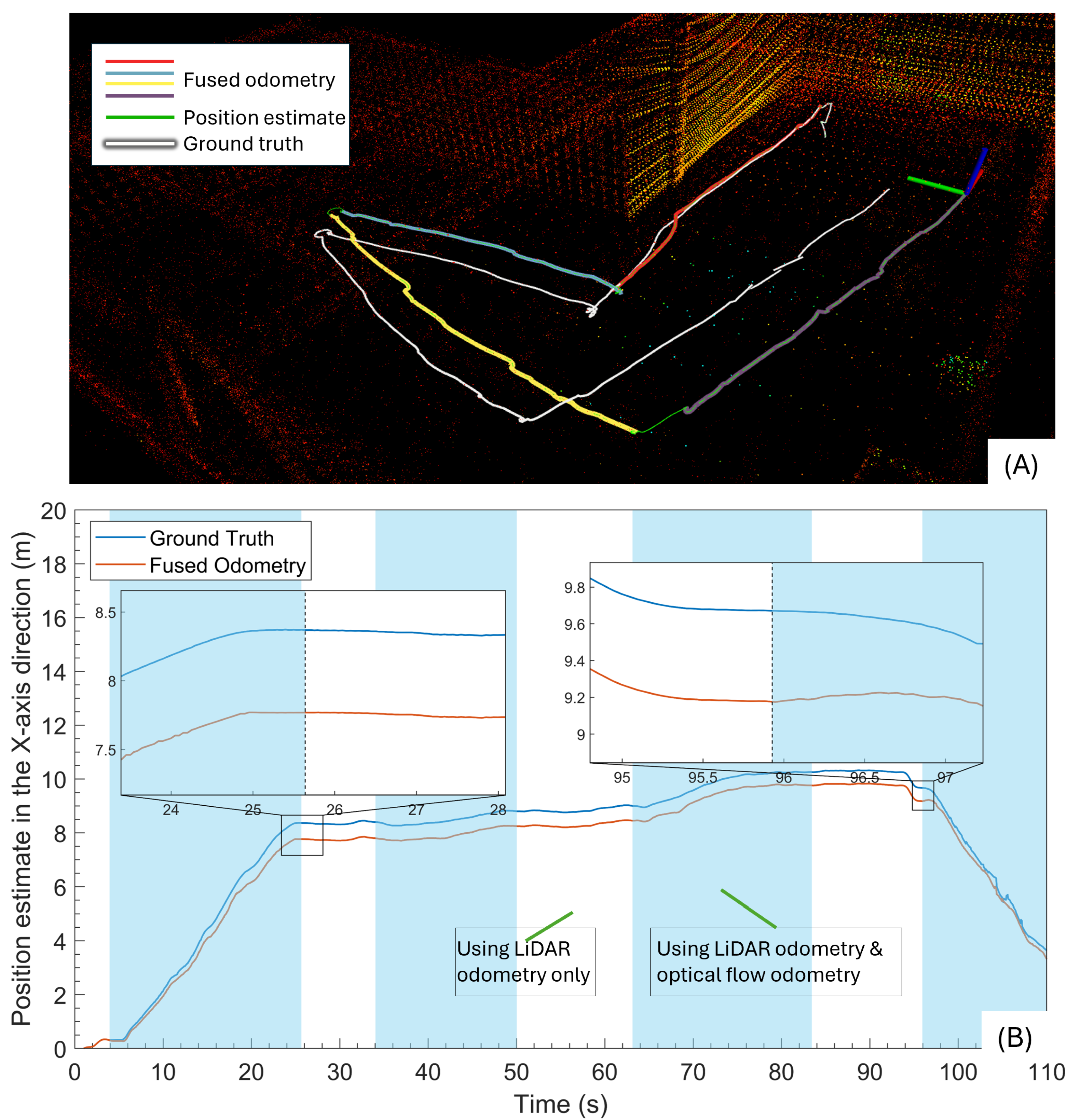

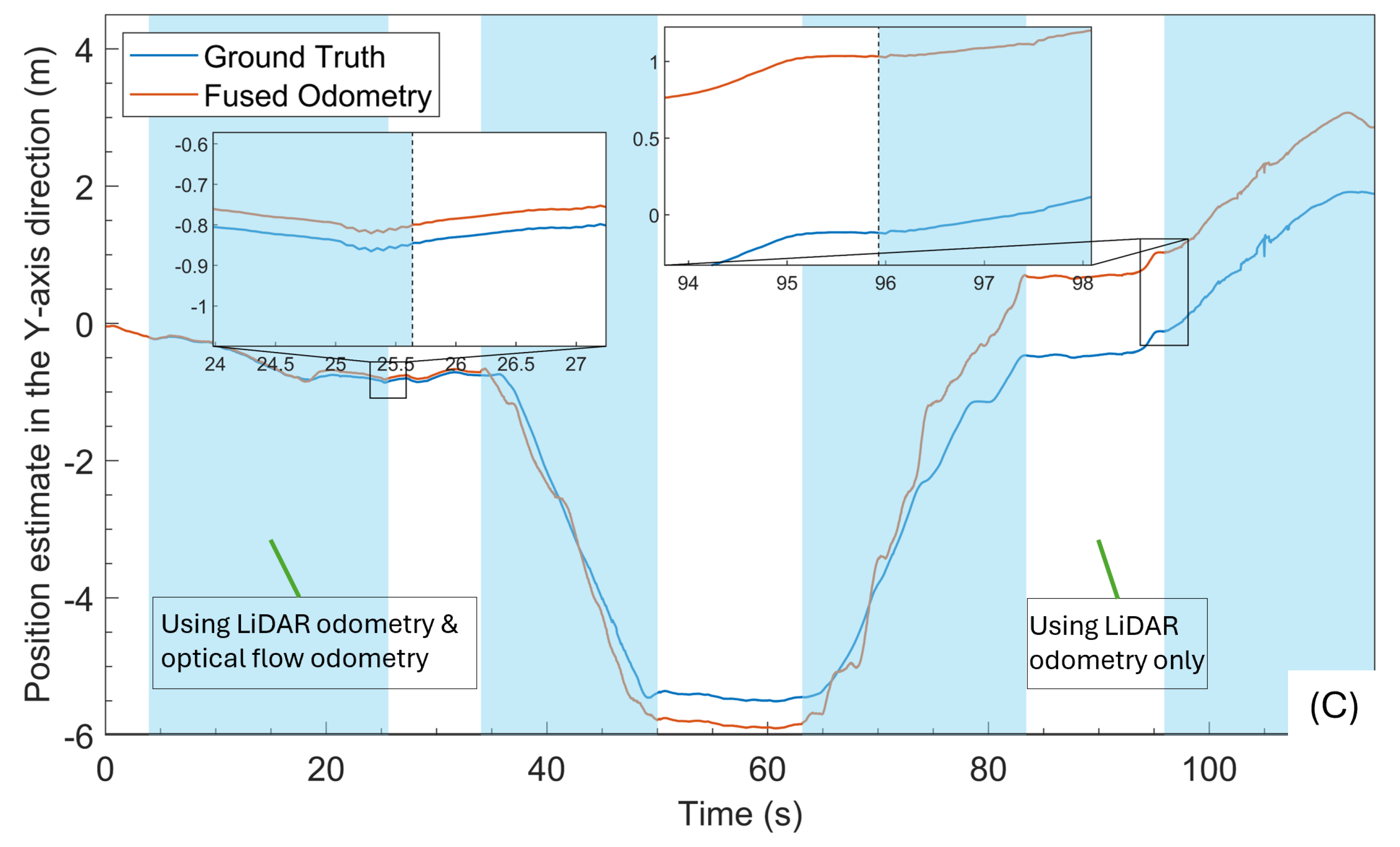

4.1. Indoor Manual Switching Experiment

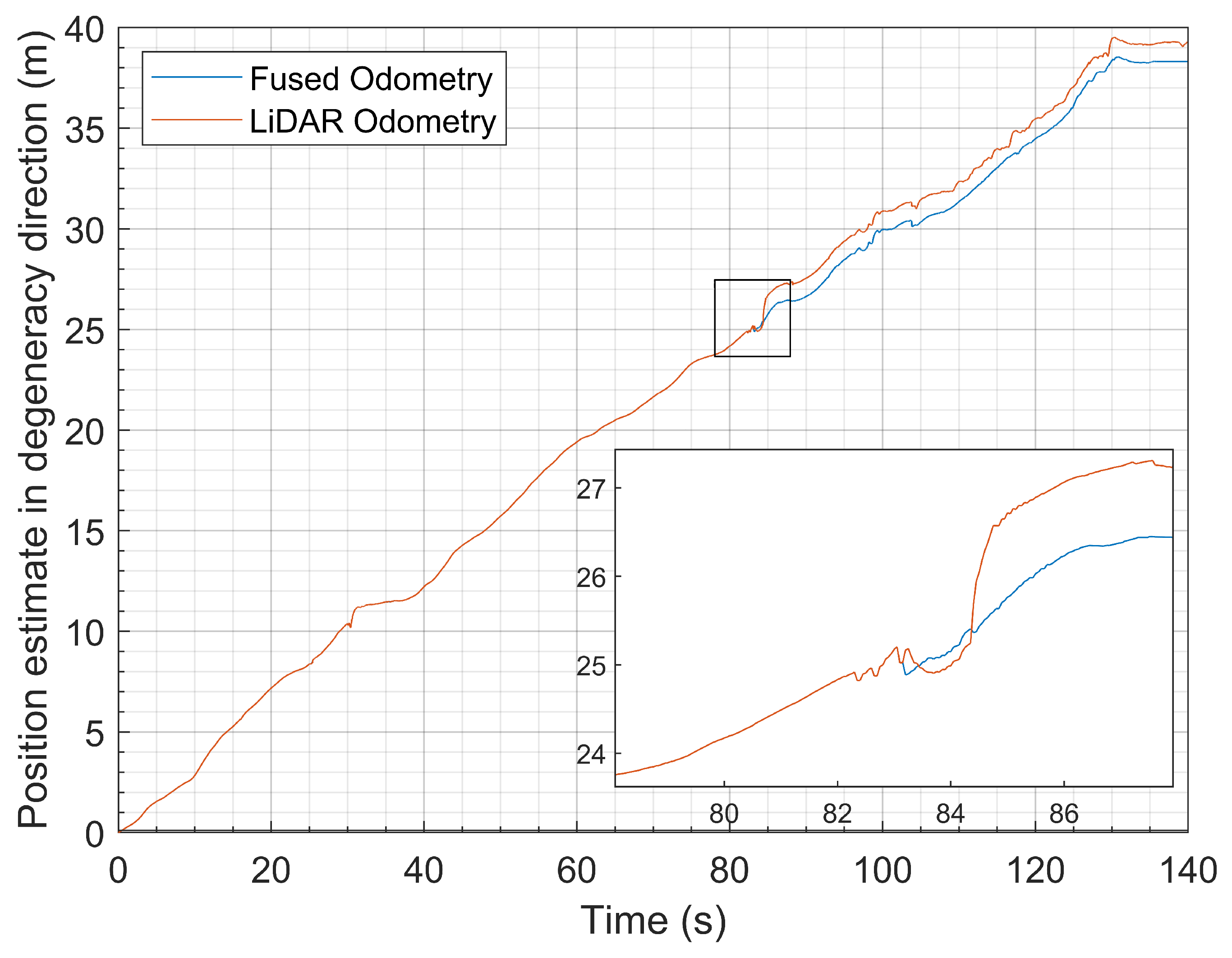

4.2. Failure Detection Experiment

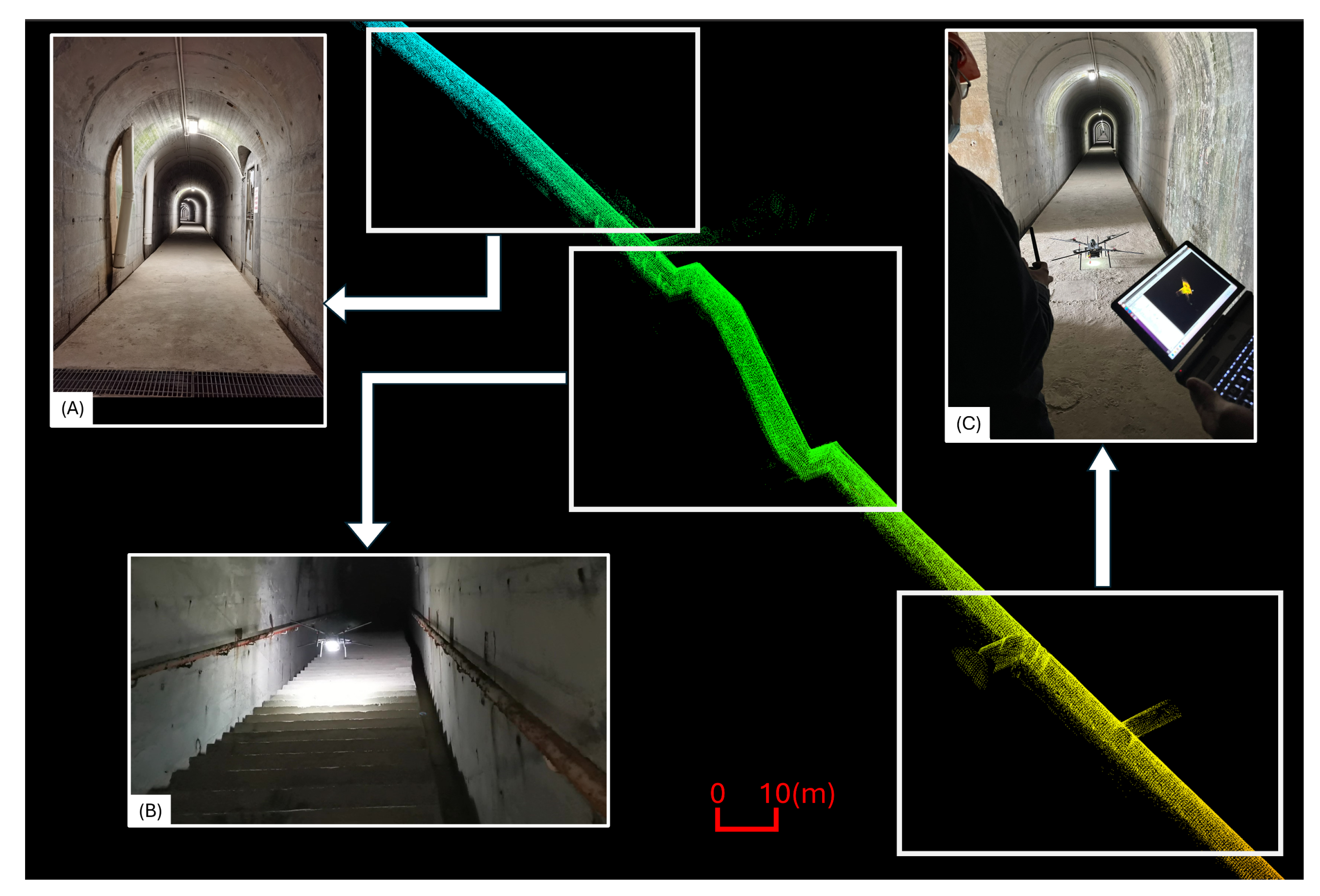

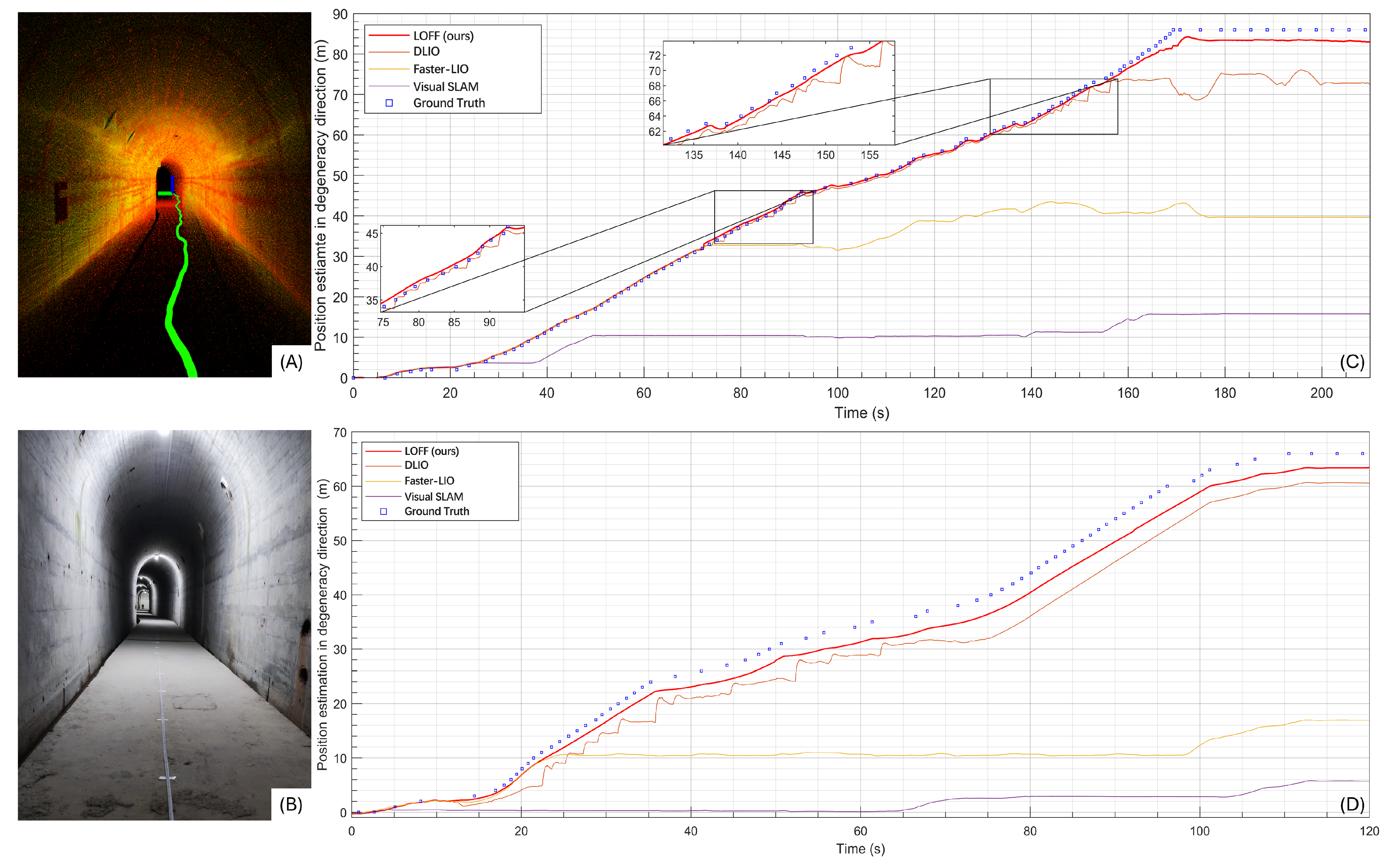

4.3. Tunnel Flight Experiment

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, J.; Huang, Z.; Zhu, X.; Guo, F.; Sun, C.; Zhan, Q.; Shen, R. LOFF: LiDAR and Optical Flow Fusion Odometry. Drones 2024, 8, 411. https://doi.org/10.3390/drones8080411
