Design and Implementation of UAVs for Bird’s Nest Inspection on Transmission Lines Based on Deep Learning

Abstract

1. Introduction

2. Materials and Methods

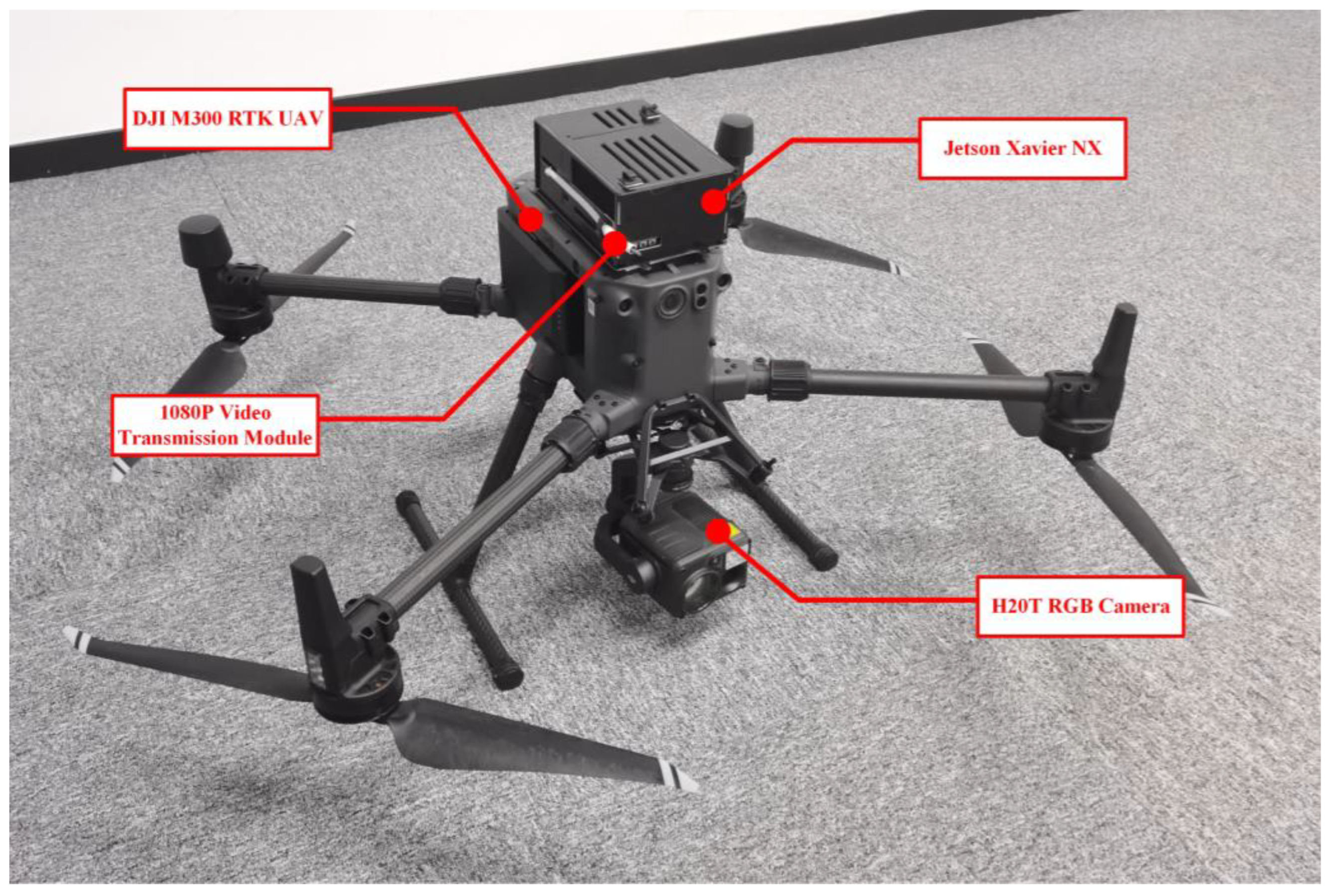

2.1. Hardware Design

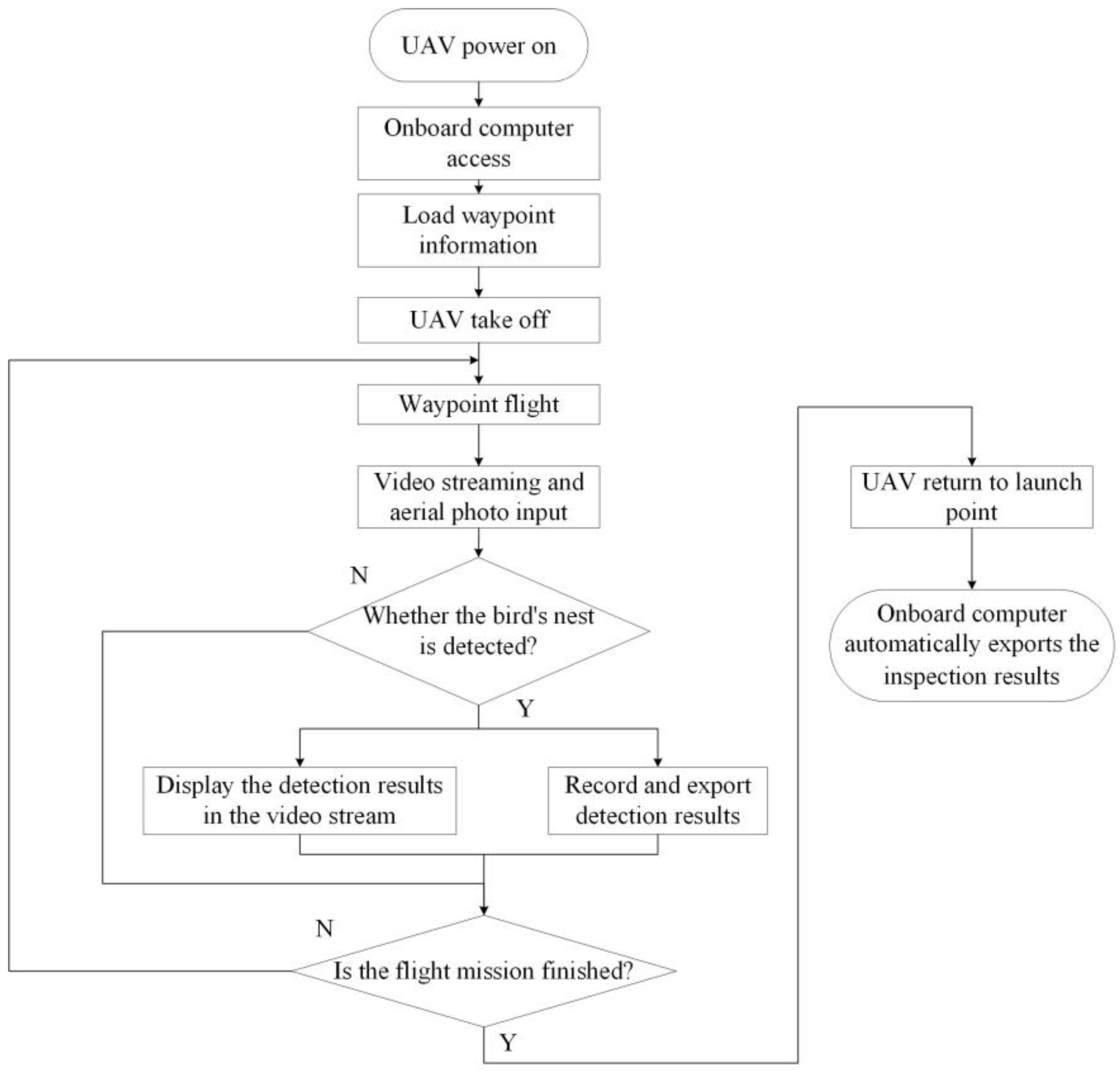

2.2. Software Design

2.2.1. Navigation and Localization Module

2.2.2. Bird’s Nest Detection Module

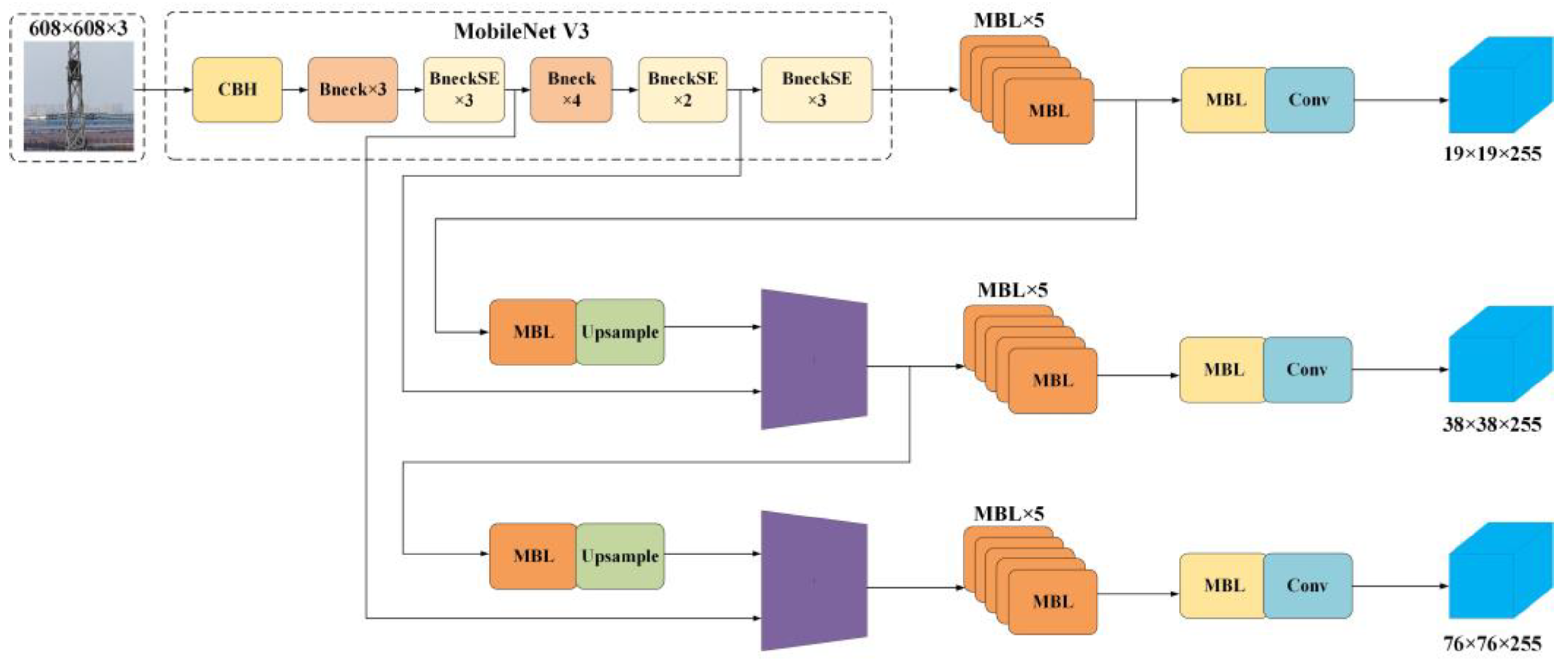

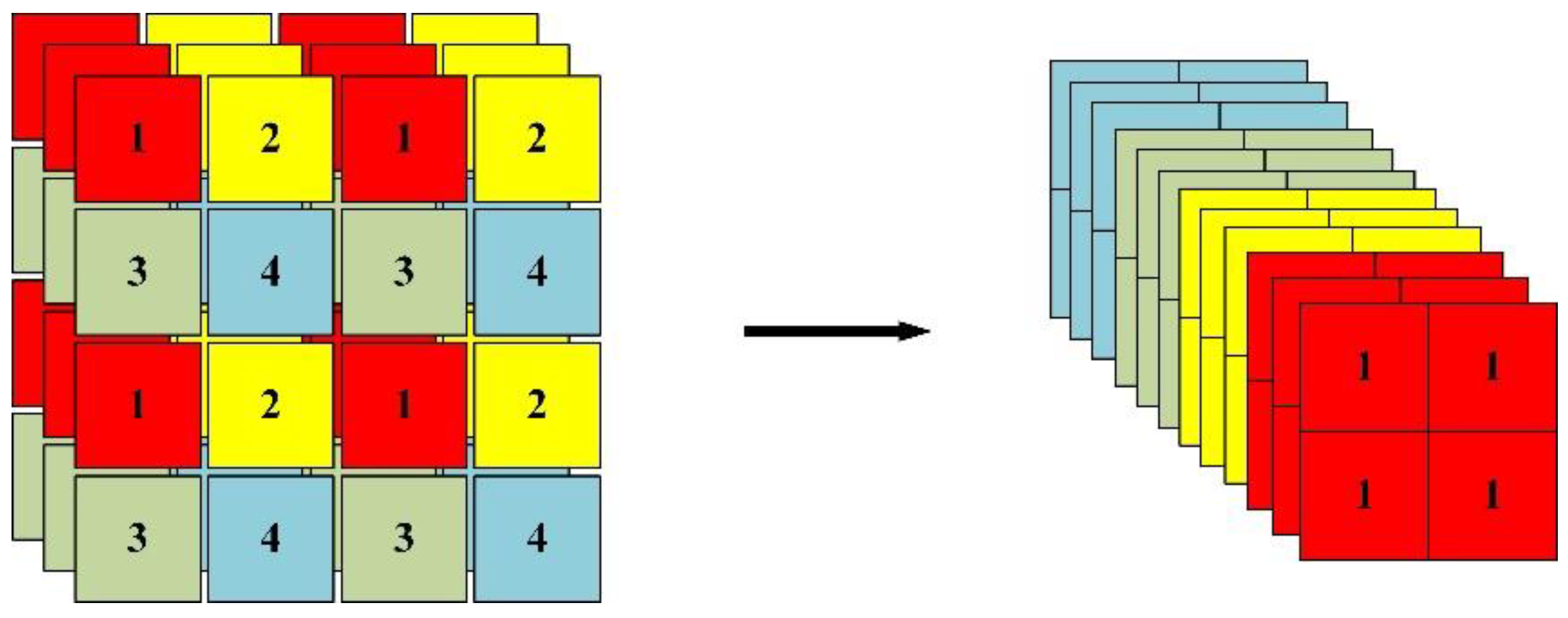

- Improved YOLOv3 detection algorithm based on MobileNetv3-Large

- 2.

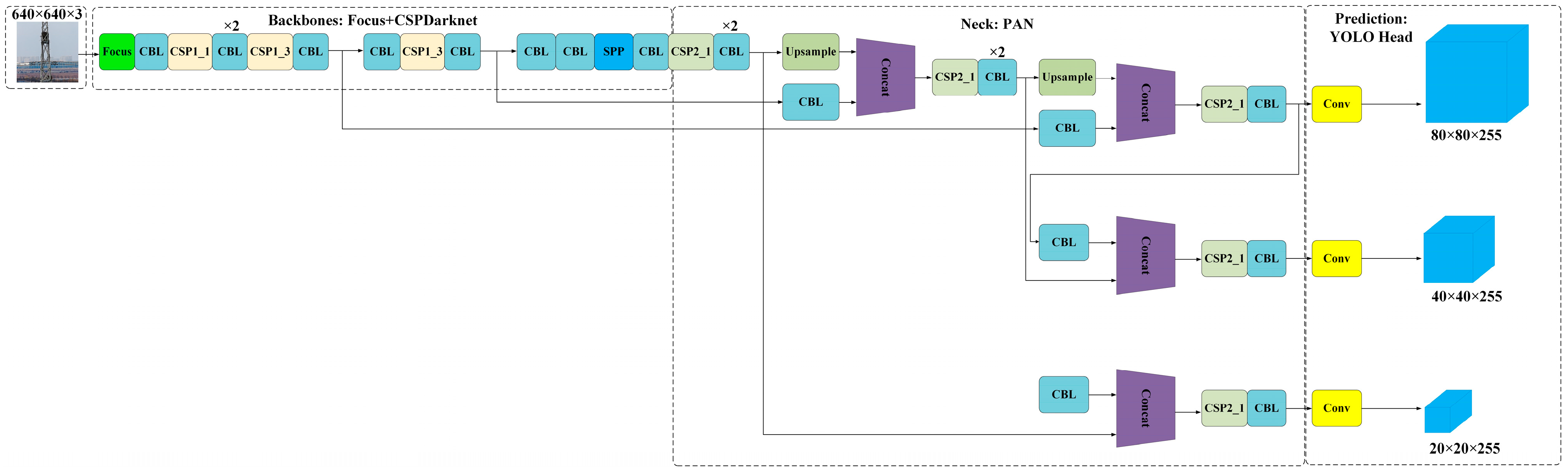

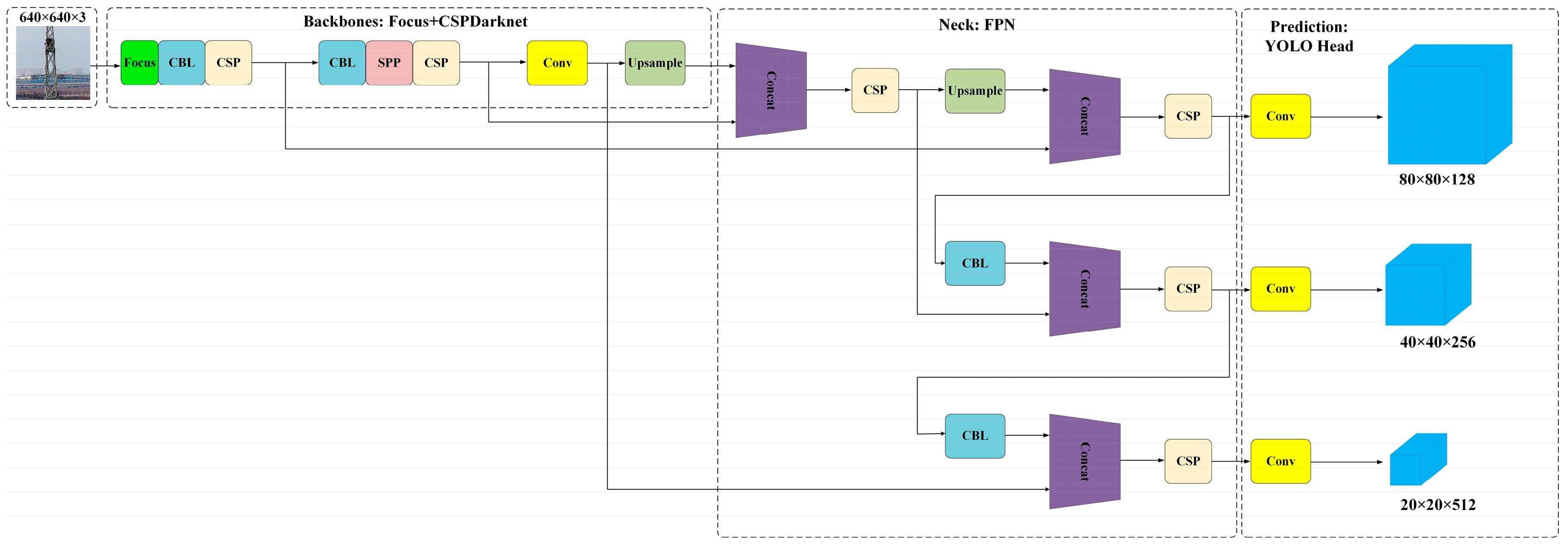

- YOLOv5-s detection algorithm

- 3.

- YOLOX-s detection algorithm

3. Results

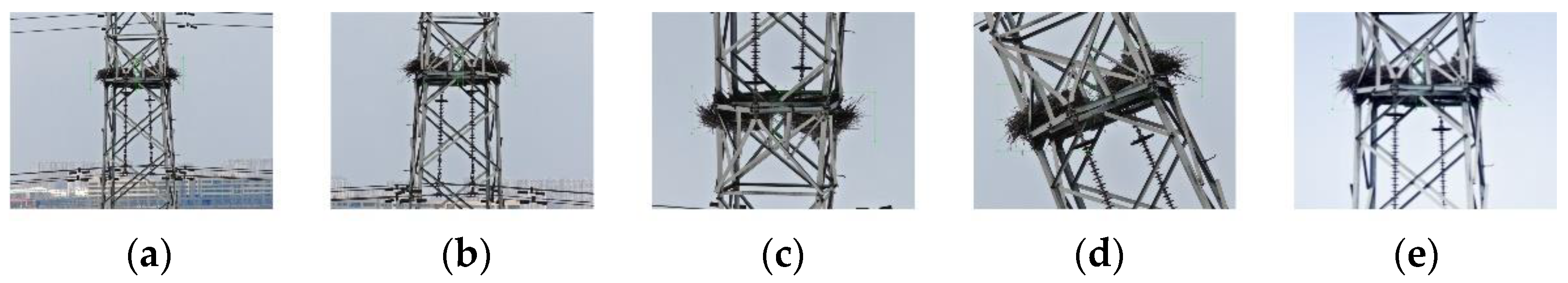

3.1. Bird’s Nest Detection Module Test

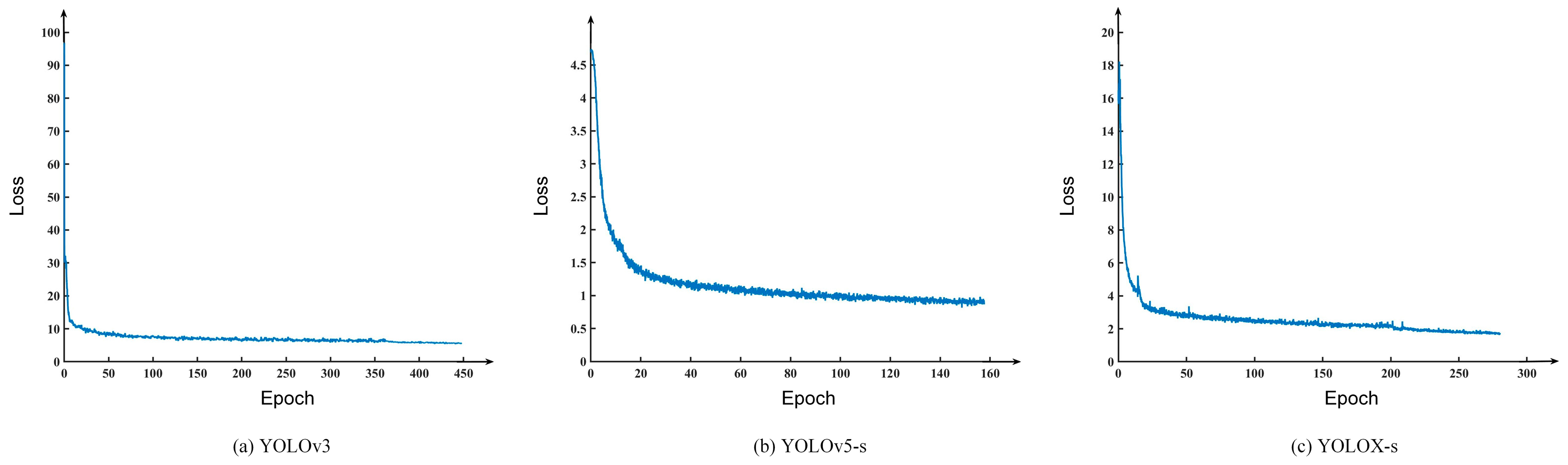

3.1.1. Loss Function

3.1.2. Precision

3.1.3. Recall

3.1.4. Mean Average Precision

3.1.5. Detection Speed

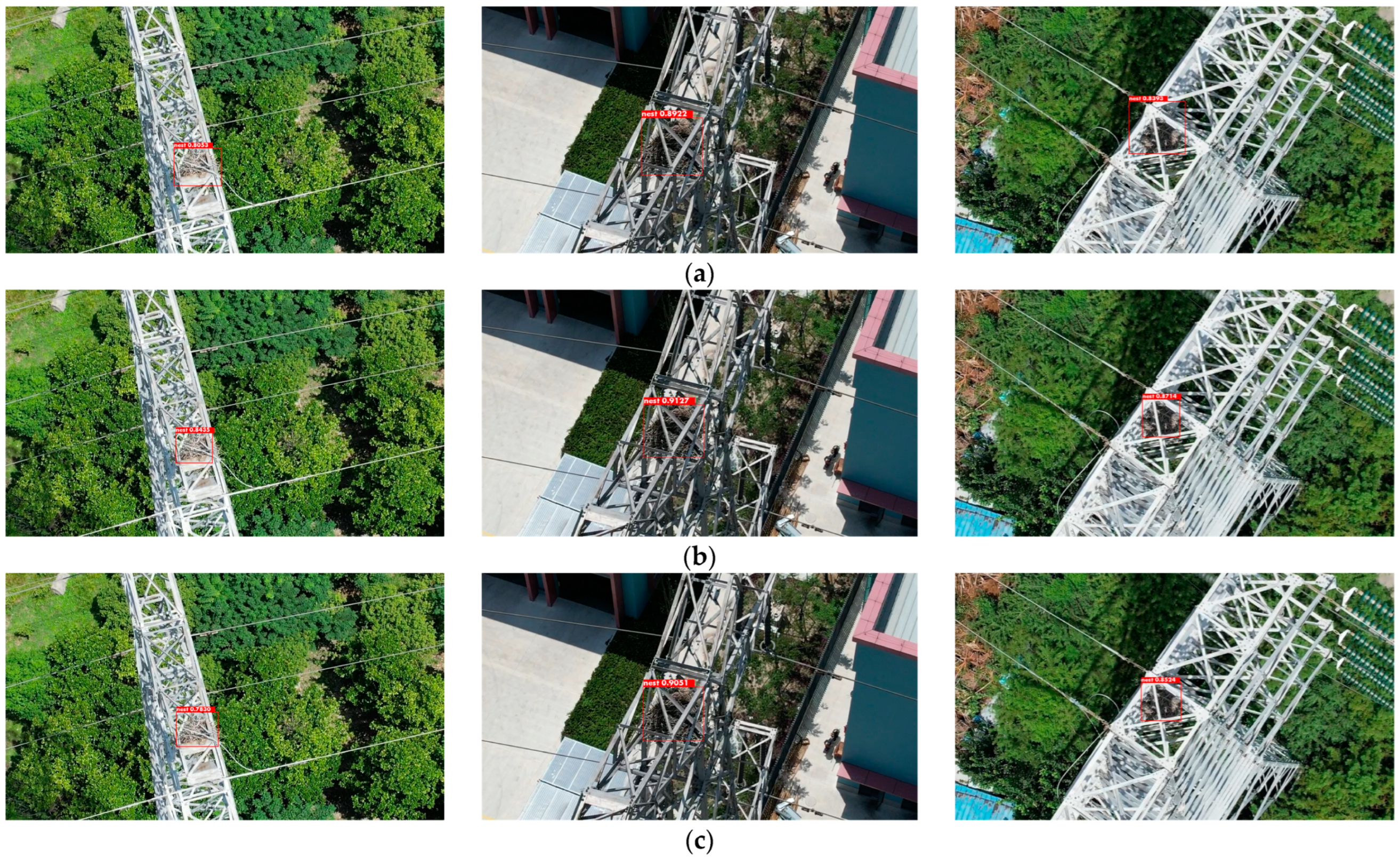

3.1.6. Bird’s Nest Detection Module Test Results

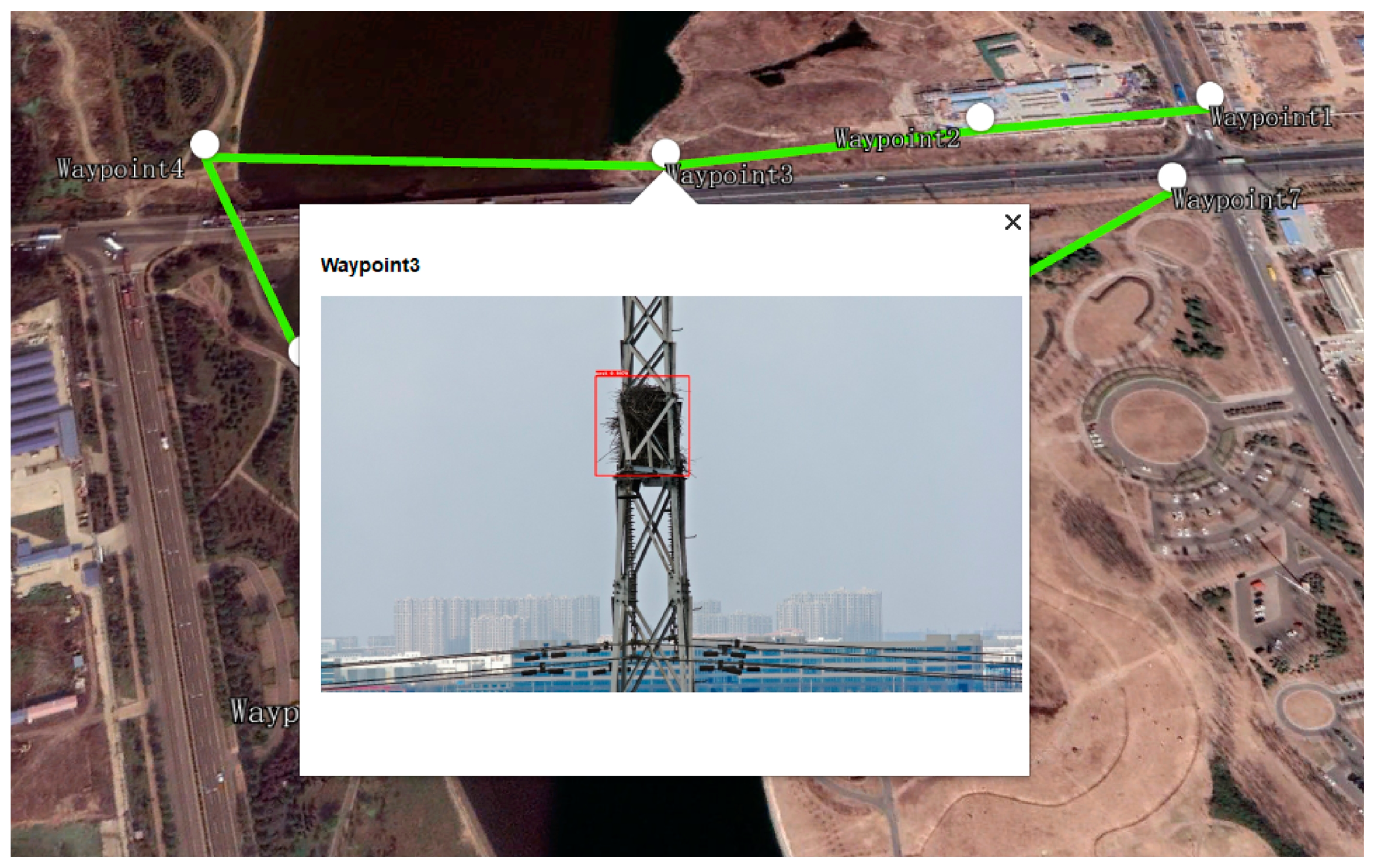

3.2. Flight Test

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Device | Specification | Value |
|---|---|---|
| DJI M300 RTK UAV | Dimensions | 810 × 670 × 430 mm |
| DJI M300 RTK UAV | Max Takeoff Weight | 9 kg |
| DJI M300 RTK UAV | Max Speed | 23 m/s |
| DJI M300 RTK UAV | Max Ascent Speed | 6 m/s |
| DJI M300 RTK UAV | Max Descent Speed | 5 m/s |
| DJI M300 RTK UAV | Hovering Accuracy | Vertical: ±0.1 m (RTK enabled); Horizontal: ±0.1 m (RTK enabled) |
| DJI M300 RTK UAV | Max Flight Time | 55 min |
| DJI M300 RTK UAV | Max Transmitting Distance | 8 km |
| DJI M300 RTK UAV | Obstacle Sensing Range | Forward/Backward/Left/Right: 0.7–40 m; Upward/Downward: 0.6–30 m |
| DJI M300 RTK UAV | Operating Temperature | −20 °C to 50 °C |
| H20T RGB Camera | Photo Size | 5184 × 3888 |
| H20T RGB Camera | Sensor | 1/1.7″ CMOS, 20 MP |
| H20T RGB Camera | Lens | DFOV: 66.6°–4°; Focal length: 6.83–119.94 mm |
| H20T RGB Camera | ISO Range | 100–25,600 |
| H20T RGB Camera | Photo Format | JPEG |

| Device | Specification | Value |
|---|---|---|
| Jetson Xavier NX | GPU | 384-core Volta GPU with Tensor Cores |
| Jetson Xavier NX | CPU | 6-core ARM v8.2 64-bit CPU, 6 MB L2 + 4 MB L3 |
| Jetson Xavier NX | Memory | 16 GB 128-bit LPDDR4x, 59.7 GB/s |
| Jetson Xavier NX | Storage | 16 GB eMMC 5.1 |
| Jetson Xavier NX | DL Accelerator | (2×) NVDLA Engines |
| Jetson Xavier NX | Size | 103 mm × 90.5 mm × 34 mm |

| Input | Operator | Exp Size | # Out | SE | NL | s |
|---|---|---|---|---|---|---|
| 608² × 3 | conv2d | - | 16 | - | HS | 2 |
| 304² × 16 | bneck, 3 × 3 | 16 | 16 | - | RE | 1 |
| 304² × 16 | bneck, 3 × 3 | 64 | 24 | - | RE | 2 |
| 152² × 24 | bneck, 3 × 3 | 72 | 24 | - | RE | 1 |
| 152² × 24 | bneck, 5 × 5 | 72 | 40 | √ | RE | 2 |
| 76² × 40 | bneck, 5 × 5 | 120 | 40 | √ | RE | 1 |
| 76² × 40 | bneck, 5 × 5 | 120 | 40 | √ | RE | 1 |
| 76² × 40 | bneck, 3 × 3 | 240 | 80 | - | HS | 2 |
| 38² × 80 | bneck, 3 × 3 | 200 | 80 | - | HS | 1 |
| 38² × 80 | bneck, 3 × 3 | 184 | 80 | - | HS | 1 |
| 38² × 80 | bneck, 3 × 3 | 184 | 80 | - | HS | 1 |
| 38² × 80 | bneck, 3 × 3 | 480 | 112 | √ | HS | 1 |
| 38² × 112 | bneck, 3 × 3 | 672 | 112 | √ | HS | 1 |
| 38² × 112 | bneck, 5 × 5 | 672 | 160 | √ | HS | 2 |
| 19² × 160 | bneck, 5 × 5 | 960 | 160 | √ | HS | 1 |
| 19² × 160 | bneck, 5 × 5 | 960 | 160 | √ | HS | 1 |
| 19² × 160 | conv2d, 1 × 1 | - | 960 | - | HS | 1 |
| 19² × 960 | pool, 7 × 7 | - | - | - | - | 1 |
| 1² × 960 | conv2d, 1 × 1, NBN | - | 1280 | - | HS | 1 |
| 1² × 1280 | conv2d, 1 × 1, NBN | - | k | - | - | 1 |
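The input column of this backbone table can be checked against its stride column: each stride-2 layer halves the spatial side length, so a 608 × 608 input shrinks to 19 × 19 before the pooling row. The following is a minimal Python sketch of that arithmetic. The stride list is transcribed from the 17 rows that precede the pooling row; the function name `feature_map_sides` and the "same"-style padding assumption are illustrative and are not taken from the paper.

```python
# Strides (column "s") of the 17 layers before the pooling row, in table order.
STRIDES = [2, 1, 2, 1, 2, 1, 1, 2, 1, 1, 1, 1, 1, 2, 1, 1, 1]


def feature_map_sides(input_side: int, strides: list[int]) -> list[int]:
    """Return the side length at the input of each layer, followed by the final output."""
    sides = [input_side]
    for s in strides:
        # Assumption: 'same'-style padding, so a stride-s layer divides
        # the side length by s, rounding up.
        sides.append(-(-sides[-1] // s))
    return sides


sides = feature_map_sides(608, STRIDES)
print(sides)
```

The first 17 entries of `sides` correspond to the input sizes listed for the convolution and bottleneck rows, and the last entry is the side length fed to the pooling row.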

| Statistical Classification | Definition |
|---|---|
| True Positive (TP) | A test result that correctly indicates the presence of a condition or characteristic |
| True Negative (TN) | A test result that correctly indicates the absence of a condition or characteristic |
| False Positive (FP) | A test result that incorrectly indicates that a particular condition or attribute is present |
| False Negative (FN) | A test result that incorrectly indicates that a particular condition or attribute is absent |
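These four outcomes are the inputs to the standard precision and recall metrics: precision is TP / (TP + FP) and recall is TP / (TP + FN). The sketch below shows the computation in Python. The helper names and the example counts are hypothetical and chosen only for illustration; they are not results from this paper.

```python
def precision(tp: int, fp: int) -> float:
    """Fraction of predicted nests that are real nests: TP / (TP + FP)."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp: int, fn: int) -> float:
    """Fraction of real nests that were detected: TP / (TP + FN)."""
    return tp / (tp + fn) if (tp + fn) else 0.0


# Hypothetical counts, for illustration only (not results from this paper).
tp, fp, fn = 90, 10, 30
print(f"precision={precision(tp, fp):.2f}, recall={recall(tp, fn):.2f}")
```

True negatives do not enter either formula, which is convenient for object detection, where the number of background regions correctly left undetected is not well defined.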

| Model | Epochs | Batch Size | Learning Rate | Input Shape | Train/Validation Split |
|---|---|---|---|---|---|
| YOLOv3 | 500 | 32 | 0.005 | 608 × 608 | 9:1 |
| YOLOv5-s | 500 | 32 | 0.005 | 640 × 640 | 9:1 |
| YOLOX-s | 500 | 32 | 0.005 | 640 × 640 | 9:1 |
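The 9:1 train/validation ratio in this table can be reproduced with a seeded shuffle followed by a single cut. The sketch below is one possible way to do this in Python and is not the authors' data pipeline. The function name `split_dataset`, the seed, the file names, and the dataset size of 1000 are all assumptions made for illustration.

```python
import random


def split_dataset(items, train_ratio=0.9, seed=0):
    """Shuffle a copy of `items` and split it into (train, validation) lists."""
    shuffled = list(items)
    # A dedicated Random instance keeps the split reproducible
    # without touching the global random state.
    random.Random(seed).shuffle(shuffled)
    cut = round(len(shuffled) * train_ratio)
    return shuffled[:cut], shuffled[cut:]


# Hypothetical file names and dataset size, for illustration only.
images = [f"nest_{i:04d}.jpg" for i in range(1000)]
train, val = split_dataset(images)
print(len(train), len(val))
```

Fixing the seed means all three detectors can be trained and validated on the same partition, which keeps their scores comparable.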

| Model | mAP (%) |
|---|---|
| YOLOv3 | 90.1 |
| YOLOv5-s | 92.1 |
| YOLOX-s | 90.8 |

| Model | FPS |
|---|---|
| YOLOv3 | 23.2 |
| YOLOv5-s | 33.9 |
| YOLOX-s | 31.1 |
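A frame rate translates directly into an average per-frame processing time of 1000 / FPS milliseconds, which is often the more useful figure when budgeting onboard computation. The Python sketch below combines the reported mAP and FPS values for the three models and derives that latency. The numbers are transcribed from the two result tables; the dictionary layout and the helper `latency_ms` are illustrative assumptions.

```python
# Values transcribed from the mAP and FPS result tables.
RESULTS = {
    "YOLOv3": {"map_percent": 90.1, "fps": 23.2},
    "YOLOv5-s": {"map_percent": 92.1, "fps": 33.9},
    "YOLOX-s": {"map_percent": 90.8, "fps": 31.1},
}


def latency_ms(fps: float) -> float:
    """Average time per frame in milliseconds implied by a frame rate."""
    return 1000.0 / fps


for name, r in RESULTS.items():
    print(f"{name}: {r['map_percent']:.1f}% mAP, {latency_ms(r['fps']):.1f} ms/frame")

# Rank by mAP first and use FPS only to break ties.
best = max(RESULTS, key=lambda m: (RESULTS[m]["map_percent"], RESULTS[m]["fps"]))
print("highest mAP:", best)
```

With these figures the model with the highest mAP is also the fastest, so the ranking does not depend on how accuracy and speed are weighted against each other.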

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation

Li, H.; Dong, Y.; Liu, Y.; Ai, J. Design and Implementation of UAVs for Bird’s Nest Inspection on Transmission Lines Based on Deep Learning. Drones 2022, 6, 252. https://doi.org/10.3390/drones6090252
