1. Introduction

The optimal tracking control problem (OTCP) is of major importance in a variety of applications for robotic systems such as wheeled vehicles, unmanned ground vehicles (UGVs), and unmanned aerial vehicles (UAVs). The aim is to find a control policy that drives the specified system along a given reference path in an optimal manner [1,2,3,4,5,6]. The reference paths are generally generated by a separate mission planner according to specific tasks, and optimization is usually achieved by minimizing an objective function that combines energy cost, tracking error cost, and/or traveling time cost.

With the rapid development of unmanned systems, algorithms to solve OTCPs have been widely studied in the literature. Addressing an OTCP involves solving the underlying Hamilton–Jacobi–Bellman (HJB) equation. For linear systems, the HJB equation reduces to the Riccati equation, for which numerical solutions are generally available. However, for nonlinear robotic systems subject to asymmetric input constraints, such as fixed-wing UAVs and autonomous underwater vehicles (AUVs) [7,8,9], solving the HJB equation remains challenging. To deal with this difficulty while guaranteeing tracking performance for nonlinear systems, various methods have been developed to find approximate optimal control policies. One idea is to simplify or transform the objective function so as to solve an approximate or equivalent optimal control problem. For instance, nonlinear model predictive control (MPC) is used in [7,8] to obtain a near-optimal path-following control law for UAVs by truncating the time horizon and minimizing a finite-horizon tracking objective function. Another idea is to approximate the solution directly. An offline policy iteration (PI) strategy is utilized to obtain a near-optimal solution by iteratively solving a sequence of Bellman equations [10]. However, the abovementioned methods generally require the complete dynamics of the system, and the curse of dimensionality might occur. To deal with this issue, the approximate dynamic programming (ADP) scheme was developed and has received increasing interest in the optimal control area [11,12,13].

ADP, which combines the concept of reinforcement learning (RL) with Bellman’s principle of optimality, was first introduced in [11] to handle the curse of dimensionality that might occur in the classical dynamic programming (DP) scheme for solving optimal control problems. The main idea is to approximate the solution to the HJB equation using parametric function approximation techniques, among which a neural network (NN) is the most commonly used, for example in single-NN-based value function approximation and the actor–critic dual-NN structure [14]. For continuous-time nonlinear systems, Ref. [15] proposed a data-based ADP algorithm, also called integral reinforcement learning (IRL), which relaxes the dependence on the internal dynamics of the control system and learns the solution to the HJB equation using only partial knowledge of the system dynamics. Since then, the IRL scheme has been widely used in various nonlinear optimal control problems, including optimal tracking control, control with input constraints, and control of unknown or partially unknown systems [7,14,15,16].

The IRL-based methods are powerful tools for solving nonlinear optimal control problems. However, the OTCP for nonlinear systems with partially unknown dynamics and asymmetric input constraints, especially for curve path tracking, is still open to study. Firstly, the stability of IRL-based methods for nonlinear constrained systems is generally hard to prove. Secondly, the changing curvature in the curve-path-tracking control problem makes it more difficult to stabilize the tracking error than in the widely studied regulation control or circular path-tracking control problems. Finally, asymmetric input constraints are more difficult to deal with than the commonly discussed symmetric constraints.

Motivated by the desire to solve the OTCP with a curve path for partially unknown nonlinear systems with asymmetric input constraints, this paper introduces a feedforward control law to simplify the problem, redesigns the non-quadratic control input cost function, and utilizes an NN-based IRL scheme to solve for an approximate optimal control policy. The three main contributions are:

An approximate optimal curve-path-tracking control policy is developed for nonlinear systems with a feedforward control law, which handles the time-varying dynamics of the reference states caused by the curvature variation, and a data-driven IRL algorithm is developed to solve the approximate optimal control policy, in which a single-NN structure for value function approximation is utilized, reducing the computation burden and simplifying the algorithm structure.

The non-quadratic control cost function is redesigned via a constraint transformation with the introduced feedforward control law, which addresses asymmetric control input constraints that traditional methods cannot handle directly, and satisfaction of the input constraints is guaranteed with proof.

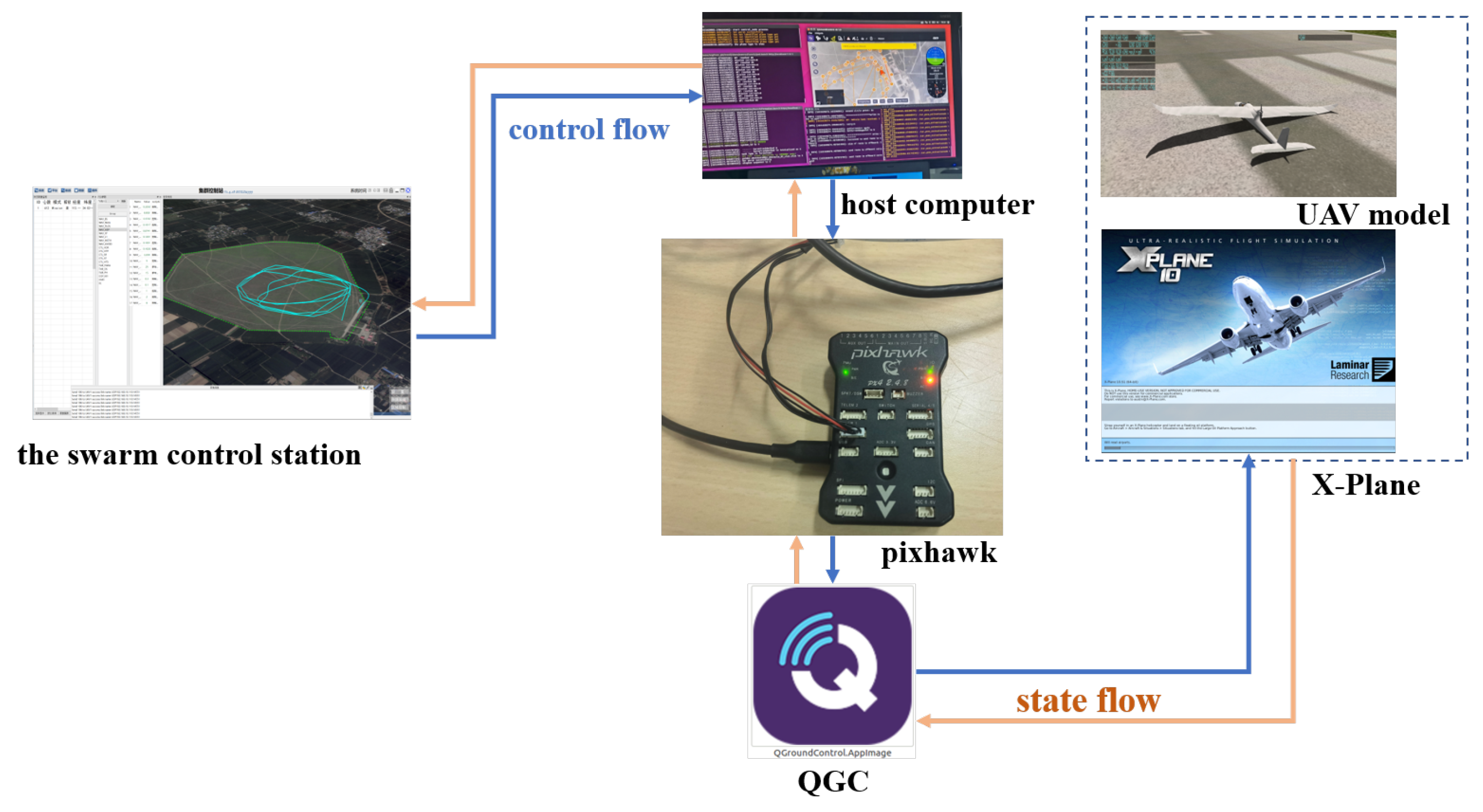

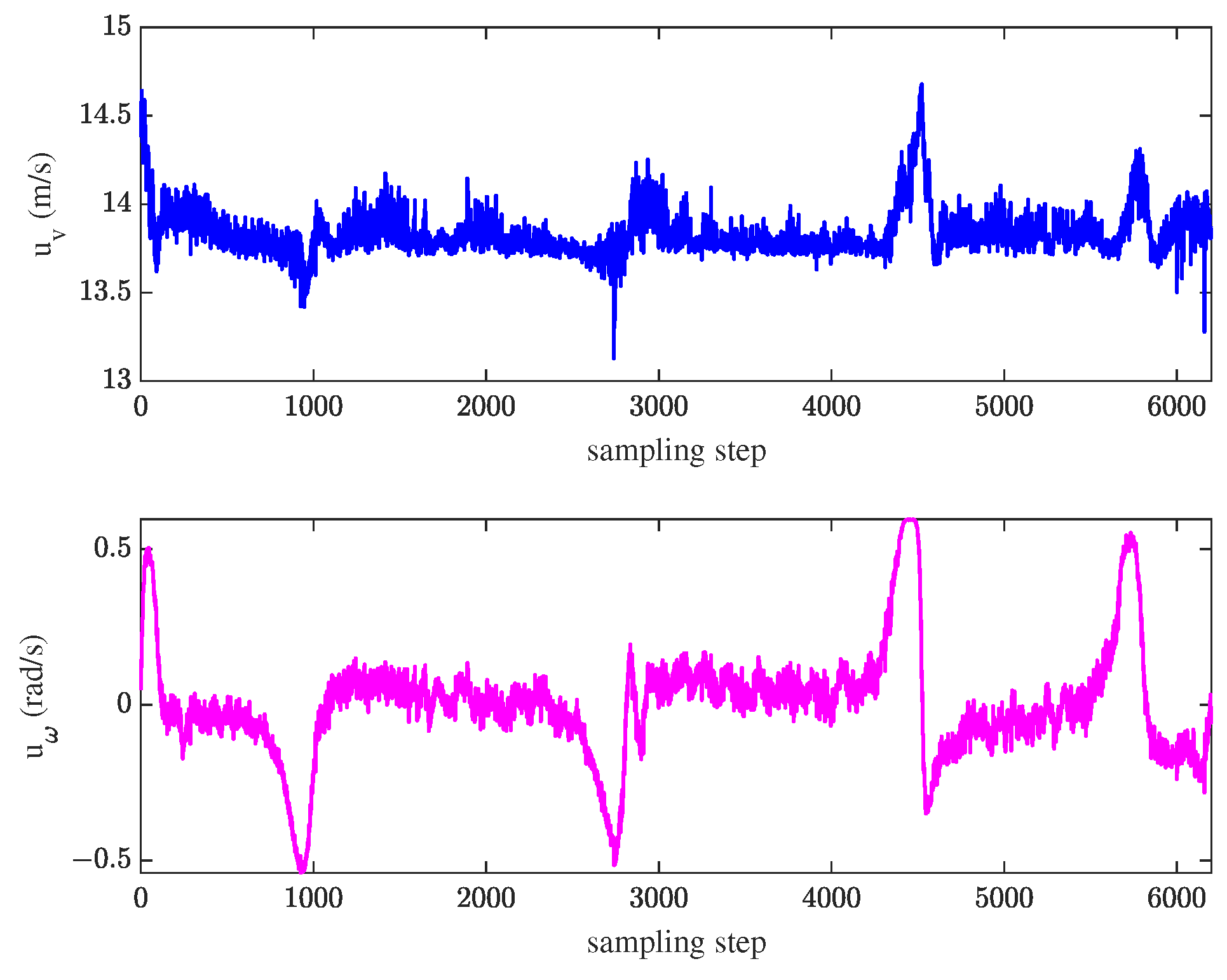

The proposed approximate optimal path-tracking control algorithm is validated via hardware-in-the-loop (HIL) simulations for fixed-wing UAVs in comparison with three other typical path-tracking algorithms. The results show that the proposed algorithm not only yields much less fluctuation and a smaller root mean squared error (RMSE) of the tracking error but also naturally meets the control input constraints.

2. Problem Formulation

This section briefly formulates the OTCP of nonlinear systems subject to asymmetric control input constraints.

Consider the following affine nonlinear kinematic systems:

where

is the vector of system motion states that we focus on,

is the internal kinematic dynamics,

is the control input dynamics of system, and

is the control input, which is constrained by

where

and

are the minimum and the maximum thresholds of control input

, which are determined by the characteristics of the actuator and do not always satisfy

.
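As a minimal sketch of the setting described above, the snippet below integrates an affine kinematic model of the form x_dot = f(x) + g(x) u under an asymmetric box constraint on the input. The planar fixed-wing-style model, the function names, and the numeric bounds are illustrative assumptions and are not taken from this paper.

```python
import numpy as np

# Hypothetical asymmetric bounds for u = [speed (m/s), turn rate (rad/s)].
U_MIN = np.array([10.0, -0.3])
U_MAX = np.array([20.0, 0.5])   # note: U_MIN != -U_MAX


def f(x):
    """Internal kinematic dynamics (drift); zero for this assumed model."""
    return np.zeros(3)


def g(x):
    """Control input dynamics for the state x = [px, py, heading]."""
    psi = x[2]
    return np.array([[np.cos(psi), 0.0],
                     [np.sin(psi), 0.0],
                     [0.0, 1.0]])


def saturate(u):
    """Project a commanded input onto the asymmetric box [U_MIN, U_MAX]."""
    return np.clip(u, U_MIN, U_MAX)


def step(x, u, dt=0.01):
    """One forward-Euler step of x_dot = f(x) + g(x) u with saturated input."""
    return x + dt * (f(x) + g(x) @ saturate(u))


x0 = np.array([0.0, 0.0, 0.0])
x1 = step(x0, np.array([5.0, 1.0]))  # both commanded components violate the bounds
```

Hard clipping like this is what a controller designed for symmetric constraints would have to fall back on; the cost design discussed later aims to make such clipping unnecessary.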

Remark 1. The asymmetric control input constraint (2) is widespread in practical systems, such as fixed-wing UAVs and autonomous underwater vehicles (AUVs) [1,7,8,9,17]. For these systems, existing control algorithms that consider only symmetric input constraints cannot be utilized directly.

This paper studies the OTCP with curve paths for system (1) with input constraint (2). Thus, we focus on the tracking performance of the above motion states

with reference to the reference motion states

specified by the corresponding virtual target point (VTP)

on the reference path. Then, the considered tracking control system is described as

where

describes the tracking error state,

represents the bounded state vector related to the reference motion states, not subject to human control,

is the reference motion states, and

describes some other related system variables,

. The continuous-time functions,

and

, are internal dynamics and control input dynamics of the tracking error system,

is the dynamics of the reference states and is decided by the task setting. Obviously, the specific form of

and

is closely related to the specific

. For the tracking control problem of system (3), the complete system state is denoted as

. Then there is

.

Remark 2. Suppose that the reference path is generated by a separate mission planner, and describes system dynamic parameters determined by the task setting, such as the moving speed of the VTP along the reference path. Then, it is reasonable to suppose that is known, which describes the shape of the reference path as well as the motion dynamics of the reference point along the path.

Then, in the problem of curve-path-tracking control, given the reference motion state

corresponding to

, denote the curvature of the reference path at this point as

, and the speed of the point moving along the path as

. The dynamics of the reference states can be more specifically described as
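To illustrate how a varying curvature makes the reference dynamics time-varying, the following sketch propagates a virtual target point along a planar path. The planar state [px, py, heading], the function names, and the numbers are assumptions made for illustration only; the paper's own reference model may include other variables.

```python
import numpy as np


def reference_rate(x_r, curvature, speed):
    """Rate of change of a planar reference state [px, py, heading].

    The heading rate equals curvature * speed, so a varying curvature
    makes the reference dynamics time-varying.
    """
    psi = x_r[2]
    return np.array([speed * np.cos(psi),
                     speed * np.sin(psi),
                     curvature * speed])


def propagate(x_r, curvature_of_s, speed, dt, steps):
    """Forward-Euler propagation of the virtual target point along the path."""
    s = 0.0  # arc length travelled so far
    for _ in range(steps):
        x_r = x_r + dt * reference_rate(x_r, curvature_of_s(s), speed)
        s += speed * dt
    return x_r


# Constant curvature 1/R traces a circle of radius R (here R = 50 m).
R, v = 50.0, 10.0
quarter_turn_time = (np.pi / 2) * R / v
steps = 20000
x_end = propagate(np.zeros(3), lambda s: 1.0 / R, v,
                  quarter_turn_time / steps, steps)
```

Replacing the constant-curvature lambda with any function of arc length gives a curve path; the circular case is used here only because its end point after a quarter turn, (R, R) with heading pi/2, is easy to check.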

Then the control objective is to find an optimal control policy

that drives the tracking error at the least cost

to converge to

. To this end, take the objective function as

where

is a compact set containing the origin of the tracking error,

is the quadratic tracking error cost with the positive definite diagonal matrix

, and

is the positive semi-definite control cost to be designed.

Now, referring to the concept in optimal control theory in [18], we define the admissible control for the OTCP as follows.

Definition 1. A control policy is said to be admissible, denoted as , with respect to objective function (5) for the tracking control system (3), if is continuous on and satisfies constraints (2), and the corresponding state trajectory makes .

Then, the main objective of this paper is to find the optimal control policy

that minimizes the objective function (5). Before presenting its design, the following assumption is made.

Assumption 1. For any initial state , given the dynamic function of the reference state, there exists an admissible control , i.e., , which satisfies constraints (2), is continuous to on set , and stabilizes the tracking error in (3).

3. Optimal Control Design for Curve Path Tracking with Asymmetric Control Input Constraints

To find the optimal curve-path-tracking control policy

for system (3), this section first introduces a feedforward control law, which helps to deal with the variation of the reference state dynamics. Then a dedicated design of the control cost function, which enables natural satisfaction of the asymmetric input constraint (2), is proposed.

Note that the main difficulty of curve-path-tracking control compared with that of regulation or straight/circular path tracking, is that the dynamics of the reference motion states

is time-varying because of the varying curvature of the reference path. To drive the tracking error to converge to

, when

, it needs

. The point is that, unlike in the regulation control problem, a non-zero steady-state control law is needed (denoted as

) because of the varying dynamics of

, such that

It is easy to see that this non-zero steady-state control input

mainly depends on the dynamics of the reference states. Therefore, we rewrite the dynamic function of the reference motion state in (4) in the following form:

Substituting (7) and (1) into (6), we obtain

Then for

, we extend the above result to define the feedforward control

as

Remark 3. The rewriting in (7) is reasonable for practical robotic systems, since the reference state and the associated constraint conditions are taken into account by the separate mission planner; this will be illustrated by examples in later experiments. The feedforward control law here is not an admissible control policy, since it cannot drive a non-zero tracking error to zero, but it is to be taken as a part of the control policy for the tracking control system.
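The steady-state idea behind the feedforward law can be sketched as follows: when the tracking error is zero, the system state must move exactly like the reference state, so the feedforward input is the one that reproduces the reference rate. The snippet assumes the planar kinematic model used for illustration only (zero drift, inputs speed and turn rate) and solves for the input by least squares; the paper's law (8) is defined through its own rewriting (7) and may differ in form.

```python
import numpy as np


def g(x):
    """Assumed control input dynamics for x = [px, py, heading]."""
    psi = x[2]
    return np.array([[np.cos(psi), 0.0],
                     [np.sin(psi), 0.0],
                     [0.0, 1.0]])


def f(x):
    """Assumed drift term; zero for this kinematic model."""
    return np.zeros(3)


def feedforward(x_r, x_r_dot):
    """Least-squares input u_d with f(x_r) + g(x_r) u_d ~= x_r_dot."""
    return np.linalg.pinv(g(x_r)) @ (x_r_dot - f(x_r))


psi, v, kappa = 0.7, 12.0, 0.02   # hypothetical heading, speed, curvature
x_r = np.array([3.0, -1.0, psi])
x_r_dot = np.array([v * np.cos(psi), v * np.sin(psi), kappa * v])
u_d = feedforward(x_r, x_r_dot)   # speed and turn rate that hold zero error
```

For this model the result is simply the reference speed and curvature times speed, which makes explicit why the steady-state input is non-zero and changes with the curvature.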

Now, this paper explains how to solve the desired optimal tracking control strategy

that satisfies the asymmetric control input constraint (2) in a simplified way by using

.

Given the dynamic function

of reference states,

can be obtained in real time according to (8). Then, the complete tracking control policy can be described as

where

is the feedback control to be solved. Substituting

into the tracking error state equation in (3) yields

where

Thus it holds that . Then, to solve the optimal control policy is actually equivalent to solve the optimal feedback control .

Therefore, in consideration of the control input constraint (2), and referring to [10,16,19], the control cost in (5) is designed as

which is a semi-positive definite function, and the greater the absolute value of the control input component

, the greater the function value. So, being a part of the objective function, it can help to find an energy-optimal solution, and

is the weight coefficient with reference to component

j. The main difference of (10) compared with that in [10,16,19] is that the threshold parameter in the integrand, i.e.,

, is not a constant directly obtained from a symmetric control constraint but is redefined for the asymmetric constraint (2) with the introduced feedforward control law as

This design allows for the natural satisfaction of the asymmetric control input constraint (2), which will be shown later in Lemma 1.
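A hedged numerical sketch of this cost design is given below. Two things are assumed rather than taken from the text: the threshold is taken as the distance from the feedforward input to the nearest bound, and the cost uses the inverse-hyperbolic-tangent integrand that is standard in the constrained-input literature. All names and numbers are hypothetical.

```python
import numpy as np


def symmetric_threshold(u_d, u_min, u_max):
    """Assumed redefinition: largest symmetric margin around u_d inside the box."""
    return np.minimum(u_max - u_d, u_d - u_min)


def control_cost(u_e, lam, r):
    """Closed form of 2 * sum_j r_j * int_0^{u_e_j} lam_j * atanh(v / lam_j) dv."""
    z = u_e / lam
    return float(np.sum(2.0 * r * lam * u_e * np.arctanh(z)
                        + r * lam**2 * np.log1p(-z**2)))


def control_cost_numeric(u_e, lam, r, n=20001):
    """Same integral by the trapezoidal rule, as an independent check."""
    total = 0.0
    for ue_j, lam_j, r_j in zip(u_e, lam, r):
        v = np.linspace(0.0, ue_j, n)
        y = lam_j * np.arctanh(v / lam_j)
        total += 2.0 * r_j * np.sum((y[1:] + y[:-1]) * np.diff(v)) / 2.0
    return total


u_min, u_max = np.array([10.0, -0.3]), np.array([20.0, 0.5])
u_d = np.array([12.0, 0.24])                   # hypothetical feedforward input
lam = symmetric_threshold(u_d, u_min, u_max)   # margins to the nearest bound
r = np.array([1.0, 1.0])                       # assumed weight coefficients
u_e = np.array([-1.0, 0.1])                    # feedback input inside the margins
```

Under these assumptions the cost is zero at zero feedback input, grows with the magnitude of each component, and becomes steep as a component approaches its threshold, which is what discourages inputs near the bounds.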

Then for tracking control system (3) subject to asymmetric control input constraint (2), given an initial state

and the objective function (5) with (10), we define the optimal value function

as

Correspondingly, the Hamiltonian is constructed as

where

. Then, according to the principle of optimality,

satisfies

Then using the stationary condition, the optimal feedback control

can be obtained as

where

are diagonal matrices constructed by

and

, respectively, i.e.,

Then the optimal tracking control policy

is

Substituting

into (10), we obtain the optimal control cost

where

,

represents the vector constructed by the matrix main diagonal elements,

.

Further, substituting (16) into (13), the tracking HJB equation becomes

Then if one can obtain the solution

by solving (17), then (15) provides the desired optimal tracking control policy.

Now we propose the following lemma.

Lemma 1. With the non-quadratic control cost function (10), the optimal control policy in (15) satisfies the asymmetric constraint (2) naturally.

Proof. Under Assumption 1, there exists an admissible control

such that

Denote

as

Since

is an admissible control law, according to Definition 1, there must be

Thus we have

Substituting

into (18) yields

Then according to the definition of

in (11) and the extended feedforward control defined in (8), we have

Since

, according to (14) and (11), the feedback control

satisfies

Then combining (20) with (15), we have

This completes the proof. □
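The statement of Lemma 1 can be checked numerically with a small sketch. It assumes a tanh-form feedback bounded by the threshold, with the threshold taken as the distance from the feedforward input to the nearest bound, and a feedforward input that lies strictly inside the bounds. The value gradient and input matrix are random placeholders, since the bound should hold regardless of their values.

```python
import numpy as np

rng = np.random.default_rng(0)

u_min, u_max = np.array([10.0, -0.3]), np.array([20.0, 0.5])
r = np.array([1.0, 1.0])  # assumed control weights


def feedback(grad_V, g_e, lam):
    """Assumed tanh-form feedback: u_e = -lam * tanh(g_e^T grad_V / (2 r lam))."""
    return -lam * np.tanh((g_e.T @ grad_V) / (2.0 * r * lam))


def tracking_control(u_d, grad_V, g_e):
    """Full policy u = u_d + u_e with the threshold recomputed from u_d."""
    lam = np.minimum(u_max - u_d, u_d - u_min)
    return u_d + feedback(grad_V, g_e, lam)


worst_violation = -np.inf
for _ in range(1000):
    u_d = rng.uniform(u_min + 1e-3, u_max - 1e-3)  # feedforward strictly inside
    grad_V = rng.normal(scale=100.0, size=3)       # arbitrary value gradient
    g_e = rng.normal(size=(3, 2))                  # arbitrary input matrix
    u = tracking_control(u_d, grad_V, g_e)
    worst_violation = max(worst_violation,
                          float(np.max(np.maximum(u_min - u, u - u_max))))
```

Because the hyperbolic tangent is bounded by one in magnitude, the feedback never exceeds the threshold, so `worst_violation` stays non-positive up to floating-point rounding even for very large gradients.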

Next, the following theorem provides the optimality and stability analysis of .

Theorem 1. For tracking control system (3), given the dynamics function of the reference state, initial state and the objective function (5) with (10), assume is a smooth positive definite solution to (17), then the optimal control policy given by (15) has the following properties: , minimizes objective function ;

stabilizes the tracking error asymptotically.

Proof. First, we prove that minimizes the objective function J.

Given the initial state

and the solution of the HJB equation (17) as

, it holds that

Thus for any admissible control

, the corresponding objective function (5) can be represented as

Differentiating

along the state trajectory corresponding to

, we have

and

By adding and subtracting

and

to the right side of the equation, it generates

Then, combining (24) with the HJB equation (13), we further obtain

Denote that

Then to prove that

minimizes

J, one needs to prove that

for all admissible control

, and that

if and only if

.

Then, substituting (27) into M, we obtain

To aid the analysis, define a function

as

where

,

. Since

increases monotonically, when

, there must be a

, such that

and that

. Then importing

into

generates

Likewise, when

, there must also be a

, such that

and that

. Then importing

into

we have

Further, when

, it holds that

. That is,

only when

, and

, when

.

Combining the above conclusion with (28), M can be represented as

Then, there is

Therefore, holds for all , in which the = holds only when .

Next, we prove that the tracking error is asymptotically stabilized with .

Note that

is a positive semi-definite function. Take

as the Lyapunov function of the tracking control system (3); then

It is known from the proof of Lemma 1 that

, so the equality in (33) holds only if

. Thus,

asymptotically stabilizes

.

This completes the proof. □

4. IRL-Based Approximate Optimal Solution

The previous section provided the design of the optimal tracking control policy

. However, to solve

involves solving the HJB equation (17), which is highly nonlinear in

. Given the difficulty of solving (17), this section provides an NN-based IRL algorithm to obtain an approximate optimal solution.

With the optimal value function denoted as

, the following integral form of the value function is taken according to the idea of IRL:

where the integral reinforcement interval

.
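The integral form can be checked on a toy problem where the value function is known in closed form. The sketch below assumes a scalar closed-loop system x_dot = a_c * x under a fixed policy with running cost c * x^2; these choices and all names are illustrative and are not the paper's tracking system.

```python
import numpy as np

a_c = -1.0        # assumed closed-loop pole of a scalar system x_dot = a_c * x
c = 2.0           # assumed running cost r(x) = c * x**2 under the fixed policy
T = 0.1           # integral reinforcement interval


def value(x):
    """Infinite-horizon cost from state x: int_0^inf c * x(t)^2 dt."""
    return c * x**2 / (-2.0 * a_c)


def integral_reinforcement(x, n=10001):
    """Cost accumulated over [t, t + T], by the trapezoidal rule on sampled data."""
    tau = np.linspace(0.0, T, n)
    cost = c * (x * np.exp(a_c * tau))**2
    return float(np.sum((cost[1:] + cost[:-1]) * np.diff(tau)) / 2.0)


x_t = 1.5
x_next = x_t * np.exp(a_c * T)
# Integral Bellman relation: V(x(t)) = int_t^{t+T} r dtau + V(x(t+T)).
residual = value(x_t) - integral_reinforcement(x_t) - value(x_next)
```

The relation is evaluated from the states at the two ends of the interval and the accumulated cost; an explicit drift model appears only in generating the toy trajectory, which is why this form suits partially unknown dynamics.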

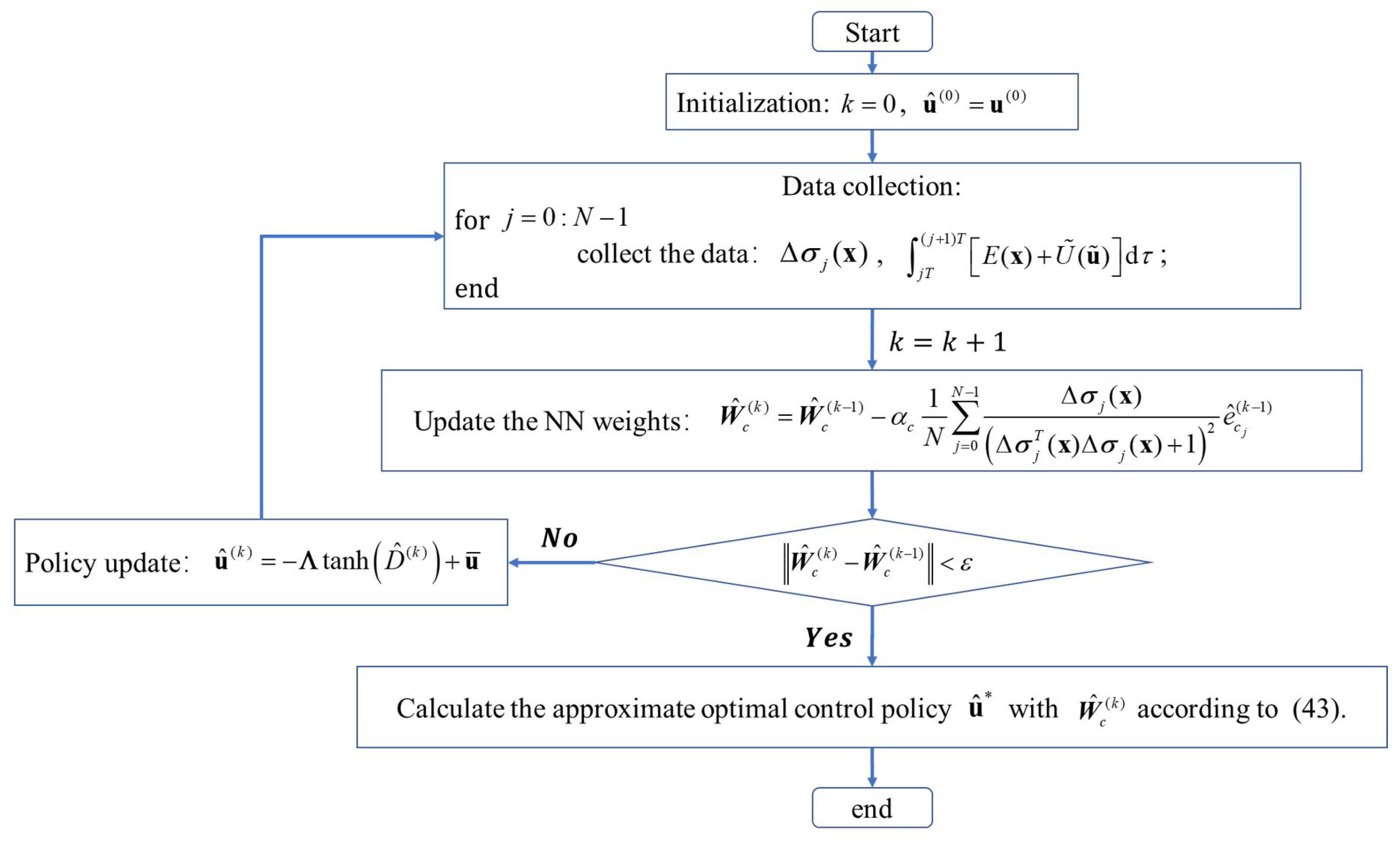

Then the IRL-based PI Algorithm 1 is presented as follows.

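| Algorithm 1 IRL-based optimal path-tracking algorithm |
| --- |
| 1: Policy evaluation (NN weights update): solve (35) for the value function under the current control policy. |
| 2: Policy improvement: update the control policy using (36). |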

Remark 4. Equation (34) is equivalent to the HJB equation (17) in the way that (34) and (17) have the same positive definite solution , and according to the result of traditional PI algorithm, given an initial admissible control , then for all , iteratively solving (35) for , there always exists an admissible control with (36), and when , and uniformly converge to and [10,20].

To implement Algorithm 1, this paper introduces a single-layer NN with p neurons to approximate the value function:

and

where

is the optimal weight vector to approximate

,

is the vector of continuously differentiable bounded basis functions, and

is the approximation error. Then, according to the work in [10], as the number of neurons p → ∞, the fitting error

tends to 0, and [21] points out that, even when the number of neurons is limited, the fitting error is still bounded. Therefore,

and

are bounded over the compact set

, i.e., there exist constants

and

such that

,

.

Substituting (37) into (35), we obtain the tracking Bellman error as

where

. Then, there exists a positive constant

such that

.

Since the optimal weight vector

in (37) is unknown, the value function is approximated in the iteration as

where

is the estimation of

. Then, the estimation of

is

To find the best weight vector

of

, the tuning law of the weight estimation

should minimize the estimated Bellman error

. Utilizing the gradient descent scheme and considering the objective function

, we take the tuning law for the weight vector as

where

is the learning rate, and

is used for normalization [16]. Then, taking the sampling period equal to the integral reinforcement interval T, after every N sampling periods, the NN weights of the online IRL-based PI for the approximate tracking control policy after

iterations are updated by

Substituting

into (36), we obtain the improved control policy

where

. Then, given an initial approximated weight

corresponding to an admissible initial control

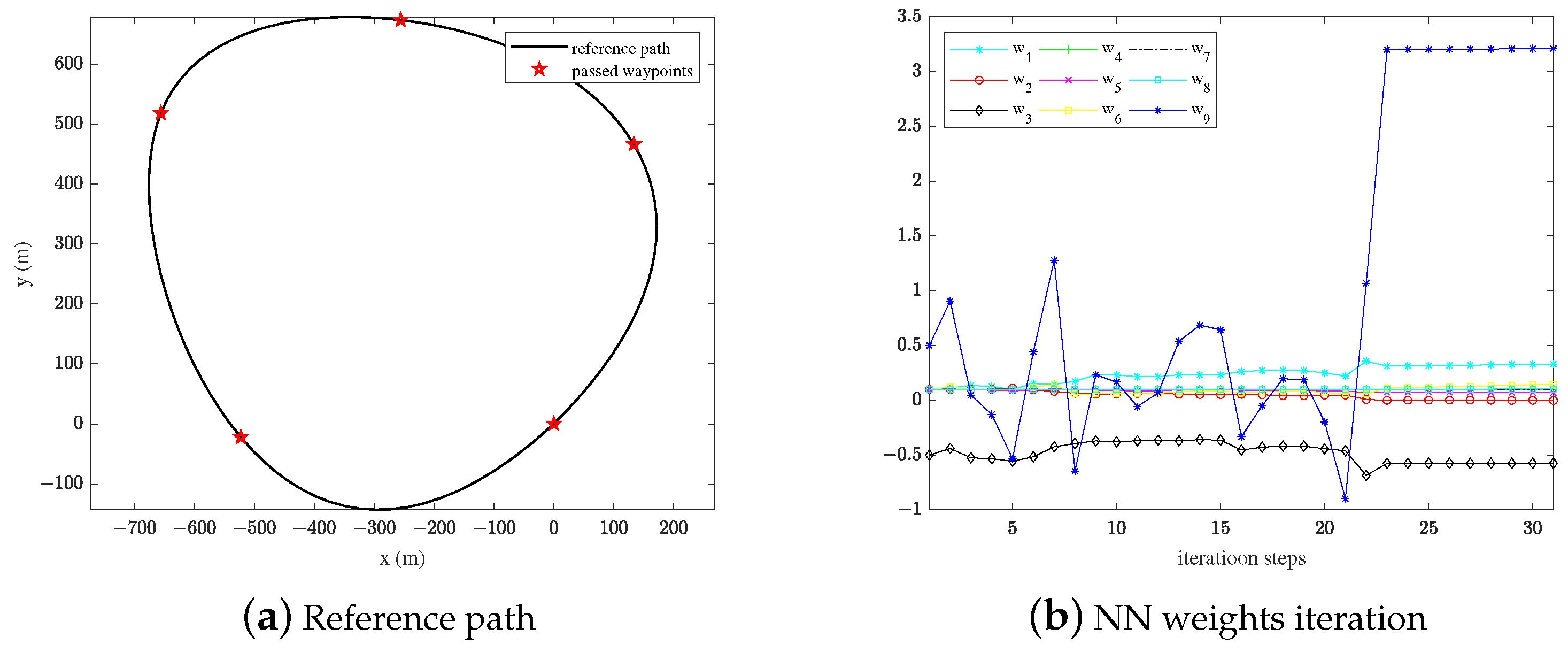

, the online IRL-based PI can be performed as in

Figure 1.
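The policy evaluation step can be sketched as a normalized gradient descent on the squared Bellman error. The example below is a discrete-step illustration on an assumed scalar closed-loop system with a single quadratic basis function, for which the exact critic weight is known; the system, basis, learning rate, and sampling scheme are hypothetical and are not the paper's UAV setup.

```python
import numpy as np

rng = np.random.default_rng(1)

a_c, c, T = -1.0, 2.0, 0.1      # assumed scalar closed loop, cost rate, interval
alpha = 2.0                     # assumed learning rate
w_true = c / (-2.0 * a_c)       # exact weight for V(x) = w * x**2


def phi(x):
    """Single polynomial basis function for the critic."""
    return np.array([x**2])


def integral_reinforcement(x):
    """Exact cost over [t, t + T] along x(tau) = x * exp(a_c * tau)."""
    return c * x**2 * (1.0 - np.exp(2.0 * a_c * T)) / (-2.0 * a_c)


w_hat = np.zeros(1)
for _ in range(2000):
    x_t = rng.uniform(0.5, 2.0)                 # varied samples keep the data exciting
    x_next = x_t * np.exp(a_c * T)
    d_phi = phi(x_next) - phi(x_t)              # regressor
    e = w_hat @ d_phi + integral_reinforcement(x_t)   # estimated Bellman error
    # Normalized gradient step on 0.5 * e**2 (discrete form of a tuning law).
    w_hat = w_hat - alpha * d_phi * e / (1.0 + d_phi @ d_phi)**2
```

In a full policy iteration loop, the converged weights would then be used to improve the control policy before the next evaluation round; that outer loop is omitted here to keep the sketch short.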

Remark 5. Let be any admissible bounded control policy in the algorithm in Figure 1, and take (42) as the tuning law of the critic NN weights. If is persistently exciting (PE), i.e., if there exist and such that where is the unit matrix, then for the bounded reconstruction error in (41), the critic weight estimation error converges exponentially fast to a residual set [13,14,15].
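In practice, the persistent excitation condition is often monitored rather than proved. The sketch below computes one possible indicator: the smallest eigenvalue of the averaged outer product of normalized regressors over a data window. The normalization by one plus the squared norm, the function name, and the test signals are assumptions for illustration.

```python
import numpy as np


def excitation_level(regressors):
    """Smallest eigenvalue of the averaged outer product of normalized regressors.

    A value bounded away from zero over every window is the practical
    signature of persistent excitation; a value near zero means some
    weight direction is never observed.
    """
    reg = np.asarray(regressors, dtype=float)
    norm = 1.0 + np.sum(reg**2, axis=1, keepdims=True)
    reg_bar = reg / norm
    gram = reg_bar.T @ reg_bar / len(reg_bar)
    return float(np.linalg.eigvalsh(gram)[0])


t = np.linspace(0.0, 10.0, 500)
rich = np.column_stack([np.sin(t), np.cos(2.0 * t)])   # two independent directions
poor = np.column_stack([np.sin(t), 2.0 * np.sin(t)])   # always the same direction
```

Here `excitation_level(rich)` is clearly positive, while `excitation_level(poor)` is zero up to rounding, because every regressor in `poor` points along the same direction and the critic weights cannot be identified from such data.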