Mapping Temperate Forest Phenology Using Tower, UAV, and Ground-Based Sensors

Abstract

1. Introduction

- At what spatial extent are above-canopy (UAV-based imagery) remote sensing metrics most representative of below-canopy (ground-based, hemispherical photography) vegetation metrics?

- Do above- and below-canopy measures yield phenological transition dates similar to those from continuous phenological observations, including oblique-perspective PhenoCam data and spaceborne MODIS and Landsat data?

2. Materials and Methods

2.1. Site Description

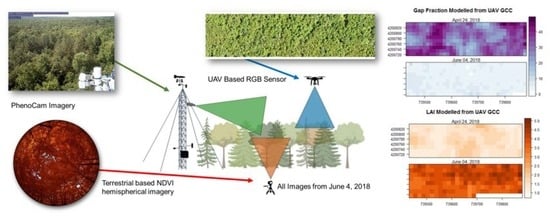

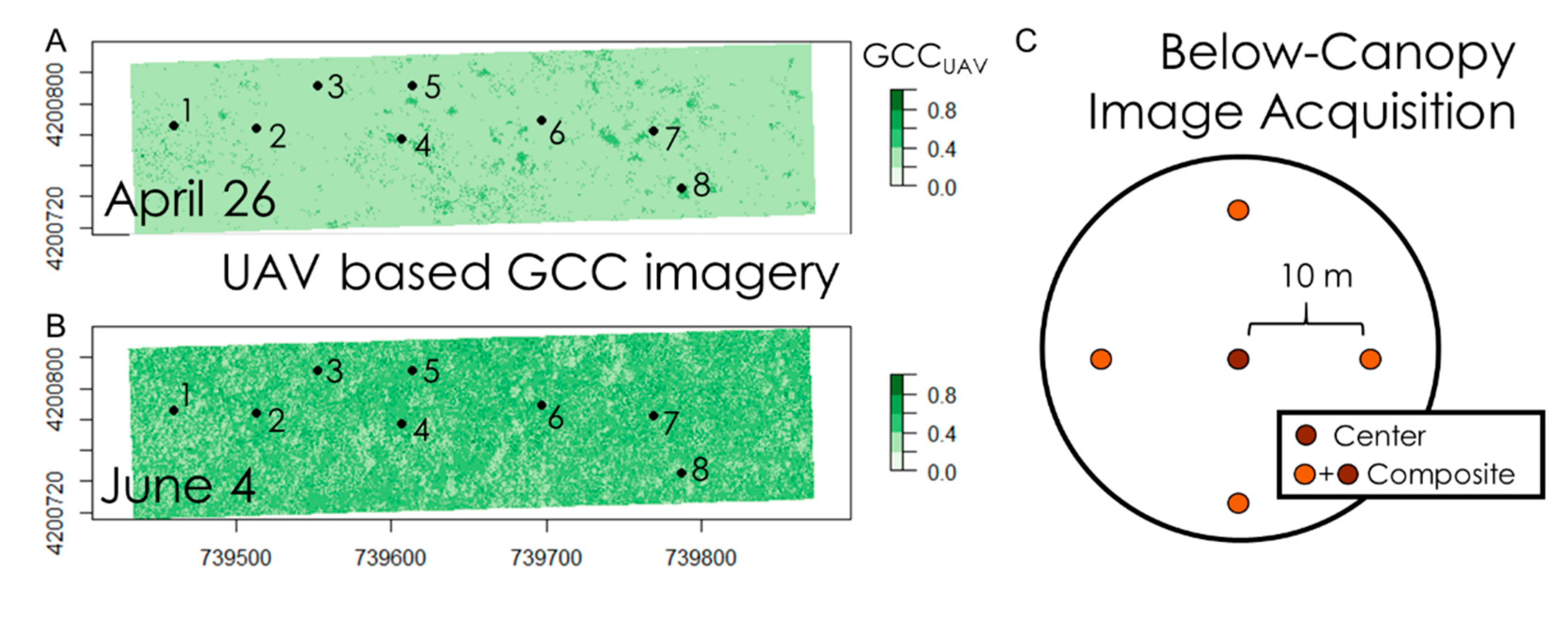

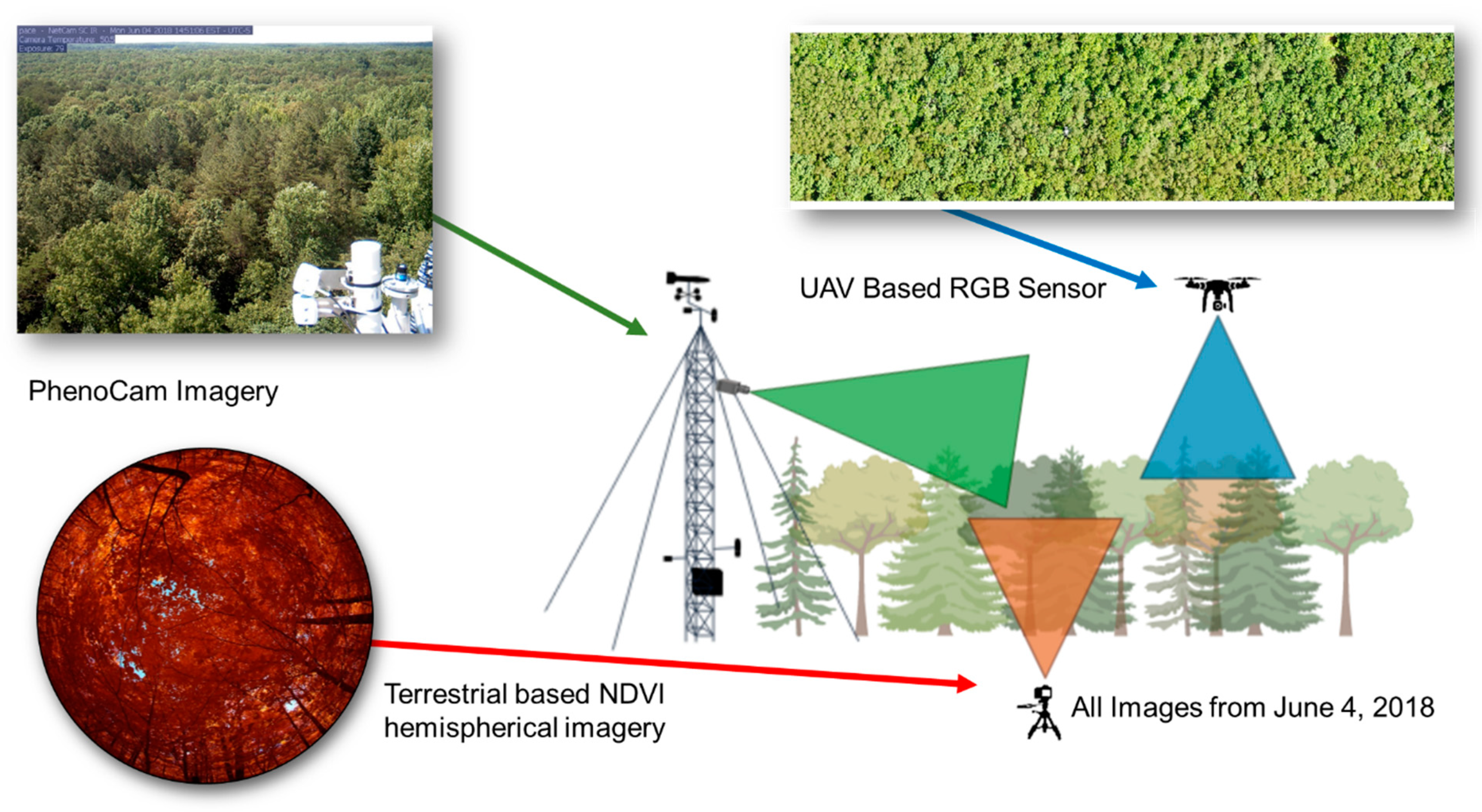

2.2. Above-Canopy, UAV-Based Measurements

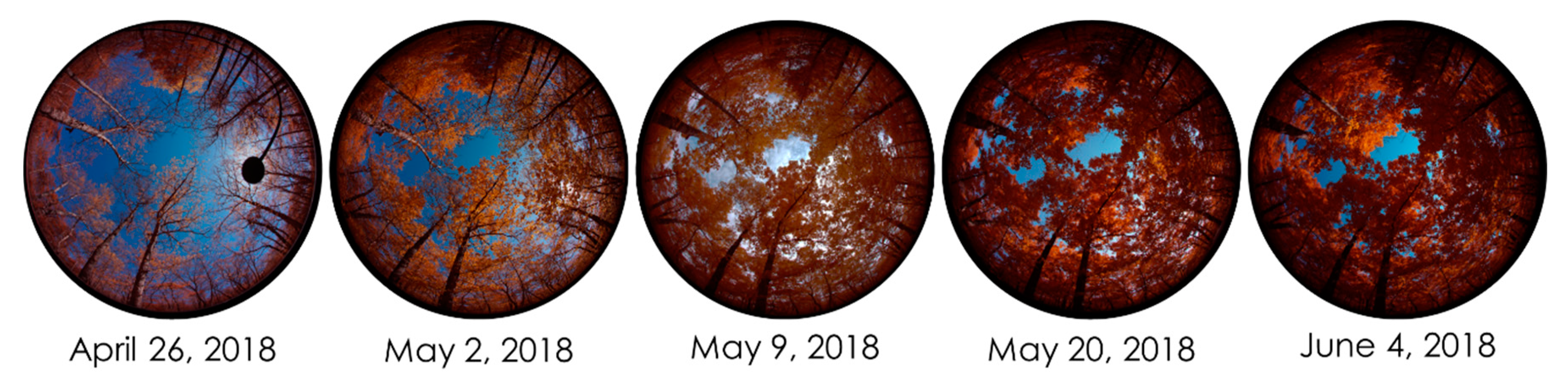

2.3. Below-Canopy Measurements

2.4. Tower-Based Measurements

2.5. Satellite-Based NDVI

2.6. Statistical Analysis

3. Results

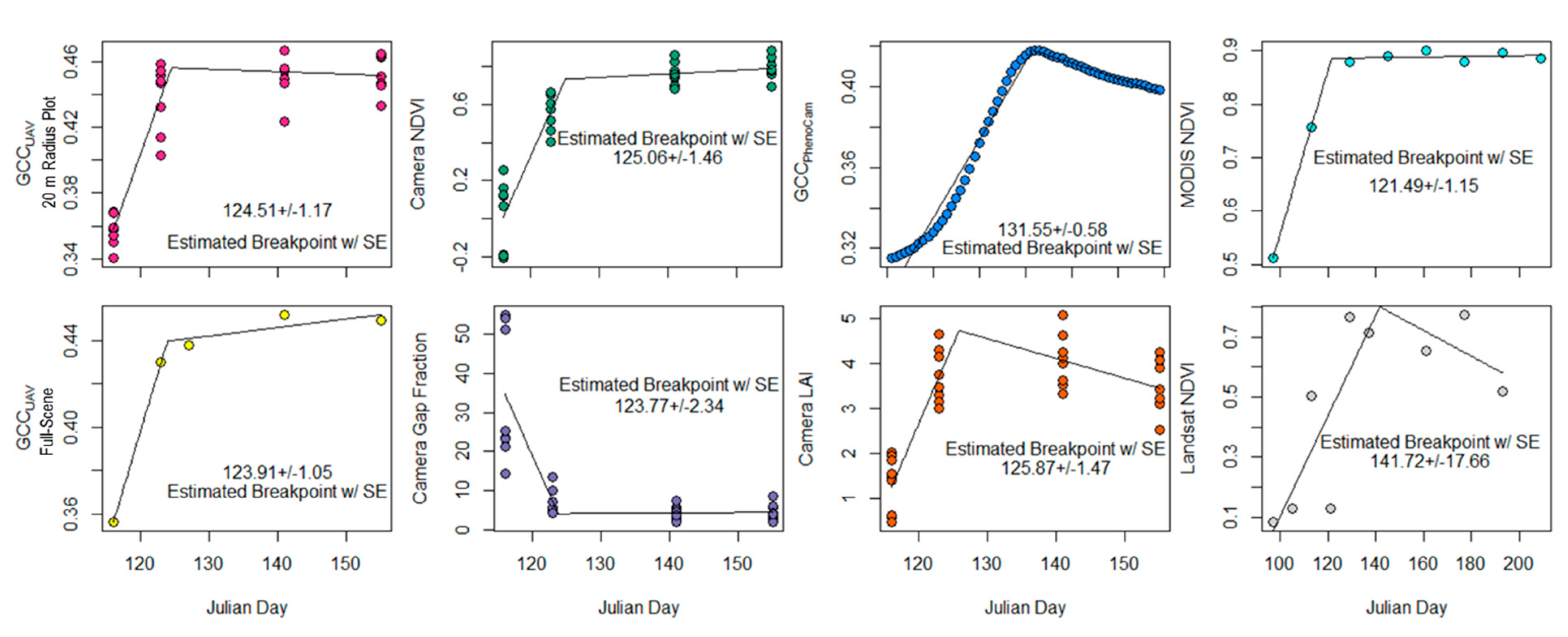

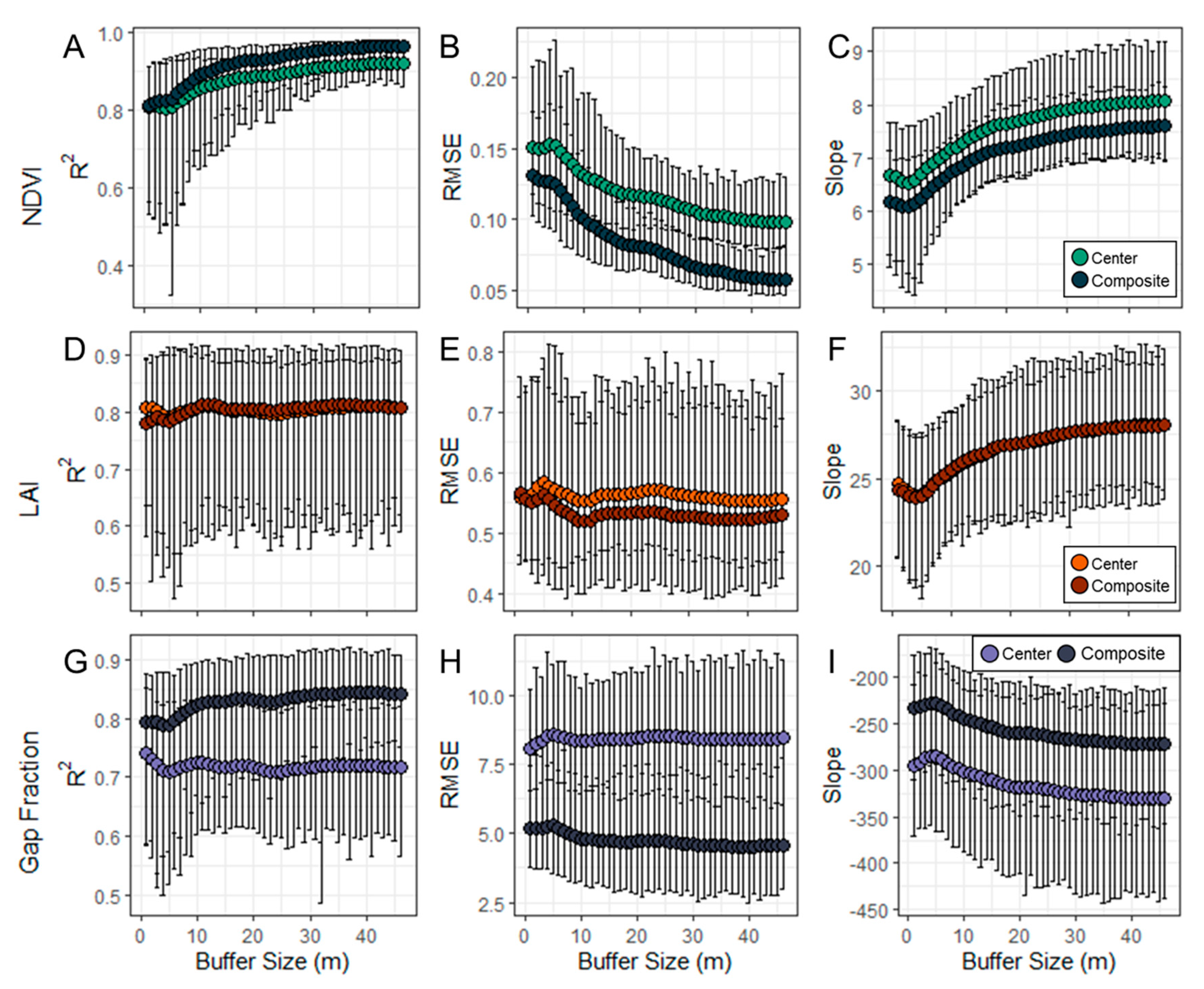

3.1. Above- and Below-Canopy Comparisons

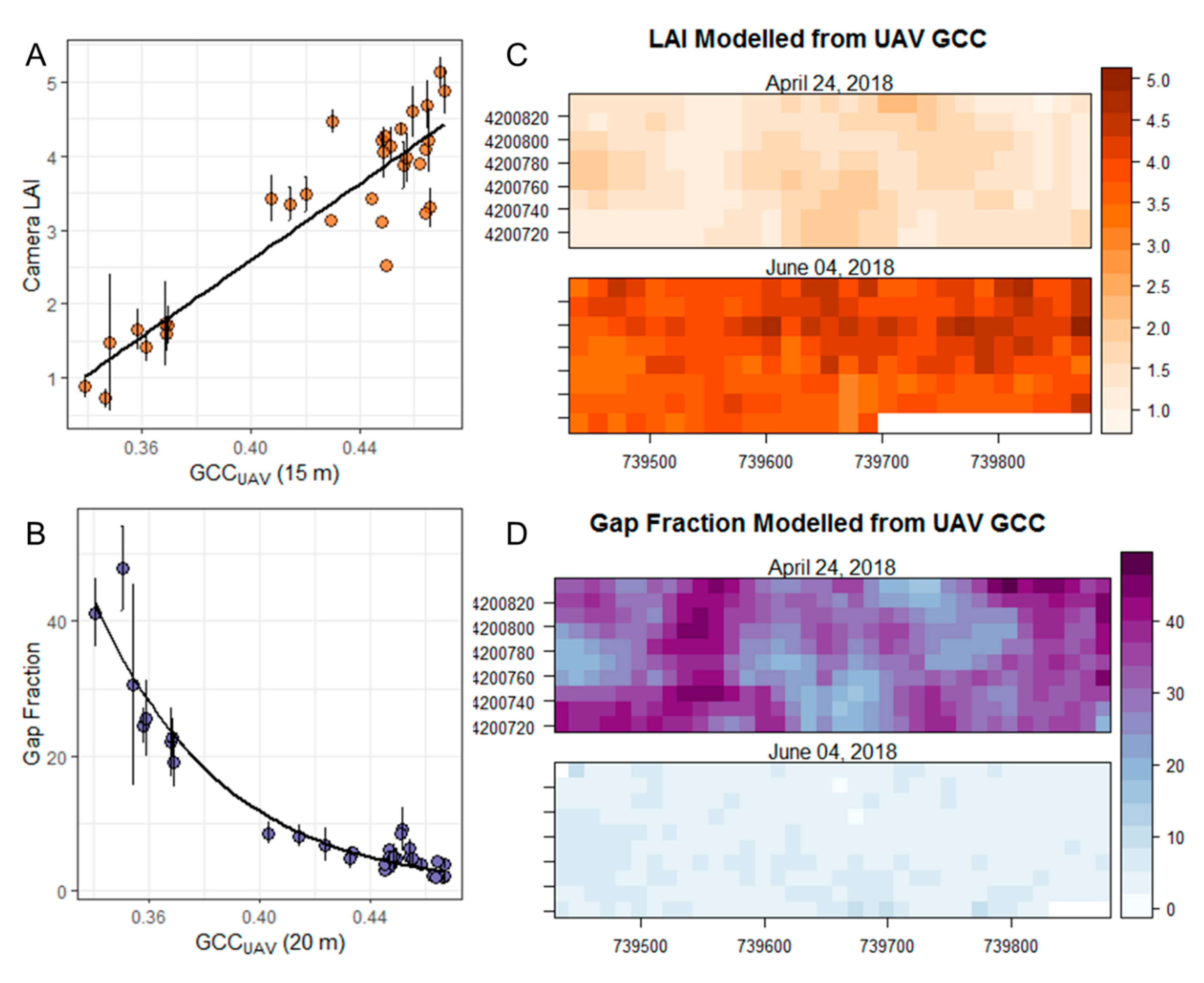

3.2. Estimating Canopy Closure

4. Discussion

4.1. Scaling Above- and Below-Canopy Imagery

4.2. Temporal Resolution

4.3. Spatial Resolution

4.4. Trends, Transition Dates

4.5. Future UAV Applications for High-Resolution Phenology

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A

| Metric | Canopy Closure Date (DOY) | Standard Error (±) |
|---|---|---|
| GCC_UAV (20 m buffer) | 124.51 | 1.17 |
| GCC_UAV (Full Scene) | 123.91 | 1.05 |
| NDVI | 125.06 | 1.46 |
| Gap Fraction | 123.77 | 2.34 |
| LAI | 125.87 | 1.47 |
| GCC_PhenoCam | 131.55 | 0.58 |
| MODIS | 121.49 | 1.15 |
| Landsat 8 | 141.72 | 17.66 |
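
One way to read the table is to express each canopy closure date as an offset from a single reference metric. The sketch below is illustrative only and is not the authors' analysis: the function name `offset_from` is hypothetical, GCC_PhenoCam is chosen as the reference purely for demonstration, the NDVI standard error is read as 1.46, and the combined standard error assumes the two estimates are independent, which the source does not state.

```python
import math

# Canopy closure dates (day of year) and standard errors, copied from the table.
# Assumption: the NDVI standard error is read as 1.46.
closure = {
    "GCC_UAV (20 m buffer)": (124.51, 1.17),
    "GCC_UAV (Full Scene)": (123.91, 1.05),
    "NDVI": (125.06, 1.46),
    "Gap Fraction": (123.77, 2.34),
    "LAI": (125.87, 1.47),
    "GCC_PhenoCam": (131.55, 0.58),
    "MODIS": (121.49, 1.15),
    "Landsat 8": (141.72, 17.66),
}


def offset_from(reference, estimates):
    """Return {metric: (offset_days, combined_se)} relative to `reference`.

    Hypothetical helper. The combined standard error is
    sqrt(se_metric^2 + se_reference^2), valid only if the two
    estimates are independent (an assumption made here).
    """
    ref_doy, ref_se = estimates[reference]
    result = {}
    for name, (doy, se) in estimates.items():
        if name == reference:
            continue  # skip the reference metric itself
        offset = round(doy - ref_doy, 2)
        combined_se = round(math.sqrt(se ** 2 + ref_se ** 2), 2)
        result[name] = (offset, combined_se)
    return result


if __name__ == "__main__":
    # Print metrics ordered from earliest to latest relative to the reference.
    offsets = offset_from("GCC_PhenoCam", closure)
    for name, (offset, se) in sorted(offsets.items(), key=lambda kv: kv[1][0]):
        print(f"{name:24s} {offset:+7.2f} days (combined SE {se:.2f})")
```

A negative offset means the metric places canopy closure earlier than the reference. The large Landsat 8 standard error carries straight through to its combined error, so its offset should be interpreted with corresponding caution.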

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Atkins, J.W.; Stovall, A.E.L.; Yang, X. Mapping Temperate Forest Phenology Using Tower, UAV, and Ground-Based Sensors. Drones 2020, 4, 56. https://doi.org/10.3390/drones4030056