Going beyond Church–Turing Thesis Boundaries: Digital Genes, Digital Neurons and the Future of AI †

Abstract

1. Introduction

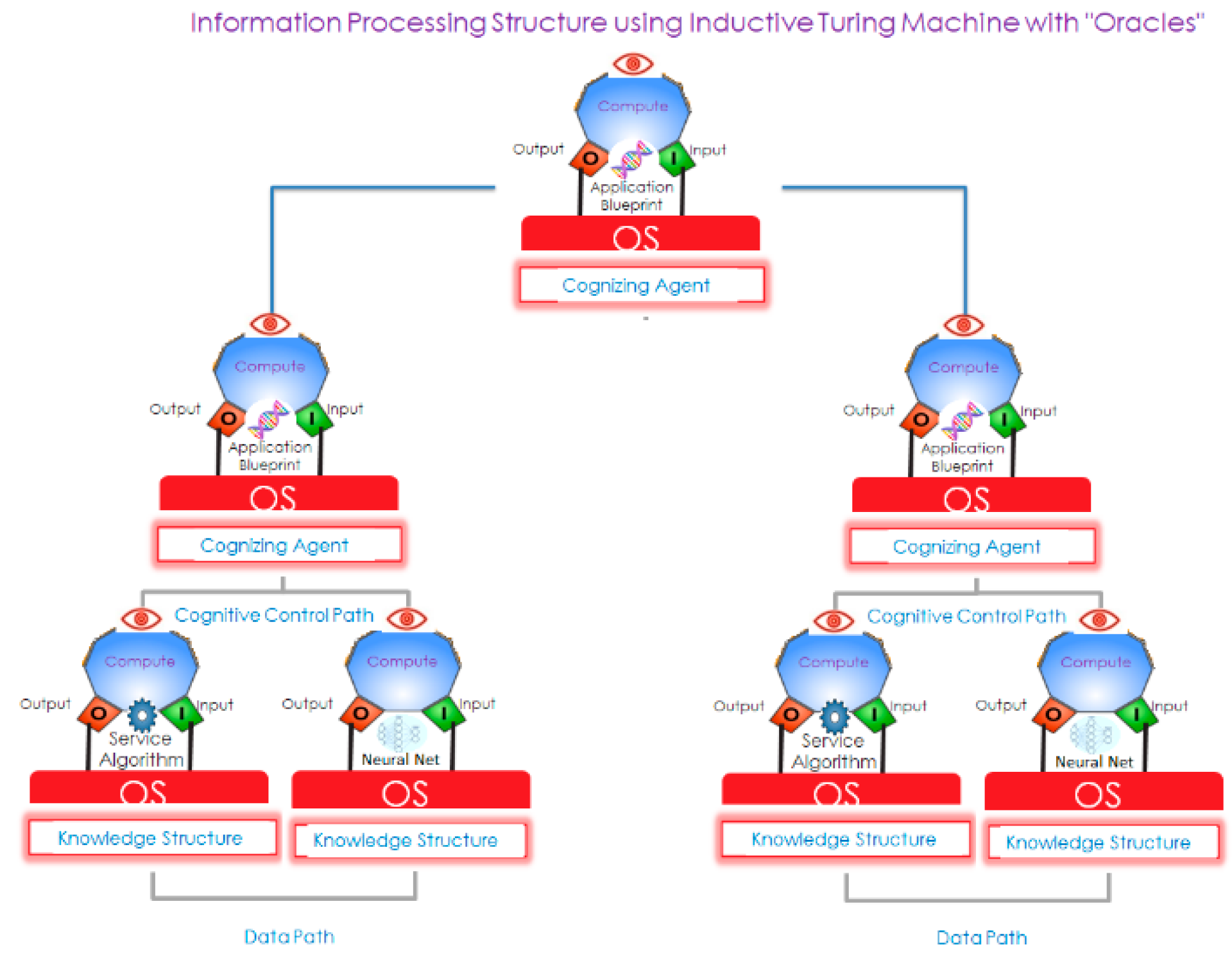

- The success of the general-purpose computer has enabled the current generation of mobile, cloud and high-speed information processing structures. The main criterion for the success of their computation is no longer termination, which is what Turing machines are designed for [7], but their response to change: their speed, generality, flexibility, adaptability and tolerance to errors, faults and damage. Current business services demand non-stop operation, with performance adjusted in real time to meet rapid fluctuations in service demand or available resources without interrupting service. The quality of service must now be adjusted faster than the myriad infrastructure components (virtual machine (VM) images, network plumbing, application configurations, middleware, etc.), distributed across multiple geographies and owned by different providers, can be orchestrated. Reconfiguring distributed plumbing in coordination with multiple suppliers takes time and effort, which increases cost and complexity. Any new architecture must eliminate the need to move VM images or reconfigure physical and virtual networks across distributed infrastructure, and must enable self-managing applications with or without VMs. In this paper we discuss a new class of applications managed by an overlay of cognizing agents that is aware of the available resources, the resources in use and the processes needed to reconfigure them.
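As a purely illustrative sketch of the overlay idea described above, the toy agent below tracks available resources, resources in use, and a single reconfiguration rule. Every name here (`ResourcePool`, `CognizingAgent`, `reconcile`, `capacity_per_unit`) is hypothetical and not taken from the paper, and the sizing rule is an assumption chosen only to keep the example small.

```python
from dataclasses import dataclass, field


@dataclass
class ResourcePool:
    """Hypothetical view of the capacity the overlay is aware of."""
    available: int      # units not yet allocated
    in_use: int = 0     # units currently serving the application


@dataclass
class CognizingAgent:
    """Toy overlay agent: observes demand and resizes the allocation."""
    pool: ResourcePool
    capacity_per_unit: int = 100  # assumed requests/s that one unit can serve
    history: list = field(default_factory=list)  # past (demand, in_use) pairs

    def reconcile(self, demand: int) -> int:
        # Units needed to serve the demand, rounded up.
        needed = -(-demand // self.capacity_per_unit)
        total = self.pool.available + self.pool.in_use
        target = min(needed, total)  # cannot allocate more than exists
        delta = target - self.pool.in_use
        self.pool.in_use += delta
        self.pool.available -= delta
        # Record the decision so later policies could consult past behaviour.
        self.history.append((demand, self.pool.in_use))
        return self.pool.in_use


agent = CognizingAgent(ResourcePool(available=5))
for demand in (120, 450, 900, 80):
    agent.reconcile(demand)
print(agent.history)  # [(120, 2), (450, 5), (900, 5), (80, 1)]
```

The application itself is never restarted in this sketch; only the allocation changes, which is the property the paragraph asks of a self-managing application. A real overlay would also have to handle placement, networking and failures, none of which is modelled here.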

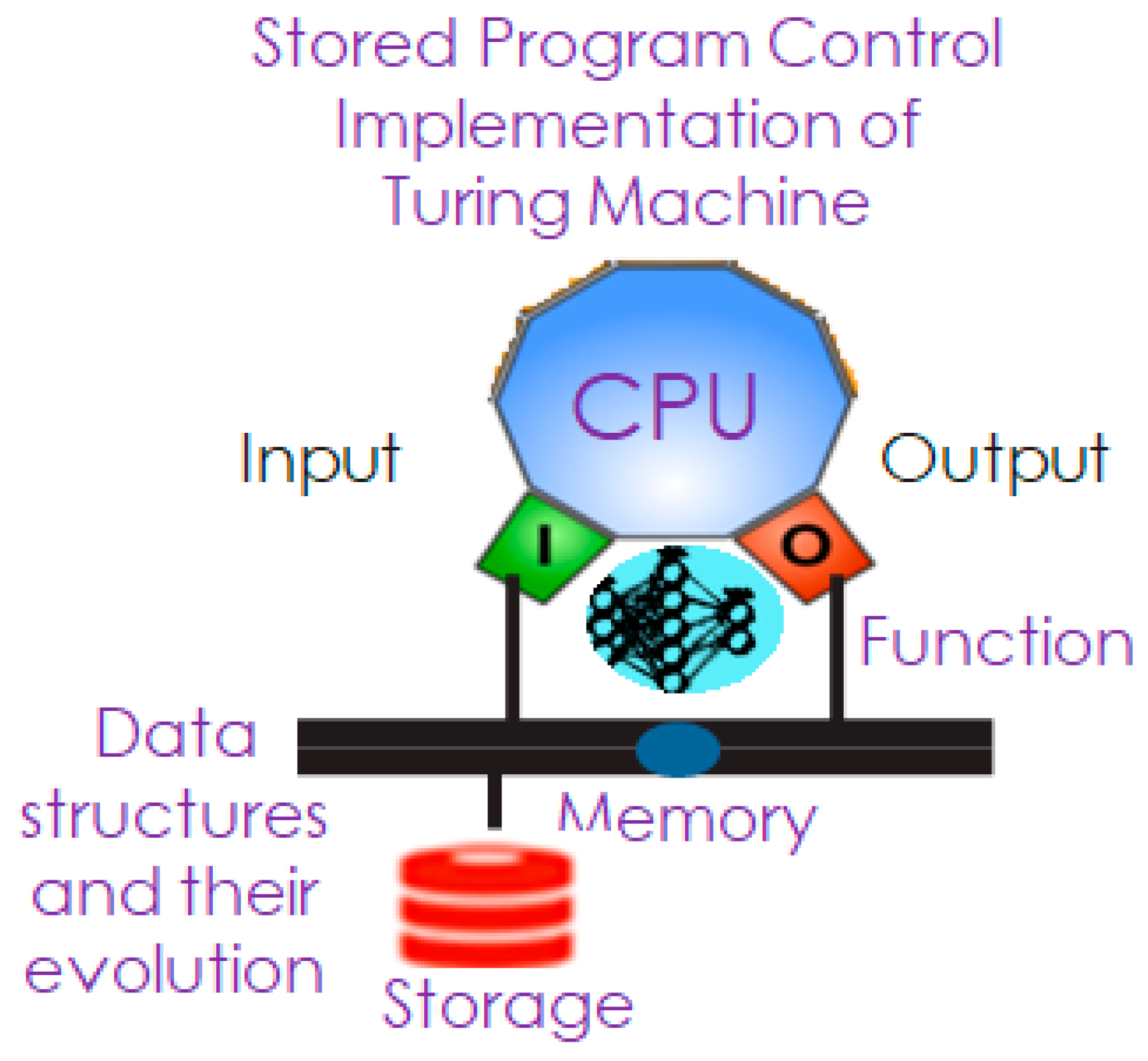

- General-purpose computers allow us to deterministically model any physical system, of which they are not themselves a part, to an arbitrary degree of accuracy. However, such models fall short in addressing non-deterministic fluctuations. While computerized sensors and actuators allow observations, and deep learning algorithms using neural networks (digital neurons) provide insights, acting on them requires knowledge of how to manage risk, and responding appropriately requires the ability to use history and past experience along with deep knowledge of the circumstances. Systems continuously evolve and interact with each other. Sentient systems (those with the capacity to feel, perceive or experience) evolve through a non-Markovian process, in which the conditional probability of a future state depends not only on the present state but also on the history of prior states. For digital systems to become sentient and mimic human intelligence, they must include time dependence and history in their process dynamics. In this paper we discuss the role of knowledge structures and structural machines in implementing systems capable of managing deep knowledge, deep memory and deep reasoning to determine process evolution based on history.
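The Markovian versus non-Markovian distinction can be stated compactly. The notation is introduced here for illustration and is not the paper's: let $X_t$ denote the system state at discrete step $t$. A Markov process satisfies

$$
P(X_{t+1} \mid X_t, X_{t-1}, \dots, X_0) = P(X_{t+1} \mid X_t),
$$

whereas a process is non-Markovian when there is at least one history for which

$$
P(X_{t+1} \mid X_t, X_{t-1}, \dots, X_0) \neq P(X_{t+1} \mid X_t).
$$

In the second case the present state alone is not a sufficient summary, so a system that is to predict or steer its own evolution has to retain some representation of its past.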

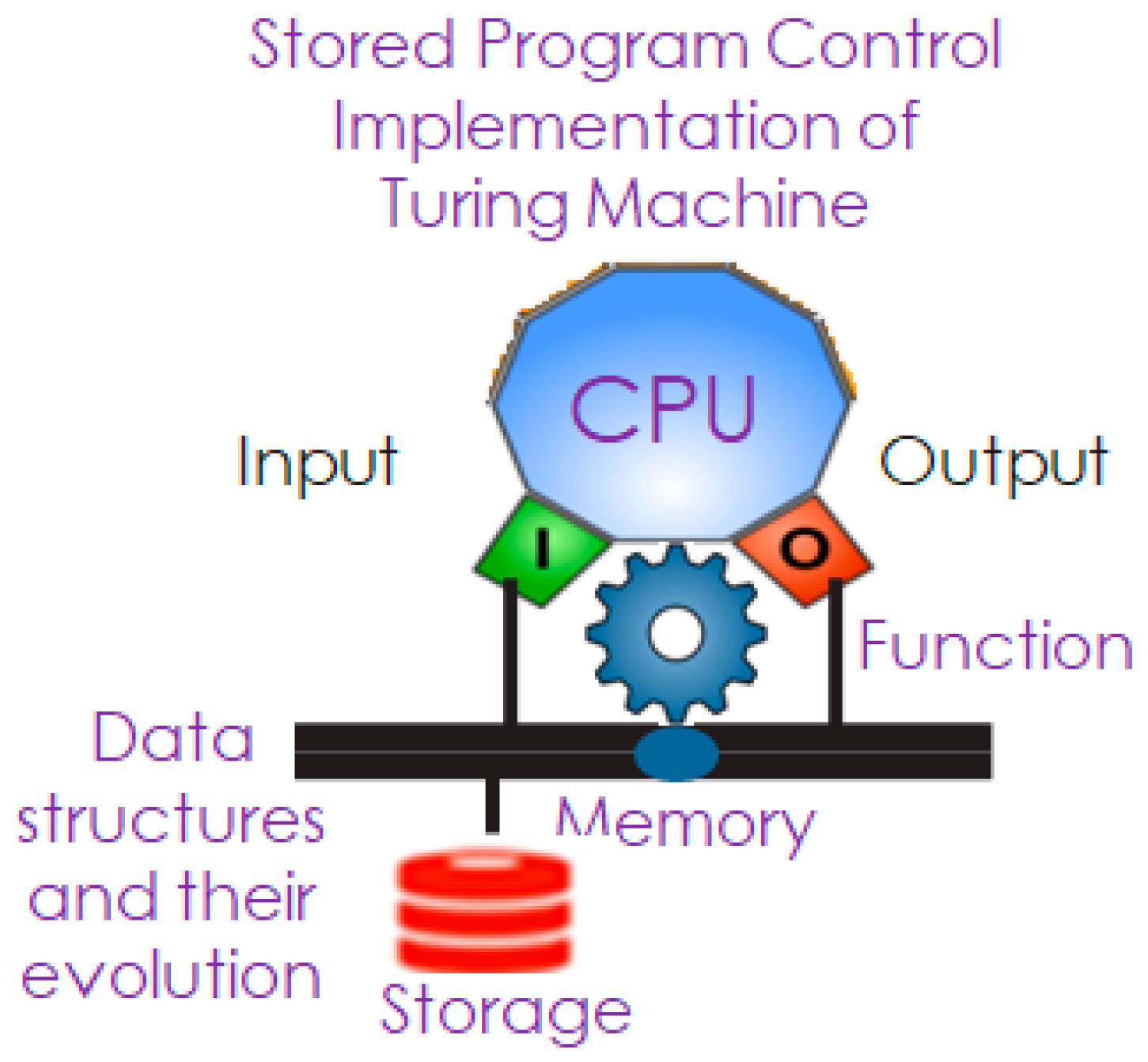

2. Theory of Oracles and Digital Information Processing Structures Going beyond the Church–Turing Thesis

3. Conclusions

Conflicts of Interest

References

- Burgin, M. Theory of Knowledge: Structures and Processes; World Scientific Books: Singapore, 2016.

- Burgin, M.; Mikkilineni, R. Cloud computing based on agent technology, super-recursive algorithms, and DNA. Int. J. Grid Utility Comput. 2018, 9, 193–204.

- Turing, A.M. The Essential Turing; Copeland, B.J., Ed.; Oxford University Press: Oxford, UK, 2004.

- Cockshott, P.; MacKenzie, L.M.; Michaelson, G. Computation and Its Limits; Oxford University Press: Oxford, UK, 2012.

- Gödel, K. Über formal unentscheidbare Sätze der Principia Mathematica und verwandter Systeme I. Monatshefte für Mathematik und Physik 1931, 38, 173–198.

- Turing, A.M. Systems of logics based on ordinals. Proc. Lond. Math. Soc. 1939, 45, 161–228.

- Dodig-Crnkovic, G.; Giovagnoli, R. Computing Nature—A Network of Networks of Concurrent Information Processes. arXiv 2013, arXiv:1210.7784, 1–22.

- Mikkilineni, R.; Morana, G.; Burgin, M. Oracles in Software Networks: A New Scientific and Technological Approach to Designing Self-Managing Distributed Computing Processes. In Proceedings of the 2015 European Conference on Software Architecture Workshops (ECSAW '15), Dubrovnik Cavtat, Croatia, 7 September 2015; ACM: New York, NY, USA, 2015.

- Burgin, M.; Eberbach, E.; Mikkilineni, R. Cloud Computing and Cloud Automata as A New Paradigm for Computation. Comput. Rev. J. 2019, 4, 113–134.

- Burgin, M.; Adamatzky, A. Structural Machines as a Mathematical Model of Biological and Chemical Computers. Theory Appl. Math. Comput. Sci. 2017, 7, 1–30.

- Mikkilineni, R.; Morana, G. Post-Turing Computing, Hierarchical Named Networks and a New Class of Edge Computing. In Proceedings of the 2019 IEEE 28th International Conference on Enabling Technologies: Infrastructure for Collaborative Enterprises (WETICE), Napoli, Italy, 12–14 June 2019; pp. 82–87.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Mikkilineni, R. Going beyond Church–Turing Thesis Boundaries: Digital Genes, Digital Neurons and the Future of AI. Proceedings 2020, 47, 15. https://doi.org/10.3390/proceedings2020047015
