Greenhouse Detection from Color Infrared Aerial Image and Digital Surface Model

Abstract

1. Introduction

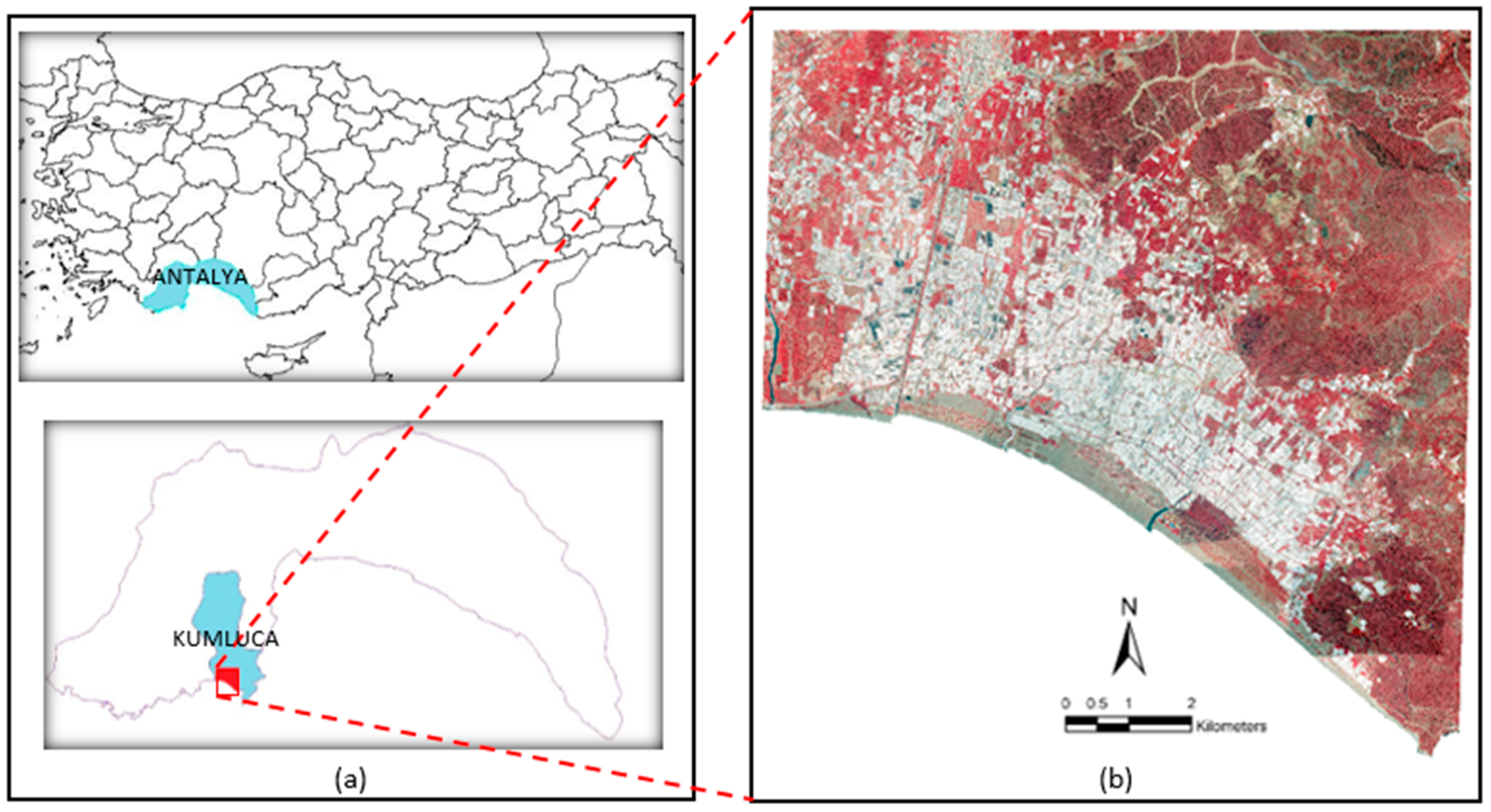

2. Study Area and Data Sets

3. Methodology

3.1. The Preparation of Additional Bands

3.2. Object-Based Image Classification

4. Results and Discussion

5. Conclusions

Funding

Acknowledgments

Conflicts of Interest

| Shape | Compactness | Scale Parameter |
|---|---|---|
| 0.1 | 0.5 | 117 |
| 0.3 | 0.5 | 113 |
| 0.5 | 0.5 | 103 |

| Classes | K-NN PA | K-NN UA | RF PA | RF UA | SVM PA | SVM UA |
|---|---|---|---|---|---|---|
| Greenhouse | 78.21 | 91.84 | 82.51 | 88.90 | 96.88 | 98.10 |
| Building 1 | 89.21 | 56.57 | 87.41 | 70.24 | 95.44 | 94.67 |
| Building 2 | 92.43 | 71.34 | 85.98 | 61.98 | 82.63 | 97.47 |
| Road | 57.13 | 62.16 | 53.72 | 46.49 | 82.10 | 84.94 |
| Bareland | 83.49 | 84.14 | 74.83 | 83.75 | 94.99 | 90.80 |
| Vegetation | 100 | 100 | 100 | 100 | 100 | 100 |
| Overall Accuracy | 83.47 | | 81.46 | | 94.80 | |

PA: producer's accuracy; UA: user's accuracy. All values are percentages; the overall accuracy applies to each classifier as a whole.
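
The accuracy table reports producer's accuracy (PA), user's accuracy (UA) and overall accuracy for the K-NN, RF and SVM classifiers. As a hedged illustration of how these standard metrics are usually derived from a confusion matrix, a minimal Python sketch follows. The function name `accuracy_metrics`, the convention that rows hold reference classes and columns hold classified classes, and the 3-class matrix are all hypothetical assumptions for illustration; they are not the study's data or its exact procedure.

```python
from typing import Dict, List


def accuracy_metrics(cm: List[List[int]], classes: List[str]) -> Dict[str, object]:
    """Compute producer's (PA), user's (UA) and overall (OA) accuracy in percent.

    Assumed (hypothetical) convention: rows of `cm` are reference (ground-truth)
    classes, columns are the classes assigned by the classifier.
    """
    n = len(classes)
    if len(cm) != n or any(len(row) != n for row in cm):
        raise ValueError("confusion matrix must be square and match the class list")

    diag = [cm[i][i] for i in range(n)]                                # correctly classified samples
    ref_totals = [sum(cm[i]) for i in range(n)]                        # row sums: reference samples per class
    map_totals = [sum(cm[i][j] for i in range(n)) for j in range(n)]   # column sums: samples labelled as class j
    total = sum(ref_totals)
    if total == 0:
        raise ValueError("confusion matrix contains no samples")

    # PA: share of a class's reference samples that were labelled correctly
    # (the complement of the omission error)
    pa = {c: (100.0 * diag[i] / ref_totals[i]) if ref_totals[i] else float("nan")
          for i, c in enumerate(classes)}
    # UA: share of samples labelled as a class that truly belong to it
    # (the complement of the commission error)
    ua = {c: (100.0 * diag[i] / map_totals[i]) if map_totals[i] else float("nan")
          for i, c in enumerate(classes)}
    # OA: all correctly classified samples divided by all samples
    oa = 100.0 * sum(diag) / total
    return {"PA": pa, "UA": ua, "OA": oa}


if __name__ == "__main__":
    # Hypothetical 3-class matrix for illustration only; not data from this study.
    classes = ["Greenhouse", "Road", "Vegetation"]
    cm = [
        [45, 5, 0],
        [10, 40, 0],
        [0, 0, 50],
    ]
    m = accuracy_metrics(cm, classes)
    for c in classes:
        print(f"{c}: PA={m['PA'][c]:.2f} UA={m['UA'][c]:.2f}")
    print(f"OA={m['OA']:.2f}")
```

With this hypothetical matrix the script prints PA=90.00 and UA=81.82 for Greenhouse, PA=80.00 and UA=88.89 for Road, 100.00 for both Vegetation metrics, and OA=90.00. The example also shows why PA and UA can diverge sharply for one class, as they do for Building 1 and Road in the table: road samples labelled as greenhouse lower the greenhouse UA without affecting its PA.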

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Celik, S.; Koc-San, D. Greenhouse Detection from Color Infrared Aerial Image and Digital Surface Model. Proceedings 2020, 42, 29. https://doi.org/10.3390/ecsa-6-06548
