Real-Time Posture Control for a Robotic Manipulator Using Natural Human–Computer Interaction

Abstract

1. Introduction

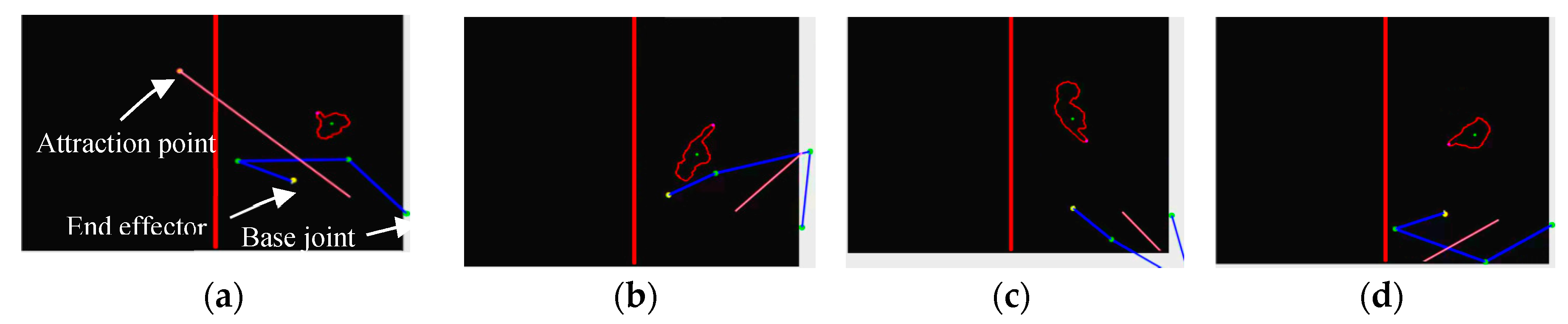

2. Teleoperation with Dedicated Hand for Posture Control—Browse and Select

3. Teleoperation with Posture Control Using a Single Hand—Attraction Point

| Algorithm 1. Searching for the posture closest to equilibrium based on the attraction point position |
|---|
| Input: Lp = list of possible arm postures; G = attraction point position |
| Output: A = possible posture that is closest to equilibrium |
| 1. minDistanceTotal = ∞ |
| 2. For each posture i in Lp: |
| 2.1. DistanceTotal = 0 |
| 2.2. For each movable joint j in i: DistanceTotal += (Distance(G, j))^3 |
| 2.3. If (DistanceTotal < minDistanceTotal): minDistanceTotal = DistanceTotal; A = i |
| 3. Return A |
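Algorithm 1 selects the candidate posture that minimizes the sum of the cubed distances between the attraction point and each movable joint. The following is a minimal Python sketch of that selection, not the authors' implementation. It assumes each posture is already given as the 3-D positions of its movable joints (forward kinematics applied beforehand), that Distance is Euclidean, and that the garbled "3" after Distance(G, j) is an exponent. The per-posture total is reset for every candidate and the running minimum starts at infinity, since without both the comparison would not rank postures correctly. The names `closest_posture_to_equilibrium`, `Posture`, and the `exponent` parameter are hypothetical.

```python
import math
from typing import Optional, Sequence, Tuple

Point = Tuple[float, float, float]
# Hypothetical representation: a posture is the list of 3-D positions of its
# movable joints, computed beforehand with the arm's forward kinematics.
Posture = Sequence[Point]


def closest_posture_to_equilibrium(
    postures: Sequence[Posture], attraction_point: Point, exponent: float = 3.0
) -> Optional[Posture]:
    """Return the posture minimizing sum(Distance(G, j) ** exponent) over its
    movable joints j, or None if no candidate posture is given.

    The default exponent of 3 is an assumption read from the extracted text.
    """
    best: Optional[Posture] = None
    min_distance_total = math.inf  # no posture evaluated yet
    for posture in postures:
        distance_total = 0.0  # reset the cost for every candidate posture
        for joint in posture:
            # Euclidean distance from the attraction point G to joint j
            distance_total += math.dist(attraction_point, joint) ** exponent
        if distance_total < min_distance_total:
            min_distance_total = distance_total
            best = posture
    return best


# Usage: two hypothetical two-joint postures and an attraction point.
candidates = [
    [(0.0, 0.0, 0.5), (0.0, 0.3, 0.8)],
    [(0.1, 0.0, 0.5), (0.2, 0.2, 0.6)],
]
G = (0.3, 0.3, 0.3)
best = closest_posture_to_equilibrium(candidates, G)
print(candidates.index(best))  # the second posture has the lower cubed-distance sum
```

Raising the distances to the third power penalizes a single far-away joint more heavily than a linear sum would, which favours postures whose joints all stay reasonably close to the attraction point.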

4. Experimental Results

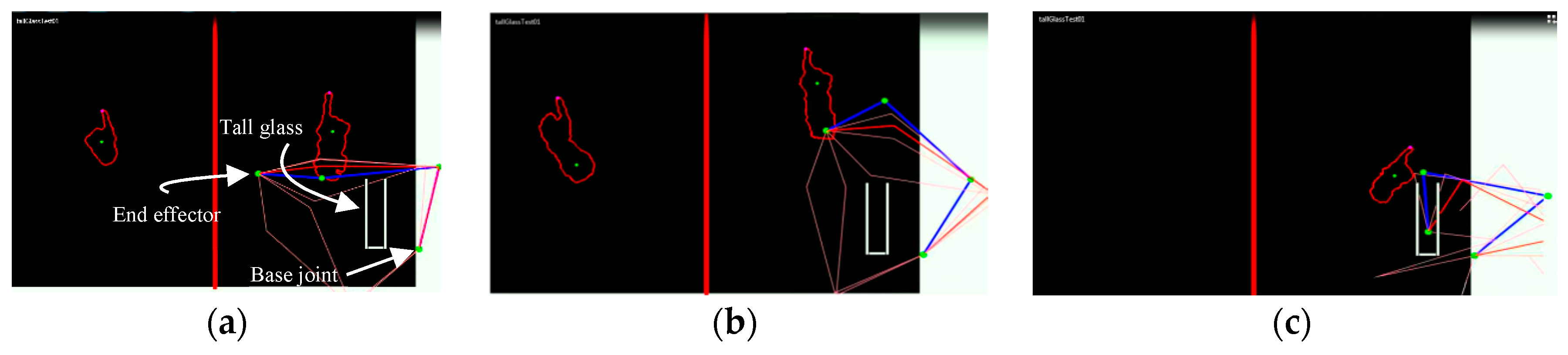

4.1. Static Obstacle Avoidance—The Tall Glass Test

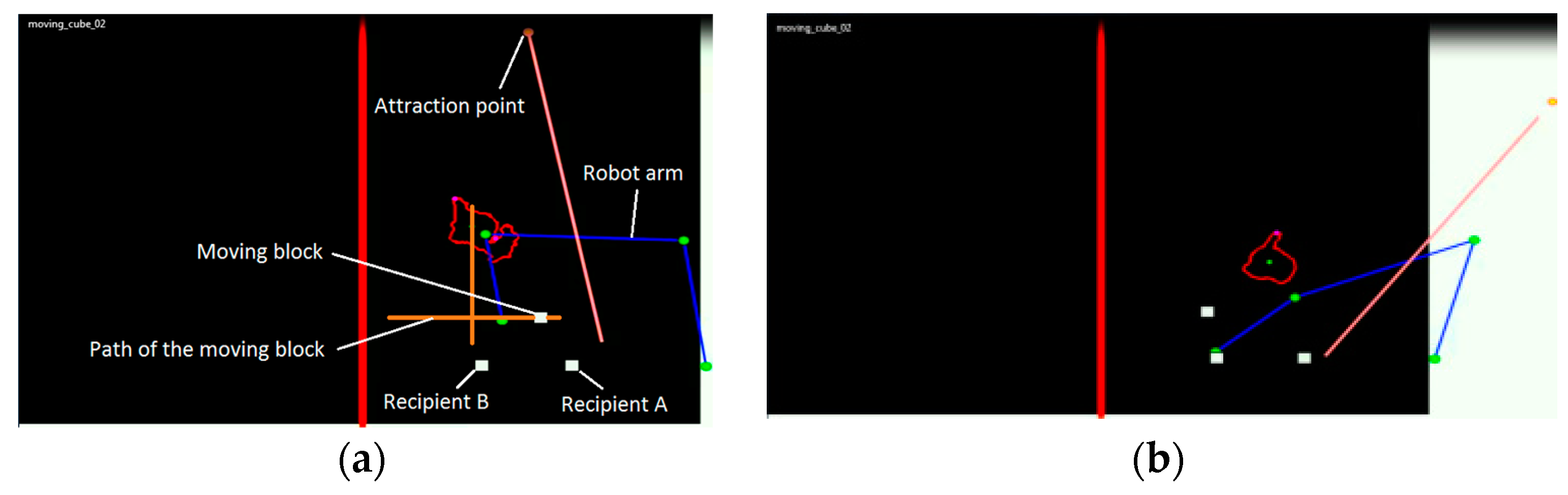

4.2. Dynamic Obstacle Avoidance—Moving Block

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Plouffe, G.; Payeur, P.; Cretu, A.-M. Real-Time Posture Control for a Robotic Manipulator Using Natural Human–Computer Interaction. Proceedings 2019, 4, 31. https://doi.org/10.3390/ecsa-5-05751