Smarty Pants: Exploring Textile Pressure Sensors in Trousers for Posture and Behaviour Classification †

Abstract

1. Introduction

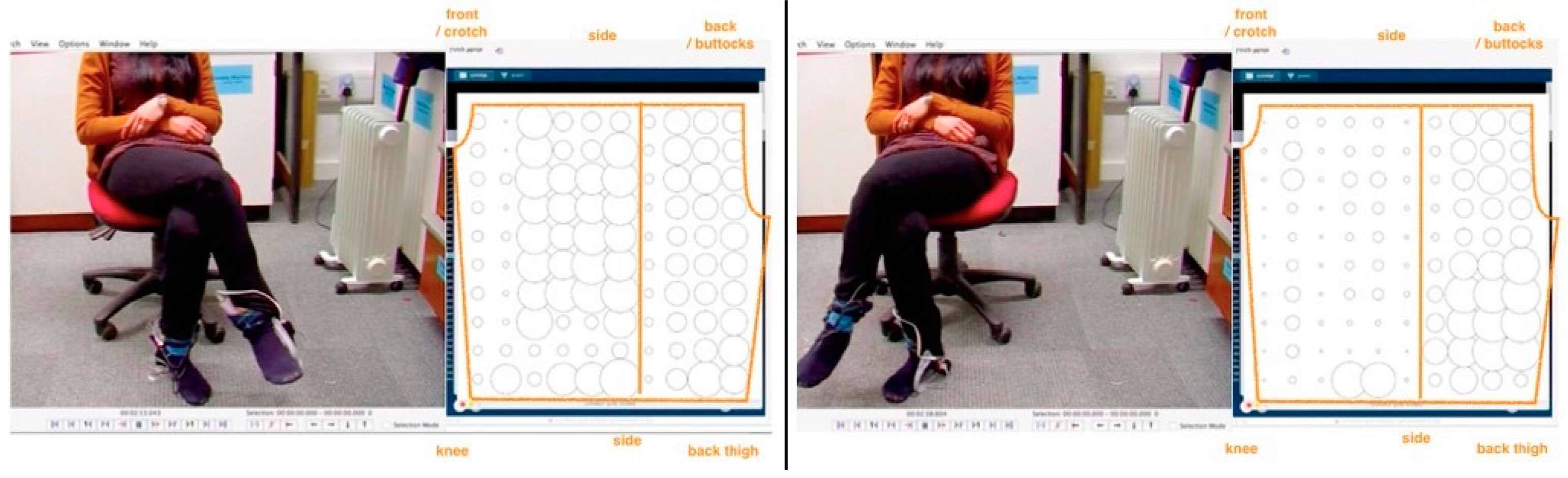

2. Designing Sensing Trousers

3. Methodology

| Study | Mode | Participants | Set-up | Software | Classifier | Classes |
|---|---|---|---|---|---|---|
| Study 1: Postures | single user | 6 | controlled | Weka, ELAN | Random Forest | 19 |
| Study 2: Behaviours | multi-party | 20 | spontaneous | Weka, ELAN | Random Forest | 4 + 1 |

4. Results

5. Discussion

Funding

Acknowledgments


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Skach, S.; Stewart, R.; Healey, P.G.T. Smarty Pants: Exploring Textile Pressure Sensors in Trousers for Posture and Behaviour Classification. Proceedings 2019, 32, 19. https://doi.org/10.3390/proceedings2019032019
