Abstract

Fall detection can improve the security and safety of older people by raising an alert when a fall occurs. Fall detection systems are mainly based on wearable sensors, ambient sensors, and vision. Each method has well-known advantages and limitations. Multimodal and data fusion approaches combine data sources in order to better describe falls. Publicly available multimodal datasets are needed to allow comparison between systems, algorithms and modal combinations. To address this issue, we present a publicly available dataset for fall detection that includes Inertial Measurement Units (IMUs), ambient infrared presence/absence sensors, and an electroencephalogram (EEG) helmet. It will allow human activity recognition researchers to run experiments with different combinations of sensors.

1. Introduction

Falls are the most common cause of disability and death in older people [1] around the world. The risk of falling increases with age, but it also depends on health status and environmental factors [2]. Along with preventive measures, it is also important to have fall detection solutions in order to reduce the time before a person who has suffered a fall receives assistance and treatment [3].

Fall detection can improve the security and safety of older people by raising an alert when a fall occurs. Surveys in the field of automatic fall detection [3,4,5] classify fall detection systems into three categories: approaches based on wearable sensors, ambient sensors, and vision. Each method has well-known advantages and limitations. Wearable sensors are sometimes obtrusive and uncomfortable; smartphones and smart watches have battery limitations and limited processing and storage. Although vision methods are cheap, unobtrusive, and require less cooperation from the person, they raise privacy issues, and environmental conditions can affect data collection.

In recent years, due to the increasing availability of different data modalities and the greater ease of acquiring them, there is a trend to use multimodal data to study different phenomena or systems of interest [6]. The main idea is that “Due to the rich characteristics of natural processes and environments, it is rare that a single acquisition method provides complete understanding thereof” [6]. Multimodal approaches and data fusion are also a trend in health systems. The combination of different data sources, processed through data fusion, is applied to improve the reliability and precision of fall detection systems. Koshmak et al. [7] presented the challenges and issues of fall detection research with a focus on multisensor fusion. The authors describe fall detection systems based on multisensor fusion approaches; however, each of them presents results on its own dataset, making comparison impossible. Igual et al. [8] point out the need for publicly available datasets with a great diversity of acquisition modalities to enable comparison between systems and the performance of new algorithms.

In this work, we present a publicly available multimodal dataset for fall detection. This is the first attempt in our ongoing project, and it considers four Inertial Measurement Units (IMUs), four infrared presence/absence sensors, and an electroencephalogram (EEG) helmet. This dataset can benefit researchers in the fields of wearable computing, ambient intelligence and sensor fusion. New machine learning algorithms can be tested with this dataset. It will also allow human activity recognition (HAR) researchers to run experiments with different combinations of sensors in order to determine the best placement of wearable and ambient sensors.

The rest of the paper is organized as follows. In Section 2 we review existing publicly available datasets for fall detection. Our UP-fall detection and activity recognition dataset is presented in Section 3. Experiments and results are shown in Section 4. Finally, conclusions and future work are discussed in Section 5.

2. Fall Detection Databases Overview

Mubashir et al. [5] divided the approaches for fall detection into three categories: wearable device-based, ambient sensor-based, and vision-based. Few datasets for fall detection are currently publicly available: sensor-based [7,9,10,11,12], vision-based [13,14], and multimodal [15,16,17]. In this section, we present an overview of datasets based on sensors or multimodal approaches. For more extensive surveys that include vision-based approaches, see [3,6,18].

2.1. Sensor-Based Fall Databases

The DLR (German Aerospace Center) dataset [19] is a collection of data from one Inertial Measurement Unit (IMU) worn on the belt by 16 people (6 female and 5 male) whose ages ranged from 20 to 42 years. They performed seven activities (walking, running, standing, sitting, lying, falling and jumping). The type of fall was not specified.

The recent version of the MobiFall fall detection dataset [20] was developed by the Biomedical Informatics and eHealth Laboratory of the Technological Educational Institute of Crete. The authors captured data generated by the inertial sensors of a smartphone (3D accelerometer and gyroscope) from 24 subjects, seventeen male and seven female, with an age range of 22–47 years. They recorded four types of falls and nine activities of daily living (ADL).

The tFall dataset, developed by EduQTech (Education, Quality and Technology) at Universidad de Zaragoza [21], collected data from ten participants, three female and seven male, aged 20 to 42 years. The data were obtained from two smartphones carried by the subjects in everyday life for ADL and for eight types of simulated falls.

The Project Gravity dataset [11] was acquired from a smartphone and a smart watch worn in the thigh pocket and on the wrist, respectively. Three young participants (aged 22 to 32) performed seven ADL and 12 types of falls, each simulated as a natural ADL followed by a sudden fall.

SisFall [22] is a dataset of falls and ADL obtained with a self-developed device comprising a Kinetis MKL25Z128VLK4 microcontroller, an Analog Devices ADXL345 accelerometer, a Freescale MMA8451Q accelerometer, and an ITG3200 gyroscope. The device was attached to a belt. The dataset was generated with the collaboration of 38 participants, including elderly people and young adults, aged 19 to 75 years. The authors selected 19 ADL and 15 types of falls simulated while performing another ADL. It is important to note that this is the only dataset that includes elderly people in its trials.

These datasets include only wearable sensors, whether commercial, self-developed or embedded in smart devices. Other context-aware approaches exist, but they are mostly vision-based or multimodal. A few authors use only a near-field imaging sensor [23], pressure and infrared sensors [24], or only infrared sensors [25]. To our knowledge, no dataset with binary ambient sensors or other types of sensors for fall detection is publicly available.

2.2. Multimodal Fall Databases

The UR (University of Rzeszow) fall detection dataset [26] was generated by collecting data from an IMU connected via Bluetooth and two Kinects connected via USB. Five volunteers were recorded performing 70 sequences of falls and ADL. Some of these are fall-like activities in typical rooms. There were two kinds of falls: falling from a standing position and falling from sitting on a chair. Each record contains sequences of depth and RGB images from the two cameras and raw accelerometer data.

The Berkeley Multimodal Human Action Database (MHAD) [27], presented in [17], contains 11 actions performed by 12 volunteers (7 male and 5 female). Although the dataset recorded very dynamic actions, falls were not considered. Nevertheless, this dataset is important because the actions were captured simultaneously with an optical motion capture system, six wireless accelerometers and four microphones.

3. UP-Fall Detection and Activity Recognition Database

We present a dataset for fall detection that includes data on ADL and simulated falls collected from wearable and ambient sensors. Four volunteers performed the activities in a controlled environment. The dataset is provided in CSV format and is publicly available at https://sites.google.com/up.edu.mx/har-up.

3.1. Data Acquisition System for Fall Detection and Activity Recognition

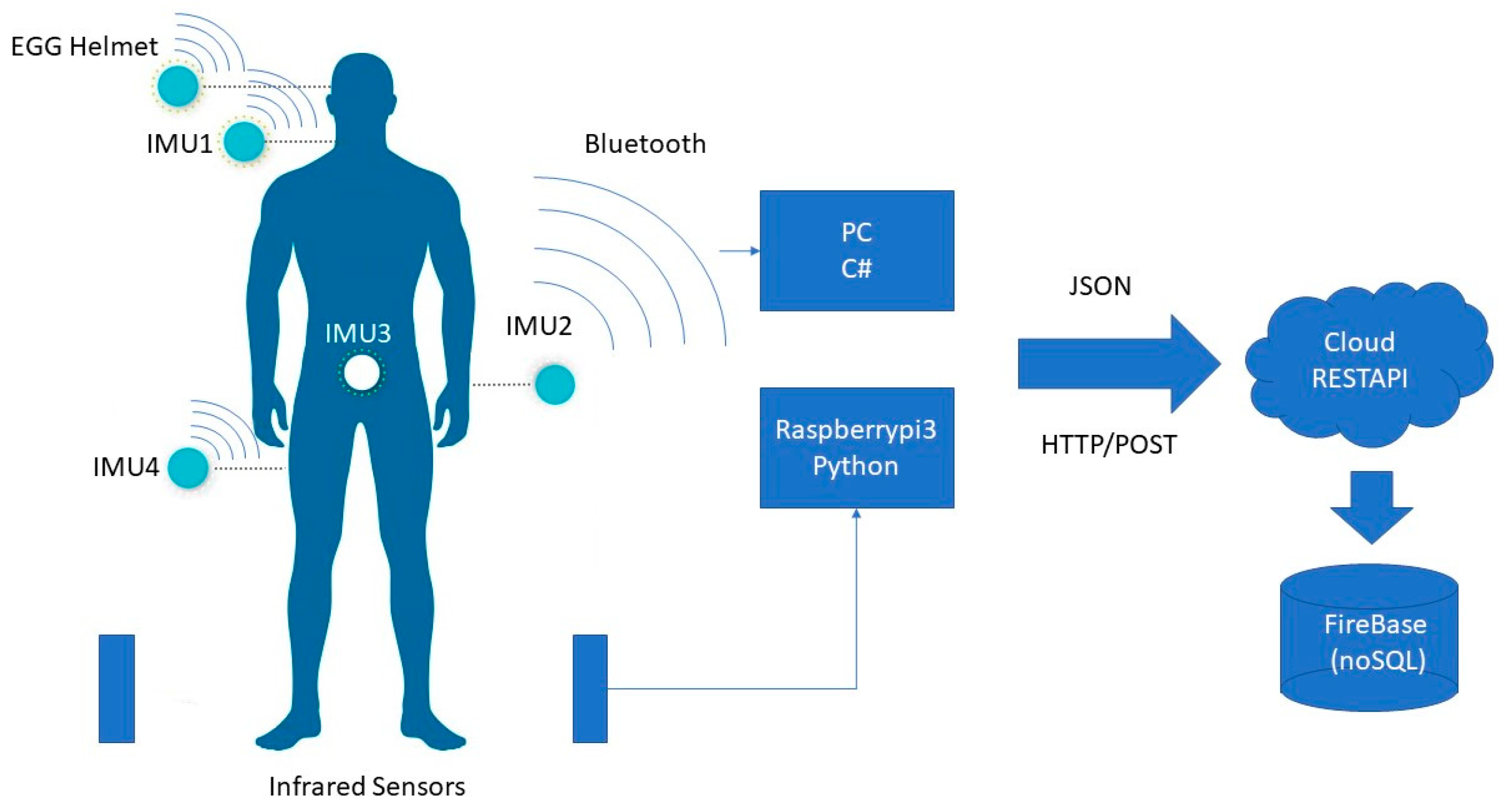

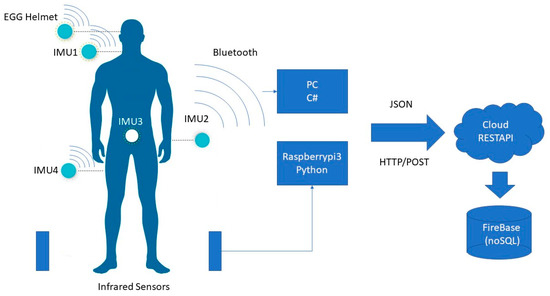

The main objective of this data acquisition system is to sense data from different body parts (neck, waist, thigh, and wrist), EEG signals, and absence/presence in a delimited area. All data are converted to a JavaScript Object Notation (JSON) structure and then sent to Firebase (a NoSQL database) through a REST API. This can yield rich information for classifying and detecting falls and for predicting ADL.

The components used for this acquisition system were:

- 4 Inertial Measurement Units (IMUs).

- 1 Electroencephalogram Helmet (EEG)

- 4 (absence/presence) Ambient Infrared sensors.

- Raspberry Pi 3

- PC and External USB Bluetooth

Data acquisition for this project consists of three steps: sensing, collection, and storage.

- Sensing. All components start sensing at the same time. The data sensed are: IMUs: acceleration (X, Y and Z), ambient light (L) and angular velocity (X, Y and Z, in rad/s); EEG helmet: EEG signals; infrared sensors: absence/presence as a binary value.

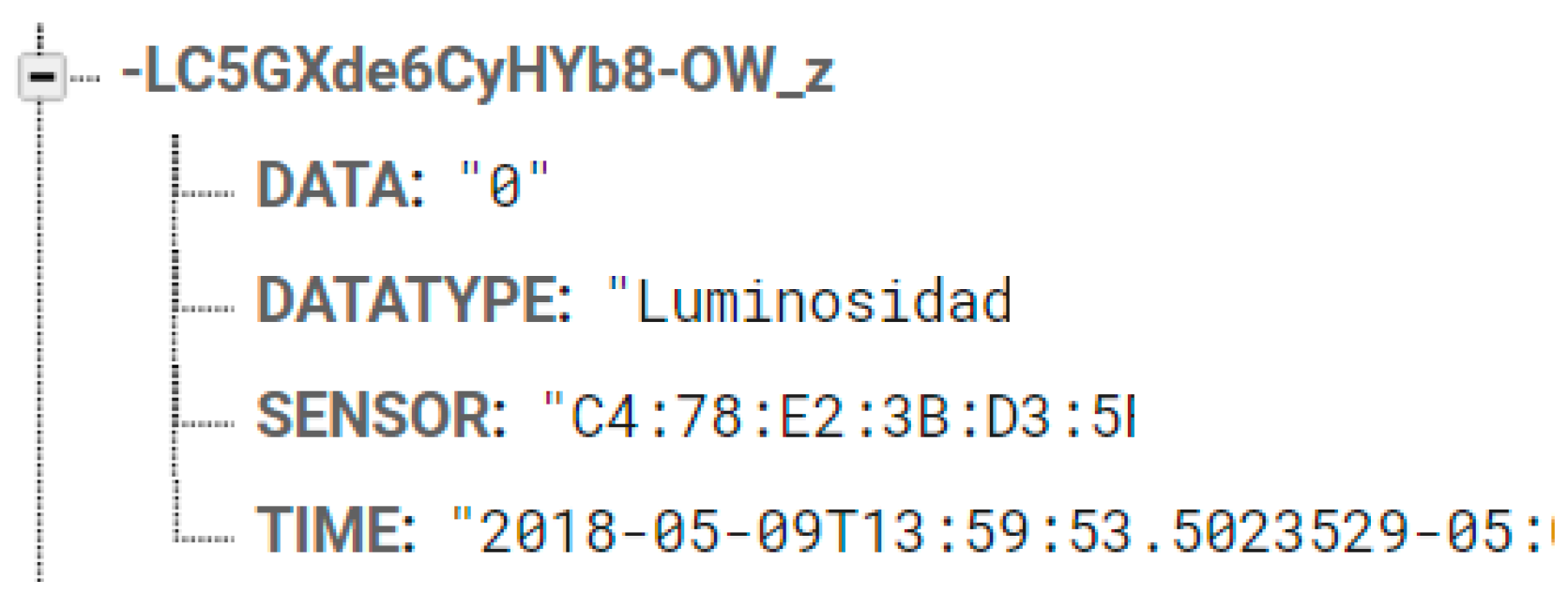

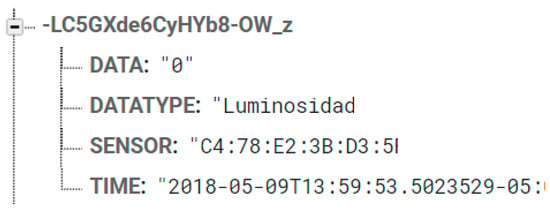

- Collection. In the collection phase, data are gathered from the IMUs and the EEG helmet over a Bluetooth connection and converted to a JSON structure (Figure 1) to be sent to the cloud (Firebase). This is done by a C# program that uses the SDKs of the IMUs and the EEG helmet, which provide full access to the sensor data. The infrared sensors are connected directly to a Raspberry Pi 3, on which a Python program reads the data and converts them to JSON in order to store them in the cloud.

Figure 1. JSON structure is: DATA, DATATYPE, SENSOR, TIME.

- Storage. Once the information has been collected and packaged in the JSON structure, it is sent via a POST request to be stored in Firebase (a NoSQL database). To achieve this connection, a REST API was configured to store every POST request in the Firebase database as a new record.
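The collection and storage steps above can be illustrated with a minimal Python sketch. It packs one reading into the four fields named in Figure 1 and prepares a JSON POST request without sending it. The endpoint URL, the sensor and data-type labels, and the helper names `build_record` and `build_post_request` are assumptions made for illustration; the paper does not give the actual database path, label vocabulary, or client code.

```python
import json
import time
import urllib.request

# Hypothetical endpoint: the paper does not give the database URL or path.
FIREBASE_URL = "https://example-project.firebaseio.com/readings.json"


def build_record(sensor, datatype, data, timestamp=None):
    """Pack one reading into the four fields named in Figure 1."""
    return {
        "DATA": data,
        "DATATYPE": datatype,
        "SENSOR": sensor,
        "TIME": timestamp if timestamp is not None else time.time(),
    }


def build_post_request(record, url=FIREBASE_URL):
    """Prepare (but do not send) a JSON POST request for one record."""
    body = json.dumps(record).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Illustrative labels and values only; not taken from the dataset.
record = build_record("IMU_WRIST", "ACCELEROMETER_X", 0.98, timestamp=1525910400.0)
request = build_post_request(record)
print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))
# Sending would be urllib.request.urlopen(request), which needs a real endpoint.
```

Keeping request construction separate from sending makes the payload easy to inspect and test offline before any data reach the cloud.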

The IMUs were positioned on the neck, belt, thigh, and wrist. These positions were chosen after reviewing those most commonly used for fall detection according to the literature [3]. The acquisition system is shown in Figure 2.

Figure 2. Acquisition system.

3.2. Database Description

Four volunteers, two female and two male, aged 22–58 years, each performed three trials of six activities of daily living (ADL) and five types of falls, shown in Table 1. The volunteers have different body complexions and ages, as presented in Table 2. Although the simulations in this early stage of our work were performed by a small group of volunteers, we sought to include both young and mature volunteers with different body complexions. As falls are very rare events in real life compared with all the activities performed even in a year, six ADL were included to help classifiers discriminate falls from ADL.

Table 1. Activities and falls included in the dataset.

Table 2. Volunteers’ body complexions and ages.

The types of falls simulated by the volunteers were chosen after a review of the related works reported in Section 2, namely: falling using the hands, falling forward on the knees, falling backwards, falling sideward, and falling when sitting in an empty chair. These falls are particularly common in the elderly.

Data are separated by subject, activity, and trial, and are delivered in CSV format. The dataset was collected in a laboratory, and conditions were deliberately not fully controlled, with the aim of simulating conditions as realistic as possible. The different sensor signals were synchronized for temporal alignment.
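The paper does not state how the signals were synchronized. As one possible illustration, the sketch below aligns a sparse binary infrared stream to IMU timestamps by carrying the last observed value forward. The function name `align_to_reference` and all timestamps and values are hypothetical, and the authors' actual procedure may differ.

```python
from bisect import bisect_right


def align_to_reference(ref_times, sensor_times, sensor_values):
    """For each reference timestamp, take the latest sensor value at or before it.

    Returns None where no sensor sample has been seen yet.
    Both time lists must be sorted in ascending order.
    """
    aligned = []
    for t in ref_times:
        # Index of the last sensor sample whose time is <= t, or -1 if none.
        idx = bisect_right(sensor_times, t) - 1
        aligned.append(sensor_values[idx] if idx >= 0 else None)
    return aligned


# Hypothetical timestamps in seconds: IMU samples and sparser infrared readings.
imu_times = [0.00, 0.05, 0.10, 0.15, 0.20]
ir_times = [0.04, 0.12]
ir_values = [0, 1]  # binary absence/presence

aligned_ir = align_to_reference(imu_times, ir_times, ir_values)
print(aligned_ir)
```

Carrying the last value forward suits binary presence sensors, whose state holds until the next change; continuous signals would more likely call for interpolation or resampling.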

4. Experiments and Results

The intention of our dataset is to provide a diversity of sensor information to allow comparison between systems and algorithms. It also allows experiments in which combinations of sensors are taken into account in order to determine whether considering both inertial and context data improves the performance of fall detection. In our experiments, infrared sensors provide context data.

To show an example of the experiments that can be done with our dataset, two types of experiments were designed. For the first series of experiments, feature datasets were prepared only with data extracted from the IMUs. The second series of experiments was done using the whole dataset, which also includes context and EEG helmet information. Three different feature datasets were used for each series of experiments, corresponding to window sizes of 2, 3 and 5 s for feature extraction.

4.1. Feature Extraction

As mentioned above, in order to apply feature extraction, the time series from the sensors were split into windows of 2, 3 and 5 s for experimentation. Relevant temporal and frequency features were extracted from the original raw signals for each window size, generating three datasets. These features are shown in Table 3. The last three features are tags identifying the subject, activity and trial number.

Table 3. Extracted features.
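The windowing step can be sketched as follows. This is a minimal illustration, not the authors' pipeline: the sampling rate, the use of non-overlapping windows, the particular features (mean, standard deviation, minimum, maximum, dominant frequency) and all function names are assumptions, since the exact feature list belongs to Table 3. The signal is synthetic.

```python
import cmath
import math
import statistics


def split_windows(samples, rate_hz, window_s):
    """Split a signal into consecutive non-overlapping windows of window_s seconds."""
    size = int(rate_hz * window_s)
    return [samples[i:i + size] for i in range(0, len(samples) - size + 1, size)]


def dominant_frequency(window, rate_hz):
    """Frequency (Hz) of the largest non-DC component, via a naive DFT."""
    n = len(window)
    best_k, best_mag = 0, 0.0
    for k in range(1, n // 2 + 1):
        coeff = sum(
            x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(window)
        )
        if abs(coeff) > best_mag:
            best_k, best_mag = k, abs(coeff)
    return best_k * rate_hz / n


def extract_features(window, rate_hz):
    """A few temporal and frequency features for one window."""
    return {
        "mean": statistics.fmean(window),
        "std": statistics.pstdev(window),
        "min": min(window),
        "max": max(window),
        "dominant_freq_hz": dominant_frequency(window, rate_hz),
    }


# Synthetic 10 s signal: a 2 Hz sine sampled at an assumed 20 Hz.
RATE_HZ = 20
signal = [math.sin(2 * math.pi * 2 * i / RATE_HZ) for i in range(10 * RATE_HZ)]

for window_s in (2, 3, 5):
    windows = split_windows(signal, RATE_HZ, window_s)
    features = [extract_features(w, RATE_HZ) for w in windows]
    print(window_s, len(windows), features[0])
```

Running the same extraction for each window size yields one feature dataset per size, which mirrors the three datasets described above. The naive DFT is quadratic in the window length; an FFT library would be the usual choice for real sampling rates.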

4.2. Classification

The following classifiers were used in the training process: Linear Discriminant Analysis (LDA), CART Decision Trees (CART), Gaussian Naïve Bayes (NB), Support Vector Machines (SVM), Random Forest (RF), K Nearest Neighbor (KNN), and Neural Networks (NN). A summary of the accuracy obtained with each machine learning method in the training process is presented in Table 4.

Table 4. Results from training process.

Although the difference between using IMUs alone and IMUs with context sensors is quite small, it is interesting to observe that, with no enhancements to the dataset or to the feature extraction, adding contextual information to this particular dataset improves the performance of human activity recognition. The simulated conditions may be a factor behind the low metrics of the simpler machine learning methods.

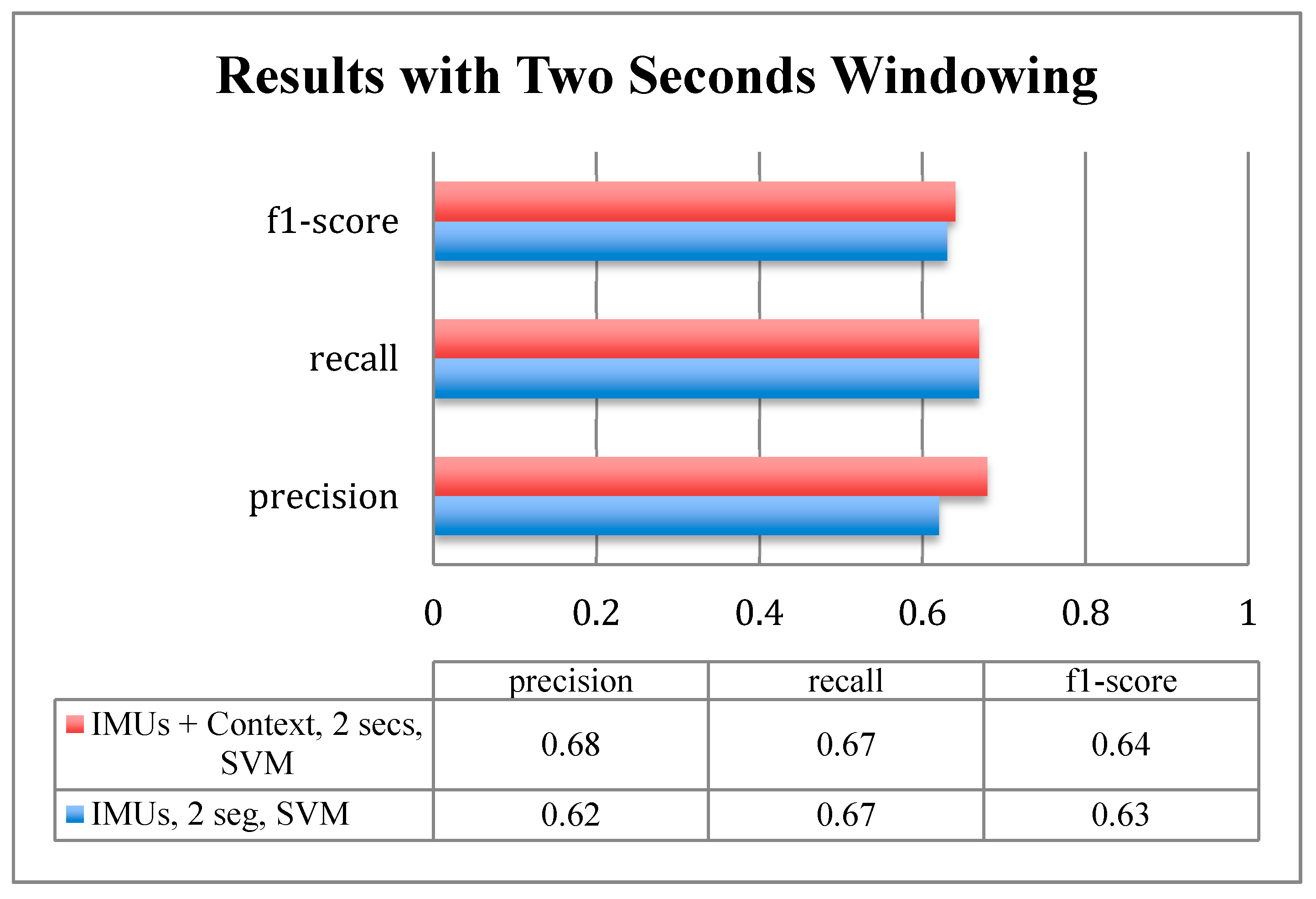

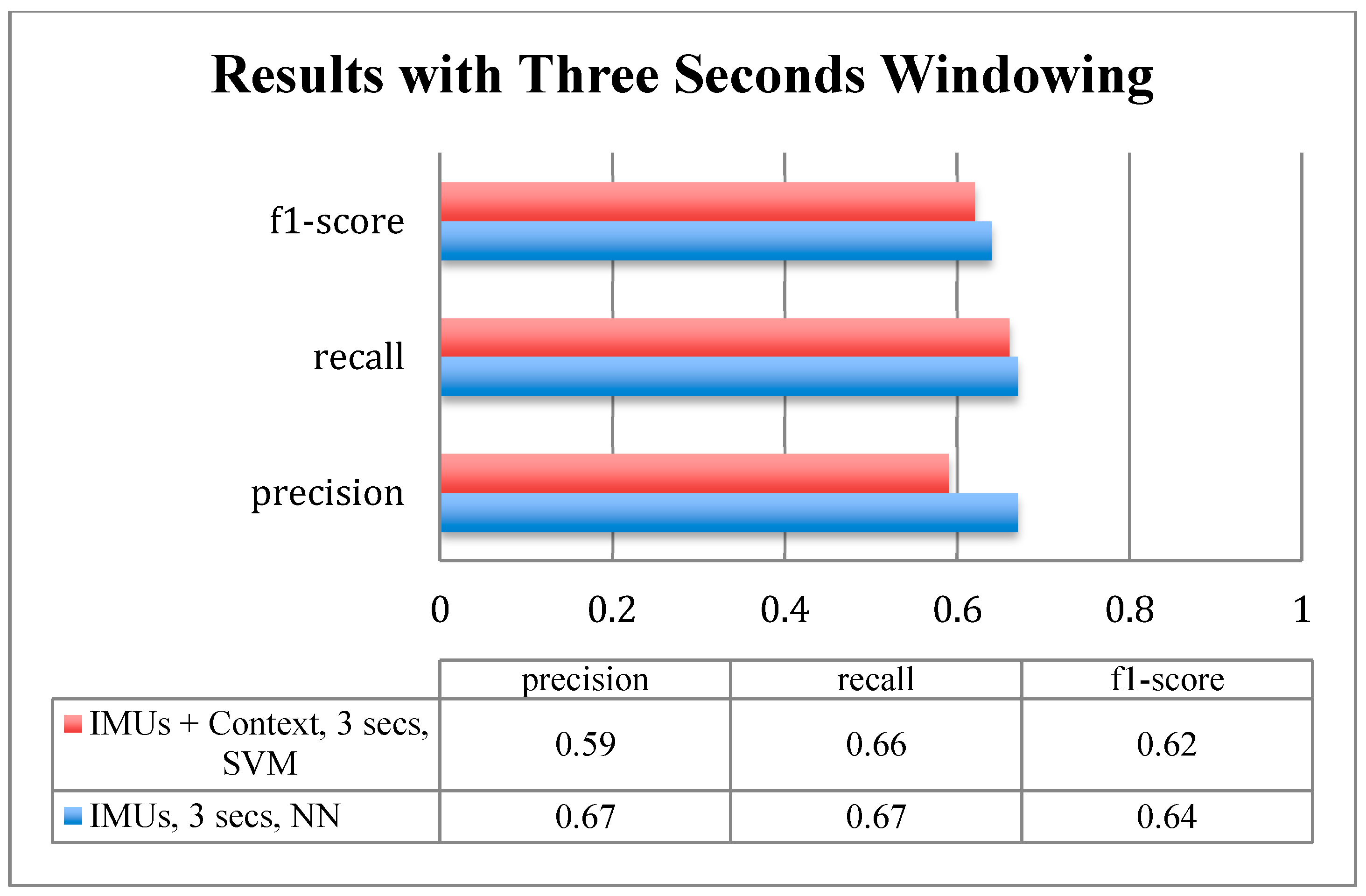

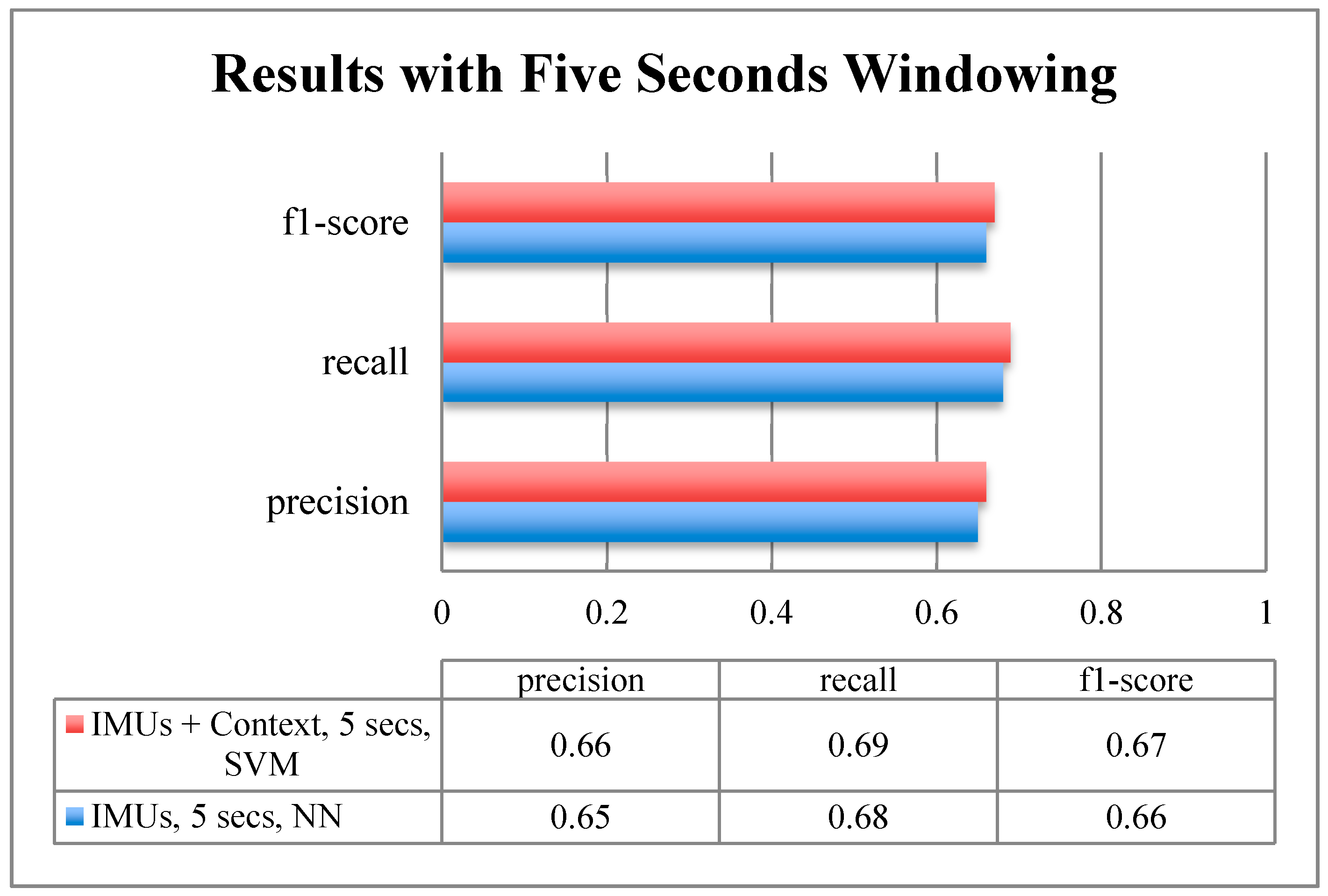

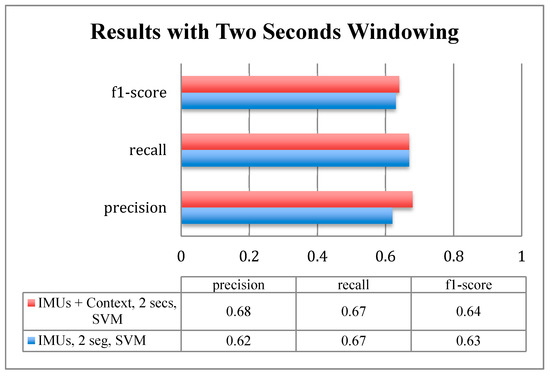

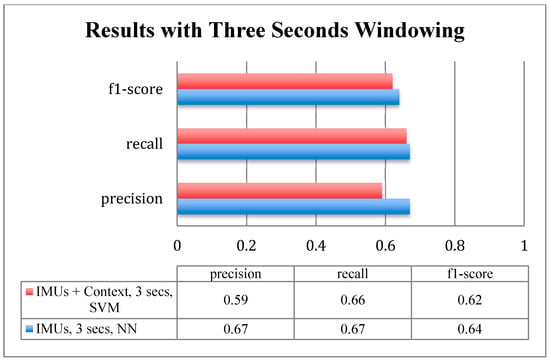

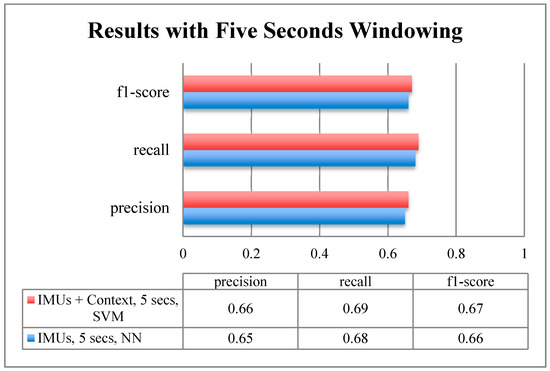

The results of predictions on validation sets using the classifiers with the best performance in training (i.e., SVM and NN) are presented in Figure 3, Figure 4 and Figure 5. Each figure compares both experiments, IMU data only and IMUs plus context, for the same window size.

Figure 3. Average results of prediction with two seconds windowing.

Figure 4. Average results of prediction with three seconds windowing.

Figure 5. Average results of prediction with five seconds windowing.

Figure 3 shows the average precision, recall and F1-score of prediction on validation sets created with two-second windowing. We can see a small improvement in precision and F1-score when using IMUs plus context data.

In Figure 4, on the contrary, we observe that performance worsened slightly when context information was added to the IMU data for the three-second windows. Nevertheless, in the experiments with five-second windowing, an improvement in the IMUs plus context scenario similar to that of the two-second case can be observed.
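For readers who want to reproduce this kind of comparison, the sketch below computes averaged precision, recall and F1-score from true and predicted labels. It uses an unweighted (macro) average over classes, which is an assumption: the paper does not say which averaging scheme was used. The function name `macro_scores` and the labels and predictions are invented for illustration.

```python
def macro_scores(y_true, y_pred):
    """Macro-averaged precision, recall and F1 over the classes in y_true."""
    labels = sorted(set(y_true))
    precisions, recalls, f1s = [], [], []
    for label in labels:
        pairs = list(zip(y_true, y_pred))
        tp = sum(t == label and p == label for t, p in pairs)
        fp = sum(t != label and p == label for t, p in pairs)
        fn = sum(t == label and p != label for t, p in pairs)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        precisions.append(precision)
        recalls.append(recall)
        f1s.append(f1)
    n = len(labels)
    return sum(precisions) / n, sum(recalls) / n, sum(f1s) / n


# Invented labels and predictions, for illustration only.
y_true = ["fall", "fall", "walk", "walk", "sit", "sit"]
y_pred = ["fall", "walk", "walk", "walk", "sit", "fall"]

precision, recall, f1 = macro_scores(y_true, y_pred)
print(round(precision, 4), round(recall, 4), round(f1, 4))
```

Because falls are rare relative to ADL, a macro average weights the fall classes as heavily as the frequent activities, which is one reason to report it alongside accuracy.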

Further experimentation can be done combining different placements for the purpose of finding the best combination and/or placement for fall detection.

5. Conclusions and Future Work

In this work, we presented a publicly available multimodal dataset for fall detection. The group of volunteers includes young and mature volunteers, two female and two male, with different body complexions. All data were captured with four IMUs, one electroencephalogram (EEG) helmet, and four infrared sensors. This dataset allows comparison for different purposes: fall detection system performance, new algorithms for fall detection, multimodal complementarity, and sensor placement.

As shown, the dataset is challenging; we therefore encourage the community to apply novel and robust machine learning methods to contextual human activity recognition.

In most cases, a slight improvement in performance was achieved when using the whole multimodal dataset, which includes IMUs and contextual data. We believe that better results can be obtained with more exhaustive tuning of the machine learning models. However, the main goal of our experimentation is to show an example of how the dataset can be used.

In order to improve the diversity of the multimodal dataset, new modality acquisition frameworks will be added, namely cameras and microphones. Further experimentation must be done to verify the complementarity of the various types of data.

Funding

This research has been funded by Universidad Panamericana through the grant “Fomento a la Investigación UP 2017”, under project code UP-CI-2017-ING-MX-02.

References

- Gale, C.R.; Cooper, C.; Sayer, A. Prevalence and risk factors for falls in older men and women: The English Longitudinal Study of Ageing. Age Ageing 2016, 45, 789–794.

- Alshammari, S.A.; Alhassan, A.M.; Aldawsari, M.A.; Bazuhair, F.O.; Alotaibi, F.K.; Aldakhil, A.A.; Abdulfattah, F.W. Falls among elderly and its relation with their health problems and surrounding environmental factors in Riyadh. J. Fam. Commun. Med. 2018, 25, 29.

- Igual, R.; Medrano, C.; Plaza, I. Challenges, issues and trends in fall detection systems. Biomed. Eng. Online 2013, 12, 66.

- Noury, N.; Fleury, A.; Rumeau, P.; Bourke, A.K.; Laighin, G.O.; Rialle, V.; Lundy, J.E. Fall detection-principles and methods. In Proceedings of the 29th Annual International Conference of the Engineering in Medicine and Biology Society, Lyon, France, 22–26 August 2007; pp. 1663–1666.

- Mubashir, M.; Shao, L.; Seed, L. A survey on fall detection: Principles and approaches. Neurocomputing 2013, 100, 144–152.

- Lahat, D.; Adali, T.; Jutten, C. Multimodal data fusion: An overview of methods, challenges, and prospects. Proc. IEEE 2015, 103, 1449–1477.

- Koshmak, G.; Loutfi, A.; Linden, M. Challenges and issues in multisensor fusion approach for fall detection. J. Sens. 2016.

- Igual, R.; Medrano, C.; Plaza, I. A comparison of public datasets for acceleration-based fall detection. Med. Eng. Phys. 2015, 37, 870–878.

- Casilari, E.; Santoyo-Ramón, J.A.; Cano-García, J. Analysis of public datasets for wearable fall detection systems. Sensors 2017, 17, 1513.

- Frank, K.; Nadales, V.; Robertson, M.J.; Pfeifer, T. Bayesian recognition of motion related activities with inertial sensors. In Proceedings of the 12th ACM International Conference Adjunct Papers on Ubiquitous Computing-Adjunct, Copenhagen, Denmark, 26–29 September 2010.

- Vavoulas, G.; Pediaditis, M.; Chatzaki, C.; Spanakis, E.G.; Tsiknakis, M. The mobifall dataset: Fall detection and classification with a smartphone. Int. J. Monit. Surveillance Technol. Res. 2014, 2, 44–56.

- Medrano, C.; Igual, R.; Plaza, I.; Castro, M. Detecting falls as novelties in acceleration patterns acquired with smartphones. PLoS ONE 2014, 9, e94811.

- Vilarinho, T.; Farshchian, B.; Bajer, D.; Dahl, O.; Egge, I.; Hegdal, S.S.; Lønes, A.; Slettevold, J.N.; Weggersen, S.M. A combined smartphone and smartwatch fall detection system. In Proceedings of the 2015 IEEE International Conference on Computer and Information Technology; Ubiquitous Computing and Communications; Dependable, Autonomic and Secure Computing; Pervasive Intelligence and Computing (CIT/IUCC/DASC/PICOM), Liverpool, UK, 26–28 October 2015; pp. 1443–1448.

- Sucerquia, A.; López, J.D.; Vargas-Bonilla, J.F. SisFall: A fall and movement dataset. Sensors 2017, 17, 198.

- Kepski, M.; Kwolek, B.; Austvoll, I. Fuzzy inference-based reliable fall detection using kinect and accelerometer. In Artificial Intelligence and Soft Computing of Lecture Notes in Computer Science; Springer: Berlin, Germany, 2012; pp. 266–273.

- Kwolek, B.; Kepski, M. Improving fall detection by the use of depth sensor and accelerometer. Neurocomputing 2015, 168, 637–645.

- Ofli, F.; Chaudhry, R.; Kurillo, G.; Vidal, R.; Bajcsy, R. Berkeley MHAD: A comprehensive multimodal human action database. In Proceedings of the 2013 IEEE Workshop on Applications of Computer Vision (WACV), Tampa, FL, USA, 15–17 January 2013; pp. 53–60.

- Khaleghi, B.; Khamis, A.; Karray, F.O.; Razavi, S.N. Multisensor data fusion: A review of the state-of-the-art. Inf. Fusion 2013, 14, 28–44.

- DLR dataset. Available online: www.dlr.de/kn/en/Portaldata/27/Resources/dokumente/04_abteilungen_fs/kooperative_systeme/high_precision_reference_data/Activity_DataSet.zip (accessed on 10 May 2018).

- MobiFall dataset. Available online: http://www.bmi.teicrete.gr/index.php/research/mobifall (accessed on 10 January 2016).

- EduQTech, tFall: EduQTech dataset. Published July 2013. Available online: http://eduqtech.unizar.es/fall-adl-data/ (accessed on 10 May 2018).

- Sistemas Embebidos e Inteligencia Computacional, SISTEMIC: SisFall Dataset. Available online: http://sistemic.udea.edu.co/investigacion/proyectos/english-falls/?lang=en (accessed on 10 May 2018).

- Rimminen, H.; Lindström, J.; Linnavuo, M.; Sepponen, R. Detection of falls among the elderly by a floor sensor using the electric near field. IEEE Trans. Inf. Technol. Biomed. 2010, 14, 1475–1476.

- Tzeng, H.W.; Chen, M.Y.; Chen, J.Y. Design of fall detection system with floor pressure and infrared image. In Proceedings of the 2010 International Conference on System Science and Engineering (ICSSE), Taipei, Taiwan, 1–3 July 2010; pp. 131–135.

- Mastorakis, G.; Makris, D. Fall detection system using Kinect’s infrared sensor. J. Real-Time Image Process. 2012, 1–12.

- URFD University of Rzeszow Fall Detection Dataset. Available online: http://fenix.univ.rzeszow.pl/mkepski/ds/uf.html (accessed on 10 May 2018).

- Teleimmersion Lab, University of California, Berkeley, 2013, Berkeley Multimodal Human Action Database (MHAD). Available online: http://tele-immersion.citris-uc.org/berkeley_mhad (accessed on 10 May 2018).

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).