Abstract

This study investigates how remote multiplayer gameplay, enabled through Augmented Reality (AR), transforms spatial decision-making and enhances player experience in a location-based augmented reality game (LBARG). A remote multiplayer handheld-based AR game was designed and evaluated on how it influences players’ spatial decision-making strategies, engagement, and gameplay experience. In a user study involving 60 participants, we compared remote gameplay in our AR game with traditional hide-and-seek. We found that AR significantly transforms traditional gameplay by introducing different spatial interactions, which enhanced spatial decision-making and collaboration. Our results also highlight the potential of AR to increase player engagement and social interaction, despite the challenges posed by the added navigation complexities. These findings contribute to the engaging design of future AR games and beyond.

1. Introduction

Location-based games (LBGs) create gaming experiences by using the player’s real-world (RW) indoor or outdoor locations, enabling interaction with the physical environment through networked interfaces [1]. Augmented Reality (AR) can enhance these experiences by integrating virtual elements into the RW [2], making interactions more engaging, and allowing players to experience the game in a more contextually rich environment [2,3]. Such location-based augmented reality games (LBARGs) use the player’s physical location to initiate, continue, and improve gameplay [4]; nevertheless, they often do not actually use the unique characteristics of these locations in the gameplay [5,6]. Consider a LBARG, for example, that allows a number of participants in different locations to share their surroundings and engage in a game similar to hide-and-seek. Engaging in physical exploration of the location, exchanging virtual resources between locations, and creating unique gameplay experiences may be possible with this kind of game. Play may become more dynamic and participatory if RW characteristics are incorporated as resources and game elements into the LBARG.

The integration of AR into such games, however, introduces several unique challenges. Players must navigate and interact with both real and virtual elements, requiring them to develop new spatial decision-making strategies. Moreover, the remote nature of these games adds further complexity, as players must rely on virtual cues and AR interfaces to make spatial decisions [7]. Understanding how these factors influence gameplay is crucial for designing engaging gameplay experiences.

This study describes the design and evaluation of a remote multiplayer AR hide-and-seek game. The study focuses on how such a game can influence players’ spatial decision-making strategies, engagement, and overall gameplay experience. Through a user study involving 60 participants, the study investigated how AR changes the way players interact with virtual and physical elements in a shared remote environment and with each other. The contributions of this paper include the following:

- An analysis of how AR impacts spatial decision-making and player experience in remote multiplayer games.

- Insights into the design of AR game mechanics connecting remote players and locations that enhance game engagement.

Unlike prior studies focusing on co-located AR play, this work investigates AR-specific game mechanics that enable engaging remote gameplay. Key mechanics include virtual object manipulation and the real-time representation of remote players within shared virtual spaces. This study demonstrates how AR can transform traditional game formats, such as hide-and-seek, into novel collaborative gaming experiences by bridging the gap between physical and virtual interactions. This work aims to assist AR game developers in designing engaging experiences for players connecting across distances. The paper provides actionable recommendations on using AR mechanics to enhance player engagement and interaction in remote environments.

2. Related Work

2.1. Location-Based Augmented Reality Games

LBGs are a genre that combines the RW environment of players with a virtual one to create a unique gaming experience [8,9]. Through networked interfaces, these games are made to allow players’ physical surroundings, whether they are outside or indoors, to be incorporated into gameplay [1]. Research has shown that LBGs can increase game engagement, facilitate learning, and foster social interactions [1,10]. Using AR [2], LBGs can further transform the RW environment into interactive playgrounds [3]. Building on the existing body of research, Alha et al. [3] provide an analysis of AR features in LBGs. Their findings reveal that AR is often used as a gimmick rather than a central gameplay element, hindered by technical glitches and limitations. Alha et al.’s work also provides critical insights into the current state of AR in gaming, emphasizing the need for better integration of AR game mechanics to fully realize AR’s potential in enhancing player experiences.

Another recent study [11] offers a detailed examination of Mobile Augmented Reality (MAR) video games, focusing on their potential to transform spatial experiences. The study analyzed 46 MAR games and found that AR is frequently underutilized, often appearing as an auxiliary feature rather than a central gameplay element. Their findings also highlight that most MAR games incorporate AR superficially, missing opportunities to integrate it into the core gameplay mechanics. Their findings highlight the gap between the technological potential of AR and its actual implementation in gaming, pointing to the need to explore it further to understand the capabilities of AR in enhancing gameplay experiences.

Bhattacharya et al. [12] explored how LBGs can be redesigned in a global pandemic, highlighting the importance of exploring remote gameplay experiences when physical co-presence is limited. Following this direction, another study [13] explored three different ways of representing remote locations in a remote multiplayer LBARG, i.e., window view, overlay view, and miniature view. The overlay view, superimposed on the player’s location, enhanced spatial presence more than the window view, which framed the remote location in a windowed display, and the miniature view, which presented the remote location as a small-scale model. Ziegfeld et al. [14] state that in such remote gameplay, having a high level of spatial presence is important as it helps players feel more connected to the remote location.

2.2. Player Experience in Remote Gameplay

Examining the user experience in remote gameplay is important for understanding how such games can promote game engagement, particularly in situations where players are physically separated but remain connected through shared virtual spaces, as studied by Rapp et al. [15] and Papangelis et al. [16]. For instance, the use of remote AR elements, such as virtual objects in the LabXscape prototype [17], demonstrates that, despite differences in technology, both AR and VR can foster deep engagement and social presence through interdependent roles and shared virtual environments.

Papangelis et al. [16] acknowledge that the perception of a location in a game addresses human territoriality and has the potential to significantly influence engagement and motivation to play LBGs using mobile phones. By allowing players to engage in gameplay at a location without being bound by geographical proximity, a new genre of LBGs opens up, offering innovative ways for connecting with each other and their locations.

2.3. Spatial Decision-Making

Spatial decision-making is a cognitive process that involves perceiving, interpreting, and acting upon spatial information to navigate, locate, and interact with objects and environments [18]. In real-world environments, spatial decision-making is also crucial for everyday activities such as navigation, object location, and spatial orientation [19].

On the other hand, AR integrates virtual elements with the physical world, offering unique opportunities for enhancing spatial decision-making [20]. AR can provide additional spatial cues that are not available in purely physical environments, potentially improving navigation and object location efficiency [21].

One study found that AR systems could enhance spatial understanding and performance in navigation tasks by providing contextual spatial information [22]. Billinghurst et al. [23] reported that participants using an AR navigation system performed better in spatial tasks than those relying solely on physical maps. However, a study by Livingston et al. [24] indicates that the cognitive load associated with processing AR information can hinder spatial decision-making. They found that while AR can provide valuable spatial information, the complexity of AR interfaces can lead to increased cognitive load and reduced performance in some tasks [24]. Based on the related work, we identify spatial decision-making as a construct that needs to be considered when evaluating a LBARG that supports real-time remote gameplay through connected locations.

2.4. Summary

While currently AR is mainly used in LBARGs as a gimmick, research has shown that it may also be a core gameplay element. In order to proceed beyond superficial features and truly foster player engagement, Alha et al. [3] highlight the need for the deep integration of AR mechanics into LBARGs. Furthermore, Ziegfeld et al. [14] highlight the importance of spatial presence in remote gameplay, demonstrating how AR’s characteristics may enhance players’ connections to remote locations. Following another study [13], such spatial presence for players can be specifically achieved by representing the remote location using an AR overlay mode. Furthermore, in line with the insights provided in Bhattacharya et al. [12], exploring how to integrate remote locations into the gameplay and how this may enrich the gaming experience by connecting players across locations is important to the field. Therefore, by examining player experience, we aim to identify the specific AR features that allow for social interaction and maintain high levels of engagement, particularly in remote settings. The study builds on this knowledge, focusing on how remote gameplay mechanics can connect players and foster game experience, leading to high engagement across distances.

According to previous work, understanding spatial decision-making in AR games is important for the development of remote LBARGs. This understanding impacts not only the creation of game elements that help users to navigate and engage with both AR and RW elements but also affects the overall gameplay experience and engagement. Therefore, this research aims to connect players across distant locations using novel AR game mechanics, while evaluating how those can affect players’ spatial decision-making. However, to the best of our knowledge, there has been no prior study that specifically investigates how the interaction between players and locations influences gameplay experiences within the context of LBARGs, particularly in exploring games that emphasize remote multiplayer gameplay.

3. Methodology

3.1. Game Scenario

Our study builds on the aforementioned insights and aims to explore spatial decision-making in the context of LBARGs. For this study, we selected a hide-and-seek game with a hot-and-cold variation [25]. This game was selected because it supports movement, object interaction, and decision-making about physical space. The game structure supports distributed play while keeping players socially connected. Prior work by Vetere et al. [26] presented a remote hide-and-seek game designed to connect family members at a distance. Their study shows that hide-and-seek allows players to interact socially and physically, even when separated. They note that “Distributed hide-and-seek is a game between humans. It mediates social interactivity to help build personal relationships” [26].

In this game scenario, one player (the ‘Hider’) hides an object, and the other player (the ‘Seeker’) searches for it, with the Hider providing clues by indicating ‘hot’ when the Seeker gets closer to the object and ‘cold’ when they move away from it. This dynamic provides a gameplay scenario to study how players collaborate in a multiplayer setup and how they make decisions based on spatial cues [27]. By developing an AR game to examine how a remote multiplayer AR hide-and-seek game influences players’ spatial decision-making compared to a co-located gameplay, this study aims to explore how to design AR game mechanics that incorporate AR’s capabilities to make use of the RW for the gameplay and enable remote collaboration between players. The research aims to answer the following primary research question.

- RQ: How does the interaction between players and shared remote locations in a remote augmented reality hide-and-seek game compare to interactions in a co-located real-world hide-and-seek game in terms of players’ spatial decision-making, game engagement, and player experience?

To address this research question, two hypotheses were formulated, grounded in the research gaps and opportunities identified in the literature.

- H1: Players will exhibit different hide-and-seek strategies (hide spot selection, search patterns, spatial decision-making) when playing remotely in AR compared to co-located physical play. This hypothesis is motivated by previous work highlighting how spatial decision-making is influenced by the characteristics of AR. As discussed above, AR introduces virtual spatial cues and overlays that differ from those in purely real-world settings, potentially altering how players perceive space, choose specific spots in a location to perform tasks, and execute movement-based strategies [20,22]. Furthermore, while AR can enhance spatial understanding [23], it may also increase cognitive load and change behavior in dynamic tasks such as hiding and seeking [24]. These factors suggest that remote AR environments will encourage different spatial strategies compared to co-located play.

- H2: Players will report higher levels of game engagement when playing remotely in AR compared to co-located physical play. This hypothesis is motivated by the literature that explores the role of AR in enhancing immersion, presence, and engagement, particularly in remote settings. Previous work reviewed how spatial presence and social cues facilitated by AR can increase players’ involvement even across physical distances [14,15]. Additionally, game prototypes such as “LabXscape” [17] show that remote AR play can support deep collaboration and enjoyment through asymmetrical roles and device-based interaction. These findings collectively suggest that AR-enabled remote play may foster greater engagement compared to co-located formats.

The study was designed with two experimental conditions as follows: RW hide-and-seek gameplay and AR hide-and-seek gameplay. The RW condition represents co-located gameplay that reflects the dynamics of hide-and-seek within a shared physical space. In contrast, the AR condition uses separate rooms to simulate a scenario of remote multiplayer LBARGs, where players interact in real time while being physically apart.

This comparative design enabled us to examine both the opportunities introduced by AR gameplay and the challenges associated with remote interactions. To address RQ, we focused on how AR influences spatial decision-making, player engagement, and the overall gameplay experience, three interrelated dimensions that are crucial for designing compelling LBARGs. These three dimensions have been repeatedly emphasized in prior work as essential to understanding interaction in LBARGs [10,18].

Spatial decision-making refers to the cognitive process by which players perceive, interpret, and act upon spatial cues in order to navigate or place objects within the game environment [18]. In AR games, this process is often augmented by virtual overlays, which can enhance or hinder perception depending on the clarity and context of the information presented [22,23]. Effective spatial decision-making becomes especially critical in remote LBARGs where players interact with unfamiliar virtual representations of real-world environments [20].

Together, spatial decision-making and engagement shape the overall experience, which encapsulates the player’s perceived usability, enjoyment, social connection, and sense of presence [12,16]. In this study, we evaluate these gameplay constructs in AR gameplay in comparison to co-located real-world gameplay.

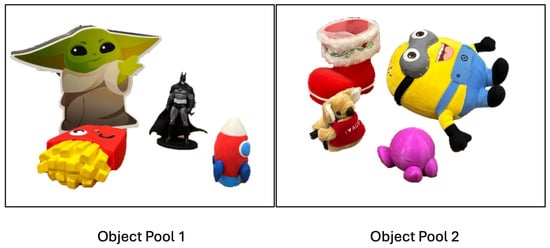

In both conditions, two pools of objects were arranged for hiding. We randomized the selection of the object pool for hiding in both gaming scenarios. Object Pool 1 (Figure 1) included “Baby Yoda”, “Batman”, “Rocket”, and “Fries”, while Object Pool 2 included “Koala”, “Minion”, “Kirby”, and “Christmas Shoe”. These were physical objects in the RW condition, and pre-scanned virtual objects were used in the AR condition.

Figure 1.

Object pools used in the study.

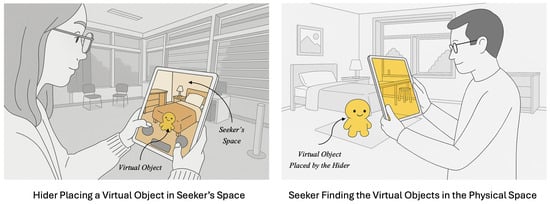

The study was conducted in two rooms, which were used in both the RW and AR versions of the hide-and-seek game. This setup was intentionally chosen to align with the study’s objective of exploring how AR can enable synchronous remote gameplay between physically separated players. In the RW condition, the game followed traditional hide-and-seek rules, where both players occupied the same physical space. By contrast, the AR condition maintained physical separation between players, creating geographically distributed play (see Figure 2).

Figure 2.

Remote hide-and-seek gameplay. The object’s position in the Seeker’s view is illustrative and may not precisely match the placement shown in the Hider’s view due to diagrammatic simplification.

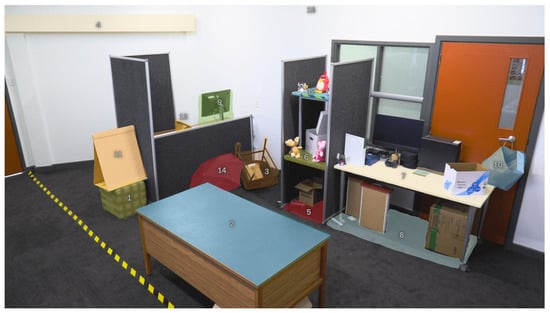

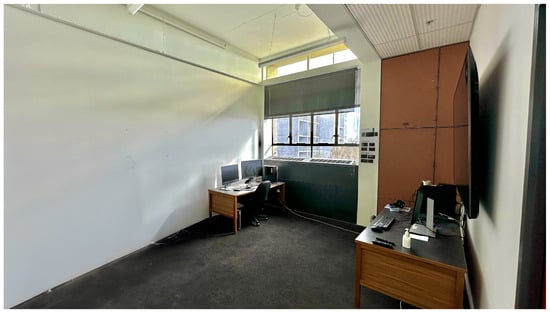

Room 1 (Figure 3) was used as the Seeker’s space in both conditions. It was arranged with various everyday objects and plausible hiding spots. Room 2 (Figure 4) was used in the AR condition and served as the Hider’s space. In the RW version, Room 2 was not used, since both players shared Room 1 in keeping with co-located gameplay norms.

Figure 3.

Gameplay space: Room 1 with Areas of Interest (AOIs) marked. The numbered labels correspond to hide spots listed in Table 3.

Figure 4.

Gameplay space: Room 2.

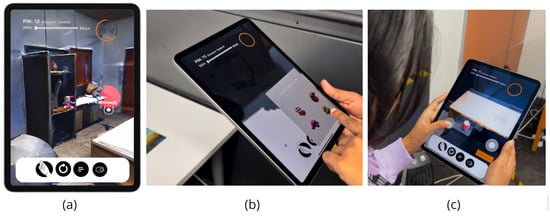

Figure 5a shows how the Hider gets to see the Seeker’s location in AR, how they select an object (Figure 5b), and how they position the object in the space (Figure 5c).

Figure 5.

(a) Hider’s view of Seeker’s space with a 1:1 AR representation. (b) Hider selects an object from the virtual inventory. (c) Hider uses virtual thumbsticks to position the object in the remote space.

The Hider’s view of the Seeker’s space is a 1:1 scale AR representation of the Seeker’s room, which is similar to the “overlay mode” explored in our previous user study [13]. The Hider has to physically move and orient accordingly in the RW space to navigate in the virtual space of the Seeker.

The Hider can click the first button from the left in the bottom bar to open the virtual object inventory, as shown in Figure 5b. Then, the Hider gets virtual thumbsticks on the screen to position the virtual object in the AR representation of the Seeker’s space as shown in Figure 5c. After placing the virtual object, the Hider needs to click the “Confirm Placement” button, and then the clock starts to count down for both players. We set the time limit to 5 min based on observations from 5 playtesting sessions conducted before the main experiment. This duration was chosen to balance gameplay difficulty, giving the Seeker enough time to explore without causing boredom.

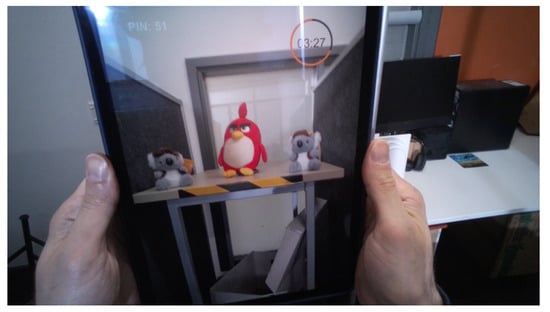

The Seeker then sees a countdown timer and must physically move around the room to search for the hidden object. The object appears in the exact location where the Hider placed it remotely. If the Seeker finds the object and taps on it within the allotted time, it is considered a win for the Seeker. Figure 6 shows how Seekers see the hidden objects in AR.

Figure 6.

Seekers find remotely hidden AR objects in the physical space.

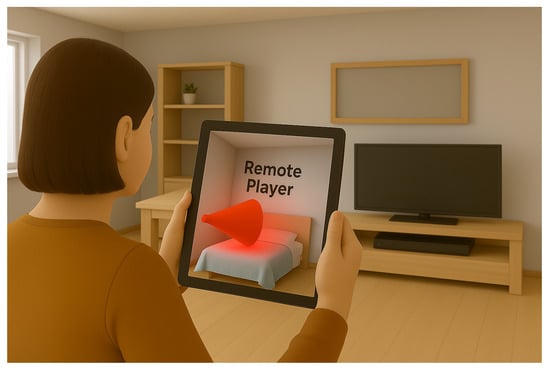

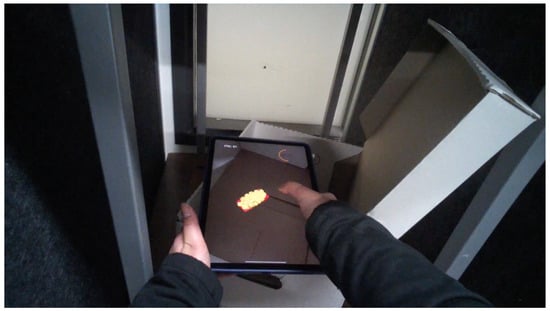

The game includes real-time voice communication between players, allowing them to exchange directional hints such as “hot” and “cold” during AR gameplay, even when physically apart. To help the Hider understand the Seeker’s position and viewing direction, we also introduced a 3D frustum labeled “remote player”, as illustrated in Figure 7. This frustum appears as a virtual cone-shaped object emitting red light. The cone represents the Seeker’s movement and orientation, while the light indicates their field of view (FOV), essentially showing where the Seeker is currently looking.

Figure 7.

A conceptual visualization of the 3D frustum as seen through the Hider’s tablet. The red cone (frustum) represents the Seeker’s orientation and FOV, rendered in the remote environment to assist in collaborative decision-making.
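The idea behind the frustum cue can be illustrated with a simple geometric test: given the Seeker’s position and viewing direction, decide whether a point in the room falls inside a cone-shaped viewing volume. The following is a minimal sketch and not the game’s implementation; the function name `in_view_cone`, the 30° half-angle, and the 5 m range are hypothetical values chosen only for illustration.

```python
import math


def in_view_cone(eye, forward, point, half_angle_deg=30.0, max_range=5.0):
    """Return True if `point` lies inside a cone that starts at `eye`.

    eye, forward, point: 3D tuples (x, y, z) in the same room coordinates.
    half_angle_deg and max_range are illustrative assumptions, not values
    taken from the study.
    """
    to_point = [p - e for p, e in zip(point, eye)]
    dist = math.sqrt(sum(c * c for c in to_point))
    if dist == 0.0:
        return True  # the point coincides with the viewer position
    if dist > max_range:
        return False  # too far away to count as "in view"
    f_len = math.sqrt(sum(c * c for c in forward))
    if f_len == 0.0:
        raise ValueError("forward direction must be non-zero")
    # Cosine of the angle between the viewing direction and the point.
    cos_angle = sum(t * f for t, f in zip(to_point, forward)) / (dist * f_len)
    return cos_angle >= math.cos(math.radians(half_angle_deg))


# Example: a Seeker at the origin looking along +z.
print(in_view_cone((0, 0, 0), (0, 0, 1), (0, 0, 2)))   # point straight ahead
print(in_view_cone((0, 0, 0), (0, 0, 1), (0, 0, -2)))  # point behind the Seeker
```

A test of this kind could let a Hider-side view highlight which hide spots the Seeker is currently facing, which is the information the red cone conveys visually.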

3.2. System Design and Implementation

The AR game was developed using Unity 3D (2022.3 LTS) [28] and Niantic Lightship ARDK [29], including their core AR modules to support remote multiplayer gameplay. The primary goal of the system architecture was to enable spatially accurate object placement and real-time interaction between physically separated players, allowing them to collaborate within a shared virtual environment mapped onto the real world.

A 3D mesh of Room 1 was created using the Lightship Scanning Framework [30], which runs on LiDAR-enabled iPads, to represent the Seeker’s space in 3D. This scan generated a detailed, textured reconstruction of the Seeker’s physical room, which was then exported into Unity 3D as an .obj file and integrated into the AR scene rendered on the Hider’s device. This approach allowed the Hider to view and interact with the Seeker’s space remotely, at 1:1 scale. For real-time AR content alignment in the real world, the system used Lightship’s Visual Positioning System (VPS) feature. Although the experiment was conducted in a controlled indoor setting, we utilized the VPS’s shared AR support to ensure stable and precise AR object placement within the Seeker’s physical environment. This was achieved through remote AR content authoring, which ARDK provides as part of its feature set. These capabilities enabled the Seeker’s device to accurately track surface geometry and support the precise placement of virtual objects, even when the user moved or the camera view changed.

To synchronize interactions between the Hider and Seeker, Unity’s Netcode for GameObjects [31] was used. This enabled real-time synchronization of object placement, rotations, player transforms, and visibility states. A host–client model was used, with the Seeker’s device acting as host and the Hider joining as a remote client. The devices were connected over a Wi-Fi network.

To support player-to-player communication, real-time voice chat was implemented using Normcore SDK [32]. This plugin allowed low-latency voice streaming directly within Unity, enabling players to exchange verbal cues like “hot” and “cold” during gameplay. The voice chat remained active throughout the session and was integrated with player roles.

4. Study Design

After obtaining ethics approval from the University of Canterbury Human Research Ethics Committee (HREC 2024/21/LR-PS), we recruited participants who all provided informed consent. We also put procedures in place to keep their data confidential. In the following sub-sections, the user study is described in detail. We explain our mixed-methods evaluation approach, how we selected participants, and the instruments we used to gather data.

4.1. Measures

The study employed the following validated questionnaires to gather quantitative data.

- Game Engagement Questionnaire (GEQ): Assesses the level of engagement during gameplay [33] on a 3-point Likert scale (0 = No, 1 = Maybe, 2 = Yes). This instrument is relevant to testing H2, where we hypothesized that players would report higher engagement levels in AR compared to RW gameplay.

- User Engagement Scale (UES-SF): Evaluates user engagement in terms of focused attention, perceived usability, aesthetic appeal, and reward [34] on a 5-point scale (1 = Strongly Disagree to 5 = Strongly Agree). This instrument was selected to further explore both RQ and H2, as it focuses on how the proposed game design impacts user engagement in remote multiplayer gameplay.

- User Experience Questionnaire-Short (UEQ-S): Assesses the pragmatic and hedonic qualities of the game [35] on a 7-point semantic differential scale ranging from −3 to +3. This questionnaire helps in evaluating the overall gameplay experience related to both RQ and H2.

Additionally, the study recorded in-game metrics, such as the time taken to hide and find objects and the hide spots chosen, to correlate subjective data with objective gameplay behavior. Furthermore, a Tobii Pro Glasses 2 eye tracker was used to record players’ fixations in both RW and AR conditions, contributing to understanding both H1 and RQ. We also used a ceiling camera mounted in Room 1 to record player behaviors, allowing us to track movement patterns, hide spot selections, and spatial decisions made during gameplay for further analysis. Finally, we conducted a semi-structured interview. The interview included questions on player behavior, spatial decision-making, engagement, and overall experience across the two game modes, helping to answer the RQ.

4.2. Procedure

Participants were recruited through advertisements posted on relevant social media pages and noticeboards around the research institute. The study was advertised to a general audience, and individuals with normal or corrected-to-normal vision and hearing were eligible to participate.

The experiment was conducted using a within-subject design. Each participant experienced both the RW hide-and-seek game and the AR hide-and-seek game. Each gameplay session included two participants who took turns as the “Hider” and the “Seeker”. Because logistical constraints prevented full counterbalancing, we used partial counterbalancing so that half the participants started with the RW condition and the other half with the AR condition. This limitation may introduce order effects, where participants’ experiences in one condition influence their performance or perception in the subsequent condition. To mitigate these effects, we provided sufficient breaks between conditions to reduce carryover. After completing the gameplay sessions, participants were asked to fill out the questionnaires and participate in the semi-structured interview.
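As an illustration of the counterbalancing described above, the sketch below assigns half of the pairs to start with the RW condition and the other half with the AR condition. It assumes that order was assigned per pair (since both members of a pair play together) and uses a seeded shuffle; the function name `assign_orders` and the seed are hypothetical, and the study’s actual assignment procedure is not specified.

```python
import random


def assign_orders(pair_ids, seed=0):
    """Assign a condition order to each pair, balanced across the two orders.

    Returns a dict mapping pair id -> ("RW", "AR") or ("AR", "RW").
    The seeded shuffle is an assumption used to make the sketch reproducible.
    """
    rng = random.Random(seed)
    shuffled = list(pair_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    orders = {}
    for index, pair_id in enumerate(shuffled):
        # The first half of the shuffled list starts with RW, the rest with AR.
        orders[pair_id] = ("RW", "AR") if index < half else ("AR", "RW")
    return orders


# Example with 30 pairs (60 participants).
orders = assign_orders(range(1, 31))
rw_first = sum(1 for order in orders.values() if order[0] == "RW")
print(rw_first, len(orders) - rw_first)
```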

5. Results

5.1. Participants

A power analysis [36] was conducted to determine the required sample size for detecting medium effects in a within-subjects design using both parametric and non-parametric tests. Based on an expected medium effect size (Cohen’s ; ; ), a significance level of , and a target power of 0.80, the required sample size was determined to be 56 participants (28 pairs). To exceed this minimum, we recruited a total of 60 participants, aged between 18 and 40 years (M = 27.88, SD = 6.05). The sample included 30 females, 28 males, 1 non-binary individual, and 1 participant who preferred not to disclose their gender. Only three pairs consisted of participants with no prior AR experience, while all other pairs had at least one participant with previous AR experience. In terms of relationship dynamics, five pairs consisted of total strangers, whereas all other pairs included participants who were acquainted with each other.

5.2. Engagement (GEQ and UES-SF)

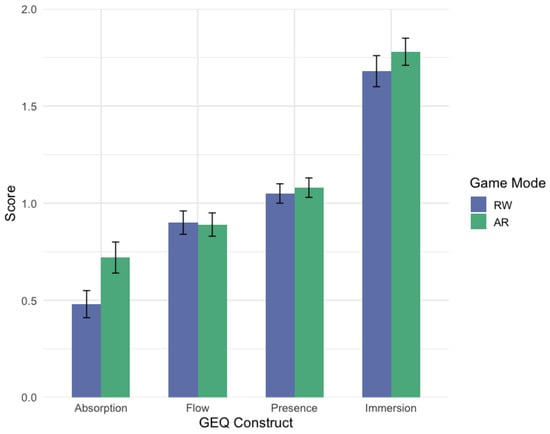

Figure 8 shows the comparison of mean scores across the four GEQ constructs (Absorption, Flow, Presence, and Immersion) for the RW and AR game conditions. Absorption scores were higher in the AR condition (, ) compared to the RW condition (, ). Flow scores were very similar between conditions (, ). Similarly, Presence scores indicated minimal differences between AR (, ) and RW (, ). Immersion yielded the highest mean scores among all constructs, with AR condition scores (, ) slightly higher than those in the RW condition (, ). Error bars represent standard errors (SEs), indicating variability around the means. Statistical significance and further detailed comparisons are reported in subsequent sections.

Figure 8.

Comparison of mean GEQ construct scores between RW and AR game conditions, with error bars representing the standard error.

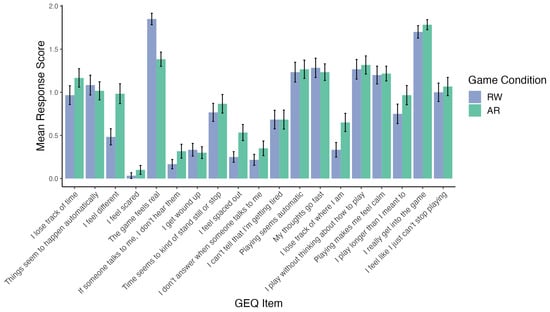

To provide a more granular breakdown, Figure 9 shows the comparison of mean GEQ item scores between the RW and AR game conditions.

Figure 9.

Comparison of mean GEQ item scores between RW and AR game conditions, with error bars representing the standard error.

In the GEQ, each item was rated on a 3-point Likert scale (0 = No, 1 = Maybe, 2 = Yes), where higher scores reflect greater levels of the experience described. Error bars represent the standard error of the mean, indicating the variability in participant responses.
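For readers reproducing the error bars, the standard error of the mean is the sample standard deviation divided by the square root of the number of responses. The sketch below shows this computation; the function name `standard_error` and the example ratings are hypothetical and not taken from the study data.

```python
import math
import statistics


def standard_error(scores):
    """Standard error of the mean: sample SD divided by sqrt(n)."""
    if len(scores) < 2:
        raise ValueError("at least two scores are required")
    return statistics.stdev(scores) / math.sqrt(len(scores))


# Hypothetical ratings for one GEQ item on the 0-2 scale.
item_ratings = [0, 2, 2, 0]
print(statistics.mean(item_ratings), standard_error(item_ratings))
```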

Cronbach’s alpha [37] was computed to assess the internal consistency of GEQ responses across the two conditions. Reliability was acceptable for both RW () and AR () conditions, based on data from 60 participants.
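Cronbach’s alpha for a scale with k items is k/(k − 1) multiplied by one minus the ratio of the summed item variances to the variance of the total scores. The following is a minimal sketch of that standard formula using only the Python standard library; the function name `cronbach_alpha` and the example responses are illustrative assumptions, not the study’s data or analysis script.

```python
import statistics


def cronbach_alpha(responses):
    """Compute Cronbach's alpha.

    responses: list of rows, one per participant, each a list of item scores
    (all rows must have the same length, with at least two items).
    """
    k = len(responses[0])
    if k < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two participants")
    items = list(zip(*responses))  # one column of scores per item
    item_variance_sum = sum(statistics.variance(column) for column in items)
    total_variance = statistics.variance([sum(row) for row in responses])
    if total_variance == 0:
        raise ValueError("total scores have zero variance")
    return (k / (k - 1)) * (1 - item_variance_sum / total_variance)


# Hypothetical responses from three participants to three items (0-2 scale).
print(cronbach_alpha([[1, 1, 1], [2, 2, 2], [0, 0, 0]]))
```

In this toy example the items agree perfectly across participants, so alpha reaches its upper bound of 1.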

To assess differences in GEQ item responses between the RW and AR conditions, we used the Mann–Whitney U test, as the data were ordinal and not normally distributed.
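The rank-based logic of the Mann–Whitney U test can be sketched as follows: pool the two samples, assign ranks (averaging ranks for ties), and derive U from the rank sum of one sample. This is only an illustration of the U statistic; it does not compute p-values, and the helper names `midranks` and `mann_whitney_u` and the example ratings are hypothetical.

```python
def midranks(values):
    """Return 1-based ranks of `values`, averaging ranks within ties."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of tied values starting at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1  # mean of ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        i = j + 1
    return ranks


def mann_whitney_u(x, y):
    """Return (U_x, U_y) for two samples of ordinal scores."""
    ranks = midranks(list(x) + list(y))
    rank_sum_x = sum(ranks[: len(x)])
    u_x = rank_sum_x - len(x) * (len(x) + 1) / 2
    u_y = len(x) * len(y) - u_x
    return u_x, u_y


# Hypothetical item ratings on the 0-2 GEQ scale.
print(mann_whitney_u([0, 1, 2], [0, 1, 2]))
```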

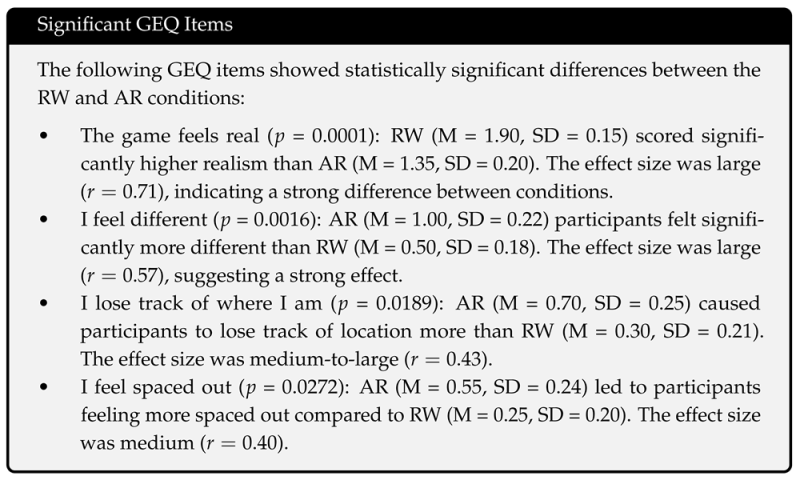

For the remaining GEQ items, no statistically significant differences were found between the RW and AR conditions, as the p-values were greater than 0.05.

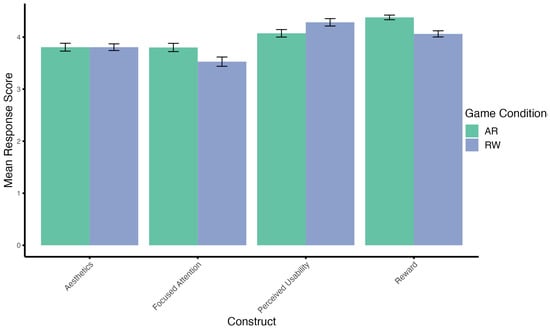

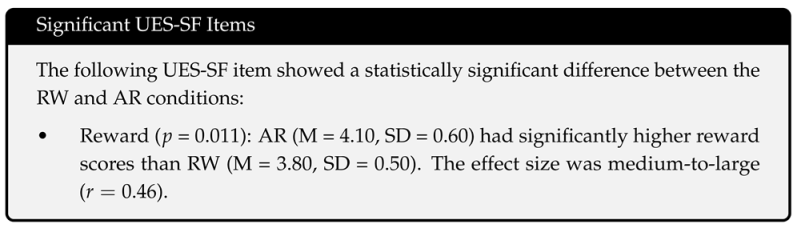

Cronbach’s alpha for UES-SF responses across all participants () was , indicating good internal consistency. This questionnaire was used to assess participants’ engagement across the following four constructs: Focused Attention, Perceived Usability, Aesthetics, and Reward. It is important to note that the PU items are reverse-coded. After handling the reverse-coded PU items, mean scores for each construct were calculated separately for the RW and AR conditions.
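Reverse-coding on a 1 to 5 scale maps each response x to 6 − x, so that higher values consistently indicate a more positive rating before construct means are computed. The sketch below illustrates this step; the function names `reverse_code` and `construct_mean` and the example responses are hypothetical.

```python
def reverse_code(score, low=1, high=5):
    """Reverse a Likert response so that `low` becomes `high` and vice versa."""
    if not low <= score <= high:
        raise ValueError("score outside the scale range")
    return low + high - score


def construct_mean(item_scores, reverse=False):
    """Mean of one participant's item scores, optionally reverse-coded."""
    scores = [reverse_code(s) for s in item_scores] if reverse else list(item_scores)
    return sum(scores) / len(scores)


# Hypothetical Perceived Usability responses from one participant.
print(construct_mean([1, 2, 3], reverse=True))
```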

As shown in Figure 10, participants reported marginally higher scores across UES-SF constructs in the AR condition, with a significant difference observed only in the Reward subscale (AR: RW: ), indicating that AR was perceived as more fulfilling. The other UES-SF subscales did not show significant differences between conditions. Specifically, Aesthetic Appeal scores were almost identical in AR (, ) and RW (, ), while Focused Attention scores were comparable between AR (, ) and RW (, ). In contrast, the Perceived Usability subscale was slightly lower in the AR condition (, ) compared to the RW condition (, ).

Figure 10.

Mean UES-SF construct scores (1 = strongly disagree; 5 = strongly agree) for RW and AR conditions, with error bars showing standard error of the mean (SEM). Perceived Usability (PU) items were reverse-coded.

As the UES-SF constructs are based on ordinal data and not normally distributed, we used the Wilcoxon Signed-Rank Test to compare the RW and AR conditions across the following four constructs: Focused Attention, Perceived Usability, Aesthetic Appeal, and Reward.
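The Wilcoxon Signed-Rank Test works on paired differences: zero differences are dropped, the absolute differences are ranked (with averaged ranks for ties), and the ranks are summed separately for positive and negative differences. The sketch below computes only these two rank sums, not the p-value; the names `midranks` and `wilcoxon_signed_rank` and the example scores are hypothetical.

```python
def midranks(values):
    """Return 1-based ranks of `values`, averaging ranks within ties."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1  # mean of ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        i = j + 1
    return ranks


def wilcoxon_signed_rank(rw_scores, ar_scores):
    """Return (W_plus, W_minus) for paired scores, using AR minus RW."""
    diffs = [ar - rw for rw, ar in zip(rw_scores, ar_scores) if ar != rw]
    ranks = midranks([abs(d) for d in diffs])
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    return w_plus, w_minus


# Hypothetical construct means for four participants in each condition.
print(wilcoxon_signed_rank([1, 2, 3, 4], [2, 4, 6, 8]))
```

The smaller of the two rank sums is the statistic usually compared against the reference distribution.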

No statistically significant differences were found between the RW and AR conditions for the remaining constructs, as the p-values were greater than 0.05.

5.3. User Experience

The results from the UEQ-S provide valuable insights into the pragmatic and hedonic quality of the game under both RW and AR conditions. As highlighted in Table 1, while the RW condition showed stronger pragmatic quality (M = 2.013), the AR condition was rated significantly higher in hedonic quality (M = 2.004 vs. 0.604). The overall UEQ-S score was also higher in AR (M = 1.821 vs. 1.308).

Table 1.

Comparison of UEQ-S scales between RW and AR conditions.

To evaluate whether condition order influenced the results, we conducted non-parametric comparisons on the GEQ and UES-SF constructs. For the UEQ-S, since its 7-point semantic differential scale is commonly treated as interval data in prior work [35], a two-way mixed ANOVA was used to examine order effects. No significant interactions were found (), suggesting that order effects did not significantly bias the results.

5.4. Game Metrics

During the experiment, we recorded a number of game metrics for each study session, including the “time to hide an object”, the “time to find an object”, and the “place where the Hider hid the object”.

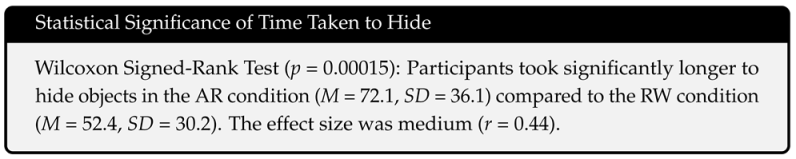

For the “time to hide”, participants’ median time is higher in the AR condition compared to the RW condition, with a wider spread indicating greater variability in the AR condition. Notably, an extreme outlier is visible in the AR condition (above 200 s). We retained this data point in our analysis to reflect real participant behavior.

We did not apply a log transformation to the hiding time data as the Wilcoxon Signed-Rank Test is a non-parametric test that does not assume normality of the data. Since the distribution of hiding times was not normal (as confirmed by a Shapiro–Wilk test), and our analysis aimed to preserve the ordinal ranking of differences between conditions, we opted for the Wilcoxon test without transforming the data [38,39]. This approach maintains the integrity of the rank-based analysis while appropriately handling the non-normal distribution of our experimental data [40].

For the “time to find”, participants took a similar amount of time to find objects in both the RW and AR conditions. Notably, the RW condition exhibited a wider spread and several extreme outliers, with some search times exceeding 400 s. In contrast, the AR condition showed a more concentrated distribution with fewer and less extreme outliers, a pattern that is also visible in the density plot. However, the difference between the two conditions was not statistically significant.

As depicted in Table 2, the usage patterns of various objects differ between the RW and AR conditions. Notably, “Batman”, “Fries”, and “Minion” were more frequently used in the AR condition, with “Batman” showing a more than threefold increase in usage (n = 10) compared to the RW condition (n = 3). Similarly, “Minion” exhibited a substantial increase in usage, from n = 1 in RW to n = 15 in AR.

Table 2.

Object usage comparison between RW and AR conditions.

On the other hand, “Koala” and “Rocket”, which were prominent in the RW condition, indicated a decrease in usage in the AR condition, with “Koala” being selected n = 16 times in RW but only n = 9 times in AR and “Rocket” being used n = 11 times in RW compared to n = 5 times in AR. The object “Christmas Shoe” showed similarly low usage in both conditions (n = 2 in RW and n = 3 in AR), indicating minimal preference by participants across the study.

Table 3 presents a comparison of hide spot usage between the RW and AR conditions. These hide spots were also used as AoIs for the gaze entropy analysis described in Section 5.5. The hide spot “Chair” was used n = 12 times in the RW condition but only n = 1 time in the AR condition. In contrast, “CenterTable” was not used at all in the RW condition (n = 0) but was selected n = 11 times in the AR condition. Other spots, such as “PCDeskUnder” and “PCSecondary”, were used in both conditions, with “PCDeskUnder” being selected n = 12 times in RW and n = 4 times in AR, and “PCSecondary” being used n = 5 times in RW and n = 7 times in AR. Some spots, like “Umbrella” and “UpsideCeiling”, showed increased usage in AR, while spots like “BoxUnderPaperBoard” and “FloorShelf” were more frequently used in the RW condition. The “TopShelf” and “TopStructure” hide spots also exhibited different usage patterns, with “TopShelf” being chosen more often in AR (n = 5) and “TopStructure” having similar usage across both conditions (n = 2 in RW and n = 3 in AR).

Table 3.

Hide spot comparison between RW and AR conditions.

To analyze player communication, we recorded the frequency of verbal “hot” and “cold” cues exchanged between Hiders and Seekers, comparing strangers and known pairs. The results indicate that strangers relied more on verbal cues in the RW condition (M = 6.64) than in AR (M = 4.27). In contrast, known pairs demonstrated consistent cue usage across both conditions, with a slight reduction in AR (M = 5.59 to M = 5.09). The results, grouped into strangers and known pairs, are presented in Table 4.

Table 4.

Hot and cold cue frequencies by player relationship type.

The results show the following:

- Strangers relied more on verbal cues in the RW condition () compared to AR ().

- Known pairs exhibited consistent cue usage across both conditions, with only a slight decrease in AR ().

5.5. Gaze Behavior Analysis

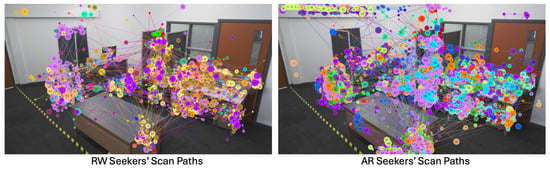

The gaze data were recorded using the Tobii Pro Glasses 2 eye tracker, which captured fixation points and scan paths during both RW and AR gameplay sessions. The fixation scan paths (Figure 11) show where players looked during the game and how their attention shifted around the environment, including the sequence and direction of players’ gaze movements. The scan paths were mapped relative to the center of the player’s FOV to account for physical movements in the AR space. Each color represents the scan path of a separate participant, and larger circles indicate longer fixation durations.

Figure 11.

Seekers’ scan paths between RW and AR conditions.

As shown in Figure 11, the RW condition scan paths demonstrate diverse exploration routes that cover larger horizontal areas. These include areas such as beneath desks, chair zones, and object shelves visible within the immediate FOV. In contrast, the AR condition shows denser scan path clustering and a greater vertical spread. Fixations extend toward areas above door frames, mid-air regions, and upper structural elements, such as the horizontal concrete beam. Scan paths also include upward trajectories that are not present in the RW condition.

The upward fixation behavior and concentrated scan paths in the AR condition can be attributed to the ability to hide objects in unconventional locations, such as ceilings and walls. These augmented spatial affordances required players to adjust their gaze behavior and search patterns dynamically, reflecting the added spatial complexity of AR gameplay.

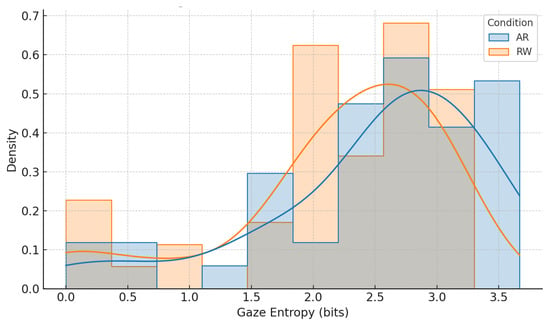

Following the information-theoretic approach outlined by Scott et al. [41], we used Shannon entropy [42] to assess the structure of spatial attention across gameplay conditions. In their study, entropy served as a principal measure of cognitive organization during the development of spatial knowledge. Similarly, in our study, gaze entropy is used to evaluate how Seekers allocated visual attention across the RW space, with higher entropy indicating broader, more distributed visual exploration.

The study further examines how Seekers distributed their visual attention across different AoIs. The numbers annotated in Figure 3 correspond directly to the AoIs listed in Table 3. Spatial gaze entropy was calculated for each participant. It quantifies the dispersion of gaze fixations across AoIs and reflects the breadth of visual exploration. A higher entropy value indicates that fixations were more evenly distributed across multiple AoIs, whereas lower entropy values suggest that fixations were concentrated on a smaller subset of regions.

For this analysis, the Total_duration_of_fixations values associated with each AoI were extracted from the eye-tracking dataset. These durations were then summed across all intervals for each participant. To compute entropy, each participant’s fixation durations were normalized into a probability distribution over AoIs, where each value represented the proportion of time spent fixating on a specific AoI relative to the participant’s total fixation duration.

The entropy $H$ was calculated using the Shannon entropy formula, expressed as follows:

$$H = -\sum_{i} p_i \log p_i$$

where $p_i$ denotes the proportion of total fixation duration directed toward AoI $i$. AoIs with zero fixation duration were excluded from the summation to avoid undefined logarithmic terms.
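The normalization and entropy computation described above can be sketched in a few lines of Python. This is an illustrative sketch, not the authors’ analysis script: the function name `gaze_entropy`, the default base-2 logarithm (entropy in bits), and the example durations are assumptions, since the text does not state the logarithm base. The AoI names are borrowed from Table 3 purely as labels.

```python
import math


def gaze_entropy(fixation_durations, base=2):
    """Shannon entropy of one participant's fixation time across AoIs.

    fixation_durations maps an AoI name to its total fixation duration
    (any consistent time unit). AoIs with zero duration are skipped so
    that no log(0) term is evaluated.
    """
    total = sum(fixation_durations.values())
    if total <= 0:
        raise ValueError("participant has no fixation time on any AoI")
    entropy = 0.0
    for duration in fixation_durations.values():
        if duration > 0:
            p = duration / total  # proportion of fixation time on this AoI
            entropy -= p * math.log(p, base)
    return entropy


# Hypothetical Seekers: one spreads attention evenly, one focuses narrowly.
even = {"Chair": 5.0, "CenterTable": 5.0, "TopShelf": 5.0, "Umbrella": 5.0}
focused = {"Chair": 18.0, "CenterTable": 2.0, "TopShelf": 0.0, "Umbrella": 0.0}
print(gaze_entropy(even))     # about 2 bits: four equally fixated AoIs
print(gaze_entropy(focused))  # lower value: attention concentrated on one AoI
```

With base 2, a participant who fixates equally on $n$ AoIs reaches the maximum of $\log_2 n$ bits, while a participant who fixates on a single AoI has an entropy of zero, which matches the interpretation of higher entropy as broader visual exploration.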

Only the Seekers in both RW and AR conditions were included in this analysis. We then visualized and compared the distributions of spatial gaze entropy across the two game conditions as shown in Figure 12.

Figure 12.

Distribution of spatial gaze entropy for Seekers in RW and AR conditions. Higher entropy values indicate broader, more evenly distributed visual attention across AoIs.

Figure 12 illustrates the distribution of gaze entropy values recorded during the Seeker role across two gameplay conditions. Gaze entropy quantifies the spread of visual attention, with higher entropy values indicating more dispersed fixations across AoIs. The histogram displays normalized density plots for each condition, highlighting the range and frequency of gaze entropy values observed during gameplay.

5.6. Reflexive Thematic Analysis

An RTA [43,44] was conducted to analyze the semi-structured interview transcripts containing participants’ qualitative feedback after gameplay. Participants played both versions of the game before the interviews, which were guided by the question list in Table 5.

Table 5.

Interview questions.

Initial codes were generated inductively, focusing on patterns of meaning related to players’ experiences across the AR and RW conditions. Codes were reviewed and iteratively grouped into potential themes, which were then refined through ongoing reflection and re-reading of the data to ensure they captured coherent, meaningful patterns across participants.

The final set of five themes, shown in Table 6 and reported in the subsections below, represents recurring ideas that were both semantically and conceptually significant for understanding and interpreting player experience.

Table 6.

Constructed themes from reflexive thematic analysis.

5.6.1. Engagement and Preference

Participants expressed mixed preferences when they were asked about their preferred version of the game. Sixteen participants favored the RW version, describing it as more “straightforward”.

“I like real world game is a little bit more straightforward.”—P20

Forty-four participants preferred the AR version, highlighting its novelty and interactive elements. Four participants noted that the AR gameplay felt more engaging due to its new features, such as remote collaboration and object placement.

“The AR one was a little bit difficult but also more engaging because of the new features.”—P5

Engagement levels were reported as high in both modes, with thirty-eight participants specifically pointing to AR’s ability to let them see the other player’s position and orientation, as well as place virtual objects within remote locations.

5.6.2. Challenges in AR

Sixteen participants found the AR version more challenging due to how navigation worked. They described the need to constantly move around the room to align the AR perspective while placing objects, which was physically demanding and at times disorienting.

“AR one was a little bit difficult because you had this perspective [real-world scale AR overlay] that you had to keep moving around.”—P11

Another challenge, mentioned by sixteen participants, was visual distortion in the AR space. In particular, colors in the AR representation did not match reality, which made recognizing objects and spaces more difficult.

“Colors were a bit distorted compared to reality, which made it harder to find objects.”—P2

5.6.3. Enhanced Feeling of Connection Through AR

Twenty-eight participants mentioned that features unique to the AR condition, such as real-time visualization of the other player’s position and gaze direction, contributed to a stronger feeling of connection during remote gameplay. One participant shared that being able to see the remote player’s avatar movements and hear their voice simultaneously made the experience feel more connected.

“I felt connected because we can see the movement of the player, also hear the player at the same time.”—P4

Another participant explained that seeing the direction in which the other player was looking enhanced the sense of co-presence, making the gameplay feel collaborative and real-time despite the players being in separate spaces in the AR condition.

“It was cool to see where the other player was looking, feel like we were really playing together.”—P52

Similarly, a third participant in the AR mode reflected on how the remote player visualization added contextual information to conversations, enhancing the naturalness of the remote interaction.

“I liked that you could see the other person where they were looking because then that puts a bit more context to when you’re chatting to them.”—P41

However, despite these AR-specific enhancements, twelve participants expressed that they missed the tangible physical interaction present in the RW condition. The RW mode offered shared physical space and co-located gameplay, which some participants felt was not fully replicated through AR-based interaction.

5.6.4. Perception of Time and Space

Thirty-two participants reported that they lost track of time while playing the AR version of the game, even though a visible countdown clock was present on the screen throughout the session. One participant described the time passing quickly due to the engaging nature of the gameplay.

“Five minutes went pretty quick on the AR.”—P16

Sixteen participants found navigating the AR space initially disorienting, as the interface and spatial layout required adjustment. However, eight of them said they adapted quickly after moving around and exploring the environment. One participant shared that while the beginning felt confusing, the experience improved with physical movement.

“I was a bit confused at first but it became straightforward after moving around.”—P24

Another participant described how the process of adapting to the AR environment eventually led to more creative gameplay.

“It took a while to get used to the AR environment, but once I did, it was fun to find creative hiding spots.”—P8

In contrast, the RW condition did not elicit the same sense of temporal distortion. Eight participants explicitly stated that they were more aware of the time and surroundings during RW gameplay. One participant mentioned that the RW setting made it easier to track time and remain grounded.

“In the real-world one, I was more aware of the time and where I was.”—P27

5.6.5. Creative Spatial Decisions

Forty-two participants highlighted that the AR version enabled more varied and imaginative hiding strategies, such as hiding up in the air below the ceiling, compared to the RW version. Players appreciated being able to place objects in unconventional spots not constrained by RW physics. One participant described the ability to hide objects in elevated positions as a particularly creative aspect of the AR gameplay.

“It was pretty creative to have it off on the ceiling.”—P40

In contrast, the RW game limited players to more practical and obvious locations due to physical accessibility. A participant noted that hiding strategies were more constrained in the RW condition.

“In the real world, you have to hide objects in more obvious places.”—P33

In the RW game, 15 out of 60 participants attempted to match the color of the object with surrounding real-world items when selecting a hiding spot, even if their hiding spot remained within the Seeker’s direct line of sight. For example, see Figure 13.

Figure 13.

A frame captured from the eye tracker video, showing a participant hiding the red fries object alongside a similarly colored toy in the RW condition.

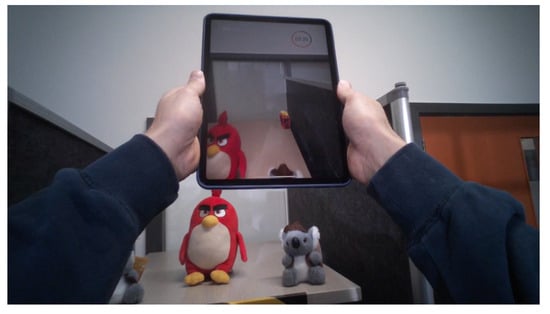

In the AR condition, six participants placed the virtual object behind a physical item in the environment, making use of spatial occlusion to partially obscure it from the Seeker’s viewpoint. Examples of this occlusion strategy are shown in Figure 14 and Figure 15.

Figure 14.

A frame captured from the eye tracker video during AR gameplay, showing the virtual fries object occluded by a physical item.

Figure 15.

A frame captured from the AR gameplay showing the virtual fries object placed behind the soft toy.

However, the majority of participants in the AR condition (40 participants) were observed placing visually prominent virtual objects. One such example is shown in Figure 16, where a participant placed the virtual fries object inside a cardboard box.

Figure 16.

A frame captured from the eye tracker video, showing a Seeker placing the virtual fries object inside a real-world box during AR gameplay.

Another participant emphasized the spatial freedom AR afforded, making the hiding process more open-ended and imaginative.

“In AR, you can place objects wherever you want, even in the air. It’s more creative.”—P29

6. Discussion

This study investigated the interaction between players and shared remote locations in a remote AR hide-and-seek game compared to a co-located RW game. We designed the above-discussed remote AR hide-and-seek game to answer our RQ “How does the interaction between players and shared remote locations in a remote AR hide-and-seek game compare to interactions in a co-located RW hide-and-seek game in terms of players’ spatial decision-making, game engagement and player experience?”. The study compared how players made spatial decisions to test H1, and it evaluated game engagement and overall experience across the two conditions to test H2.

6.1. Player Strategies and Spatial Decision-Making

Following Montello’s view of spatial decision-making as a process of perceiving, interpreting, and acting on spatial information [18], our findings support H1, demonstrating a clear shift in how players engaged with space in the AR condition. Longer hiding times (Section 5.4), increased upward gaze fixations (Figure 11), and participant reflections (e.g., “It took a while to get used to the AR environment, but once I did, it was fun to find creative hiding spots.”) show that players adopted distinct hiding strategies. These strategies were enabled by the AR game mechanics introduced in this game design. For example, players could position virtual objects without physical constraints such as gravity or reachability, which allowed placements in “mid-air” or on “ceilings”.

These novel spatial possibilities introduced greater complexity because they expanded the potential hiding spots beyond traditional physical boundaries. In the RW condition, hiding spots were limited to locations governed by familiar rules of physics, such as gravity or the availability of flat surfaces. The AR condition, however, enabled placements in unconventional areas, such as mid-air or overhead spots. As a result, players had to use different spatial reasoning in AR: they needed to mentally visualize and assess unfamiliar hiding spots, whereas in the RW condition spots were predictable and physically constrained. Players demonstrated this shift through the longer hiding times and upward gaze patterns reported in the results, which suggest that players actively explored parts of the space that would usually be ignored in RW gameplay. Participants also described choosing placements that felt “creative” or “playful”, such as hiding objects on ceilings or floating in the air. These behaviors indicate that the spatial reasoning involved in AR was not only different but also often more exploratory, driven by the novelty of the AR game design. However, these creative opportunities simultaneously introduced usability challenges, including difficulties in precisely controlling object placement through virtual thumbsticks and in maintaining spatial orientation. These challenges could discourage sustained player engagement, and further studies are needed to investigate this aspect.

6.1.1. Role-Based Analysis of the AR Hide-and-Seek Gameplay

The role-based analysis provides further insights into how Hiders and Seekers adapted their strategies and interactions across AR and RW conditions, focusing on hiding strategies, spatial exploration, and time-based metrics.

In the AR condition, Hiders were able to hide objects in places that would not be possible in the real world, such as mid-air or on ceilings, by using AR game mechanics such as object placement and thumbstick-based manipulation of virtual objects. This expanded the range of hiding spots and fostered innovative spatial decision-making, as discussed above. Compared to RW, where physical constraints limited strategies, AR enabled Hiders to exploit vertical spaces and unconventional placements. Quantitatively, the median hiding time in AR was significantly longer than in RW (). This difference may reflect the additional time Hiders needed to explore, plan placements, and make decisions within the more flexible and unfamiliar spatial environment provided by AR.

It might also reflect the learning curve participants described in the interviews, where eight participants noted that “it took a while to get used to” unconventional hiding spots made possible by AR. While these findings suggest a potentially more complex spatial decision-making process, further research is needed to clarify how much of the additional hiding time stems from genuine complexity versus simple adaptation to unfamiliar AR game mechanics.

Eye-tracking data support the notion that AR gameplay changes conventional search behaviors. In RW, scan paths were dispersed primarily across ground-level and horizontal surfaces, suggesting that Seekers instinctively searched areas consistent with traditional hiding spots. The AR condition, however, yielded denser fixations in higher or mid-air regions (Figure 11), highlighting how participants actively explored vertical dimensions and structural elements, such as the tops of door frames or horizontal concrete beams, that were rarely attended to in RW play. This vertical shift may indicate that AR encouraged Seekers to spend more visual attention on less conventional areas, such as ceilings or mid-air, in response to how Hiders used the expanded spatial possibilities. Interestingly, although the quantitative measure of “time to find” remained comparable across conditions, the AR-based search showed a greater vertical spread in gaze patterns and a more directed focus on potential mid-air targets. These findings highlight that even though players spent a similar amount of time searching in both conditions, AR required Seekers to scan different height ranges and explore less conventional hiding spots. This shift in behavior shows how AR changes the way players make spatial decisions during gameplay.

6.1.2. Object Usage and Visual Saliency

An analysis of the objects that Hiders chose to hide revealed clear differences between the AR and RW conditions. As shown in Table 2, the Minion, Batman, and Fries were much more popular in the AR condition, where they were selected 15, 10, and 10 times, respectively. Although other objects were also visually distinctive (see Figure 1), participants described the Minion as easier to see and control when placing it in AR. In the RW condition, the Koala, a toy with less color and visual contrast, was used the most, and the Minion was the least selected object, used only once. These results suggest that color, brightness, and clear edges strongly influenced object choice in AR.

This behavior may seem counterintuitive for a game like hide-and-seek, which requires Hiders to focus on concealing the object. However, according to participant feedback, Hiders often prioritized ease of placement and visual feedback over concealment effectiveness.

“The yellow Minion stood out more in AR, so it was easier to place where I wanted.”—P35

The preference for visually distinctive objects like the Minion in our AR hide-and-seek game aligns with findings in AR geovisualization research that visual saliency supports user attention and spatial interaction. To better understand how different visual properties affect user guidance, Zhang et al. [45] evaluated seven visual variables, comprising five static and two dynamic types, in an outdoor AR geovisualization task. The study was performed using Microsoft HoloLens 2 devices (Microsoft, Redmond, WA, USA) equipped with eye-tracking capabilities. The static variables were natural material color, illuminating material color, shape, angular size, and linear size, while the dynamic variables were vibration (Note: The “vibration” refers to a visually animated oscillation, not a haptic cue [45].) and flicker. Participants were asked to identify target map symbols overlaid in the AR environment as quickly and accurately as possible, allowing the researchers to assess which visual properties most effectively guided attention and supported spatial decision-making. Results indicated that the dynamic variables (vibration and flicker) provided the strongest visual guidance. Among the static variables, shape and illuminating material color were the most effective in capturing user attention. The color saturation and contour clarity of the Minion object likely triggered a similar visual response. These features may have offered perceptual clarity in a cluttered, LiDAR scan-based AR space.

While Zhang et al. [45] focused on passive observation of map symbols, our study shows that saliency also supports active object placement. Hiders not only noticed salient objects but also had to rotate, scale, and align them appropriately in the remote 3D environment. Visual saliency likely reduced this effort by improving object visibility and spatial feedback. Our quantitative results show that Hiders took significantly longer to place objects in AR than in RW (M = 72.1 s vs. 52.4 s, ). This increase reflects the added complexity of aligning objects in a 3D AR space without tactile feedback. However, selecting visually salient objects likely helped Hiders manage this complexity.

Sutton et al. [46] also support the above finding. They investigated subtle saliency modulation (e.g., contrast boosting) in optical see-through AR using a Microsoft HoloLens 1 (Note: The authors describe saliency modulation as the process of making a target object or region stand out more naturally, for example by boosting its contrast or sharpness, so that people notice it faster). Their within-subject experiment compared unmodulated images, saliency-modulated images, and images marked with explicit overlays (e.g., circles). Results showed that saliency modulation significantly increased the number of participants fixating on target areas and reduced time to first fixation compared to unmodulated scenes. Although their study involved passive viewing of static images, our interactive gameplay study reveals similar effects in a dynamic, task-driven context in which players use AR. Together, these findings reinforce the value of perceptually grounded, non-intrusive AR cues in supporting spatial decision-making and engagement.

In contrast, the RW condition favored what could be considered more practical hiding strategies. These involved placing objects in hiding spots that were physically reachable and offered easier concealment within the real environment, for example, behind furniture, under tables, or within shelves (see Table 3). Objects like the Koala and Rocket, which were smaller and less visually prominent items, were selected more frequently in RW (16 and 11 times, respectively). No participant mentioned visual feedback as a constraint in RW. Notably, 15 participants in the RW condition employed basic camouflage strategies by matching the object’s color to the surrounding environment, even when the object remained visible. Figure 13 shows an example of this behavior, where a Hider placed the red fries object beside a similarly colored toy, attempting to blend it in through color matching rather than concealment through occlusion.

While the majority of AR participants (40 participants) favored visually salient objects for ease of placement and feedback (see Figure 16), 6 participants experimented with placing virtual objects behind physical ones to block them from the Seeker’s view. This behavior is illustrated in Figure 14 and Figure 15, where the virtual fries object was positioned behind a larger physical toy. This demonstrates that while saliency guided most object choices, some participants incorporated spatial depth and alignment to introduce concealment tactics more commonly seen in RW gameplay.

Together, these findings show that visual saliency plays a supportive role in AR gameplay. It helps to reduce the perceptual effort of spatial tasks, especially in interfaces like handheld AR. Even in a game that rewards hiding, Hiders still favored visibility and control. Future LBARGs can incorporate this further by designing virtual assets with strong visual anchors to support player actions in AR spaces.

6.1.3. Gaze Behavior and Search Patterns

Players demonstrated scattered fixations and upward scan paths, particularly due to spatial possibilities such as mid-air and ceiling placements in AR. A study comparing visual search across AR, Augmented Virtuality (AV), and VR [47] found that AR imposes a higher cognitive load than either AV or VR, which is reflected in more dispersed eye-movement patterns. In that study, AR users swept their gaze across many distinct regions rather than homing in on a few likely spots.

In the AR condition of our study, players held the iPad throughout gameplay. This may have forced them to split their attention between moving in the real world and interacting with the AR interface on the screen. Such split attention and added visual complexity can raise cognitive load [47]. Furthermore, this dual-task situation might have constrained their natural spatial exploration, leading to more deliberate and limited movement patterns compared to the RW condition, where participants could move freely without having to manage a handheld device. Although handheld AR is a common and accessible form of AR interaction, it represents only one modality of LBARG interfaces. Other platforms, such as HMDs (e.g., Microsoft HoloLens), offer hands-free interaction. However, these systems also introduce challenges such as a narrow FOV, eye strain, or fatigue from prolonged use. Future work could compare how different devices affect gameplay strategies and spatial presence in remote multiplayer AR experiences.

In a traditional hide-and-seek scenario, objects cannot be suspended in mid-air; they must be placed on surfaces or within arm’s reach. These constraints reflect common real-world assumptions about where objects are usually placed, for example, on the floor, under tables, or on other horizontal surfaces within reach. Players often rely on these assumptions when searching for hidden objects in familiar environments. In comparison, AR’s capacity to enable mid-air placements triggered a distinct and more vertically oriented search behavior, underscoring how the possibilities in AR challenge and expand traditional spatial decision-making compared to the RW.

This shift in search patterns is further supported by our analysis of spatial gaze entropy reported in Section 5.5. Based on the distribution of fixation durations across defined AOIs, Seekers in the AR condition exhibited higher gaze entropy than those in the RW condition. This measure reflects a more distributed allocation of visual attention across the scene, confirming that AR gameplay encouraged broader and more exploratory scanning behavior. In contrast, RW Seekers demonstrated lower entropy values, indicating a more focused and structured gaze strategy, consistent with traditional search strategies guided by physical constraints and prior experience.
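
To illustrate the kind of measure discussed above, the following minimal sketch computes Shannon entropy over the share of total fixation time spent in each AOI. The AOI names, the durations, and the function name `spatial_gaze_entropy` are hypothetical and chosen only for illustration; the exact formulation used in Section 5.5 may differ (for example, in normalization or logarithm base).

```python
import math


def spatial_gaze_entropy(aoi_durations):
    """Shannon entropy (in bits) of fixation time distributed over AOIs.

    aoi_durations maps an AOI label to the total fixation duration
    (any consistent time unit) that a Seeker spent on that AOI.
    """
    total = sum(aoi_durations.values())
    if total <= 0:
        raise ValueError("total fixation duration must be positive")

    entropy = 0.0
    for duration in aoi_durations.values():
        if duration > 0:  # AOIs that were never fixated contribute nothing
            p = duration / total
            entropy -= p * math.log2(p)
    return entropy


# Hypothetical example data, not taken from the study.
focused = {"floor": 8.0, "table": 1.0, "shelf": 1.0, "ceiling": 0.0}
dispersed = {"floor": 2.5, "table": 2.5, "shelf": 2.5, "ceiling": 2.5}

print(spatial_gaze_entropy(focused) < spatial_gaze_entropy(dispersed))  # True
```

With four AOIs, the value ranges from 0 bits (all fixation time on a single AOI) to 2 bits (fixation time spread evenly), so a higher value corresponds to the more distributed scanning described for AR Seekers.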

Seeliger et al. [48] analyzed eye-gaze behavior in an “AR picking-and-assembly task” delivered through an HMD, where visual cues directed users to pick bins both inside and outside their FOV. Although their scenario is industrial component picking, their empirical findings align with ours. Their study stated that the AR guidance led users to “sweep their gaze across many distinct regions rather than fixating on a few likely spots”. Such widened and dispersed fixations align with the visual patterns observed in our AR condition. These findings indicate that AR interactions promote more exploratory and distributed visual attention compared to RW gameplay. However, future designs must carefully balance such exploratory game mechanics with simple user interfaces and interactions to mitigate user frustration and cognitive load.

6.2. Game Engagement and Player Experience

According to the reported GEQ results (see Section 5.2), realism was rated higher in the RW condition, whereas three absorption-level items, “I feel different”, “I lose track of where I am”, and “I feel spaced out”, scored significantly higher in the AR condition. While at first glance these results could be interpreted as disorientation in the AR gameplay experience, these responses can also signal a higher level of cognitive immersion.

Brockmyer et al. [33] describe engagement as a gradual process that moves from immersion to presence, flow, and ultimately absorption, the deepest level. Items such as “I feel different”, “I lose track of where I am”, and “I feel spaced out” reflect this absorption stage and are associated with more intense, altered cognitive states during gameplay. Losing track of place, therefore, signals more than simple confusion. Two additional findings from our study support the absorption reading. First, reward scores on the UES-SF were significantly higher in the AR condition () than in the RW condition (), with and an effect size of . Similarly, the hedonic quality from the UEQ-S was markedly higher in AR () compared to RW (). These increases occurred despite a lower perceived usability score in the AR condition () versus RW (), suggesting that players found the AR gameplay stimulating and rewarding rather than overwhelming. Second, Seekers’ performance did not deteriorate in the AR gameplay: the median time-to-find was comparable across both modes. Furthermore, only 8 of 60 players reported disorientation in the post-play interview. These results suggest that players remained highly engaged in AR without experiencing a loss of control or confusion.

Gaze data add further context. In AR, Seekers’ scan paths spread upward to mid-air and ceiling regions, echoing the finding by Chiossi et al. [47] that AR search induces broader visual sweeps under higher cognitive load. This visual behavior aligns with the drop in verbal cue use by stranger pairs (see Table 7), which suggests that attention shifted toward remote spatial cues rather than constant dialog.

Table 7. Hot–cold cue frequency.

In the RW condition, strangers relied more heavily on verbal hot–cold cues () than known pairs (), suggesting a stronger dependence on verbal feedback when familiarity level was low. Both known and stranger pairs, however, used verbal cues more often in the RW condition than in the AR condition. This aligns with previous research showing that face-to-face interaction fosters spontaneous communication and stronger social engagement [10,22]. In the AR condition, cue use among strangers dropped to , while known pairs showed only a slight decrease to . This pattern indicates that AR reduced strangers’ reliance on verbal coordination, likely due to increased spatial and visual demands, while familiar pairs adapted seamlessly. Therefore, the challenge of balancing verbal communication with AR mechanics appears specific to stranger pairs navigating unfamiliar remote spaces. Furthermore, players in the AR condition had to interact through a screen while navigating a remote space, which may have increased the mental demand required to coordinate. For pairs who were strangers, this shift likely reduced the ease of verbal interaction, redirecting their attention toward individual exploration and visual game elements. This reflects earlier findings by Duenser et al. [22] and Billinghurst et al. [23], who noted that distributed AR systems often require greater cognitive effort, which can lead to less spontaneous verbal communication.

Overall engagement scores did not differ between stranger and known pairs, indicating that familiarity did not significantly affect the primary engagement constructs measured in this study. It did, however, moderate communication style. Stranger pairs showed the largest drop in cues when moving to AR, while friends remained stable. Thus, familiarity shaped how players coordinated rather than how much they enjoyed the game. Future studies should pair GEQ data with conversational analysis to unpack these subtle social behaviors.

Moving virtual objects in the remote space using the virtual thumbstick impacted both usability and engagement. This is reflected in the lower perceived usability score in the AR condition () compared to the RW condition () in the UEQ-S results, suggesting that the interface introduced friction in the interaction flow. Several participants also noted these difficulties in the post-play interviews, describing the need for constant physical repositioning to accurately place objects in the remote scene. For example, one participant mentioned, “You had to keep moving around to get the right angle. It was a bit tiring”. Future research should investigate AR mechanics that simplify object placement and reduce the need for continuous physical adjustments, such as auto-alignment features and tap-to-place-like game mechanics to maintain high engagement while improving usability. Previous studies have also shown that AR systems often introduce cognitive and physical challenges that can affect usability [22]. Designing AR mechanics that simplify input and reduce cognitive effort may improve the overall user experience while maintaining the engagement aspects of the gameplay.

Aggregate GEQ and UES-SF scores indicate that overall engagement did not differ significantly between AR and RW. Specifically, median construct-level scores for Absorption, Flow, Presence, and Immersion (GEQ), as well as Focused Attention, Perceived Usability, and Aesthetic Appeal (UES-SF), showed only marginal or non-significant differences. Nevertheless, two engagement facets did diverge, indicating that participants found AR gameplay more fulfilling and satisfying.

- Reward (UES–SF) was higher in AR () than RW (), r = 0.46.

- Hedonic quality (UEQ-S) was likewise greater in AR ( vs. ).

Further supporting this, the UEQ-S results demonstrated a significant advantage for AR in terms of Hedonic Quality (, AR vs. , RW), reflecting higher levels of excitement and novelty. Conversely, RW gameplay maintained a higher Pragmatic Quality (, RW vs. , AR), indicating participants found RW gameplay clearer and easier to use. Thus, while overall engagement was comparable across conditions, AR specifically enhanced aspects related to enjoyment and satisfaction, albeit at a slight cost to usability and with some difficulties in the interaction.

Previous studies have pointed out that unfamiliar or overly complex interaction methods can reduce usability in AR systems [49]. In particular, when virtual navigation demands constant adjustment using on-screen controls, it can disrupt the natural flow of gameplay and increase user frustration. Laato et al. [10] also found that players often avoid camera-based AR features if they slow down progress or increase task difficulty. As they reported, only 7% of surveyed players regularly used AR features, with the most common reason being that they slowed progression.

Interview comments reflect these quantitative findings. Thirty-eight participants described AR as “more engaging” or “more rewarding”, often citing the novelty of manipulating remote objects and seeing the remote player’s live position and orientation. At the same time, many noted interface friction, consistent with the non-significant drop in Perceived Usability. Taken together, these results suggest that AR heightened specific rewarding and hedonic aspects of play without lifting overall engagement scores significantly. We therefore consider H2 as partially supported as the AR condition fostered certain engagement qualities but not overall engagement as a single construct.

6.3. Enhanced Social Interaction in AR

Reflexive thematic analysis revealed that 38 of 60 participants felt “more connected” or “really playing together” in the AR mode, even though they were playing remotely. Quantitatively, this is further reflected in the hot–cold cue data (see Table 7). Stranger pairs reduced verbal cues from (RW) to (AR) while maintaining identical median time-to-find. In other words, AR let unfamiliar players collaborate effectively with less speech, implying that alternative non-verbal channels were doing the communicative work. Prior work on remote AR collaboration shows similar effects. For example, a study found that visual embodiment cues can substitute for spoken directives and raise perceived co-presence [23].

In our game, we did not include gesture-based input. However, our prototype displayed a simple “frustum avatar” that mimics the remote Seeker’s position and orientation. This helped players to understand what their partner was doing. It also supported coordination between remotely connected spaces without needing to speak much.

Prior studies suggest such directional visual cues support joint attention and task grounding [23]. This may explain why stranger pairs needed fewer verbal cues but still performed just as well. Interview statements such as,

“I liked that you could see where the other person was looking; it put context to our chat.” (P41)

indicate that these two cues already improved the social interaction. This finding aligns with prior collaborative AR studies that highlight the importance of gaze direction and viewpoint for effective spatial coordination. For example, Gauglitz et al. [50] explored remote collaboration where the helper could pan and zoom a stabilized view of the local user’s scene. This allowed them to direct attention without disrupting the user’s first-person perspective. Similarly, Kim et al. [51] tested combinations of visual cues in HMD-based AR tasks and found that pointer cues representing gaze significantly increased co-presence. While these implementations differ from our game setting because they used HMDs or explicit pointer tools, the findings support the idea that orientation and position are minimal but effective cues for coordinating shared tasks. In our AR hide-and-seek, the 3D frustum provided this data implicitly, allowing players to predict actions and reduce confusion, especially when other bodily cues like footsteps or shadows were absent.

Yet, some aspects of in-person play were still missing. Twelve participants said they “missed the physical interaction” of the real-world game. Piumsomboon et al. [52] demonstrate that sharing facial expressions and hand gestures in AR can enhance emotional understanding and improve collaborative awareness. These cues help fill the gap left by missing physical presence, raising social connection by conveying both intent and emotion. Future LBARGs could test each layer separately to reveal how much extra social information is worth the technical effort.

6.4. Contributions of AR Hide-and-Seek Gameplay

Based on our results and discussion, three main contributions are identified.

- Connecting Remote Players through Shared Gameplay Spaces. The AR design allowed Hiders to see a 3D reconstruction of the Seeker’s room and place virtual objects into that space. While physically apart, Hiders could view the Seeker’s live position and orientation (see Figure 5), which enhanced their sense of presence. Thirty-eight participants reported that it “felt like playing together” despite being in separate rooms (see Section 6). Reduced verbal cue use in AR, particularly among stranger pairs who still achieved similar “time to find” scores (see Table 7), suggests that visual cues replaced some spoken interaction. This aligns with prior work showing that basic directional information, like gaze and orientation, can support co-presence in AR collaboration [23,51]. These results highlight how our AR game design enabled real-time coordination through shared gameplay spaces.

- New Spatial Strategies Enabled by AR Gameplay. Compared to RW, the AR gameplay enabled more varied and creative object placements. Players hid objects mid-air, on ceilings, or in otherwise unreachable locations, which made Seekers search in new ways. Eye-tracking showed more upward gaze shifts, and longer hiding times confirmed this increased complexity (see Figure 11, Section 5). Forty-two participants said they enjoyed this freedom and found it more “creative” and “unusual” than the RW condition. These behaviors suggest that AR may support more exploratory spatial reasoning beyond real-world constraints.