Intention to Work with Social Robots: The Role of Perceived Robot Use Self-Efficacy, Attitudes Towards Robots, and Beliefs in Human Nature Uniqueness

Abstract

1. Introduction

2. Related Work

2.1. Behavioral Intention to Work with Social Robots

2.2. The Role of Perceived Robot Use Self-Efficacy on Acceptance to Work with It

2.3. Attitudes Towards Robots as Antecedents of Robot Use Self-Efficacy and Acceptance to Work with SRs

2.4. Exploring the Role of Beliefs in Human Nature Uniqueness (BHNU) as a Distal Antecedent of Intention to Work with SRs Through Attitudes Towards Robots and Perceived Robot Use Self-Efficacy

3. Study Design

3.1. Participants

3.2. Data Collection and Procedure

3.3. Materials

4. Results

4.1. Data Analysis

4.2. Descriptive Analyses and Control Check

4.3. Hypotheses Testing

5. Discussion

5.1. Implications for Practice

5.2. Limitations

5.3. Future Research

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Variable | Min–Max | M | SD | Sk | K | α | ωt |
|---|---|---|---|---|---|---|---|
| BHNUS | 1–7 | 5.71 * | 1.43 | −1.36 | 1.49 | 0.87 | 0.88 |
| NARHT | 1–7 | 3.79 * | 1.26 | 0.01 | −0.14 | 0.86 | 0.71 |
| NATIR | 1–7 | 4.15 * | 1.50 | 0.00 | −0.53 | 0.86 | 0.85 |
| PNARS | 1–7 | 4.13 * | 1.23 | 0.06 | −0.22 | 0.86 | 0.86 |
| PRUSE | 1–7 | 4.68 * | 1.39 | −0.35 | 0.14 | 0.84 | 0.83 |
| IWSR | 1–7 | 3.78 * | 1.71 | 0.24 | −0.71 | 0.91 | 0.91 |

| | BHNUS | PNARS | NARHT | NATIR | PRUSE | IWSR |
|---|---|---|---|---|---|---|
| BHNUS | - | −0.45 ** | −0.54 ** | −0.38 ** | −0.31 ** | −0.28 ** |
| PNARS (R) | | - | 0.87 ** | 0.92 ** | 0.60 ** | 0.39 ** |
| NARHT (R) | | | - | 0.69 ** | 0.48 ** | 0.44 ** |
| NATIR (R) | | | | - | 0.60 ** | 0.32 ** |
| PRUSE | | | | | - | 0.56 ** |
| IWSR | | | | | | - |

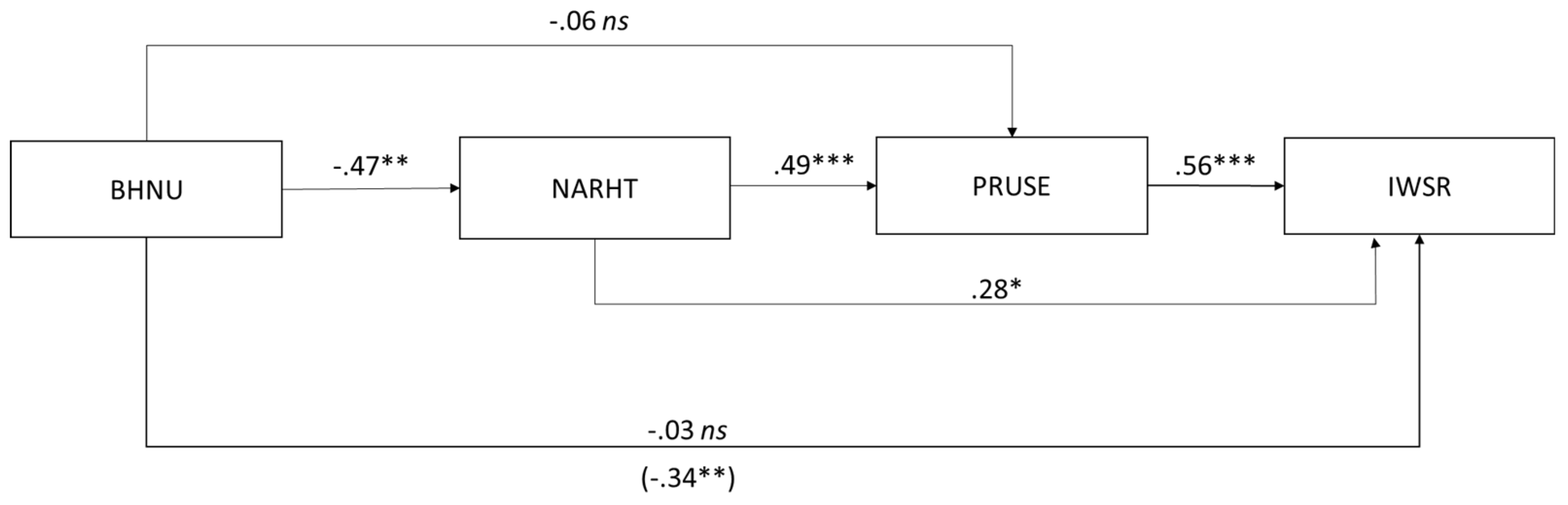

| | NARHT (M1) | | | | PRUSE (M2) | | | | IWSR (Y) | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Antecedent | Coeff. | SE | p | 95% CI | Coeff. | SE | p | 95% CI | Coeff. | SE | p | 95% CI |
| BHNUS (X) | −0.47 | 0.06 | <0.001 | −0.61; −0.33 | −0.066 | 0.09 | 0.48 | −0.25; 0.12 | −0.03 | 0.10 | 0.72 | −0.25; 0.17 |
| NARHT (R) (M1) | | | | | 0.49 | 0.10 | <0.001 | 0.28; 0.70 | 0.28 | 0.13 | 0.03 | 0.01; 0.54 |
| PRUSE (M2) | | | | | | | | | 0.56 | 0.10 | <0.001 | 0.35; 0.77 |
| Constant | 6.51 | 0.40 | <0.001 | 5.71; 7.32 | 3.19 | 0.83 | <0.001 | 1.52; 4.85 | 0.30 | 1.01 | 0.76 | −1.70; 2.31 |
| | R² = 0.29 | | | | R² = 0.23 | | | | R² = 0.35 | | | |
| | F(1,115) = 47.62, p < 0.001 | | | | F(2,114) = 17.98, p < 0.0001 | | | | F(3,113) = 21.08, p < 0.00001 | | | |
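The regression table above lists path coefficients for what appears, from the X, M1, M2, and Y labels, to be a serial two-mediator model. As a minimal sketch under that assumption, the indirect effects can be approximated as products of the reported point estimates. The variable names (`a1`, `a2`, `d21`, `b1`, `b2`, `c_prime`) and the helper `indirect_effects` are illustrative labels introduced here, not notation from the article, and the products are plain arithmetic on rounded table values, not the bootstrapped estimates a full analysis would report.

```python
# Point estimates copied from the regression table (rounded as printed there).
# The path names below are illustrative labels, not the article's notation.
a1 = -0.47       # BHNUS (X) -> NARHT (R) (M1)
a2 = -0.066      # BHNUS (X) -> PRUSE (M2)
d21 = 0.49       # NARHT (R) (M1) -> PRUSE (M2)
b1 = 0.28        # NARHT (R) (M1) -> IWSR (Y)
b2 = 0.56        # PRUSE (M2) -> IWSR (Y)
c_prime = -0.03  # BHNUS (X) -> IWSR (Y), direct effect


def indirect_effects(a1, a2, d21, b1, b2, c_prime):
    """Product-of-coefficients decomposition for a serial two-mediator model."""
    via_m1 = a1 * b1            # X -> M1 -> Y
    via_m2 = a2 * b2            # X -> M2 -> Y
    serial = a1 * d21 * b2      # X -> M1 -> M2 -> Y
    total_indirect = via_m1 + via_m2 + serial
    return {
        "via_m1": via_m1,
        "via_m2": via_m2,
        "serial": serial,
        "total_indirect": total_indirect,
        "total": c_prime + total_indirect,
    }


effects = indirect_effects(a1, a2, d21, b1, b2, c_prime)
for name, value in effects.items():
    print(f"{name}: {value:.3f}")
```

With the table's values, the serial path (BHNUS to NARHT to PRUSE to IWSR) comes to roughly −0.13. Because the inputs are rounded and no standard errors are propagated, these figures are only a rough consistency check on the table and say nothing about statistical significance.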


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Giger, J.-C.; Piçarra, N.; Pochwatko, G.; Almeida, N.; Almeida, A.S. Intention to Work with Social Robots: The Role of Perceived Robot Use Self-Efficacy, Attitudes Towards Robots, and Beliefs in Human Nature Uniqueness. Multimodal Technol. Interact. 2025, 9, 9. https://doi.org/10.3390/mti9020009
