Towards Universal Industrial Augmented Reality: Implementing a Modular IAR System to Support Assembly Processes

Abstract

1. Introduction

1.1. Problem Statement

1.2. Research Questions

- What are the relevant capabilities that can be used to describe these modules and to ensure their reusability? (RQ1)

- How can an IAR application for a specific use-case be composed out of these modules so that the right information is displayed to the user? (RQ2)

- How can existing modules be reused and new functionality integrated into existing systems? (RQ3)

2. Prior Works

2.1. Industrial Augmented Reality Use-Cases

2.2. Modular AR Architectures

3. Literature Review

3.1. Materials and Methods

3.2. Results

3.3. Discussion

4. Concept for an IAR Architecture

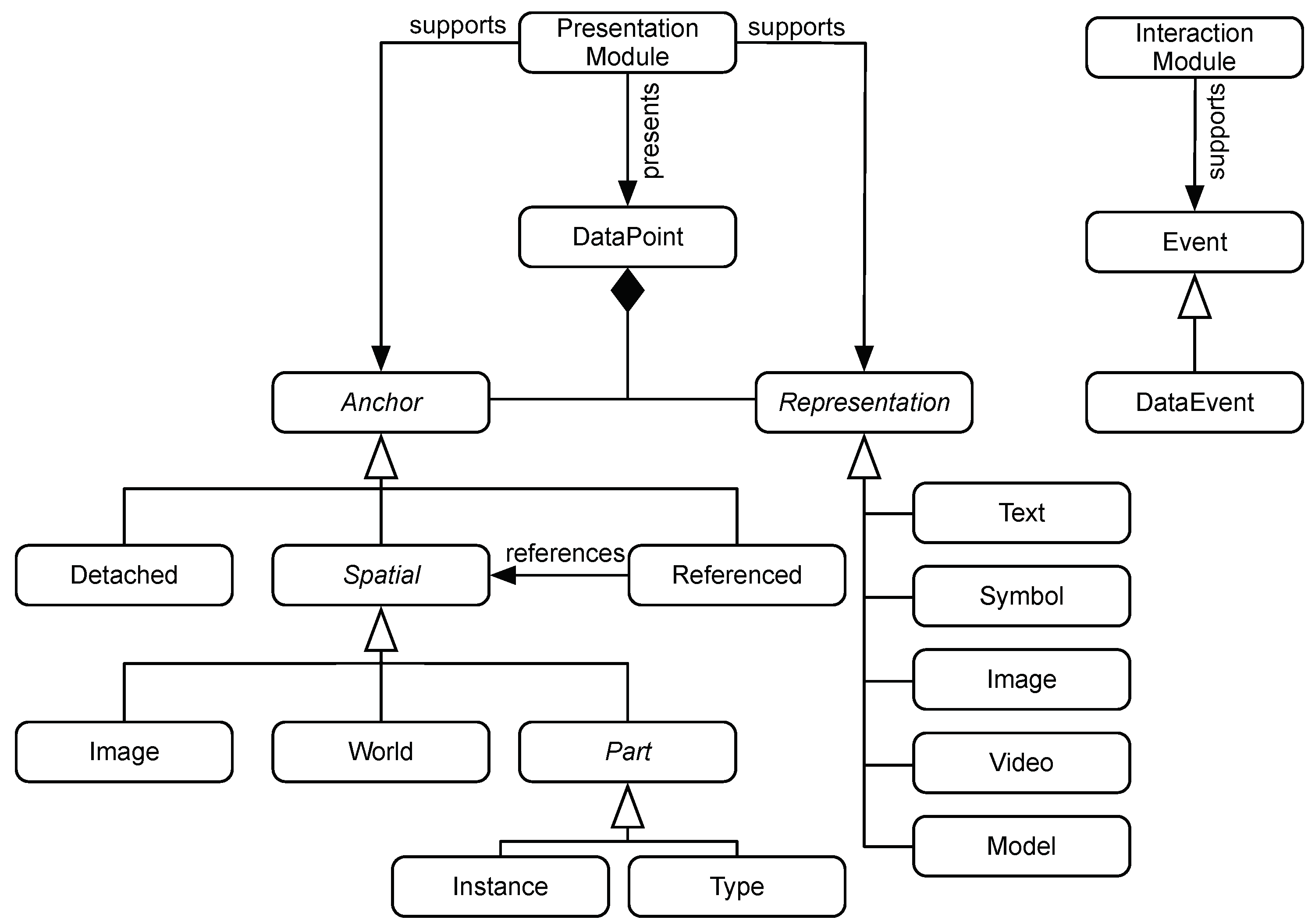

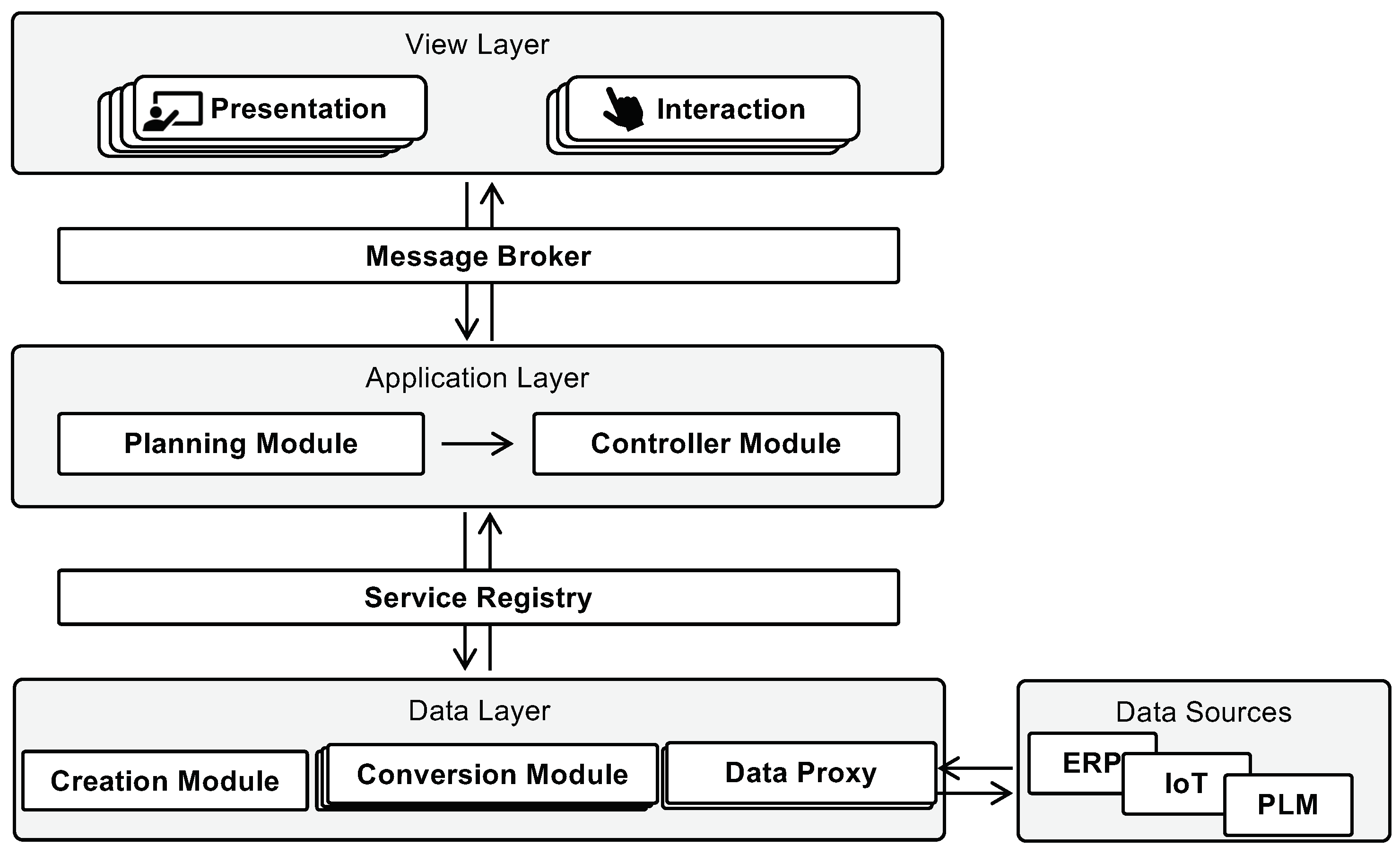

4.1. Modules and Data Model

4.2. Content Preparation

4.3. Application Planning

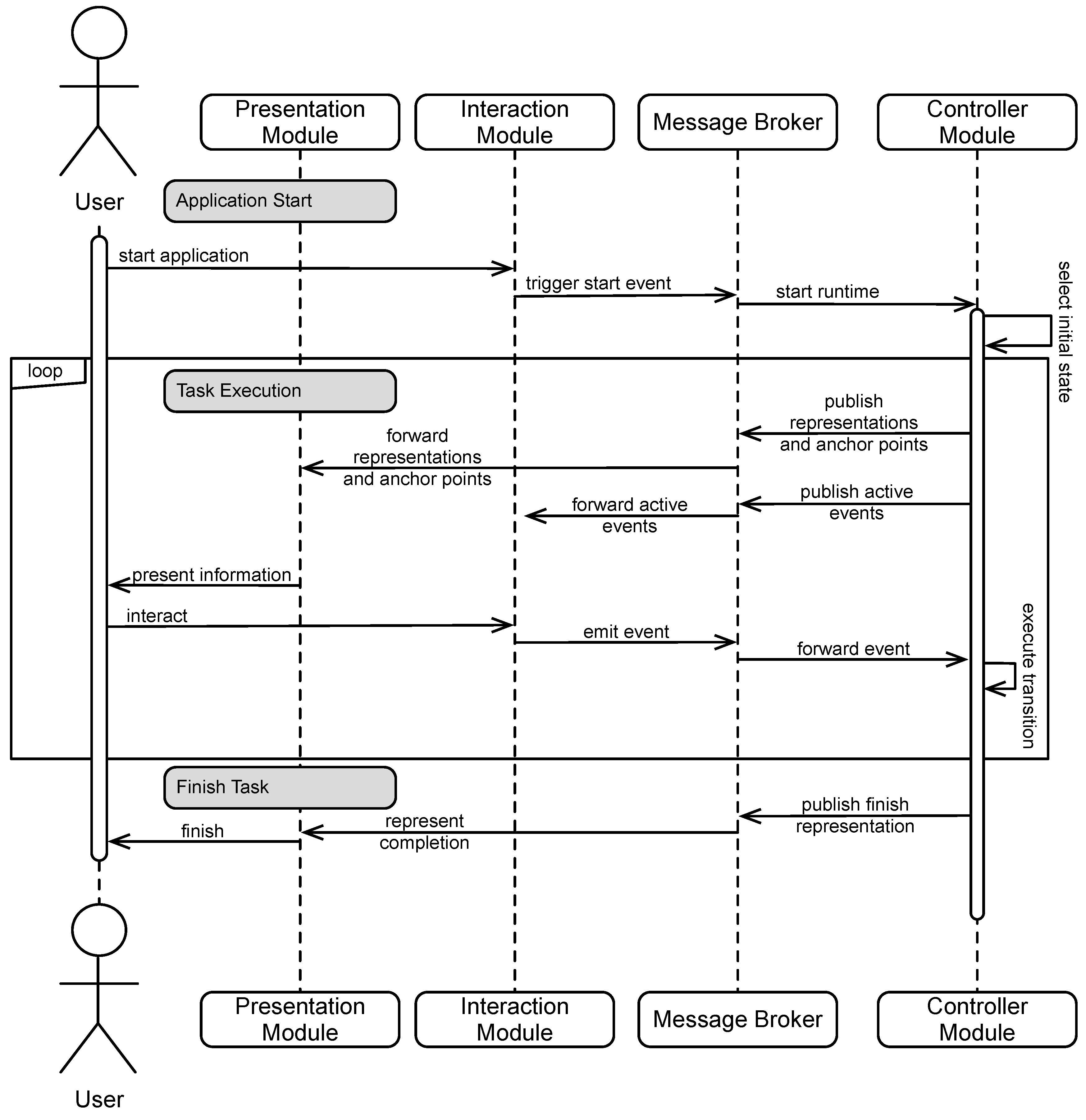

4.4. Task Execution

5. Prototypical Implementation of a Test Case

5.1. Identification of System Module Capabilities

5.2. Prototypical IAR Systems

5.3. Expanding Existing Modules

6. Discussion

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Module | Anchor Types | Representation Types | Used in Example 1 | Used in Example 2 |
|---|---|---|---|---|
| Pick-To-Light | Referenced: Part * | none | • | ◦ |
| Monitor | Detached | Text, Image, Video | • | ◦ |
| Projector | Detached, Referenced: Part * | Text, Image, Video | ◦ | • |
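The capability table above lends itself to a simple lookup when composing an application for a use case (RQ2). The following is a minimal sketch, not the authors' implementation: the module names and their anchor and representation types come from the table, while the `OutputModule` class, the `select_modules` function, the simplified lowercase labels, and the selection rule are hypothetical. The assumed rule is that a module qualifies if it supports the anchor type a step requires and, where one is needed, the representation type.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class OutputModule:
    """Hypothetical capability record for an output module."""
    name: str
    anchor_types: frozenset[str]
    representation_types: frozenset[str]


# Capabilities transcribed from the table; labels are simplified strings.
# Pick-To-Light has no representation type ("none"), so its set is empty.
MODULES = [
    OutputModule("Pick-To-Light", frozenset({"referenced:part"}), frozenset()),
    OutputModule("Monitor", frozenset({"detached"}),
                 frozenset({"text", "image", "video"})),
    OutputModule("Projector", frozenset({"detached", "referenced:part"}),
                 frozenset({"text", "image", "video"})),
]


def select_modules(modules: list[OutputModule], anchor: str,
                   representation: str | None = None) -> list[str]:
    """Return the names of modules that support the required anchor type
    and, if a representation type is given, that representation as well."""
    return [
        m.name
        for m in modules
        if anchor in m.anchor_types
        and (representation is None or representation in m.representation_types)
    ]


if __name__ == "__main__":
    # Highlighting a part only: any module with a part-referenced anchor qualifies.
    print(select_modules(MODULES, "referenced:part"))
    # Showing an image on the part narrows the choice to the projector.
    print(select_modules(MODULES, "referenced:part", "image"))
```

Under these assumptions, highlighting a part yields both the Pick-To-Light and the projector, whereas requiring an image anchored to the part leaves only the projector, because Pick-To-Light offers no representation type.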

| Module | Supported Events | Used in Example 1 | Used in Example 2 |
|---|---|---|---|
| Hardware Button | “next”, “previous” | • | ◦ |
| Virtual Button | “next”, “previous” | ◦ | • |
| Storage Container Recognition | Data Event (Container ID) | ◦ | • |
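The event table suggests that input modules differ in hardware but share the same events, which is what allows, for example, a hardware button in Example 1 to be swapped for a virtual button in Example 2. The sketch below illustrates this idea under stated assumptions and is not the paper's implementation: the `Event`, `Step`, and `TaskSequencer` names are hypothetical, and so is the rule that a container-ID data event advances the task only when it matches the container expected for the current step.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Event:
    source: str                     # producing module, e.g. "Hardware Button"
    kind: str                       # "next", "previous" or "data"
    payload: Optional[str] = None   # e.g. a container ID for data events


@dataclass
class Step:
    instruction: str
    container_id: Optional[str] = None  # hypothetical: container used in this step


class TaskSequencer:
    """Hypothetical step navigator that ignores which module sent an event."""

    def __init__(self, steps: List[Step]):
        self.steps = steps
        self.index = 0

    def handle(self, event: Event) -> int:
        last = len(self.steps) - 1
        if event.kind == "next":
            self.index = min(self.index + 1, last)
        elif event.kind == "previous":
            self.index = max(self.index - 1, 0)
        elif event.kind == "data":
            # Assumption: recognizing the expected container confirms the step.
            expected = self.steps[self.index].container_id
            if expected is not None and event.payload == expected:
                self.index = min(self.index + 1, last)
        return self.index


if __name__ == "__main__":
    seq = TaskSequencer([Step("Pick bolt", "C1"), Step("Pick nut", "C2"), Step("Mount")])
    seq.handle(Event("Hardware Button", "next"))
    seq.handle(Event("Virtual Button", "previous"))
    print(seq.handle(Event("Storage Container Recognition", "data", "C1")))
```

Because the sequencer reacts only to the event kind, a hardware button and a virtual button are interchangeable here, and the container recognition module adds a data-driven way to advance without changing the navigation logic.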

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gerhard, D.; Neges, M.; Siewert, J.L.; Wolf, M. Towards Universal Industrial Augmented Reality: Implementing a Modular IAR System to Support Assembly Processes. Multimodal Technol. Interact. 2023, 7, 65. https://doi.org/10.3390/mti7070065

Gerhard D, Neges M, Siewert JL, Wolf M. Towards Universal Industrial Augmented Reality: Implementing a Modular IAR System to Support Assembly Processes. Multimodal Technologies and Interaction. 2023; 7(7):65. https://doi.org/10.3390/mti7070065

Chicago/Turabian Style: Gerhard, Detlef, Matthias Neges, Jan Luca Siewert, and Mario Wolf. 2023. "Towards Universal Industrial Augmented Reality: Implementing a Modular IAR System to Support Assembly Processes" Multimodal Technologies and Interaction 7, no. 7: 65. https://doi.org/10.3390/mti7070065

APA Style: Gerhard, D., Neges, M., Siewert, J. L., & Wolf, M. (2023). Towards Universal Industrial Augmented Reality: Implementing a Modular IAR System to Support Assembly Processes. Multimodal Technologies and Interaction, 7(7), 65. https://doi.org/10.3390/mti7070065