Abstract

Sign language (SL) avatar systems aid communication between the hearing and deaf communities. Despite technological progress, there is a lack of a standardized avatar development framework. This paper offers a systematic review of SL avatar systems spanning from 1982 to 2022. Using PRISMA guidelines, we shortlisted 47 papers from an initial 1765, focusing on sign synthesis techniques, corpora, design strategies, and facial expression methods. We also discuss both objective and subjective evaluation methodologies. Our findings highlight key trends and suggest new research avenues for improving SL avatars.

1. Introduction

Sign language (SL) is a visual–spatial language with its own syntax and rules, which differ substantially from those of spoken languages. SL is also the main mode of communication for the deaf and hard-of-hearing (D/HH) community around the world. It relies on fingerspelling, hand orientation and movement, facial expressions, and body movements to convey meaning. According to the World Health Organization (WHO), around 432 million adults and 32 million children have hearing problems and need support in living with their condition []. The WHO further estimates that by 2050 approximately 700 million people may have hearing impairments. Sign languages also vary across countries and communities: the World Federation of the Deaf reports that more than 300 sign languages exist worldwide [], each with its own rules and standards for communication. Despite the large number of sign languages and the significant share of the population with hearing impairments, communication systems and technologies that cater to the D/HH community remain scarce. This lack of support creates considerable communication barriers in many aspects of D/HH individuals' day-to-day lives. Most of their interactions rely on sign language interpreters; however, relatively few people work as SL interpreters, which makes the service costly. Some D/HH individuals also choose not to use available interpreters because of privacy concerns, which further limits their access to information, especially in emergencies [,].

One promising way to bridge the communication gap between the hearing and D/HH communities is the development and integration of sign language avatars: computer-generated animations that translate spoken and/or written language into sign language. Most SL avatars simulate a human figure to provide natural, spontaneous interaction for the D/HH community []. These avatars can be integrated into media such as websites, TV channels, and mobile applications. Advances in computer graphics, natural language processing, and machine learning have enabled researchers and developers to build avatars with more realistic and expressive features, allowing more human-like interaction and better capture of the complexities of sign language. As a result, research on sign language avatars has surged, with several research studies and commercial products emerging in this area.

Sign language avatars can help the D/HH community by improving communication and access to information in applications such as healthcare, education, and social settings. In healthcare, SL avatars can provide a communication medium between deaf individuals and healthcare providers, and they can be integrated into online healthcare systems to give seamless access to information about healthcare services []. Remote healthcare services, such as telemedicine consultations, can also be offered to D/HH individuals through SL avatars without the need for an interpreter. In education, SL avatars give D/HH students access to educational content in classrooms and on e-learning platforms, helping them keep pace with their hearing peers; they can also be used for online mentoring sessions. SL avatars can also support social interaction: integrated into public spaces, they allow members of the D/HH community to communicate with hearing people. With SL avatars, D/HH individuals can access public information and services, such as flight times and announcements in airports, emergency alerts, and announcements in other public areas.

Developing sign language avatars remains a complex, ongoing effort because of several open challenges. One of the most significant is accurately capturing the movements of the hands and face []. Sign language relies on both manual (hand) and non-manual (e.g., facial expression and body motion) gestures to express meaning, and SL avatars must represent the linguistic features of these signs accurately and in real time to be practical. Unlike spoken languages, sign languages also have no widely used written form, so representing sign language as input to an SL avatar is itself a challenge. The representation of a sign must retain its linguistic information, movements, and speed, among other variables, so that no semantic information is lost []; a change in the timing, movement, or configuration of any part of a gesture can produce an entirely different meaning []. Another challenge is making avatars realistic and natural-looking. A study on the attitudes of D/HH communities towards SL avatars found that participants preferred the avatar that was most human-like []. Their results showed that the adoption of SL avatars depended not only on fluid signing motion but also on the avatar's appearance. On the other hand, researchers have found that SL avatars falling into the “uncanny valley” are less likely to be accepted []. The uncanny valley refers to the discomfort users feel when an avatar looks almost, but not quite, human. Ref. [] conducted an interdisciplinary workshop to gather different perspectives on the current state of sign language processing. As part of the study, experts were asked about the current state of sign language avatars and the challenges faced in this domain. Unrealistic avatar animation, problems with transitions between signs, and the lack of publicly available large-scale datasets were among the challenges they mentioned. Furthermore, there are no standard evaluation criteria for SL avatars []. This makes evaluation difficult, since different SL avatars cannot be compared on factors such as performance, linguistic legibility, and acceptance.

Many reviews and surveys have addressed sign language processing, but none has examined sign language avatars in depth. A survey by [] systematically reviewed standard and contemporary techniques for sign language machine translation and generation, covering translation models, sign synthesis techniques, and evaluation methods. Another study reviewed the different dimensions of the sign language translation problem [], one of which was avatar technology. Although that review described the avatar generation process and gave background on avatar technology, it did not examine the individual phases of the process or the challenges that arise within them. Ref. [] also touched on the state of avatar technology as part of a systematic review on sign language production. Ref. [] surveyed textual representation techniques for sign language avatars and compared them. Ref. [] surveyed sign language avatars with a focus on the challenges of avatar development and sign synthesis, covering the approaches adopted in the literature to represent signs; however, it did not discuss facial expressions or avatar animation in depth. Ref. [] surveyed facial expressions as part of the sign language animation process and compared state-of-the-art approaches to facial expression generation in sign language projects.

To our knowledge, this is the first comprehensive systematic literature review focused on the techniques and approaches used to develop sign language avatars. It reviews the current state of research on sign language avatars through the following research questions:

- RQ1: What is the bibliographic information of the existing SL avatars?
  - SRQ1a: What are the most frequent keywords used, and what is the related co-occurrence network?
  - SRQ1b: What is the trend in the number of papers published per year?
  - SRQ1c: What are the different publication venues used by the authors?
  - SRQ1d: Which country is the most active in publishing in the area of sign language avatars? What is the collaboration relationship between countries?
  - SRQ1e: Who are the most active authors? What is the trend of collaboration among authors?
  - SRQ1f: What are the most relevant affiliations?
  - SRQ1g: Which sign language is most studied for SL avatars?
- RQ2: What are the technologies used in developing SL avatars?
  - SRQ2a: What are the methods used for SL synthesis?
  - SRQ2b: What are the techniques used to animate SL avatars?
  - SRQ2c: What are the characteristics of SL corpora, and which annotation techniques are used?
  - SRQ2d: What are the techniques used for generating facial expressions?
- RQ3: What are the evaluation methods and metrics used to evaluate SL avatar systems?

The remainder of this paper is organized as follows. Section 2 provides background on sign language avatars. Section 3 describes the research methodology adopted for the review, and Section 4 addresses the primary and secondary research questions. Section 5 discusses the findings of the review, including current trends and limitations. Finally, Section 6 concludes the paper.

2. Background

Sign language avatars are virtual animations designed to represent the gestures and movements used in sign language. They serve as a solution to reduce communication barriers for the D/HH communities. Research in this area has been extensive, focusing on the development process of sign language avatars. Figure 1 provides an overview of the key phases involved in creating a sign language avatar, which include sign language synthesis, sign language database generation, avatar animations, facial expressions, and evaluation.

Figure 1.

Overview of the SL avatar development process.

2.1. Sign Language Synthesis

Sign language synthesis transforms inputs such as text, audio, or motion capture data into sign language that D/HH individuals can understand. The process involves acquiring an input, converting it into a linguistic representation, and visualizing the output through an avatar. Motion capture, which uses sensors to record human signers, is a popular input method: it yields accurate avatar signing but requires significant resources, and the recorded data are difficult to modify []. A major challenge in synthesis is the absence of a standardized textual representation of sign language []. Instead, linguistic representations such as glosses describe signs textually, although capturing their full meaning this way remains difficult [].
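To make the step from text input to a linguistic representation more concrete, the sketch below maps English text to a gloss sequence using a toy lexicon and falls back to fingerspelling for words the lexicon does not contain. The lexicon, the `text_to_glosses` function, and the `fs-` prefix (a convention often used when glossing fingerspelled ASL words) are illustrative assumptions. Word-by-word lookup also ignores sign language grammar and word order, which a real translation component would have to handle.

```python
from typing import Dict, List, Optional

# Toy lexicon (hypothetical): English word -> sign gloss.
# None marks a word that this toy lexicon treats as having no manual sign.
LEXICON: Dict[str, Optional[str]] = {
    "hello": "HELLO",
    "my": "MY",
    "name": "NAME",
    "is": None,
}


def text_to_glosses(sentence: str) -> List[str]:
    """Look each word up in the lexicon; fingerspell words it does not contain."""
    glosses: List[str] = []
    for raw in sentence.split():
        word = raw.strip(".,!?").lower()
        if not word:
            continue
        if word in LEXICON:
            gloss = LEXICON[word]
            if gloss is not None:
                glosses.append(gloss)
        else:
            # Fingerspelling fallback, written with the "fs-" gloss prefix.
            glosses.append("fs-" + word.upper())
    return glosses


print(text_to_glosses("Hello, my name is Anna."))
# prints: ['HELLO', 'MY', 'NAME', 'fs-ANNA']
```

The resulting gloss sequence is the kind of intermediate representation that a notation-based or concatenative back end would then turn into avatar motion.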

Various notation systems, such as Stokoe notation, HamNoSys, SignWriting, and Zebedee, have been developed to describe sign language textually using symbols [,,,]. These notations often have digital, machine-readable forms, such as SWML for SignWriting and SiGML for HamNoSys. For output generation, articulatory synthesis models the physical movements of signing, while concatenative synthesis pieces together segments of pre-recorded signs for a more authentic output [,]. Machine learning is also increasingly used to generate accurate sign language output from these inputs.
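Concatenative synthesis can be illustrated with a small sketch that joins pre-recorded clips, one per gloss, and inserts linearly interpolated transition frames between consecutive signs so the avatar does not jump from one pose to the next. Representing each frame as a flat list of joint values, the `blend` length, and the use of plain linear blending are simplifying assumptions made for illustration; the clip data are hypothetical.

```python
from typing import Dict, List

Frame = List[float]  # one avatar pose as a flat vector of joint values (assumed layout)


def lerp(a: Frame, b: Frame, t: float) -> Frame:
    """Linear interpolation between two poses, 0 <= t <= 1."""
    return [x + (y - x) * t for x, y in zip(a, b)]


def concatenate_signs(clips: Dict[str, List[Frame]], glosses: List[str],
                      blend: int = 3) -> List[Frame]:
    """Concatenative synthesis: append the pre-recorded clip for each gloss,
    inserting `blend` interpolated frames between the last pose of one sign
    and the first pose of the next."""
    output: List[Frame] = []
    for gloss in glosses:
        clip = clips[gloss]  # KeyError if the sign was never recorded
        if output and clip:
            start, end = output[-1], clip[0]
            for i in range(1, blend + 1):
                output.append(lerp(start, end, i / (blend + 1)))
        output.extend(clip)
    return output


# Hypothetical one-joint clips, just to show the structure of the output.
clips = {"HELLO": [[0.0], [1.0]], "NAME": [[5.0], [6.0]]}
print(concatenate_signs(clips, ["HELLO", "NAME"]))
# prints: [[0.0], [1.0], [2.0], [3.0], [4.0], [5.0], [6.0]]
```

Linear blending keeps the sketch short; it also shows why transitions are hard, since a straight-line path between two poses is rarely how a human signer actually moves.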

2.2. Sign Language Databases

Sign language databases are central to the sign language avatar generation process, serving as resources for training, analysis, reference, and evaluation. These databases vary widely in size and content and are annotated using many different methods and tools. Careful annotation makes them valuable for linguistic analysis, as standardized references, and for system development.

The American Sign Language Lexicon Video Dataset (ASLLVD), introduced in 2010, contains over 3000 signs in American Sign Language (ASL) []. It was recorded from multiple camera angles with native ASL signers, and its annotations, produced with SignStream 3, range from gloss labels and time codes to finer details such as handshape labels. To support the annotation process, the Lexicon Viewer and Verification Tool (LVVT) was developed to streamline annotation and reduce inconsistencies.

The RWTH-PHOENIX-Weather corpus is a German Sign Language collection of 190 weather forecasts from German television []. The corpus, which includes multiple signers and a large collection of sentences, was annotated with the ELAN annotation tool, which was used to label glosses, mark time boundaries, and provide translations in written German.
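Annotations of this kind are organized as time-aligned tiers: each tier (for example, glosses or a written translation) holds labeled intervals with start and end boundaries. The sketch below is a simplified in-memory model of that idea, assumed for illustration; it is not ELAN's `.eaf` file format, and the tier names and German values are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Annotation:
    start_ms: int  # time boundary where the annotation begins
    end_ms: int    # time boundary where it ends (exclusive)
    value: str     # a gloss label, a translation, or another tier value


@dataclass
class Tier:
    name: str
    annotations: List[Annotation] = field(default_factory=list)

    def add(self, start_ms: int, end_ms: int, value: str) -> None:
        if end_ms <= start_ms:
            raise ValueError("an annotation must end after it starts")
        self.annotations.append(Annotation(start_ms, end_ms, value))

    def value_at(self, t_ms: int) -> Optional[str]:
        """Return the value of the annotation covering time t_ms, if any."""
        for a in self.annotations:
            if a.start_ms <= t_ms < a.end_ms:
                return a.value
        return None


# Hypothetical two-tier annotation of one short weather-forecast sentence.
glosses = Tier("gloss")
glosses.add(0, 800, "MORGEN")
glosses.add(800, 1500, "REGEN")
translation = Tier("german")
translation.add(0, 1500, "Morgen regnet es.")

print(glosses.value_at(1000), "|", translation.value_at(1000))
# prints: REGEN | Morgen regnet es.
```

Querying all tiers at the same timestamp is what lets a synthesis or evaluation pipeline align a gloss with its translation and with any non-manual tiers.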

The CORSILE corpus focuses on Spanish Sign Language as used by Galician signers in Spain []. The corpus covers various communication genres and was initially annotated manually; as annotation practice evolved, the ELAN tool was adopted for its efficiency. Annotations range from basic glosses to detailed non-manual components such as gaze direction and torso movement.

Together, these databases illustrate the extent and diversity of the resources available for generating sign language avatars. Their differences also underscore the considerable effort and resources needed to curate detailed, rigorously annotated sign language datasets.

2.3. Animating SL Avatars

Creating an animated SL avatar typically involves two main phases: modeling and animation. Modeling covers several parameters, including the geometry and appearance of the model; the model's color, material, and texture are specified in this phase. The geometry can be represented using different techniques, the most common being surface-based representations such as polygonal meshes and parametric equations []. Polygonal meshes are widely used to define 3D geometry []. They consist of interconnected polygons, such as triangles or quadrilaterals, that form a mesh structure, and manipulating the mesh vertices produces the desired deformations and movements. Parametric equations instead use mathematical formulas to describe the avatar's geometry, providing precise control over its shape and appearance.
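The two geometry representations can be connected in a short sketch: a parametric surface (here an ellipsoid, standing in for a head) is sampled on a grid and the samples are joined into a triangle mesh, which is then deformed by moving selected vertices. The ellipsoid, the grid resolution, and the `ellipsoid_mesh` and `displace` helpers are hypothetical choices for illustration, not a method from the reviewed studies.

```python
import math
from typing import Callable, List, Tuple

Vertex = Tuple[float, float, float]
Face = Tuple[int, int, int]  # indices into the vertex list


def ellipsoid_mesh(a: float, b: float, c: float,
                   n_lat: int = 8, n_lon: int = 12) -> Tuple[List[Vertex], List[Face]]:
    """Sample the parametric ellipsoid
        x = a sin(u) cos(v),  y = b sin(u) sin(v),  z = c cos(u)
    on a (u, v) grid and join neighbouring samples into triangles.
    For simplicity the poles are sampled repeatedly, so the triangles
    touching them are degenerate."""
    vertices: List[Vertex] = []
    for i in range(n_lat + 1):
        u = math.pi * i / n_lat            # 0 (top) .. pi (bottom)
        for j in range(n_lon):
            v = 2 * math.pi * j / n_lon    # angle around the vertical axis
            vertices.append((a * math.sin(u) * math.cos(v),
                             b * math.sin(u) * math.sin(v),
                             c * math.cos(u)))
    faces: List[Face] = []
    for i in range(n_lat):
        for j in range(n_lon):
            p = i * n_lon + j
            q = i * n_lon + (j + 1) % n_lon
            r = (i + 1) * n_lon + j
            s = (i + 1) * n_lon + (j + 1) % n_lon
            faces.append((p, r, q))  # two triangles per grid quad
            faces.append((q, r, s))
    return vertices, faces


def displace(vertices: List[Vertex], select: Callable[[Vertex], bool],
             offset: Vertex) -> List[Vertex]:
    """Deform the mesh by moving the selected vertices; faces are unchanged."""
    dx, dy, dz = offset
    return [(x + dx, y + dy, z + dz) if select((x, y, z)) else (x, y, z)
            for (x, y, z) in vertices]


# Hypothetical head-like ellipsoid (metres); stretch its top upward by 2 cm.
verts, faces = ellipsoid_mesh(0.08, 0.10, 0.12)
stretched = displace(verts, lambda vtx: vtx[2] > 0.06, (0.0, 0.0, 0.02))
print(len(verts), "vertices,", len(faces), "triangles")
# prints: 108 vertices, 192 triangles
```

Because the faces only store vertex indices, any deformation is expressed purely as a change to vertex positions, which is what makes meshes convenient to animate.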

In the animation phase, the avatar is manipulated to perform gestures and signs. One common approach for this phase is skeleton-based animation []. In this approach, the avatar is driven by a skeleton composed of “bones”, each representing a segment of the body. Applying transformations to the bones produces complex movements and gestures that closely resemble those of human signers. To give the skeleton-driven model a realistic appearance, rendering techniques generate the final visual output, incorporating lighting, shading, and other visual effects. Keyframe animation is another approach, in which key poses are set at specific times and the frames between them are interpolated to achieve smooth, realistic motion []. Finally, motion capture records the movements of a human signer performing the signs; the captured motion is then mapped onto the avatar's skeleton, resulting in more realistic and sophisticated animation.
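The skeleton-based and keyframe approaches can be combined in a minimal sketch, assuming a planar three-bone arm: joint angles are interpolated between key poses, and forward kinematics applies each bone's rotation on top of its parent's to locate the joints. The bone lengths, key poses, and 2D simplification are hypothetical; real avatars use 3D rotations (often quaternions) and many more bones.

```python
import math
from bisect import bisect_right
from typing import List, Tuple

# Hypothetical planar arm skeleton: upper arm, forearm, hand (lengths in metres).
BONE_LENGTHS = [0.30, 0.25, 0.08]

# Hypothetical keyframes: (time in s, [shoulder, elbow, wrist] angles in radians).
# Each angle is relative to the parent bone, as in a skeleton hierarchy.
KEYFRAMES: List[Tuple[float, List[float]]] = [
    (0.0, [0.0, 0.0, 0.0]),
    (0.5, [math.pi / 4, math.pi / 2, 0.0]),
    (1.0, [math.pi / 2, math.pi / 4, -math.pi / 8]),
]


def pose_at(t: float) -> List[float]:
    """Keyframe animation: linearly interpolate joint angles between key poses."""
    times = [time for time, _ in KEYFRAMES]
    if t <= times[0]:
        return list(KEYFRAMES[0][1])
    if t >= times[-1]:
        return list(KEYFRAMES[-1][1])
    i = bisect_right(times, t)  # index of the next key pose
    (t0, a0), (t1, a1) = KEYFRAMES[i - 1], KEYFRAMES[i]
    w = (t - t0) / (t1 - t0)
    return [x + (y - x) * w for x, y in zip(a0, a1)]


def forward_kinematics(angles: List[float]) -> List[Tuple[float, float]]:
    """Skeleton-based animation: each bone's rotation is applied on top of its
    parent's, giving the 2D position of every joint from the shoulder outward."""
    x = y = heading = 0.0
    joints = [(x, y)]
    for length, angle in zip(BONE_LENGTHS, angles):
        heading += angle
        x += length * math.cos(heading)
        y += length * math.sin(heading)
        joints.append((x, y))
    return joints


for t in (0.0, 0.25, 0.5, 1.0):
    tip_x, tip_y = forward_kinematics(pose_at(t))[-1]
    print(f"t={t:.2f}s  fingertip=({tip_x:.3f}, {tip_y:.3f})")
```

With motion capture, the interpolated key poses would simply be replaced by joint angles recorded from a human signer and retargeted onto the same skeleton.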

2.4. Facial Expressions

Facial expressions are crucial in sign language avatars because they convey linguistic and emotional meaning that manual signs alone cannot. They also make SL avatars more realistic. Facial expressions are linguistically required because some signs have similar hand movements and differ only in the signer's facial expression []. This is even more evident for emotional facial expressions: feelings such as frustration, anger, happiness, and sadness are often expressed through the face, since manual gestures alone cannot carry this meaning.

Facial expressions can also serve as grammatical markers, indicating aspects of sign language sentences such as questions, negation, conditionals, and intensity. A declarative sentence can be turned into a yes/no question through facial expression, and negation can be conveyed by a shake of the head. Similarly, WH questions such as “who”, “what”, and “where” can be marked through facial expressions. Facial expressions in SL avatars also provide additional contextual information; for example, information about the subject or object, such as spatial referencing, can be conveyed effectively.

Despite their importance, facial expressions are often not implemented in the literature because realizing them is complex and laborious []. One challenge is the accuracy and realism of the expressions: the avatar's face and head must be detailed enough to be manipulated into lifelike expressions []. To achieve this, the face is divided into smaller regions, each represented by a set of parameters [], a technique known as facial parameterization. Parameterization is crucial both for extracting data from recordings of human signers and for controlling 3D avatars during animation. It should capture facial expressions, stay synchronized with body movements, and allow co-occurring expressions to be modeled. Facial tracking techniques are used to capture and reproduce facial expressions in SL avatars, which requires capturing all facial features so that complete information is collected. Timing is also crucial: facial expressions must be synchronized with the corresponding hand movements during signing, and they must match the emotional intent of the signer for communication to be effective.
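Parameterization, co-occurrence, and timing can be illustrated together in a small sketch: each non-manual marker is a set of offsets to named facial parameters, markers are scheduled over the time spans of the manual signs, and overlapping markers are summed and clamped. The parameter names, the values, and the additive combination rule are hypothetical; using raised brows for yes/no questions and a head shake for negation follows commonly described ASL conventions and serves only as an example.

```python
from typing import Dict, List, Tuple

FacialParams = Dict[str, float]  # parameter name -> value in [-1, 1]

# Hypothetical non-manual markers expressed as offsets to facial parameters.
MARKERS: Dict[str, FacialParams] = {
    "yes_no_question": {"brow_raise": 0.8},
    "negation": {"head_shake_amplitude": 1.0},
    "happy": {"mouth_corner_up": 0.7, "brow_raise": 0.2},
}

# (start_s, end_s, marker): a schedule aligned with the manual signs' timing.
Schedule = List[Tuple[float, float, str]]


def face_at(t: float, schedule: Schedule) -> FacialParams:
    """Combine every marker active at time t. Co-occurring markers are summed
    per parameter and clamped to [-1, 1] so the face stays in a valid range."""
    face: FacialParams = {}
    for start, end, marker in schedule:
        if start <= t < end:
            for param, value in MARKERS[marker].items():
                face[param] = face.get(param, 0.0) + value
    return {p: max(-1.0, min(1.0, v)) for p, v in face.items()}


def sentence_schedule(gloss_times: List[Tuple[str, float, float]],
                      sentence_marker: str,
                      word_markers: Dict[str, str]) -> Schedule:
    """Span the sentence-level marker over all manual signs, and attach
    word-level markers to the time span of the gloss they belong to."""
    schedule = [(gloss_times[0][1], gloss_times[-1][2], sentence_marker)]
    for gloss, start, end in gloss_times:
        if gloss in word_markers:
            schedule.append((start, end, word_markers[gloss]))
    return schedule


# Hypothetical two-sign yes/no question, e.g. "Are you happy?"
signs = [("YOU", 0.0, 0.4), ("HAPPY", 0.4, 0.9)]
plan = sentence_schedule(signs, "yes_no_question", {"HAPPY": "happy"})
print(face_at(0.2, plan))
print(face_at(0.6, plan))
```

Deriving the schedule from the gloss timings is one simple way to keep the face synchronized with the hands; clamping is a crude stand-in for the more careful blending of co-occurring expressions discussed above.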

2.5. Evaluation of SL Avatars

Evaluating SL avatars requires assessing numerous factors, including technical aspects, user experience, linguistic and cultural aspects, and comparisons between systems. Evaluation identifies the strengths and weaknesses of an avatar, which guides improvements to its performance. These studies also reveal user preferences for particular avatar features, gestures, and interaction styles, which helps improve the acceptance of SL avatars by the D/HH community.

Technical evaluation assesses the performance and compatibility of sign language avatars. One aspect is the accuracy of avatar movements and sign production, that is, how precisely the avatar reproduces sign language gestures and expressions []. Another is the realism and naturalness of the avatar. SL avatars are often criticized for lacking realism and naturalness, and the more realistic an avatar is, the higher its acceptance []. However, care must be taken that a realistic avatar does not fall into the “uncanny valley”, which makes users uneasy []. SL avatars should also be evaluated on responsiveness, their ability to respond to user input in real time []. This indicates how readily an avatar can be integrated into digital services, since real-world applications require fast, synchronized responses.
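Movement accuracy is often quantified by comparing the avatar's joint positions with a reference recording of a human signer. The sketch below computes one such measure, the mean Euclidean distance between corresponding joints; it assumes both sequences are already time-aligned and in the same coordinate frame and units (in practice, an alignment step such as dynamic time warping may be needed first). The metric choice and the sample data are illustrative assumptions, not a standard prescribed by the reviewed studies.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def mean_joint_position_error(avatar: Sequence[Sequence[Point]],
                              reference: Sequence[Sequence[Point]]) -> float:
    """Average Euclidean distance between corresponding joints of the avatar
    and a reference recording. Both are indexed [frame][joint] and are assumed
    to be time-aligned and expressed in the same coordinate frame and units."""
    if len(avatar) != len(reference):
        raise ValueError("sequences must have the same number of frames")
    total, count = 0.0, 0
    for a_frame, r_frame in zip(avatar, reference):
        if len(a_frame) != len(r_frame):
            raise ValueError("frames must have the same number of joints")
        for a, r in zip(a_frame, r_frame):
            total += math.dist(a, r)
            count += 1
    if count == 0:
        raise ValueError("no joints to compare")
    return total / count


# Hypothetical 1-frame, 2-joint example (metres): the second joint is 1 cm off.
avatar_motion = [[(0.0, 0.0, 0.0), (0.30, 0.0, 0.0)]]
mocap_motion = [[(0.0, 0.0, 0.0), (0.30, 0.0, 0.01)]]
print(mean_joint_position_error(avatar_motion, mocap_motion))
```

A low error indicates geometric fidelity only; it says nothing about whether deaf viewers find the signing legible or natural, which is why the subjective evaluations described below remain necessary.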

User experience evaluation assesses the overall user-friendliness and ease of use of SL avatar systems. User satisfaction can be measured through questionnaires, interviews, or focus groups. Usability and efficiency are further user-experience factors: they gauge how clearly the avatar communicates and how easy the system itself is to use []. This evaluation considers factors including signing speed, message clarity, and ease of comprehension. Avatars should be not only linguistically accurate but also culturally appropriate to promote inclusive environments []. Furthermore, since no standards for SL avatars exist [], comparative evaluation can be used to assess the functionality, user experience, and relative strengths and weaknesses of different SL avatar technologies. Evaluation is therefore a vital step toward the real-world adoption of these assistive technologies.
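As one example of a questionnaire-based usability measure, the sketch below scores the System Usability Scale (SUS), a widely used standardized ten-item questionnaire. The text above does not prescribe SUS; it is used here only as a concrete instance, and the participant's answers are hypothetical. Note that administering it to D/HH participants may require presenting the items in sign language.

```python
from typing import Sequence


def sus_score(responses: Sequence[int]) -> float:
    """Score one System Usability Scale questionnaire: 10 items rated 1-5.
    Odd-numbered (positively worded) items contribute response - 1,
    even-numbered (negatively worded) items contribute 5 - response,
    and the 0-40 sum is scaled by 2.5 to a 0-100 score."""
    if len(responses) != 10 or any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("expected 10 responses, each an integer from 1 to 5")
    total = 0
    for item, r in enumerate(responses, start=1):
        total += (r - 1) if item % 2 == 1 else (5 - r)
    return total * 2.5


# Hypothetical answers from one participant after using an SL avatar app.
participant = [4, 2, 5, 1, 4, 2, 4, 2, 5, 1]
print(sus_score(participant))
# prints: 85.0
```

Scores from several participants are typically averaged per system, which also gives a simple basis for the comparative evaluation mentioned above.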

3. Methodology

This paper follows the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines []. PRISMA provides a standard, peer-reviewed checklist for conducting and reporting systematic literature reviews. We implemented the guidelines carefully, documenting each review step: defining the research questions, eligibility criteria, information sources, search strategy, selection process, and quality assessment. As the final step, we use the selected sources to answer and discuss the research questions.

3.1. Eligibility Criteria

The eligibility criteria to determine the inclusion and exclusion of the papers in the initial stage are detailed in Table 1.

Table 1.

Eligibility criteria for papers.

3.2. Information Sources

Six electronic databases were searched for journal articles, conference papers, and theses that met the eligibility criteria. Table 2 provides a list of the databases searched for scholarly sources.

Table 2.

Online databases for scholarly sources.

3.3. Search Strategy

The search strategy involved developing the search string for searching the online databases. Table 3 shows the search string development process. We first documented the list of keywords and terms used in research focused on SL avatars. Our first category contained words related to avatars, whereas the second category included words related to signing.

Table 3.

Search terms identified for strategy.

To develop the search string for the online databases, the two categories were combined using Boolean operators suitable to each database. Table 4 shows the search string combinations used for each database.

Table 4.

Search strings for online databases.

3.4. Selection Process

The first author exported the search results from the online databases to the reference manager Zotero []. In this extraction phase, a total of 1765 papers were retrieved, of which 359 were duplicates and 2 were retracted. The remaining 1608 papers were exported to an Excel file, and the first author screened their titles, abstracts, and keywords against the eligibility criteria. During this step, a further 14 duplicates and 2 retracted papers were found.

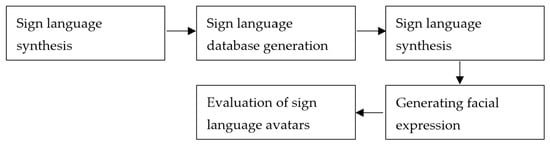

The initial screening retained 330 papers. These papers then underwent full-text screening, in which the first author (Reviewer 1) assessed whether each paper addressed the research questions mentioned in Table 1. Each paper was assigned one of three labels: “include”, “maybe”, or “exclude”. For papers labeled “maybe”, Reviewer 1 and Reviewer 2 (the second author) reached a consensus on whether to include them. A total of 39 papers had no full text available. After full-text screening, 106 papers proceeded to the quality assessment stage. Figure 2 shows the PRISMA flow diagram for the selection process.

Figure 2.

PRISMA flow diagram for selection process.

3.5. Quality Assessment

We based the quality assessment of the retained literature on the study by [] and selected the following evaluation questions as relevant to this review:

- Are the aims and research questions clearly stated and directed towards avatar technology and its production?

- Are all the study questions answered?

- Are the techniques/methodologies used in the study for avatar production fully defined and documented?

- Are the limitations of this study adequately addressed?

- How clear and coherent is the reporting?

- Are the measures used in the study the most relevant ones for answering the research questions?

- How well does the evaluation address its original aims and purpose?

Each paper was scored between 0 and 7, with each quality assessment question worth 1 point: a paper received 1 point if it fully answered a question, 0.5 points if it partially answered it, and 0 points if it did not answer it or answered it inadequately. Papers scoring below the cutoff of 4 points were excluded, leaving 47 papers in the final review.
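The scoring rule above can be expressed as a short function. The answer labels `full`, `partial`, and `none` are hypothetical names for the three grades; the point values, the seven questions, and the cutoff of 4 follow the text.

```python
from typing import Dict, Sequence

# Hypothetical labels for how fully a paper answers each quality question.
POINTS: Dict[str, float] = {"full": 1.0, "partial": 0.5, "none": 0.0}
N_QUESTIONS = 7
CUTOFF = 4.0  # papers scoring below this are excluded


def quality_score(answers: Sequence[str]) -> float:
    """Sum the points for the seven quality assessment questions (0 to 7)."""
    if len(answers) != N_QUESTIONS:
        raise ValueError(f"expected {N_QUESTIONS} answers, got {len(answers)}")
    return sum(POINTS[a] for a in answers)


def is_included(answers: Sequence[str]) -> bool:
    """A paper is kept unless its score falls below the cutoff."""
    return quality_score(answers) >= CUTOFF


paper_a = ["full"] * 3 + ["partial"] * 2 + ["none"] * 2   # 3 + 1.0 = 4.0
paper_b = ["full"] * 3 + ["partial"] + ["none"] * 3        # 3 + 0.5 = 3.5
print(quality_score(paper_a), is_included(paper_a))
print(quality_score(paper_b), is_included(paper_b))
```

A paper scoring exactly 4 is retained, since only scores below the cutoff lead to exclusion.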

4. Results and Discussion

The review of the selected studies from the scholarly sources based on the research questions is discussed further in the following subsections.

4.1. Selected Studies

We selected 47 studies after the quality assessment stage. The final scholarly studies are presented in Table 5.

Table 5.

Selected studies from scholarly sources.

4.2. RQ1: Bibliometric Analysis of the Selected Studies

Table 6 shows the main information for the selected studies.

Table 6.

Main statistical indicators.

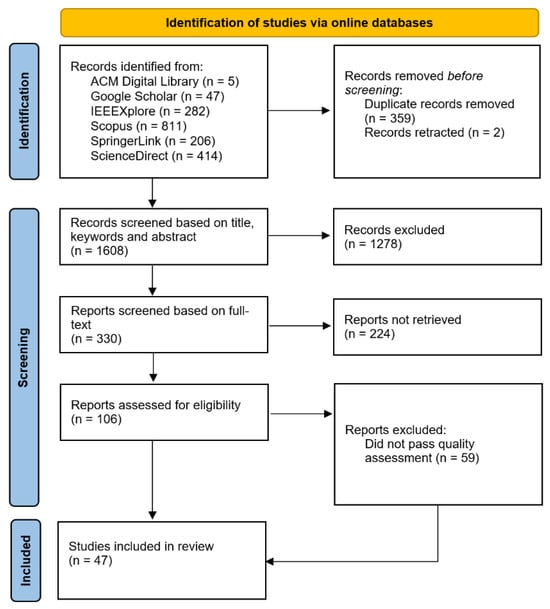

4.2.1. SRQ1a: Keyword Analysis

Keyword analysis was performed with VOSviewer 1.6.19 [], a text-mining tool that constructs co-occurrence networks of keywords. A threshold of two was set, meaning that a keyword had to appear at least twice to be included in the analysis. After manual data collection, 27 of an initial 150 keywords were shortlisted for the final analysis. The resulting co-occurrence network, shown in Figure 3, forms seven clusters with varying degrees of interrelation between keywords. Across these clusters, ‘sign language’ is the most prominent keyword, as indicated by the size of its circle. The clusters reflect the diverse thematic concentrations within sign language avatar research and the multifaceted, interlinked nature of the domain. Table 7 lists the frequencies of the top keywords and their share among the selected studies.
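The thresholding and co-occurrence counting can be sketched as follows, assuming full counting (each keyword counted at most once per paper) and simple case and whitespace normalization. This illustrates the idea only; VOSviewer's own normalization, counting options, and clustering are more elaborate, and the keyword lists are hypothetical.

```python
from collections import Counter
from itertools import combinations
from typing import Dict, List, Tuple


def cooccurrence_network(papers: List[List[str]], min_occurrences: int = 2
                         ) -> Tuple[Dict[str, int], Dict[Tuple[str, str], int]]:
    """Build a keyword co-occurrence network with full counting.

    Each keyword is counted at most once per paper. Keywords occurring in fewer
    than `min_occurrences` papers are dropped; for the rest, each pair appearing
    together in a paper adds 1 to the weight of the link between them."""
    normalised = [sorted({k.strip().lower() for k in kws}) for kws in papers]
    occurrences = Counter(k for kws in normalised for k in kws)
    kept = {k for k, n in occurrences.items() if n >= min_occurrences}
    links: Counter = Counter()
    for kws in normalised:
        present = [k for k in kws if k in kept]
        for pair in combinations(present, 2):  # pairs are in sorted order
            links[pair] += 1
    nodes = {k: occurrences[k] for k in sorted(kept)}
    return nodes, dict(links)


# Hypothetical author keywords for three papers.
papers = [
    ["Sign language", "Avatar", "HamNoSys"],
    ["sign language", "avatar", "motion capture"],
    ["sign language", "machine translation"],
]
nodes, links = cooccurrence_network(papers, min_occurrences=2)
print(nodes)  # {'avatar': 2, 'sign language': 3}
print(links)  # {('avatar', 'sign language'): 2}
```

Node counts correspond to circle sizes in a map such as Figure 3, and link weights to the strength of the lines between keywords.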

Figure 3.

Keyword co-occurrence network.

Table 7.

Most frequent keywords used.

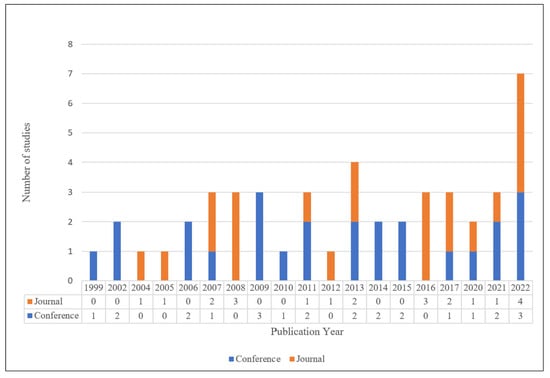

4.2.2. SRQ1b: Trend of Published Papers Per Year

Figure 4 shows the distribution of studies from 1982 to 2022. The total number of papers published in conferences and journals follows an upward trend, peaking in 2022, the year with the most publications in this period. The annual counts vary, and conference papers consistently outnumber journal articles. Four papers were published in 2013; three papers in each of 2007–2009, 2011, 2016–2017, and 2021; two papers in each of 2002, 2006, 2014–2015, and 2020; and one paper in each of 1999, 2004, 2005, 2010, and 2012. No relevant papers were published in 2001, 2003, 2018, or 2019.

Figure 4.

Published sources per year.

Given this distribution and a compound annual growth rate (CAGR) of 3.29%, the field has grown steadily, with a pronounced upswing in 2022. The year-to-year fluctuations may reflect shifting research interest driven by changing trends, technological advances, and evolving research priorities.
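For reference, a compound annual growth rate compares the counts at the two ends of a period, CAGR = (N_last / N_first)^(1 / years) − 1, where `years` is the number of years between them. The sketch below implements this usual definition; the counts in the example are hypothetical and are not taken from Figure 4.

```python
def cagr(first_count: float, last_count: float, years: int) -> float:
    """Compound annual growth rate between two annual counts `years` apart:
    (last / first) ** (1 / years) - 1."""
    if first_count <= 0 or years <= 0:
        raise ValueError("first_count and years must be positive")
    return (last_count / first_count) ** (1 / years) - 1


# Hypothetical counts: 1 paper in the first year, 4 papers 40 years later.
print(f"{cagr(1, 4, 40):.2%}")
# prints: 3.53%
```

Because it uses only the endpoints, the CAGR smooths over the year-to-year fluctuations visible in the distribution and is sensitive to the counts in the first and last years.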

4.2.3. SRQ1c: Publication Venues

Of the 47 papers included in the study, 22 (46.8%) were journal articles and the remaining 25 (53.2%) were conference papers.

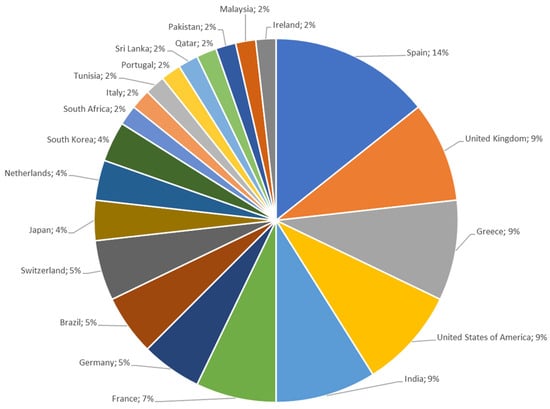

4.2.4. SRQ1d: Country-Wise Analysis

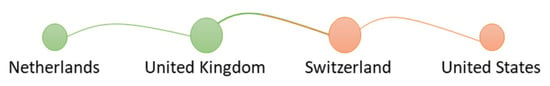

Figure 5 shows the geographical distribution of papers on SL avatars. Spain is the leading hub for this research, accounting for 15% of the analyzed publications. The United Kingdom, Greece, the United States, and India also contribute considerably, with 9% each. Spain's lead, together with notable contributions from other European countries, points to a concentration of SL avatar research in Western countries. This may be due to academic institutions or research groups focusing on sign language technology in these regions. Notably, despite Asia's size and diversity, countries from this region contribute only a fraction of the research output, 11 of the 47 papers. Representation from the Middle East and Africa is even sparser, with only three countries between them. These observations suggest a research gap across different parts of the world, which could reflect limited resources, fewer research initiatives, or different research priorities in these regions. It may also indicate that collaboration, funding, and support for SL avatar research are stronger in Western countries, leading to a higher volume of publications. Figure 6 details the collaboration between countries in this domain. The clusters formed, primarily by Western countries, indicate active knowledge exchange and collaborative research within these regions. The collaboration between the UK, the Netherlands, Switzerland, and the USA may reflect shared research interests and possibly institutional partnerships.

Figure 5.

Distribution of papers across countries.

Figure 6.

Collaboration between the countries.

Overall, the country-wise analysis not only maps the distribution of research output but also points to regions where further research is needed. It also underscores the importance of international collaboration for a more holistic and diverse advancement of the SL avatar domain.

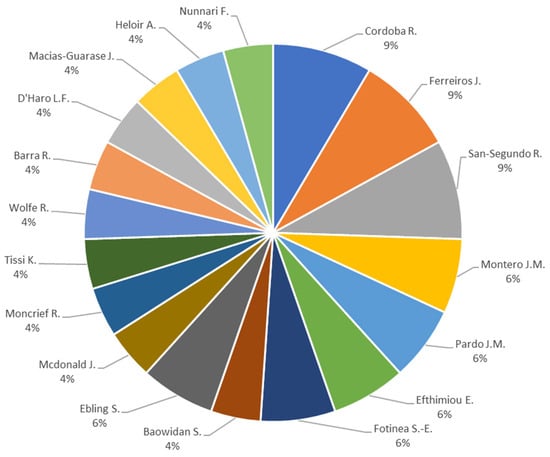

4.2.5. SRQ1e: Author-Wise Analysis

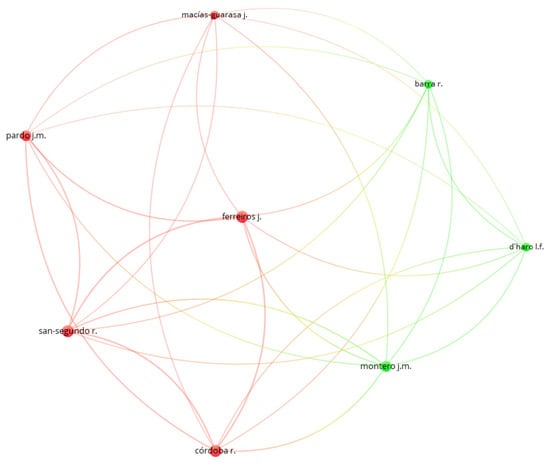

Identifying the major contributors in an academic domain can provide insights into its foundational works, pivotal research areas, and future directions. An author-wise analysis pinpoints these scholars and reveals the relationships and collaborations that may be shaping the field’s discourse. Figure 7 shows the most prolific authors, ranked by the number of their publications in the reviewed literature on SL avatars. Cordoba R., Ferreiros J., and San-Segundo R. lead with four papers each. Other notable contributors include Montero J. M., Pardo J. M., Efthimiou E., Fotinea S.-E., and Ebling S., with three publications each. Figure 8 depicts the co-authorship network among these leading authors. The visualization presents two prominent clusters. The first comprises Cordoba R., Ferreiros J., Macias-Guarasa J., Pardo J. M., and San-Segundo R., signifying close academic ties. The second links Barra R., D’Haro L. F., and Montero J. M. These patterns highlight shared research interests and methodologies and hint at potential centers of academic excellence in the domain of SL avatars.

Figure 7.

Frequency of publication based on authors.

Figure 8.

Co-authorship map for selected studies.

4.2.6. SRQ1f: Relevant Affiliations

Table 8 displays the most active affiliations together with their citation counts. Although our study encompasses 63 distinct affiliations, we highlight only the most prominent ones. The University of East Anglia stands out as the leading affiliation, contributing five papers on SL avatars. Purdue University, Universidad Politécnica de Madrid, the Institute for Language and Speech Processing, the University of Zurich, and DePaul University follow, with two papers each.

Table 8.

Active affiliations.

4.2.7. SRQ1g: Most Studied Sign Language for SL Avatars

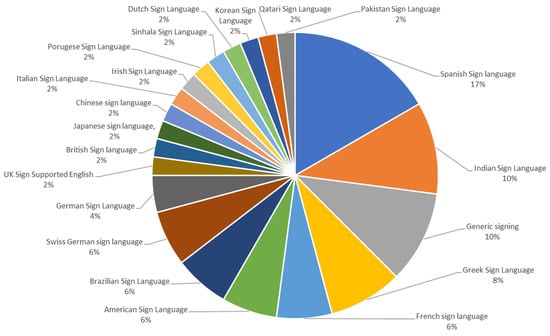

Figure 9 illustrates the distribution of sign languages studied in the published works on SL avatars. The largest share of papers, approximately 17%, focuses on Spanish Sign Language, while Indian Sign Language and generic signing account for approximately 10% each. The term ‘generic signing’ denotes solutions that are adaptable across various sign languages rather than exclusive to a single one. Greek Sign Language ranks third in study frequency, accounting for 8% of the papers. This analysis shows which sign languages prevail in the literature and which require further exploration. Because sign languages vary across regions, this diversity must be taken into account comprehensively.

Figure 9.

Frequency of studied sign languages.

4.3. RQ2: Technologies Used in Development of SL Avatars

4.3.1. SRQ2a: Sign Language Synthesis

Table 9 summarizes the sign language synthesis techniques used in the selected studies. The studies take different approaches to converting inputs into sign language representations, which are then converted into a format suitable for animating the respective SL avatars.
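To make the input-to-representation step concrete, the following is a deliberately simplified sketch of a text-to-gloss-to-animation pipeline. It is not the method of any reviewed study: the stopword list, the lexicon, the clip identifiers, and the letter-by-letter fingerspelling fallback are all hypothetical, and real systems apply sign language grammar rules or statistical/neural translation rather than plain word deletion.

```python
# Toy text -> gloss -> animation-clip pipeline (illustrative only).
# STOPWORDS and LEXICON are hypothetical placeholders.

STOPWORDS = {"the", "a", "an", "is", "are", "to"}

# Hypothetical lexicon: gloss -> identifier of a pre-authored animation clip.
LEXICON = {"HELLO": "clip_hello", "DOCTOR": "clip_doctor", "WHERE": "clip_where"}


def text_to_glosses(sentence: str) -> list[str]:
    """Lowercase, strip punctuation, drop stopwords, and uppercase as glosses."""
    words = [w.strip(".,?!").lower() for w in sentence.split()]
    return [w.upper() for w in words if w and w not in STOPWORDS]


def glosses_to_clips(glosses: list[str]) -> list[str]:
    """Map each gloss to a clip; fingerspell glosses missing from the lexicon."""
    clips = []
    for gloss in glosses:
        if gloss in LEXICON:
            clips.append(LEXICON[gloss])
        else:
            # Fallback: one (hypothetical) fingerspelling clip per letter.
            clips.extend(f"fs_{ch}" for ch in gloss if ch.isalpha())
    return clips


print(text_to_glosses("Where is the doctor?"))
print(glosses_to_clips(["HELLO", "ANA"]))
```

Keeping the lexicon lookup separate from the gloss step mirrors the two-stage structure described above: the gloss sequence is the intermediate representation, and only the second stage depends on the avatar's animation format.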

Table 9.

SL synthesis techniques.

4.3.2. SRQ2b: Sign Language Animation Techniques

The literature used a range of techniques to design and animate SL avatars. For character modeling, [,,] used Autodesk Inc’s Maya software []. Ref. [] employed Poser Animation software [], which facilitated character selection and animation. Ref. [] used 3D Studio Max [] for character modeling. Some papers, such as [,,], used Blender [] for character modeling, rigging, and animation. Ref. [] designed their model using MakeHuman [], which allows features such as gender and age to be customized. The model was then imported into Blender, where a skeleton with over 70 bones was attached for animation. Ref. [] used Daz Studio [] for 3D modeling and then exported their avatars to Blender for adjustments.

Ref. [] used a virtual human named Simon, developed using DirectX technology. Simon is a 3D avatar with texture-mapped skin. Motion capture (mocap) technology was used to record hand, body, and head positions. Other papers that used mocap to animate SL avatars include [,,,,,,]. Refs. [,,] also used MotionBuilder [] for motion capture.

Refs. [,] utilized the H-Anim standard and a polygon-based skeletal structure, animating their models with the keyframe animation method. Ref. [] used the same standard and skeletal structure but combined keyframe animation with inverse kinematics. Ref. [] adopted a skeleton-based animation approach with modifications that enable independent control of wrist orientation and position.
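Keyframe animation stores joint rotations only at selected key times and fills in the frames between them. A minimal sketch, assuming each joint's rotation is stored as a unit quaternion (w, x, y, z) and interpolated with spherical linear interpolation (slerp); the reviewed H-Anim-based systems may use other rotation representations or easing curves, and the function names are illustrative.

```python
import math

Quat = tuple[float, float, float, float]  # (w, x, y, z), unit length


def slerp(q0: Quat, q1: Quat, t: float) -> Quat:
    """Spherical linear interpolation between two unit quaternions."""
    dot = sum(a * b for a, b in zip(q0, q1))
    if dot < 0.0:  # q and -q are the same rotation; take the shorter arc
        q1 = tuple(-c for c in q1)
        dot = -dot
    if dot > 0.9995:  # nearly identical: normalized linear interpolation is stable
        q = [a + t * (b - a) for a, b in zip(q0, q1)]
        n = math.sqrt(sum(c * c for c in q))
        return tuple(c / n for c in q)
    theta0 = math.acos(dot)          # angle between the two quaternions
    theta = theta0 * t
    s1 = math.sin(theta) / math.sin(theta0)
    s0 = math.cos(theta) - dot * s1  # equals sin(theta0 - theta) / sin(theta0)
    return tuple(s0 * a + s1 * b for a, b in zip(q0, q1))


def sample(keys: list[tuple[float, Quat]], t: float) -> Quat:
    """Evaluate a joint's rotation at time t from time-sorted keyframes."""
    if t <= keys[0][0]:
        return keys[0][1]
    if t >= keys[-1][0]:
        return keys[-1][1]
    for (t0, q0), (t1, q1) in zip(keys, keys[1:]):
        if t0 <= t <= t1:
            return slerp(q0, q1, (t - t0) / (t1 - t0))
    raise ValueError("keyframes must be sorted by time")


# Hypothetical wrist track: neutral at 0 s, rotated 90 degrees about z at 1 s.
wrist_keys = [
    (0.0, (1.0, 0.0, 0.0, 0.0)),
    (1.0, (math.cos(math.pi / 4), 0.0, 0.0, math.sin(math.pi / 4))),
]
print(sample(wrist_keys, 0.5))  # approximately a 45-degree rotation about z
```

Interpolating quaternions rather than Euler angles avoids gimbal lock and gives constant angular speed between keys, which is one common reason skeletal animation systems store joint rotations this way.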

Some papers reused existing avatars. For instance, [] used VGuido, an avatar developed as part of the eSIGN project []. The avatar consists of a skeleton that can be manipulated to perform different signs by applying different parameters to its bones. The eSIGN project also includes an eSIGN editor that generates signed animation from SiGML notation. Refs. [,] also used the VGuido avatar. Ref. [] also used the SiGML notation system, converting it into motion data that manipulated the bones of the avatar’s skeleton. Refs. [,,,,,] used the JASigning avatar developed by []. Ref. [] used the EQ4ALL avatar, whose player follows a pipeline of parsing, composition, timing calculation, and rendering; this pipeline increases the range of possible gesture combinations and produces more natural blending between them.
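The SiGML-driven pipelines above can be illustrated with a small sketch covering two of the stages attributed to the EQ4ALL player, parsing and timing calculation. It assumes a simplified SiGML-style fragment in which each `hns_sign` element carries a gloss and a `hamnosys_manual` list of HamNoSys symbol elements; the fragment has not been validated against the SiGML schema, and the durations are hypothetical placeholders rather than values used by eSIGN, JASigning, or EQ4ALL.

```python
import xml.etree.ElementTree as ET

# Illustrative SiGML-style fragment (not validated against the SiGML schema).
SIGML = """
<sigml>
  <hns_sign gloss="HELLO">
    <hamnosys_manual>
      <hamflathand/><hamextfingeru/><hampalml/><hamforehead/><hammover/>
    </hamnosys_manual>
  </hns_sign>
  <hns_sign gloss="YOU">
    <hamnosys_manual>
      <hamfinger2/><hamextfingero/><hampalmd/><hamchest/><hammoveo/>
    </hamnosys_manual>
  </hns_sign>
</sigml>
"""


def parse_signs(sigml: str) -> list[tuple[str, list[str]]]:
    """Parsing stage: return (gloss, [HamNoSys symbol tags]) for each sign."""
    root = ET.fromstring(sigml.strip())
    signs = []
    for sign in root.iter("hns_sign"):
        manual = sign.find("hamnosys_manual")
        symbols = [child.tag for child in manual] if manual is not None else []
        signs.append((sign.get("gloss", ""), symbols))
    return signs


def schedule(signs, base=0.4, per_move=0.2, transition=0.15):
    """Timing stage: assign (gloss, start, end) in seconds.

    All durations are hypothetical: each sign gets a base time plus extra
    time per movement symbol, and consecutive signs are separated by a fixed
    transition gap that a renderer would fill by blending poses.
    """
    timeline, t = [], 0.0
    for gloss, symbols in signs:
        moves = sum(1 for s in symbols if s.startswith("hammove"))
        duration = base + per_move * moves
        timeline.append((gloss, t, t + duration))
        t += duration + transition
    return timeline


print(schedule(parse_signs(SIGML)))
```

A renderer would then use the gaps between consecutive signs to blend from the end pose of one sign into the start pose of the next.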

Scripted engines were another prominent approach to animating SL avatars. Ref. [] designed and animated its SL avatar as a virtual agent controlled by the STEP engine [], a scripting technology. Ref. [] used a scripted animation engine called Maxine []. Maxine provides virtual actors with fully expressive body and facial animation, as well as an emotional state that influences their responses, expressions, and behaviors. Ref. [] used the EMBR character animation engine [], which provided a high degree of animation control and was publicly available. Upper-body control was implemented using IK-based spine controls, allowing for shoulder movements.
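Inverse kinematics (IK), which the EMBR-based study above used for spine control and which one H-Anim study combined with keyframing, computes joint angles that place an end effector at a target. A minimal planar sketch for a two-segment chain (for example, upper arm and forearm) using the law of cosines; real avatar skeletons solve 3D chains with joint limits, so this only illustrates the principle, and the function names are hypothetical.

```python
import math


def two_bone_ik(l1: float, l2: float, x: float, y: float) -> tuple[float, float]:
    """Analytic planar IK for a shoulder-elbow chain (angles in radians).

    Returns (shoulder, elbow) so that the chain's end effector reaches (x, y),
    or the closest reachable point in the same direction if (x, y) is out of reach.
    """
    d = math.hypot(x, y)
    d = max(abs(l1 - l2), min(l1 + l2, d))  # clamp to the reachable annulus
    # Law of cosines gives the elbow bend needed for a chain length of d.
    cos_elbow = (d * d - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    elbow = math.acos(max(-1.0, min(1.0, cos_elbow)))
    # Aim at the target, minus the angle the bent forearm adds.
    shoulder = math.atan2(y, x) - math.atan2(l2 * math.sin(elbow),
                                             l1 + l2 * math.cos(elbow))
    return shoulder, elbow


def forward(l1: float, l2: float, shoulder: float, elbow: float) -> tuple[float, float]:
    """Forward kinematics: end-effector position for the given joint angles."""
    return (l1 * math.cos(shoulder) + l2 * math.cos(shoulder + elbow),
            l1 * math.sin(shoulder) + l2 * math.sin(shoulder + elbow))


s, e = two_bone_ik(0.30, 0.25, 0.35, 0.20)  # segment lengths and target in metres
print(forward(0.30, 0.25, s, e))            # approximately (0.35, 0.20)
```

Checking the solution with forward kinematics, as in the usage line, is a cheap way to confirm that an IK result actually reaches the requested hand position.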

Other techniques for SL avatars include the use of Java 3D models []. Ref. [] employed a data-driven approach to animation, in which signs were stored in a repository and parameterized based on the context provided by the linguistic input. Manual animation is also used where automatic techniques cannot provide a suitable solution. Ref. [] used performance-driven animation and puppeteering, in which performance capture enabled direct control over the avatar’s movements by tracking the user’s hands and face. Ref. [] also used motion capture; the authors optimized motion capture recordings for ASL, used a custom-built Faceware rig for facial capture, and emphasized fluid, natural movements resembling those of native ASL signers. Ref. [] applied a 2D pose estimation model to images of sign language performances to obtain 2D keypoints, which were converted into a 3D pose for a human avatar using separate models for the hands and body; the avatar was then animated in Blender.
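The data-driven approach described above, in which stored signs are parameterized from the linguistic context, can be sketched as template instantiation. The sketch assumes, purely for illustration, an indicating verb whose start and end locations are filled from the loci assigned to its subject and object in signing space; the repository entry, handshape label, and coordinates are hypothetical and do not come from the cited study.

```python
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


@dataclass(frozen=True)
class SignTemplate:
    """A stored sign whose start/end locations are filled in from context."""
    gloss: str
    handshape: str
    start_slot: str  # grammatical role supplying the start locus, e.g. "SUBJECT"
    end_slot: str    # grammatical role supplying the end locus, e.g. "OBJECT"


@dataclass
class SignInstance:
    """A fully parameterized sign ready to be handed to the animation layer."""
    gloss: str
    handshape: str
    start: Vec3
    end: Vec3


# Hypothetical repository entry: an indicating verb moving between loci.
REPOSITORY = {"GIVE": SignTemplate("GIVE", "flat_o", "SUBJECT", "OBJECT")}


def instantiate(gloss: str, roles: dict[str, str], loci: dict[str, Vec3]) -> SignInstance:
    """Resolve template slots: role -> referent -> signing-space locus."""
    tpl = REPOSITORY[gloss]
    return SignInstance(tpl.gloss, tpl.handshape,
                        start=loci[roles[tpl.start_slot]],
                        end=loci[roles[tpl.end_slot]])


# Loci previously assigned to referents in signing space (hypothetical, metres).
loci = {"ME": (0.0, 1.2, 0.1), "YOU": (0.0, 1.2, 0.5), "TEACHER": (0.3, 1.2, 0.4)}
sign = instantiate("GIVE", {"SUBJECT": "ME", "OBJECT": "TEACHER"}, loci)
print(sign.start, sign.end)
```

The same stored entry yields different motion paths for different referents, which is the main storage advantage of parameterizing signs instead of recording every inflected form separately.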

4.3.3. SRQ2c: Sign Language Corpora Characteristics and Annotation Techniques

Most papers developed their own SL corpora because publicly available, comprehensive, and annotated datasets were lacking for their targeted sign language. Earlier papers, such as [,,,], used a sign language lexicon that mostly contained each sign, its equivalent natural language term, and its display time. Ref. [] developed a parallel corpus of Spanish words and their corresponding gesture sequences in Spanish SL. Their corpus contained 135 sentences with more than 258 different words. The sentences were translated into Spanish Sign Language manually, generating more than 270 different gestures. Ref. [] used an SL database containing a closed set of phonological components (handshape, location, movement, and palm orientation), which were combined to generate all possible signs in GSL. The sign database was structured based on the HamNoSys notation system. It also included morphological rules for complex sign formation, allowing new signs or inflected forms to be created. Ref. [] developed a DGS database of high-resolution video footage of a professional interpreter translating written German sentences into DGS.
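The closed set of phonological components described above, combined to generate signs and extended by morphological rules, can be modeled as a small data structure. A minimal sketch, assuming toy inventories of handshapes, locations, movements, and orientations and a hypothetical repetition rule; it does not reproduce the HamNoSys symbol set or the cited GSL database.

```python
from dataclasses import dataclass, replace
from itertools import product

# Hypothetical closed inventories of phonological components.
HANDSHAPES = ("flat", "fist", "index")
LOCATIONS = ("forehead", "chest")
MOVEMENTS = ("up", "circle")
ORIENTATIONS = ("palm_down", "palm_left")


@dataclass(frozen=True)
class Sign:
    handshape: str
    location: str
    movement: str
    orientation: str


def all_signs() -> list[Sign]:
    """Every combination the closed component set can express."""
    return [Sign(*combo)
            for combo in product(HANDSHAPES, LOCATIONS, MOVEMENTS, ORIENTATIONS)]


def repeat_inflection(sign: Sign) -> Sign:
    """Toy morphological rule: derive an inflected form by marking repetition."""
    return replace(sign, movement=sign.movement + "_repeated")


base = Sign("flat", "chest", "circle", "palm_down")
print(len(all_signs()))
print(repeat_inflection(base))
```

Because the inventories are closed, the number of expressible forms is the product of their sizes (3 × 2 × 2 × 2 = 24 here); a real lexicon would keep only the attested combinations.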

Ref. [] developed an LSE database of 416 sentences containing over 650 different words. A professional translator converted the original set into LSE, using more than 320 different signs. In another paper, [] developed an LSE dataset of 707 annotated sentences (glosses and videos), of which 547 were pronounced by government employees and 160 by users. This corpus was expanded to 2124 sentences by adding variants of the Spanish sentences while keeping their LSE translations. Ref. [] created an SL database consisting of sign animations based on an analysis of a LIS dictionary. The database was annotated with information about thematic roles, syntactic number, and the meaning of each lemma.

The SL database constructed for the SignCom project was a specially designed corpus of French Sign Language signs []. The corpus included nouns, depicting and indicating verbs, classifiers, and size and shape specifiers. It was annotated with a unique gloss for each sign type, phonetic descriptions of the signs, and their grammatical classes. The annotations follow a multi-tier template that can be adapted to other sign language corpora and motion databases. Ref. [] developed a relational database with four logical levels: the first level served as a gloss dictionary, and the subsequent levels described the signs in terms of phonetic parameters, phonemes, allophones, and hand orientation and movements. The database could store different dialect variations and mood realizations of the same concept. Ref. [] developed the Sign4PSL database, containing 400 basic PSL signs with manual features only, covering alphabets, numbers, 40 words, and 25 sentences.
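One way to realize a four-level relational database such as the one described above is sketched below with Python's built-in `sqlite3` module. The table and column names, and the mapping of levels to tables (glosses, dialect/mood realizations, phonological parameters, and their allophonic variants), are assumptions for illustration; the cited study's actual schema is not given in the text.

```python
import sqlite3

# Hypothetical four-level schema: gloss -> realization -> phoneme -> allophone.
SCHEMA = """
CREATE TABLE gloss (id INTEGER PRIMARY KEY, gloss TEXT UNIQUE NOT NULL);
CREATE TABLE realization (
    id INTEGER PRIMARY KEY,
    gloss_id INTEGER NOT NULL REFERENCES gloss(id),
    dialect TEXT NOT NULL,
    mood TEXT NOT NULL DEFAULT 'neutral'
);
CREATE TABLE phoneme (
    id INTEGER PRIMARY KEY,
    realization_id INTEGER NOT NULL REFERENCES realization(id),
    parameter TEXT NOT NULL,  -- e.g. 'handshape', 'location', 'movement'
    value TEXT NOT NULL
);
CREATE TABLE allophone (
    id INTEGER PRIMARY KEY,
    phoneme_id INTEGER NOT NULL REFERENCES phoneme(id),
    variant TEXT NOT NULL
);
"""


def build(conn: sqlite3.Connection) -> None:
    """Create the schema and store one gloss with two dialect realizations."""
    conn.executescript(SCHEMA)
    gid = conn.execute("INSERT INTO gloss (gloss) VALUES ('HOUSE')").lastrowid
    for dialect, shape in (("north", "flat"), ("south", "bent")):
        rid = conn.execute(
            "INSERT INTO realization (gloss_id, dialect) VALUES (?, ?)", (gid, dialect)
        ).lastrowid
        pid = conn.execute(
            "INSERT INTO phoneme (realization_id, parameter, value) "
            "VALUES (?, 'handshape', ?)", (rid, shape)
        ).lastrowid
        conn.execute("INSERT INTO allophone (phoneme_id, variant) VALUES (?, ?)",
                     (pid, shape + "_lax"))


def realizations(conn: sqlite3.Connection, gloss: str) -> list[tuple[str, str, str]]:
    """List (dialect, mood, handshape) for every stored realization of a gloss."""
    return conn.execute(
        """SELECT r.dialect, r.mood, p.value
           FROM gloss g
           JOIN realization r ON r.gloss_id = g.id
           JOIN phoneme p ON p.realization_id = r.id
           WHERE g.gloss = ? AND p.parameter = 'handshape'
           ORDER BY r.dialect""",
        (gloss,),
    ).fetchall()


conn = sqlite3.connect(":memory:")
build(conn)
print(realizations(conn, "HOUSE"))
```

Storing dialect and mood at the realization level lets one gloss map to several signed forms without duplicating the dictionary entry.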

The SL database developed by [] for LibrasTV stored visual representations of LIBRAS signs, each associated with a gloss. The dictionary allowed these visual representations to be customized to accommodate regional specificities of the language. An annotation technique, informed by human specialists, was used to define translation rules and morphological-syntactic classifications. Ref. [] developed a gesture dictionary of 472 lemmas, providing vocabulary for Basque-to-LSE translation. Ref. [] structured their SL database as a JSON file mapping glosses to the corresponding actions. The database also incorporated contextual information associated with the glosses to ensure meaningful gestures. Ref. [] used a text file with multiple hand poses for each sign, where each hand pose was defined by X, Y, and Z coordinates for different bones.
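A JSON gloss dictionary with contextual entries, as described above, might look like the following sketch. The JSON layout, the `default` fallback key, and the action names are hypothetical; they only illustrate how context can select among several actions for the same gloss.

```python
import json

# Hypothetical JSON dictionary: gloss -> context -> list of animation actions.
GLOSS_DB = json.loads("""
{
  "BANK":  {"finance": ["bank_money"], "river": ["bank_river"], "default": ["bank_money"]},
  "HELLO": {"default": ["wave_right_hand"]}
}
""")


def actions_for(gloss: str, context: str = "default") -> list[str]:
    """Return the actions for a gloss, preferring the context-specific entry."""
    entry = GLOSS_DB.get(gloss.upper())
    if entry is None:
        raise KeyError(f"gloss not in dictionary: {gloss}")
    # Fall back to the gloss's default actions when the context is unknown.
    return entry.get(context, entry["default"])


print(actions_for("bank", "river"))
print(actions_for("hello", "greeting"))
```

Keeping context as a key inside each gloss entry lets a single lookup disambiguate homonymous glosses without a separate rule engine.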

Ref. [] developed a GSL database from two GSL sources: the NOEMA lexicon database and the lemmatization of the GSL segment of the Dicta-Sign corpus []. Each lemma is annotated with a gloss, GSL videos, HamNoSys annotations, non-manual element coding, Modern Greek equivalents, and morphosyntactic information. The ELAN annotation tool was also commonly used to annotate databases, as in [,]. Ref. [] annotated their ISL database with HamNoSys notations, along with a SiGML representation used as input for the JASigning application. Their database covered categories such as Calendar, Family, Food, and Animals, and contained 16,878 English, 16,821 Hindi, and 16,793 Punjabi samples.
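Because ELAN stores its annotations in the XML-based `.eaf` format, glosses from an annotated tier can be extracted with a standard XML parser. A minimal sketch, assuming a stripped-down `.eaf` fragment with time-aligned annotations only; the tier name and values are hypothetical, and symbolic (`REF_ANNOTATION`) tiers and unaligned time slots are ignored.

```python
import xml.etree.ElementTree as ET

# Minimal ELAN .eaf fragment (real files also carry HEADER, LINGUISTIC_TYPE, etc.).
EAF = """<ANNOTATION_DOCUMENT>
  <TIME_ORDER>
    <TIME_SLOT TIME_SLOT_ID="ts1" TIME_VALUE="0"/>
    <TIME_SLOT TIME_SLOT_ID="ts2" TIME_VALUE="640"/>
    <TIME_SLOT TIME_SLOT_ID="ts3" TIME_VALUE="1120"/>
  </TIME_ORDER>
  <TIER TIER_ID="Gloss_RH" LINGUISTIC_TYPE_REF="gloss">
    <ANNOTATION>
      <ALIGNABLE_ANNOTATION ANNOTATION_ID="a1" TIME_SLOT_REF1="ts1" TIME_SLOT_REF2="ts2">
        <ANNOTATION_VALUE>HELLO</ANNOTATION_VALUE>
      </ALIGNABLE_ANNOTATION>
    </ANNOTATION>
    <ANNOTATION>
      <ALIGNABLE_ANNOTATION ANNOTATION_ID="a2" TIME_SLOT_REF1="ts2" TIME_SLOT_REF2="ts3">
        <ANNOTATION_VALUE>DOCTOR</ANNOTATION_VALUE>
      </ALIGNABLE_ANNOTATION>
    </ANNOTATION>
  </TIER>
</ANNOTATION_DOCUMENT>"""


def read_tier(eaf_xml: str, tier_id: str) -> list[tuple[int, int, str]]:
    """Return (start_ms, end_ms, value) for time-aligned annotations on a tier."""
    root = ET.fromstring(eaf_xml)
    # Map time-slot IDs to milliseconds; unaligned slots have no TIME_VALUE.
    slots = {ts.get("TIME_SLOT_ID"): int(ts.get("TIME_VALUE"))
             for ts in root.iter("TIME_SLOT") if ts.get("TIME_VALUE") is not None}
    out = []
    for tier in root.iter("TIER"):
        if tier.get("TIER_ID") != tier_id:
            continue
        for ann in tier.iter("ALIGNABLE_ANNOTATION"):
            start = slots.get(ann.get("TIME_SLOT_REF1"))
            end = slots.get(ann.get("TIME_SLOT_REF2"))
            if start is None or end is None:
                continue  # skip annotations anchored to unaligned slots
            out.append((start, end, ann.findtext("ANNOTATION_VALUE", "").strip()))
    return out


print(read_tier(EAF, "Gloss_RH"))
```

The resulting (start, end, gloss) triples are the form in which annotated corpora are typically aligned with video frames or motion-capture data.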

An SL database built around an online Indian Sign Language corpus contained 2950 English words represented in HamNoSys notation []. Although intended for general use, the corpus was organized into categories such as alphabets, numbers, weekdays, animals, countries, jobs, and technical, academic, medical, and legal terms, with an admin panel managing the addition, deletion, and modification of entries. Ref. [] also developed an ISL database containing 50 commonly used ISL words and dialogues between different users. The database focused on vocabulary items derived from unique ISL words and included four animation sequences for better comprehension. The ISL database developed by [] consisted of 1300 animations of ISL signs with integrated hand and body poses.

Some databases were created for specific domains. Ref. [] created a 2000-word database specifically for academic use, whereas [] used the ATIS corpus [], an Irish Sign Language database developed for the air travel domain. Ref. [] developed a dataset containing 22,875 distinct KSL instances. The KSL annotation data consisted of 419,688 segmentations with 7949 unique glosses, categorized as weather or emergency announcements. Emergency announcements were further divided into 37 subcategories covering natural disasters, accidents, health, and service disruptions.

4.3.4. SRQ2d: Sign Language Facial Expressions Generation

Different techniques have been used to generate and integrate facial expressions in SL avatars. Mocap technology was commonly used, as seen in [,,,,,]. Another technique relied on face animation parameters: the avatar’s face was modeled as a set of polygons whose features were controlled by manipulating these parameters [,,,]. Scripting engines such as Maxine have also been used to generate facial animations []. Ref. [] used Blender [] to generate facial expressions.
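The face-animation-parameter technique above follows the idea of MPEG-4 facial animation, in which each parameter value is expressed in face-specific units (FAPUs) defined as 1/1024 of a distance measured on the neutral face, such as the mouth-nose separation. A minimal sketch of displacing a single feature point; the neutral measurement, the feature point, and the direction are hypothetical, and a full implementation would also propagate the displacement to the surrounding mesh vertices.

```python
# MPEG-4-style sketch: a parameter value is scaled by a face-specific unit (FAPU),
# each FAPU being 1/1024 of a distance measured on the neutral face.


def fapu(neutral_distance: float) -> float:
    """Convert a neutral-face distance (e.g. mouth-nose separation) into a FAPU."""
    return neutral_distance / 1024.0


def displace(point, direction, fap_value, unit):
    """Move a feature point along a unit direction by fap_value * unit."""
    return tuple(p + fap_value * unit * d for p, d in zip(point, direction))


mns = fapu(30.0)            # hypothetical mouth-nose separation of 30 mm
chin = (0.0, -60.0, 10.0)   # hypothetical neutral chin feature point (mm)
print(displace(chin, (0.0, -1.0, 0.0), 512, mns))  # opens the jaw by 15 mm
```

Expressing values in face-relative units is what lets the same parameter stream drive avatars with differently proportioned faces.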

Another technique defined a set of deformations of the avatar’s face, called morphs []. Each expression was then specified in SiGML as a mixture of the avatar’s morphs, together with a temporal profile giving the onset, hold, and release times of each facial movement. Refs. [,,] also used SiGML to represent non-manual components, including facial expressions. Ref. [] used HamNoSys notation together with internally established code numbers to represent non-manual features such as eye gaze and torso turning. This notation was also utilized by [,].
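The morph-based approach above combines a static mixture of morphs with a temporal profile. A minimal sketch, assuming linear ramps for onset and release and hypothetical morph targets stored as per-vertex displacements; the interpolation curves used by actual SiGML-driven players may differ.

```python
def envelope(t: float, start: float, onset: float, hold: float, release: float) -> float:
    """Piecewise-linear weight: ramp up over onset, hold at 1, ramp down over release."""
    if t < start:
        return 0.0
    t -= start
    if t < onset:
        return t / onset
    t -= onset
    if t < hold:
        return 1.0
    t -= hold
    if t < release:
        return 1.0 - t / release
    return 0.0


def blend(base, morph_deltas, weights):
    """Vertex positions = base + sum_i w_i * delta_i (per vertex, per axis)."""
    out = []
    for v, vertex in enumerate(base):
        out.append(tuple(
            c + sum(w * deltas[v][axis] for w, deltas in zip(weights, morph_deltas))
            for axis, c in enumerate(vertex)))
    return out


# Hypothetical two-vertex brow region and two morph targets.
base = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
brow_up = [(0.0, 1.0, 0.0), (0.0, 1.0, 0.0)]
eyes_wide = [(0.0, 0.0, 0.5), (0.0, 0.0, 0.5)]
mix = (0.8, 0.3)  # expression = 0.8 * brow_up + 0.3 * eyes_wide
profile = dict(start=0.1, onset=0.2, hold=0.5, release=0.3)


def expression_at(t: float):
    """Scale the static morph mixture by the temporal envelope at time t."""
    e = envelope(t, **profile)
    return blend(base, [brow_up, eyes_wide], [m * e for m in mix])


print(expression_at(0.35))  # during the hold phase: full mixture applied
```

Separating the mixture (which morphs, how strongly) from the envelope (when) means one expression definition can be reused with different timings across signs.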

Ref. [] employed procedural animation routines that executed animation functions for facial expressions, head nods, and gaze. Ref. [] utilized the viseme generation capabilities of the OpenMARY speech synthesis system [] to animate mouth movements. Ref. [] generated facial expressions by defining bone movements for facial elements in the 3D avatar. Facial movements were then controlled by setting parameters of location and rotation for each bone.
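The viseme-based mouth animation above can be sketched as a mapping from timed phonemes to mouth shapes. The sketch assumes the speech synthesizer supplies each phoneme with start and end times (how these are obtained from OpenMARY is not shown), and the small phoneme-to-viseme table is hypothetical; practical tables are language-specific and cover the synthesizer's full phoneme set.

```python
# Hypothetical many-to-one phoneme -> viseme table; unknown phonemes map to "neutral".
VISEMES = {
    "p": "lips_closed", "b": "lips_closed", "m": "lips_closed",
    "f": "lip_teeth", "v": "lip_teeth",
    "a": "open", "o": "round", "u": "round", "i": "spread", "e": "spread",
}

Segment = tuple[str, float, float]  # (label, start_s, end_s)


def to_visemes(phonemes: list[Segment]) -> list[Segment]:
    """Map timed phonemes to visemes, merging adjacent segments with the same viseme."""
    track: list[Segment] = []
    for phoneme, start, end in phonemes:
        viseme = VISEMES.get(phoneme, "neutral")
        if track and track[-1][0] == viseme and track[-1][2] == start:
            track[-1] = (viseme, track[-1][1], end)  # extend the previous segment
        else:
            track.append((viseme, start, end))
    return track


# "ambo": the bilabials m and b collapse into one closed-lips segment.
print(to_visemes([("a", 0.0, 0.1), ("m", 0.1, 0.18), ("b", 0.18, 0.25), ("o", 0.25, 0.4)]))
```

Merging consecutive identical visemes avoids redundant keyframes; a renderer would typically also smooth transitions between segments (coarticulation), which this sketch omits.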

Ref. [] generated facial expressions using the physics-based muscle model proposed by Waters. This approach employs parametric muscle models based on human facial anatomy, specifically linear and sphincter muscles, to emulate the effect of muscle contraction on the skin. The muscles were placed in anatomically correct positions using MPEG-4 Feature Points, and each muscle was defined by key nodes, an area of influence that deforms a portion of the skin upon contraction, and a deformation formula for the influenced vertices. This enabled automatic and realistic facial animation.
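The linear-muscle idea above can be sketched as a displacement applied to each skin vertex inside a cone-shaped zone of influence. This is a simplified sketch in the spirit of Waters' linear muscle, using the commonly cited cosine falloffs for angle and distance; the cited study's exact deformation formula, its sphincter muscles, and the MPEG-4 feature-point placement are not reproduced, and all parameter values are hypothetical.

```python
import math

Vec = tuple[float, float, float]


def _sub(a: Vec, b: Vec) -> Vec:
    return tuple(x - y for x, y in zip(a, b))


def _norm(a: Vec) -> float:
    return math.sqrt(sum(x * x for x in a))


def linear_muscle(p: Vec, head: Vec, tail: Vec, k: float,
                  omega: float, rs: float, rf: float) -> Vec:
    """Displace skin vertex p under a contracting linear muscle.

    head: bony attachment; tail: skin insertion; k: contraction amount;
    omega: half-angle of the zone of influence (radians); rs: distance of
    peak influence; rf: distance beyond which there is no influence.
    """
    to_p = _sub(p, head)
    dist = _norm(to_p)
    if dist == 0.0 or dist > rf:
        return p
    axis = _sub(tail, head)
    cos_mu = sum(a * b for a, b in zip(to_p, axis)) / (dist * _norm(axis))
    mu = math.acos(max(-1.0, min(1.0, cos_mu)))
    if mu > omega:
        return p                   # outside the angular zone of influence
    angular = math.cos(mu)         # weaker away from the muscle axis
    if dist <= rs:                 # grows from 0 at the head to 1 at rs
        radial = math.cos((1.0 - dist / rs) * math.pi / 2)
    else:                          # decays from 1 at rs to 0 at rf
        radial = math.cos((dist - rs) / (rf - rs) * math.pi / 2)
    # Pull the vertex toward the bony attachment by k * angular * radial.
    scale = k * angular * radial / dist
    return tuple(pi - scale * d for pi, d in zip(p, to_p))


moved = linear_muscle((5.0, 0.0, 0.0), (0.0, 0.0, 0.0), (10.0, 0.0, 0.0),
                      k=1.0, omega=math.pi / 4, rs=5.0, rf=10.0)
print(moved)  # pulled 1 unit toward the attachment
```

Because each vertex is moved independently from a few muscle parameters, a handful of contraction values is enough to animate an entire facial region.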

Ref. [] used Organic Motion System software to capture facial expressions and then applied morphing techniques to modify the avatar’s facial expressions. When an emotion was indicated, the animation speed changed accordingly and the facial expressions were adjusted using morphing. The technique by [] combined a Kinect camera with FaceShift software: the Kinect captured 3D facial data, and FaceShift reconstructed and animated the face based on the user’s real-time expressions. Ref. [] used a custom-built Faceware Pro HD Mark 3.2 Headcam rig that captured high-fidelity facial expressions. The rig extended over the front of the user’s face, allowing signs produced near the head and face to be captured while preserving realistic facial expressions on the SL avatar.

4.4. RQ3: Evaluation Methods and Metrics of SL Avatars

The evaluations of SL avatar systems used diverse methodologies to understand their usability, effectiveness, and user satisfaction. Qualitative evaluations commonly assessed system usability and acceptability through questionnaires [,,,], focus groups [,], and participant feedback [,,,,,]. For instance, [] engaged deaf participants in focus groups, gathering insights on avatar attributes such as eye gaze, color choice, and transitions. Preliminary observations, usability expert input, and Wizard-of-Oz experiments also contributed to system assessment in studies by [,], and [], respectively. Ref. [] conducted an online survey among the French deaf community, focusing on avatar actions and the significance of facial expressions.

The system evaluation by [] assessed the translation and animation modules separately. For translation, a human interpreter evaluated the accuracy of the translated sentences, 90.7% of which were judged correct. The animation engine was evaluated by gathering feedback from D/HH individuals, LSE interpreters, and bilingual individuals. This feedback focused on smooth movement transitions, enhanced expression of emotion, facial features and body gestures, and improving the naturalness of hand-modeled signs. Ref. [] administered a post-assessment survey that measured the impact of using VR for QSL education. The survey also collected feedback on the feasibility of the VR solution and evaluated the quality of the hand, body, and facial expressions.

Other studies took a more quantitative approach, evaluating model performance with metrics such as accuracy [,,,,,], Absolute Category Rating [], mean per joint position error [], and Percentage of Correct Keypoints []. Specifically, papers measured accuracy using Word Error Rate [,,,], Sign Error Rate [,,,], inter-rater reliability [], and BiLingual Evaluation Understudy (BLEU) [,,,]. Ref. [] assessed both translation accuracy and sign synthesis, reporting favorable results for both. Additionally, the facial animation evaluation in [] used feature points to assess how accurately facial muscle movements were simulated.
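The objective metrics listed above have standard definitions that can be stated compactly. A minimal sketch of Word/Sign Error Rate (token-level edit distance divided by reference length), mean per joint position error (MPJPE), and Percentage of Correct Keypoints (PCK); the example tokens and joint positions are hypothetical, BLEU and inter-rater reliability are omitted, and individual papers may differ in details such as root alignment before MPJPE or how the PCK threshold is normalized.

```python
import math


def edit_distance(ref: list[str], hyp: list[str]) -> int:
    """Levenshtein distance over tokens (substitutions, insertions, deletions)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, 1):
        cur = [i]
        for j, h in enumerate(hyp, 1):
            cur.append(min(prev[j] + 1,               # deletion
                           cur[j - 1] + 1,            # insertion
                           prev[j - 1] + (r != h)))   # substitution or match
        prev = cur
    return prev[-1]


def error_rate(ref: list[str], hyp: list[str]) -> float:
    """WER over words or SER over sign glosses: edits / reference length."""
    if not ref:
        raise ValueError("reference must not be empty")
    return edit_distance(ref, hyp) / len(ref)


def mpjpe(pred, gt) -> float:
    """Mean per joint position error: mean Euclidean distance over joints."""
    return sum(math.dist(p, g) for p, g in zip(pred, gt)) / len(gt)


def pck(pred, gt, threshold: float) -> float:
    """Percentage of correct keypoints: share of joints within `threshold`."""
    hits = sum(math.dist(p, g) <= threshold for p, g in zip(pred, gt))
    return 100.0 * hits / len(gt)


ref = "HELLO DOCTOR WHERE".split()
hyp = "HELLO WHERE".split()
print(error_rate(ref, hyp))  # one deletion over three reference glosses

gt = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
pred = [(0.0, 0.0, 0.0), (1.0, 0.3, 0.4)]
print(mpjpe(pred, gt), pck(pred, gt, threshold=0.1))
```

WER and SER compare token sequences and so evaluate translation, whereas MPJPE and PCK compare predicted and reference joint positions and so evaluate pose generation; the two families are not interchangeable.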

Ref. [] evaluated the Simon avatar by its mean error rate, using test files of random sign sequences with pauses between signs so that a professional sign reader could interpret each signed word. Similarly, [] showed fifty synthetic signs produced by their proposed system to nine fluent signers. In total, 13% of the signs were misunderstood, mainly due to the low quality of the avatar’s graphics and facial animation. Ref. [] evaluated their SL avatar system using an isolated sign intelligibility test (ISIT) to assess the foundation of their animation strategy. This test compared the intelligibility of isolated signs produced by the avatar with that of signs produced by real interpreters.

The evaluation process also involved direct engagement with deaf participants and sign language experts. Refs. [,,,] sought continuous feedback to enhance system usability and effectiveness. In [,], surveys and questionnaires gathered participants’ views on factors such as speech recognition and sign naturalness. Furthermore, [] conducted co-design sessions with participants to refine signing and animation aspects. They also held a 3 h focus group session with diverse participants, who discussed eight predetermined topics, compared three sign or phrase variants for each, and addressed aspects such as time punctuation, subtitles, mouthing, and vocabulary.

Mixed-method approaches, combining quantitative and qualitative aspects, were also adopted. TESSA’s evaluation involved questionnaires, open platforms for feedback, and quantitative measures such as accuracy and acceptability []. Ref. [] employed Likert scales, cognitive workload indexes, and open-ended interviews to comprehensively understand user experiences and preferences. Ref. [] evaluated the comprehensibility of their SL avatar system in a user study using both objective and subjective measures.

5. Discussion

The findings of this systematic literature review underline several key aspects and developments in the domain of sign language avatars. Most notably, the upward trend in publications highlights the growing importance of this research area. The geographical distribution of these studies reveals a disparity, with Western regions overshadowing Eastern ones, which suggests that cultural or resource disparities may be influencing the research trajectory. Across the studies, sign notation systems, especially HamNoSys, stood out as the preferred choice, which may reflect their perceived reliability and comprehensiveness. The prevalence of mocap technology suggests that it is effective at capturing intricate facial expressions. However, the absence of a uniform evaluation framework points to the need for standardization in assessing SL avatars, presenting a potential direction for future studies.

6. Conclusions

This paper provides a comprehensive overview of the field of SL avatars through a systematic literature review. The 47 pertinent journal and conference publications included cover a wide range of methodologies and technological advancements. The primary objective was to shed light on the current state of this research domain and the challenges it faces. We believe that this review, to our knowledge the first of its kind, serves as a foundation for researchers and industry practitioners looking to delve into the realm of SL avatars.

7. Future Directions

Our systematic literature review on sign language avatars shows substantial growth in this domain. Yet a geographical disparity in research contributions remains, which underscores potential gaps and avenues for further exploration. Another pivotal takeaway is the need for standardization in evaluating SL avatars, a challenge that the research community must address. Looking ahead, future research can consider several avenues. Machine learning (ML) and artificial intelligence (AI) strategies could substantially advance sign synthesis processes. There is also a clear need for publicly available, annotated datasets, which could significantly propel advancements in this domain. Moreover, developing a multi-language sign language system holds promise: such a system could bridge linguistic gaps, fostering better communication among deaf communities worldwide and enabling richer cross-cultural academic discourse.

Author Contributions

Conceptualization, Writing—Review & Editing, Writing—Original Draft, M.A. and A.O. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The study did not report any data.

Conflicts of Interest

The authors declare no conflict of interest.

References

- World Health Organization. Deafness and Hearing Loss. 27 February 2023. Available online: https://www.who.int/news-room/fact-sheets/detail/deafness-and-hearing-loss (accessed on 28 May 2023).

- United Nations. International Day of Sign Languages. Available online: https://www.un.org/en/observances/sign-languages-day (accessed on 28 May 2023).

- Yet, A.X.J.; Hapuhinne, V.; Eu, W.; Chong, E.Y.C.; Palanisamy, U.D. Communication methods between physicians and Deaf patients: A scoping review. Patient Educ. Couns. 2022, 105, 2841–2849.

- Quandt, L.C.; Willis, A.; Schwenk, M.; Weeks, K.; Ferster, R. Attitudes toward Signing Avatars Vary Depending on Hearing Status, Age of Signed Language Acquisition, and Avatar Type. Front. Psychol. 2022, 13, 254.

- Naert, L.; Larboulette, C.; Gibet, S. A survey on the animation of signing avatars: From sign representation to utterance synthesis. Comput. Graph. 2020, 92, 76–98.

- Prinetto, P.; Tiotto, G.; Del Principe, A. Designing health care applications for the deaf. In Proceedings of the 2009 3rd International Conference on Pervasive Computing Technologies for Healthcare, London, UK, 1–3 April 2009; pp. 1–2.

- Wolfe, R.; McDonald, J.C.; Efthimiou, E.; Fotinea, E.; Picron, F.; van Landuyt, D.; Sioen, T.; Braffort, A.; Filhol, M.; Ebling, S.; et al. The myth of signing avatars. In Proceedings of the 1st International Workshop on Automatic Translation for Signed and Spoken Languages, Virtual, 20 August 2021; Available online: https://www.academia.edu/download/76354340/2021.mtsummit-at4ssl.4.pdf (accessed on 1 June 2023).

- Wolfe, R. Special issue: Sign language translation and avatar technology. Mach. Transl. 2021, 35, 301–304.

- Mori, M.; MacDorman, K.F.; Kageki, N. The uncanny valley. IEEE Robot. Autom. Mag. 2012, 19, 98–100.

- Bragg, D.; Koller, O.; Bellard, M.; Berke, L.; Boudreault, P.; Braffort, A.; Caselli, N.; Huenerfauth, M.; Kacorri, H.; Verhoef, T.; et al. Sign language recognition, generation, and translation: An interdisciplinary perspective. In Proceedings of the 21st International ACM SIGACCESS Conference on Computers and Accessibility, Pittsburgh, PA, USA, 28–30 October 2019; Association for Computing Machinery, Inc.: New York, NY, USA, 2019; pp. 16–31.

- Kipp, M.; Heloir, A.; Nguyen, Q. Sign language avatars: Animation and comprehensibility. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Berlin/Heidelberg, Germany, 2011; Volume 6895, pp. 113–126.

- Kahlon, N.K.; Singh, W. Machine translation from text to sign language: A systematic review. Univers. Access Inf. Soc. 2021, 22, 1–35.

- Farooq, U.; Rahim, M.S.M.; Sabir, N.; Hussain, A.; Abid, A. Advances in machine translation for sign language: Approaches, limitations, and challenges. Neural Comput. Appl. 2021, 33, 14357–14399.

- Rastgoo, R.; Kiani, K.; Escalera, S.; Sabokrou, M. Sign language production: A review. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 3451–3461. Available online: https://openaccess.thecvf.com/content/CVPR2021W/ChaLearn/html/Rastgoo_Sign_Language_Production_A_Review_CVPRW_2021_paper.html (accessed on 31 May 2023).

- Wolfe, R.; McDonald, J.C.; Hanke, T.; Ebling, S.; Van Landuyt, D.; Picron, F.; Krausneker, V.; Efthimiou, E.; Fotinea, E.; Braffort, A. Sign Language Avatars: A Question of Representation. Information 2022, 13, 206.

- Kacorri, H. TR-2015001: A Survey and Critique of Facial Expression Synthesis in Sign Language Animation. January 2015. Available online: https://academicworks.cuny.edu/gc_cs_tr/403 (accessed on 1 June 2023).

- Heloir, A.; Gibet, S.; Multon, F.; Courty, N. Captured motion data processing for real time synthesis of sign language. In Proceedings of the Gesture in Human-Computer Interaction and Simulation: 6th International Gesture Workshop, Berder Island, France, 18–20 May 2005; Springer: Berlin/Heidelberg, Germany, 2006; pp. 168–171.

- Porta, J.; López-Colino, F.; Tejedor, J.; Colás, J. A rule-based translation from written Spanish to Spanish Sign Language glosses. Comput. Speech Lang. 2014, 28, 788–811.

- Arvanitis, N.; Constantinopoulos, C.; Kosmopoulos, D. Translation of sign language glosses to text using sequence-to-sequence attention models. In Proceedings of the 15th International Conference on Signal Image Technology and Internet Based Systems, SITIS 2019, Sorrento, Italy, 26–29 November 2019; pp. 296–302.

- McCarty, A.L. Notation Systems for Reading and Writing Sign Language. Anal. Verbal Behav. 2004, 20, 129–134.

- Bouzid, Y.; Jemni, M. An avatar based approach for automatically interpreting a sign language notation. In Proceedings of the 2013 IEEE 13th International Conference on Advanced Learning Technologies, ICALT 2013, Beijing, China, 15–18 July 2013; pp. 92–94.

- Kennaway, J.R.; Glauert, J.R.; Zwitserlood, I. Providing signed content on the Internet by synthesized animation. ACM Trans. Comput.-Hum. Interact. 2007, 14, 15.

- Filhol, M. Zebedee: A Lexical Description Model for Sign Language Synthesis. Internal, LIMSI. 2009. Available online: https://perso.limsi.fr/filhol/research/files/Filhol-2009-Zebedee.pdf (accessed on 7 June 2023).

- Havasi, L.; Szabó, H.M. A motion capture system for sign language synthesis: Overview and related issues. In Proceedings of the EUROCON 2005—The International Conference on Computer as a Tool, Belgrade, Serbia, 21–24 November 2005; pp. 445–448.

- Grieve-Smith, A.B. SignSynth: A sign language synthesis application using Web3D and perl. In Gesture and Sign Language in Human-Computer Interaction: International Gesture Workshop; Springer: Berlin/Heidelberg, Germany, 2002; pp. 134–145.

- Neidle, C.; Thangali, A.; Sclaroff, S. Challenges in development of the American Sign Language Lexicon Video Dataset (ASLLVD) corpus. In Proceedings of the 5th Workshop on the Representation and Processing of Sign Languages: Interactions between Corpus and Lexicon (LREC), Istanbul, Turkey, 21–27 May 2012; Available online: https://hdl.handle.net/2144/31899 (accessed on 2 March 2023).

- Forster, J.; Schmidt, C.; Hoyoux, T.; Koller, O.; Zelle, U.; Piater, J.H.; Ney, H. RWTH-PHOENIX-weather: A large vocabulary sign language recognition and translation corpus. In Proceedings of the Eighth International Conference on Language Resources and Evaluation (LREC’12), Istanbul, Turkey, 23–25 May 2012; pp. 3785–3789. Available online: http://www-i6.informatik.rwth-aachen.de/publications/download/773/forster-lrec-2012.pdf (accessed on 31 May 2023).

- Cabeza, C.; Garcia-Miguel, J.M.; García-Mateo, C.; Luis, J.; Castro, A. CORILSE: A Spanish sign language repository for linguistic analysis. In Proceedings of the Tenth International Conference on Language Resources and Evaluation (LREC ’16), Portorož, Slovenia, 23–28 May 2016; pp. 1402–1407. Available online: https://aclanthology.org/L16-1223/ (accessed on 31 May 2023).

- Preda, M.; Preteux, F. Insights into low-level avatar animation and MPEG-4 standardization. Signal Process. Image Commun. 2002, 17, 717–741.

- Lombardi, S.; Simon, T.; Saragih, J.; Schwartz, G.; Lehrmann, A.; Sheikh, Y. Neural Volumes: Learning Dynamic Renderable Volumes from Images. ACM Trans. Graph. 2019, 38, 3020.

- Pranatio, G.; Kosala, R. A comparative study of skeletal and keyframe animations in a multiplayer online game. In Proceedings of the 2010 2nd International Conference on Advances in Computing, Control and Telecommunication Technologies, ACT 2010, Jakarta, Indonesia, 2–3 December 2010; pp. 143–145.

- Gibet, S.; Lefebvre-Albaret, F.; Hamon, L.; Brun, R.; Turki, A. Interactive editing in French Sign Language dedicated to virtual signers: Requirements and challenges. Univers. Access Inf. Soc. 2016, 15, 525–539.

- Wong, J.; Holden, E.; Lowe, N.; Owens, R.A. Real-Time Facial Expressions in the Auslan Tuition System. Comput. Graph. Imaging 2003, 7–12. Available online: https://citeseerx.ist.psu.edu/document?repid=rep1&type=pdf&doi=da38d324052b63a8de2266fef7655bd545a82f56 (accessed on 30 May 2023).

- Gonçalves, D.A.; Todt, E.; Cláudio, D.P. Landmark-based facial expression parametrization for sign languages avatar animation. In Proceedings of the XVI Brazilian Symposium on Human Factors in Computing Systems (IHC 2017), Joinville, Brazil, 23–27 October 2017; Association for Computing Machinery: New York, NY, USA, 2017; pp. 1–6.

- Papastratis, I.; Chatzikonstantinou, C.; Konstantinidis, D.; Dimitropoulos, K.; Daras, P. Artificial Intelligence Technologies for Sign Language. Sensors 2021, 21, 5843.

- Wolfe, R.; Braffort, A.; Efthimiou, E.; Fotinea, E.; Hanke, T.; Shterionov, D. Special issue on sign language translation and avatar technology. Univers. Access Inf. Soc. 2023, 1, 1–3.

- Kipp, M.; Nguyen, Q.; Heloir, A.; Matthes, S. Assessing the deaf user perspective on sign language avatars. In Proceedings of the 13th International ACM SIGACCESS Conference on Computers and Accessibility (ASSETS ’11), Dundee, UK, 24–26 October 2011; ACM: New York, NY, USA, 2011; pp. 107–114.

- Moher, D.; Liberati, A.; Tetzlaff, J.; Altman, D.G. Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. BMJ 2009, 339, 332–336.

- Zotero | Your Personal Research Assistant. Available online: https://www.zotero.org/ (accessed on 30 May 2023).

- Kitchenham, B.; Charters, S. Guidelines for performing Systematic Literature Reviews in Software Engineering. 2007. Available online: https://www.elsevier.com/__data/promis_misc/525444systematicreviewsguide.pdf (accessed on 19 July 2023).

- Pezeshkpour, F.; Marshall, I.; Elliott, R.; Bangham, J.A. Development of a legible deaf-signing virtual human. In Proceedings of the International Conference on Multimedia Computing and Systems, Florence, Italy, 7–11 June 1999; pp. 333–338.

- Losson, O.; Cantegrit, B. Generation of signed sentences by an avatar from their textual description. In Proceedings of the IEEE International Conference on Systems, Man and Cybernetics, Yasmine Hammamet, Tunisia, 6–9 October 2002; pp. 426–431.

- Cox, S.; Lincoln, M.; Tryggvason, J.; Nakisa, M.; Wells, M.; Tutt, M.; Abbott, S. Tessa, a system to aid communication with deaf people. In Proceedings of the Fifth International ACM Conference on Assistive Technologies, Edinburgh, UK, 8–10 July 2002; p. 205.

- Hou, J.; Aoki, Y. A Real-Time Interactive Nonverbal Communication System through Semantic Feature Extraction as an Interlingua. IEEE Trans. Syst. Man Cybern. Part A Syst. Hum. 2004, 34, 148–155.

- Papadogiorgaki, M.; Grammalidis, N.; Makris, L. Sign synthesis from SignWriting notation using MPEG-4, H-Anim, and inverse kinematics techniques. Int. J. Disabil. Hum. Dev. 2005, 4, 191–204.

- Adamo-Villani, N.; Carpenter, E.; Arns, L. An immersive virtual environment for learning sign language mathematics. In Proceedings of the ACM SIGGRAPH 2006 Educators Program, SIGGRAPH ’06, Boston, MA, USA, 30 July–3 August 2006; ACM: New York, NY, USA, 2006; p. 20.

- San-Segundo, R.; Barra, R.; D’haro, L.F.; Montero, J.M.; Córdoba, R.; Ferreiros, J. A Spanish speech to sign language translation system for assisting deaf-mute people. In Proceedings of the Ninth International Conference on Spoken Language Processing, Jeju, Republic of Korea, 17–21 September 2006; Available online: http://lorien.die.upm.es/~lfdharo/Papers/Spanish2SignLanguage_Interspeech2006.pdf (accessed on 18 June 2023).

- Van Zijl, L.; Fourie, J. The Development of a Generic Signing Avatar. In Proceedings of the IASTED International Conference on Graphics and Visualization in Engineering (GVE ’07), Clearwater, FL, USA, 3–5 January 2007; ACTA Press: Calgary, AB, Canada, 2007; pp. 95–100.

- Efthimiou, E.; Fotinea, S.-E.; Sapountzaki, G. Feature-based natural language processing for GSL synthesis. Sign Lang. Linguist. 2007, 10, 3–23.

- Fotinea, S.E.; Efthimiou, E.; Caridakis, G.; Karpouzis, K. A knowledge-based sign synthesis architecture. Univers. Access Inf. Soc. 2008, 6, 405–418.

- San-Segundo, R.; Barra, R.; Córdoba, R.; D’Haro, L.F.; Fernández, F.; Ferreiros, J.; Lucas, J.M.; Macías-Guarasa, J.; Montero, J.M.; Pardo, J.M. Speech to sign language translation system for Spanish. Speech Commun. 2008, 50, 1009–1020.

- San-Segundo, R.; Montero, J.M.; Macías-Guarasa, J.; Córdoba, R.; Ferreiros, J.; Pardo, J.M. Proposing a speech to gesture translation architecture for Spanish deaf people. J. Vis. Lang. Comput. 2008, 19, 523–538.

- Baldassarri, S.; Cerezo, E.; Royo-Santas, F. Automatic translation system to Spanish sign language with a virtual interpreter. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Berlin/Heidelberg, Germany, 2009; Volume 5726, pp. 196–199.

- Anuja, K.; Suryapriya, S.; Idicula, S.M. Design and development of a frame based MT system for English-to-ISL. In Proceedings of the 2009 World Congress on Nature and Biologically Inspired Computing (NABIC 2009), Coimbatore, India, 9–11 December 2009; pp. 1382–1387.

- Delorme, M.; Filhol, M.; Braffort, A. Animation generation process for sign language synthesis. In Proceedings of the 2nd International Conferences on Advances in Computer-Human Interactions (ACHI 2009), Cancun, Mexico, 1–7 February 2009; pp. 386–390.

- San-Segundo, R.; López, V.; Martín, R.; Lufti, S.; Ferreiros, J.; Córdoba, R.; Pardo, J.M. Advanced Speech communication system for deaf people. In Proceedings of the Eleventh Annual Conference of the International Speech Communication Association, Chiba, Japan, 26–30 September 2010.

- Lombardo, V.; Battaglino, C.; Damiano, R.; Nunnari, F. An avatar-based interface for the Italian sign language. In Proceedings of the International Conference on Complex, Intelligent and Software Intensive Systems (CISIS 2011), Seoul, Republic of Korea, 30 June–2 July 2011; pp. 589–594.

- Gibet, S.; Courty, N.; Duarte, K.; Le Naour, T. The SignCom system for data-driven animation of interactive virtual signers. ACM Trans. Interact. Intell. Syst. 2011, 1, 1–23.

- López-Colino, F.; Colás, J. Hybrid paradigm for Spanish Sign Language synthesis. Univers. Access Inf. Soc. 2012, 11, 151–168.

- De Araújo, T.M.U.; Ferreira, F.L.S.; dos Santos Silva, D.A.N.; Lemos, F.H.; Neto, G.P.; Omaia, D.; de Souza Filho, G.L.; Tavares, T.A. Automatic generation of Brazilian sign language windows for digital TV systems. J. Braz. Comput. Soc. 2013, 19, 107–125.

- Vera, L.; Coma, I.; Campos, J.; Martínez, B.; Fernández, M. Virtual Avatars Signing in Real Time for Deaf Students. In Proceedings of the International Conference on Computer Graphics Theory and Applications and International Conference on Information Visualization Theory and Applications (GRAPP-2013), Barcelona, Spain, 21–24 February 2013; pp. 261–266.

- Bouzid, Y.; El Ghoul, O.; Jemni, M. Synthesizing facial expressions for signing avatars using MPEG4 feature points. In Proceedings of the 2013 4th International Conference on Information and Communication Technology and Accessibility (ICTA 2013), Hammamet, Tunisia, 24–26 October 2013.

- Morrissey, S.; Way, A. Manual labour: Tackling machine translation for sign languages. Mach. Transl. 2013, 27, 25–64.

- Brega, J.R.F.; Rodello, I.A.; Dias, D.R.C.; Martins, V.F.; De Paiva Guimarães, M. A virtual reality environment to support chat rooms for hearing impaired and to teach Brazilian Sign Language (LIBRAS). In Proceedings of the IEEE/ACS International Conference on Computer Systems and Applications (AICCSA), Doha, Qatar, 10–13 November 2014; Volume 2014, pp. 433–440.

- Del Puy Carretero, M.; Urteaga, M.; Ardanza, A.; Eizagirre, M.; García, S.; Oyarzun, D. Providing Accessibility to Hearing-disabled by a Basque to Sign Language Translation System. In Proceedings of the International Conference on Agents and Artificial Intelligence, Angers, France, 6–8 March 2014; pp. 256–263.

- Almeida, I.; Coheur, L.; Candeias, S. Coupling natural language processing and animation synthesis in Portuguese sign language translation. In Proceedings of the Fourth Workshop on Vision and Language, Lisbon, Portugal, 18 September 2015; pp. 94–103.

- Ebling, S.; Wolfe, R.; Schnepp, J.; Baowidan, S.; McDonald, J.; Moncrief, R.; Sidler-Miserez, S.; Tissi, K. Synthesizing the finger alphabet of Swiss German Sign Language and evaluating the comprehensibility of the resulting animations. In Proceedings of the SLPAT 2015: 6th Workshop on Speech and Language Processing for Assistive Technologies, Dresden, Germany, 11 September 2015; pp. 10–16.

- Ebling, S.; Glauert, J. Building a Swiss German Sign Language avatar with JASigning and evaluating it among the Deaf community. Univers. Access Inf. Soc. 2016, 15, 577–587.

- Efthimiou, E.; Fotinea, S.E.; Dimou, A.L.; Goulas, T.; Kouremenos, D. From grammar-based MT to post-processed SL representations. Univers. Access Inf. Soc. 2015, 15, 499–511.

- Heloir, A.; Nunnari, F. Toward an intuitive sign language animation authoring system for the deaf. Univers. Access Inf. Soc. 2016, 15, 513–523.

- Ebling, S.; Johnson, S.; Wolfe, R.; Moncrief, R.; McDonald, J.; Baowidan, S.; Haug, T.; Sidler-Miserez, S.; Tissi, K. Evaluation of animated Swiss German sign language fingerspelling sequences and signs. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Berlin/Heidelberg, Germany, 2017; Volume 10278, pp. 3–13.

- Punchimudiyanse, M.; Meegama, R.G.N. Animation of fingerspelled words and number signs of the Sinhala Sign language. ACM Trans. Asian Low-Resour. Lang. Inf. Process. 2017, 16, 2743.

- De Martino, J.M.; Silva, I.R.; Bolognini, C.Z.; Costa, P.D.P.; Kumada, K.M.O.; Coradine, L.C.; da Silva Brito, P.H.; Amaral, W.M.D.; Benetti, B.; Poeta, E.T.; et al. Signing avatars: Making education more inclusive. Univers. Access Inf. Soc. 2017, 16, 793–808.

- Quandt, L. Teaching ASL Signs using Signing Avatars and Immersive Learning in Virtual Reality. In Proceedings of the ASSETS 2020—22nd International ACM SIGACCESS Conference on Computers and Accessibility, Virtual Event, Greece, 26–28 October 2020.

- Sugandhi; Kumar, P.; Kaur, S. Sign Language Generation System Based on Indian Sign Language Grammar. ACM Trans. Asian Low-Resour. Lang. Inf. Process. 2020, 19, 1–26.

- Das Chakladar, D.; Kumar, P.; Mandal, S.; Roy, P.P.; Iwamura, M.; Kim, B.G. 3D Avatar Approach for Continuous Sign Movement Using Speech/Text. Appl. Sci. 2021, 11, 3439.

- Nguyen, L.T.; Schicktanz, F.; Stankowski, A.; Avramidis, E. Automatic generation of a 3D sign language avatar on AR glasses given 2D videos of human signers. In Proceedings of the 1st International Workshop on Automatic Translation for Signed and Spoken Languages (AT4SSL), Virtual, 20 August 2021; Association for Machine Translation in the Americas: Cambridge, MA, USA, 2021; pp. 71–81. Available online: https://aclanthology.org/2021.mtsummit-at4ssl.8 (accessed on 10 August 2023).

- Krishna, S. SignPose: Sign Language Animation Through 3D Pose Lifting. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV) Workshops, Montreal, QC, Canada, 11–17 October 2021; pp. 2640–2649.

- Dhanjal, A.S.; Singh, W. An automatic machine translation system for multi-lingual speech to Indian sign language. Multimed. Tools Appl. 2022, 81, 4283–4321.

- Yang, F.C.; Mousas, C.; Adamo, N. Holographic sign language avatar interpreter: A user interaction study in a mixed reality classroom. Comput. Animat. Virtual Worlds 2022, 33, e2082.

- Partarakis, N.; Zabulis, X.; Foukarakis, M.; Moutsaki, M.; Zidianakis, E.; Patakos, A.; Adami, I.; Kaplanidi, D.; Ringas, C.; Tasiopoulou, E. Supporting Sign Language Narrations in the Museum. Heritage 2022, 5, 1.

- Van Gemert, B.; Cokart, R.; Esselink, L.; De Meulder, M.; Sijm, N.; Roelofsen, F. First Steps Towards a Signing Avatar for Railway Travel Announcements in the Netherlands. In Proceedings of the 7th International Workshop on Sign Language Translation and Avatar Technology: The Junction of the Visual and the Textual: Challenges and Perspectives, Marseille, France, 24 June 2022; European Language Resources Association: Marseille, France, 2022; pp. 109–116. Available online: https://aclanthology.org/2022.sltat-1.17 (accessed on 3 September 2023).