Autonomous Critical Help by a Robotic Assistant in the Field of Cultural Heritage: A New Challenge for Evolving Human-Robot Interaction

Abstract

1. Introduction

1.1. Related Work

1.2. Contribution

- Investigate the artistic interests of the user and model the user with respect to those interests by attributing to them specific mental states (beliefs, goals, plans) and creating a complex user model;

- Model the beliefs, goals, and plans of the museum curators;

- Select the most suitable museum tour as a result of a negotiation internal to the agent, between the represented mental states of the user and the represented mental states of the exhibition curators;

- Investigate different dimensions of the user’s satisfaction with respect to the tour proposed by the intelligent agent.

2. Background

- Sub help: agent Y satisfies a sub-part of the delegated world-state (so satisfying just a sub-goal of agent X),

- Literal help: agent Y adopts exactly what has been delegated by agent X,

- Over help: agent Y goes beyond what has been delegated by agent X without changing X's plan (but including it within a hierarchically superior plan),

- Critical over help: agent Y realizes an over help and, in addition, modifies the original plan/action (included in the new meta-plan),

- Critical help: agent Y satisfies the relevant results of the requested plan/action (the goal), but modifies that plan/action,

- Critical-sub help: agent Y realizes a sub help and, in addition, modifies the (sub) plan/action.
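The six kinds of help listed above can be read as the combination of two dimensions: how much of the delegated goal is covered (a sub-part, exactly the goal, or more than the goal) and whether the delegated plan/action is modified. The following is a minimal sketch of that reading; the names `Coverage`, `HelpType`, and `classify_help` are illustrative assumptions and do not come from the paper.

```python
from enum import Enum


class Coverage(Enum):
    """How much of the delegated goal agent Y satisfies (assumed encoding)."""
    SUB = "sub"      # only a sub-goal of agent X
    EXACT = "exact"  # exactly the delegated goal
    OVER = "over"    # the delegated goal plus something beyond it


class HelpType(Enum):
    SUB_HELP = "sub help"
    LITERAL_HELP = "literal help"
    OVER_HELP = "over help"
    CRITICAL_SUB_HELP = "critical-sub help"
    CRITICAL_HELP = "critical help"
    CRITICAL_OVER_HELP = "critical over help"


# Lookup table: (goal coverage, plan modified?) -> kind of help.
_HELP_TABLE = {
    (Coverage.SUB, False): HelpType.SUB_HELP,
    (Coverage.EXACT, False): HelpType.LITERAL_HELP,
    (Coverage.OVER, False): HelpType.OVER_HELP,
    (Coverage.SUB, True): HelpType.CRITICAL_SUB_HELP,
    (Coverage.EXACT, True): HelpType.CRITICAL_HELP,
    (Coverage.OVER, True): HelpType.CRITICAL_OVER_HELP,
}


def classify_help(coverage: Coverage, plan_modified: bool) -> HelpType:
    """Return the kind of help for a given coverage and plan modification."""
    return _HELP_TABLE[(coverage, plan_modified)]
```

For example, `classify_help(Coverage.EXACT, True)` yields `HelpType.CRITICAL_HELP`: the goal is achieved, but through a different plan than the one requested.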

3. An Overview of the Computational Cognitive Model

- The current state of the environment, excluding the agents involved in the scenario;

- The mental states of the user; that is, the beliefs, goals, and plans that the agent attributes to the user by means of its Theory of Mind (ToM) of that user;

- The mental states of other agents involved in the scenario. In this case, the agents are the museum curators; that is, those who designed, realized, and maintain the museum exhibition;

- General beliefs, which correspond to the agent’s knowledge.

- : the artistic period preferred by the user,

- : the artistic periods in which the user has no interest,

- : the level of accuracy with which the user intends to view the material proposed during the visit to the museum.
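The three user attributes listed above can be held in a small record. The sketch below is only an illustration: the field names (`favorite_period`, `periods_of_no_interest`, `accuracy`) are assumptions, and the three accuracy levels are taken from the Low/Medium/High values that appear in the result tables.

```python
from dataclasses import dataclass
from enum import Enum


class Accuracy(Enum):
    """Level of detail the user wants during the visit (assumed encoding)."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass(frozen=True)
class UserModel:
    """Mental states the agent attributes to the user (hypothetical fields)."""
    favorite_period: str
    periods_of_no_interest: frozenset = frozenset()
    accuracy: Accuracy = Accuracy.MEDIUM

    def __post_init__(self):
        # A period cannot be both the favorite and of no interest.
        if self.favorite_period in self.periods_of_no_interest:
            raise ValueError("favorite period is also marked as of no interest")

    def accepts(self, period: str) -> bool:
        """True if the user has not ruled out the given artistic period."""
        return period not in self.periods_of_no_interest
```

A profile such as `UserModel("Impressionism", frozenset({"Gothic"}), Accuracy.HIGH)` would then report `accepts("Surrealism")` as true and `accepts("Gothic")` as false.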

4. The Pilot Study

4.1. Experimental Design

- The relevance of an artistic period is defined on the basis of the originality of the artworks that compose it and the impact they had in the field of art history.

- The accuracy, on the other hand, specifies the level of detail in the description of each artwork present in a thematic room.

- Each thematic tour (artistic period) belongs to a category that collects different artistic periods; for example, the “Impressionism” tour belongs to the same category as the “Surrealism” and “Cubism” tours, which are in the more general class named “modern art”. The same holds for every artistic period.

4.2. The Heuristic for the Tour Selection

Algorithm 1. Artistic Period Selection Algorithm.

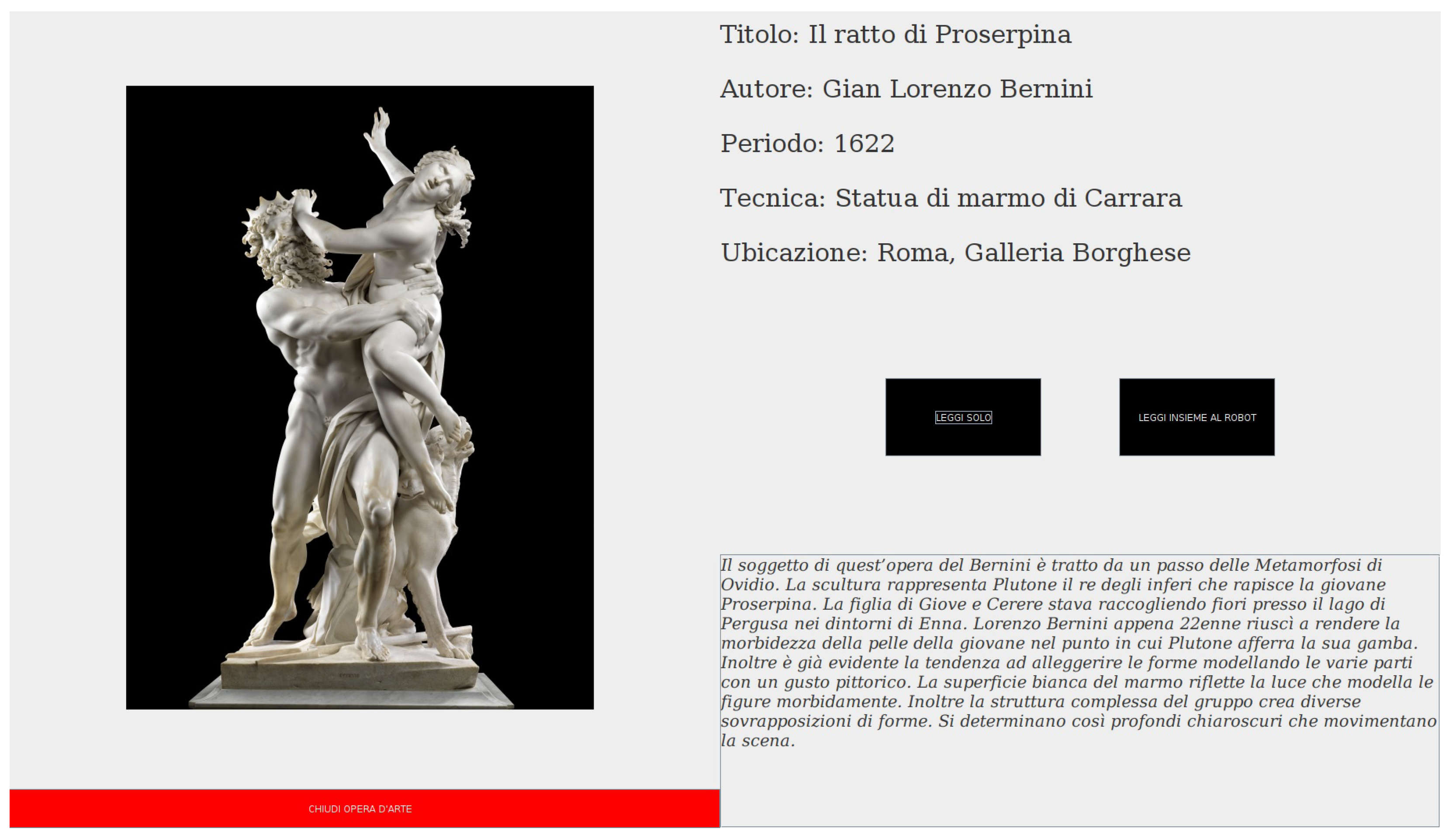
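Algorithm 1 is available here only as an image, so its exact steps cannot be reproduced in text. The sketch below is therefore not the authors' algorithm; it is one plausible selection rule built from the ingredients named in Section 4.1 (relevance, accuracy, and category membership). The `Tour` fields, the numeric relevance scores, the ranking order, and the category of the "Gothic" tour are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tour:
    period: str
    category: str
    relevance: float  # hypothetical curator-assigned score, higher is better
    accuracy: int     # 1 = low, 2 = medium, 3 = high


def select_tour(favorite, no_interest, wanted_accuracy, tours):
    """Pick a tour in the same category as the user's favorite period.

    Assumed ranking: highest relevance first, then the smallest gap to the
    accuracy the user asked for, then the user's own choice on a full tie.
    """
    by_period = {t.period: t for t in tours}
    preferred = by_period[favorite]
    candidates = [
        t for t in tours
        if t.category == preferred.category and t.period not in no_interest
    ]
    if not candidates:
        return preferred  # nothing admissible: fall back to literal help
    return min(
        candidates,
        key=lambda t: (
            -t.relevance,
            abs(t.accuracy - wanted_accuracy),
            t.period != favorite,
        ),
    )


# Illustrative catalogue; the scores are invented for the example.
TOURS = [
    Tour("Impressionism", "modern art", 0.6, 3),
    Tour("Surrealism", "modern art", 0.8, 2),
    Tour("Cubism", "modern art", 0.8, 3),
    Tour("Gothic", "medieval art", 0.9, 2),
]
```

With this catalogue, a user who prefers Impressionism, rules out Cubism, and asks for high accuracy would be sent to the Surrealism tour (critical help), whereas a user who prefers Gothic would simply receive the Gothic tour (literal help).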

4.3. Experimental Procedure

- Starting interaction: the robot introduces itself to the user, describing its role and the virtual museum it manages.

- User artistic profiling: the robot asks the user a series of questions that investigate their artistic interests in terms of their favorite artistic periods and the artistic periods of no interest to them. In this phase, the interaction is supported by a GUI through which the user can express their artistic preferences, and the robot can collect useful data to profile the user. In addition to defining the artistic periods of interest and non-interest of the user, the robot asks the user with what degree of accuracy they intend to visit the section.

- Tour visit: once the user profile has been established, the robot exploits the heuristic defined in Section 4.2 to select the tour on behalf of the user. Once the selection has been made, the robot activates the corresponding tour in the virtual museum and leaves the control to the user, who can visit the room, selecting the artworks inside.

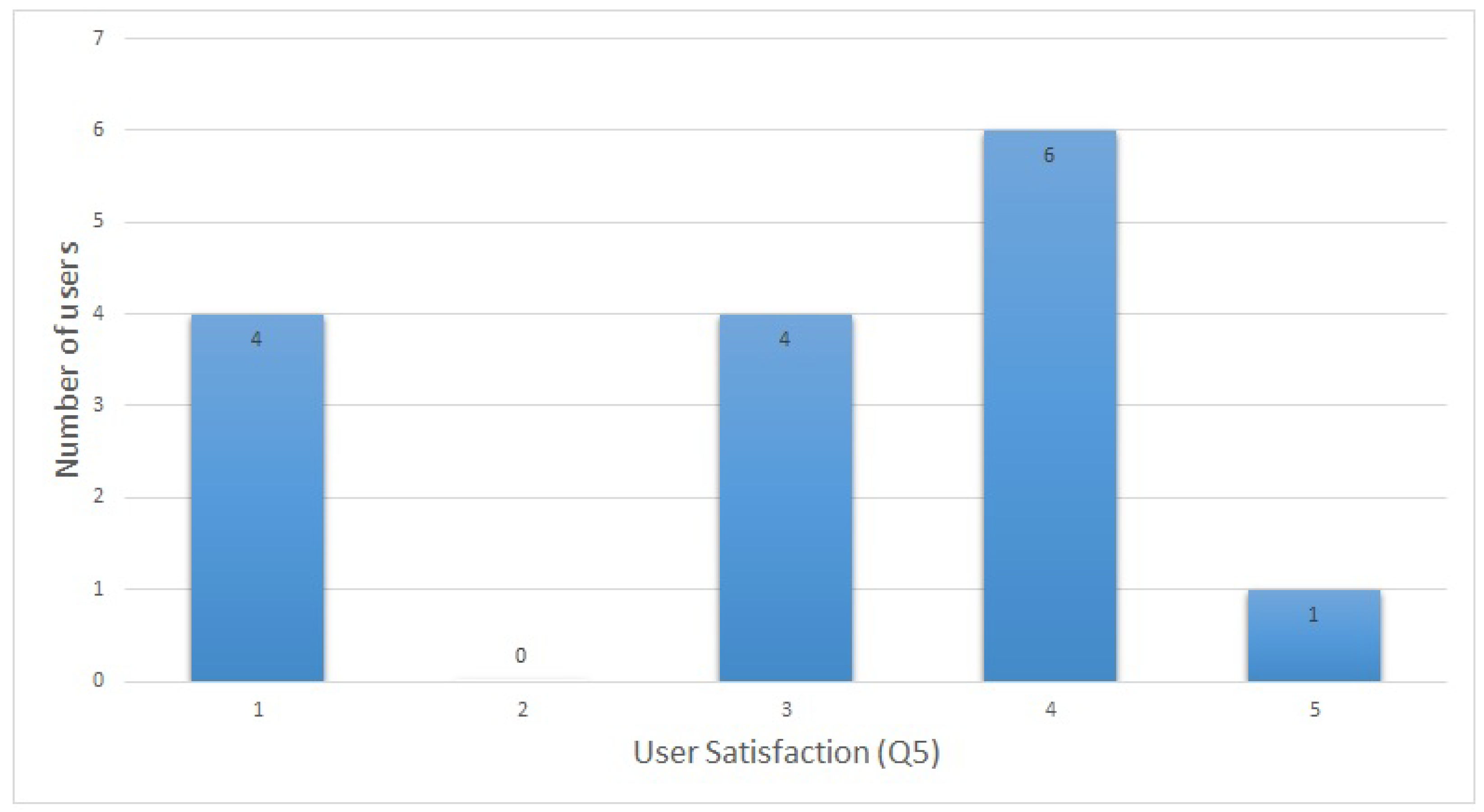

- End museum tour: the user can leave the recommended tour and, therefore, the museum. Once this happens, the robot returns to interact with the user, asking them questions. These questions, which belong to a short survey, are used to investigate how satisfied the user is with the visit. We have decided to adopt a five-level scale to encode the user responses, where value 1 is the worst case, and 5 is the best one.

- Q1: How satisfied were you with the duration of the visit?

- Q2: How satisfied were you with the quality of the artworks?

- Q3: How satisfied were you with the number of the artworks?

- Q4: How surprised were you with the artistic period recommended by the robot compared to the artistic period initially chosen by you?

- Q5: How satisfied are you with the robot’s recommendation given the artistic period initially chosen by you?

5. Results

- RQ1: How risky/acceptable is critical help compared to literal help? Does the proposed heuristic make critical help more acceptable?

- RQ2: Given the risks that critical help entails, in what situations, and to what extent, can critical help be useful?

Experiment Limitations

6. Conclusions

7. Future Works

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| User | Preferred Artistic Period | User Accuracy | Recommended Tour | Tour Accuracy | Q1 | Q2 | Q3 | Q4 (Surprise) | Q5 (Satisfaction) |
|---|---|---|---|---|---|---|---|---|---|
| 1 | Baroque | Medium | Caravaggio | High | 4 | 5 | 4 | 5 | 5 |
| 3 | Baroque | High | Caravaggio | High | 4 | 5 | 5 | 4 | 4 |
| 4 | Impressionism | Medium | Romanticism | Medium | 5 | 4 | 4 | 5 | 3 |
| 4 | Cubism | High | Expressionism | Medium | 3 | 2 | 3 | 5 | 1 |
| 5 | 700 Sculpture | High | 700 Painting | High | 5 | 5 | 4 | 3 | 4 |
| 8 | Cubism | High | Neoclassicism | High | 5 | 4 | 4 | 4 | 3 |
| 10 | Impressionism | High | Expressionism | Low | 4 | 1 | 3 | 5 | 1 |
| 12 | Impressionism | High | Surrealism | Medium | 3 | 3 | 4 | 3 | 4 |
| 15 | Art Nouveau | Medium | Romanticism | Medium | 5 | 5 | 5 | 4 | 3 |
| 17 | Art Nouveau | Medium | Neoclassicism | High | 5 | 5 | 4 | 3 | 4 |
| 20 | Futurism | Medium | Romanticism | Low | 4 | 2 | 4 | 5 | 1 |
| 21 | Cubism | Medium | Surrealism | Medium | 5 | 4 | 3 | 3 | 3 |
| 22 | Baroque | High | 400 Painting | High | 2 | 5 | 3 | 4 | 1 |
| 23 | Romanticism | Medium | Symbolism | High | 1 | 5 | 4 | 3 | 4 |
| 24 | Cubism | Medium | Surrealism | Medium | 5 | 3 | 5 | 5 | 4 |

| User | Preferred Artistic Period | User Accuracy | Recommended Tour | Tour Accuracy | Q1 | Q2 | Q3 | Q4 (Surprise) | Q5 (Satisfaction) |
|---|---|---|---|---|---|---|---|---|---|
| 2 | 500 Italian Painting | High | 500 Italian Painting | High | 4 | 5 | 4 | 1 | 5 |
| 5 | 500 Italian Painting | High | 500 Italian Painting | High | 5 | 5 | 5 | 2 | 5 |
| 6 | Greek Art | Medium | Greek Art | Medium | 5 | 5 | 5 | 1 | 5 |
| 7 | Gothic | Medium | Gothic | Medium | 5 | 5 | 5 | 1 | 4 |
| 9 | 500 Italian Painting | Medium | 500 Italian Painting | High | 5 | 4 | 4 | 2 | 3 |
| 11 | Caravaggio | Low | Caravaggio | High | 5 | 4 | 4 | 1 | 4 |
| 13 | Gothic | Low | Gothic | Low | 1 | 1 | 1 | 1 | 2 |
| 14 | Contemporary Art | Medium | Contemporary Art | Medium | 5 | 3 | 3 | 1 | 5 |
| 16 | 700 Painting | High | 700 Painting | Medium | 4 | 4 | 5 | 2 | 5 |
| 18 | 500 Italian Painting | High | 500 Italian Painting | High | 5 | 3 | 4 | 1 | 5 |
| 19 | Contemporary Art | High | Contemporary Art | Low | 5 | 4 | 4 | 1 | 5 |

| Group | Literal Help | Critical Help |
|---|---|---|
| Mean | 4.36 | 3.00 |
| SD | 1.03 | 1.36 |
| N | 11 | 15 |
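The summary values above appear to describe the Q5 (Satisfaction) column of the two preceding tables: the 15-row table, in which the recommended tour differs from the preferred period, matches the critical help group, and the 11-row table matches the literal help group. The following short check recomputes the mean and the sample standard deviation from those columns; the variable and function names are illustrative.

```python
from statistics import mean, stdev

# Q5 (Satisfaction) scores copied row by row from the two result tables.
q5_critical = [5, 4, 3, 1, 4, 3, 1, 4, 3, 4, 1, 3, 1, 4, 4]
q5_literal = [5, 5, 5, 4, 3, 4, 2, 5, 5, 5, 5]


def summarize(scores):
    """Mean, sample standard deviation (n - 1 denominator), and group size."""
    return {
        "mean": round(mean(scores), 2),
        "sd": round(stdev(scores), 2),
        "n": len(scores),
    }


literal_summary = summarize(q5_literal)
critical_summary = summarize(q5_critical)
print("Literal help: ", literal_summary)
print("Critical help:", critical_summary)
```

The reported SD values are reproduced only with the sample formula (dividing by n - 1), which suggests that this is the estimator behind the table.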

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Cantucci, F.; Falcone, R. Autonomous Critical Help by a Robotic Assistant in the Field of Cultural Heritage: A New Challenge for Evolving Human-Robot Interaction. Multimodal Technol. Interact. 2022, 6, 69. https://doi.org/10.3390/mti6080069