1. Introduction

The field of research dedicated to Accessible Digital Musical Instruments (ADMIs), i.e., accessible musical control interfaces used in electronic music, inclusive music practice, and music therapy settings [1], is growing. Lately, there has been rising interest in work focused on the accessibility of musical expression. For example, the theme of the International Conference on New Interfaces for Musical Expression in 2020 was “Accessibility of Musical Expression”, and selected papers from the conference were published in a special issue of the Computer Music Journal (see [2]). Recent advancements in the music technology field have paved the way for new music interfaces that can be specifically designed, customized, and adapted to each musician’s needs. Despite such progress, many people are still largely excluded from active participation in music-making. For example, musicians with disabilities are still particularly under-represented in the global music community [3]. The systematic review of ADMIs published in 2019 [1] also revealed that most of the reviewed instruments were designed for people with physical disabilities (39.8%), whereas less work had been dedicated to instruments designed for people with complex needs (6.0%), persons who are non-vocal (6.0%), persons who are hard of hearing (6.0%), or persons who have a visual impairment (3.6%). Moreover, the majority of the ADMIs in [1] were designed to be used by a single person, and few were large-scale instruments encouraging collaborative play.

In this paper, we present a 1.5-year-long study exploring the role of haptic feedback in musical interactions in sound installations for children with Profound and Multiple Learning Disabilities (PMLD). PMLD is commonly used to describe a person with severe learning disabilities who most likely has other complex disabilities and health conditions, although there is no single universally agreed definition of the term [4]. Research reviewing the different PMLD definitions together with those who provide services, support and care for people with PMLD has highlighted that no definition can fully articulate the complexities associated with the term [4]. Other work has challenged the very idea of PMLD, emphasizing the role of ambiguity in articulating the life-worlds of these children [5].

The work described in this paper is part of a larger research project focused on advancing musical frontiers for people with disabilities through the design and customization of music technologies. The aim of this project is to improve access to music-making through the development of novel music interfaces and to explore how interface design and multimodal feedback can contribute to widened participation. The goal of the current study was to promote inclusion and diversity in music-making through the customization of a large-scale Digital Musical Instrument (DMI), thus allowing for rich multisensory experiences and multiple modes of interaction for users with various abilities and needs (as suggested in [

6]). We explored how music can be presented not only in an audible form but also as visual and haptic sensations. In particular, we investigated how Participatory Design (PD) methods can aid the design and customization of musical haptics in the Sound Forest installation. Participatory design is a methodology that involves future users of a design as co-designers in a design process [

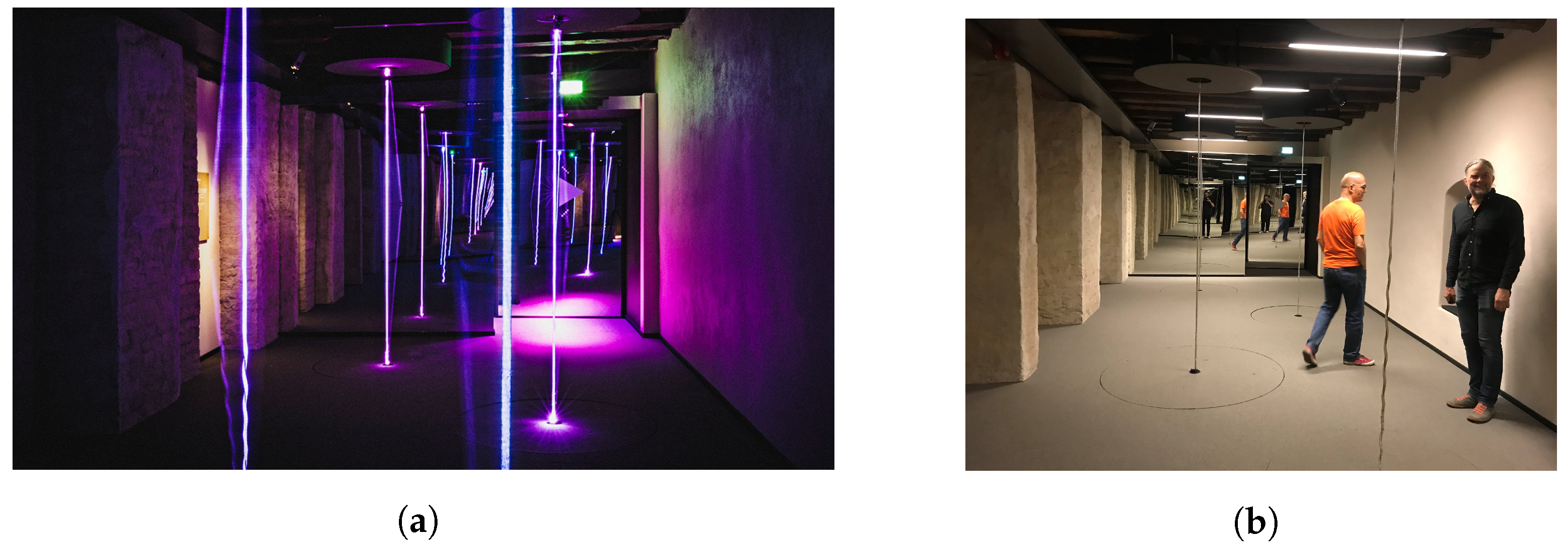

7]. Sound Forest (see

Figure 1a and

Section 2.1) is a long-term project between researchers in the Sound and Music Computing (SMC) group at KTH Royal Institute of Technology and the Swedish Museum of Performing Arts (see [

8]). It is a multisensory installation that enables visitors to create sounds by interacting with light-emitting strings, for example, by hitting or plucking a string using the hand. The visitors can feel the music through the body, while receiving visual feedback. In this paper, we describe a collaboration with four students enrolled at a special school in Sweden and how we designed a customized multisensory music experience in Sound Forest with and for this user group.

Previous research on the role of music for children with PMLD has emphasized the importance of music education [9] and musical play [10]. In [11], the authors suggest that there is a lack of conceptual clarity as to what constitutes music education versus music therapy for this group. They propose a new model of music education in which activities are undertaken primarily for their intrinsic musical value rather than to promote learning and development. A study exploring the role of music in the home lives of young people with PMLD through parental perspectives was described in [12]. Findings outlined the positive role of music in contexts where it was used for enjoyment, to support mood regulation, and to add structure to the children’s lives. The study also revealed that listening to music was more common than making music in the home environment.

Musical Haptics is an emerging interdisciplinary field that investigates touch and proprioception in musical scenarios from different perspectives [13]. Musical experiences involve both perceiving airborne acoustic waves and vibratory cues conveyed through air and solid media [13]. Traditional acoustic instruments intrinsically provide vibratory feedback during sound production. Playing a musical instrument requires a complex set of skills that depend on the brain’s ability to integrate information from multiple senses [14]. The haptic sense has been shown to play an important role in musical interactions with acoustic instruments such as the piano [15,16,17], violin [18,19], and percussion [20]. Research has also shown that haptic technology can assist musicians in making gestures [21]. Studies have suggested that amplifying certain vibrations in a concert venue or music reproduction system can improve the musical experience [22], that vibrations play a significant role in the perception of music [23], and that whole-body vibrations (the type of haptic feedback used in the Sound Forest installation; see Section 2.1) can have a significant effect on loudness perception [24]. As pointed out in [13], musical haptics could potentially facilitate access to music for persons affected by somatosensory, visual, and hearing impairments. For example, haptic (or visual) feedback has been shown to enhance musical experiences for persons with hearing impairments [25]. The potential of vibroacoustic therapy for persons with disabilities has also been stressed [26]. A review of haptic feedback in sensory substitution systems that allow persons with hearing impairments to experience music through the sense of touch, so-called Haptic Music Players (HMPs), was recently published in [27]. An HMP is a device that processes an audio signal to extract musical information and translates this information into vibratory signals. An example is the haptic metronome, which presents musical beats as vibrations on the skin (see, e.g., [28]). Despite the potential benefits of musical haptics and the importance of perceiving vibrations when interacting with a DMI (see, e.g., [29]), few ADMIs provide active haptic feedback. In [1], only 14.5% of the surveyed instruments provided active feedback through the sense of touch, and the full potential of haptics in ADMI design remains to be explored.
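As a concrete illustration of the haptic metronome idea, the following minimal Python sketch (not taken from [28] or from any specific HMP) converts a tempo into the onset times of short vibration pulses, with an accented pulse on the first beat of each bar. The function name `metronome_pulses`, the 60 ms default pulse length, and the four-beat bar grouping are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pulse:
    onset_s: float     # start time of the vibration pulse, in seconds
    duration_s: float  # how long the actuator is driven, in seconds
    accent: bool       # True on the first beat of each bar


def metronome_pulses(bpm, n_beats, beats_per_bar=4, pulse_s=0.06):
    """Return one vibration pulse per beat for a hypothetical haptic metronome.

    The beat interval is 60 / bpm seconds. The pulse must be shorter than
    that interval so that consecutive pulses do not overlap.
    """
    interval_s = 60.0 / bpm
    if pulse_s >= interval_s:
        raise ValueError("pulse_s must be shorter than the beat interval")
    return [
        Pulse(onset_s=i * interval_s, duration_s=pulse_s, accent=(i % beats_per_bar == 0))
        for i in range(n_beats)
    ]


if __name__ == "__main__":
    for pulse in metronome_pulses(bpm=120, n_beats=5):
        print(f"{pulse.onset_s:.2f} s  {'accent' if pulse.accent else 'beat'}")
```

At 120 BPM the beat interval is 60/120 = 0.5 s, so five beats start at 0.0, 0.5, 1.0, 1.5, and 2.0 s, with accents on the first and the fifth.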

Few studies have explored the role of haptic feedback for people with multiple disabilities (relevant examples include [30,31]), especially in the context of music. However, the potential of multisensory experiences to enrich the lives of people with profound and multiple disabilities has been stressed (see, e.g., [32]). Such experiences can be provided in so-called MultiSensory Environments or Multisensory Rooms (MSEs). MSEs are artificially engineered environments with multisensory equipment used to create a specific mood. These environments allow for activities and experiences of a sensory nature. Although it was not built for music therapy purposes, Sound Forest can be considered both a multisensory environment and an ADMI.

When it comes to the design of ADMIs, it is crucial that those who have lived experience of disability are actively involved as co-designers in the process of creating the instruments. Reflections on co-design processes in the context of ADMI development are presented, for example, in [

33,

34,

35,

36]. In the systematic review of assistive technology developed through participatory methods published in [

37], the authors emphasize that participatory development processes should enhance the voice of the participants, considering their ideas, desires, and needs. In order to fully incorporate users with disablity into user-centered design processes, existing co-design methods may need to be extended and adapted [

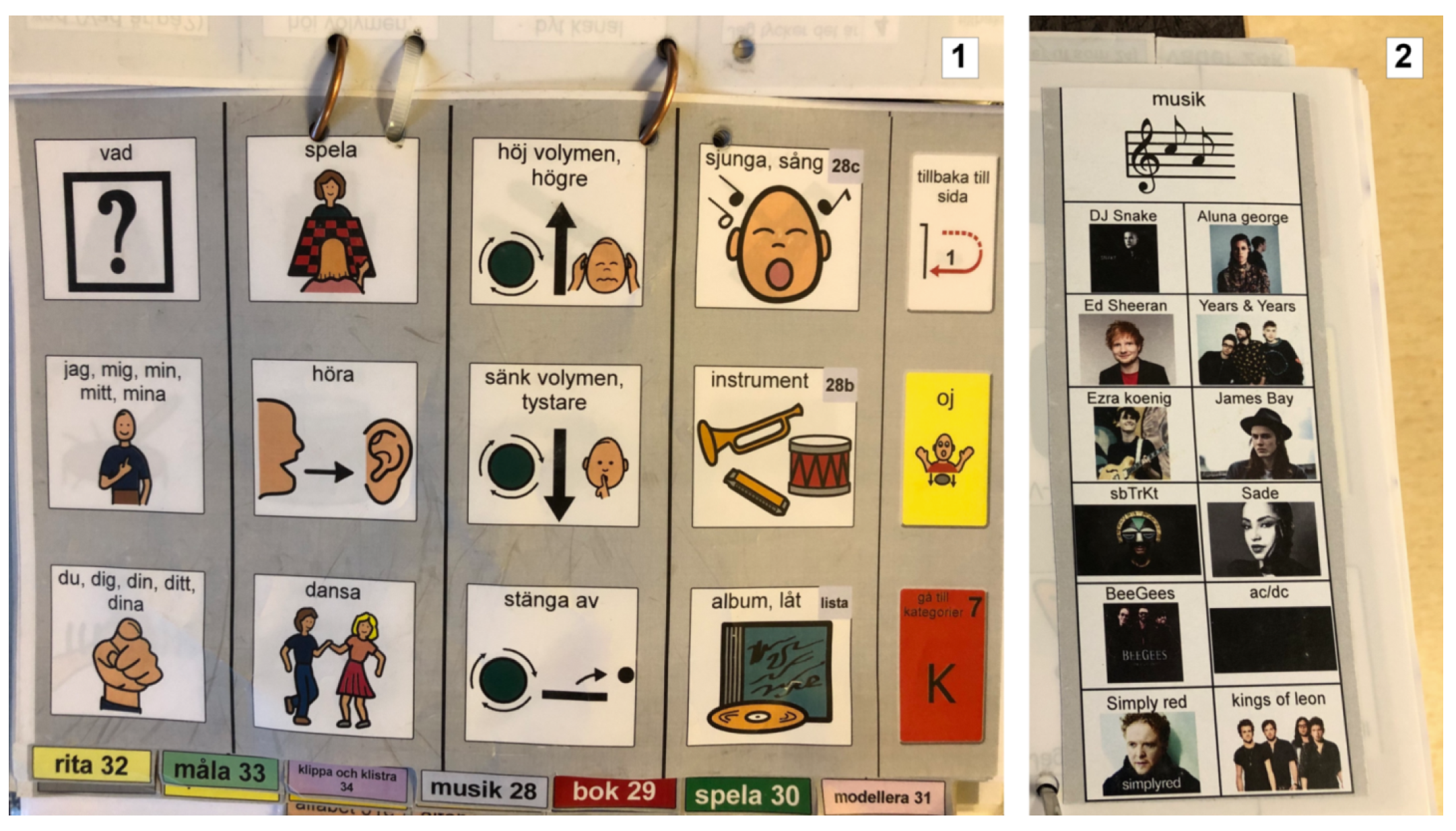

38]. Such adaptations are particularly important when working with children with PMLD, since the communication between designers and users can be affected. Co-design with children with PMLD should take into account that these children may express themselves through a number of different communication methods, depending on what is most efficient for them at the time. Examples include bodily gestures, nonverbal sounds, pointing, and facial expressions. Alternative methods for augmented communication, such as PODD (Pragmatic Organisation Dynamic Display) [

39,

40] and TaSSeL (Tactile Signaling for Sensory Learners) [

41], can be used to support such co-design processes. Other approaches that could be relevant in this context include methods for assessing the views of users through preference assessment [

42,

43] and methods to categorize behavioral states [

44,

45]. In the current work, a core focus has been on the tool PODD. PODD is a way of organizing word and symbol vocabulary in a communication book to support understanding (see

Figure 2). In our research, we have explored how PODD can be used in communication supporting the participatory design process. We use Participatory Design with Proxies (PDwP) [

46] to enable inclusion of input from different stakeholders, i.e., parents and teachers. This method allows for different proxies to provide valuable insights and feedback to augment direct input from the children. To the authors’ knowledge, little previous work has investigated how children with PMLD can act as informants in design processes focused on multisensory installations and large-scale ADMIs.

DMI evaluation is a persistently debated topic in the field of research dedicated to New Interfaces for Musical Expression (NIME) (see, e.g., [47]). Challenges in the evaluation of bespoke assistive music technology have been discussed in [48,49]. The latter work highlights the shortcomings of frameworks biased towards describing others, with researchers often viewing ADMIs from an external perspective. The authors acknowledge that music-making is a phenomenon that is challenging to understand and that researchers might struggle to describe such ecosystems in their entirety. The term ecosystem in this context refers to the idea that a musical activity exists within a system whose constituent building blocks are affordances, i.e., how something is directly perceived in terms of its potential for action [50], and the constraints existing between the musician and the socio-cultural environment. For a detailed discussion of ecological perspectives in ADMI design and evaluation, please refer to [48].

Nine properties to consider when designing and evaluating ADMIs were proposed in [6]: expressiveness, playability, longevity, customizability, pleasure, sonic quality, robustness, multimodality, and causality. A dimension space for the evaluation of ADMIs was introduced in [51], which listed key aspects related to target users and use contexts (cognitive impairment, sensory impairment, physical impairment, and use context) and design choices (physical channels, design novelty, adaptability, and simplification). However, such frameworks have not yet been extensively evaluated. In the context of MSEs, the review presented in [52] highlighted the need for randomized controlled trials to evaluate the short- and long-term effectiveness of multisensory therapy. Beyond discussions of effectiveness measures [53], relatively little has been published on how best to evaluate musical interactions taking place in MSEs, especially for children with PMLD. Relevant work in this context has primarily focused on observations and preference assessments (see, e.g., [54,55]). To summarize, there is a need for more rigorous research to assess and evaluate the impact of multisensory rooms on children with PMLD, as suggested in [56].

In this article, we report on a long-term study on the customization and evaluation of a multisensory music experience in the Sound Forest installation. This work was conducted through a participatory design process in which we involved students with PMLD at a special education school, with their parents and teachers acting as proxies. The aim of this work was to create a customized multisensory experience informed by the students’ needs, musical preferences, and abilities. An important aspect of this work was to explore tools and methods that could successfully enable a discussion about music and haptics with the students. For example, how can we talk about concepts such as the perception of touch using PODD? The final design of the multisensory experience was evaluated through an experiment in which music was presented in a haptic versus a nonhaptic condition. Findings highlighted the diversity of the interaction strategies employed, limitations in the accessibility of the current multisensory experience design, and the importance of using a variety of qualitative and quantitative methods to arrive at more informed conclusions when applying a design-with-proxies methodology. Our work contributes to the field of ADMI research by exploring participatory research methods for pre-verbal children with multifunctional physical and intellectual challenges and how such methods could be used to guide the design of musical haptics, a topic that to date has received little attention.

2. Materials and Methods

This paper describes a long-term collaboration with a student group, a teacher, and a group of teaching assistants at the school Dibber Rullen in Stockholm, Sweden [57]. Dibber Rullen is a special education school for students with intellectual challenges and multifunctional physical challenges. The pedagogy at the school follows a thematic structure in smaller groups. The teaching is based on themes, in this case, countries, which are explored for 6–8 week periods. All research described in this paper was carried out as activities within the school’s standard curriculum during music and craft lessons.

The methods used in the current work are grounded in the Social Model of Disability [58,59]. This model describes disability as a condition that arises from the organization of society, attitudes, and the design of the environment. In other words, a person with a disability is disabled by external factors, not by an impairment. As suggested in [60], the design of technology should ensure that users have control over, and are not merely passive recipients of, the developed technology. This perspective is summarized in the disability rights movement motto “Nothing about us without us” [61], which highlights that people with disabilities know what is best for them and their communities and should always be included through participatory design processes. In our research, we have tried to include the students as informants in every step of the design process, using a combination of different user-centered [62] and participatory [7] methods with proxies [46]. A key consideration has been to adapt our methodology to the school’s pedagogical practice and to make sure that all steps of the research are well adapted to the students’ needs and preferences. Each step of the research project was informed by conclusions drawn from the previous stage. All work was conducted in close dialogue with the teacher and teaching assistants at the school, who in turn communicated directly with the student group and their parents.

Most of the research described in this paper was carried out during the COVID-19 pandemic. This greatly affected the extent to which we could connect with the students; social-distancing measures were required to ensure the safety of the users, since they belong to a risk group. As a consequence, we adapted our methods to a distributed participatory design setting (see, e.g., [63,64,65]). Distributed participatory design is a term used to describe situations in which all or most design team members are physically, and perhaps also temporally, dispersed. The need to re-frame activities focused on inclusive music-making or design due to the absence of in-person contact with musicians with physical disabilities during a global pandemic was discussed in [66]. The authors proposed using the Three Pillars of Inclusive Design (accessibility, usability, and value) as a framework for analysis in such contexts. In our study, all of the prestudies were conducted online using video-conferencing tools or by asking the teacher responsible for the music classes to film the interactions taking place at the school, in the wild. Participatory design methods in the wild take place outside of a lab, in the settings where the developed artifacts are actually envisioned to be used [67]. This may introduce particular challenges (see, e.g., [34,67]). The experiment in Sound Forest was carried out once the restrictions had been lifted. This was the only physical encounter we had with the student group.

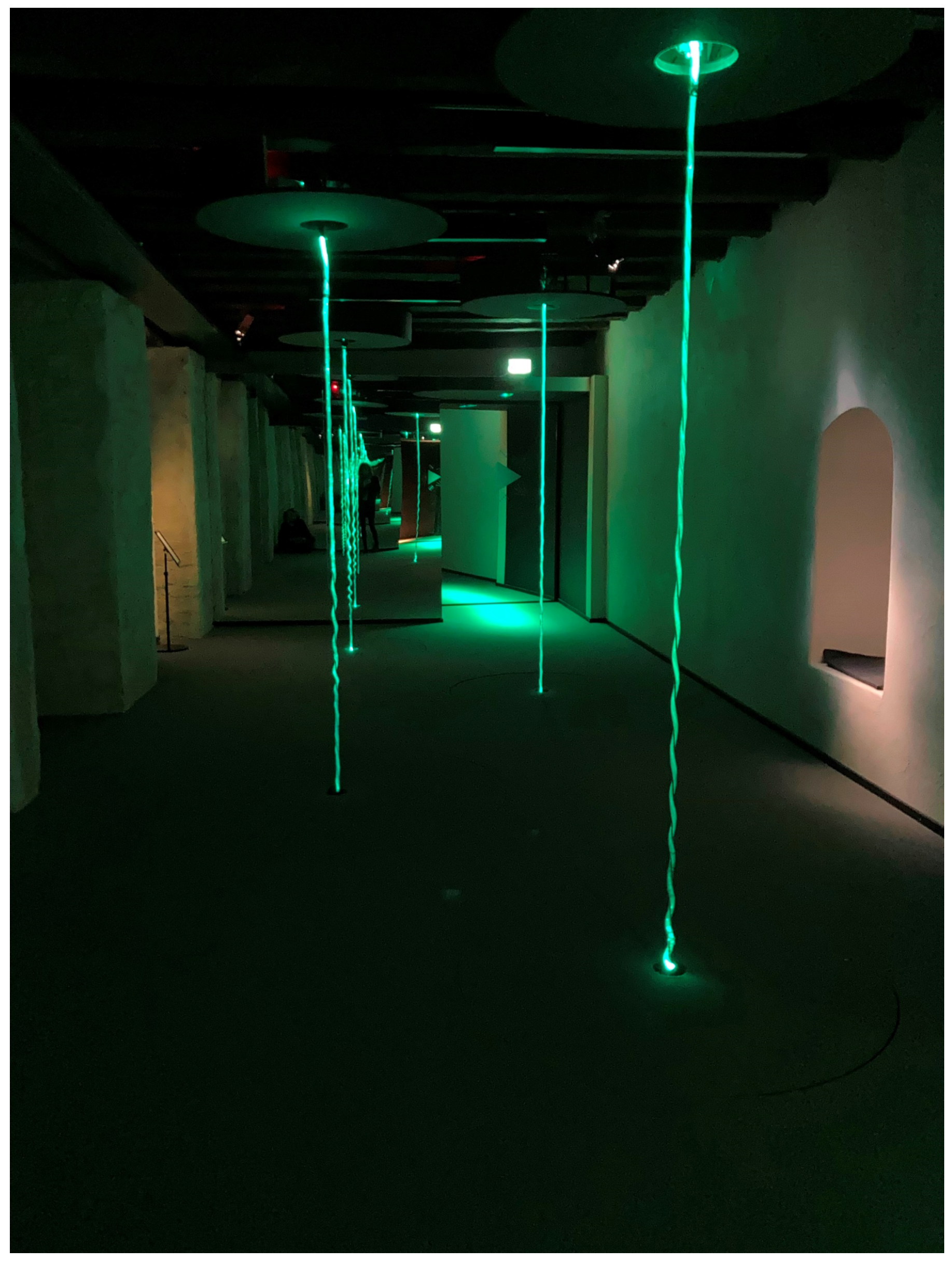

2.1. Sound Forest

The Sound Forest installation occupies an entire room. It consists of five light-emitting strings attached between the ceiling and the floor, five loudspeakers, five contact microphones set up to detect sound onsets for the strings, ten tactile transducers, and two mirrored walls. The installation was designed with accessibility in mind; the aim of the design was to provide rich multisensory experiences for all visitors, regardless of ability. Visitors can interact with the strings and receive feedback in the form of sounds from loudspeakers placed above the strings, light emitted directly from the strings, and vibrations that can be felt through circular platforms cut out of the floor. Visitors can pluck or strike a string by hand, thereby generating a sound. For a detailed description of the different interaction strategies observed for a prototype version of the Sound Forest installation, please refer to [68]. The platforms in Sound Forest rest on vibration dampers, which enables them to vibrate freely when set into motion by two Clark Synthesis TST239 Silver tactile transducers each [69]. The strings are decoupled from the platforms and are thus not affected by the vibrations. There are five platforms in the installation. Four of them have a diameter of 0.60 m, and one has a diameter of 0.75 m. The larger platform is designed to be more accessible for visitors using a wheelchair (see Figure 1b).
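The text does not specify how the contact-microphone signals are turned into string onsets. One common approach is an energy threshold, and the minimal Python sketch below assumes that approach; the function names `detect_onsets` and `synthetic_pluck_signal` and all parameter values (frame length, threshold, refractory period, 440 Hz test bursts) are hypothetical and are not taken from the installation.

```python
import math


def detect_onsets(samples, sample_rate, frame_len=256, threshold=0.1, refractory_s=0.1):
    """Return onset times (s) at which the frame RMS rises above `threshold`.

    Hypothetical energy-threshold detector: an onset is reported when the RMS
    of a non-overlapping frame crosses the threshold from below, and further
    onsets are suppressed for `refractory_s` seconds so that one pluck or
    strike is counted once. Reported times are quantized to frame starts.
    """
    onsets = []
    last_onset = -math.inf
    prev_above = False
    for start in range(0, len(samples) - frame_len + 1, frame_len):
        frame = samples[start:start + frame_len]
        rms = math.sqrt(sum(x * x for x in frame) / frame_len)
        above = rms > threshold
        t = start / sample_rate
        if above and not prev_above and t - last_onset >= refractory_s:
            onsets.append(t)
            last_onset = t
        prev_above = above
    return onsets


def synthetic_pluck_signal(sample_rate=8000, duration_s=2.0, onset_times=(0.5, 1.2)):
    """Silence with decaying 440 Hz bursts, standing in for a contact-mic signal."""
    signal = [0.0] * int(sample_rate * duration_s)
    for onset in onset_times:
        first = int(onset * sample_rate)
        for n in range(min(sample_rate // 4, len(signal) - first)):
            t = n / sample_rate
            signal[first + n] += math.exp(-t / 0.05) * math.sin(2 * math.pi * 440 * t)
    return signal


if __name__ == "__main__":
    sr = 8000
    print(detect_onsets(synthetic_pluck_signal(sr), sr))
```

The refractory period is the design choice that matters most here: without it, a string that rings and is struck again before it decays, or a noisy envelope hovering near the threshold, could trigger several sounds for a single gesture.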

In our previous work, children and adults with physical and intellectual disabilities were invited to freely explore the installation, playing on the strings for three-minute intervals [70]. The visitors could use whatever strategy they wanted to set the strings into motion (most participants used their hands). We have also explored how music producers can be supported by an introductory workshop focused on the perception of whole-body vibrations when composing musical haptics for Sound Forest [71]. Whole-body vibrations occur when a human is supported by a surface that is shaking and the vibration affects body parts remote from the site of exposure [72]. Studies have established a relationship between the perceived vibration and the magnitude, duration, frequency content, and waveform of the vibration signal. However, the interaction between these properties is not trivial and is also confounded by inter- and intra-subject differences [72]. Similar to the concept of a Head-Related Transfer Function (HRTF), which characterizes how an ear receives a sound from a point in space, different bodies have different transfer functions for vibrations, so-called Body-Related Transfer Functions (BRTFs). While an HRTF depends on the size and shape of the head, ears, and ear canal, among other factors, a BRTF depends on individual body properties such as weight [73]. As a result, different individuals may have different perceptual experiences in Sound Forest, even when the same signal is played. This highlights the importance of asking users what they actually perceive when interacting with the installation.
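In standard linear-systems terms (our notation, not taken from [73]), such a body-related transfer function can be written as the ratio between the vibration spectrum measured at a point on a body $b$ and the spectrum of the signal driving the tactile transducers:

$$
H_b(f) = \frac{A_b(f)}{X(f)}, \qquad L_b(f) = 20 \log_{10} \left| H_b(f) \right| \ \text{dB}
$$

where $X(f)$ is the spectrum of the drive signal and $A_b(f)$ the spectrum of the acceleration measured on body $b$. Because two visitors on the same platform receive the same $X(f)$, they are exposed to $A_{b_1}(f) = H_{b_1}(f)X(f)$ and $A_{b_2}(f) = H_{b_2}(f)X(f)$, so any difference between their transfer functions translates directly into a difference in the vibration spectra they feel.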

2.2. Participants

We collaborated with an existing student group at Dibber Rullen. The group consisted of four children (2F, 2M, aged 9–15). Student groups at Dibber Rullen are reconfigured at the beginning of each school year. One student left and another student joined the group in 2021; this section describes the final group constellation. The students in the group are mostly pre-verbal, meaning that they do not yet have verbal communication skills. They all have multifunctional physical challenges with varying motor skills and moderate to severe intellectual challenges, and all use wheelchairs. As reported by the parents of P1 (Participant 1), she is hard of hearing and has a visual impairment, a chromosome aberration, reduced mobility, a physical disability, and hypermobility in the joints. The parents of P2 reported that it is not clear whether she is hard of hearing or has a visual impairment. They also described that she can lift her arms and grab objects. The parents of P3 reported that they did not know whether he is hard of hearing or has a visual impairment. They described that he could not walk but could jump when sitting. The parents of P4 reported that he had no hearing loss or visual impairment. A detailed description of the musical background and music preferences of the participant group, as reported by the parents, is presented in Section 3.1.4.

2.3. Ethics Statement

The research procedure described in this paper was reviewed by the Swedish Ethical Review Authority (application No. 2021-06307-01). The study was carried out in accordance with the Declaration of Helsinki. We followed informed consent rules and guidelines for ethical research practices presented in the APA Ethical Principles of Psychologists and Code of Conduct [74], CODEX [75], ALLEA [76], and SATORI [77]. We also followed guidelines for inclusive language use (see, e.g., [78] for an overview of Swedish terms) and recommendations based on the International Classification of Functioning, Disability and Health (ICF; see [79]). In this paper, we use Person/People First Language (PFL) when writing about disability, as opposed to Identity First Language (IFL) (see [80]). All parents gave written informed consent before participation and agreed to the data being collected as described in the consent form. It was important to make sure that all students consented to participate at all times. This was ensured through direct communication with the teacher and teaching assistants. The teacher proxies also filled out a consent form and gave written informed consent prior to participation. Management of datasets that include personal information of study participants was compliant with the General Data Protection Regulation (GDPR). Procedures for the registration and storage of personal data (including sensitive personal data, Swedish: känsliga personuppgifter) were reviewed and approved by KTH’s data protection officer (dataskyddsombud@kth.se) and KTH’s Research Data Team. Only the Principal Investigator had access to videos and images of the students; none of this material is published as Supplementary Material. The full Ethics Approval can be obtained from the Swedish Ethical Review Authority or by emailing the first author. The Ethics Approval report includes all consent forms and information for research subjects (Swedish: personuppgiftsinformation), as well as the Data Management Plan (DMP) approved by KTH officials.

2.4. Prestudies

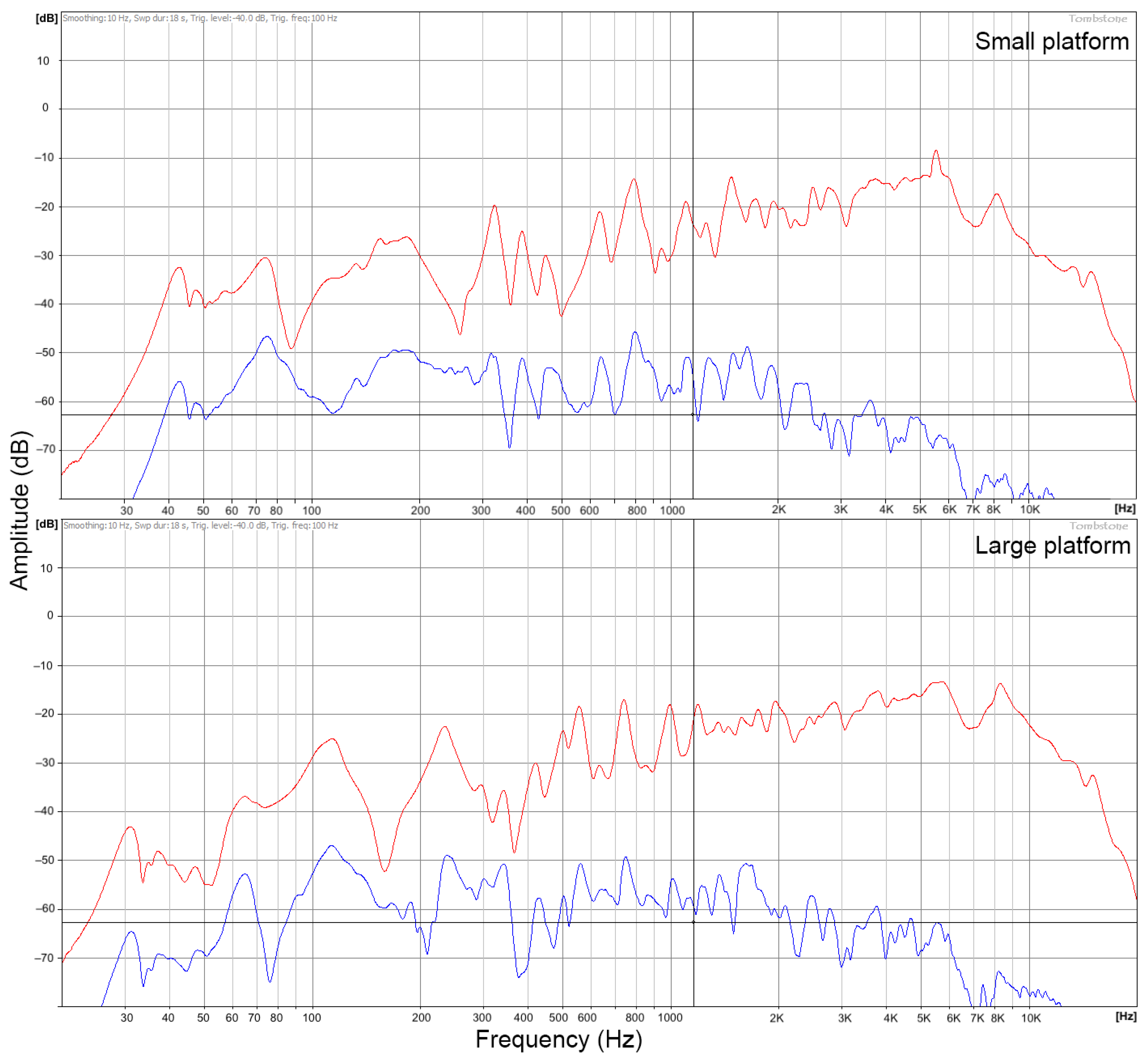

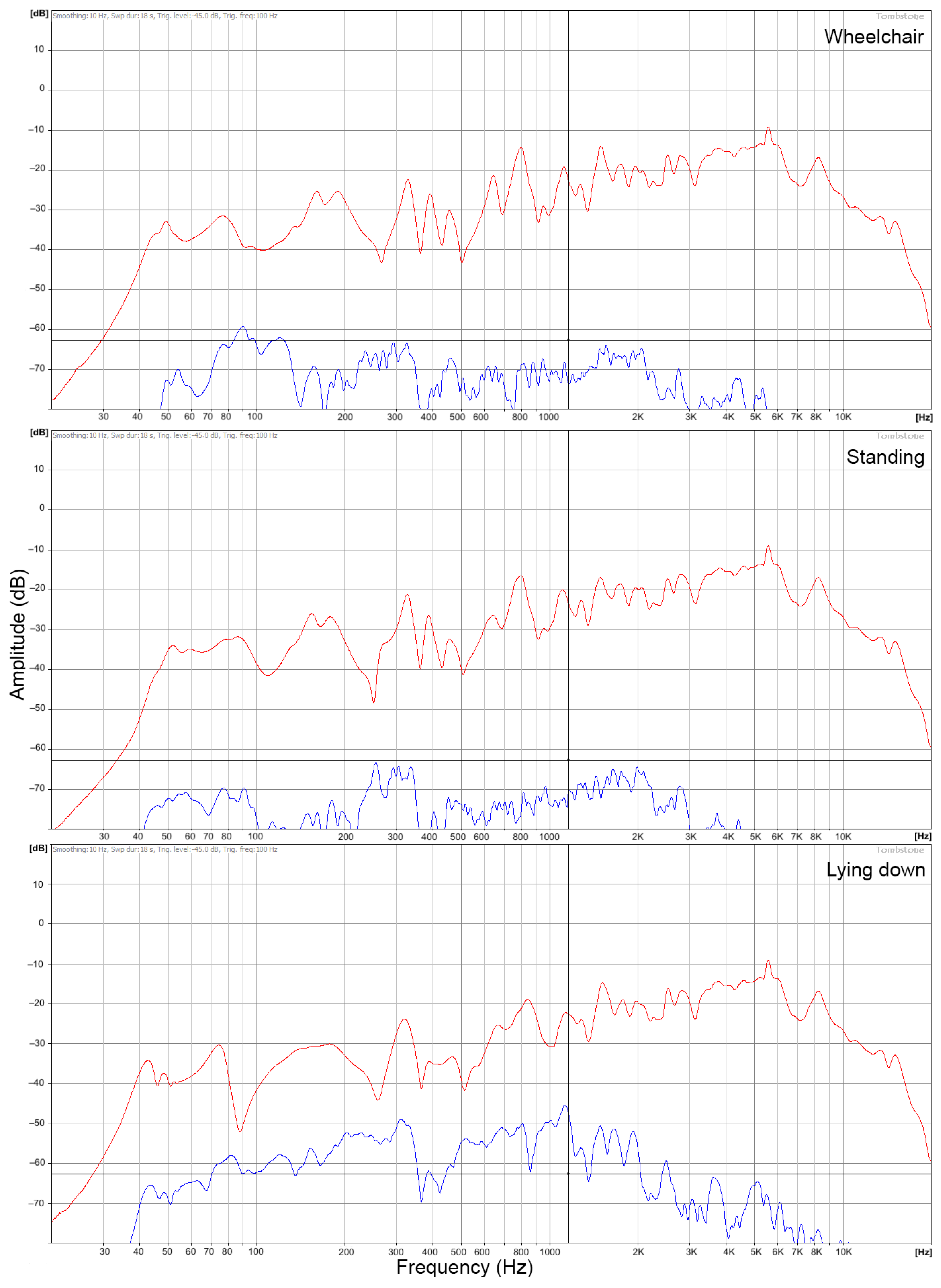

2.4.1. Physical Characterization of Vibrating Platforms

In order to understand the physical characteristics of the platforms in Sound Forest, we first measured the frequency responses of the differently sized platforms. The purpose of these measurements was to obtain frequency responses that could inform the design of the haptic feedback provided by the installation. Measurements were made using a Brüel & Kjær type 4393 accelerometer placed on the surface of the vibrating platforms and mounted directly on the wooden floor with petro wax, while both tactile shakers attached to the platform were excited with a sine sweep from 10 to 10,000 Hz over 18 s. In this way, the accelerometer moved jointly with the platform, making it possible to measure the vibrations perpendicular to the platform surface. The accelerometer was connected to a Brüel & Kjær type 2635 charge amplifier. A standard miniature microphone captured the audible output for each measurement. The inputs were routed to an RME BabyFace sound card and recorded using the software Tombstone [81], which also synthesized the sine sweep. The signals were recorded at a sampling rate of 44,100 Hz. The baseline measurement was made with the microphone placed next to the accelerometer, on the floor. The procedure was then repeated for three conditions with one of the researchers (M, age 31, weight 70 kg) (1) sitting in a wheelchair, (2) standing on the platform, and (3) lying down on the platform. For these measurements, the microphone was placed at the researcher’s ear to simulate an actual use-case scenario. For comparison, additional measurements were also made for another researcher (F, age 32, weight 46 kg) for conditions (1) and (3) on the small platform and for conditions (1) and (2) on the large platform.
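The sweep parameters stated above (10 Hz to 10,000 Hz over 18 s at 44,100 Hz) can be reproduced with the minimal Python sketch below. The text does not state whether Tombstone generated a linear or an exponential sweep; the sketch assumes an exponential (logarithmic) sweep, which is common in frequency-response measurements, and it is not the Tombstone implementation. The function names are illustrative.

```python
import math

F1_HZ = 10.0           # start frequency, from the text
F2_HZ = 10_000.0       # end frequency, from the text
DURATION_S = 18.0      # sweep duration, from the text
SAMPLE_RATE = 44_100   # sampling rate, from the text


def sweep_rate():
    """Rate constant L = ln(f2 / f1) of the assumed exponential sweep."""
    return math.log(F2_HZ / F1_HZ)


def instantaneous_frequency(t):
    """Frequency (Hz) of the assumed exponential sweep at time t (s)."""
    return F1_HZ * math.exp(t / DURATION_S * sweep_rate())


def exponential_sweep(amplitude=0.5):
    """Return the samples of an exponential sine sweep from F1_HZ to F2_HZ.

    The phase is the time integral of 2*pi*f(t); for
    f(t) = f1 * exp(t * L / T) this gives
    phi(t) = 2*pi*f1*T/L * (exp(t * L / T) - 1).
    """
    L = sweep_rate()
    n_samples = int(round(DURATION_S * SAMPLE_RATE))
    k = 2.0 * math.pi * F1_HZ * DURATION_S / L
    return [
        amplitude * math.sin(k * (math.exp((n / SAMPLE_RATE) * L / DURATION_S) - 1.0))
        for n in range(n_samples)
    ]
```

An exponential sweep spends equal time per octave: the 10 to 10,000 Hz range spans about 9.97 octaves, or roughly 1.8 s per octave. The 10 to 100 Hz decade, which covers much of the range usually considered for whole-body vibration, therefore receives 6 of the 18 s, compared with about 0.16 s for a linear sweep over the same range.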

2.4.2. Observation of Music/Dance Lesson

This step consisted of remotely attending a music lesson at the school. The purpose of this prestudy was to gain a better understanding of the type of musical material that the students usually interacted with during their music classes and of the musical interactions taking place during these sessions. The music class lasted 48 min and was video recorded. The session included dancing to music, interacting with a large colorful textile while listening to music, and playing musical instruments. Authors 1 and 2 attended the session and annotated in real time what they observed, including any questions that arose. We followed up on these questions through email correspondence with the teacher, focusing on: (1) the overall structure of the music classes at the school, (2) the type of music used, (3) the interplay between teacher/teaching assistants and students through gestures and dance, and (4) the time spent on dancing versus playing musical instruments. We subsequently summarized a set of themes to be further explored in an interview with the teacher and teaching assistants (see Section 2.4.3).

2.4.3. Interview with Teacher Proxies

As a follow-up to the steps outlined in Section 2.4.2, we conducted a semi-structured interview with the special education teacher responsible for the music/dance theme at the school and two teaching assistants who worked with the music/dance lessons. We discussed what we had observed during the lesson and how future experiments in Sound Forest could be designed to incorporate similar elements and structure. The overall purpose of this step was to obtain a better understanding of the pedagogical approaches used at the school, the communication tools used, and how we could best align our research methods with the students’ needs and the school’s pedagogical practice. The interview started with a short video introducing the teacher and teaching assistants to the Sound Forest installation [82]. We then asked questions about the following themes: (1) the thematic structure of the pedagogical practice at the school, (2) the teacher’s and teaching assistants’ interpretation of the students’ communication, (3) properties of the sound and music to be used in a future experiment in Sound Forest, (4) accessibility, e.g., the students’ motor skills and strategies for interaction that could be used in Sound Forest, (5) preferences for visual feedback and lights, and (6) procedure, i.e., how the study should be designed to best account for the students’ needs. The full interview protocol is provided as Supplementary Material. The interview lasted 44 min. It was manually transcribed by author 1. The results were then discussed with author 2 in order to decide how best to move forward.

2.4.4. Questionnaire

In order to obtain a better understanding of the musical background and music preferences of the students, we distributed a questionnaire to the parents of all students at the school (n = 19). A full analysis of all results is beyond the scope of this paper; therefore, we only present the findings for the four children who participated in our study. The purpose of this questionnaire was to investigate (1) whether the students played any musical instruments or sang, and which musical instruments and tools they had used, (2) their interest in music overall, (3) what type of music they usually prefer to listen to, and (4) obstacles that may prevent the students from participating in music-making (if any). Most questions began with a closed question with the possible answers “Yes/No/I don’t know”. Where applicable, this was followed by a free-text question. We also included one question in which the parents were asked to rate how strongly they agreed with the statement that their child is interested in music, on a scale from 0 (strongly disagree) to 10 (strongly agree). The parents were encouraged to fill out the form together with their child. A translated version of the questionnaire is available as Supplementary Material. The answers were analyzed to identify common themes across participants. This analysis was performed separately by authors 1 and 2, who then compared their individual results and conclusions, merging overlapping themes into the summary presented in Section 3.1.4.

2.4.5. Music Listening Sessions

Two main conclusions could be drawn from attending the lesson described in Section 2.4.2: the music used during the class mostly had a clear and prominent rhythm, and dance was an important part of the musical interactions taking place. The questionnaire results also suggested that the students had a broad taste in music overall. These conclusions prompted us to further explore how the students interacted with and responded to different musical elements. In particular, we wanted to investigate how they reacted to music with different rhythmic structures. Informed by these observations, we created two playlists with short excerpts of a range of different sounds that could be played to the students during their music lessons. The music was divided into three categories: (1) music with a clear rhythmic structure (e.g., dances such as the waltz), (2) music with a more complex rhythmic structure (e.g., an excerpt from a radical jazz improvisation session), and (3) music without a clear rhythmic structure (e.g., ambient or drone music) (see Table 1). The total duration of each playlist was ten minutes. This length was chosen, after discussion with the teacher, in order to reduce the risk of fatigue. Each playlist consisted of six music excerpts of 1 min and 35 s each. All sounds were loudness-normalized using LUFS (Loudness Units relative to Full Scale). Breaks of five seconds were inserted after each excerpt to maintain a structure of music and pauses similar to the one used during the observed music lesson; the music/dance class included many different musical excerpts, with pauses and PODD discussions in between. The two playlists are presented in Table 1. The sounds are also available as YouTube playlists, see [83,84]. Each playlist was played to the students twice, on different occasions, using a Bluetooth speaker. The teacher had initially tried to play both playlists during the same lesson but soon realized that it would be better to split the activity into multiple listening sessions. The teacher and teaching assistants used PODD to ask the students whether they liked or disliked the sounds as they were played. For this, they used PODD picture cards displaying a sad and a happy emoji, interpreting the students’ responses in the form of bodily expressions (e.g., pointing at a picture card) and nonverbal sounds. They also asked what the students liked or disliked about the music (e.g., whether it was boring or fun). The teacher filmed the students’ reactions using a mobile phone and annotated reactions to the different sounds. Authors 1 and 2 watched the videos together with the teacher, discussing which sounds were most and least appreciated. This viewing session was recorded and transcribed using an automatic transcription tool [85].
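The playlist arithmetic above can be checked with a small Python sketch. It uses the stated values (six excerpts of 1 min 35 s, each followed by a 5 s break), which add up to the ten-minute total, and shows how a loudness-normalization gain follows from a measured integrated loudness. The target of -23 LUFS and the example measurement are hypothetical, since the text does not state a target level, and the loudness measurement itself (ITU-R BS.1770 K-weighting and gating) is assumed to come from a separate meter.

```python
EXCERPT_S = 95       # 1 min 35 s per excerpt (from the text)
BREAK_S = 5          # silent break after each excerpt (from the text)
N_EXCERPTS = 6       # excerpts per playlist (from the text)
TARGET_LUFS = -23.0  # hypothetical target; the paper does not state one


def playlist_duration_s(n=N_EXCERPTS, excerpt_s=EXCERPT_S, break_s=BREAK_S):
    """Total playlist length when every excerpt is followed by a break."""
    return n * (excerpt_s + break_s)


def schedule(n=N_EXCERPTS, excerpt_s=EXCERPT_S, break_s=BREAK_S):
    """(start, end) time in seconds of each excerpt within the playlist."""
    return [(i * (excerpt_s + break_s), i * (excerpt_s + break_s) + excerpt_s)
            for i in range(n)]


def normalization_gain(measured_lufs, target_lufs=TARGET_LUFS):
    """Gain in dB and as a linear factor that moves a measured integrated
    loudness to the target. A gain of G dB shifts integrated loudness by
    about G LU, so the gain is simply target minus measured."""
    gain_db = target_lufs - measured_lufs
    return gain_db, 10 ** (gain_db / 20)


if __name__ == "__main__":
    total = playlist_duration_s()
    print(total, total / 60)          # 600 10.0
    print(schedule()[:2])             # [(0, 95), (100, 195)]
    print(normalization_gain(-17.0))  # hypothetical excerpt measured at -17 LUFS
```

Computing the gain as a simple difference keeps the sketch independent of any particular loudness meter; in practice the measured value would come from a BS.1770-compliant tool.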

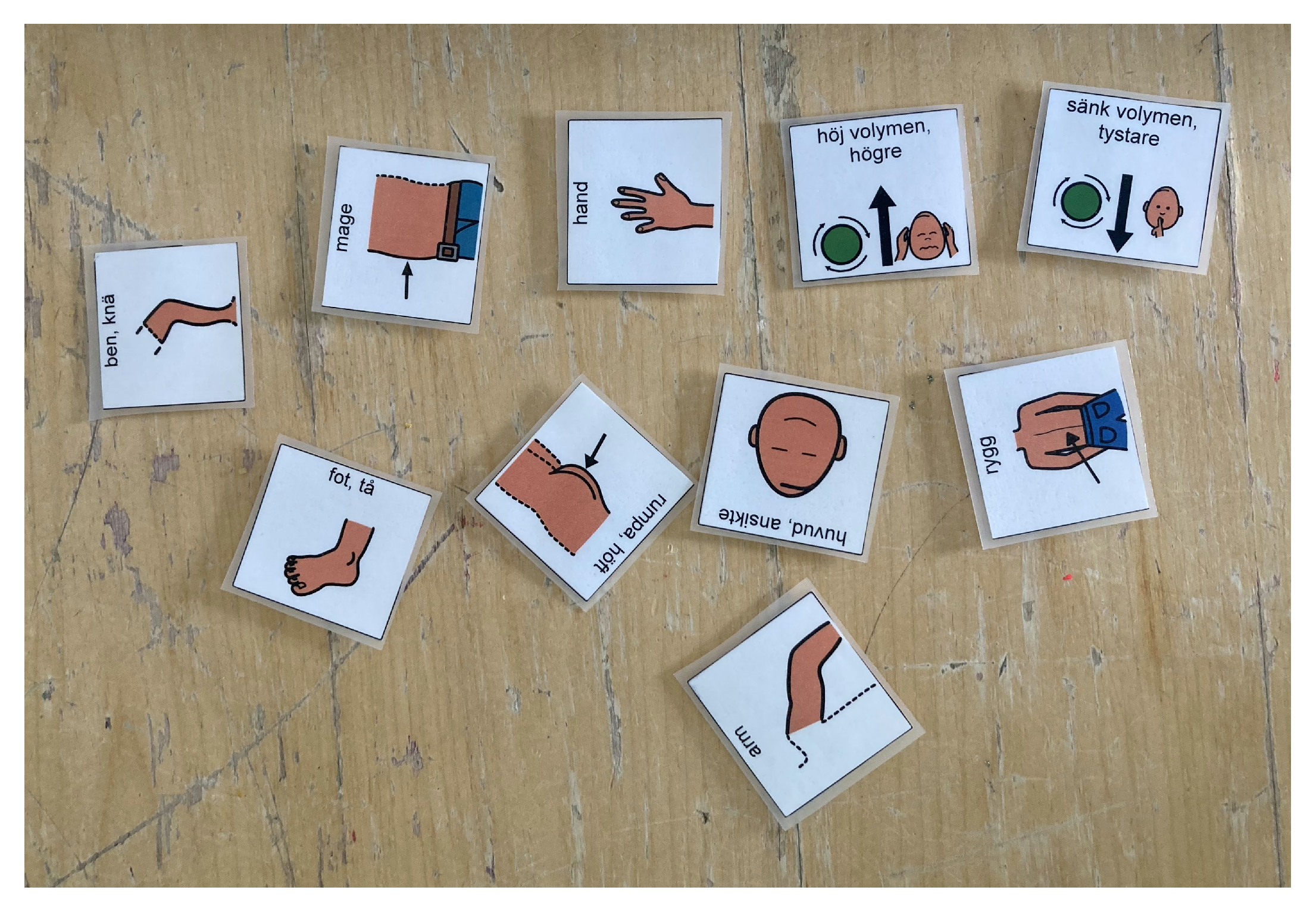

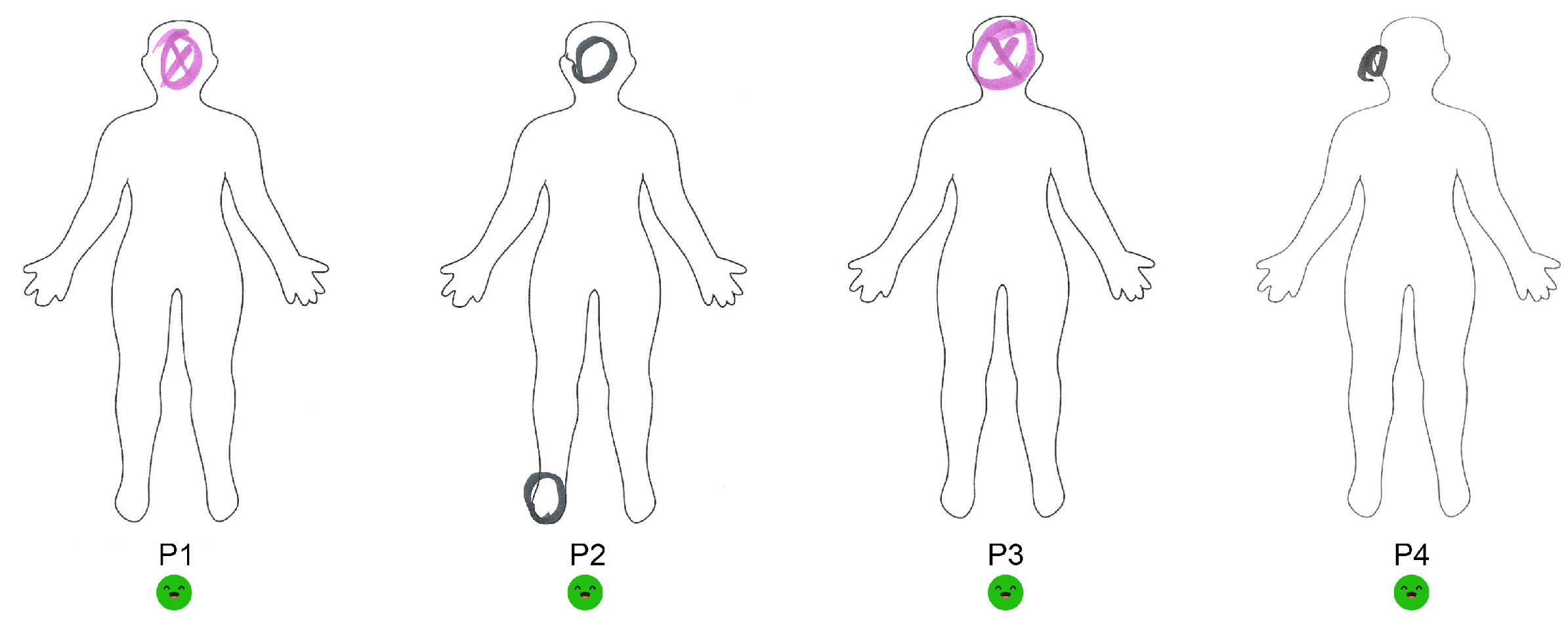

2.4.6. Introducing the Notion of Musical Haptics through PODD

The interview with the teacher and teaching assistants revealed that several students had pages in their PODD books that focused specifically on sound and music. The PODD books are individual and may therefore differ somewhat between students, depending on their interests. To understand the images and words used when communicating about music, we asked for the parents’ permission to let the teacher photograph the sound- and music-related pages in the students’ books. After analyzing these pages, we proposed a set of concepts that could be of interest when discussing musical haptics with the students: (1) places on the body (e.g., belly, chest, head, hands, arms, legs, and feet), (2) descriptions of sensations (pleasant or unpleasant, like or dislike, happy or sad), and (3) intensity (whether a vibration should be stronger or softer). It was important to introduce these concepts early on to prepare the students for the experiment in Sound Forest. The teacher brought the above-mentioned concepts to the staff member responsible for PODD at the school and returned with a set of pictures that could be used. Examples are presented in Section 3.1.6. The teacher then introduced these concepts to the students through PODD discussions.

2.4.7. Music Listening Sessions with Haptic Music Players (HMPs)

The aim of the last prestudy was to introduce the students to musical haptics on a more practical level. Based on our conversations with the teacher and teaching assistants, we understood that repetition is an important aspect of the school’s pedagogical practice. It was also crucial to introduce the sounds that would be used in Sound Forest before the students visited the installation. For these reasons, we used three Haptic Music Players (HMPs) that allowed the students not only to hear sounds but also to perceive simultaneous vibrations. The devices used were: HMP 1, a haptic pillow (the HUMU Augmented Audio Cushion [86]); HMP 2, a plush toy backpack with an embedded haptic strap (the Woojer Strap Edge [87]); and HMP 3, a custom-built plush toy with an embedded full-range speaker (Visaton FRS 8-8 Ohm [88]) and a tactile transducer (TT25-8 PUCK Tactile Transducer Mini Bass Shaker [89]). The latter visually resembled the Star Wars character Chewbacca. The three HMPs are displayed in Figure 3. The Haptic Music Players differed in terms of affordances. The haptic pillow encourages the user to rest their head on the device. The plush toy backpack is intended to be worn on the back or the belly. The custom-built Chewbacca plush toy can be placed on the lap and explored with the hands. The design of the two plush toys was informed by comments made by the teacher during a meeting in which the haptic pillow and the Woojer Strap Edge were demonstrated. This meeting focused on how best to design HMPs for this specific student group and on which modes of interaction and bodily locations might be most appropriate.

The teacher had three months to test the HMPs with the students. Two weeks before the experiment in Sound Forest, a sketch of the music to be played in the installation was sent to the teacher for testing with the HMPs. This 2.5 min long sound sample is available as Supplementary Material (see “2.4.7.demo-soundforest.wav”). Following the same procedure as for the music listening sessions, the teacher filmed the students’ interactions with the HMPs and annotated observations. We subsequently conducted two semi-structured interviews with the teacher focusing on usability and accessibility themes. The results are presented in [90]. The most important findings in relation to the current study are summarized in Section 3.1.7.

2.5. Experiment in Sound Forest

2.5.1. Procedure

The student group and the teacher, as well as three teaching assistants, were invited to Sound Forest to explore the sounds and multisensory experiences provided by the installation. The week before the experiment, we organized a 30-min meeting with the teacher and the teaching assistants (including observer 3, see description below) who would be present during the experiment. The purpose of this meeting was to discuss the appropriateness of the proposed methodology. Adjustments to the procedure were made based on the teacher’s and teaching assistants’ comments. Since we had quite limited time with the student group on-site during the experiment, instructions and questionnaires were distributed on the day before the experiment. Upon arrival in Sound Forest, the students first had a couple of minutes to familiarize themselves with the room and the sounds. This was done with the lights on (the installation is usually dark, with light emitted only by the strings in the room and two external LED lamps; see Figure 1a versus Figure 1b). Each wheelchair was placed on top of a platform so that the student could reach the string. The students stayed with the same string and platform throughout the experiment. Three of the students used the small platforms, and one student used the larger platform.

Once the students had familiarized themselves with the room and the teaching assistants had taken off their shoes, we went through the procedure. The procedure was structured as follows: twenty minutes of music were divided into four conditions. Each condition was five minutes long and was followed by a pause in which the music was turned off (i.e., silence in the room). The lights were turned on during these pauses. The conditions were: (1) sitting in a wheelchair, haptics on (WH); (2) sitting in a wheelchair, haptics off (W); (3) sitting or lying on the floor, haptics on (FH); and (4) sitting or lying on the floor, haptics off (F). This condition order was chosen to minimize the number of times that the students had to be lifted. The order was reversed for two of the students to investigate whether starting with haptics had an effect (P1 and P4 started with haptics off, whereas P2 and P3 started with haptics on). During the haptic feedback conditions, the teacher and teaching assistants asked the students where in the body they could feel the vibrations and whether they liked or disliked the sensation(s). The teacher and teaching assistants used the PODD images presented in Figure 4 to ask where the vibrations could be felt. To enable the use of PODD books in the dark, each book was equipped with a small reading lamp. After each haptic condition, the teacher and teaching assistants filled out body map questionnaires (see [91,92]) to mark where the students had perceived vibrations. A body map can broadly be defined as an image outlining the human body. The body map questionnaire also included one multi-line free-text question:

“Do you interpret it as though the student liked or disliked the vibrations? Please describe if there was anything in particular that the student expressed that they liked/disliked”.

Author 1 kept track of the timing between conditions and distributed and collected the body maps after each condition. Author 2 operated the on/off switches for the haptic feedback amplifiers and the audio, as well as the lights. When not busy with other tasks, author 1 (observer 1), author 2 (observer 2), and one of the teaching assistants (observer 3) focused on observing the interactions taking place in the room. They were later interviewed about what they had observed during the experiment (see the detailed description below). The entire session was filmed using four stationary video cameras (one for each student and platform). In order to capture the students’ nonverbal communication (facial and bodily expressions) when communicating with the teacher and teaching assistants about what they perceived, two researchers (including author 3) from KTH Royal Institute of Technology were invited to serve as mobile camera operators. All audio was recorded using a Zoom H4n Pro handy recorder. Alongside the audio and video recordings, we logged the timestamp and amplitude of every detected onset for each string.
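The counterbalanced condition order described above can be sketched in a few lines. This is an interpretation rather than the authors’ tooling: it assumes that “reversed” means swapping haptics on/off within each posture while keeping the wheelchair conditions first, which preserves the single move to the floor that motivated the order. The `Condition` type and the function names are hypothetical.

```python
from dataclasses import dataclass

CONDITION_MIN = 5  # each condition lasted five minutes (from the text)


@dataclass(frozen=True)
class Condition:
    code: str      # "WH", "W", "FH" or "F"
    posture: str   # "wheelchair" or "floor"
    haptics: bool  # haptic floor on or off


def condition_order(haptics_first: bool) -> list[Condition]:
    """Wheelchair block first, then floor block, so each student is lifted once.

    Within each posture block, haptics runs on-then-off or off-then-on
    (assumed reading of the counterbalancing described in the text).
    """
    states = (True, False) if haptics_first else (False, True)
    order = []
    for posture, prefix in (("wheelchair", "W"), ("floor", "F")):
        for haptics in states:
            code = prefix + ("H" if haptics else "")
            order.append(Condition(code, posture, haptics))
    return order


def posture_changes(order: list[Condition]) -> int:
    """Number of wheelchair/floor transitions, i.e. lifts between conditions."""
    return sum(1 for a, b in zip(order, order[1:]) if a.posture != b.posture)


# Starting haptic state per participant, as reported in the text.
HAPTICS_FIRST = {"P1": False, "P2": True, "P3": True, "P4": False}

if __name__ == "__main__":
    for pid, first in HAPTICS_FIRST.items():
        order = condition_order(first)
        print(pid, [c.code for c in order],
              "lifts:", posture_changes(order),
              "music (min):", CONDITION_MIN * len(order))
```

Under this reading, P2 and P3 follow the order listed in the text (WH, W, FH, F), while P1 and P4 follow W, WH, F, FH; both orders require a single lift and total twenty minutes of music.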

Micro-phenomenological interviews with the observers were carried out after the experiment. This interview method was used to access the observers’ passive memory (or background information) [93] in order to elicit detailed first-person descriptions that might otherwise remain hidden from traditional methods [94]. Micro-phenomenology focuses on the how rather than the what of the experience, which distinguishes it from other qualitative methods [95]. The technique has been applied in scientific research to explore pain in fibromyalgia patients [96], the emergence of seizures in patients with epilepsy [97], and musical experiences [98]. The interview procedure was as follows:

1. The interviewer introduces the objectives of the interview. In this case, the observers (or interviewees) were asked to select a specific moment in the students’ interaction with the Sound Forest installation that they had found memorable. It is important to emphasize that exploring the observer’s own subjective experience is crucial.

2. Next, the interviewer explains the micro-phenomenology process (described in step 3, below) and some formalities, including the duration of the interview and the possibility of withdrawing from the session at any time.

3. The interviewee is asked to select a specific moment to explore and to describe it in general terms. The interviewer then recapitulates and asks open questions to access further contextual information (such as what they were seeing, hearing, or feeling), helping the interviewee access their passive memory. Next, the interviewer asks more specific questions focusing on both the temporal aspects (such as what happens before and after a given description) and the how of the experience. These questions lead to further descriptions, which are deepened by repeating the same procedure.

4. To close the session, the interviewer summarizes the interview content and asks the interviewee to clarify, comment on, and correct any accounts that were misunderstood.

In our research, we used micro-phenomenological interviews to explore potential tensions and overlaps arising from the interpretation of the students’ attitudes and body language while they interacted with the installation. The three observers all had different roles in the project and could therefore contribute different perspectives: observer 1 (author 1) is a researcher focused on sound and music computing, who was the principal investigator and responsible for the study; observer 2 (author 2) is a composer responsible for the programming, music, and multisensory design; and observer 3 is a Master’s thesis student in engineering with many years of experience working as an assistant at Dibber Rullen, who is currently carrying out a project focused on developing instruments for the students, building on [99].

One week after the experiment, author 1 conducted an online semi-structured group interview with the teacher and the teaching assistants (excluding observer 3). The interview used a stimulated recall methodology [100]: replayed video from the experiment was used to prompt commentary on the participants’ thought processes at the time. The teacher and teaching assistants were shown one video clip from each condition and student, and asked (1) what happened in that particular instance and (2) how they interpreted the student’s reactions. For haptic conditions, this also involved visual inspection of the body maps. The video clips, which were selected by author 1 after the labeling process described in Section 2.5.2, included interactions with the strings, bodily expressions, instances with little movement or no bodily expression, and PODD discussions. The purpose of this step was to collect richer information about the communication between the teacher proxies and the students regarding like versus dislike. After commenting on all the videos, the session was concluded with six open-ended questions focused on accessibility:

How appropriate were the music, lights, and vibrations for the particular user group?

In general, what worked well/did not work well?

Do you have any suggestions for how the installation could be improved in order to be more accessible?

Did the students, overall, have any preferences when it comes to presence versus absence of haptic feedback?

Did the students, overall, have any preferences when it comes to experiencing the installation sitting in a wheelchair or being on the floor?

If you had your own multisensory room at the school, with the possibility to have sound, lights, and vibrations, what would such a room look like and how would it work?

The purpose of this final step was to obtain a better understanding of how future versions of the installation could be improved to be more accessible for the student group, both in terms of multisensory design and mapping strategies.

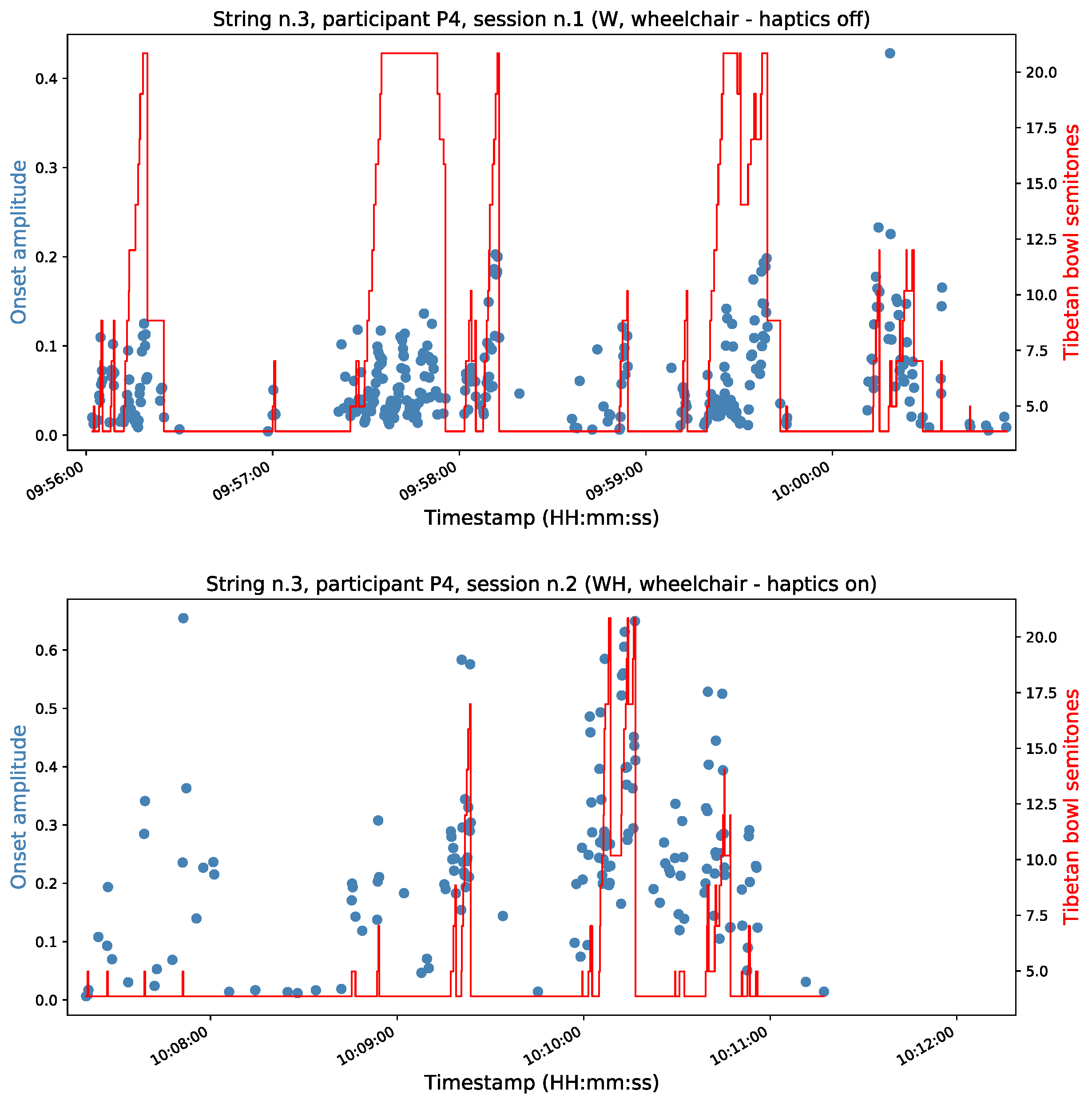

2.5.2. Analysis

Using the collected onset data, we resynthesized the sounds created by each student for each condition and generated temporal plots representing these interactions (see Figure 5). All recorded videos were segmented by condition. Author 1 then labeled the following time points in the recordings: interactions with the string, PODD discussions, other gestural expressions (arm and leg movements, e.g., clapping or waving the hands), and non-vocal expressions. Interactions with the strings were labeled according to the strategy used to trigger a sound, inspired by the Exploratory Procedures (EPs) in haptic object exploration [101] (e.g., movement patterns such as lateral motion or contour following) and the categories identified in [68] (e.g., plucking, pulling, shaking, muting). Annotations regarding the level of interplay between the student and the teacher/teaching assistants versus autonomous play were also made for each video clip. Author 1 manually counted the onsets performed by the student and by the teacher or teaching assistants, using a click counter. This procedure was necessary because the recorded onset data alone do not reveal which sounds were triggered by the student and which by the teacher proxies. The manual counts were then compared with the recorded onset data to separate the number of onsets triggered by the student from those triggered by the teacher and teaching assistants. The total duration of interaction was calculated for each student and condition; interaction time was defined as the time from when the student touched the string until the string was released (if no sound was made) or until the string entered idle mode (if a sound had been triggered). Idle mode is the state in which the string has returned to its original color and does not make any sound, i.e., the system is waiting for a new trigger from the user (see Section 3.2.1).
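The interaction-time definition and the student/proxy onset split can be sketched as follows. The sketch assumes that each touch episode is logged as a touch time, a release time, and the onsets it produced, and that the string enters idle mode a fixed `IDLE_AFTER_S` seconds after its last onset; that timeout, the `Episode` log format, and the example numbers are hypothetical (the actual idle behavior is described in Section 3.2.1). Subtracting the manual click-counter tally from the logged total is one plausible way to perform the separation, not necessarily the exact procedure used.

```python
from dataclasses import dataclass, field

IDLE_AFTER_S = 4.0  # hypothetical: seconds from last onset until idle mode


@dataclass
class Episode:
    """One touch of the string by the student (hypothetical log format)."""
    touch_s: float                                       # string touched
    release_s: float                                     # string released
    onsets_s: list[float] = field(default_factory=list)  # detected onsets


def episode_duration(ep: Episode, idle_after_s: float = IDLE_AFTER_S) -> float:
    """Interaction time for one episode, following the definition in the text.

    No sound triggered: touch -> release.
    Sound triggered: touch -> idle mode, approximated here as the last
    onset plus a fixed idle timeout.
    """
    if not ep.onsets_s:
        return ep.release_s - ep.touch_s
    return max(ep.onsets_s) + idle_after_s - ep.touch_s


def total_interaction_s(episodes, idle_after_s: float = IDLE_AFTER_S) -> float:
    """Sum over episodes; assumes episodes do not overlap in time."""
    return sum(episode_duration(ep, idle_after_s) for ep in episodes)


def student_onsets(recorded_total: int, proxy_count: int) -> int:
    """Onsets attributed to the student: all logged onsets minus those the
    manual click count attributed to the teacher or teaching assistants."""
    if not 0 <= proxy_count <= recorded_total:
        raise ValueError("proxy count must lie between 0 and the recorded total")
    return recorded_total - proxy_count


if __name__ == "__main__":
    episodes = [
        Episode(10.0, 11.5),                # touched, no sound
        Episode(20.0, 21.0, [20.2, 21.0]),  # two onsets, then idle
    ]
    print(total_interaction_s(episodes))    # 6.5  (1.5 + 5.0)
    print(student_onsets(40, 12))           # 28
```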

The micro-phenomenological interviews were analyzed by author 3. The interview material was automatically transcribed using a transcription tool [85] and then corrected manually to ensure accuracy. The data were analyzed using thematic analysis [102]: the interviews were read several times in search of patterns. Themes were expected to arise as similarities or differences in how the observers perceived the users’ behaviors. Insights linked to responses found in other datasets (i.e., the interviews with the teacher and teaching assistants, described below) were also considered.

The final interview with the teacher and teaching assistants was transcribed manually and analyzed by author 1. For the first part of the interview, i.e., the stimulated recall part, content analysis [103] was used to group quotes into themes. While thematic analysis provides a purely qualitative account of the data, content analysis takes a descriptive approach both to coding the data and to interpreting quantitative counts of the codes (see [104,105,106]). Accordingly, we defined an inclusion criterion: a code had to be supported by at least three quotes to be considered a theme. For the second part of the interview, i.e., the six concluding questions, responses were summarized per question.
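The three-quote inclusion criterion amounts to a frequency filter over coded quotes. The sketch below assumes the coding step yields (code, quote) pairs; the function name and the placeholder codes in the usage example are hypothetical and do not correspond to the actual themes reported in Table 6.

```python
from collections import Counter

MIN_QUOTES = 3  # inclusion criterion from the text


def themes_from_codes(coded_quotes, min_quotes: int = MIN_QUOTES):
    """Keep codes supported by at least `min_quotes` quotes.

    coded_quotes: iterable of (code, quote) pairs from the coding step.
    Returns (code, count) pairs, most frequent first.
    """
    counts = Counter(code for code, _quote in coded_quotes)
    return [(code, n) for code, n in counts.most_common() if n >= min_quotes]


if __name__ == "__main__":
    # Placeholder codes and quotes, not data from the study.
    coded = [("code A", "q1"), ("code B", "q2"), ("code A", "q3"),
             ("code A", "q4"), ("code C", "q5"), ("code B", "q6")]
    print(themes_from_codes(coded))  # [('code A', 3)]
```

Keeping the counts alongside the surviving codes reflects the descriptive, count-based side of content analysis that distinguishes it from thematic analysis.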

4. Discussion

The prestudies described in Section 3.1 all provided information that was crucial to the planning and execution of the next steps of the project. For example, the physical characterization of the platforms provided valuable information about their frequency responses. The results highlighted differences in resonances depending not only on platform size and on whether the subject was sitting in a wheelchair, standing, or lying on the platform, but also on individual bodily properties. Of course, this affected how the multisensory experience was perceived by different students in the different conditions. The observation of the dance/music lesson enabled us to obtain insights into the music practice at Dibber Rullen and the ways in which the students danced to and interacted with music and musical instruments. We observed considerable differences among students in how much they moved to the music. We also concluded that most students used hand gestures and upper body movements to express themselves, although some also used their legs. The initial interview with the teacher and teaching assistants provided valuable insights into the pedagogical structure at the school and the thematic structure of the education. The questionnaire introduced the parents as proxies. This was an important step, since it allowed us to obtain a better understanding of the students’ musical background and interests, going beyond what had been observed at the school. Results from the music listening sessions informed our sound design process. We noticed that although there had been a strong focus on dance and clear rhythms during the observed music/dance lesson, complex structures and soundscape sounds were also popular among the students. For the final sound design, we tried to avoid sounds with properties similar to the excerpts that were less appreciated (excerpts 7 and 8). However, it should be stressed that it is difficult to know why certain sounds appeared to be more popular than others, and fatigue also seemed to play a part in this context. Finally, we used Haptic Music Players to introduce the students to the PODD pictures used to describe musical haptics. This also allowed us to test the first version of the sound design for Sound Forest before the actual experiment. Overall, the haptic pillow (HMP 1) and the custom-built plush toy (HMP 3) worked well, possibly because they had built-in speakers. The plush toy backpack (HMP 2), however, had to be connected to external headphones, which added another step to the setup process and made it more difficult to debug.

A number of conclusions could be drawn from the experiment in Sound Forest (see Section 3.2). First of all, the sound design used in the experiment appeared to work well in terms of creating a relaxing and ever-changing sonic experience, with a constant yet not overwhelming vibration coming from the haptic floor. Based on some of the feedback, the vibrations could perhaps have been even stronger. Analysis of video and onset data did not suggest any clear difference between haptic and nonhaptic conditions. Rather, we observed large interpersonal differences. These were reflected in the interaction strategies used (see Table 4), in the duration of play (ranging from approximately 1 min for P2 to 10 min for P4), and in the total number of sound-producing onsets (ranging from 6 to 528, see Table 3). Naturally, the different sound excitation styles resulted in different sonic outputs for different users. For sound examples, please refer to the Supplementary Material, which includes resynthesized sound files for each student and condition. Regarding the interaction, it should be stressed that we observed a tendency towards fatigue as the experiment progressed (at least for P1, P3, and P4). For P1, this effect was also confirmed by the teacher proxies in the final interview. This further complicates any attempt to draw conclusions about differences between conditions.

Overall, using PODD and body maps to communicate about musical haptics with the students seemed to work well (see Section 3.2.3). The chosen strategy, which involved presenting a selection of bodily locations as images and then asking a follow-up question about like versus dislike, was mostly successful. There was indeed a correspondence between what the proxies observed and what was described using PODD, perhaps because the students had already practiced using the tools at the school. However, the interview with the teacher and teaching assistants also highlighted limitations of the methods employed. These limitations mainly concerned the translation between PODD images and body maps and the influence of the chosen text and picture representations. For example, the PODD images lacked the level of detail needed to communicate singular versus plural (e.g., “one foot” versus “two feet”). Pointing could be used for clarification in such situations. We also concluded that the body map should ideally display not only the front but also the back of the body.

Analysis of the micro-phenomenological interviews (see Section 3.2.4) revealed that the observers could identify moments of active listening, including active listening to haptic feedback. The observers also discussed and identified social dimensions of the musical experience and musical play. Moreover, these interviews brought forward challenges that arose when comparing PODD responses to observed body language, as well as discrepancies in interpretation between different observers.

As can be seen in Table 6, a main conclusion from the Stimulate and Recall part of the final interview with the teacher and teaching assistants was that they often used prior knowledge about the students and their previously displayed behaviors at the school to interpret reactions in Sound Forest. Discussion during the Concluding Questions part of the interview also highlighted how the Sound Forest installation could be made more accessible for children with limited movement. For example, the installation should ideally include elements or interaction points that could be tuned specifically to those who can move very little, in order to provide rich experiences for all. The multisensory design could also be customized to encourage more spontaneous discovery and to support different types of felt experiences, depending on personal preference. One way to achieve this, as suggested by one of the teaching assistants, would be to add elements that allow tactile exploration for those who cannot see, for example tactile elements placed on the walls of the installation. Analysis of the videos revealed that several of the students used nonverbal sounds and clapping to express themselves in the installation. We therefore believe that detecting such sounds (for example, using machine learning techniques) and triggering sonic output based on them could be one way of making the installation more accessible to users with limited movement.

Reflecting on the multisensory experience delivered in Sound Forest through the lens of the three pillars of inclusive design discussed in [66], i.e., accessibility, usability, and value, a few conclusions could be drawn. Regarding accessibility, the installation setup appeared to provide access to creative activities for P3 and P4. It allowed for independent use, either immediately (as for P4) or after a teacher demonstration (as for P3). However, the creative possibilities were somewhat limited for P1 and P2, who perhaps would have benefited from a higher string sensitivity. The sensitivity of the contact microphones was set to detect gentle and subtle touching. Unfortunately, the level was not high enough to allow all students, in particular those with less hand strength, to easily trigger sounds. This issue could be addressed by increasing the sensitivity of a specific string even further. However, this would have the side effect of detecting triggers caused by the other strings, so that the installation would play sounds without any physical interaction: the microphones would pick up sounds from the room, not only those from the interaction with the string in question. These aspects should be considered in future versions of the multisensory design for Sound Forest, together with alternative sensing approaches for detecting interactions that could make the installation more accessible.

In terms of usability, the interactions appeared to be rather intuitive for P3 and P4, but less so for P1. P2 specifically told the teaching assistant that it was too easy to play on the string, suggesting that she found the interaction intuitive. However, although she perceived the interaction as easy, she did not play very much.

Regarding creative value, it appeared as though P4 managed to explore a wide range of sonic outcomes, using many different types of gestural techniques. P3 was also rather expressive in this context, playing on the string, making dance gestures, spinning around in the multisensory experience, and interacting with others who played. Interestingly, these observations are in line with the high levels of musical interest reported by the parents (8 for P4 and 10 for P3 on the 0–10 scale; see Section 3.1.4). For P1 and P2, the expressivity of the string interaction could indeed be improved. However, it should also be taken into account that the parents of both P1 and P2 reported a musical interest level of 4. In addition, P2’s parents commented that they did not think she would be interested in playing a musical instrument (but perhaps in creating “her own sounds”; see Section 3.1.4). One example of how the musical output could be made more rewarding in general would be to reduce the time required between onsets to achieve a rise in pitch. In the current version of the sound design, this musical effect was mostly explored by P4.
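The text does not specify how onsets map to pitch in Sound Forest, so the sketch below models a purely hypothetical rule to show why shortening the required time makes pitch changes easier to reach. Onsets no more than `max_gap_s` apart count as one continuous run of playing, and every additional `required_s` seconds of such a run raises the pitch by one step; both parameters and the onset times are illustrative assumptions, not the installation’s actual mapping.

```python
def pitch_steps(onsets_s, required_s: float, max_gap_s: float) -> list[int]:
    """Pitch step in effect at each onset under a hypothetical rule.

    Onsets no more than `max_gap_s` apart form one continuous run of playing.
    For every `required_s` seconds a run has lasted, the pitch rises one step;
    a longer pause starts a new run at the base pitch (step 0).
    """
    steps = []
    run_start = prev = None
    for t in sorted(onsets_s):
        if prev is None or t - prev > max_gap_s:
            run_start = t  # new run of playing begins at base pitch
        steps.append(int((t - run_start) // required_s))
        prev = t
    return steps


if __name__ == "__main__":
    onsets = [0, 1, 2, 3, 4, 5, 6]  # one onset per second (illustrative)
    print(pitch_steps(onsets, required_s=3, max_gap_s=2))  # [0, 0, 0, 1, 1, 1, 2]
    print(pitch_steps(onsets, required_s=2, max_gap_s=2))  # [0, 0, 1, 1, 2, 2, 3]
```

With the same playing, lowering `required_s` from 3 s to 2 s lets the player reach a higher pitch step sooner, which is the kind of effect a shorter required time between onsets would aim for.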

In this study, we have explored multisensory music experiences for a group of four students with Profound and Multiple Learning Disabilities (PMLD). We have presented methods that could be used to explore and describe experiences involving both sound and haptics. In our work, we attempted to gather as much information as possible through proxies, meaning that the parents and the teacher/teaching assistants played a vital role in including the students as informants and co-designers of the multisensory experience design. Since what the students experience is difficult to access and interpret, we used a variety of qualitative and quantitative methods in order to arrive at more informed conclusions. Findings from multiple time points in the research process highlighted the importance of combining different methods, as well as different proxies, both in the design phase and in the evaluation of our work, in order to understand the complex interactions taking place. A main conclusion from the final interview with the teacher and teaching assistants was that they often used prior knowledge about the students and their previously displayed behaviors at the school to interpret reactions during the experiment. However, even with this prior knowledge developed through their practice, there were several situations in which the teacher proxies found it difficult to interpret student reactions in relation to the PODD replies. This highlights the importance of using proxies in this context; no observation by a researcher, however elaborate the coding scheme, could replace such input. Nevertheless, it should be noted that working with proxies is never the same as working directly with the users and that this method introduces an additional layer of ambiguity into the research process.
Moreover, this methodology, especially when used in the wild at the school (without the researchers being present), places considerable responsibility on the proxies to actively engage the users in the co-design process.

4.1. Limitations

Our research relied largely on descriptive narratives provided by proxies. One of the limitations of the current work relates to the assessment of musical preference. Although aesthetic evaluation of music occurs in most cultures, the underlying processes are not yet fully understood [112]. Music preferences are also known to change over time, as has been stressed in the music recommendation literature. Proxy evaluation introduces yet another level of ambiguity into the assessment process. Nevertheless, there are measures that can be taken to aid preference assessment in interactions between teachers and students with disability. For example, the importance of timing, i.e., when a question is asked, has been stressed [113]. In our current work, we have tried to address the above-mentioned limitations by combining several different methods of data collection and different proxies (parents and teachers).

Another limitation of the presented work is the small number of participants; the results cannot be generalized due to the small sample size. Our study design was partly based on research carried out in the wild. As such, the focus has been on obtaining enough experimentation time with the participants rather than on the number of participants, an important aspect of in-the-wild research stressed in [67]. As noted in [67] (p. 59), “It becomes much more difficult, if not impossible, to design an in-the-wild study that can isolate specific effects”. Nevertheless, the outcomes of studies carried out in the wild can be highly revealing and can demonstrate quite different results from those arising from lab studies [114], showing how people come to understand and appropriate technologies on their own terms and for their own situated purposes [67].

Finally, only a small group of researchers was involved in the qualitative analyses of the material presented in this work. Ideally, a larger number of independent researchers would have performed the analyses.

4.2. Future Work

Based on the large interpersonal differences observed in the current study, we believe that future explorations in Sound Forest should include larger groups of participants in order to fully understand the range of interaction strategies that could be explored in the room. Future experiments in Sound Forest could allow for interactive control and adjustment of the haptic experience in real time. One possibility could be to tune the sensitivity of each string to each child at the beginning of the session. Another option, as suggested by the teacher proxies, would be to program the haptic feedback so that it evolves over time and differs between places in the room. In that sense, the installation would invite spontaneous exploration of the room; if certain users stayed in one place (where there are more vibrations), this could serve as an implicit indicator of their preference for haptic feedback. In general, future experiments should allow the students to spend more time exploring the installation freely on their own terms, i.e., in a less controlled experimental setting. However, our results also highlighted that 20 min of music was quite long. Measures should therefore be taken to reduce the risk of fatigue when planning the time spent in the installation. Since the teachers are also co-discoverers in this context, it would also be valuable to invite them to explore the installation before the students try out the multisensory experience.
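Per-child tuning of string sensitivity could take the form of a short calibration at the start of a session. The sketch below is hypothetical throughout: it assumes that each string’s contact-microphone signal has already been reduced to one peak amplitude per event, that a few peaks are recorded while other strings are being played (capturing crosstalk and room noise), and that the child then touches the string gently a few times. The threshold is placed between the loudest crosstalk peak and the child’s weakest touch; when no such gap exists, the function returns `None`, which mirrors the trade-off between sensitivity and false triggers noted earlier in this section. Function names, the margin, and the amplitude values are illustrative assumptions.

```python
def calibrate_threshold(crosstalk_peaks, touch_peaks, margin: float = 0.5):
    """Per-string trigger threshold, or None if no safe threshold exists.

    crosstalk_peaks: peak amplitudes picked up while *other* strings are played
                     or the room is noisy (the false-trigger risk)
    touch_peaks:     peak amplitudes from the child's own gentle touches
    margin:          position of the threshold inside the gap, 0 < margin < 1
                     (hypothetical default: halfway)
    """
    if not touch_peaks:
        raise ValueError("need at least one calibration touch")
    noise_ceiling = max(crosstalk_peaks, default=0.0)
    weakest_touch = min(touch_peaks)
    if weakest_touch <= noise_ceiling:
        # Any threshold low enough to catch every touch would also fire on
        # crosstalk: the sensitivity versus false-trigger trade-off.
        return None
    return noise_ceiling + margin * (weakest_touch - noise_ceiling)


def detect_onsets(peaks, threshold: float) -> list[int]:
    """Indices of events whose peak amplitude exceeds the threshold."""
    return [i for i, p in enumerate(peaks) if p > threshold]


if __name__ == "__main__":
    # Illustrative amplitudes only (arbitrary units).
    thr = calibrate_threshold([0.02, 0.05, 0.04], [0.15, 0.20, 0.12])
    print(round(thr, 3))                           # 0.085
    print(detect_onsets([0.02, 0.15, 0.09], thr))  # [1, 2]
    print(calibrate_threshold([0.20], [0.10]))     # None
```

A `None` result would signal that amplitude thresholding alone cannot serve that child on that string, which is where the alternative sensing approaches mentioned above would come in.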