Forming Cognitive Maps of Ontologies Using Interactive Visualizations

Abstract

1. Introduction

2. Background

2.1. Cognitive Map Formation

2.2. Ontologies

2.3. Interactive Visualization Tools

3. Methods

3.1. Related Work

3.2. Task Analysis

3.3. Existing Tool Review

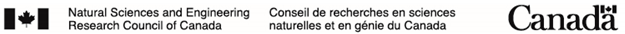

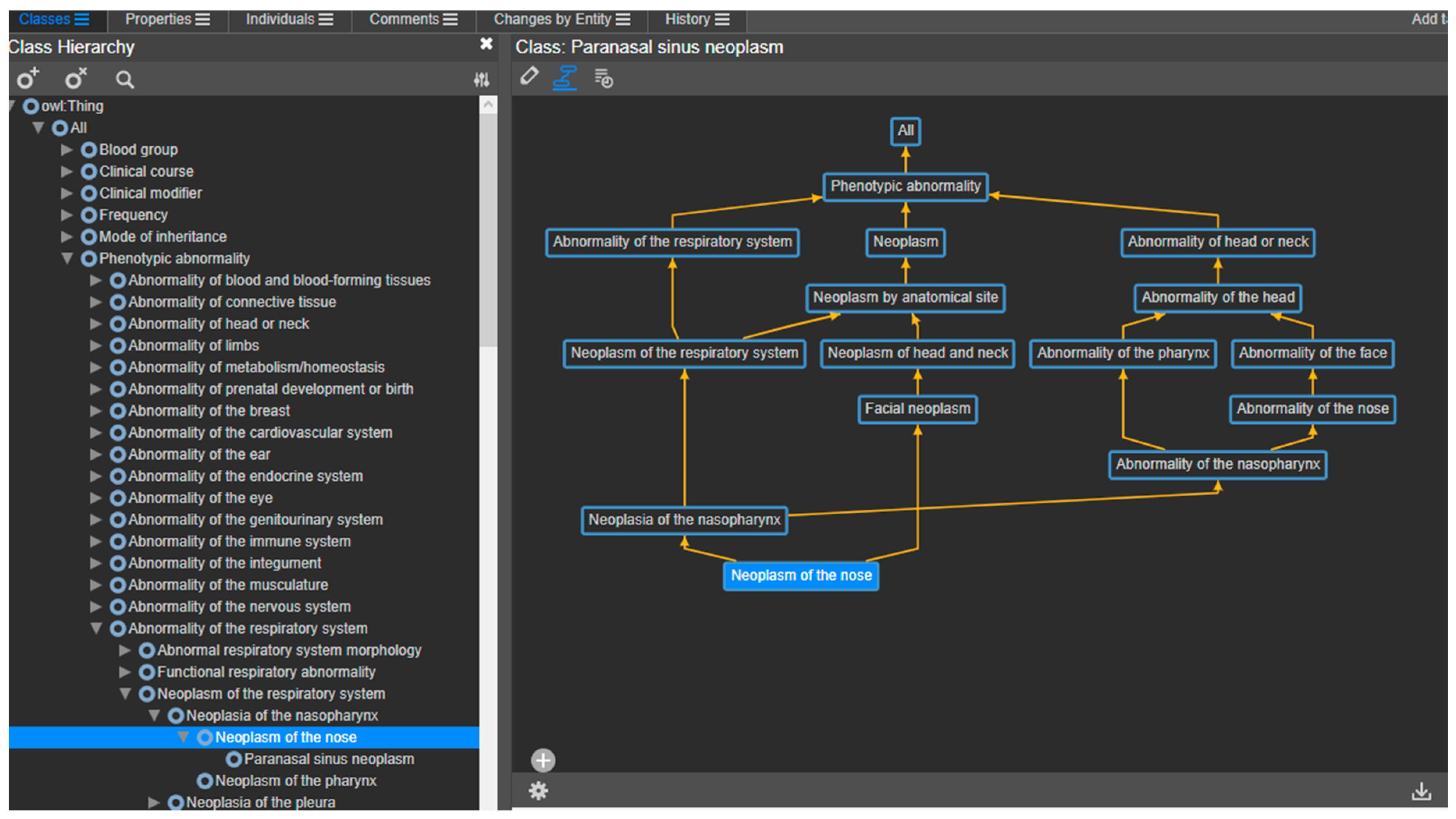

3.3.1. List+Details Designs

3.3.2. List+Context Designs

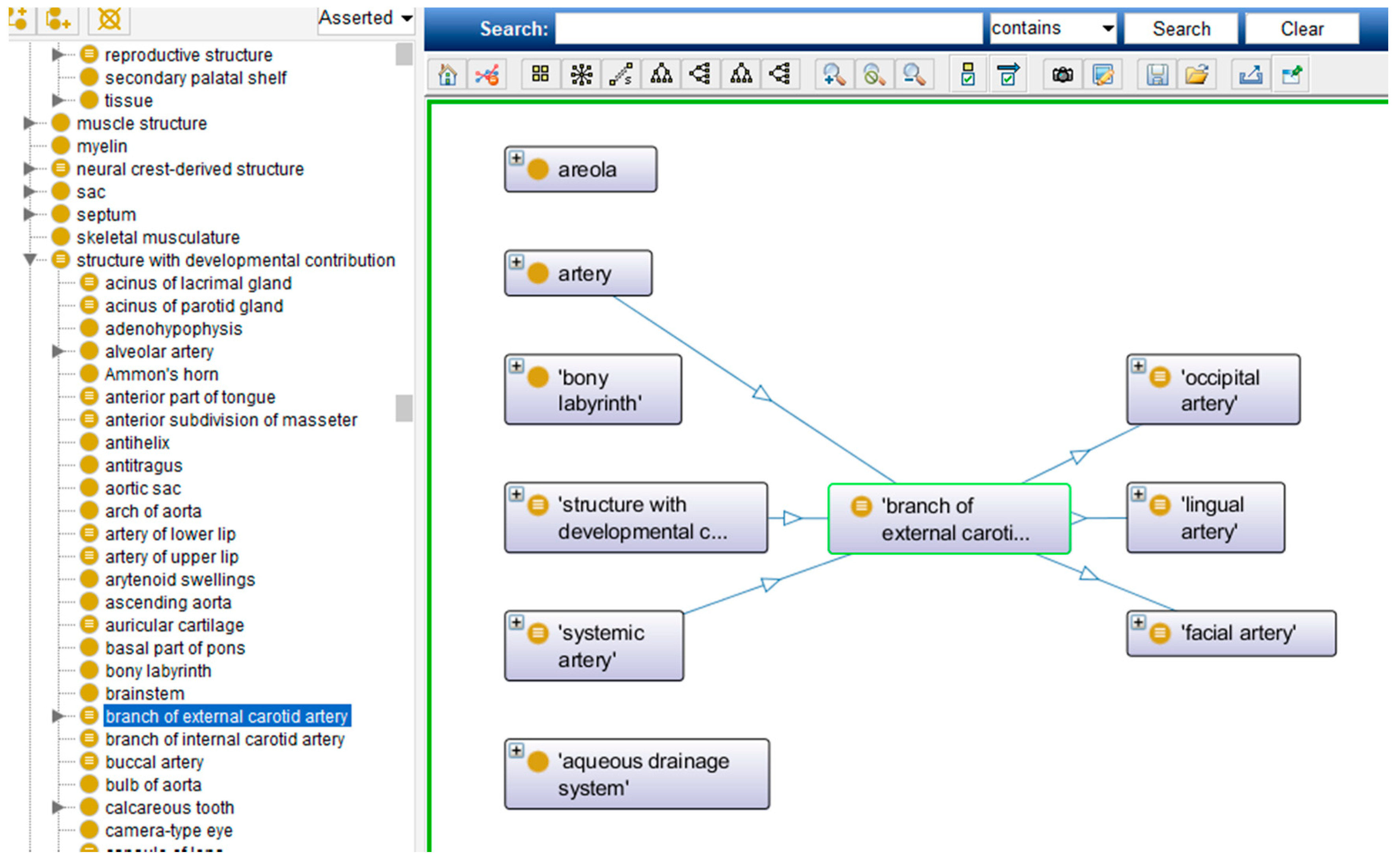

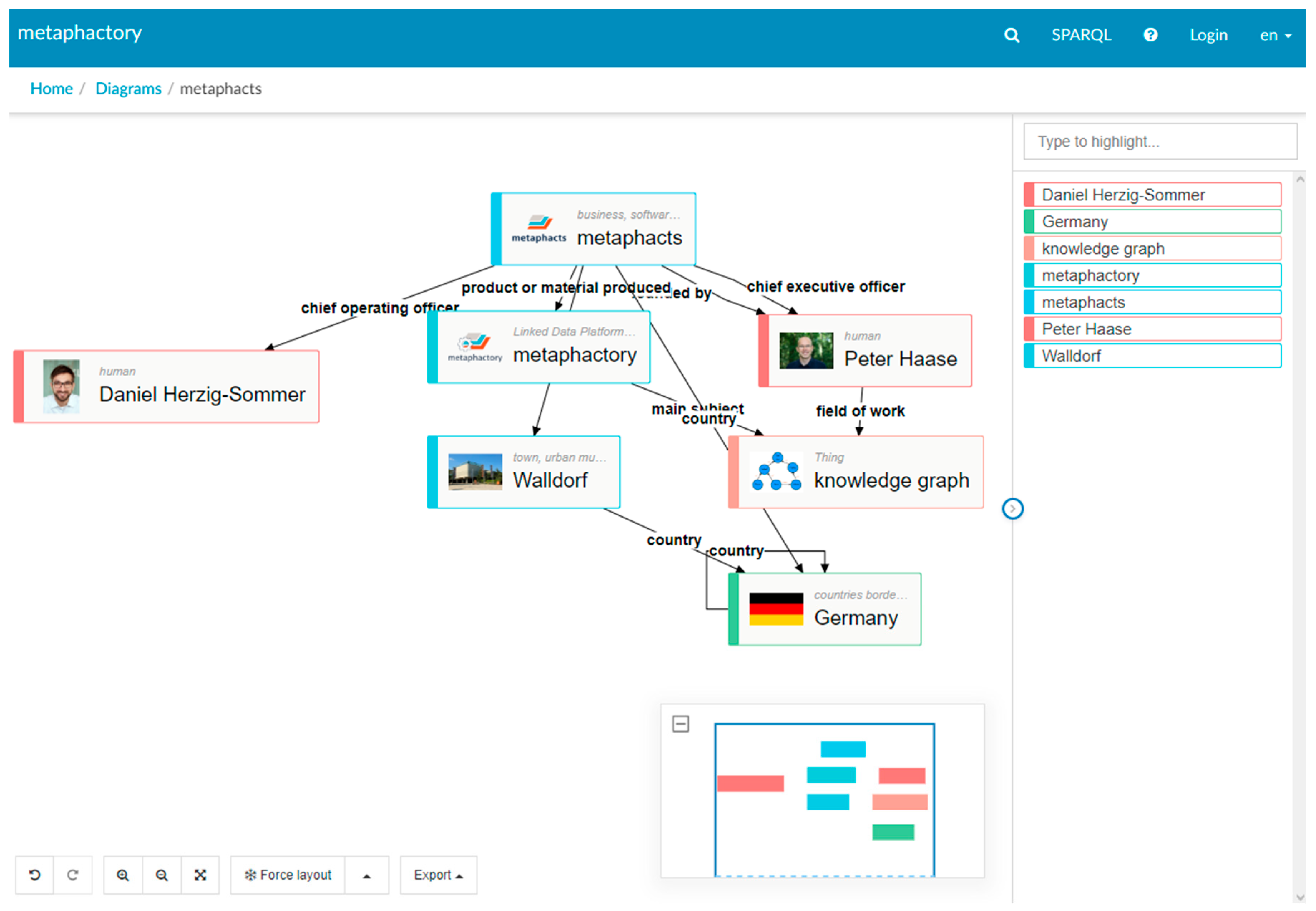

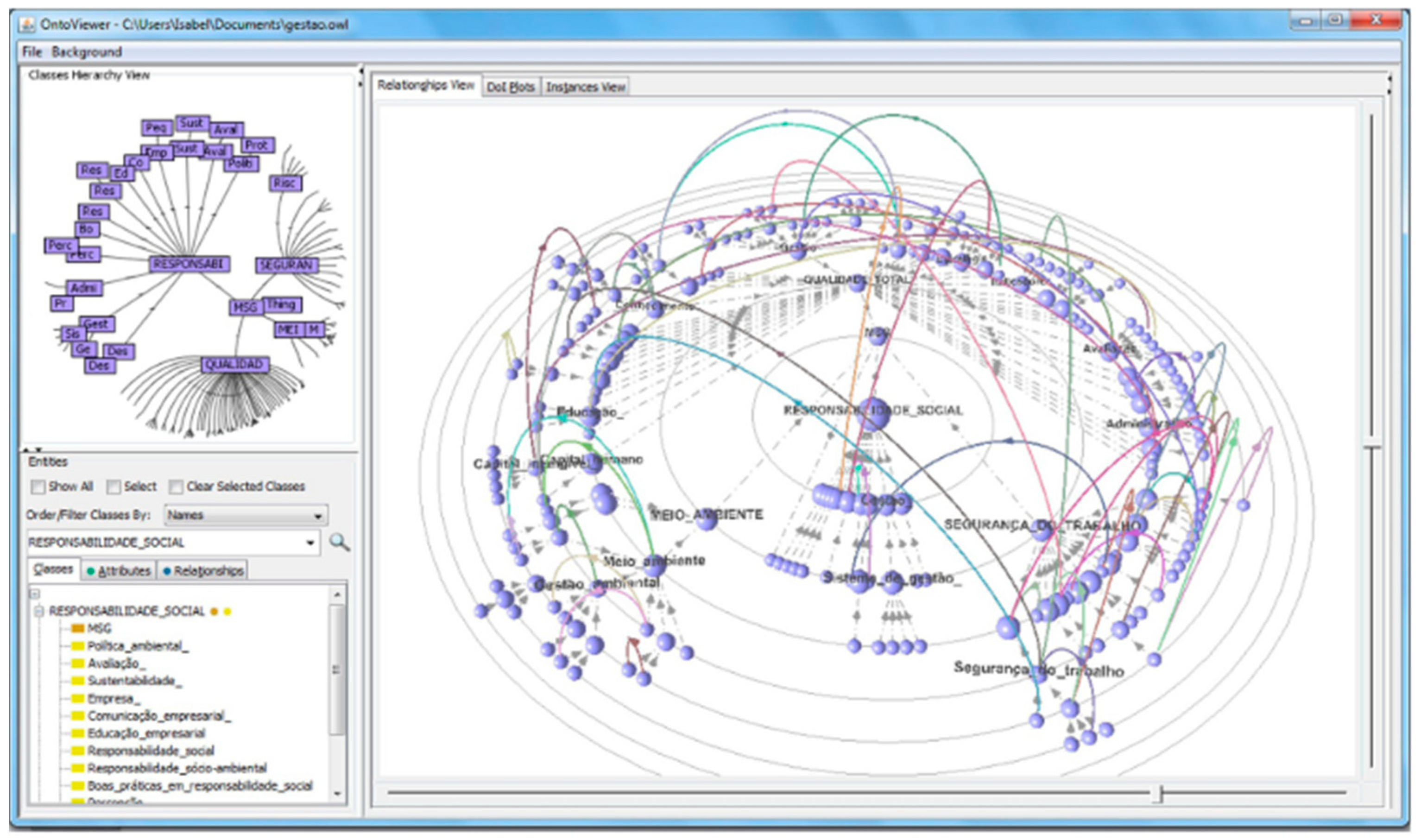

3.3.3. Overview+Details Designs

3.3.4. List+Overview+Details Design

3.3.5. List+Context+Details Designs

3.3.6. List+Overview+Context+Details Design

4. Materials

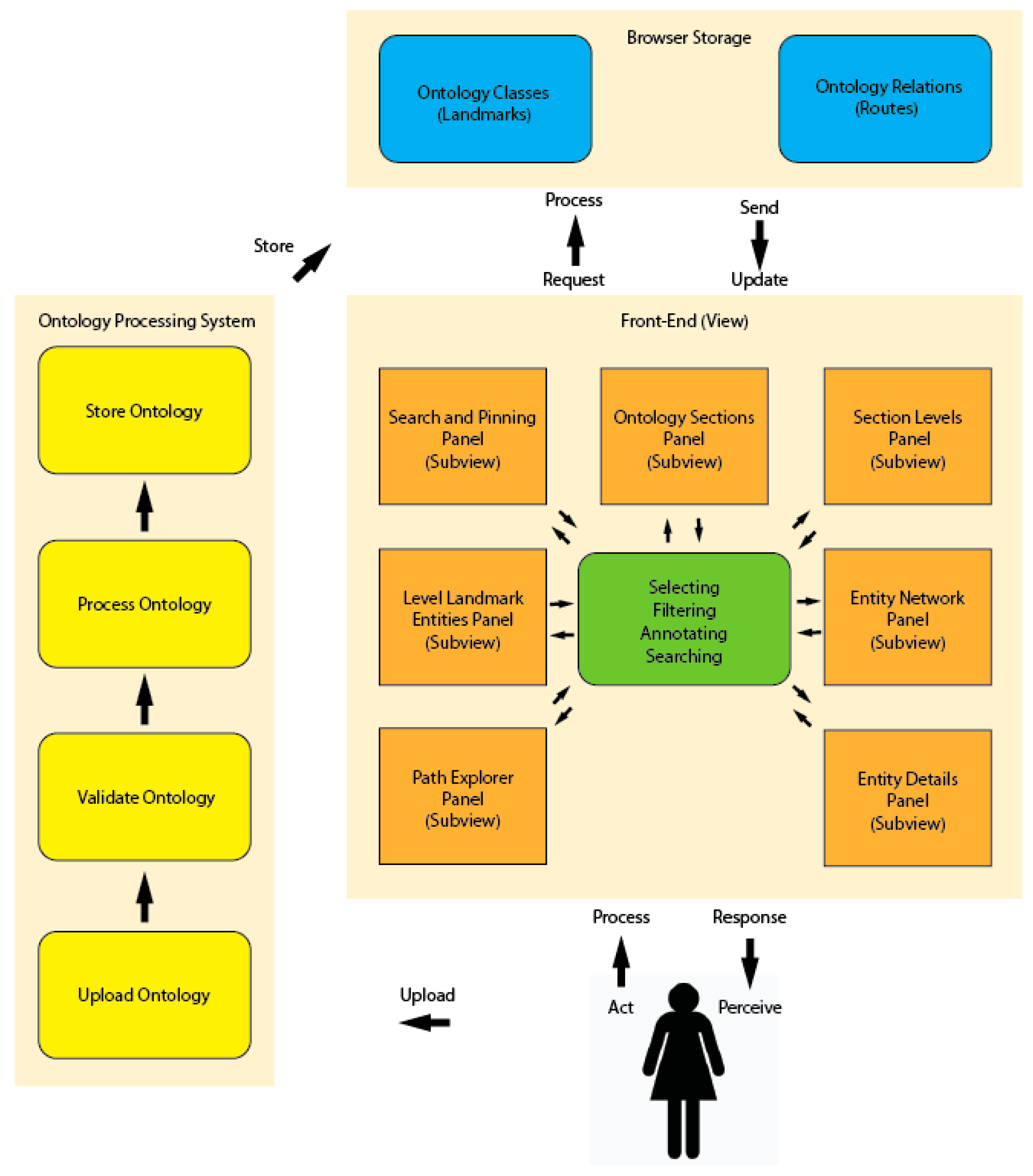

4.1. PRONTOVISE Technologies

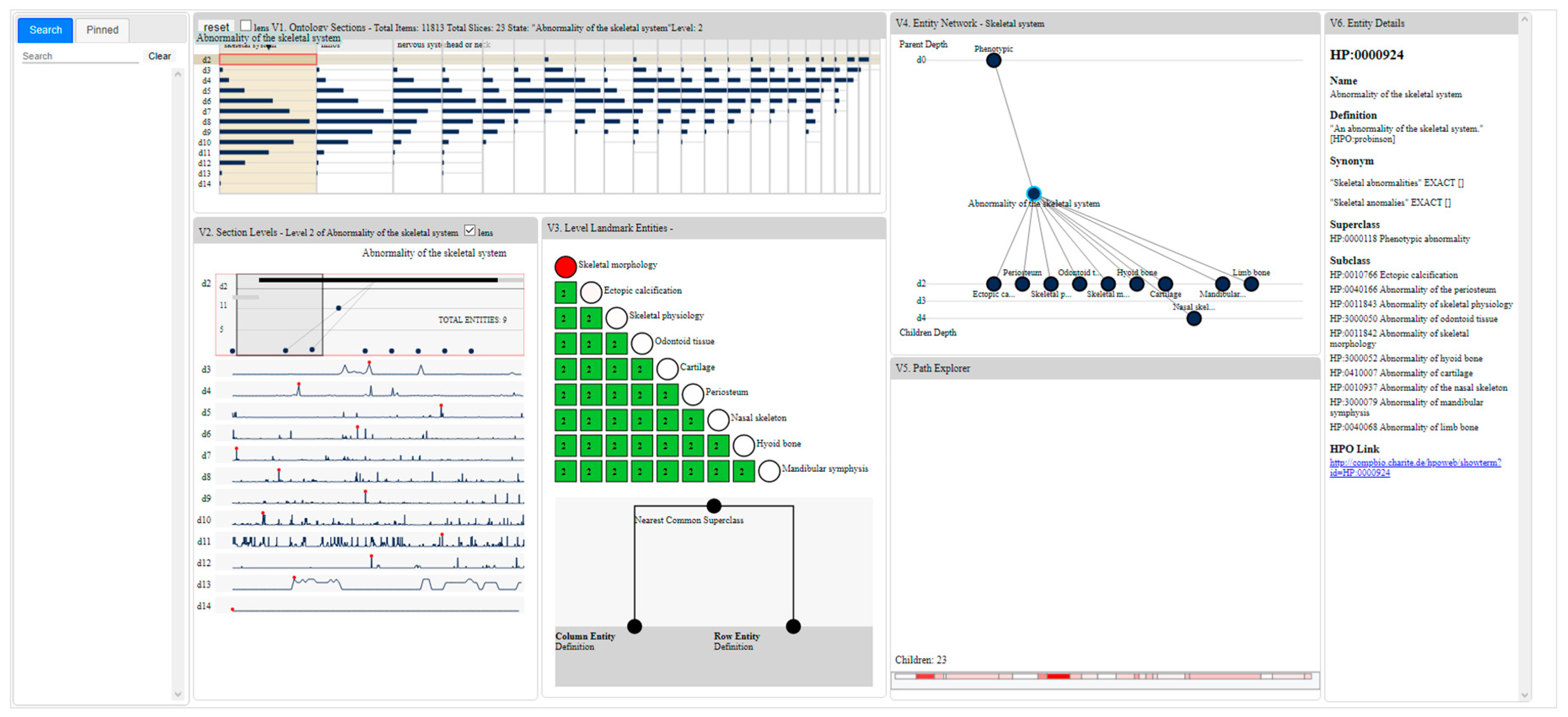

4.2. PRONTOVISE Workflow and Design

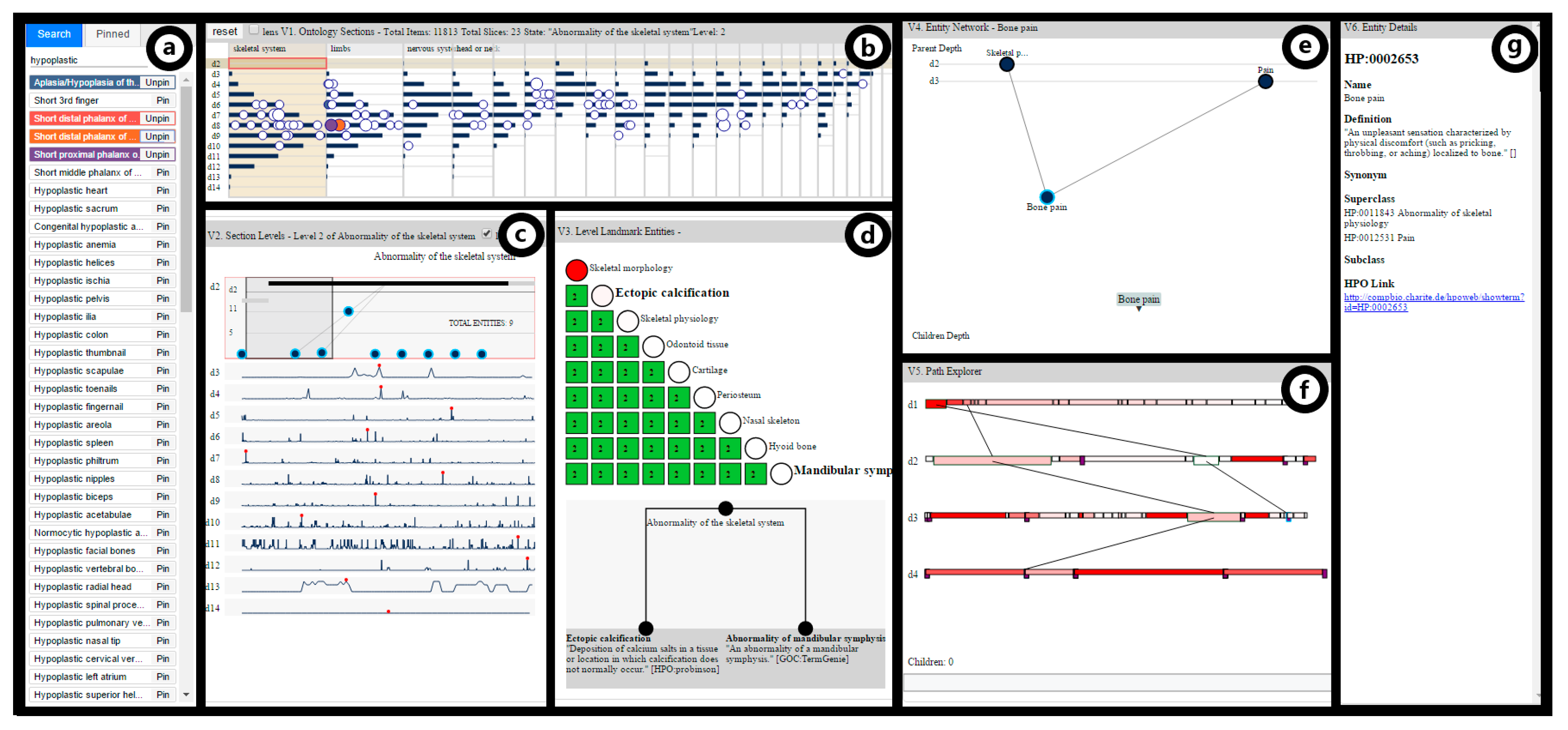

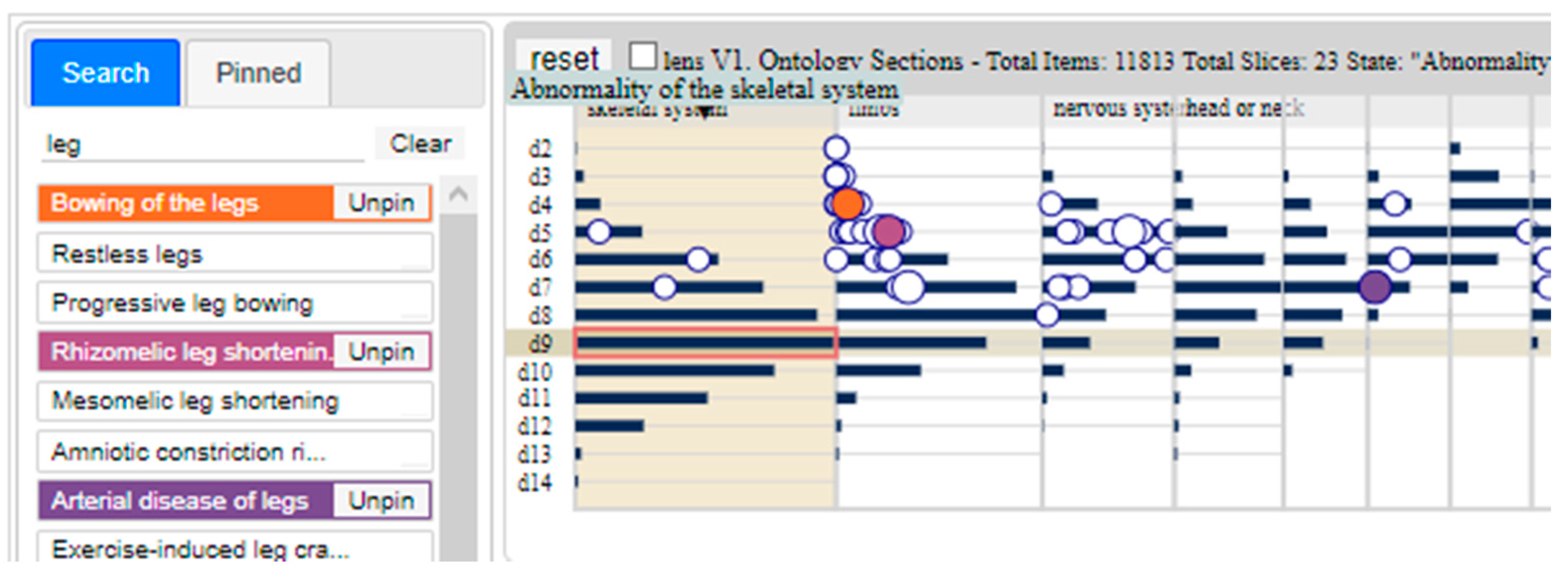

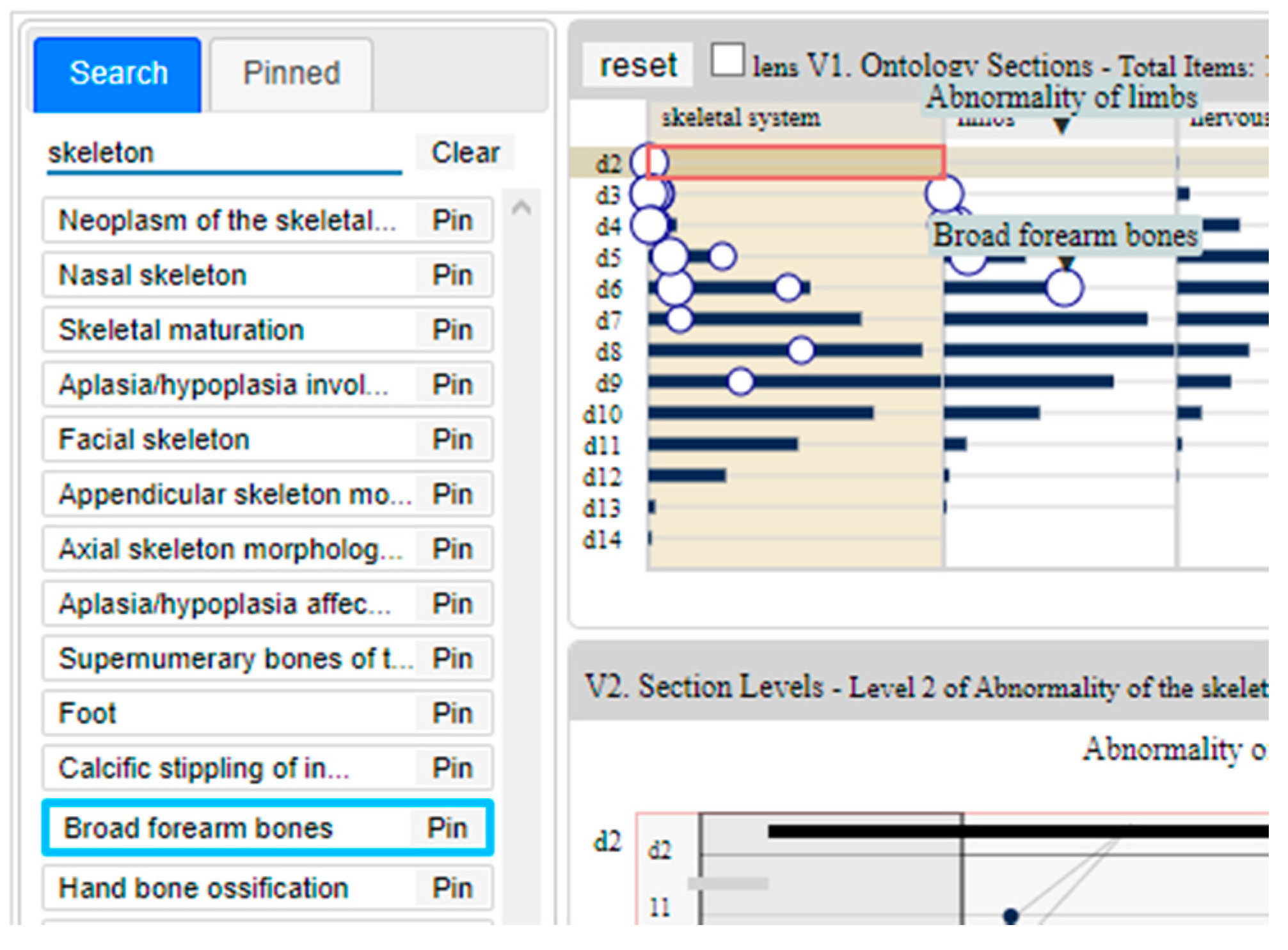

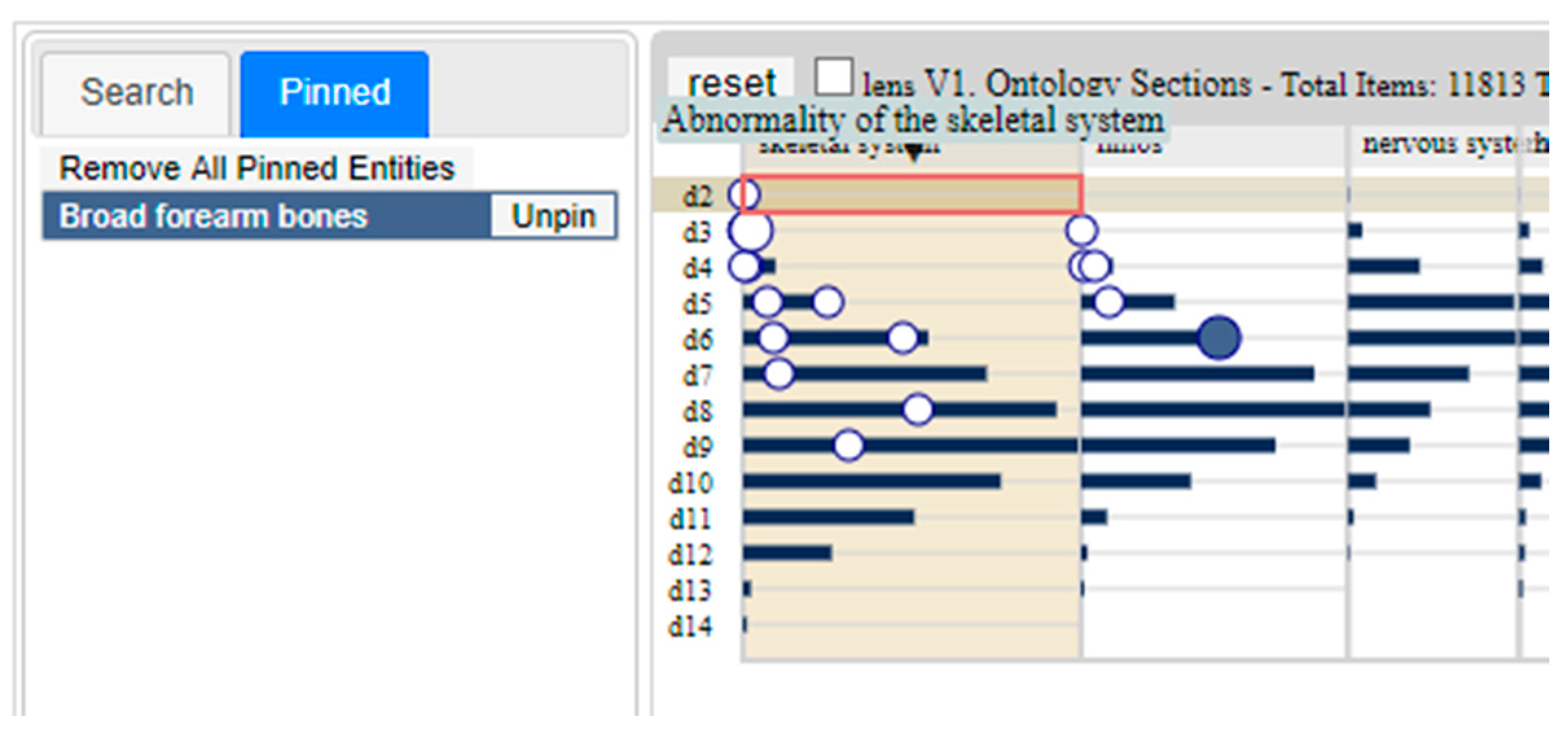

4.2.1. Search and Pinning Panel

Ontology Entity Search

Ontology Entity Pinning

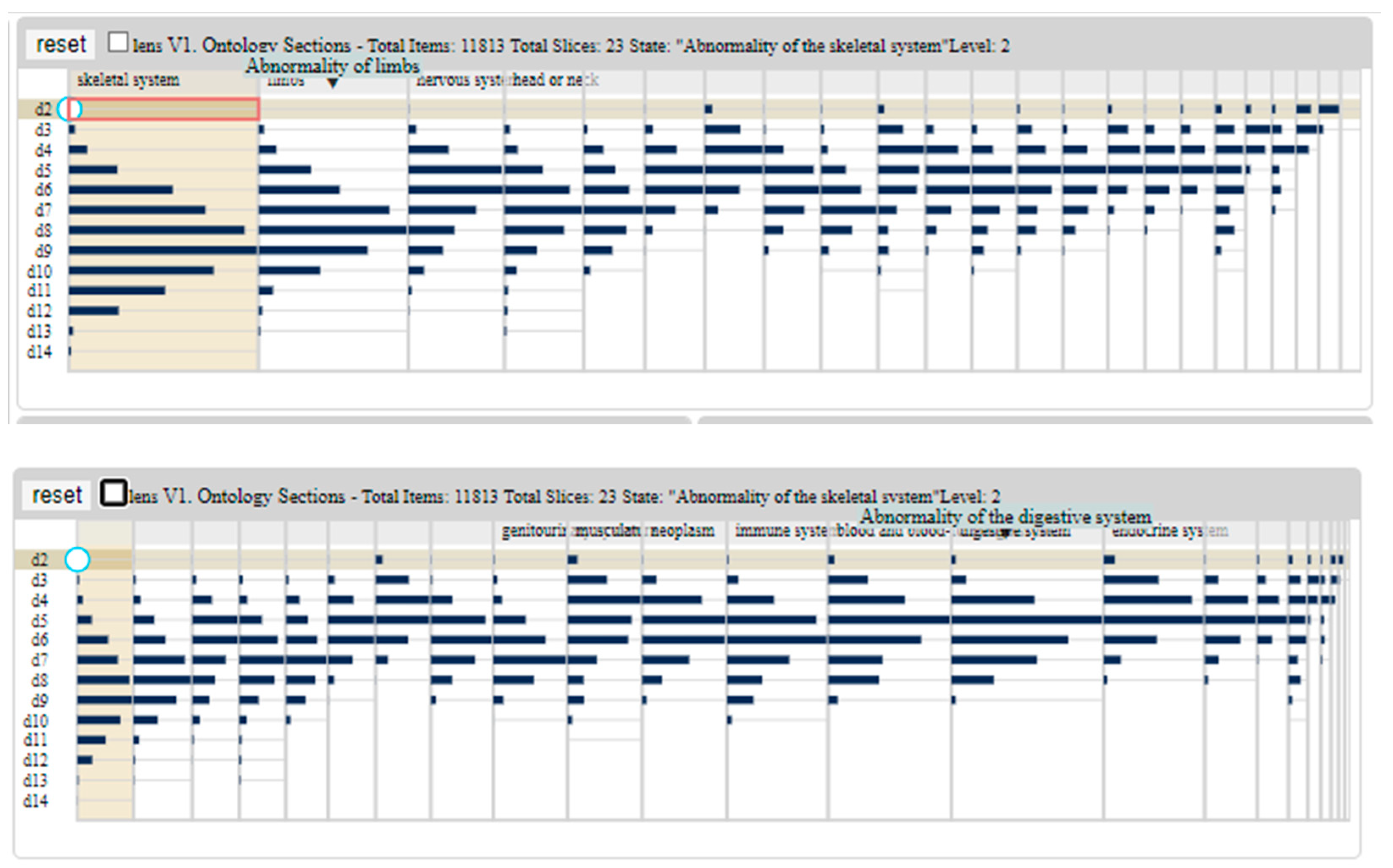

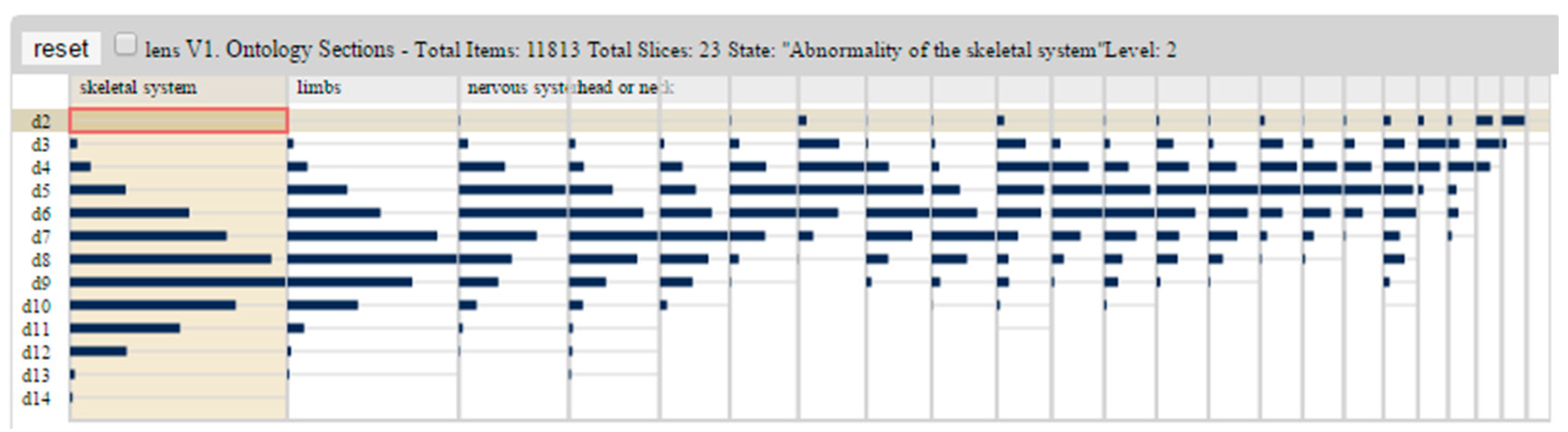

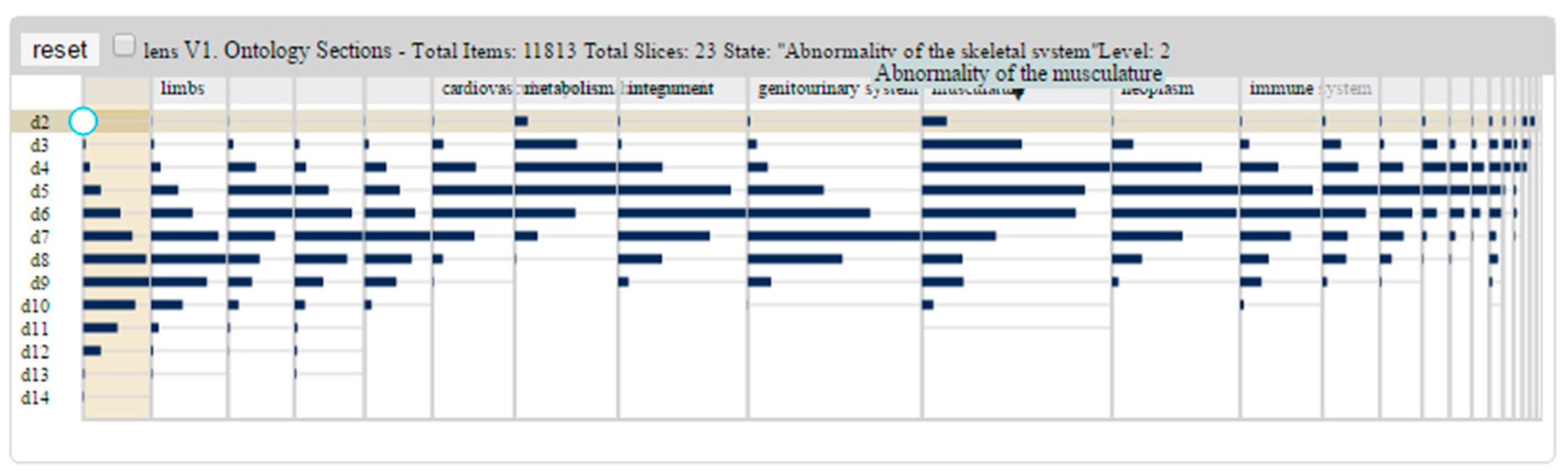

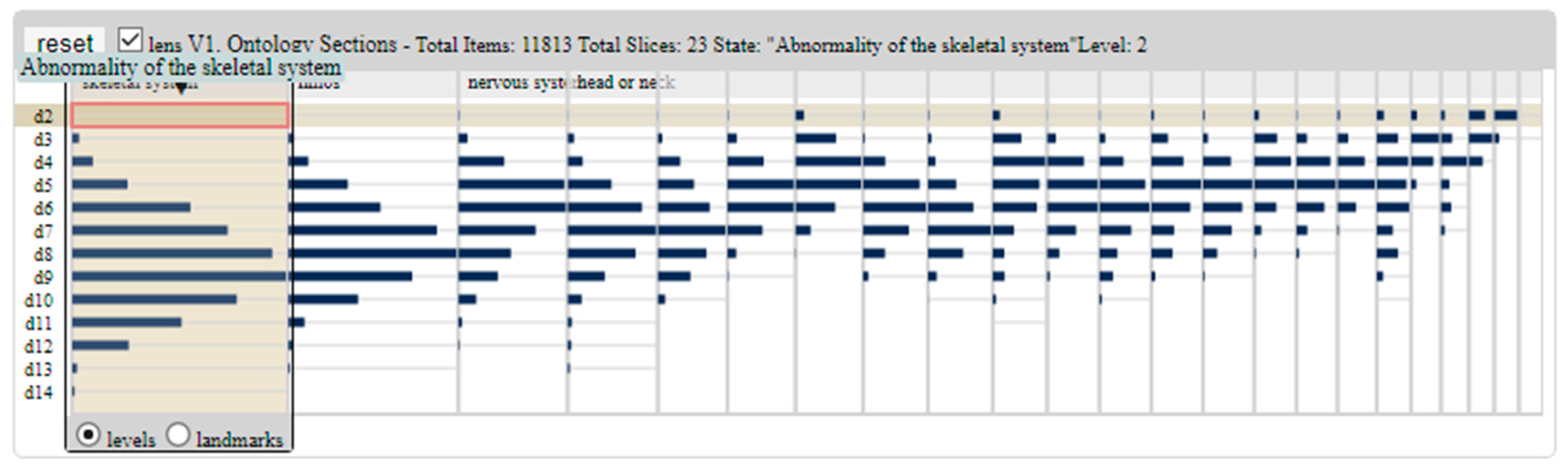

4.2.2. Ontology Sections Panel

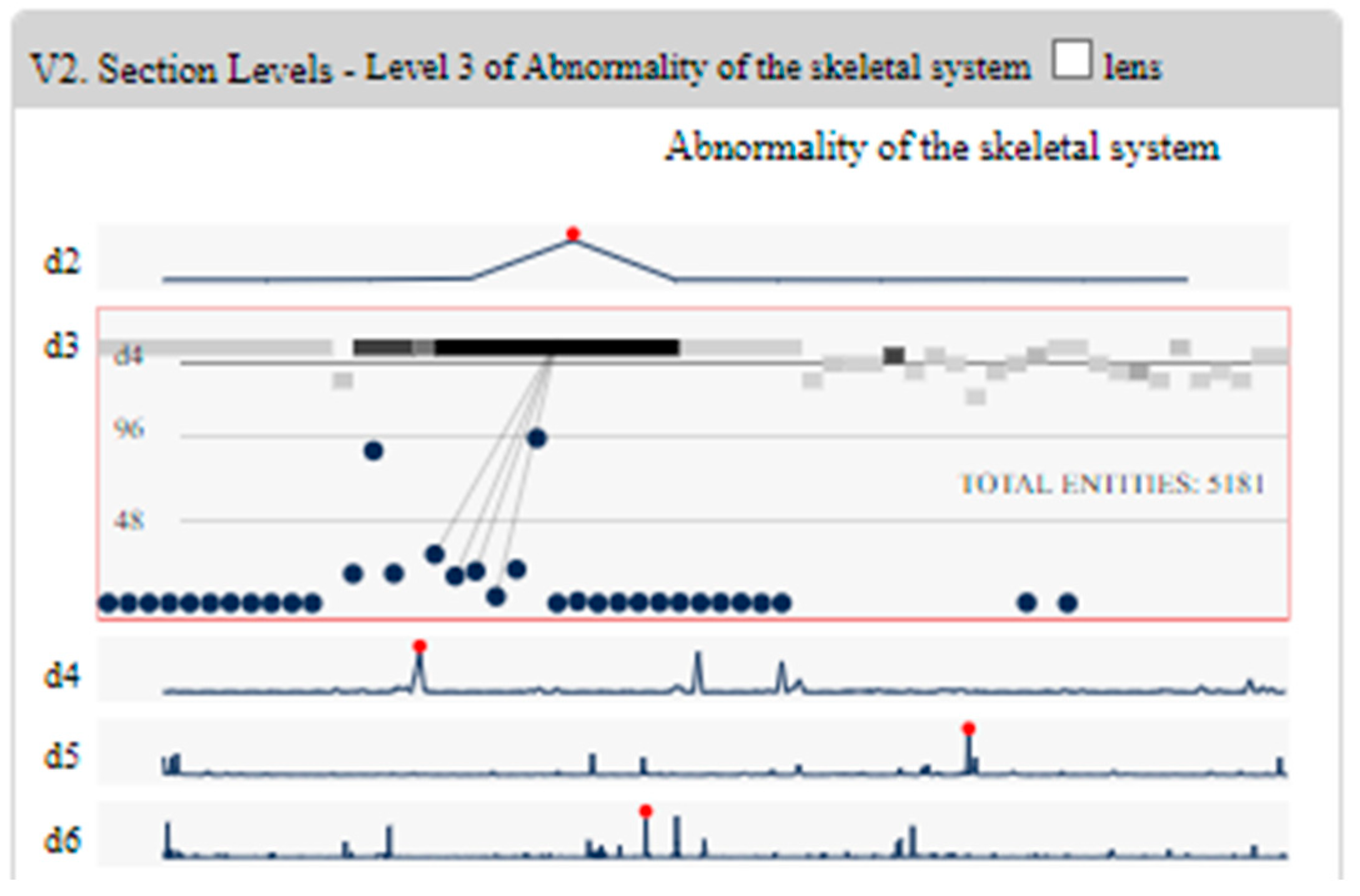

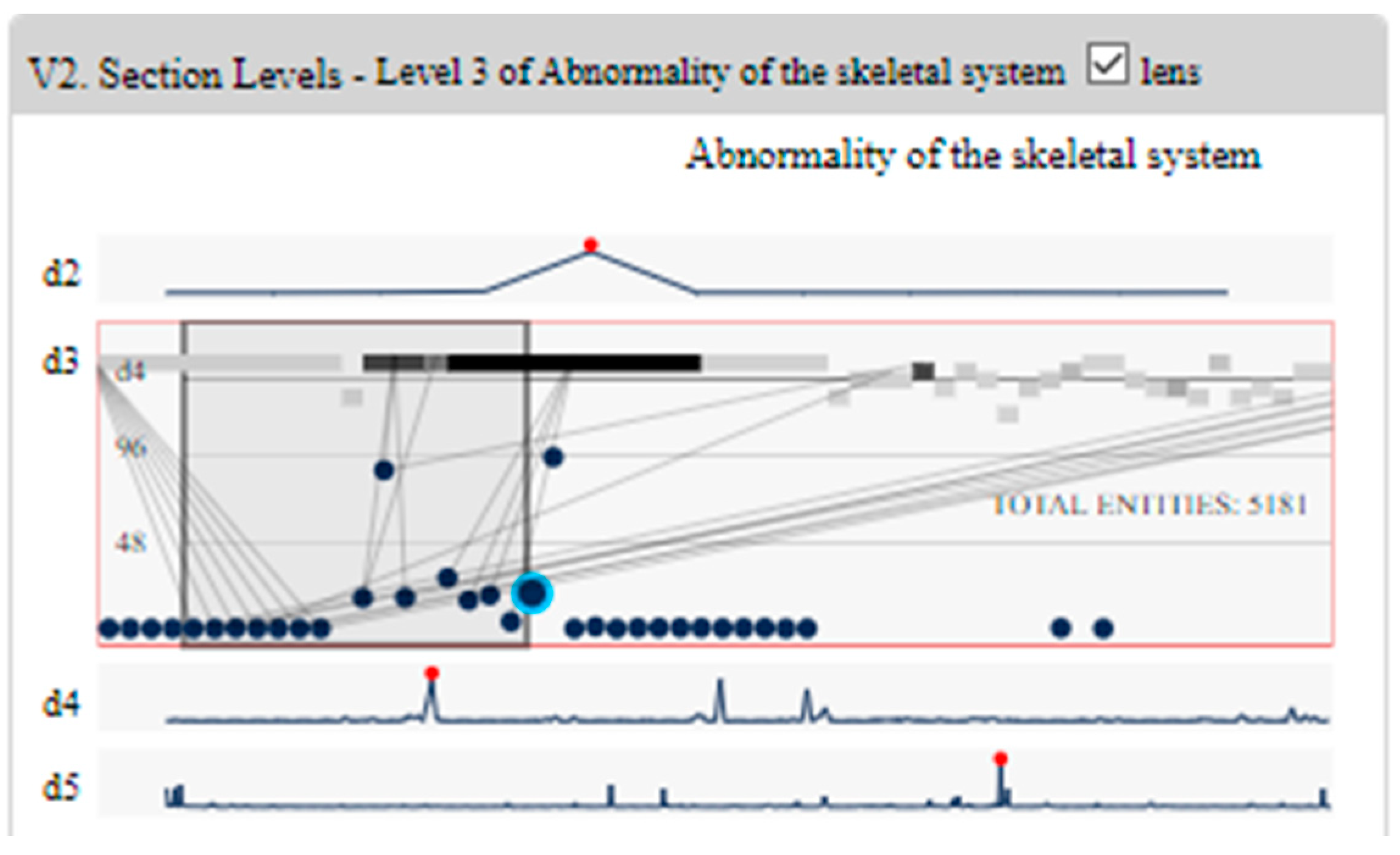

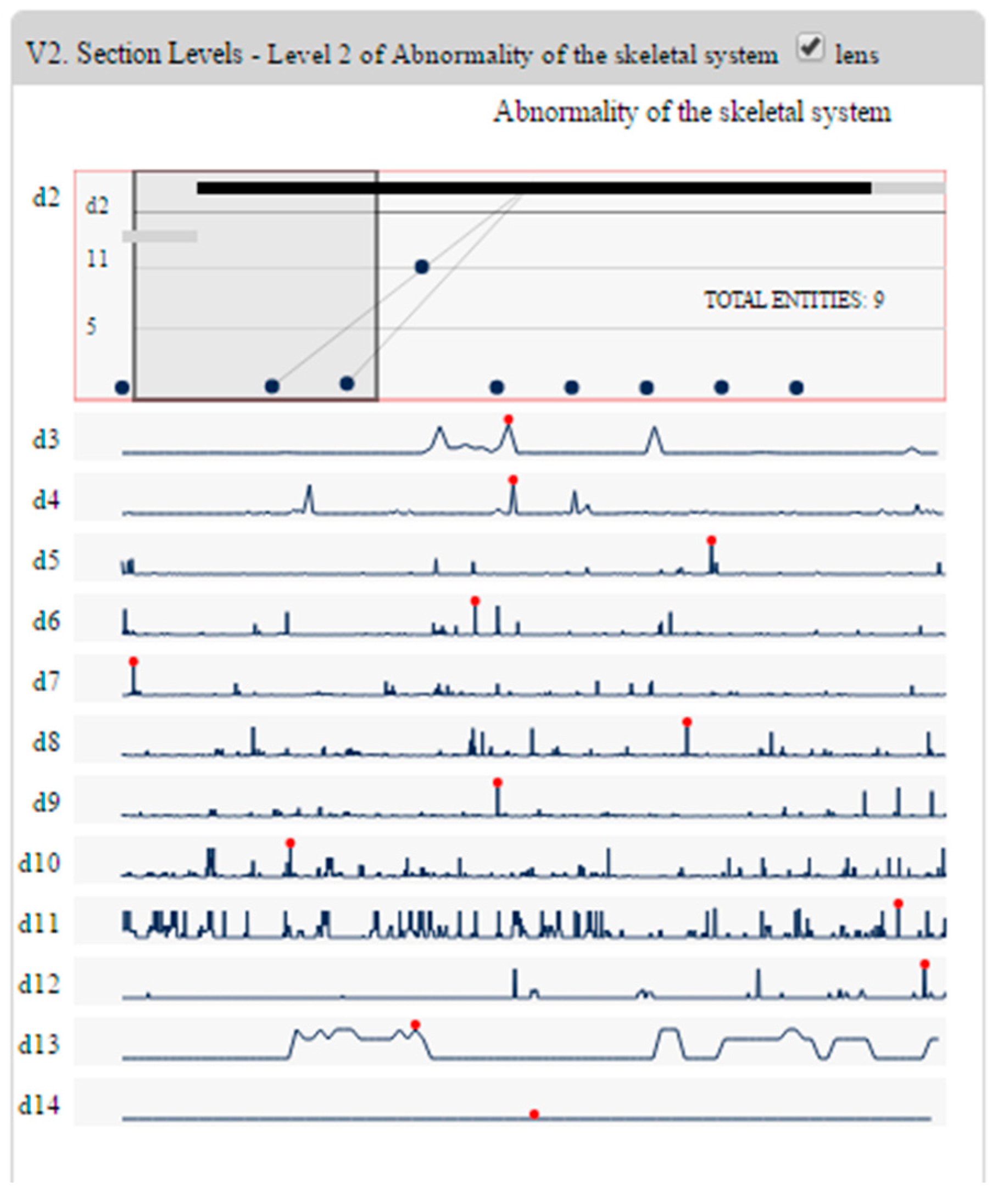

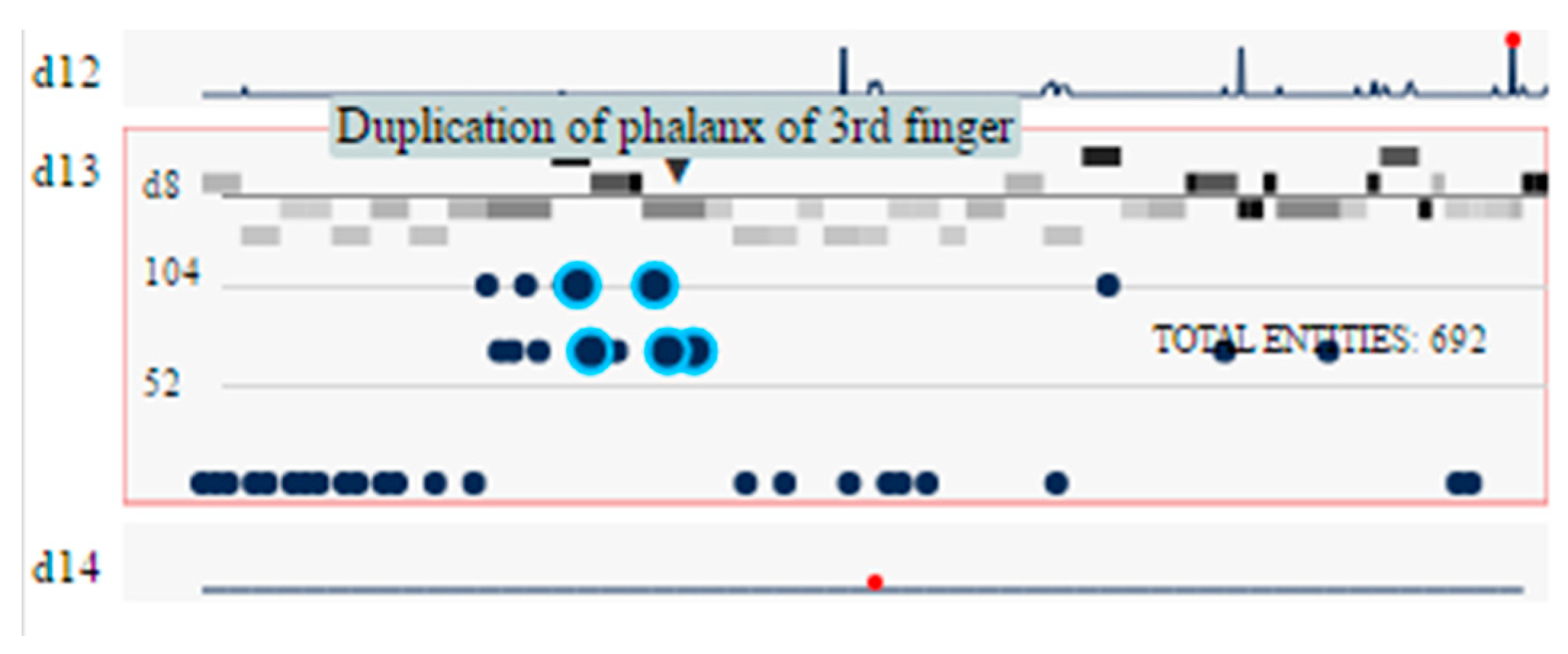

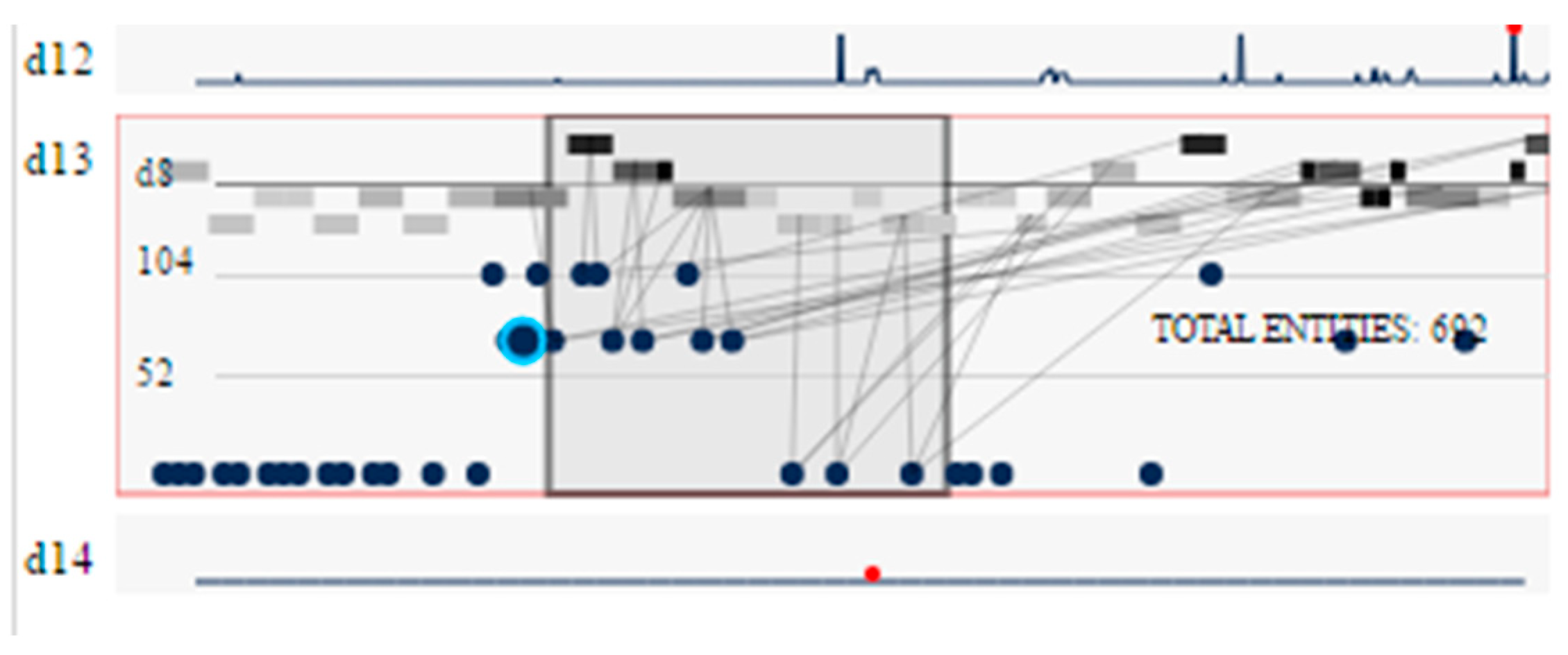

4.2.3. Section Levels Panel

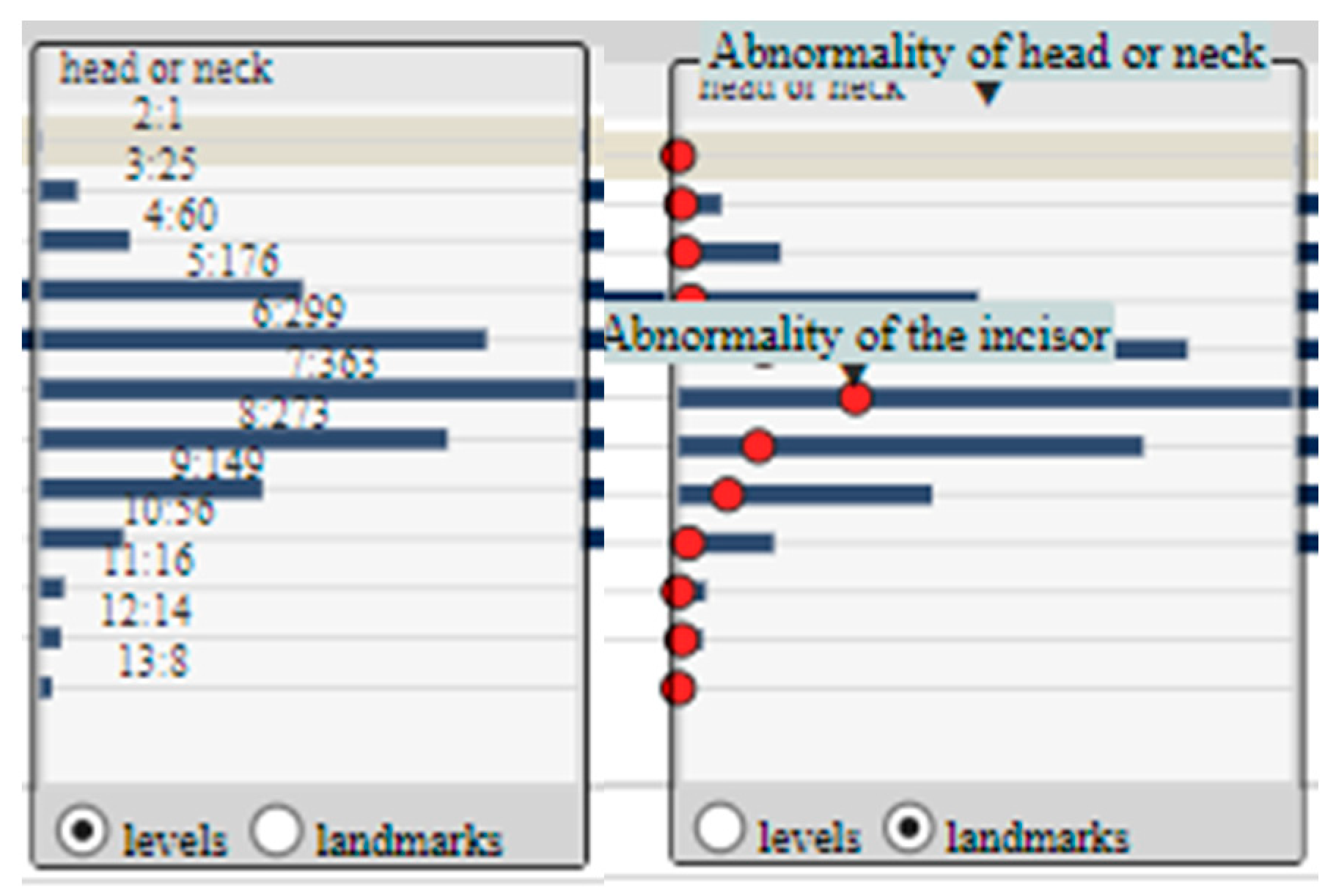

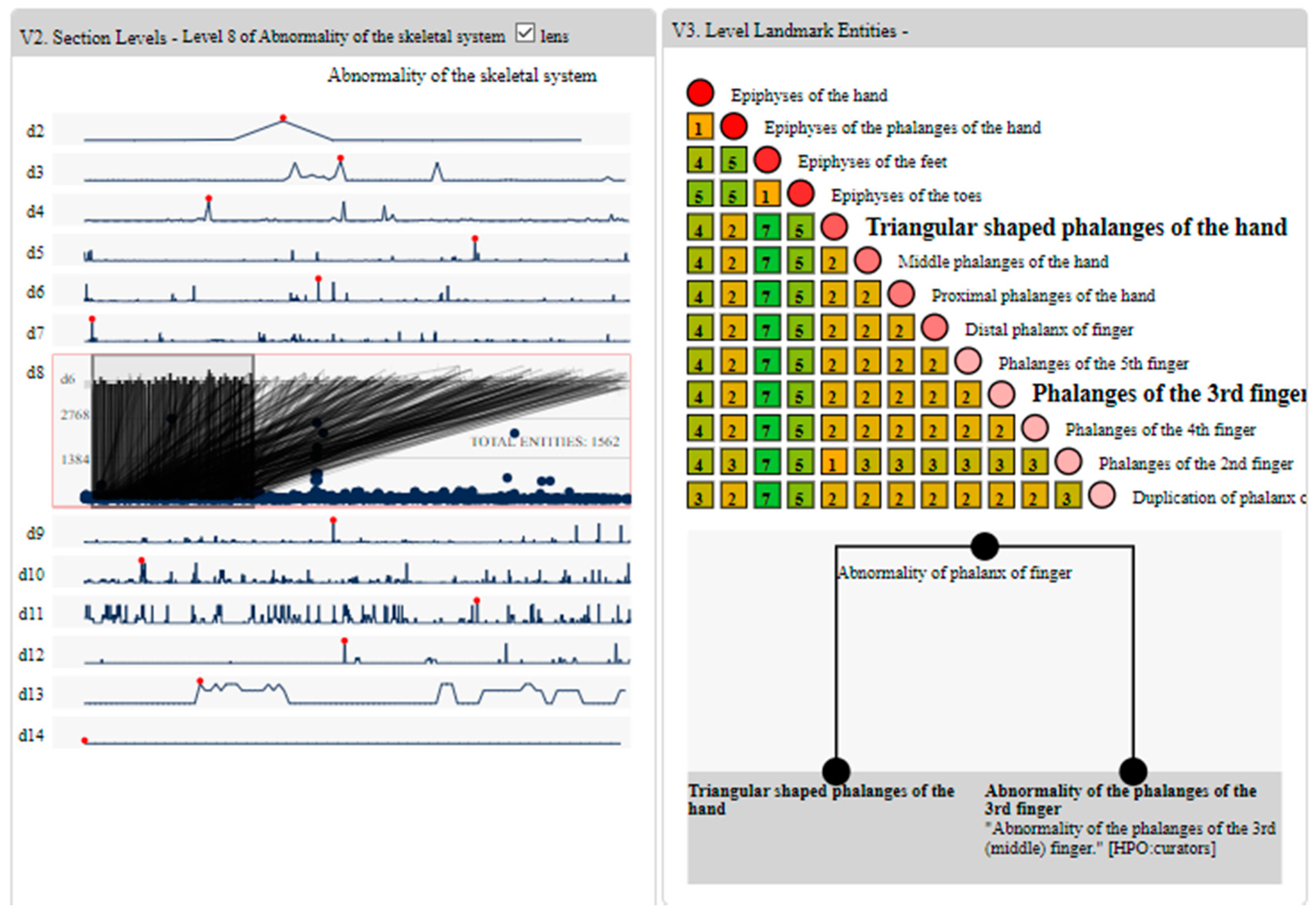

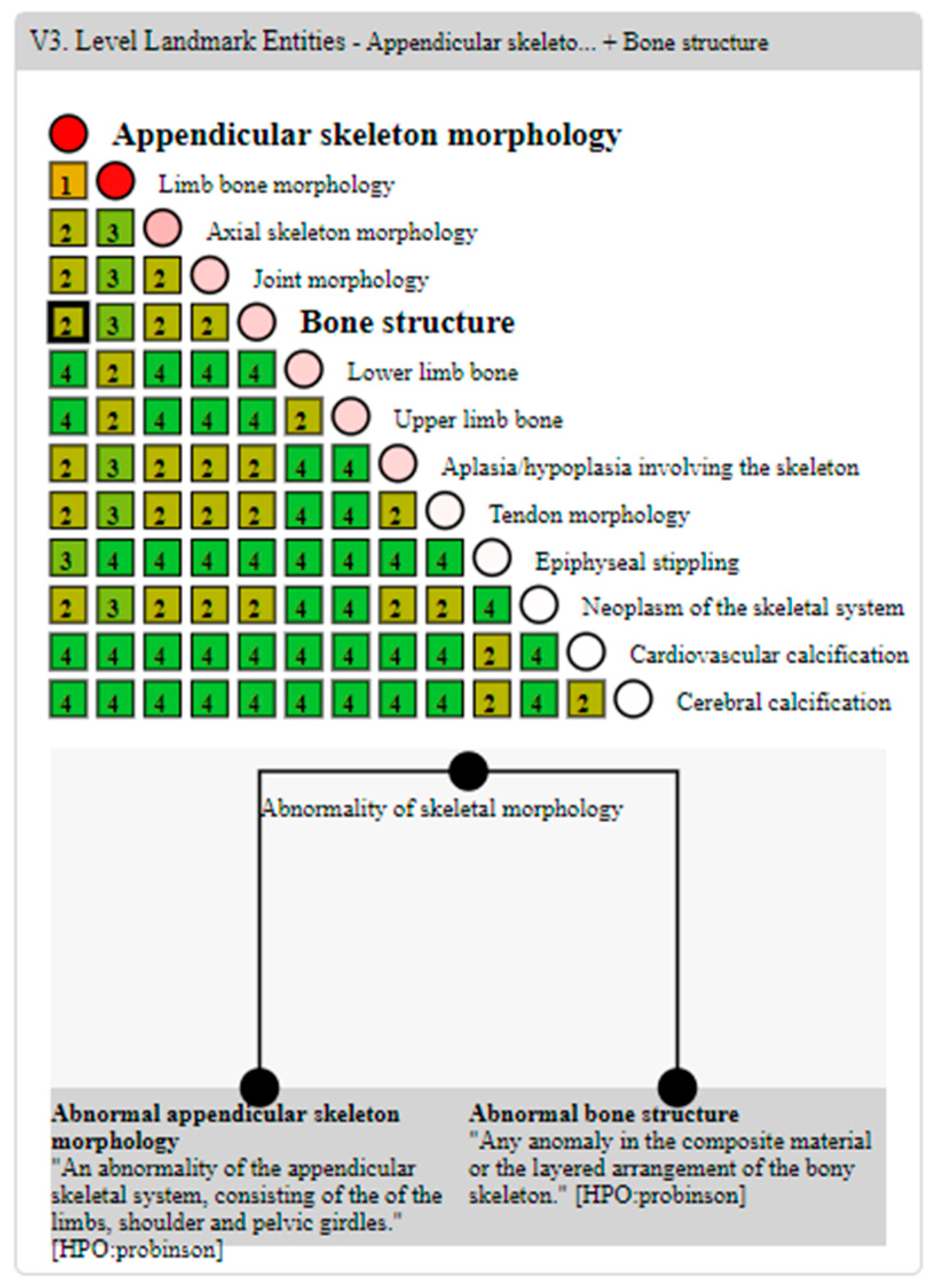

4.2.4. Level Landmark Entities Panel

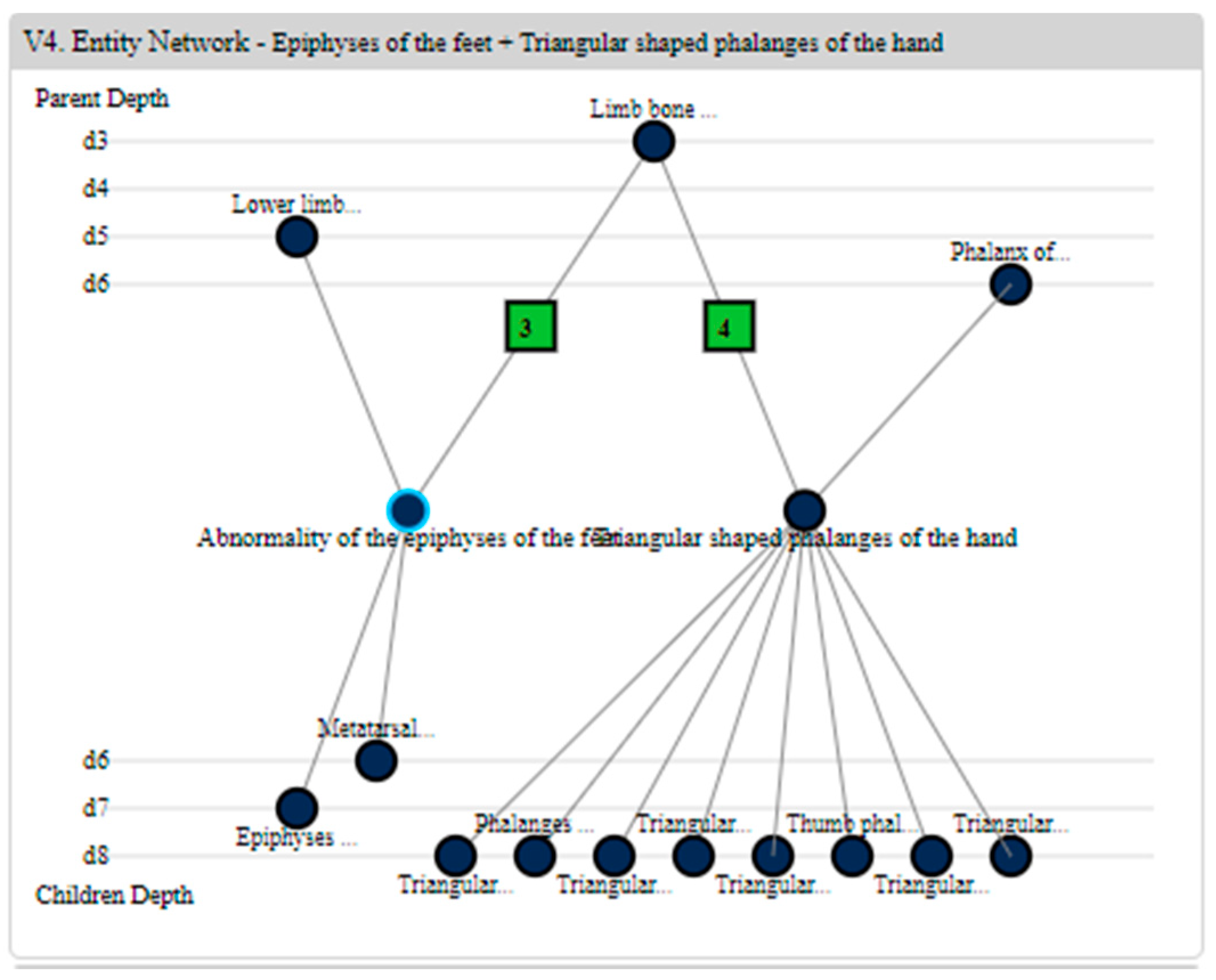

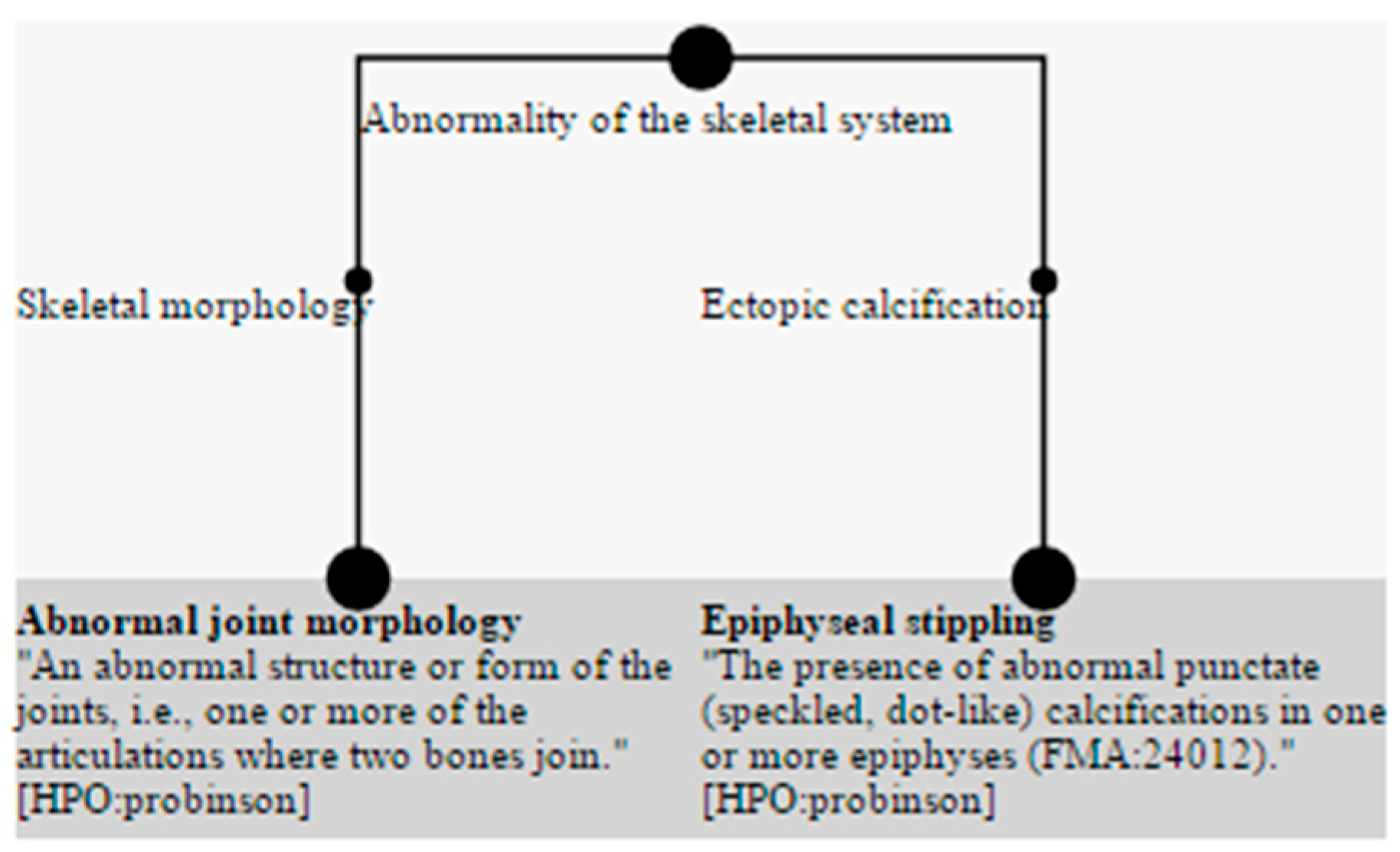

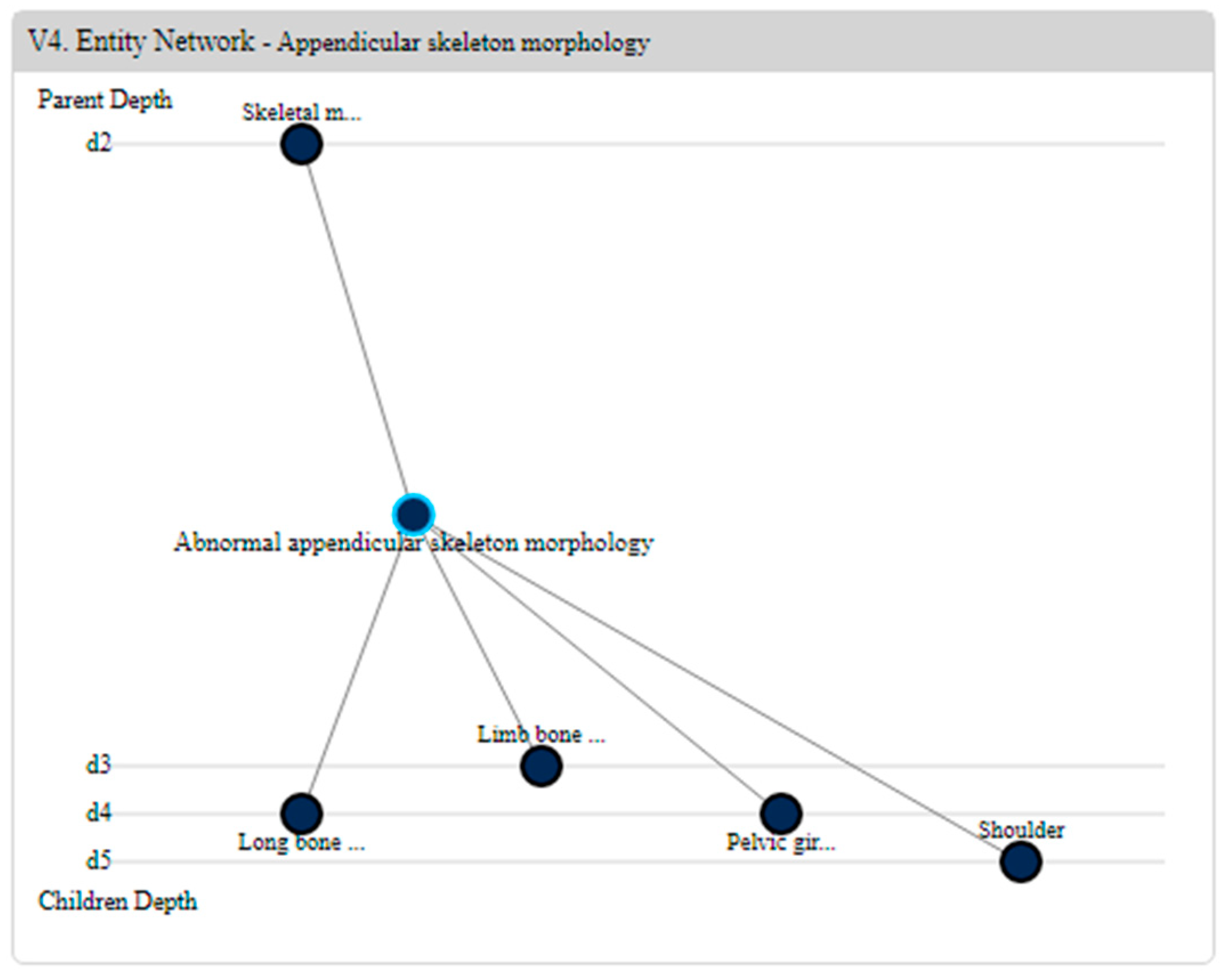

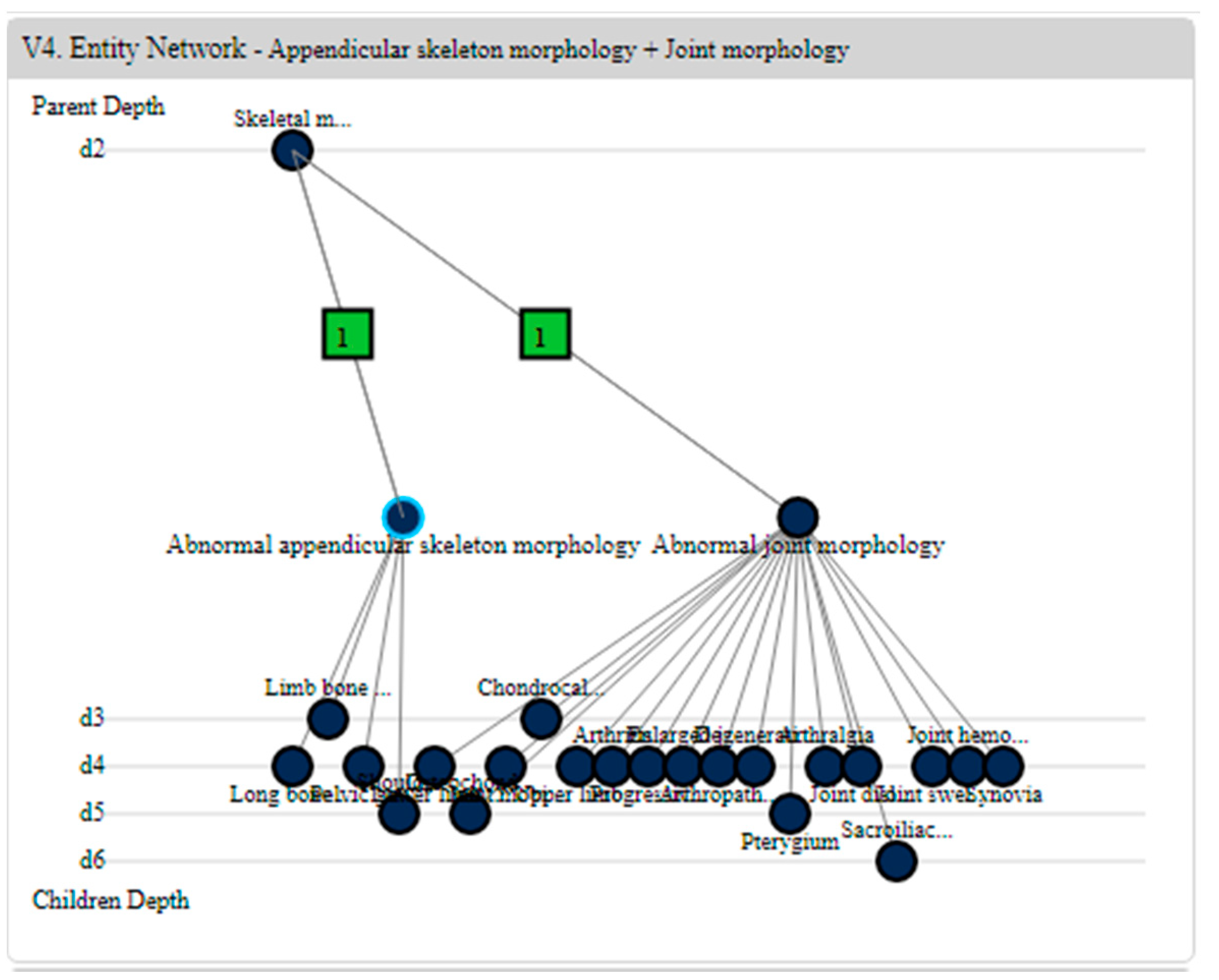

4.2.5. Entity Network Panel

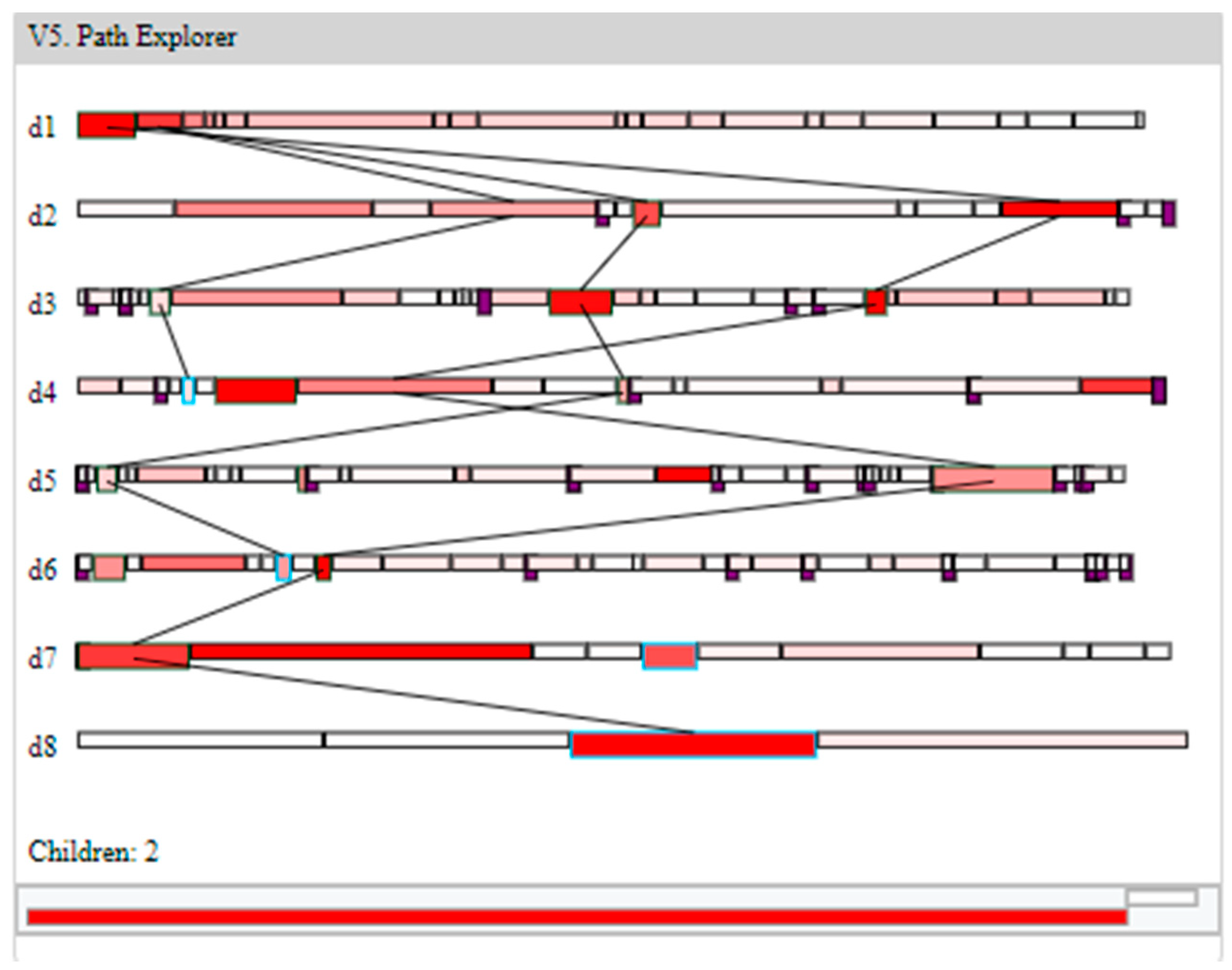

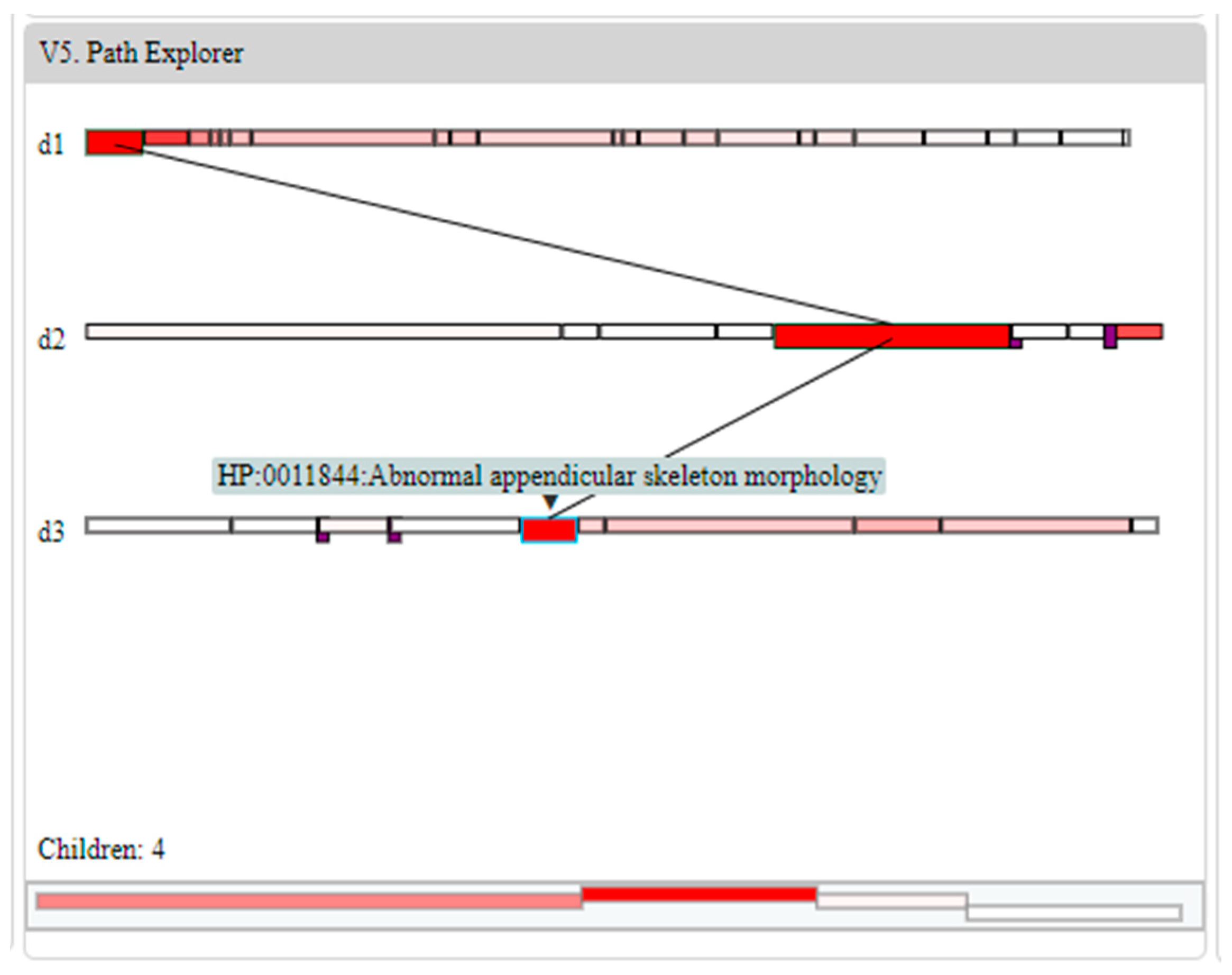

4.2.6. Path Explorer Panel

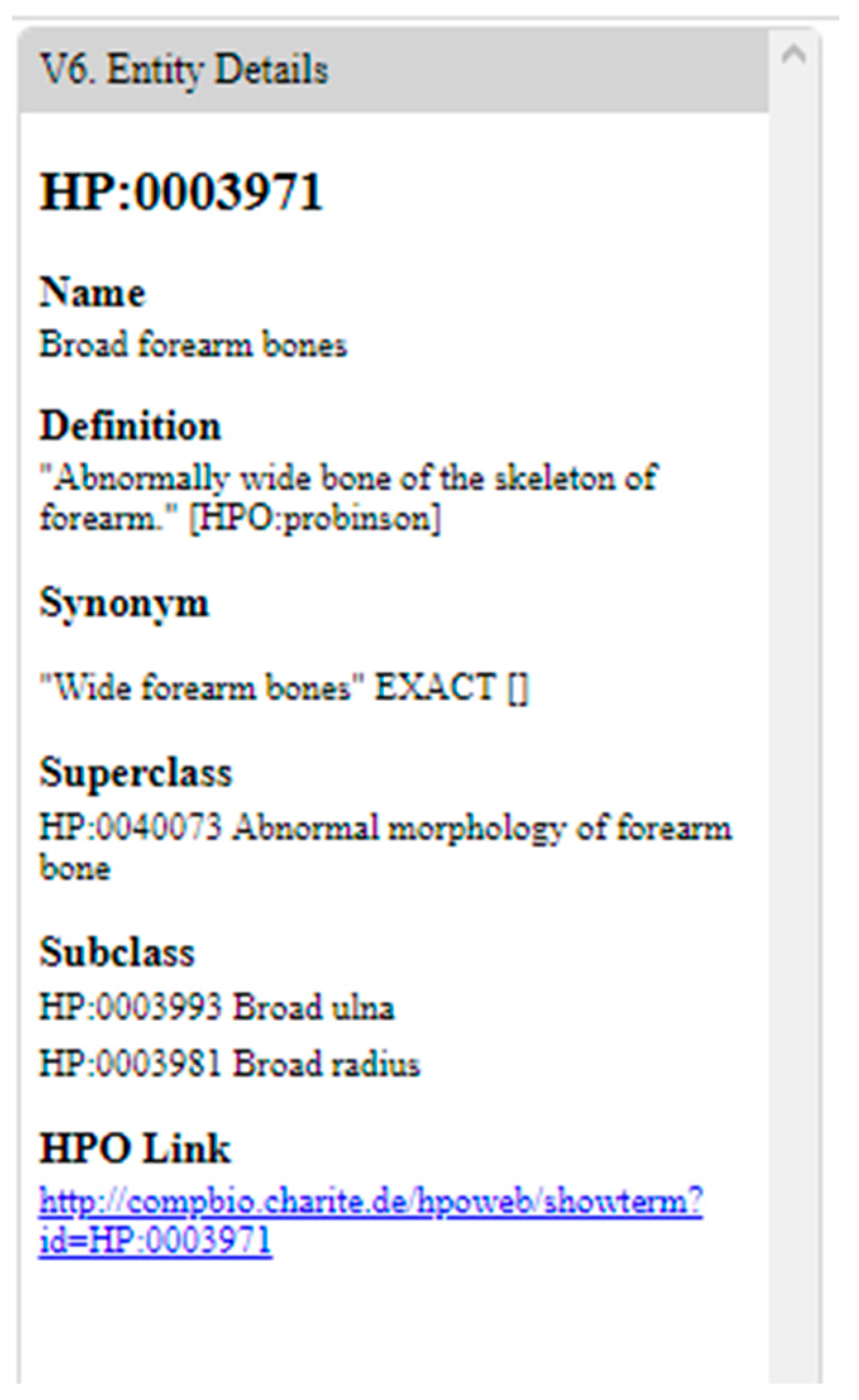

4.2.7. Entity Details Panel

5. Usage Scenario

6. Discussion and Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Rector, A.; Schulz, S.; Rodrigues, J.M.; Chute, C.G.; Solbrig, H. On beyond Gruber: “Ontologies” in today’s biomedical information systems and the limits of OWL. J. Biomed. Inform. X 2019, 2, 100002.

- Priya, M.; Kumar, C.A. A survey of state of the art of ontology construction and merging using Formal Concept Analysis. Indian J. Sci. Technol. 2015, 8.

- Jusoh, S.; Awajan, A.; Obeid, N. The Use of Ontology in Clinical Information Extraction. J. Phys. Conf. Ser. 2020, 1529.

- Lytvyn, V.; Dosyn, D.; Vysotska, V.; Hryhorovych, A. Method of Ontology Use in OODA. In Proceedings of the 2020 IEEE Third International Conference on Data Stream Mining & Processing (DSMP), Lviv, Ukraine, 21–25 August 2020; pp. 409–413.

- Román-Villarán, E.; Pérez-Leon, F.P.; Escobar-Rodriguez, G.A.; Martínez-García, A.; Álvarez-Romero, C.; Parra-Calderóna, C.L. An ontology-based personalized decision support system for use in the complex chronically ill patient. Stud. Health Technol. Inform. 2019, 264, 758–762.

- Dessimoz, C.; Škunca, N. The Gene Ontology Handbook; Springer Nature Open Access: New York, NY, USA, 2017; Volume 1446, ISBN 978-1-4939-3741-7.

- Sedig, K.; Parsons, P.; Liang, H.-N.; Morey, J. Supporting Sensemaking of Complex Objects with Visualizations: Visibility and Complementarity of Interactions. Informatics 2016, 3, 20.

- Behrens, T.E.J.; Muller, T.H.; Whittington, J.C.R.; Mark, S.; Baram, A.B.; Stachenfeld, K.L.; Kurth-Nelson, Z. What Is a Cognitive Map? Organizing Knowledge for Flexible Behavior. Neuron 2018, 100, 490–509.

- Craig, M.; Dewar, M.; Harris, M.A.; Della Sala, S.; Wolbers, T. Wakeful rest promotes the integration of spatial memories into accurate cognitive maps. Hippocampus 2016, 26, 185–193.

- Epstein, R.A.; Patai, E.Z.; Julian, J.B.; Spiers, H.J. The cognitive map in humans: Spatial navigation and beyond. Nat. Neurosci. 2017, 20, 1504–1513.

- Weisberg, S.M.; Newcombe, N.S. How do (some) people make a cognitive map? Routes, places, and working memory. J. Exp. Psychol. Learn. Mem. Cogn. 2016, 42, 768–785.

- Schlegel, D.R.; Elkin, P.L. Ontology Visualization Tools to Assist in Creating and Maintaining Textual Term Definitions. Available online: https://pdfs.semanticscholar.org/3ffb/60e33e8009761b4465dee57c7ef86c4eda22.pdf (accessed on 28 November 2020).

- Borland, D.; Christopherson, L.; Schmitt, C. Ontology-Based Interactive Visualization of Patient-Generated Research Questions. Appl. Clin. Inform. 2019, 10, 377–386.

- Ekstrom, A.D.; Ranganath, C. Space, time and episodic memory: The Hippocampus is all over the Cognitive Map. Hippocampus 2018, 28, 680–687.

- Waller, D.E.; Nadel, L.E. Handbook of Spatial Cognition; American Psychological Association: Washington, DC, USA, 2013; ISBN 978-1-4338-1204-0.

- Kallioniemi, P.; Hakulinen, J.; Keskinen, T.; Turunen, M.; Heimonen, T.; Pihkala-Posti, L.; Uusi-Mäkelä, M.; Hietala, P.; Okkonen, J.; Raisamo, R.; et al. Evaluating landmark attraction model in collaborative wayfinding in virtual learning environments. PUZZLE Rev. Hisp. la Intel. Compet. 2013, 4, 15–18.

- Fast, K.V. Human-Information Interaction for Digital Libraries: From Document Repositories to Knowledge Environments. Ph.D. Thesis, University of Western Ontario, London, ON, Canada, 2010.

- Sedig, K.; Rowhani, S.; Liang, H. Designing interfaces that support formation of cognitive maps of transitional processes: An empirical study. Interact. Comput. 2005, 17, 419–452.

- Saleemi, M.M.; Rodríguez, N.D.; Lilius, J.; Porres, I. A framework for context-aware applications for smart spaces. In Smart Spaces and Next Generation Wired/Wireless Networking; Springer: Berlin/Heidelberg, Germany, 2011; Volume 6869 LNCS, pp. 14–25. ISBN 9783642228742.

- Jakus, G.; Milutinovic, V.; Omerović, S.; Tomazic, S. Concepts, Ontologies, and Knowledge Representation; Springer: New York, NY, USA, 2013; ISBN 978-1-4614-7821-8.

- Tobergte, D.R.; Curtis, S. A Survey on Ontologies for Human Behavior Recognition. J. Chem. Inf. Model. 2013, 53, 1689–1699.

- Katifori, A.; Torou, E.; Vassilakis, C.; Lepouras, G.; Halatsis, C. Selected results of a comparative study of four ontology visualization methods for information retrieval tasks. In Proceedings of the 2008 Second International Conference on Research Challenges in Information Science, Marrakech, Morocco, 3–6 June 2008; pp. 133–140.

- Zhao, K.; Ward, M.; Rundensteiner, E.; Higgins, H. MaVis: Machine Learning Aided Multi-Model Framework for Time Series Visual Analytics. Electron. Imaging 2017, 2016, 1–10.

- Parsons, P.; Sedig, K. Common visualizations: Their cognitive utility. In Handbook of Human Centric Visualization; Huang, W.D., Ed.; Springer: New York, NY, USA, 2014; pp. 671–691.

- Shneiderman, B. The Eyes Have It: A Task by Data Type Taxonomy for Information Visualizations. In Proceedings of the 1996 IEEE Symposium on Visual Languages, Boulder, CO, USA, 3–6 September 1996; pp. 336–343.

- Davies, J.; Michaelian, K. Identifying and individuating cognitive systems: A task-based distributed cognition alternative to agent-based extended cognition. Cogn. Process. 2016, 17, 307–319.

- Pereira Rocha, J.A.; de Paula, C.P.; Sirihal Duarte, A.B. Distributed Cognition as a Theoretical Framework for Information Behavior Studies. Inf. Soc. 2016, 26, 91–105.

- Ninkov, A.; Sedig, K. VINCENT: A visual analytics system for investigating the online vaccine debate. Online J. Public Health Inform. 2019, 11.

- Demelo, J.; Parsons, P.; Sedig, K. Ontology-Driven Search and Triage: Design of a Web-Based Visual Interface for MEDLINE. JMIR Med. Inform. 2017, 5, e4.

- Ola, O.; Sedig, K. Beyond simple charts: Design of visualizations for big health data. Online J. Public Health Inform. 2016, 8.

- Sedig, K.; Parsons, P. Interaction Design for Complex Cognitive Activities with Visual Representations: A Pattern-Based Approach. AIS Trans. Hum.-Comput. Interact. 2013, 5, 85–133.

- Parsons, P.; Didandeh, A. Interactivity in Visual Analytics: Use of Conceptual Frameworks to Support Human-Centered Design of a Decision-Support Tool. In Proceedings of the 2015 48th Hawaii International Conference on System Sciences, Kauai, HI, USA, 5–8 January 2015; pp. 1138–1147.

- Mayer, R.E. The Cambridge Handbook of Multimedia Learning; Cambridge University Press: Cambridge, UK, 2014; ISBN 1107035201.

- Seufert, T. The interplay between self-regulation in learning and cognitive load. Educ. Res. Rev. 2018, 24, 116–129.

- Wang, C.; Fang, T.; Gu, Y. Learning performance and behavioral patterns of online collaborative learning: Impact of cognitive load and affordances of different multimedia. Comput. Educ. 2020, 143, 103683.

- Mutlu-Bayraktar, D.; Cosgun, V.; Altan, T. Cognitive load in multimedia learning environments: A systematic review. Comput. Educ. 2019, 141, 103618.

- Sun, M.; Wang, M.; Wegerif, R. Using computer-based cognitive mapping to improve students’ divergent thinking for creativity development. Br. J. Educ. Technol. 2019, 50, 2217–2233.

- Yalçin, M.A.; Elmqvist, N.; Bederson, B.B. Cognitive stages in visual data exploration. In Proceedings of the Sixth Workshop on Beyond Time and Errors on Novel Evaluation Methods for Visualization, Baltimore, MD, USA, 24 October 2016; pp. 86–95.

- Ragan, E.D.; Bowman, D.A.; Huber, K.J. Supporting cognitive processing with spatial information presentations in virtual environments. Virtual Real. 2012, 16, 301–314.

- Pesquita, C.; Ivanova, V.; Lohmann, S.; Lambrix, P. A framework to conduct and report on empirical user studies in semantic web contexts. In European Knowledge Acquisition Workshop; Springer: Cham, Switzerland, 2018; Volume 11313, pp. 567–583.

- Katifori, A.; Halatsis, C.; Lepouras, G.; Vassilakis, C.; Giannopoulou, E. Ontology visualization methods—A survey. ACM Comput. Surv. 2007, 39, 10-es.

- Dudáš, M.; Lohmann, S.; Svátek, V.; Pavlov, D. Ontology Visualization Methods and Tools: A Survey of the State of the Art; Cambridge University Press: Cambridge, UK, 2018; Volume 33, ISBN 0269888918.

- Po, L.; Bikakis, N.; Desimoni, F.; Papastefanatos, G. Linked Data Visualization: Techniques, Tools, and Big Data. Synth. Lect. Semant. Web Theory Technol. 2020, 10, 1–157.

- Mouromtsev, D.; Pavlov, D.; Emelyanov, Y.; Morozov, A.; Razdyakonov, D.; Galkin, M. The Simple Web-based Tool for Visualization and Sharing of Semantic Data and Ontologies. In Proceedings of the International Semantic Web Conference (Posters & Demos), Bethlehem, PA, USA, 11–15 October 2015.

- Falconer, S. OntoGraf. Available online: https://protegewiki.stanford.edu/wiki/OntoGraf (accessed on 21 May 2020).

- Semafora Systems OntoStudio. Available online: https://www.semafora-systems.com/ontobroker-and-ontostudio-x (accessed on 21 May 2020).

- Musen, M.A.; Team, P. The Protégé Project: A Look Back and a Look Forward. AI Matters 2015, 1, 4–12.

- TopQuadrant TopBraid Explorer. Available online: https://www.topquadrant.com/products/topbraid-explorer/ (accessed on 21 May 2020).

- Lohmann, S.; Negru, S.; Haag, F.; Ertl, T. Visualizing Ontologies with VOWL. Semant.-Web-J. Net 2016, 7, 399–419.

- Silva, I.C.S.; Santucci, G.; Freitas, C.M.D.S. Visualization and analysis of schema and instances of ontologies for improving user tasks and knowledge discovery. J. Comput. Lang. 2019, 51, 28–47.

- Tudorache, T.; Nyulas, C.; Noy, N.F.; Musen, M.A. WebProtégé: A collaborative ontology editor and knowledge acquisition tool for the web. Semant. Web 2013, 4, 89–99.

- Nightingale, O. Lunr.js. Available online: http://lunrjs.com/ (accessed on 10 August 2020).

- Bostock, M. D3.js Data-Driven Documents. Available online: https://d3js.org/ (accessed on 9 December 2020).

- Ukrop, J.; Číková, Z.; Kapec, P. Visual Access to Graph Content Using Magic Lenses and Filtering. In Proceedings of the 28th Spring Conference on Computer Graphics, Budmerice, Slovakia, 2–4 May 2012; Volume 1, pp. 23–30.

- Kohler, S.; Doelken, S.C.; Mungall, C.J.; Bauer, S.; Firth, H.V.; Bailleul-Forestier, I.; Black, G.C.M.; Brown, D.L.; Brudno, M.; Campbell, J.; et al. The Human Phenotype Ontology project: Linking molecular biology and disease through phenotype data. Nucleic Acids Res. 2014, 42, 966–974.

- Köhler, S.; Robinson, P. HPO Web Browser: Blindness Infopage. Available online: http://compbio.charite.de/hpoweb/showterm?id=HP:0000618 (accessed on 9 December 2020).

- The Human Phenotype Ontology. Available online: https://hpo.jax.org/app/ (accessed on 10 May 2020).

- Köhler, S.; Carmody, L.; Vasilevsky, N.; Jacobsen, J.O.B.; Danis, D.; Gourdine, J.-P.; Gargano, M.; Harris, N.L.; Matentzoglu, N.; McMurry, J.A.; et al. Expansion of the Human Phenotype Ontology (HPO) knowledge base and resources. Nucleic Acids Res. 2018, 47, D1018–D1027.

| Name | Description | Related Thinking Processes | Required Spatial Knowledge |
|---|---|---|---|
| Sensemaking | Reasoning and the mental manipulation of representations to develop, build upon, and refine mental models [7]. | Convergent | None |
| Navigation | Observing, orientating, and decision-making for directed movement towards a known objective [4,11,31]. | Convergent | Landmark, Route |
| Exploration | Observing, orientating, and decision-making for undirected movement without an objective [4,38]. | Divergent, Convergent | None |
| Search | Observing, orientating, and decision-making for directed movement towards an unknown objective [31]. | Divergent, Convergent | Landmark, Route, Survey |
| Wayfinding | Constructing and memorizing movement sequences for future objective-oriented activities [16,39,40]. | Divergent, Convergent | Landmark, Route, Survey |

| Criteria | Description |
|---|---|
| Provide generalized support for ontology datasets | Designs should provide a generalized environment which facilitates the loading of ontology datasets of any size under the guidance of existing ontology file specifications. This is so that we may build our understanding of ontology datasets which are relevant to our challenging knowledge-based tasks. |
| Tune cognitive load to specific needs | Designs should provide a cognitive load which is aligned with the conditions for an effective learning environment for ontology datasets. Specifically, extraneous load which is unrelated to the learning task should be minimized, intrinsic load should be tuned to support the specific cognitive activities of the learning task, and germane load should provide affordances which unify the needs of the learner, space, and chosen process for learning. |
| Afford the spatial knowledge within ontological space | Designs should supply encounters which afford us an authentic internal encoding of the entities, relations, and structures of the ontology dataset to support our development of spatial knowledge for the formation of our cognitive maps. |
| Facilitate the performance of the cognitive activities necessary to learn a space | Designs should provide encounters which allow us to perform the cognitive activities necessary to build understanding of a space. This is because not supporting any one of sensemaking, navigation, exploration, wayfinding, and search would lessen our ability to leverage our various cognitive processes and hamper the stages of cognitive map formation. |
| Support self-regulated learning | Designs should provide encounters which allow us to guide our own learning tasks through setting goals, planning our learning process, enacting our process by using our resources to interact with new information, and evaluating our learning achievements. |

| Type | Description | Typical Implementation Strategy | Cognitive Activities | Use in Reviewed Tools |
|---|---|---|---|---|
| List | A subview that depicts components of the ontology dataset, such as entities and relations, within a list. | A text-based visual representation strategy with interactions for selection and management. | Sensemaking, Navigation, Exploration, Search, Wayfinding | Protégé Entity Browser, Protégé OntoGraf, Ontodia, OntoStudio, TopBraid Explorer, WebProtégé Entity Graph, OntoViewer |
| Overview | A subview that depicts the full contents of an ontology dataset. | A pictorial-based visual representation strategy with interactions for selection and filtering. | Sensemaking, Navigation, Exploration, Search, Wayfinding | WebVOWL, Ontodia, OntoViewer |
| Context | A subview that depicts a subset of the ontology dataset contents determined through interaction. | A pictorial-based visual representation strategy with interactions for selection and comparison. | Sensemaking, Exploration, Wayfinding | Protégé OntoGraf, OntoStudio, TopBraid Explorer, WebProtégé Entity Graph, OntoViewer |
| Details | A subview that depicts the information of a specific object within the ontology dataset. | A text-based visual representation strategy with minimal opportunities for interaction. | Sensemaking | WebVOWL, Ontodia, OntoStudio, TopBraid Explorer, WebProtégé Entity Graph, OntoViewer |

| Criteria | PRONTOVISE | Related Systems/Views |
|---|---|---|
| Provide generalized support for ontology datasets | PRONTOVISE provides a generalized environment which supports the loading of ontology datasets of any size and from any domain when they fulfill the requirements of OWL RDF, the leading ontology dataset format. Additionally, its visual representation and interaction designs are built to scale for any number of encoded complex objects. | Ontology processing system; all front-end subviews |
| Tune cognitive load to specific needs | Cognitive load is actively considered within the design of PRONTOVISE. PRONTOVISE is designed to be a complex learning environment, so design features which produce extraneous load unrelated to learning tasks are minimized. PRONTOVISE provides a level of intrinsic load which targets the promotion of the stages of cognitive map formation. PRONTOVISE accounts for germane load by being designed specifically as a learning environment for those who are unfamiliar with an ontology dataset. This is achieved through visualizations which address the specific spatial knowledge of the various complex objects within ontology datasets. | All front-end subviews |
| Afford the spatial knowledge within ontological space | PRONTOVISE includes numerous subviews which provide encounters that afford perspectives of authentic internal encodings of the entities, relations, and structures of the ontology dataset. | Various front-end subviews |
| Facilitate the performance of the cognitive activities necessary to learn a space | PRONTOVISE facilitates the performance of sensemaking, navigation, exploration, wayfinding, and search cognitive activities within ontological space over numerous subviews to support our thinking processes and the stages of cognitive map formation. | Various front-end subviews |
| Support self-regulated learning | The design of PRONTOVISE includes a modular set of subviews which support nonlinear interaction loops, which together provide the freedom to set, plan, enact, and evaluate any set of learning tasks for ontological space, all while following the requirements for cognitive map formation. | Ontology processing system; all front-end subviews |

| Subview | Type of Subview | Cognitive Activities | Spatial Knowledge |
|---|---|---|---|
| Search and Pinning Panel | List | Sensemaking, Navigation, Search, Wayfinding | Landmark |
| Ontology Sections Panel | Overview | Sensemaking, Navigation, Exploration, Search, Wayfinding | Landmark, Survey |
| Section Levels Panel | Context | Sensemaking, Exploration, Search, Wayfinding | Landmark, Route, Survey |
| Level Landmark Entities Panel | Context | Sensemaking, Navigation, Exploration, Wayfinding | Landmark, Route |
| Entity Network Panel | Context | Sensemaking, Navigation, Exploration, Wayfinding | Landmark, Route |
| Path Explorer Panel | Overview | Sensemaking, Navigation, Exploration, Wayfinding | Route, Survey |
| Entity Details Panel | Details | Sensemaking | Landmark |
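The subview table above can also be read as a small lookup structure. The following is a minimal sketch, not part of PRONTOVISE itself: the `Subview` type and the helper names `subviews_supporting` and `uncovered_activities` are hypothetical and introduced only for illustration. It transcribes the table rows and checks which panels support a given cognitive activity, and whether all five activities named in the design criteria are covered by at least one panel.

```python
from typing import NamedTuple


class Subview(NamedTuple):
    """One row of the subview table (hypothetical illustrative type)."""
    name: str
    kind: str
    activities: frozenset
    spatial_knowledge: frozenset


# Rows transcribed from the subview table; nothing here is taken from PRONTOVISE code.
SUBVIEWS = [
    Subview("Search and Pinning Panel", "List",
            frozenset({"Sensemaking", "Navigation", "Search", "Wayfinding"}),
            frozenset({"Landmark"})),
    Subview("Ontology Sections Panel", "Overview",
            frozenset({"Sensemaking", "Navigation", "Exploration", "Search", "Wayfinding"}),
            frozenset({"Landmark", "Survey"})),
    Subview("Section Levels Panel", "Context",
            frozenset({"Sensemaking", "Exploration", "Search", "Wayfinding"}),
            frozenset({"Landmark", "Route", "Survey"})),
    Subview("Level Landmark Entities Panel", "Context",
            frozenset({"Sensemaking", "Navigation", "Exploration", "Wayfinding"}),
            frozenset({"Landmark", "Route"})),
    Subview("Entity Network Panel", "Context",
            frozenset({"Sensemaking", "Navigation", "Exploration", "Wayfinding"}),
            frozenset({"Landmark", "Route"})),
    Subview("Path Explorer Panel", "Overview",
            frozenset({"Sensemaking", "Navigation", "Exploration", "Wayfinding"}),
            frozenset({"Route", "Survey"})),
    Subview("Entity Details Panel", "Details",
            frozenset({"Sensemaking"}),
            frozenset({"Landmark"})),
]

# The five cognitive activities named in the design criteria.
ALL_ACTIVITIES = {"Sensemaking", "Navigation", "Exploration", "Search", "Wayfinding"}


def subviews_supporting(activity):
    """Return the names of subviews whose row lists the given activity, in table order."""
    return [s.name for s in SUBVIEWS if activity in s.activities]


def uncovered_activities():
    """Return the activities that no subview in the table supports."""
    covered = set().union(*(s.activities for s in SUBVIEWS))
    return ALL_ACTIVITIES - covered


if __name__ == "__main__":
    print(subviews_supporting("Search"))
    print(uncovered_activities())
```

Encoding the rows as sets keeps the membership checks simple and makes it easy to ask the complementary question, for example which panels afford survey knowledge, by filtering on `spatial_knowledge` instead.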

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Demelo, J.; Sedig, K. Forming Cognitive Maps of Ontologies Using Interactive Visualizations. Multimodal Technol. Interact. 2021, 5, 2. https://doi.org/10.3390/mti5010002
