Tell Them How They Did: Feedback on Operator Performance Helps Calibrate Perceived Ease of Use in Automated Driving

Abstract

1. Introduction

1.1. Validation and Development of Methodology

1.2. Judgment Calibration

1.3. Objective and Hypotheses

2. Materials and Methods

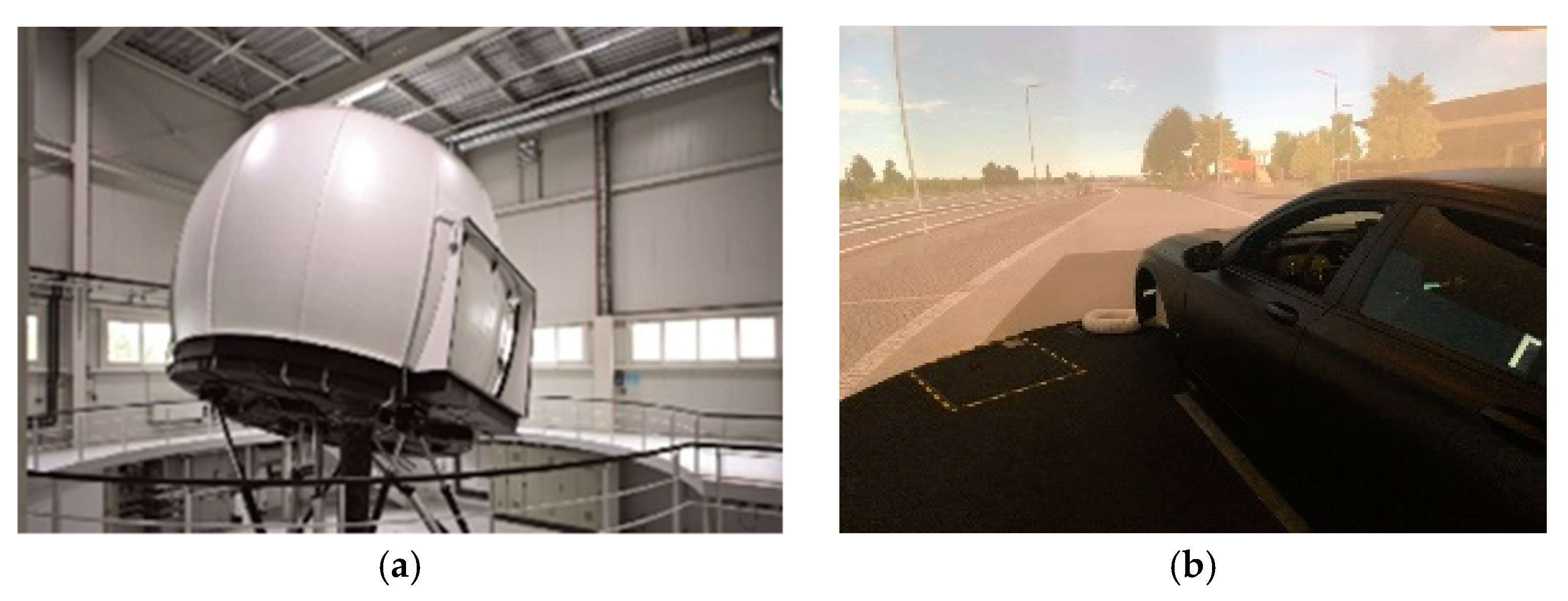

2.1. Driving Simulation

2.2. Driving Automation and Human–Machine Interface

2.3. Design and Procedure

2.4. Use Cases

2.5. Dependent Variables

2.6. Sample

3. Results

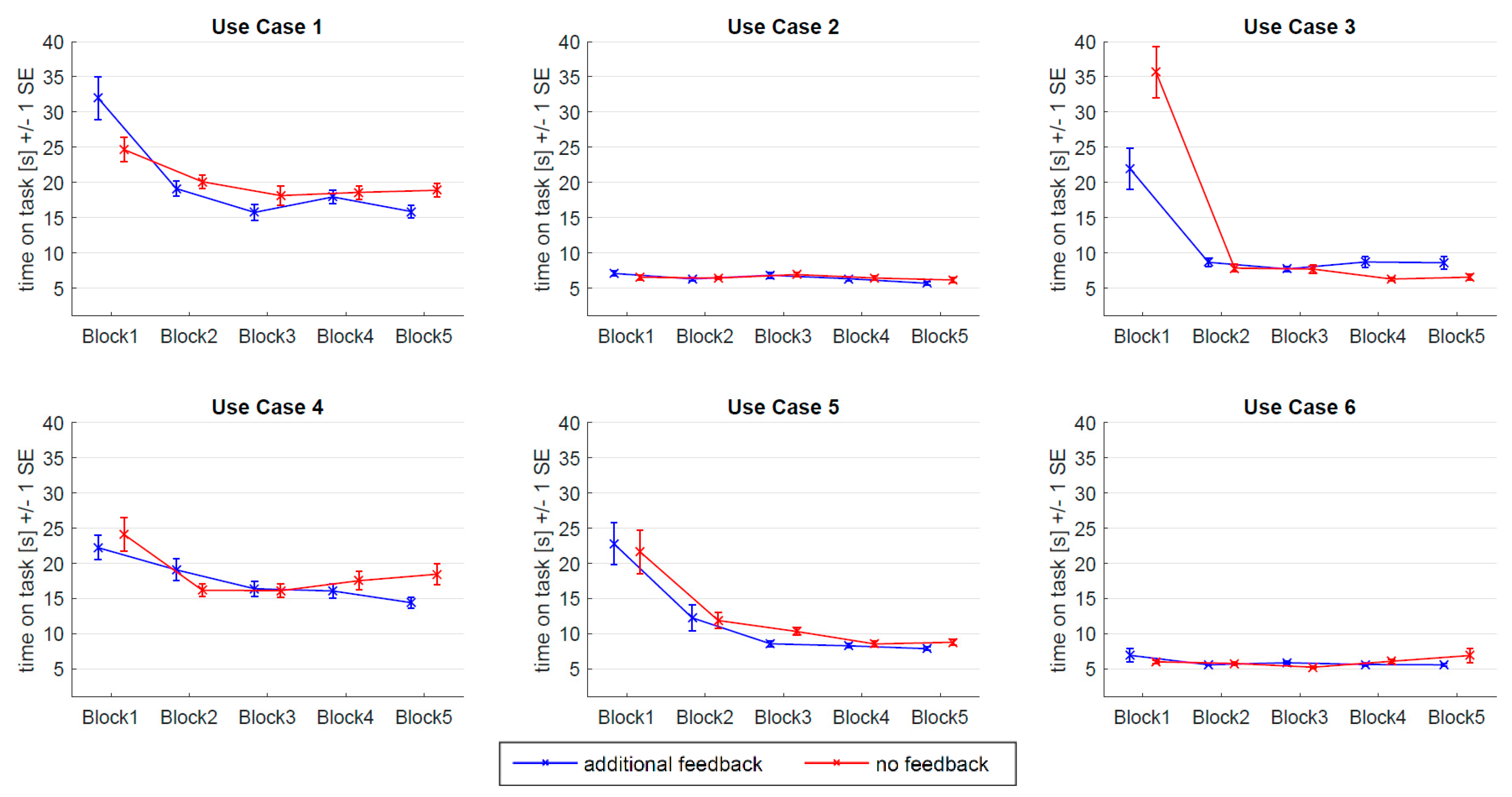

3.1. Manipulation Check: Time on Task

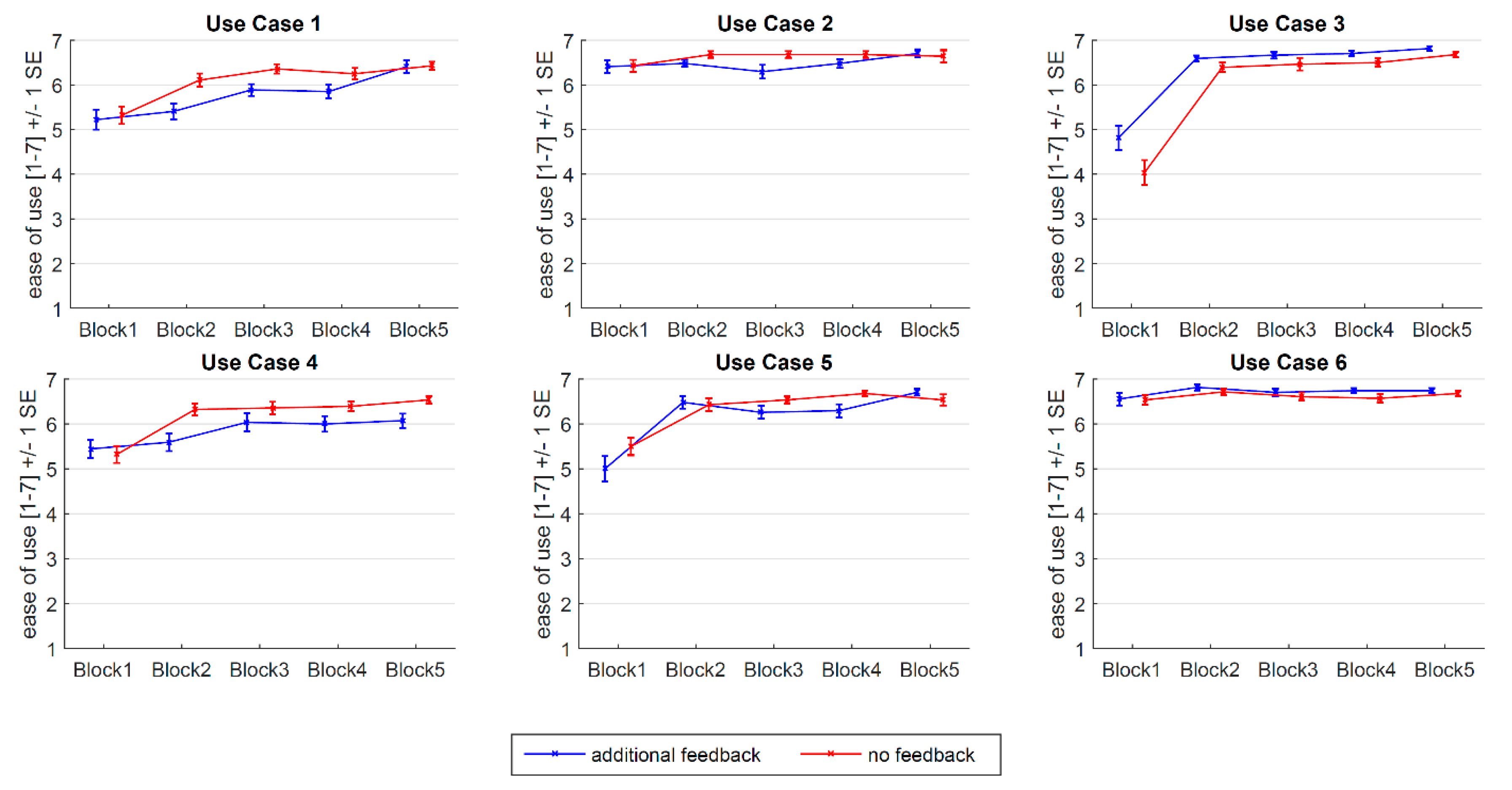

3.2. Perceived Ease of Use

4. Discussion

4.1. Effect of Feedback on Interaction Performance

4.2. Change Over Time and UC Specificity

4.3. Calibrating Effects of Feedback

4.4. Limitations and Future Research

4.5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Category | Value | Description |
|---|---|---|
| No problem | 1 | Quick processing |
| Hesitation | 2 | Independent solution without errors, but with hesitation, very conscious operation, and full concentration |
| Minor errors | 3 | Independent solution without errors or with minor errors that were corrected confidently, but with longer pauses for reflection and evaluation of potential operating steps |
| Massive errors | 4 | One or multiple errors; clearly impaired operation flow; excessive correction of errors; no help from the experimenter necessary |
| Help of experimenter | 5 | Multiple errors; massive errors that require restarting the task; help from the experimenter necessary |

| Transition Type | Scenario | ADS Level at UC Initiation | ADS Target Level | UC Number |
|---|---|---|---|---|
| Upward transition | Activation L3 | L0 | L3 | 1 |
| Upward transition | Activation L3 | L2 | L3 | 4 |
| Upward transition | Activation L2 | L0 | L2 | 3 |
| Downward transition | Deactivation L3 | L3 | L0 | 2 |
| Downward transition | Deactivation L3 | L3 | L2 | 5 |
| Downward transition | Deactivation L2 | L2 | L0 | 6 |

| Effect | Approx. χ² | df | p |
|---|---|---|---|
| Time of measurement | 82.002 | 9 | <0.001 |
| Use case | 100.439 | 14 | <0.001 |
| Time of measurement × Use case | 1235.381 | 209 | <0.001 |
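
The degrees of freedom in the table above match what a sphericity test (such as Mauchly's test) would give for a repeated-measures design. The sketch below is only an illustration under stated assumptions: the function name `sphericity_test_df` is hypothetical, the six use cases come from the use-case table, and the five times of measurement are an assumption inferred from df = 9, not a figure stated in this section. For an effect with p orthonormal contrasts, the test has p(p + 1)/2 − 1 degrees of freedom.

```python
def sphericity_test_df(levels_per_factor):
    """Degrees of freedom of a sphericity test for a within-subject effect.

    p is the number of orthonormal contrasts of the effect, i.e. the product
    of (levels - 1) over all within-subject factors involved in it.
    The test statistic then has p * (p + 1) / 2 - 1 degrees of freedom.
    """
    p = 1
    for levels in levels_per_factor:
        p *= levels - 1
    return p * (p + 1) // 2 - 1


# Assumed design: 5 times of measurement (inferred), 6 use cases (from the table).
TIME_LEVELS = 5
USE_CASE_LEVELS = 6

print(sphericity_test_df([TIME_LEVELS]))                   # 9
print(sphericity_test_df([USE_CASE_LEVELS]))               # 14
print(sphericity_test_df([TIME_LEVELS, USE_CASE_LEVELS]))  # 209
```

Under these assumptions the three values reproduce the df column (9, 14, 209).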

| Effect | F | df1 | df2 | p | ηp² |
|---|---|---|---|---|---|
| Time of measurement | 73.630 | 2.177 | 123.278 | <0.001 | 0.581 |
| Use case | 103.203 | 3.580 | 189.754 | <0.001 | 0.661 |
| Feedback | 0.832 | 1 | 53 | 0.366 | 0.015 |
| Time of measurement × Feedback | 0.588 | 2.177 | 123.278 | 0.571 | 0.011 |
| Use case × Feedback | 0.342 | 3.580 | 189.754 | 0.829 | 0.006 |
| Time of measurement × Use case | 8.305 | 4.501 | 238.556 | <0.001 | 0.135 |
| Time of measurement × Use case × Feedback | 2.250 | 4.501 | 238.556 | 0.057 | 0.041 |
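
The effect sizes in the table above can be related to the F statistics through the standard identity ηp² = (F · df1) / (F · df1 + df2). The following is a minimal sketch, not the authors' analysis code; the helper name `partial_eta_squared` is hypothetical, and the three rows are copied from the table purely for illustration.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    numerator = f_value * df_effect
    return numerator / (numerator + df_error)


# (effect, F, df1, df2, reported partial eta squared), copied from the table above
rows = [
    ("Use case", 103.203, 3.580, 189.754, 0.661),
    ("Feedback", 0.832, 1, 53, 0.015),
    ("Time of measurement x Use case", 8.305, 4.501, 238.556, 0.135),
]

for effect, f_value, df1, df2, reported in rows:
    computed = partial_eta_squared(f_value, df1, df2)
    print(f"{effect}: computed {computed:.3f}, reported {reported:.3f}")
```

For these rows the recomputed values agree with the reported ones to three decimal places.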

| Effect | Approx. χ² | df | p |
|---|---|---|---|
| Time of measurement | 49.001 | 9 | <0.001 |
| Use case | 48.367 | 14 | <0.001 |
| Time of measurement × Use case | 567.343 | 209 | <0.001 |

| Effect | F | df1 | df2 | p | ηp² |
|---|---|---|---|---|---|
| Time of measurement | 49.231 | 2.685 | 142.281 | <0.001 | 0.482 |
| Use case | 23.717 | 3.869 | 205.076 | <0.001 | 0.309 |
| Feedback | 0.725 | 1 | 53 | 0.398 | 0.013 |
| Time of measurement × Feedback | 1.601 | 2.685 | 142.281 | 0.372 | 0.019 |
| Use case × Feedback | 4.529 | 3.869 | 205.076 | <0.01 | 0.079 |
| Time of measurement × Use case | 8.660 | 8.200 | 434.589 | <0.001 | 0.140 |
| Time of measurement × Use case × Feedback | 0.961 | 8.200 | 434.589 | 0.467 | 0.018 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Forster, Y.; Hergeth, S.; Naujoks, F.; Krems, J.; Keinath, A. Tell Them How They Did: Feedback on Operator Performance Helps Calibrate Perceived Ease of Use in Automated Driving. Multimodal Technol. Interact. 2019, 3, 29. https://doi.org/10.3390/mti3020029
