Designing for a Wearable Affective Interface for the NAO Robot: A Study of Emotion Conveyance by Touch

Abstract

1. Introduction

2. Background

3. Method

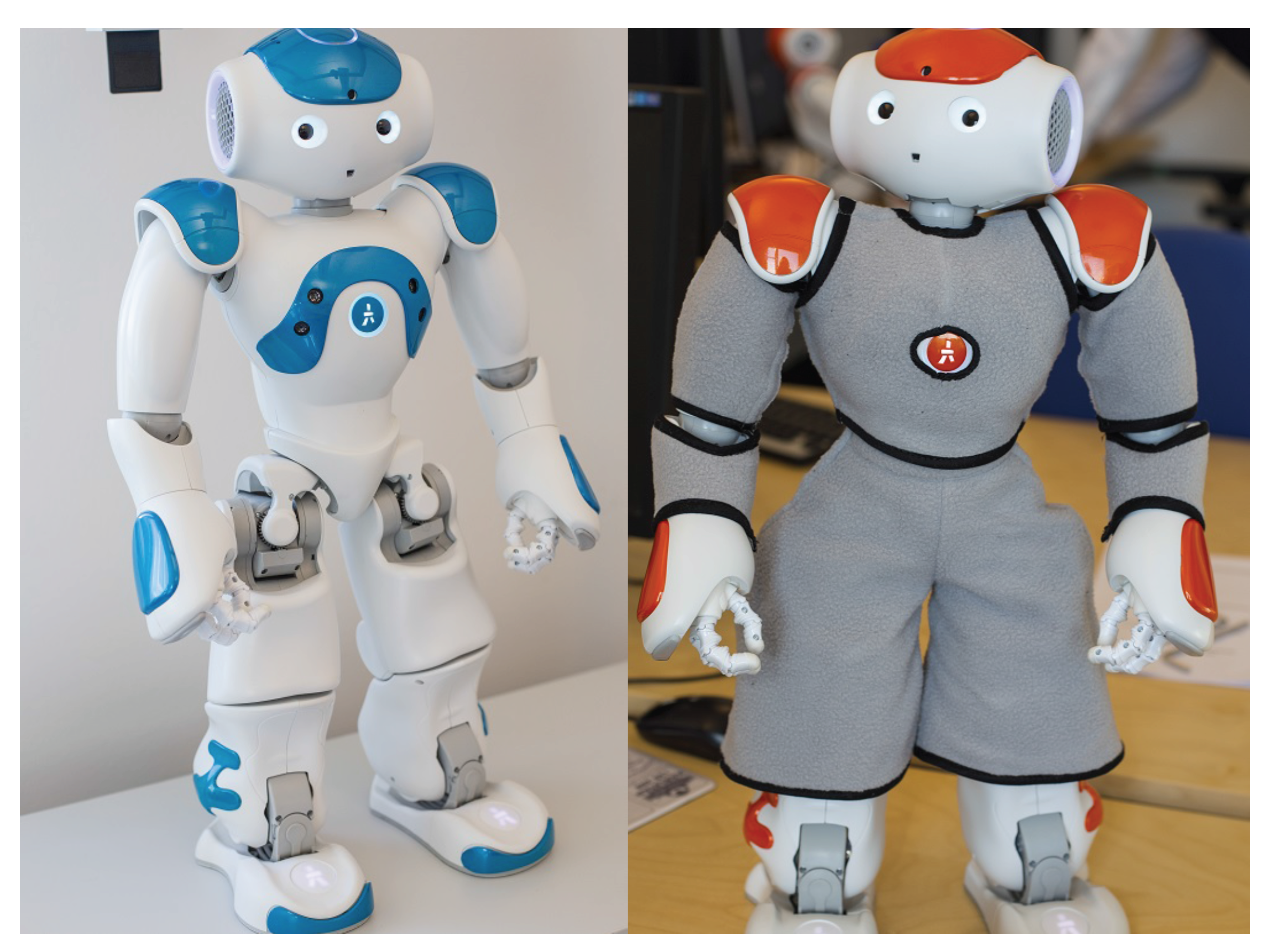

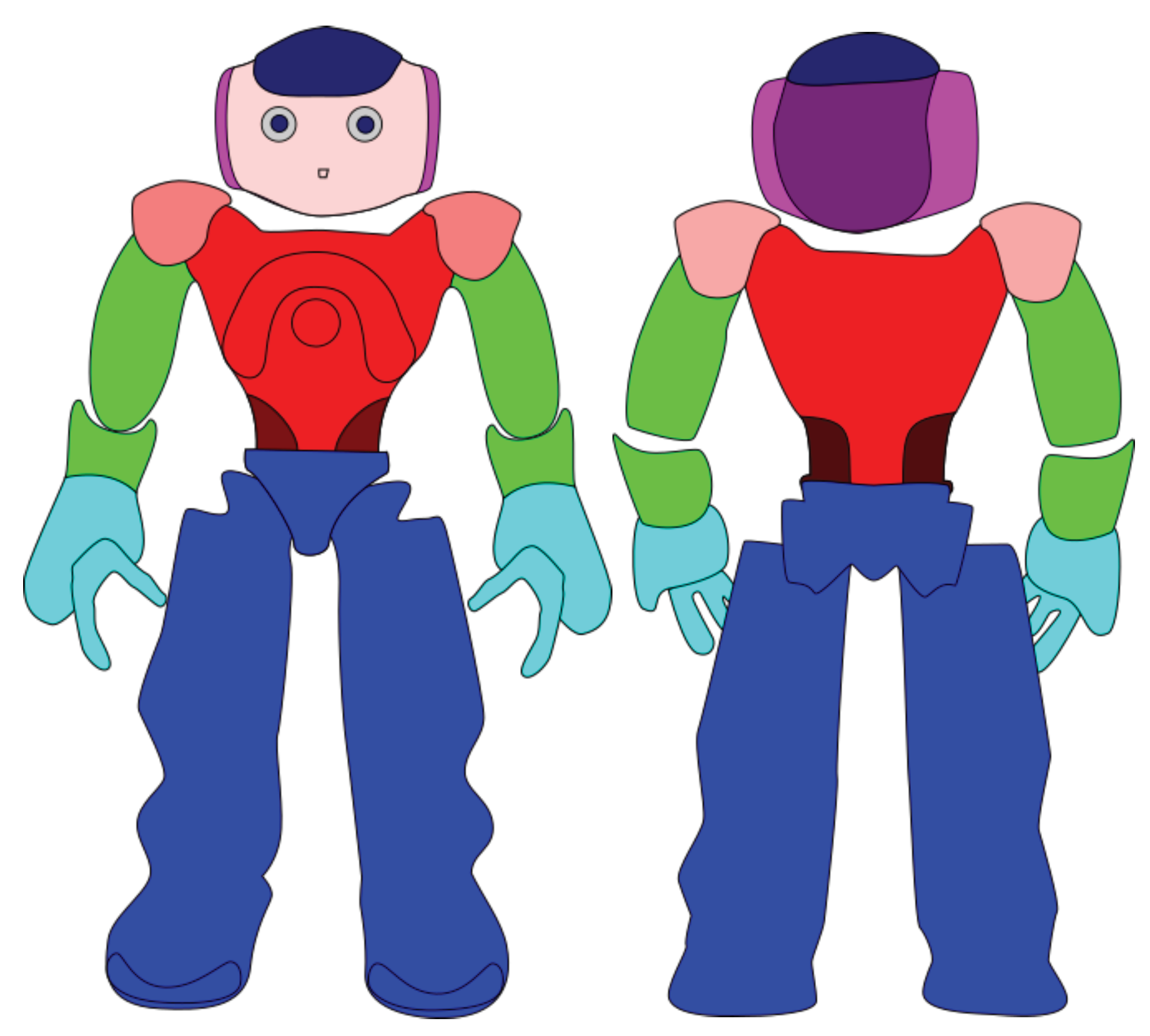

3.1. A Wearable Affective Interface (WAffI)

3.2. Participants

3.3. Procedure and Materials

Coding Procedure

4. Results

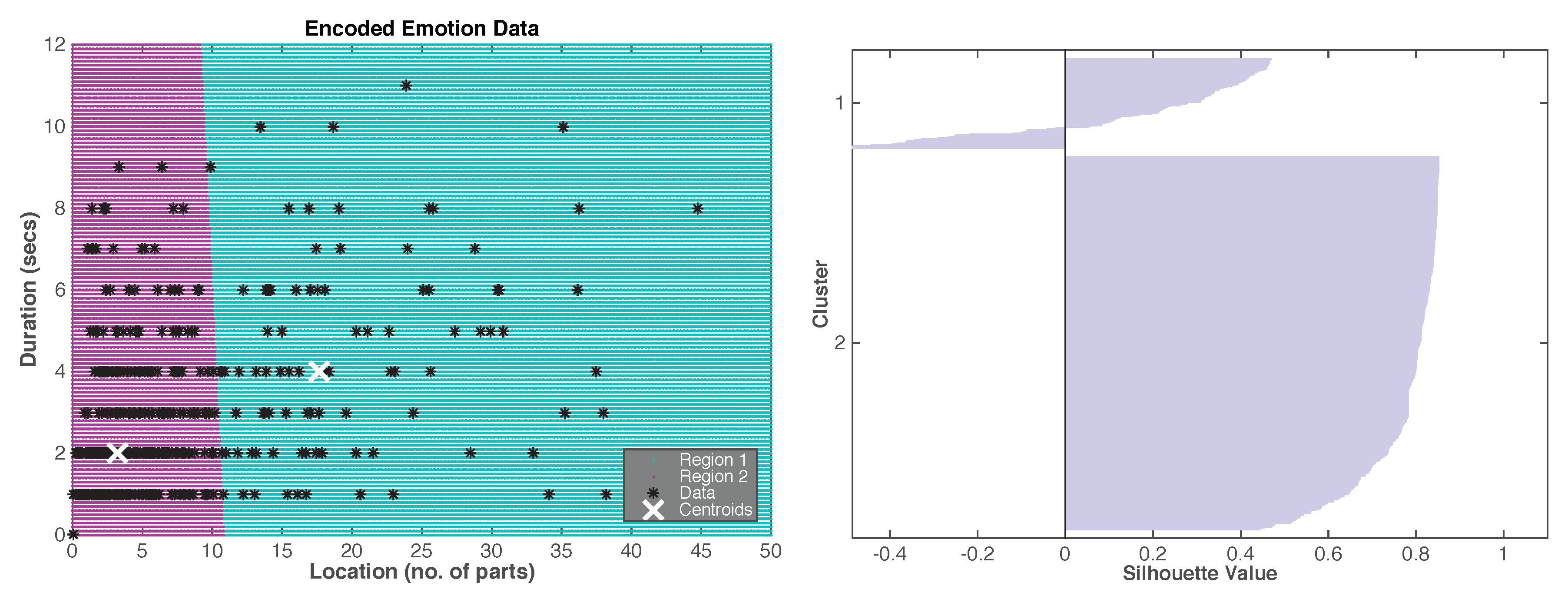

4.1. Encoder Results

- touch intensity: light, moderate, or strong intensity;

- touch duration: the length of time that the subjects interacted with the NAO;

- touch type: the quality of the tactile interaction, e.g., press or stroke;

- touch location: the place on the NAO that is touched.
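As an illustration of how the four dimensions listed above could be stored for analysis, the following minimal sketch defines one record per coded touch. The class name `CodedTouch`, its field names, the use of seconds for duration, and the helper `total_duration` are assumptions made for this example only; they are not taken from the study's coding scheme.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class CodedTouch:
    """One coded touch (hypothetical record layout, for illustration only)."""
    intensity: str     # "light", "moderate" or "strong"
    duration_s: float  # length of the interaction; seconds are an assumed unit
    touch_type: str    # quality of the tactile interaction, e.g. "press" or "stroke"
    location: str      # place on the NAO that was touched, e.g. "left arm"


def total_duration(touches: Iterable[CodedTouch]) -> float:
    """Sum the durations of all coded touches in one interaction."""
    return sum(t.duration_s for t in touches)


# Made-up example values, not data from the study.
touches = [
    CodedTouch("light", 1.5, "stroke", "left arm"),
    CodedTouch("strong", 2.0, "press", "right arm"),
]
```

Keeping each touch as an immutable record makes it straightforward to aggregate any single dimension (for example, duration) per emotion or per clothing condition.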

4.1.1. Intensity

- No Interaction (subjects refused or were not able to contemplate an appropriate touch),

- Low Intensity (subjects gave light touches to the NAO robot with no apparent or barely perceptible movement of NAO),

- Medium Intensity (subjects gave moderate intensity touches with some, but not extensive, movement of the NAO robot),

- High Intensity (subjects gave strong intensity touches with a substantial movement of the NAO robot as a result of pressure to the touch).
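The four intensity categories above form an ordered scale, which could be represented as follows. This is a sketch under stated assumptions: the enum name `Intensity`, the integer codes 0 to 3, and the helper `intensity_proportions` are hypothetical and are not part of the authors' procedure.

```python
from collections import Counter
from enum import IntEnum
from typing import Dict, Sequence


class Intensity(IntEnum):
    """Ordered intensity categories; the integer codes are an assumption."""
    NO_INTERACTION = 0  # subject refused or could not think of a touch
    LOW = 1             # light touch, no or barely perceptible movement of the NAO
    MEDIUM = 2          # moderate touch, some but not extensive movement
    HIGH = 3            # strong touch, substantial movement of the NAO


def intensity_proportions(codes: Sequence[Intensity]) -> Dict[Intensity, float]:
    """Return the share of coded touches that fall into each category."""
    if not codes:
        raise ValueError("at least one coded touch is required")
    counts = Counter(codes)
    return {level: counts.get(level, 0) / len(codes) for level in Intensity}


# Made-up example codes, not data from the study.
example = [Intensity.LOW, Intensity.LOW, Intensity.HIGH, Intensity.MEDIUM]
proportions = intensity_proportions(example)
```

Using an ordered enum keeps the category labels readable while still allowing comparisons such as `Intensity.HIGH > Intensity.LOW`.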

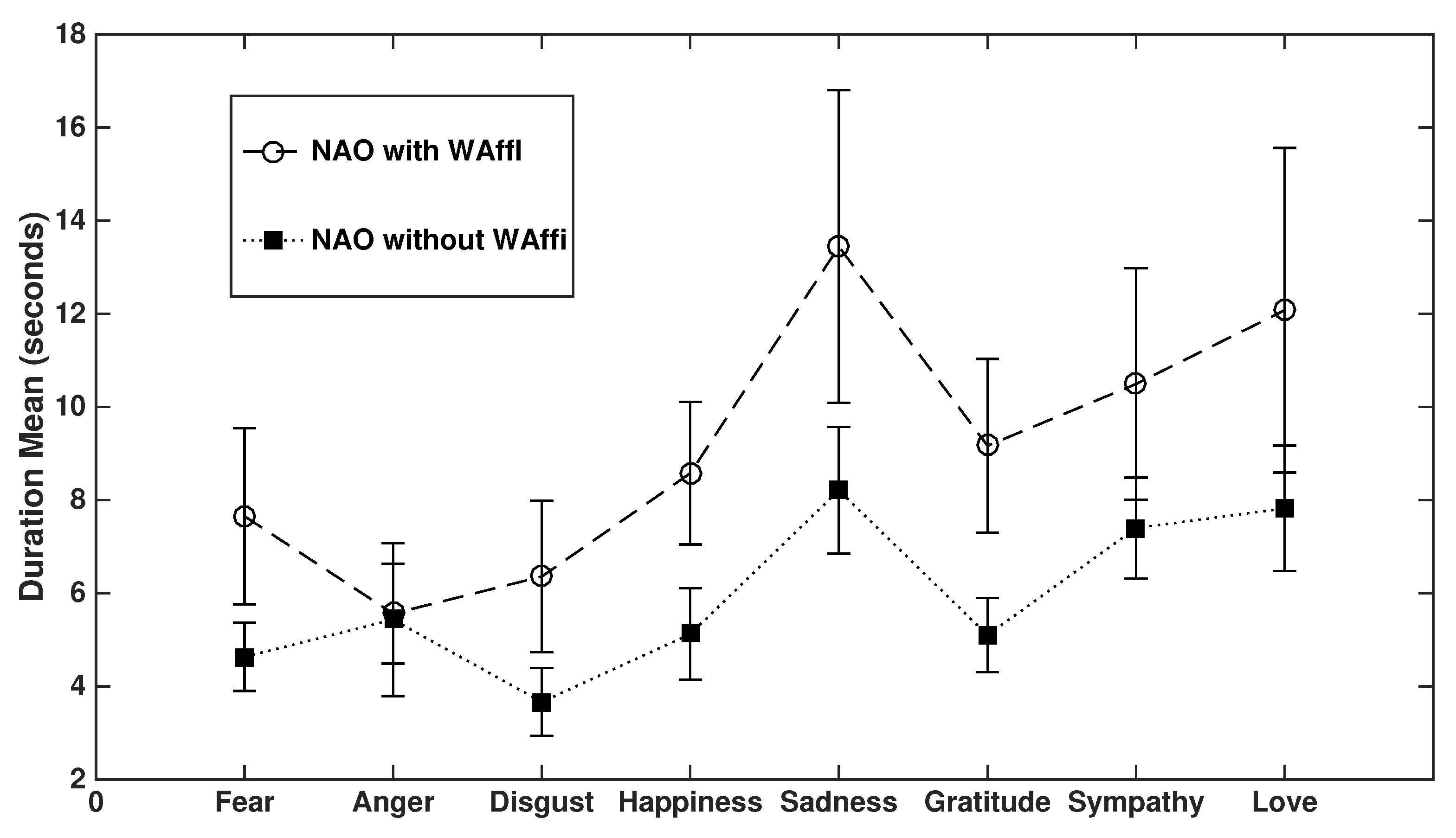

4.1.2. Duration

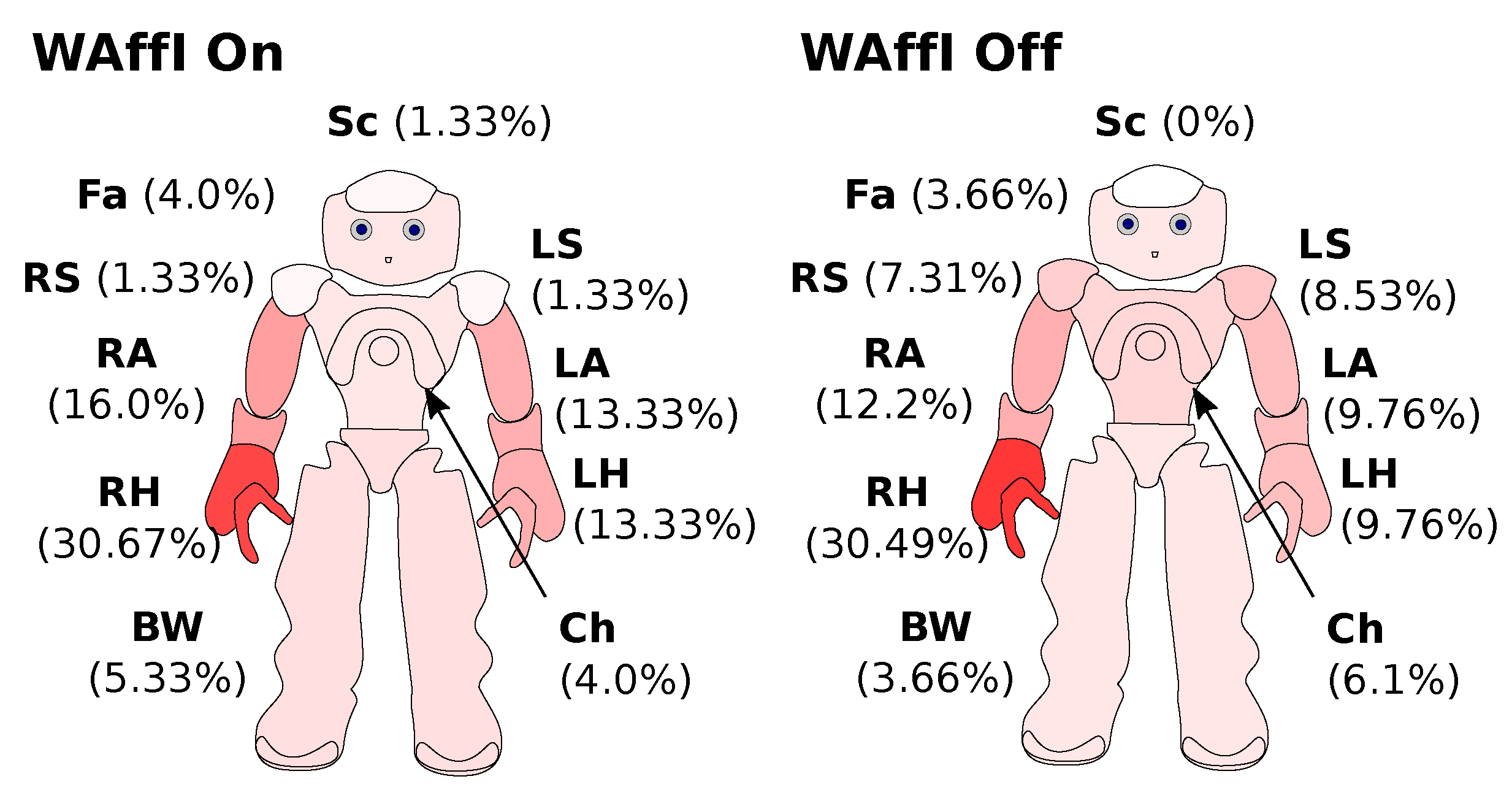

4.1.3. Location

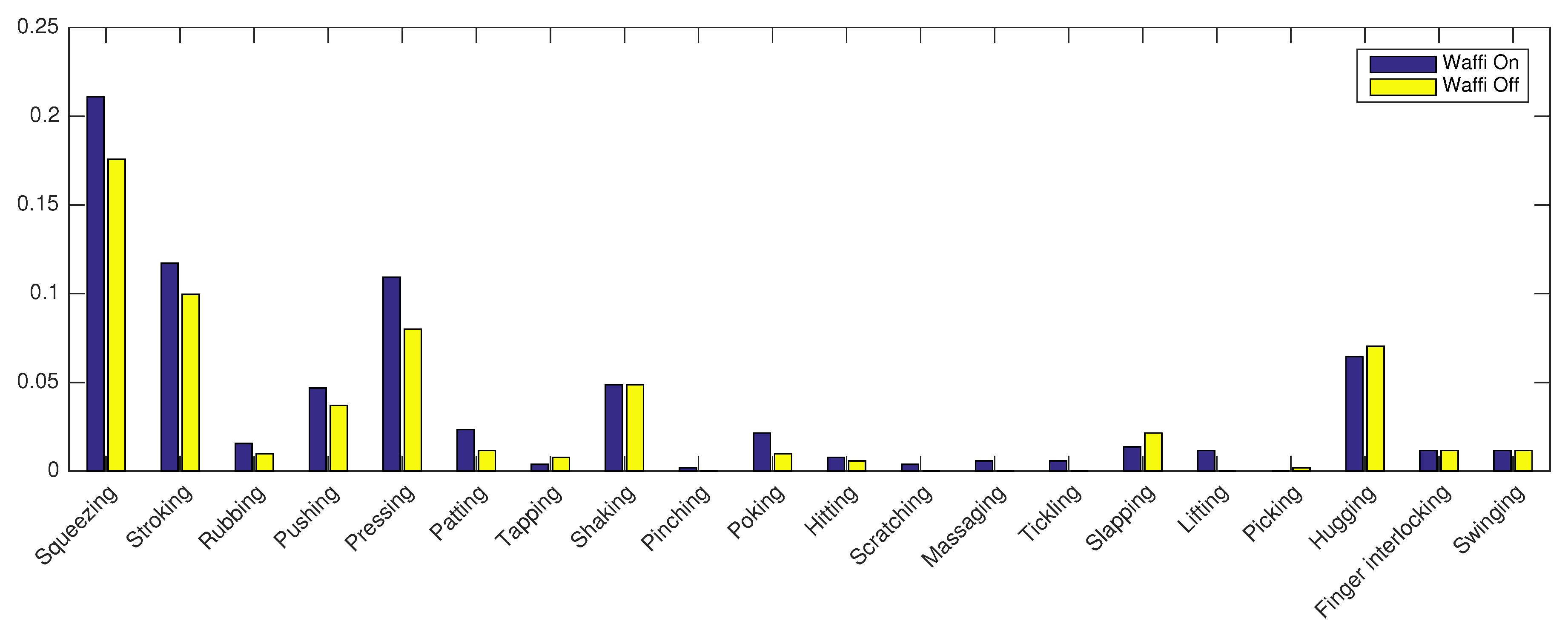

4.1.4. Type

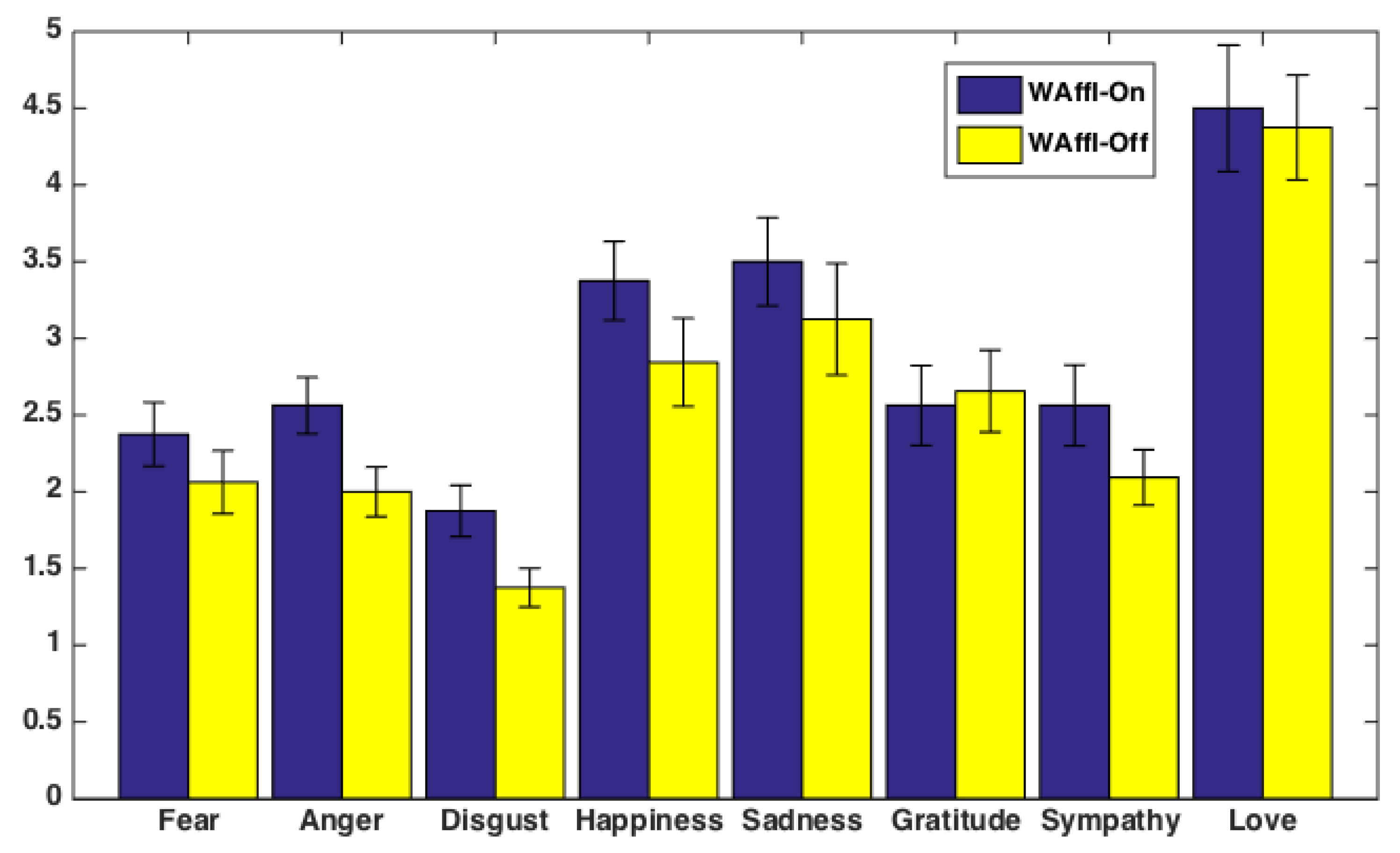

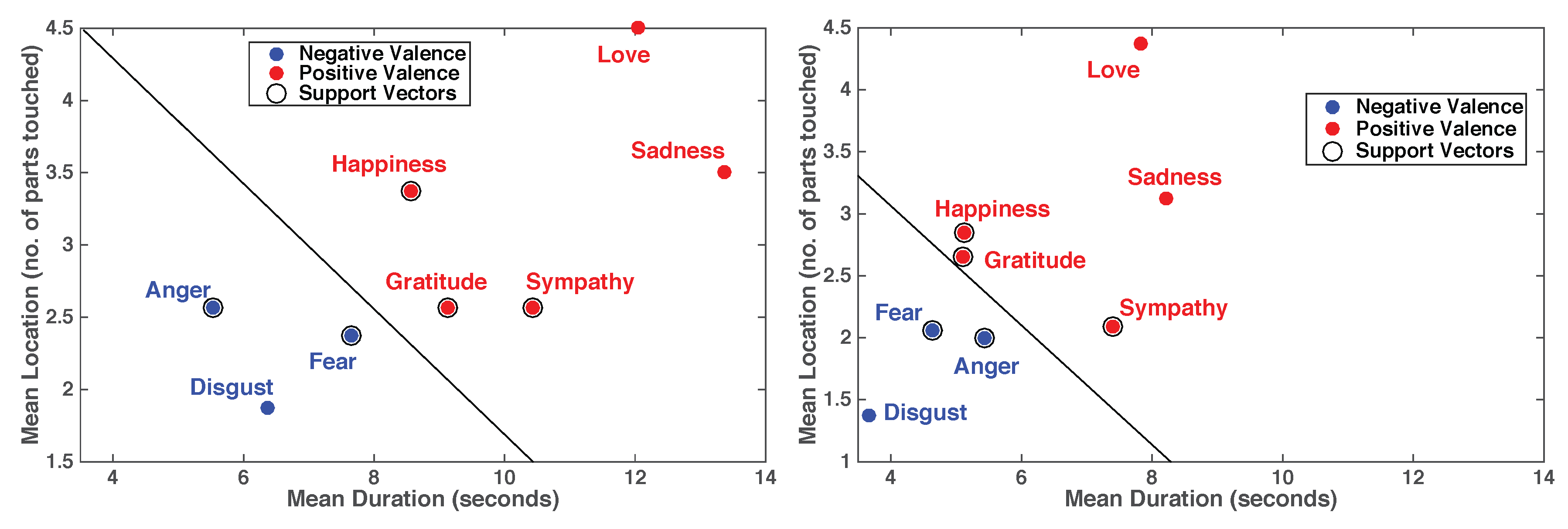

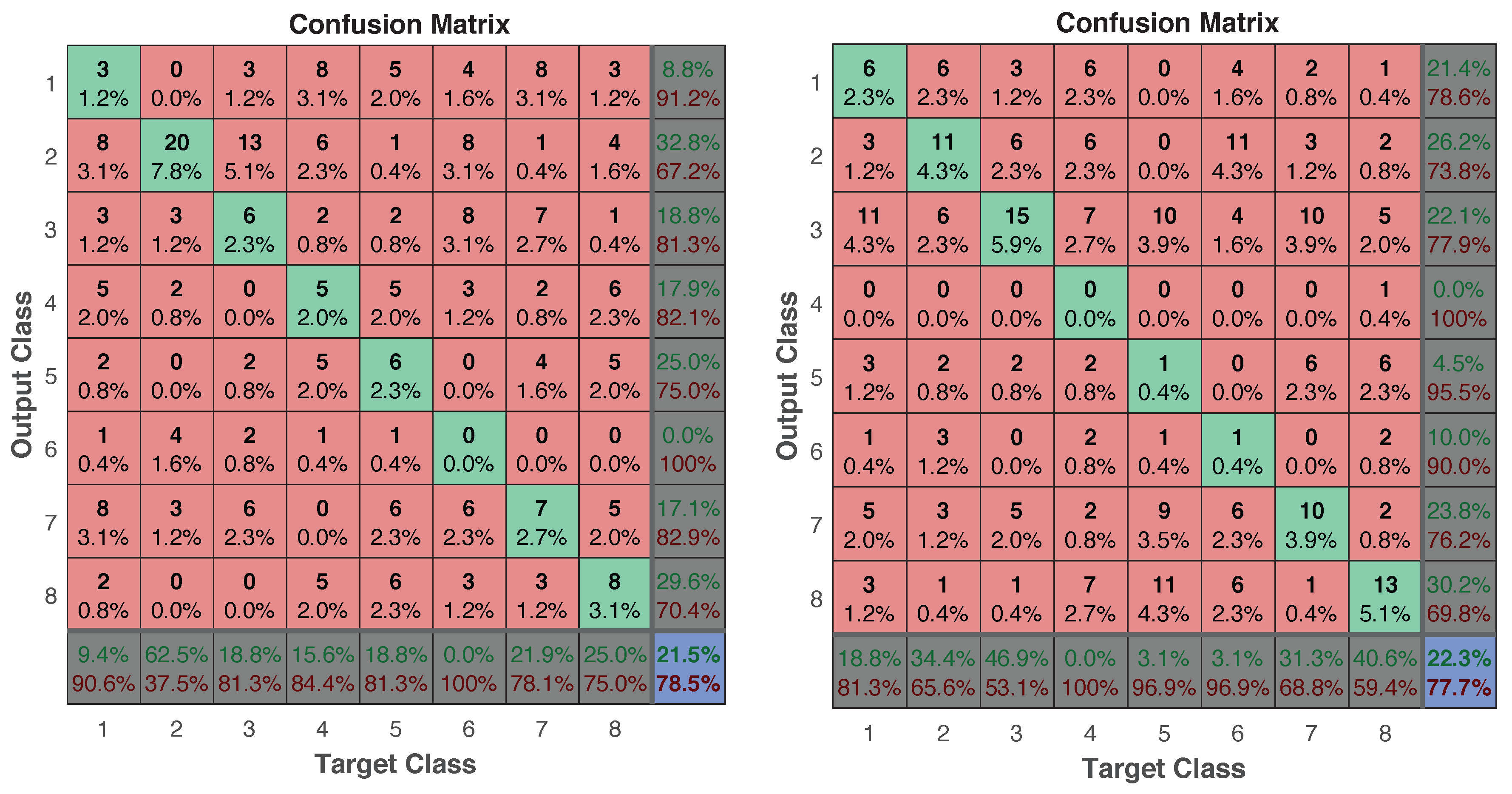

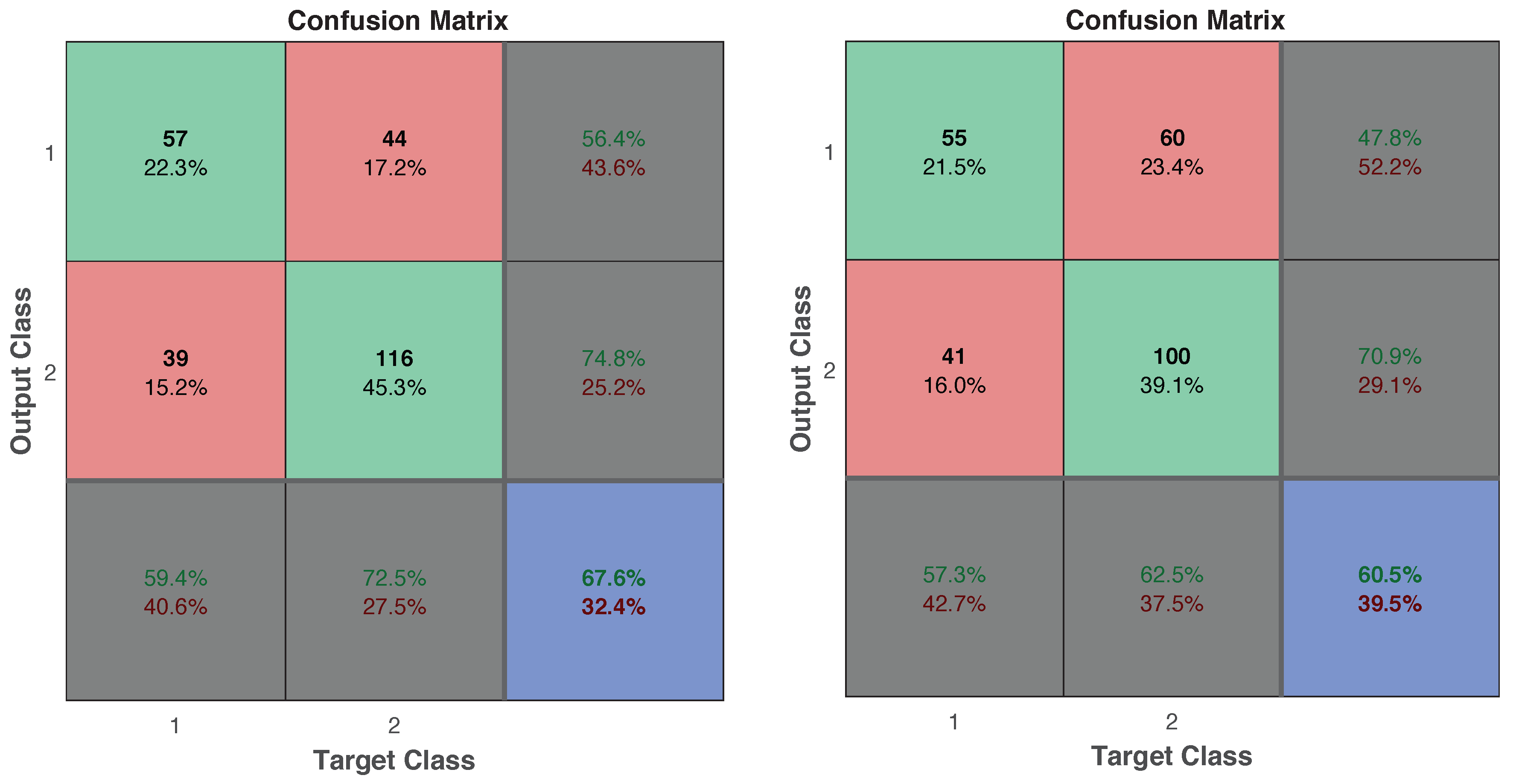

4.2. Decoder Results

5. Discussion

- Participants interacted with different intensities of touch (stronger overall) when the NAO was wearing the WAffI than when it was not.

- Participants interacted for a longer duration when the NAO was wearing the WAffI than when it was not.

- Emotions were most simply and reliably classified when pooled into negatively valenced conveyance-based (Fear, Anger and Disgust) versus positively valenced conveyance-based (Love, Sadness, Sympathy, Gratitude and Happiness) affective states.

- Emotions, when pooled according to valence conveyance, were more accurately classified when the NAO was wearing the WAffI than when it was not.

- Individual emotions were classified less accurately than in the human-human study of Hertenstein et al. [10].

- Individual emotions were marginally less accurately classified when the robot was wearing the WAffI garments than when it was not.

- Anger and Love were the first and third most easily decodable emotions overall in this human-robot study, as was the case in the human-human interaction study of Hertenstein et al. [10].

- Participants touched the NAO robot for a longer duration when conveying Sadness (typically as an attachment-based consoling gesture) than when conveying Disgust (a rejection-based emotion).

- Participants touched more locations on the NAO robot when conveying Love than when conveying any other emotion except Sadness; Sadness was conveyed over more locations than Disgust.

- Squeezing was the most frequently occurring touch type across emotions.

- The left arm and right arm were the most frequently touched locations on the NAO.

- Gratitude was typically conveyed by a handshake gesture.
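The pooling of emotions by valence conveyance described in the findings above can be sketched as a simple label mapping. The grouping of the eight emotions follows the list above; the names `VALENCE`, `pool_valence` and `pooled_accuracy`, and the example label pairs, are hypothetical. The classifier that produces the predicted labels is not shown and is not specified here.

```python
from typing import Iterable, Tuple

# Grouping taken from the list above; the dictionary itself is illustrative.
VALENCE = {
    "Fear": "negative",
    "Anger": "negative",
    "Disgust": "negative",
    "Love": "positive",
    "Sadness": "positive",
    "Sympathy": "positive",
    "Gratitude": "positive",
    "Happiness": "positive",
}


def pool_valence(emotion: str) -> str:
    """Map an individual emotion label to its pooled valence class."""
    return VALENCE[emotion]


def pooled_accuracy(pairs: Iterable[Tuple[str, str]]) -> float:
    """Share of (true, predicted) emotion pairs whose pooled valence matches."""
    pairs = list(pairs)
    if not pairs:
        raise ValueError("at least one (true, predicted) pair is required")
    hits = sum(pool_valence(t) == pool_valence(p) for t, p in pairs)
    return hits / len(pairs)


# Made-up (true, predicted) pairs, not results from the study.
example_pairs = [("Anger", "Fear"), ("Love", "Sadness"), ("Disgust", "Love")]
accuracy = pooled_accuracy(example_pairs)
```

In this sketch a prediction of Fear for a true label of Anger counts as correct at the pooled level, which illustrates why pooled classification can be more reliable than classification of individual emotions.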

Acknowledgments

Author Contributions

Conflicts of Interest

Appendix A. Tables of Touch Location and Touch Type Data

Appendix B. Encoder Statistical Comparisons

References

- Broekens, J.; Heerink, M.; Rosendal, H. Assistive social robots in elderly care: A review. Gerontechnology 2009, 8, 94–103.

- Shamsuddin, S.; Yussof, H.; Ismail, L.; Hanapiah, F.A.; Mohamed, S.; Piah, H.A.; Zahari, N.I. Initial response of autistic children in human-robot interaction therapy with humanoid robot NAO. In Proceedings of the 2012 IEEE 8th International Colloquium on Signal Processing and its Applications (CSPA), Malacca, Malaysia, 23–25 March 2012; pp. 188–193.

- Soler, M.V.; Aguera-Ortiz, L.; Rodriguez, J.O.; Rebolledo, C.M.; Munoz, A.P.; Perez, I.R.; Ruiz, S.F. Social robots in advanced dementia. Front. Aging Neurosci. 2015, 7.

- Dautenhahn, K. Socially intelligent robots: Dimensions of human-robot interaction. Philos. Trans. R. Soc. B Biol. Sci. 2007, 362, 679–704.

- Silvera-Tawil, D.; Rye, D.; Velonaki, M. Interpretation of social touch on an artificial arm covered with an EIT-based sensitive skin. Int. J. Soc. Robot. 2014, 6, 489–505.

- Montagu, A. Touching: The Human Significance of the Skin, 3rd ed.; Harper & Row: New York, NY, USA, 1986.

- Dahiya, R.S.; Metta, G.; Sandini, G.; Valle, M. Tactile sensing-from humans to humanoids. IEEE Trans. Robot. 2010, 26, 1–20.

- Silvera-Tawil, D.; Rye, D.; Velonaki, M. Artificial skin and tactile sensing for socially interactive robots: A review. Robot. Auton. Syst. 2015, 63, 230–243.

- Aldebaran. Aldebaran by SoftBank Group. 43, rue du Colonel Pierre Avia 75015 Paris. Available online: https://www.aldebaran.com (accessed on 9 November 2017).

- Hertenstein, M.J.; Holmes, R.; McCullough, M.; Keltner, D. The communication of emotion via touch. Emotion 2009, 9, 566–573.

- Ekman, P. Facial expression and emotion. Am. Psychol. 1993, 48, 384.

- Scherer, K.R. Vocal communication of emotion: A review of research paradigms. Speech Commun. 2003, 40, 227–256.

- Field, T. Touch; MIT Press: Cambridge, MA, USA, 2014.

- Kosfeld, M.; Heinrichs, M.; Zak, P.J.; Fischbacher, U.; Fehr, E. Oxytocin increases trust in humans. Nature 2005, 435, 673–676.

- Hertenstein, M.J.; Keltner, D.; App, B.; Bulleit, B.A.; Jaskolka, A.R. Touch communicates distinct emotions. Emotion 2006, 6, 528.

- Cooney, M.D.; Nishio, S.; Ishiguro, H. Importance of Touch for Conveying Affection in a Multimodal Interaction with a Small Humanoid Robot. Int. J. Hum. Robot. 2015, 12, 1550002.

- Lee, K.M.; Jung, Y.; Kim, J.; Kim, S.R. Are physically embodied social agents better than disembodied social agents?: The effects of physical embodiment, tactile interaction, and people’s loneliness in human–robot interaction. Int. J. Hum.-Comput. Stud. 2006, 64, 962–973.

- Ogawa, K.; Nishio, S.; Koda, K.; Balistreri, G.; Watanabe, T.; Ishiguro, H. Exploring the natural reaction of young and aged person with Telenoid in a real world. J. Adv. Comput. Intell. Intell. Inform. 2011, 15, 592–597.

- Turkle, S.; Breazeal, C.; Dasté, O.; Scassellati, B. Encounters with Kismet and Cog: Children respond to relational artifacts. Digit. Media Transform. Hum. Commun. 2006, 15, 1–20.

- Stiehl, W.D.; Lieberman, J.; Breazeal, C.; Basel, L.; Lalla, L.; Wolf, M. Design of a therapeutic robotic companion for relational, affective touch. In Proceedings of the IEEE International Workshop Robot and Human Interactive Communication, Nashville, TN, USA, 13–15 August 2005.

- Yohanan, S.; MacLean, K.E. The haptic creature project: Social human-robot interaction through affective touch. In Proceedings of the AISB 2008 Symposium on the Reign of Catz & Dogs: The Second AISB Symposium on the Role of Virtual Creatures in a Computerised Society, Aberdeen, UK, 1–4 April 2008; pp. 7–11.

- Flagg, A.; MacLean, K. Affective touch gesture recognition for a furry zoomorphic machine. In Proceedings of the 7th International Conference on Tangible, Embedded and Embodied Interaction, Barcelona, Spain, 10–13 February 2013; pp. 25–32.

- Kaboli, M.; Walker, R.; Cheng, G. Re-using Prior Tactile Experience by Robotic Hands to Discriminate In-Hand Objects via Texture Properties, Technical Report. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016.

- Yogeswaran, N.; Dang, W.; Navaraj, W.T.; Shakthivel, D.; Khan, S.; Polat, E.O.; Gupta, S.; Heidari, H.; Kaboli, M.; Lorenzelli, L.; et al. New materials and advances in making electronic skin for interactive robots. Adv. Robot. 2015, 29, 1359–1373.

- Kadowaki, A.; Yoshikai, T.; Hayashi, M.; Inaba, M. Development of soft sensor exterior embedded with multi-axis deformable tactile sensor system. In Proceedings of the RO-MAN 2009—The 18th IEEE International Symposium on Robot and Human Interactive Communication, Toyama, Japan, 27 September–2 October 2009; pp. 1093–1098.

- Ziefle, M.; Brauner, P.; Heidrich, F.; Möllering, C.; Lee, K.; Armbrüster, C. Understanding requirements for textile input devices individually tailored interfaces within home environments. In UAHCI/HCII 2014; Stephanidis, C., Antona, M., Eds.; Part II.; LNCS 8515; Springer: Cham, Switzerland, 2014; pp. 587–598.

- Trovato, G.; Do, M.; Terlemez, O.; Mandery, C.; Ishii, H.; Bianchi-Berthouze, N.; Asfour, T.; Takanishi, A. Is hugging a robot weird? Investigating the influence of robot appearance on users’ perception of hugging. In Proceedings of the 2016 IEEE-RAS 16th International Conference on Humanoid Robots (Humanoids), Cancun, Mexico, 15–17 November 2016; pp. 318–323.

- Schmitz, A.; Maggiali, M.; Randazzo, M.; Natale, L.; Metta, G. A prototype fingertip with high spatial resolution pressure sensing for the robot iCub. In Proceedings of the Humanoids 2008-8th IEEE-RAS International Conference on Humanoid Robots, Daejeon, South Korea, 1–3 December 2008; pp. 423–428.

- Maiolino, P.; Maggiali, M.; Cannata, G.; Metta, G.; Natale, L. A flexible and robust large scale capacitive tactile system for robots. IEEE Sens. J. 2013, 13, 3910–3917.

- Petreca, B.; Bianchi-Berthouze, N.; Baurley, S.; Watkins, P.; Atkinson, D. An Embodiment Perspective of Affective Touch Behaviour in Experiencing Digital Textiles. In Proceedings of the 2013 Humaine Association Conference on Affective Computing and Intelligent Interaction (ACII), Geneva, Switzerland, 2–5 September 2013; pp. 770–775.

- Katterfeldt, E.-S.; Dittert, N.; Schelhowe, H. EduWear: Smart textiles as ways of relating computing technology to everyday life. In Proceedings of the 8th International Conference on Interaction Design and Children, Como, Italy, 3–5 June 2009; pp. 9–17.

- Tao, X. Smart technology for textiles and clothing—Introduction and overview. In Smart Fibres, Fabrics and Clothing; Tao, X., Ed.; Woodhead Publishing: Cambridge, UK, 2001; pp. 1–6.

- Rawassizadeh, R.; Price, B.A.; Petre, M. Wearables: Has the age of smartwatches finally arrived? Commun. ACM 2015, 58, 45–47.

- Swan, M. Sensor mania! the internet of things, wearable computing, objective metric and the quantified self 2.0. J. Sens. Actuator Netw. 2012, 1, 217–253.

- Stoppa, M.; Chiolerio, A. Wearable electronics and smart textiles: A critical review. Sensors 2014, 14, 11957–11992.

- Pantelopoulos, A.; Bourbakis, N.G. A survey on wearable sensor-based systems for health monitoring and prognosis. IEEE Trans. Syst. Man Cybern. Part C Appl. Rev. 2010, 40, 1–12.

- Cherenack, K.; van Pieterson, L. Smart textiles: Challenges and opportunities. J. Appl. Phys. 2012, 112, 091301.

- Carvalho, H.; Catarino, A.P.; Rocha, A.; Postolache, O. Health Monitoring using Textile Sensors and Electrodes: An Overview and Integration of Technologies. In Proceedings of the IEEE MeMeA 2014—IEEE International Symposium on Medical Measurements and Applications, Lisboa, Portugal, 1–12 June 2014.

- Cooney, M.D.; Nishio, S.; Ishiguro, H. Recognizing affection for a touch-based interaction with a humanoid robot. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Vilamoura, Algarve, Portugal, 7–12 October 2012.

- Jung, M.M.; Poppe, R.; Poel, M.; Heylen, D.K. Touching the Void-Introducing CoST: Corpus of Social Touch. In Proceedings of the 16th International Conference on Multimodal Interaction, Istanbul, Turkey, 12–16 November 2014; pp. 120–127.

- Jung, M.M.; Cang, X.L.; Poel, M.; MacLean, K.E. Touch Challenge’15: Recognizing Social Touch Gestures. In Proceedings of the 2015 ACM on International Conference on Multimodal Interaction, Seattle, WA, USA, 9–13 November 2015; pp. 387–390.

- Andreasson, R.; Alenljung, B.; Billing, E.; Lowe, R. Affective Touch in Human–Robot Interaction: Conveying Emotion to the Nao Robot. Int. J. Soc. Robot. 2017, 23, 1–19.

- Li, C.; Bredies, K.; Lund, A.; Nierstrasz, V.; Hemeren, P.; Högberg, D. kNN based Numerical Hand Posture Recognition using a Smart Textile Glove. In Proceedings of the Fifth International Conference on Ambient Computing, Applications, Services and Technologies, Nice, France, 19–24 July 2015.

- Barros, P.; Wermter, S. Developing Crossmodal Expression Recognition Based on a Deep Neural Model. In Special Issue on Grounding Emotions in Robots: Embodiment, Adaptation, Social Interaction. Adapt. Behav. 2016, 24, 373–396.

- Oatley, K.; Johnson-Laird, P.N. Towards a cognitive theory of emotions. Cogn. Emot. 1987, 1, 29–50.

- Oatley, K.; Johnson-Laird, P.N. Cognitive approaches to emotions. Trends Cogn. Sci. 2014, 18, 134–140.

- Bicho, E.; Erlhagen, W.; Louro, L.; e Silva, E.C. Neuro-cognitive mechanisms of decision making in joint action: A human–robot interaction study. Hum. Mov. Sci. 2011, 30, 846–868.

- Michael, J. Shared emotions and joint action. Rev. Philos. Psychol. 2011, 2, 355–373.

- Silva, R.; Louro, L.; Malheiro, T.; Erlhagen, W.; Bicho, E. Combining intention and emotional state inference in a dynamic neural field architecture for human-robot joint action, in Special Issue on Grounding Emotions in Robots: Embodiment, Adaptation, Social Interaction. Adapt. Behav. 2016, 24, 350–372.

- Gao, Y.; Bianchi-Berthouze, N.; Meng, H. What does touch tell us about emotions in touchscreen-based gameplay? ACM Trans. Comput.-Hum. Interact. (TOCHI) 2012, 19, 31.

- Lowe, R.; Sandamirskaya, Y.; Billing, E. A neural dynamic model of associative two-process theory: The differential outcomes effect and infant development. In Proceedings of the 4th International Conference on Development and Learning and on Epigenetic Robotics, Genoa, Italy, 13–16 October 2014; pp. 440–447.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Lowe, R.; Andreasson, R.; Alenljung, B.; Lund, A.; Billing, E. Designing for a Wearable Affective Interface for the NAO Robot: A Study of Emotion Conveyance by Touch. Multimodal Technol. Interact. 2018, 2, 2. https://doi.org/10.3390/mti2010002