Three-Dimensional, Kinematic, Human Behavioral Pattern-Based Features for Multimodal Emotion Recognition

Abstract

1. Introduction

2. Related Work

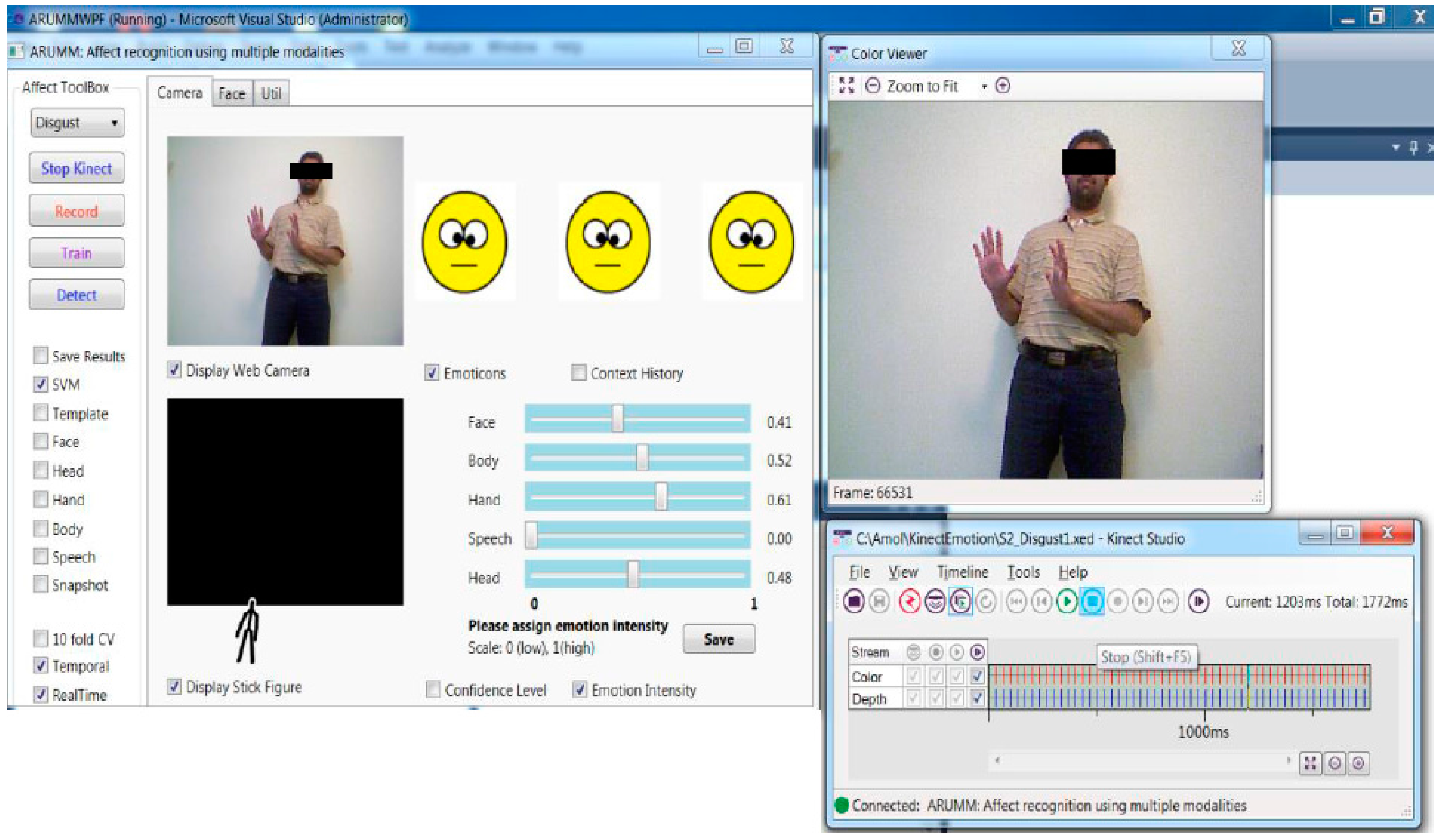

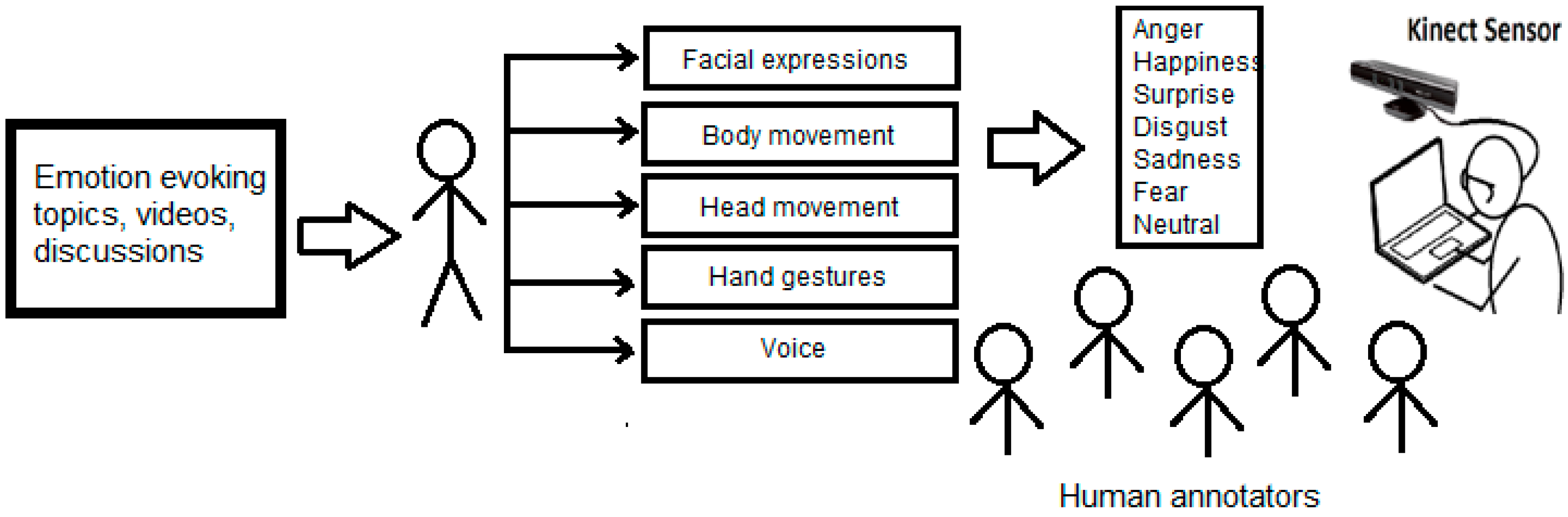

3. System Overview

4. Experimental Setup

4.1. Participant Details

4.2. Data Collection

- Discussion of the presidential election (Anger, Disgust, Sadness, Happiness)

- Discussion of the NBA finals (Anger, Disgust, Sadness, Happiness)

- Discussion of the NFL Super Bowl (Anger, Disgust, Sadness, Happiness)

- Discussion of Star Wars movie (Anger, Disgust, Sadness, Happiness)

- Reaction to viral cat videos (Happiness)

- Reaction to funny viral videos (Happiness)

- Reaction to disgusting viral videos (Disgust)

- Reaction to viral music videos (Disgust, Happiness, Anger)

- Reaction to videos on violence (Disgust, Sadness, Anger)

- Reaction to sad news (Sadness, Anger)

- Reaction to shocking news (Sadness, Surprise, Anger)

- Reaction to seeing an insect (Fear, Surprise)

4.3. Annotation Process

5. Methods

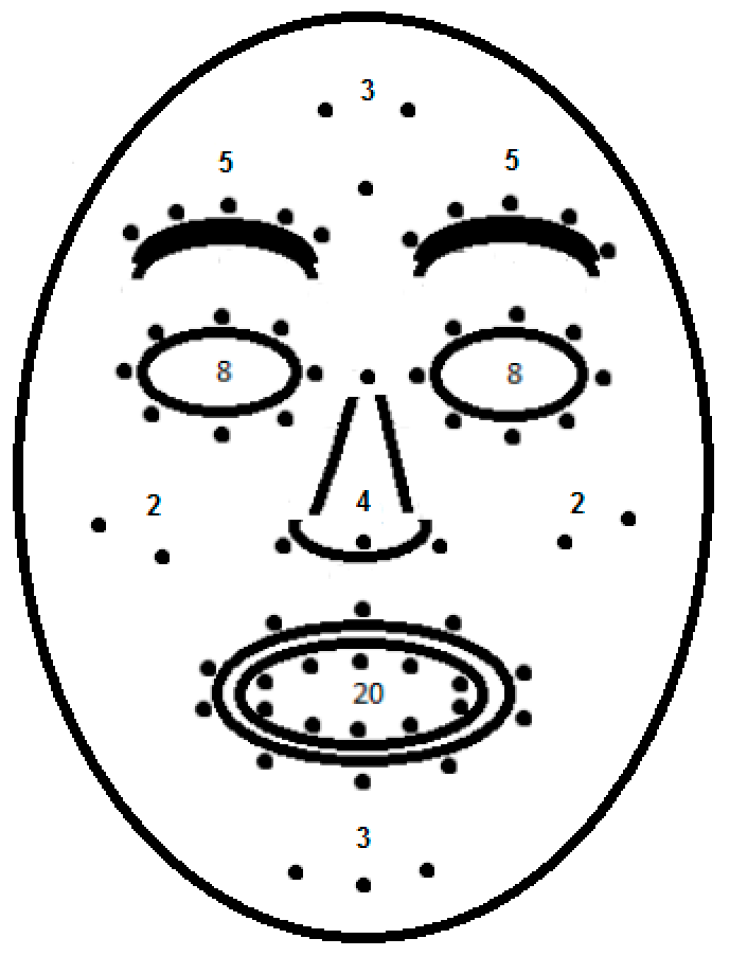

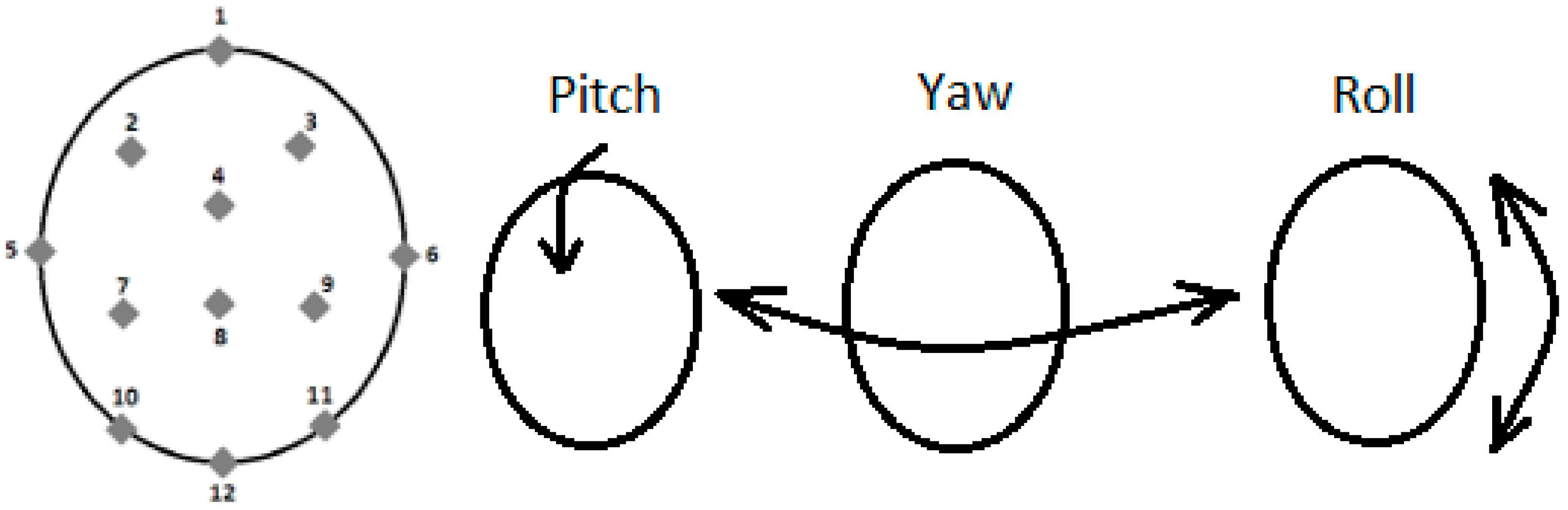

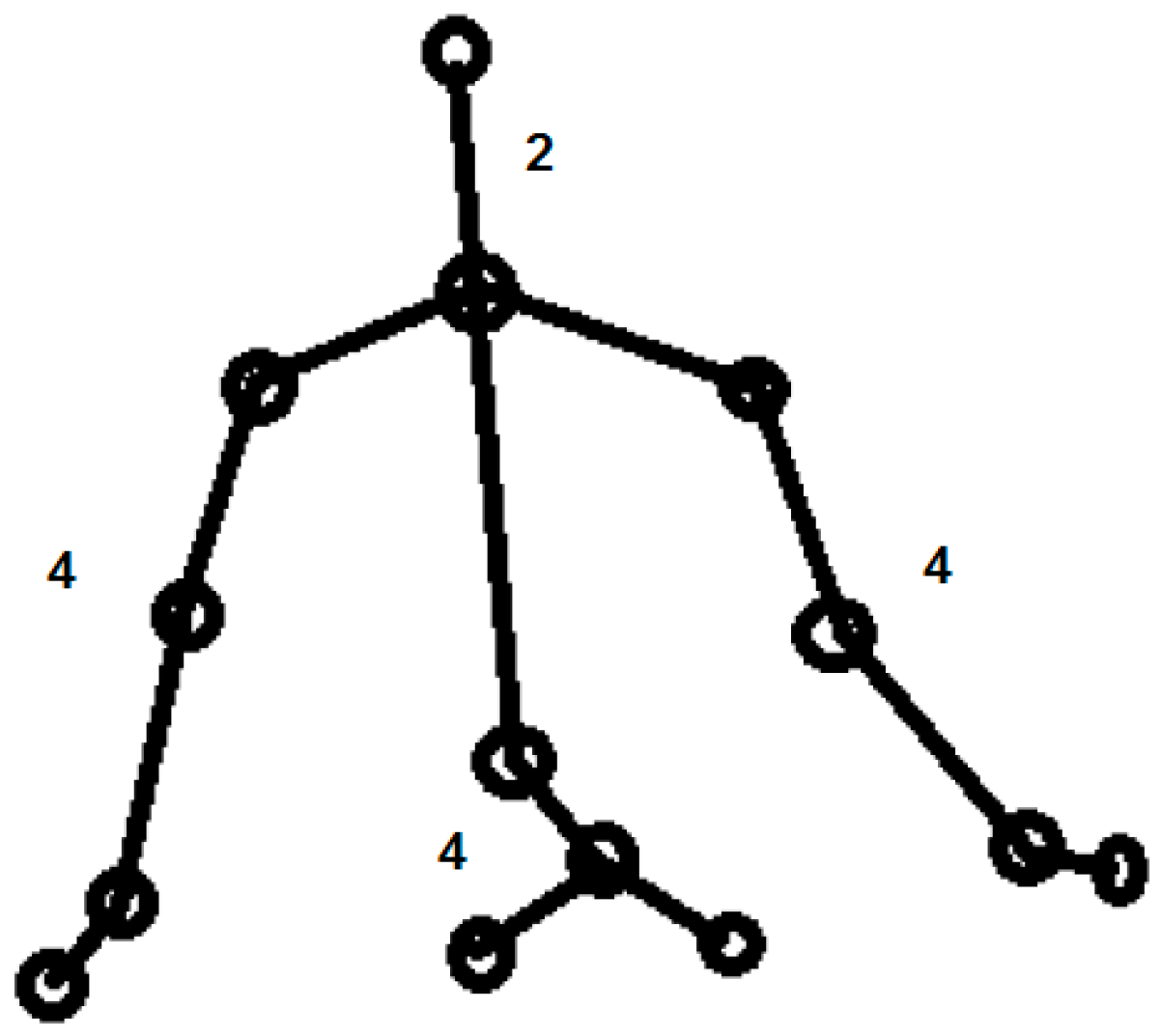

5.1. Three-Dimensional and Kinematic Feature Extraction

5.2. Human Behavioral Features

5.3. Classification Process

6. Results and Discussion

7. Threats to Validity

8. Conclusions

Conflicts of Interest

Appendix A

| Rule Descriptor | 0 | 1 | 2 | 3 | 4 | 5 | 6 |

|---|---|---|---|---|---|---|---|

| Units: angles are in degrees; distances are in normalized pixels. Movement frequencies for the head, joints, and facial parts count the number of times a tracked joint or feature point moves along, or crosses, the reference x, y, or z axis per video segment. | | | | | | | |

| Angle of left elbow = T | 43 | 172 | 31 | 16 | 32 | 124 | 187 |

| Angle of right elbow = T | 45 | 178 | 33 | 11 | 36 | 121 | 185 |

| Angle between left shoulder and arm = T | 37 | 93 | 122 | 6 | 212 | 254 | 276 |

| Angle between right shoulder and arm = T | 39 | 87 | 126 | 7 | 214 | 257 | 271 |

| Angle of spine = T (x, y, z axis) | 92, 2, 89 | 113, 2, 94 | 101, 1, 96 | 105, 2, 93 | 108, 2, 97 | 85, 3, 96 | 92, 1, 91 |

| Angle of head = T (x, y, z axis) | 90, 2, 85 | 92, 3, 114 | 91, 2, 106 | 89, 4, 107 | 91, 2, 102 | 93, 1, 86 | 89, 1, 90 |

| Y (wrist)–Y (elbow) | 0.12 | 0.13 | 0.09 | 0.12 | 0.15 | 0.13 | 0.16 |

| Y (elbow)–Y(shoulder) | 0.07 | 0.09 | 0.08 | 0.10 | 0.06 | 0.08 | 0.07 |

| X (wrist)–X (elbow) | 0.6 | 0.7 | 0.6 | 0.5 | 0.3 | 0.2 | 0.2 |

| X (elbow)–X (shoulder) | 0.12 | 0.14 | 0.16 | 0.6 | 0.8 | 0.6 | 0.4 |

| Z (wrist)–Z (elbow) | 0.15 | 0.16 | 0.11 | 0.7 | 0.5 | 0.7 | 0.5 |

| Z co-ordinate (elbow)–Z (shoulder) | 0.14 | 0.17 | 0.13 | 0.6 | 0.6 | 0.8 | 0.7 |

| X co-ordinate (wrist)–X (shoulder) | 0.19 | 0.18 | 0.16 | 0.8 | 0.9 | 0.6 | 0.6 |

| Frequency of waving hand | 5 | 3 | 2 | 2 | 2 | 2 | 0 |

| Frequency of head nod (pitch) | 6 | 4 | 2 | 1 | 2 | 1 | 0 |

| Frequency of shaking head sideways (yaw) | 4 | 3 | 2 | 1 | 2 | 1 | 0 |

| Frequency of head bob (roll) | 3 | 3 | 2 | 2 | 2 | 1 | 0 |

| Frequency of body forward movement | 4 | 3 | 2 | 1 | 3 | 1 | 0 |

| Frequency of body backward movement | 4 | 3 | 2 | 1 | 2 | 1 | 0 |

| Frequency of sideways movement | 3 | 3 | 2 | 1 | 2 | 2 | 1 |

| Frequency of wrist movement (x, y, z axis) | 6,3,3 | 4,2,3 | 3,3,2 | 1,1,1 | 2,1,1 | 1,1,2 | 0,0,1 |

| Frequency of elbow movement (x, y, z axis) | 5,2,2 | 3,3,2 | 2,3,1 | 2,1,2 | 2,1,1 | 1,1,1 | 0,0,1 |

| Frequency of hip movement (x, y, z axis) | 3,2,1 | 3,1,1 | 1,2,1 | 1,1,2 | 2,1,2 | 1,0,1 | 0,1,1 |

| Frequency of forehead movement (x, y, z axis) | 3,2,3 | 3,3,3 | 2,3,2 | 1,1,2 | 2,2,1 | 1,1,1 | 0,0,0 |

| Frequency of spine tilting (x, y, z axis) | 3,1,1 | 3,1,1 | 2,2,1 | 1,2,1 | 3,1,1 | 1,1,1 | 0,0,1 |

| Frequency of X (shoulder) movement | 3 | 3 | 2 | 1 | 2 | 1 | 0 |

| Distance between left wrist and head top | 0.13 | 0.15 | 0.08 | 0.23 | 0.08 | 0.36 | 0.42 |

| Distance between right wrist and head top | 0.14 | 0.18 | 0.09 | 0.24 | 0.07 | 0.38 | 0.44 |

| X (elbow)–X (spine) | 0.25 | 0.12 | 0.14 | 0.18 | 0.15 | 0.07 | 0.51 |

| Y (elbow)–Y (spine) | 0.18 | 0.19 | 0.22 | 0.24 | 0.21 | 0.23 | 0.22 |

| Distance between eyebrow and eyes | 0.003 | 0.002 | 0.004 | 0.001 | 0.005 | 0.001 | 0.004 |

| Distance between upper and lower lip | 0.005 | 0.006 | 0.005 | 0.003 | 0.005 | 0.002 | 0.002 |

| Distance between nose tip and upper lip | 0.001 | 0.003 | 0.003 | 0.002 | 0.002 | 0.003 | 0.001 |

| Distance between corners of lip | 0.02 | 0.04 | 0.02 | 0.02 | 0.03 | 0.03 | 0.03 |

| Distance between upper and lower eyelid | 0.012 | 0.015 | 0.14 | 0.14 | 0.13 | 0.12 | 0.15 |

| Distance between right cheek and lip corner | 0.07 | 0.08 | 0.04 | 0.05 | 0.04 | 0.04 | 0.04 |

| Distance between left cheek and lip corner | 0.04 | 0.06 | 0.04 | 0.04 | 0.04 | 0.04 | 0.04 |

| Distance between upper lip and forehead | 0.03 | 0.06 | 0.05 | 0.05 | 0.03 | 0.05 | 0.15 |

| Distance between left and right eyebrow | 0.02 | 0.02 | 0.02 | 0.03 | 0.03 | 0.03 | 0.03 |

| Distance between nose tip and forehead | 0.03 | 0.05 | 0.05 | 0.05 | 0.03 | 0.05 | 0.15 |

| Frequency of movement of lip corners (x, y, z axis) | 3, 3, 2 | 2, 2, 2 | 2, 3, 1 | 1, 2, 1 | 2, 2, 1 | 1, 2, 1 | 0, 0, 0 |

| Frequency of cheek movement (x, y, z axis) | 3, 2, 2 | 2, 3, 1 | 2, 2, 1 | 2, 2, 1 | 2, 1, 1 | 2, 2, 1 | 0, 0, 0 |

| Frequency of eyebrow movement (x, y, z axis) | 2, 3, 2 | 2, 3, 3 | 2, 3, 2 | 1, 2, 2 | 3, 2, 1 | 1, 2, 1 | 0, 0, 0 |

| Frequency of upper lip movement (x, y, z axis) | 2, 3, 2 | 3, 3, 2 | 2, 2, 2 | 1, 3, 1 | 2, 3, 1 | 1, 2, 1 | 0, 0, 0 |

| Frequency of lower lip movement (x, y, z axis) | 2, 3, 2 | 2, 3, 2 | 2, 3, 3 | 1, 3, 1 | 2, 2, 1 | 1, 2, 1 | 0, 0, 0 |
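The angle and frequency descriptors in the table above can be illustrated with a minimal Python sketch. It assumes each tracked joint is available as an (x, y, z) tuple per frame; the function names `joint_angle` and `axis_crossings` are hypothetical and are not taken from the article. The angle here is the ordinary angle between two limb vectors, which lies in [0, 180] degrees; the appendix lists values above 180, so the author's angle convention evidently differs from this one.

```python
import math

def joint_angle(a, b, c):
    """Angle in degrees at joint b formed by the 3D points a-b-c.

    Example: a = shoulder, b = elbow, c = wrist gives an elbow angle.
    The result is always in [0, 180] (assumed convention, not the article's).
    """
    ba = [a[i] - b[i] for i in range(3)]
    bc = [c[i] - b[i] for i in range(3)]
    dot = sum(x * y for x, y in zip(ba, bc))
    norm = math.sqrt(sum(x * x for x in ba)) * math.sqrt(sum(x * x for x in bc))
    if norm == 0:
        raise ValueError("degenerate joint: two points coincide")
    # Clamp to guard against rounding slightly outside [-1, 1].
    cos_theta = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_theta))

def axis_crossings(values, reference=0.0):
    """Count how often a coordinate crosses a reference value in one segment.

    `values` holds one coordinate (x, y, or z) of a tracked point per frame.
    Frames that sit exactly on the reference are skipped.
    """
    count = 0
    previous_side = None
    for v in values:
        delta = v - reference
        if delta == 0:
            continue
        side = delta > 0
        if previous_side is not None and side != previous_side:
            count += 1
        previous_side = side
    return count

if __name__ == "__main__":
    shoulder, elbow, wrist = (0.0, 1.0, 0.0), (0.0, 0.0, 0.0), (1.0, 0.0, 0.0)
    print(joint_angle(shoulder, elbow, wrist))
    print(axis_crossings([0.1, -0.2, 0.3, 0.4, -0.1]))
```

A crossing count of this kind is one plausible reading of "crosses the axis per video segment"; counting movements along an axis would additionally need a displacement threshold, which the table does not specify.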


| Angle-, Frequency-, and Distance-Based List of Features | |

|---|---|

| Angle of left elbow = T | Shaking head sideways (yaw) frequency |

| Angle of right elbow = T | Head bob (roll) frequency |

| Angle (left shoulder and arm) = T | Body forward movement frequency |

| Angle (right shoulder and arm) = T | Body backward movement frequency |

| Angle of spine = T (x, y, z axis) | Sideways movement frequency |

| Angle of head = T (x, y, z axis) | Wrist movement (x, y, z axis) frequency |

| Y (wrist)–Y (elbow) | Elbow movement (x, y, z axis) frequency |

| Y (elbow)–Y (shoulder) | Hip movement (x, y, z axis) frequency |

| X (wrist)–X (elbow) | Forehead movement (x, y, z axis) frequency |

| X (elbow)–X (shoulder) | Spine tilting (x, y, z axis) frequency |

| Z (wrist)–Z (elbow) | X (shoulder) movement frequency |

| Z coordinate (elbow)–Z (shoulder) | Waving hand frequency |

| X coordinate (wrist)–X (shoulder) | Head nod (pitch) frequency |

| X (elbow)–X (spine) | Eyebrow movement (x, y, z axis) frequency |

| Y (elbow)–Y (spine) | Upper lip movement (x, y, z axis) frequency |

| Distance between right cheek and lip corner | Lower lip movement (x, y, z axis) frequency |

| Distance between nose tip and upper lip | Cheek movement (x, y, z axis) frequency |

| Distance between corners of lip | Lip corner movement (x, y, z axis) frequency |

| Distance between upper and lower eyelid | Distance between eyebrow and eyes |

| Distance between left cheek and lip corner | Distance between upper and lower lip |

| Distance between nose tip and forehead | Distance between left wrist and head top |

| Distance between upper lip and forehead | Distance between right wrist and head top |

| Distance between left and right eyebrow | |

| Emotion | Action | Fleiss Kappa | Agreement Level |
|---|---|---|---|
| Anger | Throwing an object | 1 | Perfect |
| Anger | Punching | 1 | Perfect |
| Anger | Holding head in frustration | 0.8 | Substantial |
| Anger | Folding hands | 0.6 | Moderate |
| Anger | Holding hands on waist | 0.6 | Moderate |
| Anger | Moving forward threateningly | 0.8 | Substantial |
| Anger | Throwing a fit | 0.8 | Substantial |
| Anger | Raising arms in rage | 0.6 | Moderate |
| Anger | Moving around aggressively | 0.6 | Moderate |
| Anger | Shouting in rage | 1 | Perfect |
| Anger | Pointing a finger at someone | 0.6 | Moderate |
| Anger | Threatening someone | 0.8 | Substantial |
| Anger | Scowling | 1 | Perfect |
| Happiness | Jumping for joy | 1 | Perfect |
| Happiness | Fist pumping in joy | 1 | Perfect |
| Happiness | Raising arms in air in happiness | 1 | Perfect |
| Happiness | Laughing | 1 | Perfect |
| Happiness | Smiling | 1 | Perfect |
| Disgust | Holding nose | 1 | Perfect |
| Disgust | Looking down, expressing disgust | 1 | Perfect |
| Disgust | Moving back in disgust | 0.6 | Moderate |
| Sadness | Looking down, leaning against wall | 0.8 | Substantial |
| Sadness | Looking down with hands on waist | 0.6 | Moderate |
| Sadness | Looking down with hands folded | 0.8 | Substantial |
| Sadness | Crying | 1 | Perfect |
| Sadness | Toothache | 0.8 | Substantial |
| Sadness | Head hurting | 0.8 | Substantial |
| Surprise | Raising arms in surprise | 0.8 | Substantial |
| Surprise | Moving back in surprise | 0.8 | Substantial |
| Surprise | Walking forward and getting startled | 1 | Perfect |
| Surprise | Holding arms near chest in surprise | 0.8 | Substantial |
| Surprise | Covering mouth with hands | 0.8 | Substantial |
| Fear | Moving backwards, trying to evade | 1 | Perfect |
| Fear | Moving sideways | 0.8 | Substantial |
| Fear | Looking up and running away | 0.8 | Substantial |
| Fear | Getting rid of an insect on shirt | 0.8 | Substantial |
| Neutral | Standing straight with no facial expression | 1 | Perfect |

| Action | Emotion | Fleiss Kappa | Agreement Level |

|---|---|---|---|

| Crouch or hide | Fear | 1 | Perfect |

| Shoot with a pistol | Anger | 0.8 | Substantial |

| Throw an object | Anger | 0.8 | Substantial |

| Change weapon | Anger | 0.2 | Slight |

| Kick to attack an enemy | Anger | 1 | Perfect |

| Put on night vision goggles | Neutral | 0.4 | Fair |

| Had enough gesture | Anger | 0.6 | Moderate |

| Music-based gestures | Neutral | 0.4 | Fair |

| Action | Emotion | Fleiss Kappa | Agreement Level |

|---|---|---|---|

| Balance, climb ladder, climb up | Neutral | 1 | Perfect |

| Duck | Fear | 0.8 | Substantial |

| Hop | Surprise | 0.6 | Moderate |

| Kick | Anger | 0.8 | Substantial |

| Leap | Surprise | 0.6 | Moderate |

| Punch | Anger | 1 | Perfect |

| Run | Fear | 0.8 | Substantial |

| Step back | Fear | 0.8 | Substantial |

| Step back | Disgust | 0.6 | Moderate |

| Step front | Anger | 0.6 | Moderate |

| Step left | Disgust | 0.8 | Substantial |

| Step right | Disgust | 0.8 | Substantial |

| Turn left, Turn right, Vault | Neutral | 1 | Perfect |

| Action | Emotion | Fleiss Kappa | Agreement Level |

|---|---|---|---|

| Sit down, stand up | Neutral | 1 | Perfect |

| Hand clapping | Happiness | 1 | Perfect |

| Hand waving | Happiness | 0.6 | Moderate |

| Fist pump gesture | Happiness | 1 | Perfect |

| Boxing | Anger | 1 | Perfect |

| Toss a paper in frustration | Anger | 0.8 | Substantial |
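The annotation tables above pair each Fleiss kappa value with an agreement level. As a rough illustration, the sketch below computes Fleiss' kappa from a matrix of rating counts and maps a kappa value to a band. The band boundaries follow the commonly used Landis and Koch scale, with the top band named "Perfect" to match the tables here (Landis and Koch call it "almost perfect"). The function names and the toy rating matrix are assumptions for illustration, not data from the study.

```python
def fleiss_kappa(ratings):
    """Fleiss' kappa for ratings[i][j] = raters assigning item i to category j.

    Every item must be rated by the same number of raters (at least two).
    """
    n_items = len(ratings)
    n_raters = sum(ratings[0])
    if n_raters < 2 or any(sum(row) != n_raters for row in ratings):
        raise ValueError("each item needs the same number (>= 2) of ratings")
    n_categories = len(ratings[0])

    # Share of all assignments that went to each category.
    p_j = [
        sum(row[j] for row in ratings) / (n_items * n_raters)
        for j in range(n_categories)
    ]
    # Observed agreement per item, then averaged over items.
    p_i = [
        (sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
        for row in ratings
    ]
    p_bar = sum(p_i) / n_items
    p_expected = sum(p * p for p in p_j)
    if p_expected == 1:
        raise ValueError("kappa is undefined when only one category is used")
    return (p_bar - p_expected) / (1 - p_expected)

def agreement_level(kappa):
    """Map a kappa value to the band names used in the tables above."""
    if kappa < 0:
        return "Poor"
    if kappa <= 0.20:
        return "Slight"
    if kappa <= 0.40:
        return "Fair"
    if kappa <= 0.60:
        return "Moderate"
    if kappa <= 0.80:
        return "Substantial"
    return "Perfect"

if __name__ == "__main__":
    # Toy example: 2 clips, 3 raters, 2 emotion categories (hypothetical data).
    print(fleiss_kappa([[3, 0], [0, 3]]))
    print(agreement_level(0.6))
```

The mapping reproduces the pairings listed in the tables (0.2 Slight, 0.4 Fair, 0.6 Moderate, 0.8 Substantial, 1 Perfect).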

| Modality | C | Gamma |

|---|---|---|

| Head | 1 | 1/12 |

| Face | 1 | 1/60 |

| Hand | 1 | 1/8 |

| Body | 1 | 1/14 |

| Multimodal | 1 | 0.09 |

| Audio | 1 | 0.009 |
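C and gamma are the usual hyperparameters of a support vector machine with a radial basis function (RBF) kernel. The table does not name the kernel, so the sketch below assumes an RBF kernel, K(x, y) = exp(-gamma * ||x - y||^2), and only shows how the listed gamma values change the similarity between two feature vectors. The dictionary name `GAMMA`, the helper `rbf_kernel`, and the example vectors are hypothetical.

```python
import math

# Gamma values copied from the table above; C is 1 for every modality.
GAMMA = {
    "Head": 1 / 12,
    "Face": 1 / 60,
    "Hand": 1 / 8,
    "Body": 1 / 14,
    "Multimodal": 0.09,
    "Audio": 0.009,
}

def rbf_kernel(x, y, gamma):
    """RBF kernel value exp(-gamma * squared Euclidean distance)."""
    if len(x) != len(y):
        raise ValueError("feature vectors must have the same length")
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-gamma * squared_distance)

if __name__ == "__main__":
    # Two made-up feature vectors at squared distance 4 from each other.
    u, v = [0.0, 0.0], [2.0, 0.0]
    for modality, gamma in GAMMA.items():
        print(f"{modality}: K = {rbf_kernel(u, v, gamma):.3f}")
```

A smaller gamma makes the kernel decay more slowly with distance, so distant training samples still influence the decision boundary. Fractional values such as 1/12 resemble the LIBSVM default of one over the number of features, but the table does not state the feature count per modality, so that reading is only a guess.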

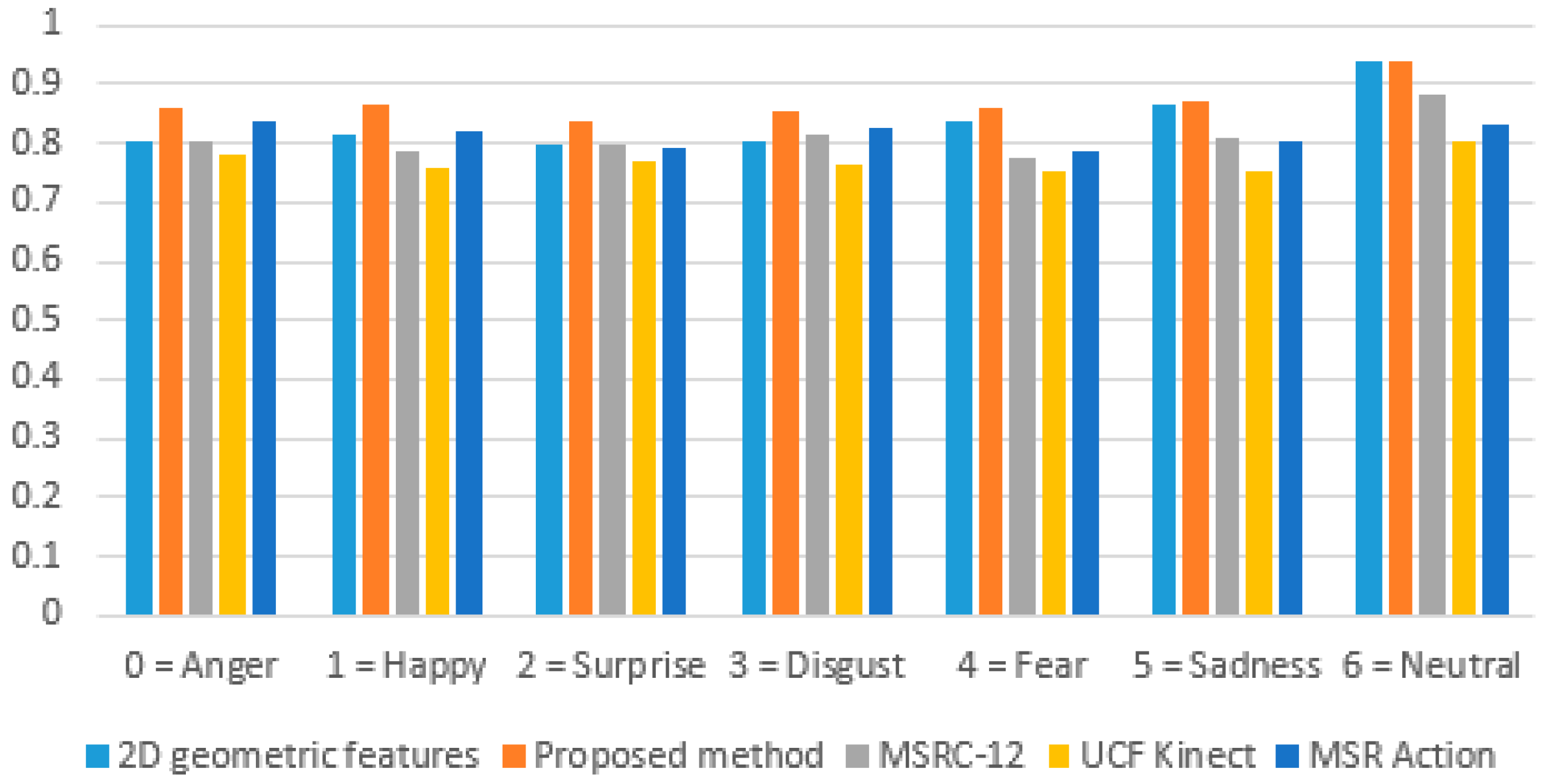

| 2D Geometric Features | Proposed Method | MSRC-12 | UCF Kinect | MSR Action | Label | Emotion |

|---|---|---|---|---|---|---|

| 80.5 | 86.2 | 81.7 | 77.4 | 84.6 | 0 | Anger |

| 82.8 | 87.2 | 77.3 | 79.8 | 84.6 | 1 | Happiness |

| 79.5 | 82 | 78.9 | 73.9 | 75.1 | 2 | Surprise |

| 81.1 | 88.3 | 83.1 | 73.5 | 80.9 | 3 | Disgust |

| 83.2 | 85.2 | 75.3 | 72.2 | 76.7 | 4 | Fear |

| 85 | 84.1 | 78.4 | 75.3 | 80.4 | 5 | Sadness |

| 94.7 | 96.8 | 94.6 | 88.6 | 88.9 | 6 | Neutral |

| 2D Geometric Features | Proposed Method | MSRC-12 | UCF Kinect | MSR Action | Label | Emotion |

|---|---|---|---|---|---|---|

| 80.1 | 86 | 78.6 | 78.4 | 83.4 | 0 | Anger |

| 80.4 | 86.3 | 80.6 | 72.5 | 79.8 | 1 | Happiness |

| 80.5 | 85.2 | 81.2 | 79.7 | 83.4 | 2 | Surprise |

| 79.7 | 82.4 | 79.7 | 79.5 | 84.8 | 3 | Disgust |

| 84.8 | 87 | 80.1 | 78.3 | 80.1 | 4 | Fear |

| 88.5 | 90.9 | 83.8 | 75.5 | 79.9 | 5 | Sadness |

| 92.9 | 91.3 | 83.1 | 73.9 | 77.7 | 6 | Neutral |

| 2D Geometric Features | Proposed Method | MSRC-12 | UCF Kinect | MSR Action | Label | Emotion |

|---|---|---|---|---|---|---|

| 80.3 | 86.1 | 80.1 | 77.9 | 84 | 0 | Anger |

| 81.6 | 86.7 | 78.9 | 76 | 82.1 | 1 | Happiness |

| 80 | 83.6 | 80 | 76.7 | 79 | 2 | Surprise |

| 80.4 | 85.2 | 81.4 | 76.4 | 82.8 | 3 | Disgust |

| 84 | 86.1 | 77.6 | 75.1 | 78.4 | 4 | Fear |

| 86.7 | 87.4 | 81 | 75.4 | 80.1 | 5 | Sadness |

| 93.8 | 94 | 88.5 | 80.6 | 82.9 | 6 | Neutral |
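The three results tables above carry no captions here, but the values in the third are numerically consistent with the harmonic mean of the corresponding values in the first two, which is how an F-score is derived from precision and recall. The sketch below checks this for the "Proposed Method" column, assuming the first table reports precision and the second recall (the F-score is symmetric, so the check does not depend on which is which). The list and function names are illustrative.

```python
def f_score(precision, recall):
    """Harmonic mean of precision and recall (both in the same units)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# "Proposed Method" column, labels 0-6, copied from the three tables above.
FIRST_TABLE = [86.2, 87.2, 82, 88.3, 85.2, 84.1, 96.8]   # assumed precision
SECOND_TABLE = [86, 86.3, 85.2, 82.4, 87, 90.9, 91.3]    # assumed recall
THIRD_TABLE = [86.1, 86.7, 83.6, 85.2, 86.1, 87.4, 94]   # assumed F-score

def max_deviation(first, second, third):
    """Largest absolute gap between the computed and the tabulated value."""
    return max(
        abs(f_score(p, r) - f) for p, r, f in zip(first, second, third)
    )

if __name__ == "__main__":
    for p, r, f in zip(FIRST_TABLE, SECOND_TABLE, THIRD_TABLE):
        print(f"{p:5.1f} {r:5.1f} -> {f_score(p, r):6.2f} (table: {f})")
```

Every computed value rounds to the tabulated one at one decimal place, which supports reading the third table as an F-score.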

| 0 | 1 | 2 | 3 | 4 | 5 | 6 | Label | Emotion |

|---|---|---|---|---|---|---|---|---|

| 80.1 | 4.9 | 9.6 | 4.8 | 0.3 | 0.2 | 0 | 0 | Anger |

| 9.7 | 80.4 | 6 | 3.6 | 0.1 | 0.1 | 0.1 | 1 | Happiness |

| 7.4 | 10.8 | 80.5 | 0.4 | 0.2 | 0.5 | 0.1 | 2 | Surprise |

| 1 | 0.6 | 0.6 | 79.7 | 8.9 | 8.4 | 0.9 | 3 | Disgust |

| 0.8 | 0.3 | 3.1 | 6.2 | 84.8 | 3.3 | 1.5 | 4 | Fear |

| 0.5 | 0.5 | 0.1 | 3.2 | 5 | 88.5 | 2.2 | 5 | Sadness |

| 0.4 | 0.1 | 0.8 | 0.8 | 2.6 | 2.4 | 92.9 | 6 | Neutral |

| 0 | 1 | 2 | 3 | 4 | 5 | 6 | Label | Emotion |

|---|---|---|---|---|---|---|---|---|

| 86 | 3.1 | 8.9 | 1.9 | 0.1 | 0 | 0 | 0 | Anger |

| 6.8 | 86.3 | 6.2 | 0.6 | 0 | 0 | 0.1 | 1 | Happiness |

| 4.6 | 9.3 | 85.2 | 0.4 | 0.1 | 0.4 | 0.1 | 2 | Surprise |

| 0.9 | 0.1 | 0.6 | 82.4 | 7.2 | 8.4 | 0.4 | 3 | Disgust |

| 0.6 | 0.3 | 2.7 | 5 | 87 | 3.2 | 1.3 | 4 | Fear |

| 0.4 | 0.1 | 0 | 2.8 | 4.5 | 90.9 | 1.3 | 5 | Sadness |

| 0.2 | 0 | 0.4 | 0.6 | 3.5 | 4 | 91.3 | 6 | Neutral |
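Each row of the two confusion matrices above sums to roughly 100, which suggests row-normalized percentages with the true class in rows and the predicted class in columns; that reading is an assumption, since the captions are not present here. Under that assumption, the sketch below takes the second matrix and reports, for each emotion, the class it is most often confused with. The names `LABELS`, `MATRIX`, and `top_confusion` are illustrative.

```python
LABELS = ["Anger", "Happiness", "Surprise", "Disgust", "Fear", "Sadness", "Neutral"]

# Second confusion matrix above (rows: assumed true class, values in percent).
MATRIX = [
    [86, 3.1, 8.9, 1.9, 0.1, 0, 0],
    [6.8, 86.3, 6.2, 0.6, 0, 0, 0.1],
    [4.6, 9.3, 85.2, 0.4, 0.1, 0.4, 0.1],
    [0.9, 0.1, 0.6, 82.4, 7.2, 8.4, 0.4],
    [0.6, 0.3, 2.7, 5, 87, 3.2, 1.3],
    [0.4, 0.1, 0, 2.8, 4.5, 90.9, 1.3],
    [0.2, 0, 0.4, 0.6, 3.5, 4, 91.3],
]

def top_confusion(matrix, labels):
    """For each class, return (most confused class, percentage)."""
    result = {}
    for i, row in enumerate(matrix):
        off_diagonal = [(value, j) for j, value in enumerate(row) if j != i]
        value, j = max(off_diagonal)
        result[labels[i]] = (labels[j], value)
    return result

if __name__ == "__main__":
    for emotion, (other, pct) in top_confusion(MATRIX, LABELS).items():
        print(f"{emotion}: most often confused with {other} ({pct}%)")
```

In this matrix the largest off-diagonal entries fall within two groups, Anger, Happiness, and Surprise on one side and Disgust, Fear, and Sadness on the other, which is visible directly in the table rows.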

© 2017 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Patwardhan, A. Three-Dimensional, Kinematic, Human Behavioral Pattern-Based Features for Multimodal Emotion Recognition. Multimodal Technol. Interact. 2017, 1, 19. https://doi.org/10.3390/mti1030019
