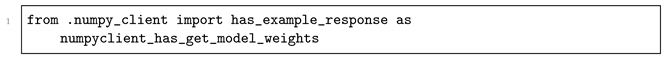

The privacy of individuals in healthcare institutions is jeopardized when sensitive data are shared across institutions, which poses a substantial challenge for machine learning applications in the medical field. To tackle this issue, our study proposes a solution that adopts a centralized FL framework and integrates an RLWE-based MKHE scheme to secure communication between the server and clients. With this approach, we aim to enhance privacy-preserving machine learning in healthcare institutions and alleviate the inherent risks associated with data sharing.

3.2. RLWE: Original and Improved

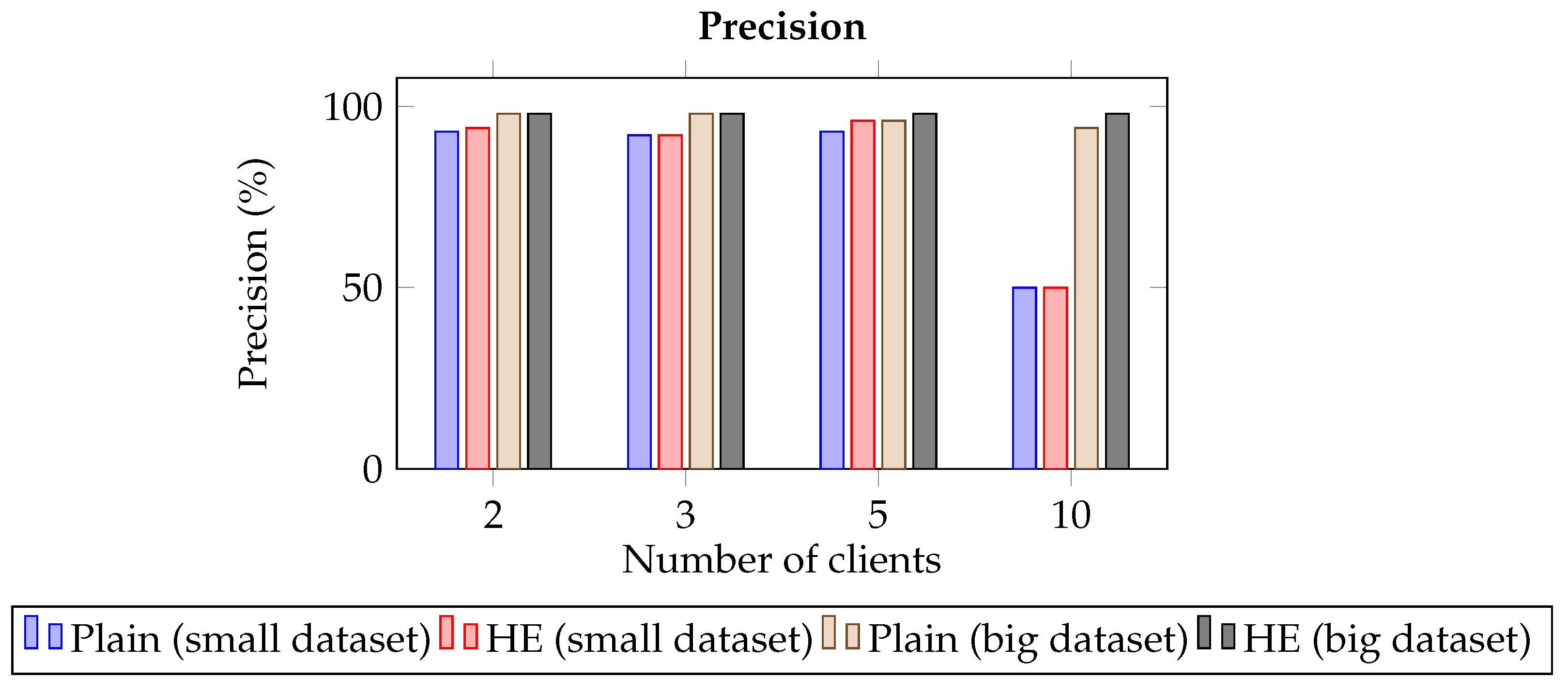

3.2.1. Overview of Original RLWE Implementation

The initial implementation of RLWE was obtained from a GitHub repository [34] and serves as the basis for our modifications. The existing RLWE implementation consists of three primary Python files. The utils.py file contains utility functions, the Rq.py file is responsible for creating ring polynomial objects, and the RLWE.py file is used to instantiate RLWE instances for tasks such as key generation, encryption, decryption, and the modular operations required for HE.

Key Generation, Encryption, and Decryption in Original RLWE

The original RLWE.py file includes a class that creates an RLWE instance, which requires four variables: n, t, q, and $\sigma$. These variables represent, respectively, the degree (length) of the polynomial plaintext to be encrypted (which must be a power of 2), two large prime numbers defining the value ranges for the coefficients of the plaintext (t) and the ciphertext (q), and the standard deviation of a zero-mean Gaussian distribution used to generate the private keys and errors.

Across the original RLWE implementation and our modified version, we use three Gaussian distributions, $\chi$, $\psi$, and $\phi$, which are vital for key and error generation. All three are zero-mean Gaussian distributions, each with its own standard deviation. $\chi$ is employed to generate private keys, $\psi$ is used to generate error polynomials during encryption, and $\phi$ is introduced in our modified RLWE scheme specifically for partial decryption. In the partial decryption phase, $\phi$ generates error polynomials with a slightly higher standard deviation than the error polynomials used during encryption. In our project, we use a standard deviation of 3 for $\chi$ and $\psi$ (making them equivalent), while $\phi$ has a standard deviation of 5.

When setting the variable t, it is crucial to ensure that the minimum and maximum coefficients of the polynomial plaintext fall within the range from $-t/2$ to $t/2$. This matters in RLWE because coefficients wrap around within the ring: a coefficient exceeding $t/2$ is interpreted as a negative value, and vice versa.
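As an illustration of this wrap-around, the following minimal sketch maps an integer to its centered representative modulo t. The function name `to_centered` is hypothetical and not taken from the RLWE implementation; the sketch assumes an odd t, such as a prime.

```python
def to_centered(x: int, t: int) -> int:
    """Map x to its representative in the centered range around zero modulo t.

    For odd t the result lies in [-(t - 1) // 2, (t - 1) // 2].
    """
    r = x % t  # canonical residue in [0, t)
    return r - t if r > t // 2 else r


t = 17
# A coefficient just above t/2 wraps around to a negative value,
# and a coefficient just below -t/2 wraps around to a positive one.
print(to_centered(8, t))   # 8
print(to_centered(9, t))   # -8
print(to_centered(-9, t))  # 8
```

This is why the plaintext coefficients (and, after aggregation, their sums) must stay strictly inside the centered range: anything outside it silently changes sign.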

Likewise, the ratio between the ciphertext modulus q and the plaintext modulus t is highly significant. As discussed by Peikert in the context of the hardness of LWE and its variants, including Ring-LWE [29], these moduli must be selected with careful consideration of the desired security level and the specific parameters of the scheme. In our proof-of-concept work, we arbitrarily chose the modulus q to be a prime number greater than twenty times the modulus t, aiming to balance computational efficiency and security.

Regarding the standard deviations, our choices of 3 and 5 are somewhat arbitrary. As highlighted in Peikert’s papers [29,31], the standard deviation is a crucial factor in determining the security level in conjunction with the other parameters. A larger ratio between the moduli q and t permits a higher standard deviation, which adds more noise, makes it harder to recover the plaintext without the secret key, and thus increases security. In practice, however, these choices must balance security against efficiency. For more precise guidance on parameter selection, we recommend referring to Peikert’s work.

Key generation, encryption, and decryption processes in the original simple RLWE implementation are as follows:

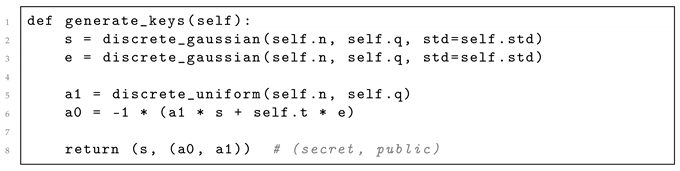

Key Generation:

Generate a private key s (noise) from the key distribution $\chi$.

Generate a public key a comprising two polynomials, $a_0$ and $a_1$. $a_1$ is a polynomial over modulus q with coefficients sampled uniformly at random, while $a_0$ is computed using the formula $a_0 = -(a_1 \cdot s + t \cdot e)$, where e is an error polynomial of modulus q drawn from distribution $\psi$. Listing 1 shows the key generation method.

Listing 1. Key generation.

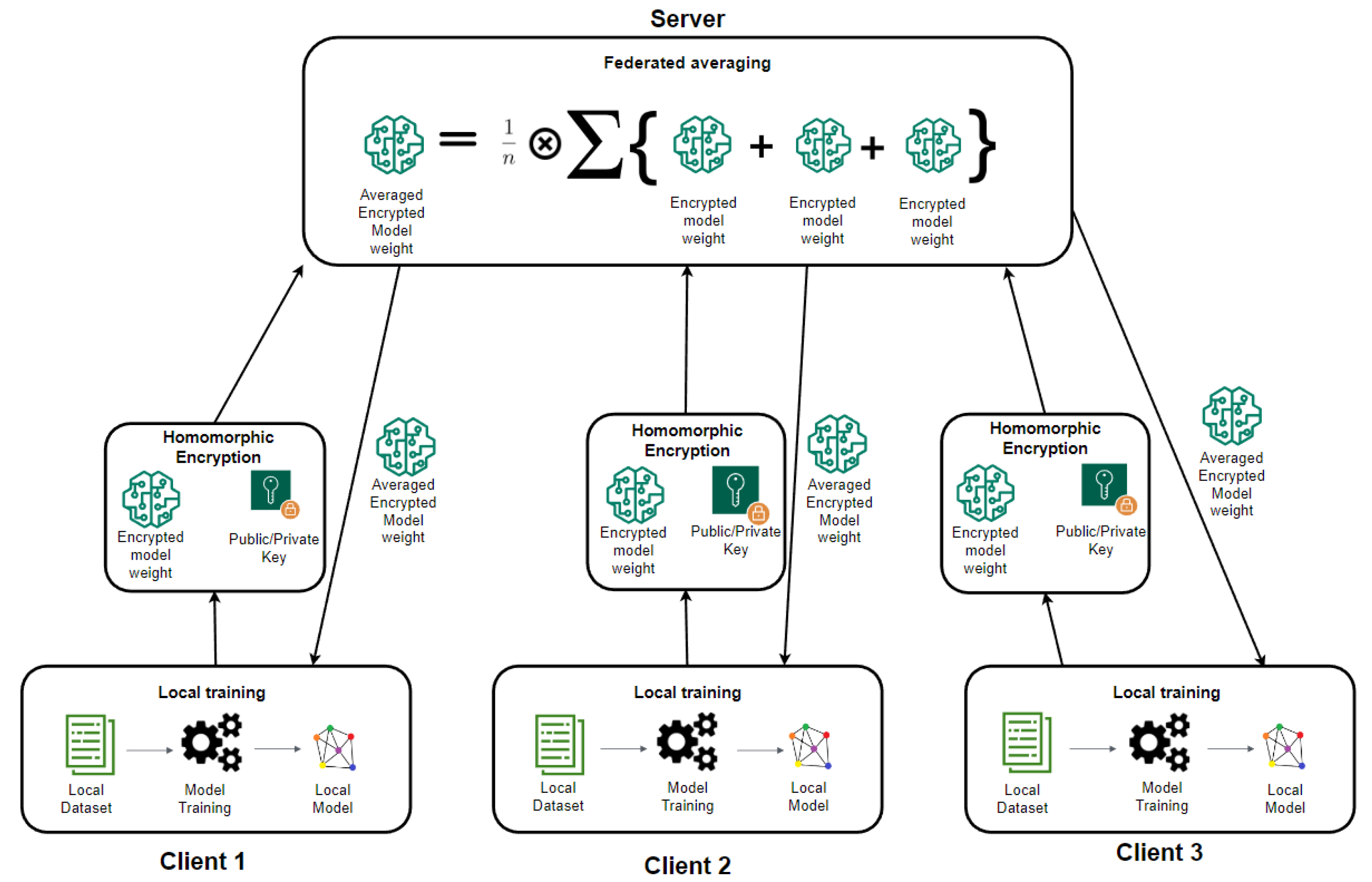

Encryption:

Given a Python list of integers as plaintext, convert the list to an Rq object so that the plaintext is represented as, and behaves like, a polynomial over modulus q.

Encrypt the polynomial plaintext into two polynomial ciphertexts c using the formula $c = (c_0, c_1) = (m + a_0 \cdot e_0 + t \cdot e_2,\ a_1 \cdot e_0 + t \cdot e_1)$, where m is the plaintext and $e_0$, $e_1$, and $e_2$ are error polynomials sampled from distribution $\psi$. c is a tuple consisting of the polynomial ciphertexts $c_0$ and $c_1$. Listing 2 shows the encryption method.

Listing 2. Encrypting a message ‘m’ using lattice-based cryptography with error terms and public key ‘a’.

Listing 3. Decrypting a ciphertext ‘c’ using a lattice-based cryptography scheme with the secret key ‘s’.
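To make the three operations concrete, the following self-contained sketch reimplements them in plain Python. It is a toy under stated assumptions, not the code of the repository [34]: polynomials are plain coefficient lists in $\mathbb{Z}_q[x]/(x^n+1)$, the discrete Gaussian is approximated by rounding `random.gauss`, one standard deviation is used for all noise, the formulas $a_0 = -(a_1 \cdot s + t \cdot e)$ and $c = (m + a_0 e_0 + t e_2,\ a_1 e_0 + t e_1)$ are assumed, decryption is assumed to compute $c_0 + c_1 \cdot s$ and reduce it first modulo q and then modulo t, and the parameters are far too small to be secure. All names are hypothetical.

```python
import random

# Toy parameters (assumptions for illustration only; far too small to be secure).
N = 16           # polynomial degree, a power of 2
T = 257          # plaintext modulus t (prime)
Q = 2**31 - 1    # ciphertext modulus q (prime, much larger than 20 * t)
SIGMA = 3        # standard deviation of the zero-mean Gaussian


def poly_add(a, b, q):
    return [(x + y) % q for x, y in zip(a, b)]


def poly_scale(c, a, q):
    return [(c * x) % q for x in a]


def poly_mul(a, b, q):
    """Multiply in Z_q[x]/(x^n + 1): terms of degree >= n wrap with a sign flip."""
    n = len(a)
    res = [0] * n
    for i in range(n):
        for j in range(n):
            if i + j < n:
                res[i + j] += a[i] * b[j]
            else:
                res[i + j - n] -= a[i] * b[j]
    return [c % q for c in res]


def gaussian(n, sigma=SIGMA):
    # Rounded continuous Gaussian as a simple stand-in for a discrete Gaussian.
    return [round(random.gauss(0, sigma)) for _ in range(n)]


def centered(a, m):
    """Represent every coefficient in the centered range around zero modulo m."""
    return [((x + m // 2) % m) - m // 2 for x in a]


def generate_keys():
    s = gaussian(N)                                  # private key s
    e = gaussian(N)                                  # error polynomial e
    a1 = [random.randrange(Q) for _ in range(N)]     # uniform polynomial mod q
    # a0 = -(a1 * s + t * e) mod q
    a0 = poly_scale(-1, poly_add(poly_mul(a1, s, Q), poly_scale(T, e, Q), Q), Q)
    return s, (a0, a1)


def encrypt(m, pk):
    a0, a1 = pk
    e0, e1, e2 = gaussian(N), gaussian(N), gaussian(N)
    m_q = [x % Q for x in m]
    c0 = poly_add(poly_add(m_q, poly_mul(a0, e0, Q), Q), poly_scale(T, e2, Q), Q)
    c1 = poly_add(poly_mul(a1, e0, Q), poly_scale(T, e1, Q), Q)
    return c0, c1


def decrypt(c, s):
    c0, c1 = c
    # c0 + c1 * s = m + t * (small noise) (mod q)
    noisy = centered(poly_add(c0, poly_mul(c1, s, Q), Q), Q)
    return centered(noisy, T)                        # reducing mod t removes t * noise


random.seed(0)
s, pk = generate_keys()
m = [random.randint(-100, 100) for _ in range(N)]
assert decrypt(encrypt(m, pk), s) == m
```

Expanding the terms gives $c_0 + c_1 \cdot s = m + t\,(e_2 + e_1 s - e\,e_0)$, so the noise is a multiple of t and disappears after reduction modulo t, provided it stays well below q/2. Adding two ciphertexts component-wise yields an encryption of the sum of the plaintexts, which is the property the FL aggregation relies on.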

3.2.2. Modifications to RLWE.py for xMK-CKKS Integration

Our aim of implementing secure FL using the lattice-based xMK-CKKS scheme necessitated certain modifications to the original RLWE implementation. These modifications primarily involved the functions for key generation, encryption, and decryption, as well as the inclusion of additional utility functions. In this subsection, we follow the same structure as before but include formulas to justify the changes made to the original RLWE implementation. All the procedures presented in this section are derived from [27], which outlines the setup of the xMK-CKKS scheme for the key-sharing process and provides a detailed explanation of the encryption and decryption steps (steps 1 to 5).

Changes in Key Generation for xMK-CKKS Integration

In the FL context, each client must have a unique private key while utilizing a shared public key. To accomplish this, we employ the formulas proposed in the xMK-CKKS paper. Each client generates a distinct public key, denoted as b, by combining a shared polynomial a with their private key s. The server subsequently aggregates these individual public keys through modular addition, resulting in a shared public key distributed back to all the clients.

For each client i, we assume that the secret key $s_i$ is drawn from the distribution $\chi$ and the error polynomial $e_i$ is drawn from the distribution $\psi$. In contrast to the original RLWE implementation, where both the secret key and error polynomials have the same degree as the plaintext, in the xMK-CKKS scheme, the secret key is required to be a polynomial of a single degree. The polynomial a is shared among all clients by the server before they generate their respective keys. Each client’s public key can then be computed using the formula:

$$b_i = -s_i \cdot a + e_i \pmod{q}$$

Listing 4 shows the modified key generation function.

Listing 4. Key Generation Method for Cryptographic Operations.
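The following plain-Python sketch mirrors the per-client formula $b_i = -s_i \cdot a + e_i \pmod{q}$ and the server-side aggregation by modular addition. It rests on toy assumptions (coefficient-list polynomials in $\mathbb{Z}_q[x]/(x^n+1)$, a rounded Gaussian in place of a discrete one, insecure parameters, hypothetical function names), and it samples every secret key as a full polynomial with n coefficients. Because polynomial multiplication distributes over addition, the aggregated key equals $-s \cdot a + e$ with $s = \sum_i s_i$ and $e = \sum_i e_i$.

```python
import random

N, Q, SIGMA = 16, 2**31 - 1, 3   # toy parameters (assumptions, insecure)


def poly_add(a, b, q=Q):
    return [(x + y) % q for x, y in zip(a, b)]


def poly_mul(a, b, q=Q):
    """Negacyclic product in Z_q[x]/(x^n + 1)."""
    n = len(a)
    res = [0] * n
    for i in range(n):
        for j in range(n):
            if i + j < n:
                res[i + j] += a[i] * b[j]
            else:
                res[i + j - n] -= a[i] * b[j]
    return [c % q for c in res]


def gaussian(n=N, sigma=SIGMA):
    return [round(random.gauss(0, sigma)) for _ in range(n)]


def client_keygen(a):
    """Client side: b_i = -s_i * a + e_i (mod q)."""
    s_i = gaussian()                               # secret key s_i ~ chi
    e_i = gaussian()                               # error e_i ~ psi
    b_i = poly_add([-x for x in poly_mul(s_i, a)], e_i)
    return s_i, e_i, b_i


def aggregate_public_keys(public_keys):
    """Server side: modular addition of all b_i yields the shared public key."""
    b = [0] * N
    for b_i in public_keys:
        b = poly_add(b, b_i)
    return b


random.seed(1)
a = [random.randrange(Q) for _ in range(N)]        # shared polynomial from the server
clients = [client_keygen(a) for _ in range(3)]     # three clients
b = aggregate_public_keys([b_i for _, _, b_i in clients])
```

The aggregated key has the same shape as a single-party RLWE public key whose secret is the sum of all client secrets, yet no single party ever holds that sum.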

Changes in Encryption for xMK-CKKS Integration

The encryption process has also been slightly modified from the original RLWE implementation. While the overall goal remains the same, namely to encrypt a polynomial plaintext using a public key and three error polynomials, the modified scheme only requires the first value of the aggregated public key for encryption instead of the entire public key. The encryption produces a tuple of two ciphertexts: ct = ($c_0$, $c_1$).

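For each client i, let $m_i$ be the client’s polynomial plaintext to be encrypted into the ciphertext $ct_i$. Let $b = \sum_i b_i \pmod{q}$ be the aggregated public key and a the shared polynomial; both are the same for all clients. For each client, generate three error polynomials: $v_i$, $e_{0,i}$, and $e_{1,i}$. The polynomial $v_i$ is drawn from the key distribution $\chi$ and is used in the computation of both $c_{0,i}$ and $c_{1,i}$. On the other hand, $e_{0,i}$ and $e_{1,i}$ are unique to either $c_{0,i}$ or $c_{1,i}$ and are drawn from the error distribution $\psi$. The ciphertext can then be computed using the equation:

$$ct_i = (c_{0,i}, c_{1,i}) = v_i \cdot (b, a) + (m_i + e_{0,i},\ e_{1,i}) \pmod{q} \tag{8}$$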

Listing 5 shows the updated encryption function.

Listing 5. Encryption Method for Cryptographic Operations.

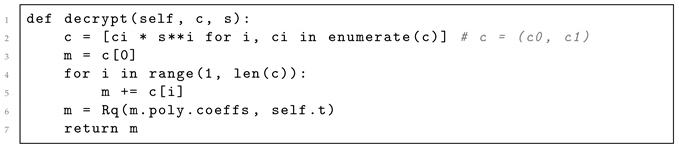

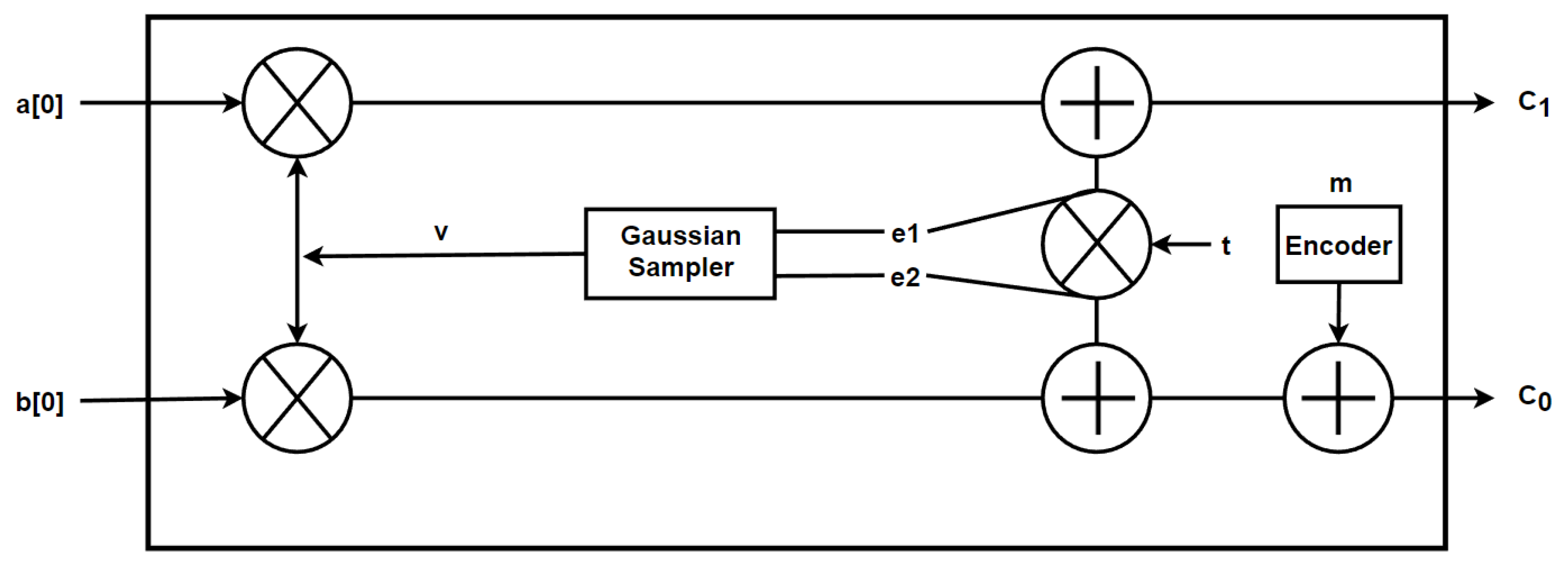

Changes in Decryption for xMK-CKKS Integration

In the case of FL with xMK-CKKS, clients cannot fully decrypt ciphertexts that have undergone homomorphic operations. Instead, clients compute a partial decryption share, and once the server has successfully retrieved all the partial results, it can decrypt the aggregated ciphertexts from all the clients.

For each client i, let $s_i$ be the client’s secret key, and let $e^{*}_i$ be an error polynomial sampled from distribution $\phi$, assuming that $\phi$ has a larger variance than the distribution $\psi$ used in the basic scheme. The server collects the ciphertexts $c_{0,i}$ and $c_{1,i}$ from each client; let $c_{1,\text{sum}} = \sum_i c_{1,i} \pmod{q}$ be the aggregated result of the modular addition of all the $c_{1,i}$ ciphertexts. With these variables, a client can compute its partial decryption share $d_i$ using the following equation:

$$d_i = s_i \cdot c_{1,\text{sum}} + e^{*}_i \pmod{q} \tag{10}$$

Listing 6 shows the updated (now only partial) decryption function.

Listing 6. Decryption Method for Cryptographic Operations.
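Putting key generation, encryption (Equation (8)), aggregation, and partial decryption (Equation (10)) together, the following sketch simulates one aggregation round with three clients in plain Python, following the formulas of [27]. It is not the modified RLWE.py code; it assumes coefficient-list polynomials in $\mathbb{Z}_q[x]/(x^n+1)$, rounded Gaussians with the standard deviations 3 and 5 from our settings, insecure toy parameters, and hypothetical function names. Adding the aggregated $c_0$ ciphertexts to the sum of all decryption shares recovers the sum of the plaintexts up to a small noise term, which is why the result is an approximation.

```python
import random

N, Q = 16, 2**31 - 1     # toy parameters (assumptions, insecure)
SIGMA, SIGMA_DEC = 3, 5  # std of chi/psi and of the wider phi


def poly_add(a, b, q=Q):
    return [(x + y) % q for x, y in zip(a, b)]


def poly_mul(a, b, q=Q):
    """Negacyclic product in Z_q[x]/(x^n + 1)."""
    n = len(a)
    res = [0] * n
    for i in range(n):
        for j in range(n):
            if i + j < n:
                res[i + j] += a[i] * b[j]
            else:
                res[i + j - n] -= a[i] * b[j]
    return [c % q for c in res]


def poly_sum(polys):
    total = [0] * N
    for p in polys:
        total = poly_add(total, p)
    return total


def gaussian(sigma=SIGMA):
    return [round(random.gauss(0, sigma)) for _ in range(N)]


def centered(a, q=Q):
    return [((x + q // 2) % q) - q // 2 for x in a]


def keygen(a):
    s = gaussian()
    b = poly_add([-x for x in poly_mul(s, a)], gaussian())   # b_i = -s_i*a + e_i
    return s, b


def encrypt(m, b, a):
    v, e0, e1 = gaussian(), gaussian(), gaussian()           # v ~ chi; e0, e1 ~ psi
    c0 = poly_add(poly_add(poly_mul(v, b), m), e0)           # v*b + m + e0
    c1 = poly_add(poly_mul(v, a), e1)                        # v*a + e1
    return c0, c1


def partial_decrypt(s, c1_sum):
    """Decryption share d_i = s_i * c1_sum + e_i*, with e_i* ~ phi."""
    return poly_add(poly_mul(s, c1_sum), gaussian(SIGMA_DEC))


random.seed(2)
a = [random.randrange(Q) for _ in range(N)]                  # shared polynomial
keys = [keygen(a) for _ in range(3)]
b = poly_sum(b_i for _, b_i in keys)                         # aggregated public key

plaintexts = [[random.randint(-1000, 1000) for _ in range(N)] for _ in keys]
ciphertexts = [encrypt(m, b, a) for m in plaintexts]
c0_sum = poly_sum(c0 for c0, _ in ciphertexts)               # server-side aggregation
c1_sum = poly_sum(c1 for _, c1 in ciphertexts)
d_sum = poly_sum(partial_decrypt(s, c1_sum) for s, _ in keys)

recovered = centered(poly_add(c0_sum, d_sum))                # approx. sum of plaintexts
exact = [sum(col) for col in zip(*plaintexts)]
max_error = max(abs(r - x) for r, x in zip(recovered, exact))  # small, usually nonzero
```

The residual error is why the model weights are scaled to large integers before encryption: the noise then only affects digits far below the retained precision.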

Additional modifications to the original RLWE implementation were made to enhance functionality. These modifications include adding methods to set and retrieve a shared polynomial a, which enables the server to generate a polynomial and distribute it to the clients for storage in their respective RLWE instances. Another modification involves including a function to convert a Python list into a polynomial object of type Rq, defined over a specified modulus. These modifications contribute to the improved versatility and convenience of the RLWE implementation.

3.2.3. Alterations to utils.py

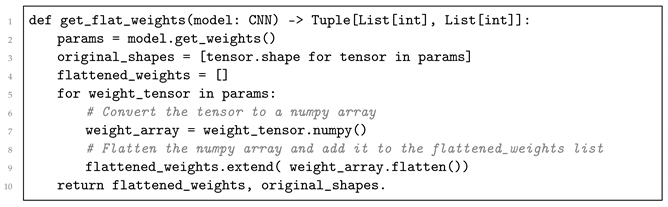

While our work did not require any modifications to the Rq file, we introduced several additional functions to the utils file. Specifically, we implemented functions to:

1. Get model weights and flatten them: This function retrieves the weights of a CNN model, which are organized in nested tensors, and flattens them into a single Python list. The function also returns the original shape of the weights for later reconstruction. Clients use this function during the encryption stage, as the weights must be in a long Python list format to be represented as a polynomial. Listing 7 shows how the flattened weights and original shapes are extracted.

Listing 7. Extracting flattened weights and original shapes from a CNN model’s parameters.

2. Revert flattened weights back to original nested tensors: This function is the inverse of the previous function. It takes a long list of flattened weights and the original shape of the weights and reconstructs the weights in their original nested tensor format. Clients use this function at the end of each training round, after the decryption process. Once all clients have sent their model updates to the server, the server computes an average and sends the updated model weights back to all clients before the next training round begins. At this point, clients need to update their local CNN models by retrieving the updated model weights and converting them back into the nested tensor format required by the CNN model. Listing 8 shows how the weights are unflattened using the original shapes.

Listing 8. Unflattening weights using the original shapes to reconstruct the parameters.

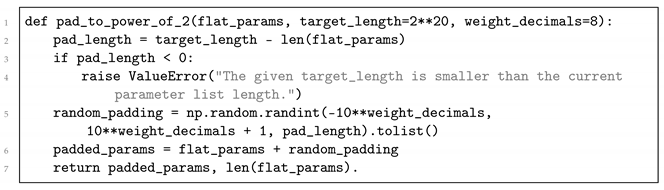

3. Pad the flattened weights to the nearest $2^n$: The xMK-CKKS scheme requires the plaintext to have a length of $2^n$. To satisfy this requirement, we provide clients with a function that pads their plaintext to the required length. The target length is the smallest power of 2 greater than the length of the plaintext.

Furthermore, CNN model weights are initially floating-point numbers ranging from −1 to 1 with eight decimal places. Since the RLWE scheme cannot directly handle floating-point numbers, we scale the weights to large integers in our CNN class. By default, the weights are scaled up by a factor of $10^8$, which preserves all eight decimal places during encryption and thus nearly the complete precision of the model. However, we made this scaling factor a static variable that can be modified to evaluate the trade-off between the accuracy and speed of the model by using fewer decimal places in the model weights. Listing 9 shows how a list of flattened parameters is padded.

Listing 9. Padding a list of flattened parameters to a specified length.
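The three utilities above, together with the integer scaling, can be sketched as a small pipeline. This is an illustration under assumptions, not the code in utils.py: the weights are taken to be a list of NumPy arrays (as exchanged by Flower’s NumPyClient) rather than PyTorch tensors, all function names are hypothetical, the scaling factor defaults to $10^8$ for eight decimal places, and `pad_to_power_of_two` leaves a list whose length is already a power of 2 unchanged.

```python
import numpy as np

SCALE = 10**8  # assumed default scaling factor (eight decimal places)


def flatten_weights(weights):
    """Concatenate a list of arrays into one Python list and record each shape."""
    shapes = [w.shape for w in weights]
    flat = np.concatenate([np.ravel(w) for w in weights]).tolist()
    return flat, shapes


def unflatten_weights(flat, shapes):
    """Inverse of flatten_weights: slice the flat list and reshape each slice."""
    arrays, start = [], 0
    for shape in shapes:
        size = int(np.prod(shape))  # np.prod(()) == 1 for scalar parameters
        arrays.append(np.asarray(flat[start:start + size], dtype=float).reshape(shape))
        start += size
    return arrays


def next_power_of_two(n):
    """Smallest power of 2 that is >= n (for n >= 1)."""
    return 1 << (n - 1).bit_length()


def pad_to_power_of_two(values, fill=0):
    """Append `fill` until the length is a power of 2."""
    return values + [fill] * (next_power_of_two(len(values)) - len(values))


def scale_to_ints(values, scale=SCALE):
    return [round(v * scale) for v in values]


def scale_to_floats(values, scale=SCALE):
    return [v / scale for v in values]


# Client side, before encryption.
weights = [np.array([[0.5, -0.25], [0.125, 0.0]]), np.array([0.75])]
flat, shapes = flatten_weights(weights)
plaintext = pad_to_power_of_two(scale_to_ints(flat))
# plaintext == [50000000, -25000000, 12500000, 0, 75000000, 0, 0, 0]

# After decryption: drop the padding, undo the scaling, and restore the shapes.
restored = unflatten_weights(scale_to_floats(plaintext[:len(flat)]), shapes)
```

Keeping the shapes and the unpadded length on the client is what makes the round trip lossless apart from the rounding introduced by the scaling factor.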

3.3. Flower Implementation of xMK-CKKS

The Flower FL library offers a versatile and user-friendly interface for initializing and running FL prototypes. It is designed to be compatible with various machine learning frameworks and programming languages, with a particular emphasis on Python, as discussed in Section 2.3.

In a typical Flower setup, the user implements the server and client functionality in separate scripts, commonly named server.py and client.py. The server.py script primarily handles the initiation of the FL process. This typically entails creating a server instance and specifying various optional parameters, such as a strategy object. A strategy object instructs the server on how to aggregate the model updates received from the clients. Users can choose from various pre-existing strategies or customize their own by subclassing the predefined strategy class.

In contrast, the client.py script defines the logic of a client. Its main objective is to perform computations, such as training a local model on its dataset and sending the computed results (i.e., model updates) back to the server. To implement a client, the user creates a subclass of the Client or NumPyClient class provided by the Flower library and defines its methods, such as get_parameters and fit, typically together with a set_parameters helper. The get_parameters method retrieves the parameters of the local model, the set_parameters method updates the model with new parameters received from the server, and the fit method oversees the training of the current model.

This architecture provides a flexible, scalable, and highly adaptable environment for implementing FL across various specifications. Users can utilize their preferred machine learning framework while still enjoying the inherent privacy and security benefits of FL.

While the standard Flower setup offers excellent flexibility, it does have a limitation regarding the support for custom communication messages between the server and client. This limitation becomes apparent when implementing the xMK-CKKS scheme, as it requires data exchange beyond the pre-defined message types in the original Flower library. We had to fork the library’s repository and modify the source code to overcome this limitation. The modifications involved changing and compiling several related files to incorporate our custom messages. These changes were crucial in successfully implementing the xMK-CKKS scheme. The following section will provide a comprehensive guide on the necessary steps for creating custom message types. However, before delving into that, we will discuss integrating the xMK-CKKS scheme into the Flower source code, assuming that all the required message types have already been created.

3.3.1. RLWE Instance Initialization

At the heart of our xMK-CKKS implementation are our custom Ring Learning with Errors (RLWE) instances. These are integral to our work: the server and every client each need their own RLWE instance. In our custom client script client.py, we subclass NumPyClient and pass it an RLWE instance. Similarly, in server.py, we provide the server with an RLWE instance by subclassing the predefined strategy FedAvg. Listing 10 shows the dynamic settings for initializing an RLWE instance.

Listing 10. Dynamic settings for initializing an RLWE encryption scheme.
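The constraints stated in Section 3.2.1 (n a power of 2, plaintext coefficients within $\pm t/2$, and q a prime greater than twenty times t) suggest how such settings could be derived from the model size at start-up. The sketch below is one hypothetical way to do this; the function names, the choice of sizing t for the sum over all clients, and the trial-division primality test are our assumptions rather than the code shown in Listing 10.

```python
def is_prime(k: int) -> bool:
    """Trial-division primality test (fine for a sketch, slow for very large moduli)."""
    if k < 2:
        return False
    if k % 2 == 0:
        return k == 2
    f = 3
    while f * f <= k:
        if k % f == 0:
            return False
        f += 2
    return True


def next_prime(k: int) -> int:
    """Smallest prime strictly greater than k."""
    k += 1
    while not is_prime(k):
        k += 1
    return k


def choose_rlwe_params(num_weights: int, max_abs_coeff: int, num_clients: int, std: int = 3):
    """Hypothetical helper deriving (n, t, q, std) from the model size."""
    # n: smallest power of 2 that fits all (flattened, scaled) weights.
    n = 1 << (num_weights - 1).bit_length()
    # t: the summed plaintext of all clients must stay inside (-t/2, t/2).
    t = next_prime(2 * max_abs_coeff * num_clients)
    # q: a prime greater than twenty times t.
    q = next_prime(20 * t)
    return n, t, q, std


n, t, q, std = choose_rlwe_params(num_weights=1000, max_abs_coeff=100, num_clients=3)
# n == 1024, t == 601
```

Sizing t for the aggregated sum rather than for a single client avoids the wrap-around described earlier once the server adds all plaintexts together.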

3.3.2. Key Sharing Process

Until now, when referring to the server.py file, we have been discussing the user-created server.py file, typically used to start a Flower server. However, starting from this section onwards, when we mention server.py, we are explicitly referring to the server.py file located within the source code of the Flower library itself. This server.py file serves as the foundation for the library’s operations, and our modified version allows us to make significant changes to the communication and encryption procedures between the server and clients. It is important to note that our Python code directly deals with polynomial objects for encryption and decryption computations. In contrast, the transmission of polynomials between the server and clients requires them to be converted into Python lists of integers.

- Step 1:

Polynomial Generation and Key Exchange

The key sharing process is initiated in the modified server.py script. Just before the training loop in Flower, our custom communication message types are used to ensure that all participating clients possess the same aggregated public key. In the modified server.py script, the local RLWE instance generates a polynomial a with uniformly random coefficients. The server then sends this polynomial a to all the clients, which use it to generate their private keys and the corresponding public keys b. The clients respond to the server’s request by returning their public keys b.

Listings 11 and 12 show the server and client side code for the first step.

(Forked) Server.py:

Listing 11. Server-side code for the first step in an RLWE-based secure computation process.

Client.py:

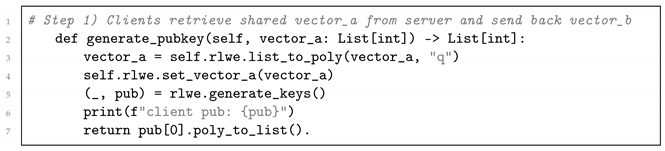

Listing 12. Client-side code for the first step in an RLWE-based secure computation process.

- Step 2:

Public Key Aggregation

After collecting all the public keys from the participating clients, the server aggregates them through modular addition. The resulting aggregated public key is then sent to all clients through a message request from the server. Clients store this shared public key in their RLWE instance and later, after training, use it to encrypt their local model weights. Upon receiving the shared public key, the clients respond to the server with a confirmation. Listings 13 and 14 show the server and client side code for the second step.

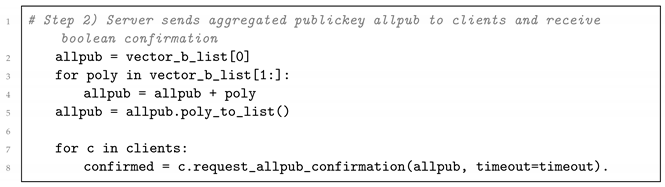

Listing 13. Server-side code for the second step in an RLWE-based secure computation process. The server aggregates the public keys received from clients, sends the aggregated ‘allpub’ to the clients, and then awaits confirmation from each client.

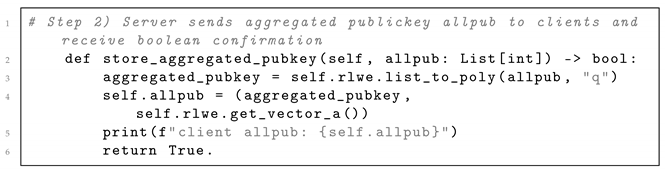

Listing 14. Client-side code for the second step in an RLWE-based secure computation process. Clients receive the aggregated public key ‘allpub’ from the server and confirm its receipt.

3.3.3. Training Loop and Weight Encryption Process

After completing the key sharing process, the server initiates the federated training loop. During this loop, each client independently trains its local model using its local dataset. Once the training is complete, the server collects and aggregates the model updates from all clients. The server then calculates the average of the aggregated model updates and redistributes the updated model to all clients for the next training round. This process ensures collaboration and synchronization among all clients in the FL process. Listing 15 shows the loop of a distributed training process.

Listing 15. The loop that iterates through multiple rounds of a distributed training process, in which a local model is trained simultaneously on all participating clients in each round.

In a standard setup of the Flower library, clients transmit their unencrypted model weights to the server, which raises significant security concerns. If any client trains its model on sensitive data, an untrusted server or malicious client could perform an inversion attack to infer the client’s training data based on the updated model weights. In contrast, our implementation of the xMK-CKKS scheme significantly enhances the security and privacy of this process. In our implementation, clients encrypt their model weights before transmitting them to the server. The server then homomorphically aggregates all the encrypted updates, ensuring that neither the server nor any client can read the individual model updates of other clients. This encryption scheme provides a strong layer of security and protects the confidentiality of each client’s training data throughout the FL process.

Moreover, in the standard setup of Flower, clients transmit their complete updated model weights. Our approach deviates from this by sending only the gradients, that is, the differences between the updated and the previous weights. Each client locally compares the new weights with the old ones and communicates only the weight changes to the server. Similarly, the server homomorphically aggregates all the gradients and returns the weighted average to the clients. This approach ensures that the server never has access to the complete weights of any model, thereby reducing the level of trust required from the participating clients. By transmitting only the weight differentials, the privacy and confidentiality of each client’s model are further protected, as the server only receives information about the changes made to the weights rather than the total weight values.
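A plaintext simulation of this delta-based update, with hypothetical function names, is sketched below. In the actual scheme the server only sees the encrypted sum of the deltas; here the arithmetic is done in the clear purely for illustration, and the deltas are averaged uniformly over the number of clients, which is an assumption of the sketch.

```python
def weight_delta(old, new):
    """Per-weight change produced by local training; this is what a client encrypts."""
    return [n - o for o, n in zip(old, new)]


def apply_average_delta(old, deltas):
    """Average the clients' deltas element-wise and add the result to the old weights."""
    k = len(deltas)
    avg = [sum(column) / k for column in zip(*deltas)]
    return [o + d for o, d in zip(old, avg)]


global_weights = [10, 20, 30]
client_updates = [[12, 18, 30], [14, 22, 33]]     # weights after local training
deltas = [weight_delta(global_weights, w) for w in client_updates]
new_global = apply_average_delta(global_weights, deltas)
# deltas == [[2, -2, 0], [4, 2, 3]]; new_global == [13.0, 20.0, 31.5]
```

Since every client already holds the previous global weights, the averaged delta is all it needs to reconstruct the new global model.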

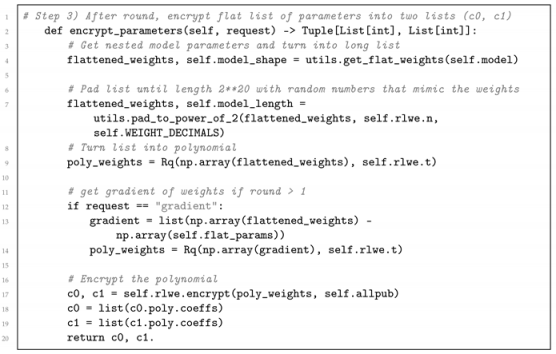

- Step 3:

Weight Encryption and Transmission

At the end of each round, when the clients have trained their local models, the server sends a request for the updated weights. The clients will convert the nested tensor structure of the weights into a long Python list. This will be the plaintext and the following encryption will result in two ciphertexts c0, c1. The server will then homomorphically aggregate all the c0 and c1 received from the clients to a c0sum and c1sum variable. The encryption of plaintexts is performed by the client’s RLWE instance and is based on Equation (

8), while the server aggregates the ciphertexts accoAfter each round, the server requests the updated weights when the clients have completed training their local models. The clients convert the nested tensor structure of the weights into a long Python list, which serves as the plaintext for encryption. Subsequently, the encryption process produces two ciphertexts, c0 and c1. The server then performs homomorphic aggregation on all the received c0 and c1 ciphertexts, resulting in the variables c0sum and c1sum.

The encryption of plaintexts is carried out by the client’s RLWE instance, following the equation shown in Equation (

8). On the other hand, the server aggregates the ciphertexts using Equation (

9):

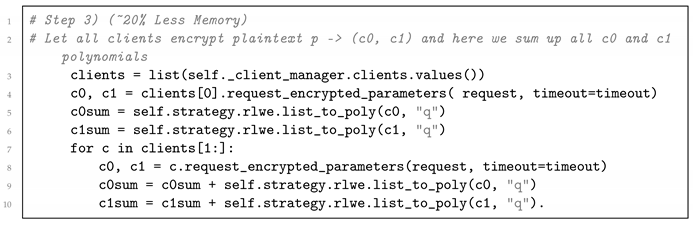

Listings 16 and 17 show the server and client side code for Step 3 in the process. (Forked) Server.py:

| Listing 16. Step 3 in the process where all clients encrypt plaintext ‘p’ into (c0 c1) polynomials and

the server aggregates and sums up these polynomials ‘c0sum’ and ‘c1sum’ from all clients. |

![Cryptography 07 00048 i016]() |

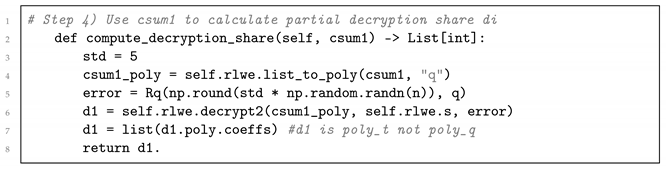

- Step 4:

Aggregation and Partial Decryption

Upon receiving the encrypted ciphertexts from all the clients, the server requests each client to send a partial decryption share based on the previously computed c1sum. The clients compute their partial decryption share, denoted as “d”, through modular operations involving c1sum, their private key, and an error polynomial. The server performs homomorphic aggregation on all the partial decryption shares, resulting in the variable dsum. The client’s RLWE instance carries out the partial decryption process, following the equation described in Equation (

10).

Client.py:

| Listing 17. Step 3 of the process involves encrypting a flat list of parameters into two lists ‘c0’ and ‘c1’

using the RLWE encryption scheme. |

![Cryptography 07 00048 i017]() |

Listings 18 and 19 show the server and client side code for Step 4 in the process.

Listing 18. Step 4 in the process, where the server sends ‘c1sum’ to clients and retrieves all decryption shares ‘d_i’. The shares are aggregated and summed up to obtain ‘dsum’.

Listing 19. Step 4 of the process involves computing the decryption share ‘di’ using ‘csum1’. The function processes ‘csum1’, decrypts it, and returns ‘di’ as a list of integers.

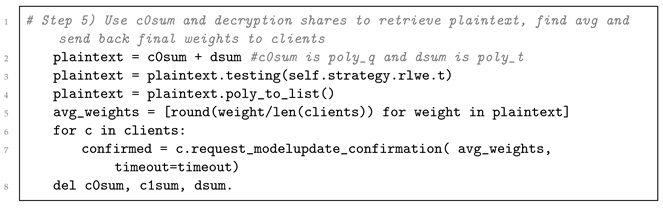

- Step 5:

Weight Updates and Model Evaluation

In the final step of the xMK-CKKS scheme, the server performs modular addition between c0sum and dsum. This operation yields a close approximation of the sum of all the plaintexts, that is, of the result that unencrypted standard addition would have produced. The server then calculates the average of the aggregated updates, taking into account the number of participating clients. The resulting average becomes the final model update for the next training round, which the server sends to all the clients.

Upon receiving the updated model weights, the clients overwrite their current weights with the new ones. This step also involves evaluating the performance of the new model weights.

The modular addition in this step is performed according to the following equation:

$$c_{0,\text{sum}} + d_{\text{sum}} = c_{0,\text{sum}} + \sum_{i} d_i \approx \sum_{i} m_i \pmod{q}$$
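Expanding the terms shows why this sum is only approximately equal to the plaintext sum. Using the per-client ciphertexts $c_{0,i} = v_i \cdot b + m_i + e_{0,i}$ and $c_{1,i} = v_i \cdot a + e_{1,i}$, the shares $d_i = s_i \cdot c_{1,\text{sum}} + e^{*}_i$, and the aggregated key $b = -s \cdot a + e$ of xMK-CKKS [27], and writing $s = \sum_i s_i$, $e = \sum_i e_i$, $v = \sum_i v_i$, and $e_1 = \sum_i e_{1,i}$ (so that $c_{1,\text{sum}} = v \cdot a + e_1$):

$$
\begin{aligned}
c_{0,\text{sum}} + d_{\text{sum}}
  &= \sum_i \left(v_i \cdot b + m_i + e_{0,i}\right) + \sum_i \left(s_i \cdot c_{1,\text{sum}} + e^{*}_i\right) \\
  &= v \cdot (-s \cdot a + e) + \sum_i m_i + \sum_i e_{0,i} + s \cdot (v \cdot a + e_1) + \sum_i e^{*}_i \\
  &= \sum_i m_i + v \cdot e + \sum_i e_{0,i} + s \cdot e_1 + \sum_i e^{*}_i \pmod{q}.
\end{aligned}
$$

The terms $-v \cdot s \cdot a$ and $s \cdot v \cdot a$ cancel, leaving only sums and products of small error polynomials, so the result approximates $\sum_i m_i$ as long as this noise stays far below q/2 and below the precision retained by the weight scaling.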

Listings 20 and 21 show the server and client side code for Step 5 of the process.

Listing 20. Step 5 of the process uses ‘c0sum’ and the decryption shares ‘dsum’ to retrieve the plaintext, then calculates the average and sends the final weights back to the clients. Memory is freed by deleting unnecessary variables.

Listing 21. Step 5 of the process involves receiving approximated model weights from the server and setting the new weights on the client side. The function converts the received weights, updates them, and restores them into the original tensor structure of the neural network model.
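Turning the recovered polynomial into averaged floating-point updates means undoing the steps applied before encryption. The sketch below assumes that the recovered coefficients are residues in [0, q), that the zero padding was appended at the end, and that the integer scaling factor is known; the function name is hypothetical and the small decryption noise is simply carried along.

```python
def decode_average(coeffs, q, num_clients, num_weights, scale=10**8):
    """Map the recovered aggregate back to averaged float weight updates."""
    # Residues above q/2 represent negative sums.
    signed = [c - q if c > q // 2 else c for c in coeffs]
    # Drop the zero padding, divide by the number of clients, and undo the scaling.
    return [c / (num_clients * scale) for c in signed[:num_weights]]


# Two clients, scale 10, q = 1009: aggregated coefficients 30 and -10 (stored as 999) plus padding.
update = decode_average([30, 999, 0, 0], q=1009, num_clients=2, num_weights=2, scale=10)
# update == [1.5, -0.5]
```

Performing the centered mapping before dividing matters: dividing the raw residue 999 would produce a large positive update instead of the intended negative one.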

3.4. Flower Guide: Create Custom Messages

This section provides a guide to implementing custom messages, a crucial aspect of integrating the xMK-CKKS scheme into the Flower architecture. Custom messages enable data transmission between the server and clients beyond the predefined message types provided by the library.

Regrettably, the Flower library does not provide a straightforward method for creating custom messages. Consequently, we had to fork the library and manually modify the source code to implement this functionality. Note that this task is nontrivial, as incorrect changes can disrupt the existing gRPC communication and other components, causing the FL process to fail.

The Flower library’s documentation site features a contributor section in which a third party has attempted to provide a guide on modifying the source code. However, apart from some typographical errors, this guide omits several files and changes necessary for the task [35]. To address this gap, our section aims to serve as a comprehensive guide to implementing custom messages in the Flower library. We provide extensive code snippets and explanations to facilitate this process, which is currently largely undocumented.

With our contribution, we aim to support and assist future projects seeking to modify and enhance FL frameworks.

We now proceed with a step-by-step example demonstrating how to create a custom communication message, similar to how we developed our protobuf messages for transferring list-converted polynomials. The first step is to fork the library repository; with access to all the source code files, the following files must be modified: ‘client.py’, ‘transport.proto’, ‘serde.py’, ‘message_handler.py’, ‘numpy_client.py’, ‘app.py’, ‘grpc_client_proxy.py’, and finally ‘server.py’.

Consider a scenario in which the server needs to send a string request to the clients, asking them to respond with a list of integers representing the weights of their local models. Listing 22 shows an example function from client.py that retrieves the model weights.

Listing 22. Function for retrieving model weights based on the request type.

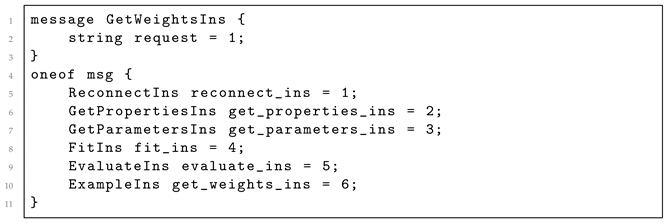

3.4.1. transport.proto (Defining Message Types)

Flower’s use of Google’s Protocol Buffers (protobuf) makes such modifications comparatively easy. Protobuf is a language-agnostic and platform-neutral mechanism for serializing structured data; it supports defining multiple message types whose fields can be integers, floats, booleans, strings, or even other message types. The first step in this process is to define the message types for the RPC communication system [36].

A protobuf file consists of various message types, primarily grouped into two main blocks: ServerMessage and ClientMessage. The ServerMessage block includes the kinds of messages the server can send, while the ClientMessage block has a similar structure for client-side messages. When designing protobuf messages, it is common practice to give the server request and client response messages similar names to indicate that they belong to the same operation. The official proto3 documentation often uses pairs such as ExampleRequest and ExampleResponse, while Flower’s protobuf file uses ExampleIns (Ins for Instruction) and ExampleRes (Res for Response). Ultimately, the naming convention is an arbitrary choice. Listings 23 and 24 show the server- and client-side Protocol Buffer message definitions.

Within the “ServerMessage” block:

Listing 23. Protocol Buffer message definition in which the ‘GetWeightsIns’ message includes a ‘request’ field of type string.

Within the “ClientMessage” block:

Listing 24. Protocol Buffer message definition for the ‘GetWeightsRes’ message, which includes a ‘response’ field of type string and a repeated field ‘l’ of type int64.

After defining the protobuf messages, you need to compile them with the protobuf compiler (protoc) to generate the corresponding code for your chosen programming language (in our case, Python). To compile, we navigate to the correct folder and use the command:

If it compiles successfully, you should see the following messages:

3.4.2. Serde.py (Serialization and Deserialization)

Serialization and deserialization, commonly known as SerDe, play a crucial role in distributed systems as they convert complex data types into a format suitable for transmission and storage and vice versa. To facilitate this conversion for our defined RPC message types, our next step is to include functions in the serde.py file. This file will handle the serialization process of Python objects into byte streams and the deserialization process of byte streams back into Python objects.

To ensure the proper serialization and deserialization of data in each server and client request-response pair, we need to add four functions to the serde.py file. First, on the server side, we require a function to serialize the Python data into bytes for transmission. Second, on the client side, we need a function to deserialize the received bytes back into Python data. Third, on the client side, we need a function to serialize the Python data into bytes for transmission back to the server. Lastly, on the server side, we need a function to deserialize the received bytes back into Python data. Together, these four functions enable seamless conversion between Python objects and byte streams for effective communication. Listing 25 shows the functions for serializing and deserializing messages.

Listing 25. Define functions for serializing and deserializing messages between the server and client using Protocol Buffers.
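To illustrate the four-function pattern, the following sketch swaps protobuf for JSON so that it runs without Flower or compiled protobuf classes. The dataclasses and function names are hypothetical stand-ins; only the field names request, response, and l are taken from Listings 23 and 24. In the actual serde.py, the corresponding functions would convert between Python objects and the generated protobuf message classes instead.

```python
import json
from dataclasses import dataclass
from typing import List


@dataclass
class GetWeightsIns:          # server -> client (hypothetical Python-side type)
    request: str


@dataclass
class GetWeightsRes:          # client -> server (hypothetical Python-side type)
    response: str
    l: List[int]


# JSON bytes stand in for the protobuf wire format in this sketch.
def get_weights_ins_to_bytes(ins: GetWeightsIns) -> bytes:      # server: serialize request
    return json.dumps({"request": ins.request}).encode()


def get_weights_ins_from_bytes(data: bytes) -> GetWeightsIns:   # client: deserialize request
    return GetWeightsIns(**json.loads(data))


def get_weights_res_to_bytes(res: GetWeightsRes) -> bytes:      # client: serialize response
    return json.dumps({"response": res.response, "l": res.l}).encode()


def get_weights_res_from_bytes(data: bytes) -> GetWeightsRes:   # server: deserialize response
    return GetWeightsRes(**json.loads(data))


res = GetWeightsRes("ok", [1, -2, 3])
assert get_weights_res_from_bytes(get_weights_res_to_bytes(res)) == res
```

Each request-response pair needs all four directions; forgetting one of them typically surfaces only at runtime, when one side receives bytes it cannot decode.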

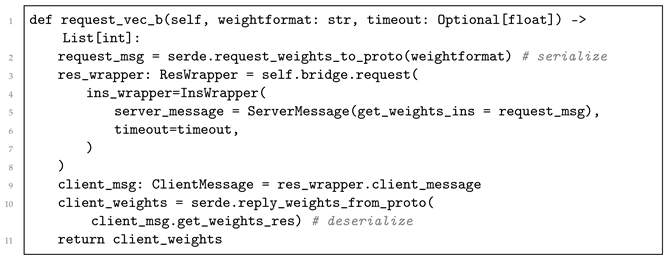

3.4.3. grpc_client_proxy.py (Sending the Message from the Server)

This file is crucial for smooth data exchange between the client and server. Its primary function is to serialize messages originating from the server and deserialize the responses sent by the clients. Listing 26 shows the implementation for requesting vector ‘b’.

Listing 26. The client-side implementation for requesting vector ‘b’ in a specific weight format from a server.

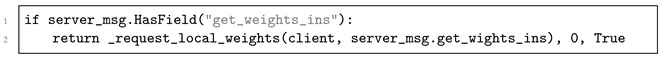

3.4.4. message_handler.py (Receiving Message by Client)

This file manages the processing of incoming messages. Its functions extract the message payload from the server, convert it into a usable format, and direct it to the appropriate function within your client. The results are then converted back into a transmittable form and sent to the server. Listings 27 and 28 show the required additions.

Within the handle function:

Listing 27. Conditional statement that checks if the received ‘server_msg’ contains a ‘get_weights_ins’ field.

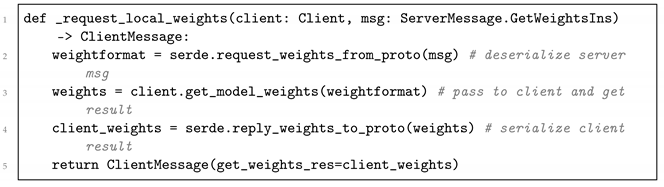

Add a new function:

Listing 28. Client Request for Local Weights with Serialization and Deserialization.

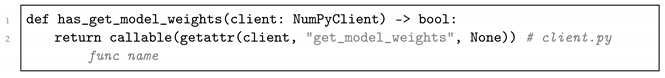

3.4.5. numpy_client.py

This file defines how the predefined NumPyClient interacts with the server. If your client.py subclasses NumPyClient, you must add new methods here for sending and receiving custom messages, as shown in Listing 29.

Listing 29. Function for requesting and retrieving local model weights from the client based on the provided ‘weightformat’ received from the server.

3.4.6. app.py

Here, you implement the functionality for handling your custom messages. If your message were a request to perform some computation, you would write the function that performs this computation here. Listing 30 shows the required imports.

Listing 30. Imports.

As shown in Listing 31, this function is defined above the _wrap_numpy_client function.

Listing 31. Wrapper function that retrieves model weights from a client using the specified ‘weightformat’.

Listing 32 shows how the wrapper method is added inside the _wrap_numpy_client function.

Listing 32. If the ‘numpyclient’ has a ‘get_model_weights’ method, the ‘_get_model_weights’ function is assigned to the ‘member_dict’ dictionary with the key ‘get_model_weights’.