Abstract

3D least-squares matching is an algorithm that allows to measure subvoxel-precise displacements between two data sets of computed tomography voxel data. The determination of precise displacement vector fields is an important tool for deformation analyses in in-situ X-ray micro-tomography time series. The goal of the work presented in this publication is the development and validation of an optimized algorithm for 3D least-squares matching saving computation time and memory. 3D least-squares matching is a gradient-based method to determine geometric (and optionally also radiometric) transformation parameters between consecutive cuboids in voxel data. These parameters are obtained by an iterative Gauss-Markov process. Herein, the most crucial point concerning computation time is the calculation of the normal equations using matrix multiplications. In the paper at hand, a direct normal equation computation approach is proposed, minimizing the number of computation steps. A theoretical comparison shows, that the number of multiplications is reduced by 28% and the number of additions by 17%. In a practical test, the computation time of the 3D least-squares matching algorithm was proven to be reduced by 27%.

1. Introduction

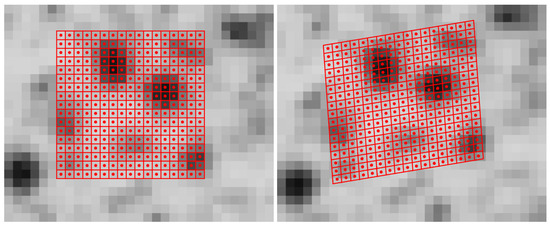

Volume data analysis in a voxel space representation is a logical extension of 2D image data processing. In the field of Photogrammetry and Computer Vision, image correlation techniques are often used for fine measurement of corresponding points in image sequences or stereo image pairs. Early contributions applied cross-correlation to image data [1]. In the early 80’s, gradient based algorithms were developed using an iterative least squares method [2] that also offers subpixel precision. Lucas and Kanade [2] only introduced two translation parameters, whereas Ackermann [3] and Grün [4] extended the model toward an affine and radiometric transformation, which also considers rotation, scaling and shear to obtain a better adaption for perspective distortions. As an example for the least-squares matching (LSM) algorithm, an image section of a textured concrete surface with two views is illustrated in Figure 1. Figure 1a shows a section of the reference image with the patch (subimage) consisting of the pixels included in the computation. The pixels are illustrated as red squares with red circles in the centers. In Figure 1b, an image section of the second view as well as the transformed patch (red squares with red circles) are depicted. In this example, the transformation includes translation, rotation, scale and shear and the corresponding parameters are obtained by the LSM algorithm. The most important parameters are the shifts, which typically show an accuracy of 0.02 to 0.05 pixels in this kind of application.

Figure 1.

Least-squares matching example: (a) reference image with the red colored patch; (b) second (warped) image with the red colored transformed patch.

It is also possible to use more complex geometric models for the perspective transformation [5]. Grün and Baltsavias [6] introduced geometrically constrained multiphoto matching, where the solution can inherently be forced to the epipolar line. Other contributions combined digital image matching and object surface reconstruction. In addition to a geometric 3D model of the surface, they used a radiometric model for the surface and the images. The unknowns of the surface densities, 3D points and image orientation parameters were computed in an iterative least-squares adjustment [7,8].

Maas et al. [9] transferred the idea from the 2D image matching to 3D voxel data (digital volume correlation DVC). They applied the subvoxel-precise cuboid tracking (3D least-squares matching) with 12 parameters of a 3D affine transformation to a sequence of multi-temporal voxel representations of mixing fluids. Bay et al. [10] extended the cross-correlation technique to 3D, combined it with a subvoxel-precise refinement and applied it to X-ray tomography data for strain analysis. Liebold et al. [11] also introduced a radiometric transformation to 3D cuboid matching.

In the last years, also free DVC code was provided. For instance, TomoWarp2 ensures the computation of 3D displacement fields using 3D cross-correlation [12]. They also implemented a further step to calculate subvoxel positions considering the maximum cross-correlation integer position and the 26 neighbor voxel around. The free software package SPAM is based on TomoWarp2 [13] and also provides a tool for the coarse registration that can consider a rotation between the reference and the deformed volume. In ALDVC [14], a Fast Fourier Transform (FFT) is applied for the estimation of initial shift values, followed by an algorithm including a 12-parameter affine transformation similar to 3D least-squares matching for computing subvoxel positions.

The least-squares matching algorithm contains an adjustment procedure where normal equations are computed. To speed up the process, Sutton et al. [15] showed a direct computation of the normal equations for 2D least-squares matching including affine parameters, and Jin et al. [16] also included radiometric parameters.

The publication at hand is based on the work of Maas et al. [9] and Liebold et al. [11]. It presents an optimized algorithm for the 3D LSM, likewise to what has been shown for 2D [15,16]. The central goal of the work is the acceleration of the 3D algorithm in order to save computation time and memory, without restrictions to the universality of the algorithm. The paper is structured as follows: In the next section, an overview of the computation of 3D least-squares matching is given including the optimization. In addition, a comparison to the standard method is shown. The publication closes with a conclusion.

2. 3D Least-Squares Matching

2.1. Mathematical Model

In the following, some formulae of Liebold et al. [11] are reused and extended. 3D least-squares matching is a gradient-based method and is applied to two voxel data sets. In the reference volume, a small cuboid is defined containing the voxel of the cuboid’s center (point to be matched) and its neighborhood. The dimensions , and are odd numbers, and a typical cuboid size is for example voxel. The aim is to find the corresponding cuboid in the second volume data set. The coordinates of the center of the cuboid in the reference state are integer values, whereas the coordinates of the voxel in the second state are floating-point values.

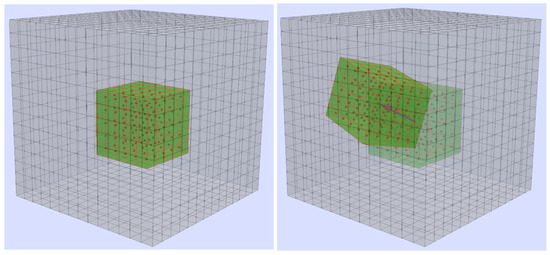

Similar to Figure 1 for 2D, Figure 2 illustrates the 3D case with a voxel grid (gray). In the reference state, a subvolume consisting of e.g., voxel (green cuboid with red edges and red center points, Figure 2a). In the second state, depicted in Figure 2b, the affine-transformed cuboid as well as the faded reference subvolume are shown. The shift vector that connects the center points of the subvolumes is colored in magenta. 3D LSM is used to determine the transformation parameters, including the three translations.

Figure 2.

(a) Reference voxel grid with the subvolume including the voxel (green with red edges and red center points) that are used for the computation. (b) Second volume with the transformed subvolume. The shift vector is colored in magenta and the reference subvolume is faded.

The range of the cuboid in the reference volume is:

where ; ;

Equation (2) shows the relationship between the gray values of the first (reference) and second (deformed) volume, also taking into account radiometric parameters (). This relationship is valid for each voxel of the cuboid.

where

Between the reference and the deformed state, a coordinate transformation is performed. Similar to [3] in 2D and [9] in 3D, an affine transformation is used as mathematical model containing displacements, rotations, scaling and shear, see Equation (3), where and are the translation parameters.

where

The reduced reference coordinates are computed as follows:

Thus, the vector of unknowns is:

The parameter vector consists of 14 unknowns, and a cuboid with an exemplary size of voxel (observations) leads to an over-determination. Thus, the parameters of are computed in a least-squares adjustment process. Therefore, residuals are added to Equation (2) that results in the observation equations:

where = residuum

The first terms of the Taylor series expansion are used to linearize the observation equation, see Equation (7) where initial values of the parameters are included. They are marked with an additional zero subscript. Section 2.2 shows a way to obtain initial values for 3D LSM.

where

The volume gradients of the gray values are written with their short forms in Equation (7). The full notation is:

There are different ways to obtain these gradients: One possibility is the computation of central differences (numerical differentiation, Equation (9)) at integer positions () combined with a tri-linear interpolation in order to calculate the derivatives at the floating point values of and .

where

Another approach is the use of tri-cubic spline interpolation to compute the derivatives [17] (derivatives of cubic polynomials). And a further possibility is the calculation of the derivatives in the reference volume as an approximation. The advantage of the last point is once-only computation in the iterative least-squares process.

Thus, the differentials , and are:

where

The vector of corrections to the unknowns is:

The vector of unknowns results from the sum of the vector of the initial values and the vector of the corrections to the unknowns :

The linearized observation equations can be written in matrix notation:

where

- A = Jacobian matrix

- l = reduced observation vector

- v = residual vector

The Jacobian matrix is built up as follows:

where

The reduced observation vector is:

In the Gauss-Markov model, the weighted sum of the squared residuals is minimized:

where = matrix of weights

In the following, only the case of equally weighted observations (, : identity matrix) is considered, see Equation (21).

The solution of this problem can be obtained using the normal equations:

As the computation of the normal matrix and the right hand side are done directly, it is not necessary to build up and store the whole Jacobian matrix and the reduced observation vector . The detailed computation process is shown in Section 2.3.

After setting up the matrices and , Equation (22) is solved for and the initial values of the unknowns are updated:

The steps of the interpolation of the gray values and their derivatives as well as the computation of and , the determination of and the update of the unknowns are repeated until the process converges.

After the iterative adjustment routine, the residuals are determined by rearranging Equation (6). The gray values are obtained by applying Equation (3) with the updated affine parameters and tri-linear (or tri-cubic) interpolation. The individual residual is:

where = individual residual

The standard deviation of the unit weight is:

where

- s0 = standard deviation of the unit weight

- n = number of voxel of the cuboid

- n = number of unknowns

2.2. Initial Values

The model in Equation (2) is not linear and if the movements between the volume data sets may exceed the dimensions of the cuboid, initial values have to be obtained for the 3D LSM algorithm.

Often, mainly translations and only small rotations occur between different epochs of measurements. In these cases, 3D cross-correlation can be used to obtain initial shifts [10]. An overview of the optimized algorithm can be found in Appendix A.

2.3. Direct Computation of the Normal Equations

As mentioned above, the normal matrix () and the right hand side vector () are computed directly, so that building up the Jacobian matrix as well as the reduced observation vector and the matrix multiplications can be avoided in order to gain efficiency and save memory. To compute the terms of the normal equations, the dot products of the columns of the Jacobian matrix (Equation (14)) as well as the dot products with the reduced observation vector are calculated (each combination of columns). Equation (26) shows the normal matrix that is organized in submatrices. , , , , , and as well as the whole normal matrix are symmetric matrices and only the upper triangular matrices are shown. In Equation (28), the right hand side of the normal equations is depicted.

where

In fact, the geometric and radiometric parameters are usually independent on each other and are therefore often solved independently in LSM. If only the affine parameters are unknowns and the radiometric parameters are excluded from the model, the last two columns and last two rows of as well as the last two values of are omitted. Appendix B and Appendix C consider the two cases concerning this point.

For each voxel, the following terms are computed and saved in temporal variables because they are used several times:

2.4. Computational Effort

The computational costs are compared between the direct computation and the standard way that includes building up and as well as the matrix multiplications ( and ). The detailed determination of the number of multiplication steps and addition steps is shown in the following.

2.4.1. Computational Costs of the Direct Method

A total of 100 terms have to be computed to form the normal equations directly, see Equation (32). This results from 60 terms of the matrices , , , , , and 26 terms of the matrices , , , as well as 14 further terms of . 26 terms (sums) of are already computed in , , and .

To evaluate the computational effort, the number of additions and multiplications are counted:

Altogether, for the direct computation of and , 95 multiplications and 100 additions per voxel are required. The additional multiplication and summations for building up and can be neglected due to the large number of voxel.

2.4.2. Computational Costs of the Standard Method

The computational effort of the standard method contains building up the Jacobian matrix and the reduced observation vector as well as the matrix-matrix multiplication and the matrix-vector multiplication.

The following terms are calculated and saved in temporal variables for each line of the matrix (for each voxel):

For the each line of matrix , the following nine terms have to be computed:

For each element of , there are one multiplication and two additions (subtractions):

- Multiplications per voxel:

- −

- One multiplication for building up the reduced observation vector .

- −

- Three multiplications for the terms of Equation (33) that are computed temporally due to multiple use for building up the Jacobian matrix.

- −

- Nine further multiplications for building up the Jacobian matrix of Equation (34).

- −

- 105 multiplications (105 elements) due to the matrix multiplication of (only upper triangular matrix).

- −

- 14 multiplications (14 elements) due to the matrix-vector multiplication of .

- Additions per voxel:

- −

- Two additions for building up the reduced observation vector .

- −

- 105 additions (105 elements) due to the matrix multiplication of (only upper triangular matrix).

- −

- 14 additions (14 elements) due to the matrix-vector multiplication of .

Altogether, for building up and and for the matrix multiplications and , 132 multiplications and 121 additions per voxel are required.

2.4.3. Theoretical Comparison

Table 1 summarizes the consideration and shows the number of multiplications and additions per voxel for the standard method and the direct computation of the normal equations. The direct method reduces the number of multiplications by 28% and reduces the number of additions by 17%. Appendix B and Appendix C also consider the cases that the radiometric parameters are fixed and that these parameters are omitted. In addition to the reduced number of multiplications and summations, the direct method also save memory because the computation of the Jacobian matrix () and observation vector () is avoided.

Table 1.

Comparison of the computational effort between the direct calculation of the normal equations and the standard method.

2.4.4. Comparison of Time Measurements in Practise

The comparison was done on a desktop machine with a AMD Ryzen 7 3800X 8-Cores (16 threads) processor at 3.9 GHz. In the test, 3D LSM was applied to 146,933 cuboids defined in the first two data sets of an in-situ tension test of Lorenzoni et al. [18]. The patch dimension was set to vx. The algorithm is implemented in C++ (using the GNU Compiler Collection, https://gcc.gnu.org) and compiled with the optimization option -O3. The loop over the matching points with the computation of the 3D LSM is parallelized with the OpenMP library (16 parallel units), and the time measurement only considers this loop. The time measurement includes the whole 3D LSM process so that not only the calculation of the normal matrix and the right hand side vector is analyzed. However, the computation of the normal equations is one of the most time-consuming steps herein.

Different calculation modes are tested: Method M1 stands for the direct computation. M2 represents the standard computation with building up the Jacobian matrix and the reduced observation vector as well as the matrix multiplications (only upper triangular matrix of the normal matrix) with an own implementation. M3 and M4 are similar to M2, but the matrix multiplications are done using the Eigen library. Eigen is a C++ template library for linear algebra (release 3.4.0, https://eigen.tuxfamily.org/). For M3, the full matrix of is computed, and for M4, only the upper triangular matrix is computed. For all modes, before the calculation of the normal matrix begins, the values of the interpolated gray values and derivatives are computed and saved in separate arrays. All modes lead to the same numerical results, only the calculation times differ. Table 2 shows the calculation times for the different modes. The direct method is the fastest variant and almost twice as fast as the own standard computation M2. Compared to the best matrix multiplication variant M4 using Eigen (only upper triangular matrix), the direct method reduces the computation time by 27%. This reduction approximately agrees with the theoretical decrease of the multiplications of 28% (Section 2.4.3). The computation time difference between M3 and M4 is very small (only 9% decrease), although significantly more matrix elements (91) have to be computed (M3: 132 multiplications and 121 addition per voxel, M4: 223 multiplications and 212 addition per voxel).

Table 2.

Comparison of the computation times with different modes for the calculation of the normal equations.

3. Conclusions

The paper presents an optimized algorithm to compute 3D least-squares matching in voxel data sequences including geometric and radiometric parameters. Compared to the standard method of the estimation of the normal equations, the number of multiplications is reduced by 28% and the number of additions is reduced by 17%. In a practical test, the computation time was decreased by 27%.

Author Contributions

Conceptualization, writing—review and editing, F.L. and H.-G.M.; methodology, writing—original draft preparation, visualization F.L.; supervision, funding acquisition, project administration, H.-G.M. All authors have read and agreed to the published version of the manuscript.

Funding

The research work presented in the publication has been funded by the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation–SFB/TRR 280, Projekt-ID: 417002380).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

Furthermore, we thank the Institute of Construction Materials at Technische Universität Dresden, the Department of Chemical and Materials Engineering at the Pontificía Universidade Católica do Rio de Janiero and the Bundesanstalt für Materialforschung und-prüfung in Berlin for providing the data sets.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| LSM | least-squares matching |

| vx | voxel |

| DVC | digital volume correlation |

| SHCC | strain-hardening cement-based composites |

| FFT | Fast Fourier Transform |

Appendix A. Optimized 3D Cross-Correlation

In the following, an optimized method of the 3D cross-correlation is presented. In our approach, the normalized cross-correlation coefficient is used, see Equation (A2). t is the template, the subvolume of the reference volume, to be matched. The number of voxel of the template is

where

There are optimized methods to compute the transformed terms using two approaches:

- The values of are computed for the whole search area using the 3D Fast Fourier transform as also done by Lewis [19] for 2D and Yang et al. [14] for 3D: where are the complex conjugate values (∘: Hadamard product, element-wise multiplication). is a volume data set with the same size as the search volume containing t and is filled up with zeros for indices exceeding the dimensions of t.

- The values of and are computed using the integral volume method [20].

The terms and are computed straight forward. The position of the maximum coefficient can be used as initial position for the 3D LSM.

Appendix B. 3D Least-Squares Matching with Fixed Radiometric Parameters

This section considers the case that the radiometric parameters are fixed and shows the most important changes.

Appendix B.1. Mathematical Model

The relationship between the gray values of the reference and the second volume stays the same as before, see Equation (2).

The vector of unknowns reduces to the affine parameters:

In the case of fixed radiometric parameters, the last two columns of the Jacobian matrix are omitted compared to Equation (14) (for and see Equations (15) to (17)):

The reduced observation vector does not change:

Appendix B.2. Computational Effort

Appendix B.2.1. Direct Method

As above, for each voxel, the following terms are computed and saved in temporal variables because they are used several times:

60 terms of the matrices , , , , and (Equation (30)) and 12 terms of are computed. To evaluate the computational effort of the direct method, the number of additions and multiplications are counted. Only the operations per voxel are considered, the other operations can be neglected.

- Multiplications per voxel:

- −

- Ten multiplications for the temporally terms of Equation (A12).

- −

- 60 multiplications in the matrices.

- −

- 12 multiplications in the sums of .

- Additions per voxel:

- −

- Two additions (subtractions) in .

- −

- 60 additions in the 60 sums of the matrices.

- −

- 12 additions in the 12 sums of .

All in all, 82 multiplications and 74 additions have to be computed per voxel for the direct method.

Appendix B.2.2. Standard Method

For the standard method, building up and as well as the matrix multiplications are considered:

- Multiplications per voxel:

- −

- One multiplication for building up the reduced observation vector .

- −

- Three multiplications for the terms of Equation (33) that are computed temporally due to multiple use for building up the Jacobian matrix.

- −

- Nine further multiplications for building up the Jacobian matrix of Equation (34).

- −

- 78 multiplications (78 elements) due to the matrix multiplication of (only upper triangular matrix).

- −

- 12 multiplications (12 elements) due to the matrix-vector multiplication of .

- Additions per voxel:

- −

- Two additions (subtractions) for building up the reduced observation vector .

- −

- 78 additions (78 elements) due to the matrix multiplication of (only upper triangular matrix).

- −

- 12 additions (12 elements) due to the matrix-vector multiplication of .

Thus, for the standard method, 103 multiplications and 92 additions have to be computed per voxel.

Appendix B.2.3. Comparison

Table A1 shows the number of computations per voxel for the direct and the standard method. Using the direct method, 20.4% less multiplications and 19.6% less additions are done.

Table A1.

Comparison of the computational effort between the direct computation of the normal equations and the standard method (affine mathematical model with fixed radiometric parameters).

Table A1.

Comparison of the computational effort between the direct computation of the normal equations and the standard method (affine mathematical model with fixed radiometric parameters).

| Method | Multiplications per vx | Additions per vx |

|---|---|---|

| Standard | 103 | 92 |

| Direct | 82 | 74 |

Appendix C. 3D Least-Squares Matching with 12-Parameter Affine Transformation

In this section, only the affine transformation with 12 parameters is used as mathematical model.

Appendix C.1. Mathematical Model

The relationship between the gray values of the reference and the second volume is reduced to:

The vector of unknowns is the same as in the case of Appendix B:

In the Jacobian matrix , the parameter is omitted and also the reduced observation vector differs:

with the submatrices:

The right hand side of the normal equations simplifies to:

where

Appendix C.2. Computational Effort

Appendix C.2.1. Direct Method

Similar to the case above, for each voxel, the following terms are computed and saved in temporal variables because they are used several times:

60 terms of the matrices , , , , and (Equation (30)) and 12 terms of are computed. To evaluate the computational effort of the direct method, the number of additions and multiplications are counted. Only the operations per voxel are considered, the other operations can be neglected.

- Multiplications per voxel:

- −

- Nine multiplications for the temporally terms of Equation (A22).

- −

- 60 multiplications in the matrices.

- −

- 12 multiplications in the sums of .

- Additions per voxel:

- −

- One addition (subtraction) in .

- −

- 60 additions in the 60 sums of the matrices.

- −

- 12 additions in the 12 sums of .

All in all, 81 multiplications and 73 additions have to be computed per voxel for the direct method.

Appendix C.2.2. Standard Method

For the standard method, the buildup of and as well as the matrix multiplications are considered:

- Multiplications per voxel:

- −

- Nine multiplications for building up the Jacobian matrix of Equation (A15).

- −

- 78 multiplications (78 elements) due to the matrix multiplication of (only upper triangular matrix).

- −

- 12 multiplications (12 elements) due to the matrix-vector multiplication of .

- Additions per voxel:

- −

- One addition (subtraction) for building up the reduced observation vector .

- −

- 78 additions (78 elements) due to the matrix multiplication of (only upper triangular matrix).

- −

- 12 additions (12 elements) due to the matrix-vector multiplication of .

Thus, for the standard method, 99 multiplications and 91 additions have to be computed per voxel.

Appendix C.2.3. Comparison

Table A2 shows the number of computations per voxel for the direct and the standard method. Using the direct method, 18.2% less multiplications and 19.8% less additions are done.

Table A2.

Comparison of the computational effort between the direct computation of the normal equations and the standard method (affine mathematical model without radiometric parameters).

Table A2.

Comparison of the computational effort between the direct computation of the normal equations and the standard method (affine mathematical model without radiometric parameters).

| Method | Multiplications per vx | Additions per vx |

|---|---|---|

| Standard | 99 | 91 |

| Direct | 81 | 73 |

References

- Barnea, D.I.; Silverman, H.F. A Class of Algorithms for Fast Digital Image Registration. IEEE Trans. Comput. 1972, C-21, 179–186. [Google Scholar] [CrossRef]

- Lucas, B.D.; Kanade, T. An Iterative Image Registration Technique with an Application to Stereo Vision. In Proceedings of the 7th International Joint Conference on Artificial Intelligence, Vancouver, BC, Canada, 24–28 August 1981; Volume 2, pp. 675–679. [Google Scholar] [CrossRef]

- Ackermann, F. Digital Image Correlation: Performance and Potential Application in Photogrammetry. Photogramm. Rec. 1984, 11, 429–439. [Google Scholar] [CrossRef]

- Grün, A. Adaptive least squares correlation: A powerful image matching technique. S. Afr. J. Photogramm. Remote Sens. Cartogr. 1985, 14, 175–187. [Google Scholar]

- Bethmann, F.; Luhmann, T. Least-squares matching with advanced geometric transformation models. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2010, 38 Pt 5, 86–91. [Google Scholar]

- Grün, A.; Baltsavias, E.P. Geometrically constrained multiphoto matching. Photogramm. Eng. Remote Sens. 1988, 54, 633–641. [Google Scholar]

- Wrobel, B.P. Digital image matching by facets using object space models. In Advances in Image Processing; Oosterlinck, A.J., Tescher, A.G., Eds.; International Society for Optics and Photonics (SPIE): Bellingham, WA, USA, 1987; Volume 804, pp. 325–335. [Google Scholar] [CrossRef]

- Ebner, H.; Heipke, C. Integration of digital image matching and object surface reconstruction. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 1988, XXVII, 534–545. [Google Scholar]

- Maas, H.G.; Stefanidis, A.; Grün, A. From pixels to voxels: Tracking volume elements in sequences of 3D digital images. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 1994, 2357, 539–546. [Google Scholar] [CrossRef]

- Bay, B.K.; Smith, T.S.; Fyhrie, D.P.; Saad, M. Digital Volume Correlation: Three-dimensional Strain Mapping Using X-ray Tomography. Exp. Mech. 1999, 39, 217–226. [Google Scholar] [CrossRef]

- Liebold, F.; Lorenzoni, R.; Curosu, I.; Léonard, F.; Mechtcherine, V.; Paciornik, S.; Maas, H.G. 3D least squares matching applied to micro-tomography data. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2021, 43, 533–539. [Google Scholar] [CrossRef]

- Tudisco, E.; Andò, E.; Cailletaud, R.; Hall, S.A. TomoWarp2: A local digital volume correlation code. SoftwareX 2017, 6, 267–270. [Google Scholar] [CrossRef]

- Stamati, O.; Andò, E.; Roubin, E.; Cailletaud, R.; Wiebicke, M.; Pinzon, G.; Couture, C.; Hurley, R.C.; Caulk, R.; Caillerie, D.; et al. spam: Software for Practical Analysis of Materials. J. Open Source Softw. 2020, 5, 2286. [Google Scholar] [CrossRef]

- Yang, J.; Hazlett, L.; Landauer, A.K.; Franck, C. Augmented Lagrangian Digital Volume Correlation (ALDVC). Exp. Mech. 2020, 60, 1205–1223. [Google Scholar] [CrossRef]

- Sutton, M.A.; Orteu, J.J.; Schreier, H. Image Correlation for Shape, Motion and Deformation Measurements: Basic Concepts, Theory and Applications, 1st ed.; Springer: Berlin/Heidelberg, Germany, 2009. [Google Scholar] [CrossRef]

- Jin, H.; Favaro, P.; Soatto, S. Real-Time Feature Tracking and Outlier Rejection with Changes in Illumination. In Proceedings of the Eighth IEEE International Conference on Computer Vision (ICCV), Vancouver, BC, Canada, 7–14 July 2001; Volume 1, pp. 684–689. [Google Scholar] [CrossRef]

- Keys, R. Cubic Convolution Interpolation for Digital Image Processing. IEEE Trans. Acoust. Speech Signal Process. 1981, 29, 1153–1160. [Google Scholar] [CrossRef] [Green Version]

- Lorenzoni, R.; Curosu, I.; Léonard, F.; Paciornik, S.; Mechtcherine, V.; Silva, F.A.; Bruno, G. Combined mechanical and 3D-microstructural analysis of strain-hardening cement-based composites (SHCC) by in-situ X-ray microtomography. Cem. Concr. Res. 2020, 136, 106139. [Google Scholar] [CrossRef]

- Lewis, J.P. Fast Template Matching. In Proceedings of the Vision Interface Conference, Quebec City, QC, Canada, 15–19 May 1995; Volume 95, pp. 120–123. [Google Scholar]

- Urschler, M.; Bornik, A.; Donoser, M. Memory Efficient 3D Integral Volumes. In Proceedings of the 2013 IEEE International Conference on Computer Vision Workshops, Sydney, Australia, 2–8 December 2013; pp. 722–729. [Google Scholar] [CrossRef] [Green Version]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).