Abstract

This paper presents an enhanced crayfish optimization algorithm (ECOA) that incorporates four improvement strategies. First, the Halton sequence is used to improve the population initialization of the crayfish optimization algorithm (COA). Second, a quasi opposition-based learning (QOBL) strategy is introduced to generate quasi-opposite solutions of the population, increasing the algorithm’s search ability. Third, an elite factor guides the predation stage to avoid blind search in this stage. Finally, the fish aggregating devices (FADs) effect is introduced to strengthen the algorithm’s ability to jump out of local optima. The ECOA was tested on the widely used IEEE CEC2019 test function set to verify its validity. The experimental results show that, compared with other popular algorithms, the ECOA converges faster, performs more stably, and escapes local optima more readily. The ECOA was also applied to two real-world engineering optimization problems, which verified its ability to solve practical optimization problems and its superiority over other algorithms.

1. Introduction

Engineering problems have become increasingly complex [1] with the rapid development of engineering technology. As a result, more and more problems need to be optimized, and optimization has become increasingly important [2]. Traditional optimization algorithms, such as linear programming [3], gradient descent [4], and the simplex method [5], were often used to solve these problems in the past. Although they perform well on simple problems, their limitations have become more apparent as engineering challenges and data processing requirements have grown: traditional algorithms struggle with high-dimensional, nonlinear, or discrete optimization problems. Therefore, swarm intelligence optimization algorithms [6] were developed, which simulate the behavior of biological groups in nature and seek the optimal solution through cooperation and information exchange between individuals [7]. Compared with traditional algorithms, swarm intelligence algorithms have stronger global optimization ability, robustness, and adaptability, and can handle a variety of complex optimization tasks. For such complex problems, a swarm intelligence optimization algorithm can help find the optimal or a near-optimal solution [8], improving both solving efficiency and solution quality.

Many swarm optimization algorithms have been proposed recently because of their simplicity and strong optimization capabilities [9]. These algorithms include the ant colony optimization algorithm [10] (ACO), inspired by the foraging behavior of ants in nature; the particle swarm optimization algorithm [11] (PSO), which simulates bird foraging behavior; and the whale optimization algorithm [12] (WOA), which simulates the unique search methods and trapping mechanisms of humpback whales, among others. Although these standard swarm algorithms have been successful on many problems, they still face challenges in some cases [13]. Scholars have proposed several improved versions to address these challenges and improve the performance of swarm intelligence algorithms. For instance, Hadi Moazen et al. [14] identified the shortcomings of the PSO and developed an improved algorithm, PSO-ELPM, with elite learning, enhanced parameter updating, and exponential mutation operators. This algorithm balances the exploration and exploitation capabilities of the PSO and achieves higher accuracy at an acceptable time complexity, as demonstrated on the CEC 2017 test set. Similarly, Ya Shen et al. [15] proposed the MEWOA algorithm, which divides the whale individuals into three subpopulations and adopts different update methods and evolutionary strategies for each. This algorithm has been applied to global optimization and engineering design problems, and its effectiveness and competitiveness have been verified. Furthermore, Fang Zhu et al. [16] found that the dung beetle optimization algorithm (DBO) was prone to falling into local optima in the late optimization period. To address this issue, they proposed QHDBO, a dung beetle search algorithm based on quantum computing and a multi-strategy mixture. Experiments verified the effectiveness of the strategies, which significantly improved the convergence speed and optimization accuracy of the DBO.
These proposed and improved algorithms have significantly promoted the development of swarm intelligence and improved its performance, allowing it to be applied to optimization problems in various fields, such as workshop scheduling [17], microgrid scheduling [18], vehicle path planning [19], engineering design [20], and wireless sensor layout [21].

The crayfish optimization algorithm [22] (COA) was proposed in September 2023 as a new swarm intelligence optimization algorithm inspired by the summer resort (heat avoidance), competition, and predation behavior of crayfish. It is highly competitive in global optimization and engineering optimization. However, experiments have found that its convergence can be slow on some problems and that it can fall into local optima. This paper presents four strategies to address these issues and comprehensively improve the optimization performance of the COA. The main contributions of this paper are as follows:

- (1) An enhanced crayfish optimization algorithm (ECOA) is proposed by mixing four improvement strategies. The Halton sequence is introduced to improve the population initialization of the COA, which makes the initial distribution of crayfish more uniform and increases both the diversity of the initial population and the early convergence rate of the COA. Before the crayfish begin the summer resort, competition, and predation stages, quasi opposition-based learning (QOBL) is applied to the COA population, which enlarges the search range of the population and improves the quality of candidate solutions, accelerating convergence. When the food size is appropriate, crayfish ingest the food directly; because this process is somewhat blind, an elite factor is introduced to guide the crayfish. Finally, the fish aggregating devices (FADs) effect of the marine predator algorithm (MPA) is introduced into the predation phase to enhance the ability of the COA to jump out of local optima.

- (2) The proposed ECOA is tested on the widely used IEEE CEC2019 test function set and compared with four standard swarm intelligence algorithms, four improved swarm intelligence algorithms, and the original COA. Five experiments were carried out: numerical experiments, iterative curve analysis, box plot analysis, the Wilcoxon rank sum test, and ablation experiments. The experimental results show that the proposed ECOA is competitive: compared with similar algorithms, it has faster convergence speed, higher convergence accuracy, and a stronger ability to jump out of local optima.

- (3) The ECOA is applied to two practical optimization problems, the three-bar truss design and the pressure vessel design, and compared with other algorithms. The ECOA shows higher convergence accuracy, faster convergence speed, and higher stability than the other algorithms.

The rest of this paper is arranged as follows: Section 2 introduces the standard crayfish optimization algorithm (COA), Section 3 details the proposed enhanced crayfish optimization algorithm (ECOA), Section 4 tests the effectiveness of the ECOA and its superiority over other optimization algorithms through five experiments, Section 5 applies the ECOA to practical engineering optimization problems, and Section 6 presents the conclusions and future work.

2. The Crayfish Optimization Algorithm (COA)

The crayfish optimization algorithm (COA) is a novel swarm intelligence optimization algorithm inspired by the summer resort (heat avoidance), competition, and predation behavior of crayfish. Crayfish are arthropods of the shrimp family that live in various freshwater areas. Research has shown that crayfish behave differently at different ambient temperatures. In the mathematical model of the COA, the summer resort, competition, and predation behaviors are defined as three distinct stages, and the algorithm enters the different stages according to predefined temperature intervals. The summer resort stage is the exploration stage of the COA, and the competition and predation stages are its exploitation stages. The steps of the COA are described in detail below.

2.1. Population Initialization

The COA is a population-based algorithm that starts with population initialization to provide a suitable starting point for the subsequent optimization process. In the COA model, the location of each crayfish represents a candidate solution of a $dim$-dimensional problem, and the locations of the whole crayfish population constitute a group of candidate solutions $X$, as shown in Equation (1).

where $X$ is the location of the initial crayfish population, $N$ is the population size, $dim$ is the dimension of the problem, and $X_{i,j}$ is the initial location of the $i$-th crayfish in the $j$-th dimension, which is generated randomly in the search space of the problem, as shown in Equation (2).

where $lb_j$ is the lower bound of the $j$-th dimension of the problem variable in the search space, $ub_j$ is the upper bound, and $rand$ is a uniformly distributed random number in [0, 1].
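As an illustration of the random initialization described above, the following Python sketch draws each coordinate uniformly between its lower and upper bound. The function name `init_population` and the list-of-lists layout are illustrative assumptions, not part of the original COA description.

```python
import random


def init_population(n, lb, ub, rng=random):
    """Return n candidate solutions drawn uniformly inside the box [lb, ub].

    lb and ub are per-dimension bound lists of equal length (the problem
    dimension). rng is any object with a random() method returning [0, 1).
    """
    dim = len(lb)
    return [
        [lb[j] + (ub[j] - lb[j]) * rng.random() for j in range(dim)]
        for _ in range(n)
    ]


# Example: 5 crayfish in a 2-dimensional search space.
population = init_population(5, lb=[-10.0, 0.0], ub=[10.0, 1.0],
                             rng=random.Random(0))
```

Each row of `population` plays the role of one crayfish location, and the whole list corresponds to the candidate-solution group of Equation (1).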

2.2. Define Temperature and Crayfish Food Intake

Crayfish enter different stages at different ambient temperatures. When the temperature is above 30 °C, the crayfish enter the summer resort stage. Between 15 °C and 30 °C, crayfish show strong foraging behavior, with 25 °C being the optimal temperature. Their food intake is also affected by temperature and approximately follows a normal distribution as the temperature changes. In the COA, the temperature is defined by Equation (3).

where $temp$ is the ambient temperature. The food intake $p$ of crayfish is given by Equation (4).

where $\mu$ is the optimal temperature, and $\sigma$ and $C_1$ are used to control the food intake of crayfish at different ambient temperatures.
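The temperature and food intake definitions can be sketched in Python as follows. This is a minimal sketch under stated assumptions: the text only fixes the optimal temperature (25 °C) and the approximately normal shape of the intake curve, so the temperature range (a uniform draw from 20 °C to 35 °C) and the constants `SIGMA = 3` and `C1 = 0.2` are assumed here from the commonly cited COA formulation and should be checked against [22].

```python
import math
import random

MU = 25.0     # optimal temperature stated in the text (degrees Celsius)
SIGMA = 3.0   # assumed spread of the intake curve
C1 = 0.2      # assumed scaling constant of the intake curve


def ambient_temperature(rng=random):
    """Assumed form: a uniform draw from [20, 35) degrees Celsius."""
    return rng.random() * 15.0 + 20.0


def food_intake(temp, mu=MU, sigma=SIGMA, c1=C1):
    """Scaled normal density centered at the optimal temperature mu."""
    density = math.exp(-((temp - mu) ** 2) / (2.0 * sigma ** 2))
    return c1 * density / (math.sqrt(2.0 * math.pi) * sigma)
```

With these assumptions the intake is largest at 25 °C and decays symmetrically on both sides, and roughly one third of the temperature draws exceed 30 °C and trigger the summer resort or competition stage.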

2.3. Summer Resort Phase

When the temperature is higher than 30 °C, crayfish choose a cave in which to escape the heat; this is the summer resort stage of the COA. The cave $X_{shade}$ is defined in Equation (5).

where $X_G$ is the optimal position obtained by the algorithm so far, and $X_L$ is the optimal position of the current crayfish population.

Crayfish may compete for caves. If there are many crayfish and few caves, multiple crayfish will compete for the same cave to escape the heat; if caves are plentiful, no competition occurs. In the COA, a random number $rand$ between 0 and 1 determines whether competition occurs. When $rand < 0.5$, no other crayfish compete for the cave, and the crayfish enters the cave directly to escape the heat. This process is expressed in Equation (6).

where $t$ is the current iteration number, $X_{i,j}^{t}$ is the current position of the $i$-th crayfish in the $j$-th dimension, $t+1$ denotes the next iteration, $rand$ is a random number in [0, 1], and the value of $C_2$ decreases as the iterations increase, as expressed in Equation (7).

where $T$ is the maximum number of iterations of the algorithm.
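The heat-escape update can be sketched as follows. The concrete forms used here (the cave as the midpoint of the best-so-far and current-best positions, and a step coefficient decreasing linearly from 2 to 1 over the run) are assumptions based on the commonly cited COA formulation; the text only states that the cave depends on these two positions and that the coefficient decreases with the iteration count.

```python
import random


def cave_position(best_so_far, best_current):
    """Assumed cave definition: midpoint of the two best positions."""
    return [(g + l) / 2.0 for g, l in zip(best_so_far, best_current)]


def summer_resort_step(x, cave, t, t_max, rng=random):
    """Move one crayfish towards the cave.

    c2 is the assumed decreasing coefficient 2 - t / t_max; a fresh random
    number in [0, 1) is drawn for every dimension.
    """
    c2 = 2.0 - t / t_max
    return [xj + c2 * rng.random() * (cj - xj) for xj, cj in zip(x, cave)]


cave = cave_position([0.0, 2.0], [2.0, 4.0])
moved = summer_resort_step([0.0], [1.0], t=0, t_max=100, rng=random.Random(0))
```

Because the coefficient shrinks over time, early steps can overshoot the cave (exploration), while late steps stay closer to the segment between the crayfish and the cave.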

2.4. Competition Phase

When the temperature is higher than 30 °C and the random number $rand \geq 0.5$, multiple crayfish compete for a cave and the algorithm enters the competition stage. At this stage, the position of the crayfish is updated as shown in Equation (8).

where $z$ is the index of a random crayfish in the population, given by Equation (9).

where $rand$ is a random number in [0, 1], and $round(\cdot)$ is the rounding function.
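A minimal Python sketch of the competition update is given below. It assumes the commonly cited COA form, in which the new position is the current position minus that of a randomly chosen competitor plus the cave position; this form and the helper name `competition_step` are assumptions to be checked against [22]. The random index is computed with rounding, shifted to Python's 0-based indexing.

```python
import random


def competition_step(population, i, cave, rng=random):
    """Update crayfish i by competing with a randomly selected crayfish z."""
    n = len(population)
    z = round(rng.random() * (n - 1))  # 0-based random index via rounding
    rival = population[z]
    return [xj - rj + cj for xj, rj, cj in zip(population[i], rival, cave)]


# If all crayfish coincide, the competitor term cancels and the result is the cave.
same = [[1.0, 2.0], [1.0, 2.0], [1.0, 2.0]]
result = competition_step(same, 0, [5.0, 5.0], rng=random.Random(0))
```

The difference between the crayfish and its competitor widens the search around the cave, which is why this stage still contributes diversity even though it is centered on the best known positions.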

2.5. Predation Stage

When the temperature $temp \leq 30$ °C, the crayfish hunt for food: they move towards the food and eat it. The food location $X_{food}$ is defined in Equation (10).

Before ingesting food, the crayfish judge its size and feed accordingly. The food size $Q$ is defined in Equation (11). If the food is too large, the crayfish first tear it with their claws and then eat it alternately with their second and third walking legs.

where $C_3$ is the food factor, representing the maximum food size, with a constant value of 3; $fitness_i$ is the fitness value of the $i$-th crayfish, that is, its objective function value; and $fitness_{food}$ is the fitness value of the food location $X_{food}$. The crayfish judge the size of the food against the maximum food size. When $Q > (C_3 + 1)/2$, the food is too large, and the crayfish use their chelae (claws, the first pair of legs) to tear it, as expressed in Equation (12).

After that, the crayfish feed alternately with the second and third pairs of legs, a process simulated in the COA using sine and cosine functions, as shown in Equation (13).

where $p$ is the food intake and $rand$ is a random number in [0, 1].

When $Q \leq (C_3 + 1)/2$, the food size is appropriate and the crayfish can ingest it directly; the position update is shown in Equation (14).

where $rand$ is a random number in [0, 1].
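The two feeding branches can be sketched in Python as below. The food factor of 3 is stated in the text; the exact update formulas (shredding the food by a factor `exp(-1/Q)`, the cosine-minus-sine alternation, and the direct-ingestion rule) are assumptions taken from the commonly cited COA formulation, and `fit_food` is assumed to be nonzero.

```python
import math
import random

C3 = 3.0  # food factor stated in the text


def foraging_step(x, fit_x, food, fit_food, p, rng=random):
    """One predation-stage update of a single crayfish (assumed formulas).

    x, food: positions; fit_x, fit_food: their objective values;
    p: food intake at the current temperature.
    """
    q = C3 * rng.random() * (fit_x / fit_food)  # assumed food-size measure
    if q > (C3 + 1.0) / 2.0:
        # Food too large: shred it, then feed alternately (sine/cosine terms).
        shredded = [math.exp(-1.0 / q) * fj for fj in food]
        new_x = []
        for xj, fj in zip(x, shredded):
            angle = 2.0 * math.pi * rng.random()
            new_x.append(xj + fj * p * (math.cos(angle) - math.sin(angle)))
        return new_x
    # Food size appropriate: ingest directly.
    return [(xj - fj) * p + p * rng.random() * xj for xj, fj in zip(x, food)]


direct = foraging_step([1.0, 2.0], 0.0, [0.5, 0.5], 1.0, p=0.0)
```

The threshold `(C3 + 1) / 2` equals 2 for the stated food factor, which matches the `> 2` test that appears in the pseudo-code of the algorithm.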

3. The Enhanced Crayfish Optimization Algorithm (ECOA)

This section describes the proposed enhanced crayfish optimization algorithm (ECOA). Because the crayfish optimization algorithm (COA) suffers from slow convergence and a tendency to fall into local optima, this paper adopts four strategies to comprehensively enhance its optimization performance. First, the Halton sequence is used to improve the population initialization so that the initial population is more evenly distributed in the search space. Second, a quasi opposition-based learning strategy is introduced to generate quasi-opposite solutions of the population, and the next-generation population is selected by a greedy strategy, which enlarges the search space and enhances the diversity of the crayfish population. Third, the predation stage is improved by introducing an elite guiding factor to speed up optimization in this stage. Finally, after the predation stage, the vortex formation and fish aggregating devices (FADs) effect of the marine predator algorithm is introduced to strengthen the ability of the algorithm to jump out of local optima. The specific enhancement strategies are as follows.

3.1. Halton Sequence Population Initialization

Generally, population initialization in swarm intelligence optimization algorithms creates initial solutions that can converge to better solutions through continuous iteration [23]. This process forms the basis of the iteration, and the quality of the initial population directly affects the algorithm’s iteration speed and global optimization ability. In the standard COA, the crayfish population is generated randomly. Although this initialization method is simple and easy to implement, it may lead to an uneven distribution of crayfish individuals, so that the population does not cover the entire search space well, resulting in slow convergence or even premature convergence to a local optimum.

In contrast, the Halton sequence [24] is a low-discrepancy [25] numerical sequence whose points are evenly distributed throughout the search space. Unlike random sequences, which produce different points on every run, it is deterministic. This paper uses the Halton sequence to replace the original random initialization strategy. This replacement makes the initial population cover the whole search space more evenly, improves the diversity of the population, and speeds up the algorithm’s convergence. The initial location of each crayfish produced by Halton-based population initialization is given by Equation (15).

where the random number used in Equation (2) is replaced by a value based on the Halton sequence.
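A Halton-based initialization can be sketched in pure Python using the radical inverse function, with one prime base per dimension. This is a minimal sketch under assumptions: the helper names, the choice of the first primes as bases, and starting the sequence at index 1 are illustrative, and the paper's implementation may differ (for example by skipping or scrambling points).

```python
PRIMES = [2, 3, 5, 7, 11, 13, 17, 19, 23, 29]  # bases for up to 10 dimensions


def radical_inverse(index, base):
    """Mirror the base-`base` digits of `index` around the radix point."""
    result, fraction = 0.0, 1.0 / base
    while index > 0:
        result += (index % base) * fraction
        index //= base
        fraction /= base
    return result


def halton_population(n, lb, ub):
    """Scale the first n Halton points from the unit cube into [lb, ub]."""
    dim = len(lb)
    if dim > len(PRIMES):
        raise ValueError("extend PRIMES to cover the problem dimension")
    return [
        [lb[j] + (ub[j] - lb[j]) * radical_inverse(i, PRIMES[j])
         for j in range(dim)]
        for i in range(1, n + 1)
    ]


halton_pop = halton_population(4, [0.0, -1.0], [10.0, 1.0])
```

In base 2 the first values are 1/2, 1/4, 3/4, so successive points keep subdividing the largest remaining gaps, which is the even coverage that motivates replacing the random draw.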

3.2. Quasi Opposition-Based Learning

Opposition-based learning [26] (OBL) was first proposed by Tizhoosh in 2005 and has been widely used to improve swarm intelligence optimization algorithms in subsequent studies. The idea is to generate the opposite solution of each member of the current population, compare the current solution with its opposite, and retain the better of the two for the next-generation population. OBL assumes that the opposite of a current candidate solution may be closer to the optimal solution, so the process helps to enlarge the search range of the population and improve the quality of the candidate solutions. The position of the generated opposite solution is given by Equation (16).

where $lb$ is the lower bound of the problem variable in the search space, and $ub$ is the upper bound.

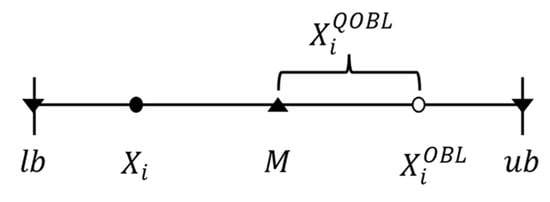

Although some OBL-based improvements show good performance, OBL also has limitations. It can improve the algorithm’s convergence speed in the early iterations, but the opposite solution generated in the late iterations may slow the algorithm down because of the excessive position change. Quasi opposition-based learning [27] (QOBL) is a more effective strategy that evolved from OBL. It moves the opposite solution towards the center $M$ of the search space instead of using the opposite value itself; the value of $M$ is given in Equation (17). Figure 1 shows the positions of the generated quasi-opposite and ordinary opposite solutions. It can be seen from the figure that the quasi-opposite solution generated by QOBL lies between the opposite solution and the center of the search space.

Figure 1. Schematic diagram of individual location of QOBL population.

The expression for the position of the quasi-opposite solution generated by QOBL is shown in Equation (18).

where r is the uniformly distributed random number belonging to [0, 1].
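The QOBL step and the greedy selection that follows it can be sketched as below. The sketch assumes the usual definitions: the opposite point is `lb + ub - x`, the center is `(lb + ub) / 2`, and the quasi-opposite point is sampled uniformly between the two. The function names and the minimization convention are illustrative assumptions.

```python
import random


def quasi_opposite(x, lb, ub, rng=random):
    """Sample a point between the interval center and the opposite of x."""
    result = []
    for xj, lo, hi in zip(x, lb, ub):
        opposite = lo + hi - xj       # ordinary opposite solution
        center = (lo + hi) / 2.0      # center M of the search interval
        a, b = min(center, opposite), max(center, opposite)
        result.append(a + (b - a) * rng.random())
    return result


def qobl_step(population, objective, lb, ub, rng=random):
    """Greedy selection: keep the better of each solution and its quasi-opposite."""
    next_population = []
    for x in population:
        candidate = quasi_opposite(x, lb, ub, rng)
        next_population.append(candidate if objective(candidate) < objective(x) else x)
    return next_population


def sphere(v):
    return sum(c * c for c in v)


q = quasi_opposite([8.0], [0.0], [10.0], random.Random(0))
kept = qobl_step([[8.0, -9.0]], sphere, [-10.0, -10.0], [10.0, 10.0], random.Random(1))
```

For `x = 8` on `[0, 10]` the opposite point is 2 and the center is 5, so the quasi-opposite point always falls in `[2, 5]`, a smaller jump than plain OBL would make.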

3.3. Elite Steering Factor

Equation (14) describes how crayfish ingest food directly when the food size is appropriate; this process is somewhat blind. Therefore, this paper introduces the elite factor [28] to guide the position update, as shown in Equation (19). Replacing Equation (14) with Equation (19) guides the algorithm to converge faster and improves the optimization accuracy to a certain extent. If a crayfish position updated by Equation (19) falls outside the search boundary, it is set to the boundary value.

where $X_L$ is the optimal position of the current crayfish population, that is, the elite.

3.4. Vortex Formation and Fish Aggregation Device Effect

The effect of fish aggregating devices (FADs) [29] was first modeled in the marine predator algorithm. Under the FADs effect, an individual of the population may make a long jump, which helps the algorithm escape local optima. In this paper, the FADs effect is added to the predation phase of the COA. If the fitness of a crayfish after the update of Equation (19) is not as good as its fitness in the previous generation, its position is perturbed according to the FADs effect, as expressed in Equation (20).

where $X_i^{t}$ is the current position of the crayfish, $X_i^{t+1}$ is its position in the next generation, the value of $FADs$ is 0.2, $U$ is a binary vector whose elements are 0 or 1, $r$ is a uniformly distributed random number in [0, 1], and $X_{r1}$ and $X_{r2}$ are the positions of two random individuals in the current population.
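The perturbation can be sketched in Python following the two-branch FADs rule of the marine predator algorithm. This is a sketch under assumptions: the branch structure, the way the binary vector is drawn (each entry is 1 with probability 0.2), and the scaling factor `cf` (adaptive in the marine predator algorithm, fixed to 1 by default here) are taken from that algorithm and may differ from Equation (20).

```python
import random


def fads_perturb(x, lb, ub, x_r1, x_r2, fads=0.2, cf=1.0, rng=random):
    """Perturb one position with the FADs effect (assumed two-branch form)."""
    r = rng.random()
    if r <= fads:
        # Long jump: add a random point of the search box, masked by a
        # binary vector whose entries are 1 with probability `fads`.
        new_x = []
        for xj, lo, hi in zip(x, lb, ub):
            jump = lo + rng.random() * (hi - lo)
            mask = 1 if rng.random() < fads else 0
            new_x.append(xj + cf * jump * mask)
        return new_x
    # Otherwise: step along the difference of two random individuals.
    step = fads * (1.0 - r) + r
    return [xj + step * (a - b) for xj, a, b in zip(x, x_r1, x_r2)]
```

Only crayfish whose fitness did not improve are perturbed, so the extra cost of this step stays small compared with the main position update. Positions produced by the long-jump branch may leave the search box and rely on the subsequent boundary processing.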

3.5. Pseudo-Code of the ECOA

The pseudo-code of the ECOA proposed in this paper is shown in Algorithm 1.

| Algorithm 1. The pseudo-code of the ECOA |
|---|
| Initialize population size $N$, number of iterations $T$, problem dimension $dim$ |
| for $i$ = 1:$N$ |
| for $j$ = 1:$dim$ |
| Generate the initial population individual position $X_{i,j}$ according to Equation (15) |
| end for |
| end for |
| Calculate the fitness value of the population to obtain the values of $X_G$ and $X_L$ |
| while $t$ < $T$ |
| Define the ambient temperature $temp$ through Equation (3) |
| for $i$ = 1:$N$ |
| Crayfish perform QOBL according to Equation (18) |
| end for |
| Retain the crayfish with better fitness for the next generation |
| if $temp$ > 30 |
| Define the cave location $X_{shade}$ according to Equation (5) |
| if $rand$ < 0.5 |
| Crayfish undergo the summer resort stage according to Equation (6) |
| else |
| Crayfish compete for caves according to Equation (8) |
| end if |
| else |
| Define food intake $p$ and food size $Q$ through Equations (4) and (11), respectively |
| if $Q$ > 2 |
| Crayfish shred food according to Equation (12) |
| Crayfish ingest food according to Equation (13) |
| else |
| Crayfish directly consume food according to Equation (19) |
| if the updated fitness is better than that of the previous generation |
| The position of the crayfish remains unchanged |
| else |
| Update the crayfish position with the FADs effect according to Equation (20) |
| end if |
| end if |
| end if |
| Perform boundary processing |
| Update the fitness values and the values of $X_G$ and $X_L$ |
| $t$ = $t$ + 1 |
| end while |

3.6. Analysis of Computational Time Complexity of the ECOA

In the standard crayfish optimization algorithm (COA), is the number of crayfish populations, is the number of problem variables, and is the maximum number of iterations in the algorithm. The time complexity of the standard crayfish optimization algorithm is . In the ECOA, the complexity of the introduced Halton sequence initialization is , which is the same as the original random initialization. The complexity of quasi opposition-based learning is also . The elite factor introduced during the predation phase is an improvement on the established steps of the original algorithm and will not increase the complexity of the original algorithm. Due to the fact that only a small number of crayfish undergo the effect, its time complexity is much smaller than . In summary, the overall complexity of the ECOA is less than . Therefore, the proposed ECOA does not increase much computational complexity and is on the same order of magnitude as the complexity of COA.

4. The ECOA Effectiveness Test Experiment

4.1. Experimental Scheme

In this section, simulation experiments are conducted on the IEEE CEC2019 [30] test function set to verify the optimization performance of the proposed enhanced crayfish optimization algorithm (ECOA). The name, dimension D, search range, and optimal value of each function in the IEEE CEC2019 set are shown in Table 1. The set contains 10 highly challenging single-objective minimization test functions.

Table 1. Information of IEEE CEC2019 test function set.

This paper compares the ECOA with nine swarm intelligence optimization algorithms. They include standard optimization algorithms: the particle swarm optimization algorithm (PSO), the Aquila Optimizer [31] (AO), the beluga whale optimization algorithm [32] (BWO), the golden jackal optimization algorithm [33] (GJO), and the crayfish optimization algorithm (COA); and recently proposed improved algorithms: the sine-cosine chaotic Harris hawks optimization algorithm [34] (CSCAHHO), the adaptive slime mould algorithm [35] (AOSMA), the mixed arithmetic-trigonometric optimization algorithm [36] (ATOA), and the adaptive grey wolf optimizer [37] (AGWO). The comparison set thus covers the most widely used algorithms, recently proposed algorithms, and four advanced improved algorithms. All of them have demonstrated strong optimization performance in previous research, so comparison with them better reflects the optimization ability of the proposed ECOA. The population size of all algorithms is set to 30, the maximum number of iterations is set to 2000, and the key parameters of each algorithm follow the settings of its original paper, as shown in Table 2.

Table 2. Important parameter settings of each algorithm.

All experiments were conducted on a computer with the Windows 10 operating system, an Intel(R) Core(TM) i7-7700HQ 2.80 GHz CPU, and 16 GB of memory. The simulation platform is Matlab R2022a. The experiments are divided into five parts: numerical experiment analysis, iterative curve analysis, box plot analysis, the Wilcoxon rank sum test, and ablation experiments. Together, these experiments systematically verify the effectiveness and superiority of the ECOA.

4.2. Numerical Experiment and Analysis

In this section, each algorithm is run 30 times on CEC2019 and the results are summarized using three statistical indicators: the best value (Best), the mean value (Mean), and the standard deviation (Std). The numerical results of the ECOA and the compared algorithms are shown in Table 3. Except for PSO and GJO, all algorithms, including the ECOA, reach the optimal value of 1 on F1. On F2–F9, the ECOA obtains the smallest best and mean values, and the smallest standard deviation on most test functions. The best and mean values show that the proposed ECOA has higher optimization accuracy than the other algorithms, and the standard deviations show that it is more robust on most test functions.

Table 3. Numerical experimental results of each algorithm on the CEC2019 test set.
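The three indicators can be computed from the final objective values of the independent runs as in the following Python sketch. The function name `summarize_runs` is illustrative, and the use of the sample standard deviation (divisor n - 1, which is also the default of Matlab's `std`) is an assumption, since the text does not state which estimator was used.

```python
import statistics


def summarize_runs(final_values):
    """Best, Mean, and Std of the final objective values (minimization)."""
    return {
        "Best": min(final_values),
        "Mean": statistics.mean(final_values),
        "Std": statistics.stdev(final_values),  # sample standard deviation
    }


summary = summarize_runs([1.0, 2.0, 3.0])
```

A small Std together with a Mean close to Best indicates that the algorithm reaches similar quality on every run, which is the robustness reading used in the discussion of Table 3.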

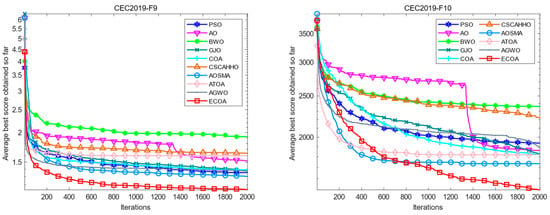

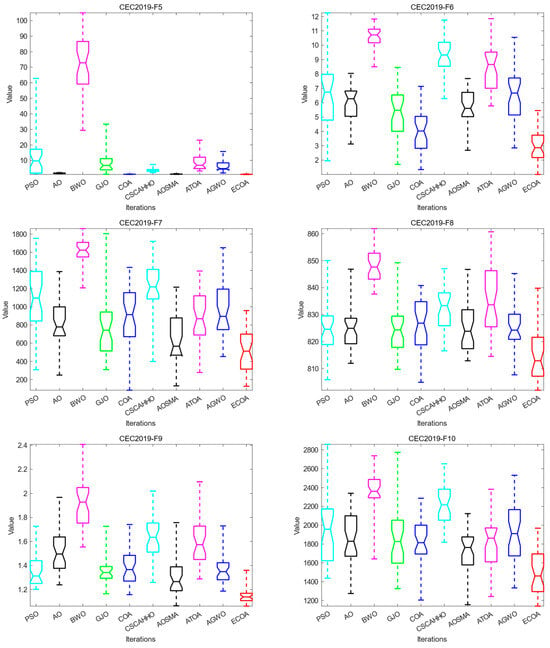

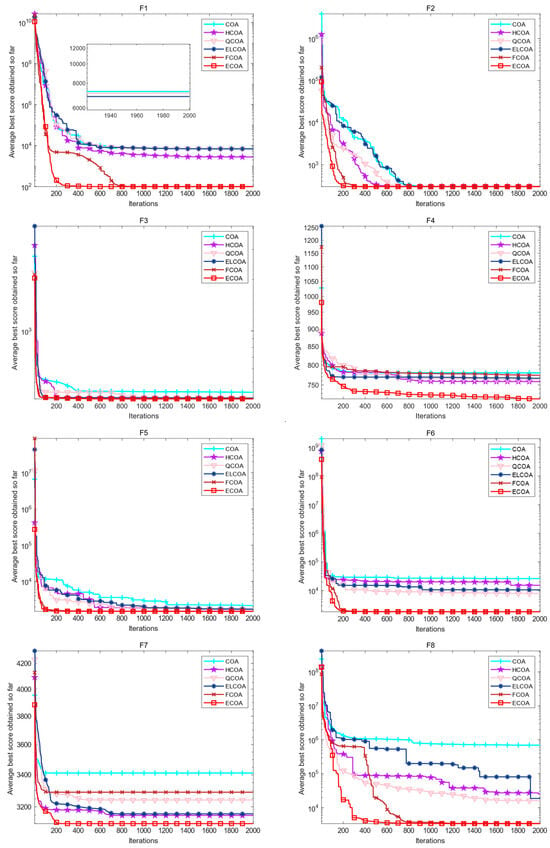

4.3. Iterative Curve Analysis

To further verify the optimization performance of the proposed ECOA, the convergence histories of the 30 independent runs of each algorithm were averaged and plotted as iteration curves for intuitive comparison. The results are shown in Figure 2. On F1, all algorithms except PSO and GJO converge quickly to the optimal value, and the ECOA converges fastest. On F2, all algorithms except PSO, GJO, ATOA, and AGWO converge quickly to similar accuracy. On F3, F4, F7, F8, F9, and F10, the ECOA is slightly slower than some algorithms in the early iterations, but as the iterations proceed the other algorithms fall into local optima and slow down, while the ECOA continues to search and converge, showing a stronger ability to jump out of local optima. On both F5 and F6, the ECOA converges to a better value faster than the other algorithms. Overall, compared with both the standard and the improved algorithms, the proposed ECOA converges faster and escapes local optima more readily.

Figure 2. Comparison of iteration curves of each algorithm on the CEC2019 test set.

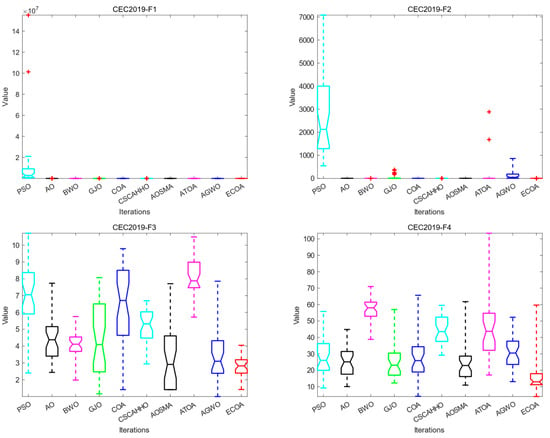

4.4. Box Plot Analysis

Box plots were drawn from the optimization results of 30 runs of the ECOA and the other algorithms on each test function. A box plot [38] reflects the distribution and spread of the 30 results of each algorithm and therefore the stability of each algorithm on the IEEE CEC2019 test functions. A shorter box means that the values obtained over multiple runs are closer together, indicating better stability and reliability. A lower median line indicates higher optimization accuracy. The box plot results are shown in Figure 3. Except on F1, F2, and F5, the box of the ECOA is shorter and its median line is lower than those of the compared algorithms. The ECOA therefore achieves better optimization results over multiple runs and performs more stably, making it a more reliable optimization algorithm.

Figure 3. Comparison of box plots of various algorithms on the CEC2019 test set.

4.5. The Wilcoxon Rank Sum Test

The Wilcoxon rank sum test [39] is a nonparametric statistical method that can test whether the difference in optimization performance between algorithms is statistically significant. This paper uses it to compare the results of 30 runs of the ECOA on IEEE CEC2019 with those of the other algorithms. The significance level was set at 0.05. If the p-value of the rank sum test between the ECOA and a compared algorithm is less than 0.05, the difference in optimization performance between the two is significant. Table 4 shows the results of the Wilcoxon rank sum test between the ECOA and each algorithm. The p-value is less than 0.05 on most of the test functions, indicating a significant difference. “N/A” indicates no significant difference between the two algorithms. The last row of the table counts the functions on which the ECOA performs “worse”, “same”, and “better” than the compared algorithm, represented by “–”, “=”, and “+”, respectively. The vast majority of p-values are less than 0.05, with non-significant differences only on individual functions (highlighted in bold). The rank sum test thus verifies that the ECOA performs significantly better than the other algorithms.

Table 4. Test p-values of each algorithm on the IEEE CEC2019 test set.
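For illustration, a two-sided rank sum test can be sketched in pure Python using the normal approximation, which is reasonable for samples of 30 runs. This sketch is an assumption about how such a test may be computed, not the paper's implementation (the experiments were run in Matlab); it averages tied ranks and applies no tie or continuity correction.

```python
import math


def rank_sum_test(a, b):
    """Two-sided Wilcoxon rank sum test (normal approximation).

    Returns (z, p), where z < 0 means the values in `a` tend to be smaller.
    """
    combined = sorted([(v, 0) for v in a] + [(v, 1) for v in b])
    ranks = [0.0] * len(combined)
    i = 0
    while i < len(combined):
        j = i
        while j + 1 < len(combined) and combined[j + 1][0] == combined[i][0]:
            j += 1
        average_rank = (i + j) / 2.0 + 1.0  # ranks are 1-based
        for k in range(i, j + 1):
            ranks[k] = average_rank
        i = j + 1
    w = sum(r for r, (_, group) in zip(ranks, combined) if group == 0)
    n1, n2 = len(a), len(b)
    mean_w = n1 * (n1 + n2 + 1) / 2.0
    sd_w = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12.0)
    z = (w - mean_w) / sd_w
    p = math.erfc(abs(z) / math.sqrt(2.0))
    return z, p


z, p = rank_sum_test(list(range(30)), list(range(100, 130)))
```

In a minimization setting, `p < 0.05` together with `z < 0` for the first sample would be counted as "+" for the algorithm that produced it, and `p >= 0.05` as "=".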

4.6. Analysis of Ablation Experiments

In this section, ablation experiments are conducted to further verify whether each of the four improvement strategies adopted for the COA has a positive effect. The experiments compare HCOA (COA with only Halton sequence initialization), QCOA (only the quasi opposition-based learning strategy), ELCOA (only the elite factor), FCOA (only the FADs effect), the original COA, and the proposed ECOA, which mixes all four strategies. Eight functions of different types were selected from the widely used IEEE CEC2017 test function set for the ablation experiments, to further verify the rationality of the improvement strategies and the superiority of the proposed ECOA. Information on the eight test functions is shown in Table 5. For fairness, the population size of all algorithms is set to 30 and the maximum number of iterations is set to 2000.

Table 5. Information on the eight test functions of IEEE CEC2017.

The iteration curves of all algorithms in the ablation experiments are shown in Figure 4. On the eight IEEE CEC2017 test functions of different types, each single-strategy variant of the COA converges faster and reaches higher optimization accuracy than the original algorithm, and the proposed ECOA performs significantly better than all the other variants. These experiments further verify the feasibility of the adopted strategies and show that mixing the four strategies comprehensively improves the optimization performance of the original algorithm.

Figure 4. Iteration curves of various algorithms in ablation experiments.

5. Practical Engineering Optimization Experiment

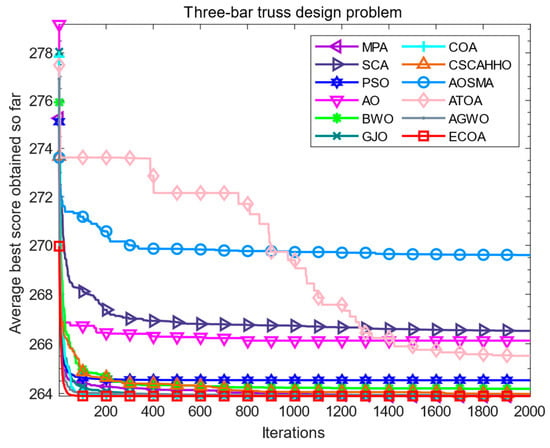

In this section, to verify the performance of the proposed ECOA on practical engineering problems, the ECOA is used to solve two engineering design optimization problems [40]: the three-bar truss design problem and the pressure vessel design problem. Twelve standard and improved algorithms were tested: the ECOA and 11 comparison algorithms, namely the marine predator algorithm (MPA), the sine cosine algorithm [41] (SCA), PSO, AO, BWO, GJO, COA, CSCAHHO, AOSMA, ATOA, and AGWO. All algorithms were run independently 30 times for statistical analysis, with a population size of 30 and a maximum of 2000 iterations.

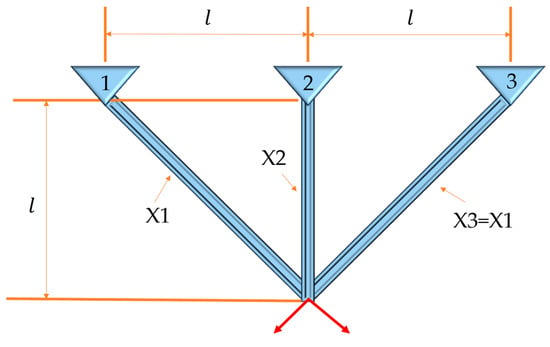

5.1. Three-Bar Truss Design Problem

The three-bar truss design problem is to minimize the volume of the truss by adjusting the cross-sectional areas (X1 and X2) subject to stress constraints on each bar. The structure of the three-bar truss is shown in Figure 5, which marks the spacing and the cross-sectional areas X1, X2, and X3. The objective function is nonlinear, with two decision parameters and three inequality constraints. The fitness iteration curves of all algorithms are shown in Figure 6, which shows that the ECOA converges faster and with higher accuracy than the other algorithms. The numerical results of each algorithm are summarized in Table 6. The ECOA achieves higher accuracy and more stable performance.

Figure 5. Three-bar truss structure diagram.

Figure 6. Iterative curves of various algorithms for three-bar truss design problems.

Table 6. Experimental results of various algorithms on the three-bar truss design problems.
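The objective and constraints can be sketched in Python using the formulation commonly found in the literature for this benchmark. The constants (bar spacing 100 cm, load and allowable stress both 2 kN/cm²), the assumption that the two outer bars share the area `x1`, and the quadratic penalty used to handle constraints are assumptions, since the text does not list them.

```python
import math

SPACING = 100.0  # assumed bar spacing l (cm)
LOAD = 2.0       # assumed load P (kN/cm^2)
STRESS = 2.0     # assumed allowable stress sigma (kN/cm^2)


def truss_volume(x1, x2):
    """Objective: structural volume for cross-sectional areas x1 and x2."""
    return (2.0 * math.sqrt(2.0) * x1 + x2) * SPACING


def truss_constraints(x1, x2):
    """Stress constraints; each value must be <= 0 for a feasible design."""
    root2 = math.sqrt(2.0)
    denom = root2 * x1 ** 2 + 2.0 * x1 * x2
    g1 = (root2 * x1 + x2) / denom * LOAD - STRESS
    g2 = x2 / denom * LOAD - STRESS
    g3 = 1.0 / (root2 * x2 + x1) * LOAD - STRESS
    return g1, g2, g3


def penalized_volume(x1, x2, weight=1e6):
    """Fitness for an unconstrained optimizer: volume plus quadratic penalty."""
    violation = sum(max(0.0, g) ** 2 for g in truss_constraints(x1, x2))
    return truss_volume(x1, x2) + weight * violation
```

Under these assumptions the design `x1 ≈ 0.78867`, `x2 ≈ 0.40825` lies on the first stress constraint and gives a volume of about 263.9, which is the value that well-performing algorithms typically report for this benchmark.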

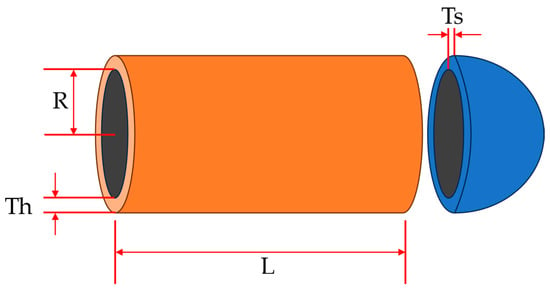

5.2. Pressure Vessel Design

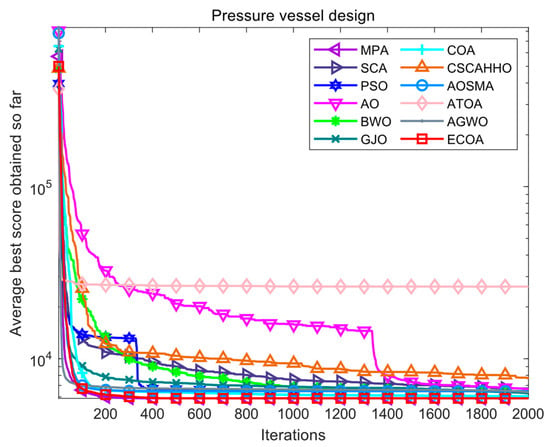

The pressure vessel design problem is to find, through optimization, the pressure vessel design with minimal cost while satisfying the constraints. Four decision variables are optimized: the hemispherical head thickness (Th), the container wall thickness (Ts), the cylindrical section length (L), and the inner diameter (R). The structural diagram of the pressure vessel is shown in Figure 7. The fitness iteration curves of all algorithms for the pressure vessel design problem are shown in Figure 8, and the optimization results are given in Table 7. Figure 8 and Table 7 show that the results obtained by the ECOA and MPA are superior to those of the other algorithms, and both reach the optimal result. Moreover, the convergence curve shows that the ECOA quickly converges to a better value, indicating its feasibility and superiority on this problem.
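The cost function and constraints of this problem can be sketched as below, using the formulation that is standard in the benchmark literature. Whether the paper uses exactly these coefficients is an assumption, and the function names are illustrative. Note that the standard formulation treats R as the inner radius of the shell.

```python
import math


def vessel_cost(x):
    """Total cost (material, forming, welding) for x = (Ts, Th, R, L)."""
    ts, th, r, length = x
    return (0.6224 * ts * r * length
            + 1.7781 * th * r ** 2
            + 3.1661 * ts ** 2 * length
            + 19.84 * ts ** 2 * r)


def vessel_constraints(x):
    """Four inequality constraints; the design is feasible when every g <= 0."""
    ts, th, r, length = x
    g1 = -ts + 0.0193 * r       # minimum shell thickness
    g2 = -th + 0.00954 * r      # minimum head thickness
    g3 = (-math.pi * r ** 2 * length
          - (4.0 / 3.0) * math.pi * r ** 3
          + 1_296_000.0)        # minimum enclosed volume
    g4 = length - 240.0         # maximum cylinder length
    return [g1, g2, g3, g4]


def is_feasible(x):
    """True when no constraint is violated."""
    return all(g <= 0.0 for g in vessel_constraints(x))


if __name__ == "__main__":
    candidate = (1.0, 0.5, 45.0, 150.0)  # arbitrary feasible design, not an optimum
    print(vessel_cost(candidate), is_feasible(candidate))
```

As with the truss problem, an optimizer would typically minimize `vessel_cost` plus a penalty on the positive parts of `vessel_constraints`.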

Figure 7.

Schematic diagram of pressure vessel structure.

Figure 8.

Iterative curves of various algorithms for pressure vessel design.

Table 7.

Experimental results of various algorithms in pressure vessel design.

6. Conclusions and Future Work

The crayfish optimization algorithm (COA) is a new swarm intelligence optimization algorithm that performs well in global and engineering optimization. However, the COA also has some defects. In this paper, the standard COA is improved with a mix of four strategies: (1) The Halton sequence is used to initialize the population so that the initial population is more evenly distributed in the search space, which increases the convergence speed in the early iterations of the COA. (2) QOBL is introduced to generate the quasi-oppositional solution of the population; the better of the original solution and the quasi-oppositional solution enters the next generation, improving the quality of candidate solutions. (3) An elite guiding factor is introduced in the predation stage to avoid the blindness of this process. (4) The fish aggregation device (FADs) effect from the marine predator algorithm is introduced into the COA to enhance its ability to jump out of local optima. Based on the above, an enhanced crayfish optimization algorithm (ECOA) with better performance is proposed. To verify the effectiveness of the ECOA, this paper compares it with other optimization algorithms and improved algorithms on the CEC2019 test function set and two real-world engineering optimization problems. The experimental results show that the ECOA better balances exploration and exploitation and has a more robust global optimization ability. In future work, we will apply the ECOA to other practical problems, such as UAV track optimization, flexible shop scheduling, and microgrid optimization scheduling, to verify its ability to solve various complex practical problems.
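As a compact recap of strategies (1) and (2), the sketch below shows one common way to build a Halton-sequence population and to apply quasi-opposition-based selection. It follows the textbook definitions (radical inverse in prime bases; a quasi-opposite point drawn uniformly between the interval centre and the opposite point) and is not the authors' implementation. All function names and the fixed prime list are illustrative assumptions.

```python
import random

PRIMES = [2, 3, 5, 7, 11, 13, 17, 19, 23, 29]  # one base per dimension (assumed cap of 10)


def radical_inverse(index, base):
    """Van der Corput radical inverse of a positive integer in the given base."""
    result, fraction = 0.0, 1.0 / base
    while index > 0:
        result += (index % base) * fraction
        index //= base
        fraction /= base
    return result


def halton_population(pop_size, lower, upper):
    """Map the first `pop_size` Halton points into the box [lower, upper]."""
    dim = len(lower)
    if dim > len(PRIMES):
        raise ValueError("extend PRIMES for higher-dimensional problems")
    return [
        [lower[d] + radical_inverse(i, PRIMES[d]) * (upper[d] - lower[d])
         for d in range(dim)]
        for i in range(1, pop_size + 1)
    ]


def quasi_opposite(x, lower, upper, rng):
    """Sample between the interval centre and the opposite point, per dimension."""
    point = []
    for xi, lo, hi in zip(x, lower, upper):
        centre = (lo + hi) / 2.0
        opposite = lo + hi - xi
        point.append(rng.uniform(min(centre, opposite), max(centre, opposite)))
    return point


def qobl_select(population, objective, lower, upper, rng):
    """Keep, for each individual, the better of itself and its quasi-opposite."""
    selected = []
    for x in population:
        xq = quasi_opposite(x, lower, upper, rng)
        selected.append(xq if objective(xq) < objective(x) else x)
    return selected


if __name__ == "__main__":
    lower, upper = [-5.0, -5.0], [5.0, 5.0]
    pop = halton_population(6, lower, upper)
    better = qobl_select(pop, lambda v: sum(c * c for c in v), lower, upper,
                         random.Random(0))
    print(pop[0], better[0])
```

Because the selection is greedy, the fitness of each individual after `qobl_select` is never worse than before, at the cost of one extra objective evaluation per individual.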

Author Contributions

Conceptualization, Y.Z. and P.L.; methodology, Y.Z.; software, P.L.; validation, P.L. and Y.L.; formal analysis, Y.L.; writing—original draft preparation, Y.Z.; writing—review and editing, P.L.; visualization, Y.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by the fund of the Science and Technology Development Project of Jilin Province No. 20220203190SF.

Data Availability Statement

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Hu, G.; Zhong, J.; Du, B.; Wei, G. An enhanced hybrid arithmetic optimization algorithm for engineering applications. Comput. Methods Appl. Mech. Eng. 2022, 394, 114901.

- Jiang, Y.; Yin, S.; Dong, J.; Kaynak, O. A Review on Soft Sensors for Monitoring, Control, and Optimization of Industrial Processes. IEEE Sens. J. 2021, 21, 12868–12881.

- Cosic, A.; Stadler, M.; Mansoor, M.; Zellinger, M. Mixed-integer linear programming based optimization strategies for renewable energy communities. Energy 2021, 237, 121559.

- Shen, Y.; Branscomb, D. Orientation optimization in anisotropic materials using gradient descent method. Compos. Struct. 2020, 234, 111680.

- Xu, Z.; Geng, H.; Chu, B. A Hierarchical Data–Driven Wind Farm Power Optimization Approach Using Stochastic Projected Simplex Method. IEEE Trans. Smart Grid 2021, 12, 3560–3569.

- Mavrovouniotis, M.; Li, C.; Yang, S. A survey of swarm intelligence for dynamic optimization: Algorithms and applications. Swarm Evol. Comput. 2017, 33, 1–17.

- Tang, J.; Liu, G.; Pan, Q. A Review on Representative Swarm Intelligence Algorithms for Solving Optimization Problems: Applications and Trends. IEEE/CAA J. Autom. Sin. 2021, 8, 1627–1643.

- Li, W.; Wang, G.-G.; Gandomi, A.H. A Survey of Learning-Based Intelligent Optimization Algorithms. Arch. Comput. Methods Eng. 2021, 28, 3781–3799.

- El-Kenawy, E.-S.M.; Khodadadi, N.; Mirjalili, S.; Abdelhamid, A.A.; Eid, M.M.; Ibrahim, A. Greylag Goose Optimization: Nature-inspired optimization algorithm. Expert Syst. Appl. 2024, 238, 122147.

- Dorigo, M.; Birattari, M.; Stutzle, T. Ant colony optimization. IEEE Comput. Intell. Mag. 2006, 1, 28–39.

- Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the ICNN’95—International Conference on Neural Networks, Perth, WA, Australia, 27 November–1 December 1995; Volume 4, pp. 1942–1948.

- Mirjalili, S.; Lewis, A. The Whale Optimization Algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

- Elseify, M.A.; Hashim, F.A.; Hussien, A.G.; Kamel, S. Single and multi-objectives based on an improved golden jackal optimization algorithm for simultaneous integration of multiple capacitors and multi-type DGs in distribution systems. Appl. Energy 2024, 353, 122054.

- Moazen, H.; Molaei, S.; Farzinvash, L.; Sabaei, M. PSO-ELPM: PSO with elite learning, enhanced parameter updating, and exponential mutation operator. Inf. Sci. 2023, 628, 70–91.

- Shen, Y.; Zhang, C.; Gharehchopogh, F.S.; Mirjalili, S. An improved whale optimization algorithm based on multi-population evolution for global optimization and engineering design problems. Expert Syst. Appl. 2023, 215, 119269.

- Zhu, F.; Li, G.; Tang, H.; Li, Y.; Lv, X.; Wang, X. Dung beetle optimization algorithm based on quantum computing and multi-strategy fusion for solving engineering problems. Expert Syst. Appl. 2024, 236, 121219.

- Zhang, X.; Sang, H.; Li, Z.; Zhang, B.; Meng, L. An efficient discrete artificial bee colony algorithm with dynamic calculation method for solving the AGV scheduling problem of delivery and pickup. Complex Intell. Syst. 2024, 10, 37–57.

- Yang, Y.; Qiu, J.; Qin, Z. Multidimensional Firefly Algorithm for Solving Day-Ahead Scheduling Optimization in Microgrid. J. Electr. Eng. Technol. 2021, 16, 1755–1768.

- Song, Q.; Zhao, Q.; Wang, S.; Liu, Q.; Chen, X. Dynamic Path Planning for Unmanned Vehicles Based on Fuzzy Logic and Improved Ant Colony Optimization. IEEE Access 2020, 8, 62107–62115.

- Kalita, K.; Ramesh, J.V.N.; Cepova, L.; Pandya, S.B.; Jangir, P.; Abualigah, L. Multi-objective exponential distribution optimizer (MOEDO): A novel math-inspired multi-objective algorithm for global optimization and real-world engineering design problems. Sci. Rep. 2024, 14, 1816.

- Al Aghbari, Z.; Raj, P.V.P.; Mostafa, R.R.; Khedr, A.M. iCapS-MS: An improved Capuchin Search Algorithm-based mobile-sink sojourn location optimization and data collection scheme for Wireless Sensor Networks. Neural Comput. Appl. 2024, 36, 8501–8517.

- Jia, H.; Rao, H.; Wen, C.; Mirjalili, S. Crayfish optimization algorithm. Artif. Intell. Rev. 2023, 56 (Suppl. S2), 1919–1979.

- Li, Q.; Liu, S.-Y.; Yang, X.-S. Influence of initialization on the performance of metaheuristic optimizers. Appl. Soft Comput. 2020, 91, 106193.

- Wei, F.; Zhang, Y.; Li, J. Multi-strategy-based adaptive sine cosine algorithm for engineering optimization problems. Expert Syst. Appl. 2024, 248, 123444.

- Halton, J.H. Algorithm 247: Radical-inverse quasi-random point sequence. Commun. ACM 1964, 7, 701–702.

- Tizhoosh, H.R. Opposition-Based Learning: A New Scheme for Machine Intelligence. In Proceedings of the International Conference on Computational Intelligence for Modelling, Control and Automation and International Conference on Intelligent Agents, Web Technologies and Internet Commerce (CIMCA-IAWTIC’06), Vienna, Austria, 28–30 November 2005; pp. 695–701.

- Truong, K.H.; Nallagownden, P.; Baharudin, Z.; Vo, D.N. A Quasi-Oppositional-Chaotic Symbiotic Organisms Search algorithm for global optimization problems. Appl. Soft Comput. 2019, 77, 567–583.

- Yang, X.; Hao, X.; Yang, T.; Li, Y.; Zhang, Y.; Wang, J. Elite-guided multi-objective cuckoo search algorithm based on crossover operation and information enhancement. Soft Comput. 2023, 27, 4761–4778.

- Faramarzi, A.; Heidarinejad, M.; Mirjalili, S.; Gandomi, A.H. Marine Predators Algorithm: A nature-inspired metaheuristic. Expert Syst. Appl. 2020, 152, 113377.

- Zhang, M.; Tan, K.C. Conference Report on 2019 IEEE Congress on Evolutionary Computation (IEEE CEC 2019). IEEE Comput. Intell. Mag. 2020, 15, 4–5.

- Abualigah, L.; Yousri, D.; Elaziz, M.A.; Ewees, A.A.; Al-Qaness, M.A.A.; Gandomi, A.H. Aquila Optimizer: A novel meta-heuristic optimization algorithm. Comput. Ind. Eng. 2021, 157, 107250.

- Zhong, C.; Li, G.; Meng, Z. Beluga whale optimization: A novel nature-inspired metaheuristic algorithm. Knowl.-Based Syst. 2022, 251, 109215.

- Chopra, N.; Ansari, M.M. Golden jackal optimization: A novel nature-inspired optimizer for engineering applications. Expert Syst. Appl. 2022, 198, 116924.

- Zhang, Y.-J.; Yan, Y.-X.; Zhao, J.; Gao, Z.-M. CSCAHHO: Chaotic hybridization algorithm of the Sine Cosine with Harris Hawk optimization algorithms for solving global optimization problems. PLoS ONE 2022, 17, e0263387.

- Naik, M.K.; Panda, R.; Abraham, A. Adaptive opposition slime mould algorithm. Soft Comput. 2021, 25, 14297–14313.

- Devan, P.A.M.; Hussin, F.A.; Ibrahim, R.B.; Bingi, K.; Nagarajapandian, M.; Assaad, M. An Arithmetic-Trigonometric Optimization Algorithm with Application for Control of Real-Time Pressure Process Plant. Sensors 2022, 22, 617.

- Meidani, K.; Hemmasian, A.; Mirjalili, S.; Farimani, A.B. Adaptive grey wolf optimizer. Neural Comput. Appl. 2022, 34, 7711–7731.

- Streit, M.; Gehlenborg, N. Bar charts and box plots. Nat. Methods 2014, 11, 117.

- Bo, Q.; Cheng, W.; Khishe, M. Evolving chimp optimization algorithm by weighted opposition-based technique and greedy search for multimodal engineering problems. Appl. Soft Comput. 2023, 132, 109869.

- Zhang, S.-W.; Wang, J.-S.; Li, Y.-X.; Zhang, S.-H.; Wang, Y.-C.; Wang, X.-T. Improved honey badger algorithm based on elementary function density factors and mathematical spirals in polar coordinate systema. Artif. Intell. Rev. 2024, 57, 55.

- Mirjalili, S. SCA: A Sine Cosine Algorithm for solving optimization problems. Knowl.-Based Syst. 2016, 96, 120–133.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).