Abstract

In this study, we focused on using microarray gene data from pancreatic sources to detect diabetes mellitus. Dimensionality reduction (DR) techniques were used to reduce the dimensionally high microarray gene data. DR methods like the Bessel function, Discrete Cosine Transform (DCT), Least Squares Linear Regression (LSLR), and Artificial Algae Algorithm (AAA) are used. Subsequently, we applied meta-heuristic algorithms like the Dragonfly Optimization Algorithm (DOA) and Elephant Herding Optimization Algorithm (EHO) for feature selection. Classifiers such as Nonlinear Regression (NLR), Linear Regression (LR), Gaussian Mixture Model (GMM), Expectation Maximum (EM), Bayesian Linear Discriminant Classifier (BLDC), Logistic Regression (LoR), Softmax Discriminant Classifier (SDC), and Support Vector Machine (SVM) with three types of kernels, Linear, Polynomial, and Radial Basis Function (RBF), were utilized to detect diabetes. The classifier’s performance was analyzed based on parameters like accuracy, F1 score, MCC, error rate, FM metric, and Kappa. Without feature selection, the SVM (RBF) classifier achieved a high accuracy of 90% using the AAA DR methods. The SVM (RBF) classifier using the AAA DR method for EHO feature selection outperformed the other classifiers with an accuracy of 95.714%. This improvement in the accuracy of the classifier’s performance emphasizes the role of feature selection methods.

1. Introduction

According to the latest data from the International Diabetes Federation (IDF) Diabetes Atlas in 2021, diabetes affects around 10.5% of the global adult population aged between 20 and 79. Alarmingly, nearly half of these individuals remain unaware of their diabetes status. The projections indicate that by 2045, the number of adults living with diabetes worldwide will increase by 46% to reach approximately 783 million, which corresponds to around one in eight adults [1]. Type II DM accounts for over 90% of all diabetes cases and is influenced by several factors, including socio-economic, demographic, environmental, and genetic factors. The increase in type II DM is connected to urbanization, a growing elderly population because of higher life expectancy, reduced levels of physical activity, and a high overweight and obesity rate. To address the impact of diabetes, preventive measures, early diagnosis, and proper care for all types of diabetes are crucial. These interventions can help individuals with diabetes prevent or delay the complications associated with the condition.

According to estimates from 2019, approximately 77 million adults in India were affected by diabetes [2]. Unfortunately, the prevalence of type II DM in the country is rapidly escalating. By 2045, the number of adults living with diabetes in India could reach a staggering 134 million, with younger people under 40 being particularly affected. Several risk factors like genetic predisposition, sedentary lifestyles, unhealthy dietary habits, obesity, urbanization, and mounting stress levels increase the risk of type II diabetes. India’s southern, urban, and northern regions exhibit higher rates compared to the eastern and western regions [3]. Many cases are undiagnosed until complications arise. Diabetes continues to be the seventh leading cause of death in India, taking a toll on both human lives and the economy. It is estimated that diabetes costs the Indian economy approximately USD 100 billion annually [4].

Genesis of Diabetes Diagnosis Using Microarray Gene Technology

Creating precise and effective techniques for identifying type II diabetes mellitus holds the potential to facilitate early identification and intervention. By analyzing microarray gene data, it becomes possible to identify specific genetic markers or patterns associated with diabetes [5]. This provides opportunities for personalized medicine, where treatment plans can be tailored based on an individual’s genetic profile, leading to more targeted and effective interventions. Robust and reliable methods for detecting diabetes from microarray gene data can be developed and integrated into existing healthcare systems [6]. Novel dimensionality reduction techniques, classification algorithms, and feature selection methods can be explored, and other omics data can be integrated to further enhance the accuracy and reliability of diabetes detection methods. Such research could advance the state of the art in machine learning [7]. The proposed method could be used to detect other diseases that are characterized by changes in gene expression.

The structure of the article is as follows: in Section 1, an introduction to the research is discussed. Section 2 presents the literature review. Section 3 presents the methodology. In Section 4, the materials and methods are reviewed. Section 5 explains the dimensionality reduction techniques with and without a feature extraction process. In Section 6, the feature selection methods are discussed, and Section 7 focuses on the classifiers used. The results and discussion are presented in Section 8, and the conclusion is given in Section 9.

2. Literature Review

Type II DM is a chronic disease that affects people worldwide irrespective of age. The early detection and diagnosis of DM in patients is essential for effective treatment and management. However, traditional methods for detecting DM, such as blood glucose testing, are often inaccurate and time consuming [8]. In recent years, there has been growing interest in the use of microarray gene data to detect DM. Microarray gene data can provide a comprehensive overview of gene expression patterns in the pancreas, which can be used to identify patients who are at risk of DM [9]. Jakka et al. [10] conducted an experimental analysis using various machine learning classifiers, including KNN, DT, NB, SVM, LR, and RF. The classifiers were trained and evaluated on the Pima Indians Diabetes dataset, which consists of nine attributes and is available from the UCI Repository. Among the classifiers tested, Logistic Regression (LR) exhibited the best performance, achieving an accuracy of 77.6%. It outperformed the other algorithms in terms of accuracy, F1 score, ROC-AUC score, and misclassification rate. Radja et al. [11] carried out a study to evaluate the performance of various supervised classification algorithms for medical data analysis, specifically in disease diagnosis. The algorithms tested included NB, SVM, decision table, and J48. The evaluation utilized measurement variables such as Correctly Classified, Incorrectly Classified, Precision, and Recall. The predictive database of diabetes was used as the testing dataset. The SVM algorithm demonstrated the highest accuracy among the tested algorithms at 77.3%, making it an effective tool for disease diagnosis. Dinh et al. [12] analyzed the capabilities of machine learning models in identifying and predicting diabetes and cardiovascular diseases using survey data, including laboratory results. The NHANES dataset was utilized, and various supervised machine learning models such as LR, SVM, RF, and GB were evaluated. An ensemble model combining the strengths of different models was developed, and key variables contributing to disease detection were identified using information obtained from tree-based models. The ensemble model achieved an AUC-ROC score of 83.1% for cardiovascular disease detection and 86.2% for diabetes classification. When incorporating laboratory data, the accuracy increased to 83.9% for cardiovascular disease and 95.7% for diabetes. For pre-diabetic patients, the ensemble model achieved an AUC-ROC score of 73.7% without laboratory data, and XGBoost performed the best, with a score of 84.4% when using laboratory data. The key predictors for diabetes included waist size, age, self-reported weight, leg length, and sodium intake.

Yang et al. [13] conducted a study that aimed to develop prediction models for diabetes screening using an ensemble learning approach. The dataset was obtained from NHANES from 2011 to 2016. Three simple machine learning methods (LDA, SVM, and RF) were used, and the performance of the models was evaluated through fivefold cross-validation and external validation using the Delong test. The study included 8057 observations and 12 attributes. In the validation set, the ensemble model utilizing linear discriminant analysis showcased superior performance, achieving an AUC of 0.849, an accuracy of 0.730, a sensitivity of 0.819, and a specificity of 0.709. Muhammed et al. [14] conducted a study utilizing a diagnostic dataset of type 2 diabetes mellitus (DM) collected from Murtala Mohammed Specialist Hospital in Kano, Nigeria. Predictive supervised machine learning models were developed using LR, SVM, KNN, RF, NB, and GB algorithms. Among the developed models, the RF predictive learning-based model achieved the highest accuracy at 88.76%. Kim et al.’s [15] study aimed to assess the impact of nutritional intake on obesity, dyslipidemia, high blood pressure, and T2DM using deep learning techniques. The researchers developed a deep neural network (DNN) model and compared its performance with logistic regression and decision tree models. Data from the KNHANES were analyzed. The DNN model, consisting of three hidden layers with varying numbers of nodes, demonstrated superior prediction accuracy (ranging from 0.58654 to 0.80896) compared to the LoR and decision tree models. In conclusion, the study highlighted the advantage of using a DNN model over conventional machine learning models in predicting the impact of nutritional intake on obesity, dyslipidemia, high blood pressure, and T2DM.

Ramdaniah et al. [16] conducted a study utilizing microarray gene data from the GSE18732 dataset to distinguish between different classes of diabetes. The study consisted of 46 samples from diabetic classes and 72 samples from non-diabetic classes. Machine learning techniques, specifically Naïve Bayes and SVM with Sigmoid kernel, were employed for classification, achieving accuracy rates of 88.89% and 83.33%, respectively. The PIMA Indian diabetic dataset has been widely used by researchers to classify and analyze diabetic and non-diabetic patients. However, the use of microarray gene-based datasets for diabetic class identification has received less attention. As a result, a variety of performance metrics, such as accuracy, sensitivity, specificity, and MCC, have been investigated in the context of this microarray gene-based dataset.

The main characteristics and contributions of this paper are as follows:

- The work suggests a novel approach for the early detection and diagnosis of diabetes using microarray gene expression data from pancreatic sources.

- Four DR techniques are used to reduce the high dimensionality of the microarray gene data.

- Two metaheuristic algorithms are used for feature selection to further reduce the dimensionality of the microarray gene data.

- Ten classifiers in two categories, namely nonlinear models and learning-based classifiers, are used to detect diabetes mellitus. The performance of the classifiers is analyzed based on parameters like accuracy, F1 score, MCC, error rate, FM metric, and Kappa, both with and without feature selection techniques. The enhancement of classifier performance due to feature selection is exemplified through MCC and Kappa plots.

3. Methodology

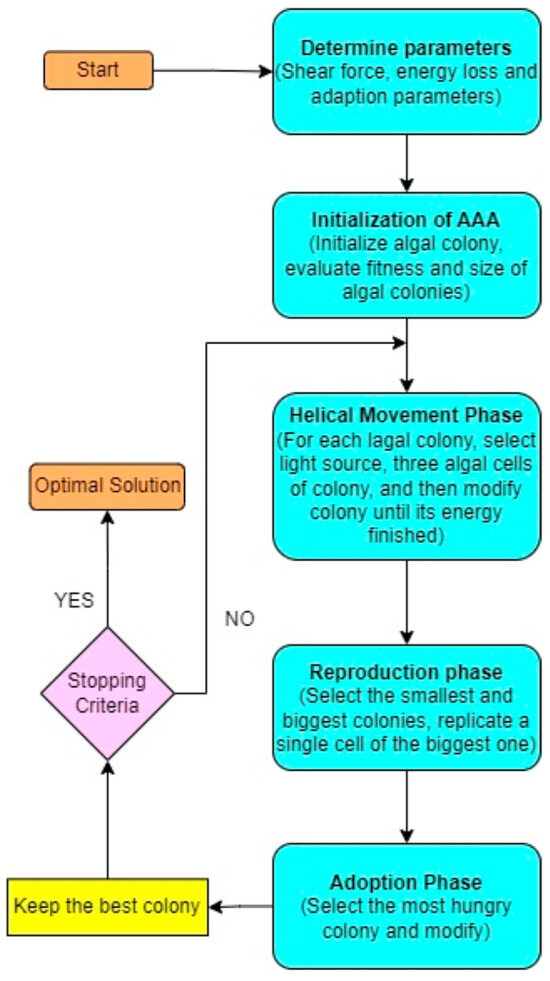

Figure 1 shows the methodology of the research. The approach includes four DR techniques: the Bessel function (BF), Discrete Cosine Transform (DCT), Least Squares Linear Regression (LSLR), and Artificial Algae Algorithm (AAA). Following this, feature selection techniques, either with or without classification of the data, are used. In terms of those with feature selection, two optimization algorithms are used: Elephant Herding Optimization (EHO) and Dragonfly Optimization (DFO). Moreover, ten classifiers are used, namely NLR, LR, GMM, EM, BLDC, LoR, SDC, SVM-L, SVM-Poly, and SVM-RBF, to classify the genes as non-diabetic and diabetic.

Figure 1.

Workflow diagram.

Role of Microarray Gene Data

Microarray gene data play a critical role in this research. The data can be used to identify patterns of gene expression that are associated with diabetes. The data are used to train and evaluate machine learning models and to identify the most relevant features for classification. The machine learning models are then used to predict whether a patient has diabetes or not. The models are trained on a dataset of microarray gene data [17] labeled with the patient’s diabetes status.

4. Materials and Methods

Microarray gene data are readily available from many search engines. We obtained human pancreatic islet data from the Nordic Islet Transplantation program (https://www.ncbi.nlm.nih.gov/bioproject/PRJNA178122). The data has accessed on 20 August 2021. The dataset included 28,735 genes from 57 non-diabetic and 20 diabetic patients. The data were preprocessed to only select the 22,960 genes with the highest peak intensity per patient. The logarithmic transformation was applied with a base 10 to standardize the individual samples, with a mean of 0 and a variance of 1. The data were then used to train and evaluate a machine learning model for the detection of diabetes. The model was able to achieve an accuracy of 90%, which is a significant improvement over the baseline accuracy of 50%. The results of this study suggest that microarray gene data can be used to develop effective methods for the detection of diabetes. The data are readily available and can be easily processed to identify the most relevant features for classification.

Dataset

This study focused on utilizing microarray gene data to detect diabetes and explore the features associated with the condition based on p-values using probability functions. Additionally, we aimed to address the issue of false positive errors in the selection of significant genes. The data we used for our analysis are available through multiple portals and comprised a total of 28,735 human genes as shown in the Table 1. We specifically considered 50 non-diabetic and 20 diabetic classes, selecting those with the greatest minimal intensity across 70 samples. To handle the high dimensionality of the dataset, we employed four dimensionality reduction techniques, namely BF, DCT, LSLR, and AAA. This allowed us to reduce the dimensions of the data while maintaining their informative content. The resulting dimensions were [2870 × 20] for the diabetic group and [2870 × 50] for the non-diabetic group. To further refine the dataset and improve classification accuracy, we applied feature selection techniques.

Table 1.

Pancreatic microarray gene dataset for non-diabetic and diabetic classes.

Specifically, we employed two techniques: EHO search and DOA. These techniques helped identify the most relevant features in the dataset, leading to a further reduction in dimensions to [287 × 20] for the diabetic group and [287 × 50] for the non-diabetic group. To evaluate the performance and accuracy of the classification, we employed ten classifiers, as already discussed.

5. Need for Dimensionality Reduction Techniques

Dimensionality reduction plays a crucial role in our research due to the high-dimensional nature of the microarray gene data. As the number increases, the complexity and computational costs of analyzing the data also increase significantly. Dimensionality reduction techniques allow us to reduce the number of features, making the subsequent analysis more efficient and manageable. Then, dimensionality reduction helps mitigate the curse of dimensionality [18]. In highly dimensional spaces, data points tend to become sparse, leading to difficulties in accurately representing the underlying structure of the data.

5.1. Dimensionality Reduction

To reduce the dimensionality of the dataset, BF, DCT, LSLR, and AAA were used.

- Bessel Function as Dimensionality Reduction

In this section, an overview of the Bessel function and its relevant relationships and properties associated with these functions are represented [19]. Furthermore, we investigate several valuable connections and characteristics related to these functions, as possesses the following mathematical definition:

The Gamma function is represented as Γ(λ):

The series () converges for all values of ranging from negative infinity to positive infinity. In fact, the Bessel function serves as a solution to a specific Sturm–Liouville equation [20]. This equation helps to analyze the Bessel function.

For .

It is evident that the Bessel functions Jn(x) are linearly independent when n is an integer. Additionally, there exist several recursive relations for Bessel functions that can be utilized in their analysis [20]. These relations provide valuable insights into the properties and behavior of Bessel functions in various mathematical contexts.

Lemma 1.

A significant recursion relation that proves useful in the analysis of the Bessel function of the first kind is:

The Bessel functions can be derived using the following procedure: Consider the vector Jn = [J0(x), J1(x), J2(x), …, Jn(x)]T, where J0, J1, J2, …, Jn denote the Bessel functions evaluated at x. To obtain the derivative operational matrix, we start with the derivative of J0(x) and denote it as J′0(x), where J′0(x) represents the derivative of J0(x) with respect to x. By constructing a matrix D, known as the derivative operational matrix, we can express Jn = DJ0, where D is a matrix that performs the differentiation operation on J0(x) to obtain Jn(x). This recursion relation allows for the efficient calculation and evaluation of Bessel functions, providing a valuable tool in various mathematical and scientific applications.

- DCT—Discrete Cosine Transform

The Discrete Cosine Transform (DCT) is a DR technique that approximates the Kernighan–Lin method. It aims to reduce the dimensions of the input data by eliminating the most significant features, thereby simplifying further analysis. By applying the DCT method [21], the input vector and its components are orthogonalized, resulting in a reduction in complexity. This method extracts features by selecting coefficients, which is a crucial step with a significant impact on computation efficiency [22,23]. The DCT can be denoted as:

- Least Squares Linear Regression (LSLR) as Dimensionality Reduction

Another effective technique for reducing dimensionality is the LSLR. Hotelling [24] initially introduced this concept, utilizing principal component analysis (PCA) as a regression analysis tool. It uses principal component analysis to reduce the dimensionality of high-dimensional data before applying a linear regression model. The transformation is learned by minimizing the sum of squared errors between the predicted lower-dimensional representation and the actual high-dimensional data.

LSLR, as discussed in Hastie et al. [25], performs dimensionality reduction by identifying the best-fit line that represents the relationship between the features of independent variables and the target as a dependent variable. The objective of LSLR is to minimize the sum of squared differences between the actual and predicted values of the target variable. Considering a set of N observations in the form (x1, y1), (x2, y2), …, (xN, yN), where xi represents the observation of the independent variables and yi corresponds to the observation of the target variable, the LSR solution can be represented as a linear equation:

In the context of LSR, the linear model is characterized by the parameters α_1, α_2, α_3, …, α_p, where p represents the number of independent variables. This minimization process is expressed through the following equation:

After applying dimensionality reduction techniques to the microarray gene data, the resulting outputs are further analyzed using various statistical parameters such as mean, kurtosis, variance, Pearson correlation coefficient (PCC), skewness, t-test, f-test, p-value, and canonical correlation analysis (CCA). These statistical measures are used to assess whether the outcomes accurately represent the intrinsic properties of the underlying microarray genes in the reduced subspace.

- Artificial Algae Algorithm (AAA) as Dimensionality Reduction

The Artificial Algae Algorithm (AAA) [26] is a nature-inspired optimization algorithm that mimics the behavior and characteristics of real algae to solve complex problems. Each solution in the problem space is represented by an artificial alga, which captures the essence of algae’s traits. Like real algae, artificial algae exhibit helical swimming patterns and can move towards a light source for photosynthesis. The AAA consists of three fundamental components: the evolutionary process, adaptation, and helical movement as depicted in the Figure 2. The algal colony acts as a cohesive unit, moving and responding to environmental conditions. By incorporating the principles of artificial algae into the algorithm, the AAA offers a novel approach to solving optimization problems.

where is an algal cell in the Dth dimension of the nth algal colony.

Figure 2.

Flow diagram for Artificial Algae Algorithm.

During the evolutionary process [27] of the AAA, the growth and reproduction of algal colonies are influenced by the availability of nutrients and light. When an algal colony is exposed to sufficient light and nutrient conditions, it undergoes growth and replicates itself through a process like real mitotic division. In this process, two new algal cells are generated at time t. Conversely, if an algal colony does not receive enough light, it can survive for a certain period, but eventually perishes. It is important to note that is assumed to be 1, as the maximum biomass conversion should be equivalent to the substrate consumption in unit time, following the conservation of mass principle. The size of the algal colony at time t + 1 is determined by the Monod equation, as expressed in the subsequent equation:

where i = 1, 2, 3, …, N,

represents in time with algal colony,

N represents number of algae.

In AAA, nutrient-rich algal colonies with optimal solutions thrive, and successful traits are transferred from larger colonies to smaller ones through cell replication during the evolutionary process.

Maximumt = max , whereas i = 1, 2, 3, …, N,

Minimumt = min , whereas i = 1, 2, 3, …, N,

Minimumt = maximumt, whereas m = 1, 2, 3, …, D.

In the AAA, algal colonies are ranked by size at time t. In each dimension, the smallest algal colony’s cell dies, while the largest colony’s cell replicates itself.

In the AAA algorithm, algal colonies that are unable to grow sufficiently in their environment attempt to adapt by becoming more similar to the largest colony. This process changes the starvation levels within the algorithm. Each artificial alga starts with a starvation value of zero, which increases over time if the algal cell does not receive enough light. The artificial alga with the highest starvation value is the focus of adaptation.

Helical movement: The cells and colonies exhibit specific swimming behavior, striving to stay near the water surface where sufficient light for their survival is available. They move in a helical manner, propelled by their flagella, which face limitations from gravity and viscous drag. In the AAA, gravity’s influence is represented by a value of 0, while viscous drag is simulated as shear force, proportional to the size of the algal cell. The cell is modeled as a spherical shape, with its size determined by its volume, and the friction surface is equivalent to the surface area of a hemisphere.

Friction surface is represented as .

The helical movement of algal cells is determined by three randomly selected dimensions. One dimension corresponds to linear movement, as described by Equation (18). The other two dimensions correspond to angular movement, as described by Equations (19) and (20). Equation (18) is used for one-dimensional problems, allowing the algal cell or colony to move in a single direction. Equation (19) is used for two-dimensional problems, where the algal movement follows a sinusoidal pattern. Equation (20) is used for three or more dimensions, where the algal movement takes on a helical trajectory. The step size of the movement is determined by the friction surface and the distance to the light source.

where represents the x, y, and z coordinates of the algal cell at time t.

The variables α and β are in the range [0, 2π], while p is within the interval [−1, 1]. Δ represents the shear force and denotes the surface area of the ith algal cell.

5.2. Statistical Analysis

The microarray gene data were reduced in dimension through four distinct dimensionality reduction (DR) techniques and comprehensive analysis using the statistical metrics of mean, variance, skewness, kurtosis, PCC, and CCA. This scrutiny aimed to ascertain whether the outcomes accurately portrayed the inherent properties of microarray genes within the reduced subspace. As shown in Table 2, the DR method based on AAA exhibited elevated mean and variance values across classes. In contrast, the remaining three DR methods—namely the Bessel function, Discrete Cosine Transform (DCT), and Least Squares Linear Regression (LSLR)—revealed modest and overlapping mean and variance values within classes. Among these methods, the LSLR DR approach showcased negative skewness, indicating the occurrence of skewed elements in the classes. Additionally, the DCT and LSLR DR methods demonstrated negative kurtosis, signifying their preservation of the underlying microarray gene traits. The PCC values revealed substantial correlations within the obtained outputs for a particular class. In the case of the Bessel function DR method, all four statistical parameters exhibited positive values at their minimum. This indicates an association with non-Gaussian and nonlinear distributions, a conclusion substantiated by the histograms, normal probability plots, and scatter plots of the DR method outputs. Canonical Correlation Analysis (CCA) provided insight into the correlation between DR outputs for diabetic and non-diabetic instances. Notably, the low CCA value in Table 2 suggests a limited correlation between the DR outputs of the two distinct classes.

Table 2.

Statistical analysis for different DR techniques.

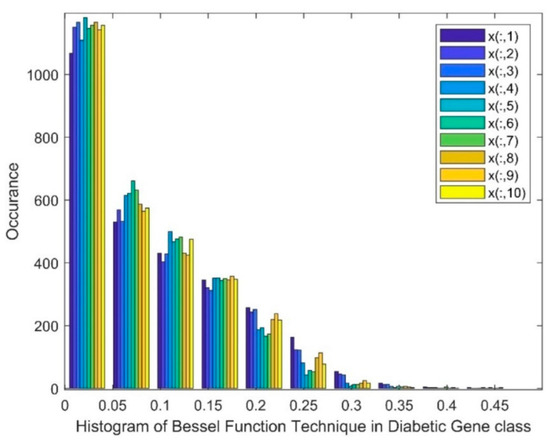

Figure 3 shows a histogram of the Bessel function DR techniques in the diabetic class. The histogram depicts that a skewed group of values, a gap, and the existence of nonlinearity were witnessed in this method. Patients from 1 to 10 are represented as x(:,1) to x(:,10).

Figure 3.

Histogram of Bessel function technique in the diabetic gene class.

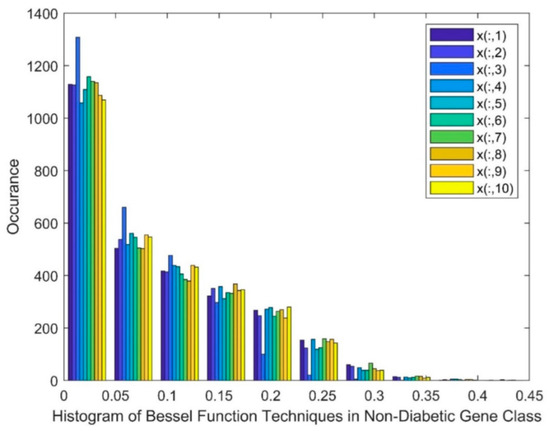

Figure 4 exhibits a histogram of the BF DR techniques in the non-diabetic class, in which the marker of x(:,1) represents patient 1 and x(:,10) represents patient 10. Figure 4 shows a skewed group of values, a gap, and the existence of nonlinearity in this method.

Figure 4.

Histogram of Bessel function technique in the non-diabetic gene class.

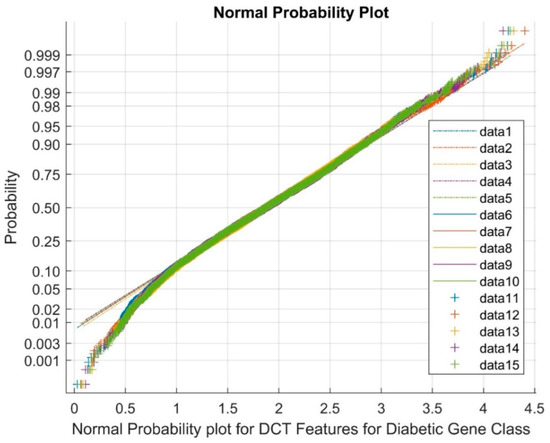

In Figure 5, data points 1 to 5 signify the reference points, 6 to 10 highlight the upper bound, and 11 to 15 depict the clustered variable points. This representation signifies the generation of a normal probability plot for features obtained using DCT DR techniques within the diabetic gene class. As can be observed from Figure 5, the plot effectively showcases the complete cluster of DCT DR outputs, accentuating the existence of variables with the nature of nonlinearity across classes.

Figure 5.

Normal probability plot showcasing DCT features for the diabetic gene class.

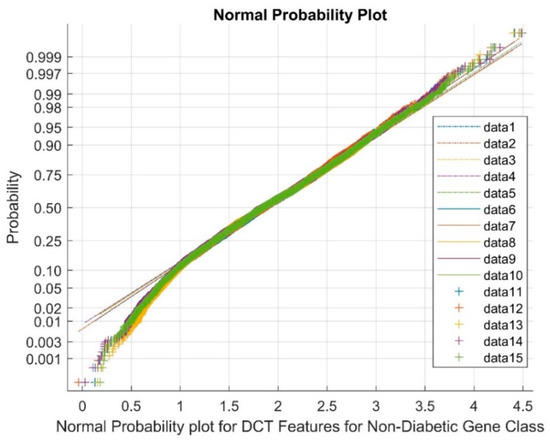

Figure 6 shows the normal probability plot for the DCT DR techniques for the non-diabetic gene class. The data points from 1 to 5 represent references, the upper bound values are represented from 6 to 10, and the cluster variable points are from 11 to 15. The plot shows that the total cluster of DCT DR outputs and nonlinearly correlated variables among the classes were observed due to the low values of mean and variance and the presence of negative kurtosis variables in the DR method.

Figure 6.

Normal probability plot representing DCT features for the non-diabetic gene class.

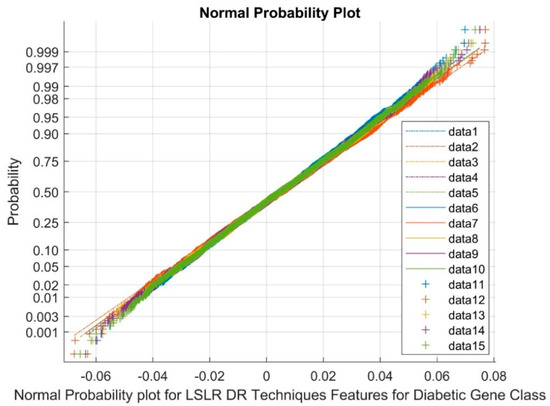

Figure 7 presents data points 1 to 5 as references, 6 to 10 as upper bound values, and 11 to 15 as variable points. The normal probability plot distinctly exhibits clustered groups corresponding to LSLR DR outputs. This observation underscores the existence of non-Gaussian and nonlinearly varying variables among the classes. This phenomenon can be attributed to the low variance and negative kurtosis attributes of the outcomes generated by the DR method.

Figure 7.

Normal probability plot for LSLR DR techniques in diabetic gene class.

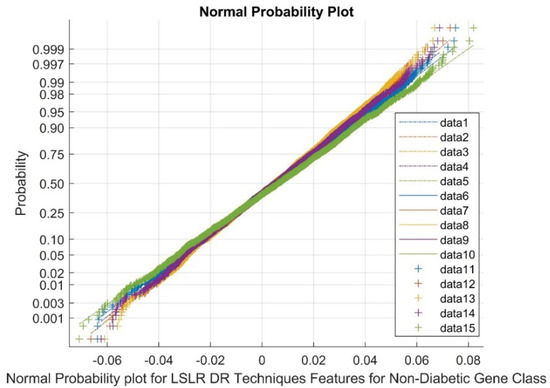

Figure 8 presents the normal probability plot for LSLR DR techniques in the non-diabetic class. The plot displays a discrete group of clusters for LSLR DR outputs. The data points 1 to 5 represent references, 6 to 10 represent upper bound values, and 11 to 15 represent variable points. The flat kurtosis variable and low variance in the DR methods indicate the presence of nonlinearity and a non-Gaussian nature.

Figure 8.

Normal probability plot for LSLR DR techniques in non-diabetic gene class.

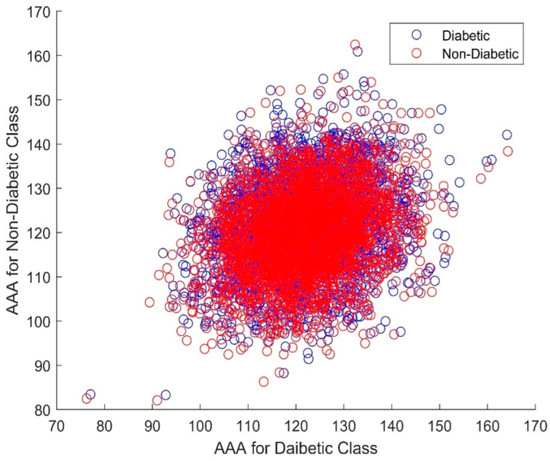

Figure 9 presents a scatter plot of the AAA DR techniques for the non-diabetic and diabetic gene classes. As can be seen, there is total clustering and overlapping of the variables in both classes. The non-Gaussian and nonlinear nature can also be observed from this graph. Furthermore, the AAA algorithm has a heavy computational cost on the classifier design. To reduce the burden of the classifiers, a feature selection process comprising the Elephant Herd Optimization (EHO) and Dragonfly algorithms was initiated.

Figure 9.

Scatter plot depicting AAA DR results for both non-diabetic and diabetic classes.

6. Feature Selection Methods

The reduced dimensionality dataset was used for the feature selection methods. The metaheuristic algorithms of Monarch Butterfly Optimization (MBO) [28], Slime Mold Algorithm (SMA) [29], Moth Search Algorithm (MSA) [30], Hunger Games Search (HGS) [31], Runge Kutta Method (RUN) [32], Colony Predation Algorithm (CPA) [33], weIghtedmeaNoFvectOrs (INFO) [34], Harris Hawks Optimization (HHO) [35], Rime Optimization Algorithm (RIME) [36], Elephant Herding Optimization (EHO) [37] algorithm, and Dragonfly Optimization Algorithm (DOA) [38] were considered for the FS.

MBO has two operators: migration and butterfly adjusting operator. The Lévy flight is used in the butterfly adjusting operator, which has infinite mean and variance. SMA is used for attaining global optimization. It has three stages: the first is to make a better solution approach based on the slime mold bound condition through the iterations attained from the tanh function; the second is wrap food, based on SMA, that imitates the updating position of the slime mold; and the third is an oscillator, based on step size, which is considered within bound. MSA was also used to find the global optimization. Moths have the propensity to follow Lévy flights. It exhibits similar characteristics to MBO such as being non-Gaussian and having infinite mean and infinite variance. HGS is a good population-based optimizer; however, when dealing with challenging optimization problems, the classic HGS sometimes shows premature convergence and stagnation shortcomings. Therefore, finding approaches that enhance solution diversity and exploitation capabilities is crucial. RUN is also an optimization technique. Although RUN has a solid mathematical theoretical foundation, there are still some performance defects when dealing with complex optimization problems. In the initialization phase, the focus is on constructing a population that evolves over several iterations. CPA has taken inspiration from the predatory habits of groups in nature. However, CPA suffers from poor exploratory ability and cannot always escape certain solutions. Two strategies are used in the pursuit process to increase the probability of successful predation: scattering prey and surrounding prey. Prey dispersal drives the prey in different directions and weakens the prey group. The weIghtedmeaNoFvectOrs (INFO) algorithm is also a population-based optimization algorithm operating based on the calculation of the weighted mean for a set of vectors. It has three techniques to update the vectors’ location: a local search, a vector-combining rule, and the weighted mean concept for a solid structure. The INFO algorithm’s reliance on weighted mean vectors may not capture nonlinear relationships between features and target variables effectively. It focuses on selecting individual features based on their weighted mean values, so may not effectively explore interactions or combinations of features. HHO is a computational intelligence tool, and its complexity may increase with the number of features in high-dimensional datasets. It may struggle to handle large feature spaces efficiently, leading to longer execution times. It replicates Harris hawk predator–prey dynamics. It is divided into three sections: exploring, transformation, and exploitation. It has a high convergence rate and a powerful global search capability, but it has an unsatisfactory optimization effect on high-dimensional or complex problems. RIME is also a good optimization algorithm for search space mechanisms and the typical idea is to compare the updated fitness value of an agent with the global optimum; if the updated value is better than the current global optimum, then the optimum fitness value is replaced, and the agent is recorded as the optimum. The advantage of such an operation is that it is simple and fast, but it does not help in the exploration and exploitation of the population and only serves as a record. However, algorithms like EHO and DOA are used as feature selection parameters for emulating the behavior observed in elephants and dragonflies for the better selection of features and offer effective approaches to address the abovementioned challenges in optimization techniques for FS.

- Elephant Herding Optimization (EHO) algorithm

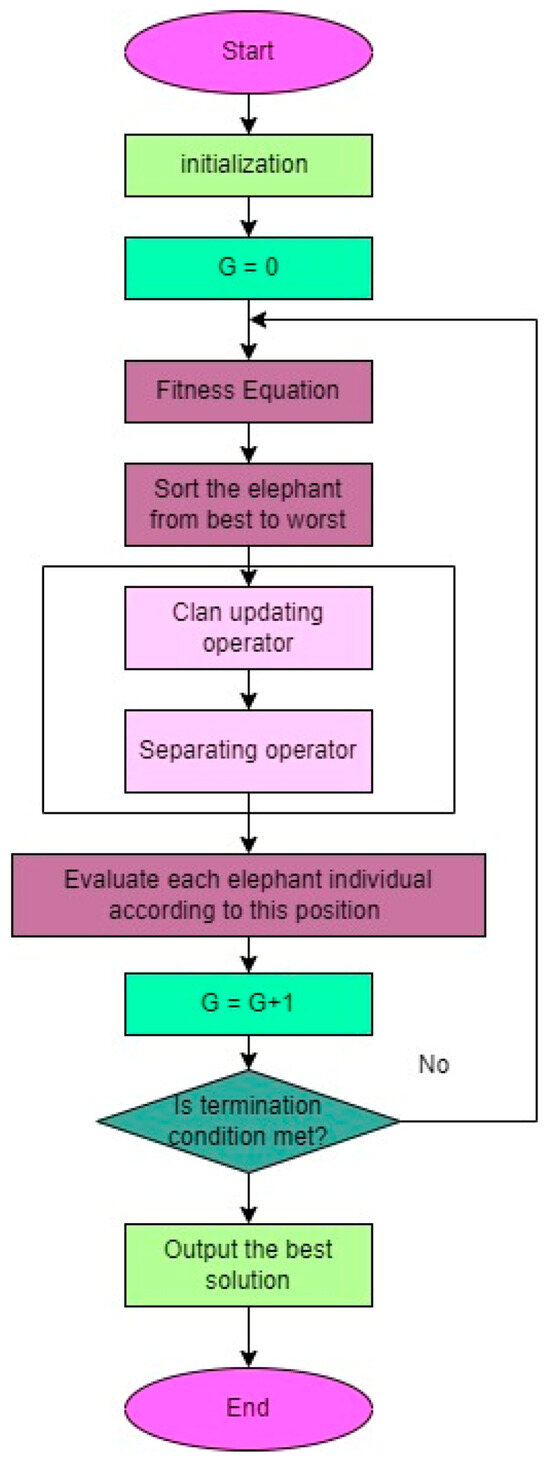

Wang et al. [37] introduced EHO as a metaheuristic algorithm inspired by the behavior of elephants in the African savanna. It has demonstrated effectiveness in solving optimization problems and has been successfully applied in various domains, including feature selection. In feature selection, the objective is to identify a subset of informative features from a larger set that are relevant to the target variable. EHO employs a herd of elephants to search for the optimal solution, with each elephant representing a potential solution. By combining global and local search strategies, the algorithm guides the elephants towards the best solution. The methodology of the EHO is depicted in Figure 10. EHO offers immense potential as a feature selection technique due to its ability to strike a balance between global and local searches, making it suitable for high-dimensional data. The initialization of the elephant herd involves assigning random positions to the elephants in the feature space, providing a comprehensive representation of the elephants’ positions and the overall movement of the herd.

Figure 10.

Diagram illustrating the process of the EHO algorithm.

The EHO algorithm [39] involves updating the positions of elephants within the herd. This update process considers both the old position () and the new position () of each elephant. A control parameter (α), which falls within the range of [0, 1], is used in conjunction with a randomly generated number (r ∈ [0, 1]) to determine the new position. Additionally, each elephant in the herd maintains a memory of its best position in the feature space. The best position is updated using the following equation, ensuring that the elephant’s memory is updated accordingly.

The algorithm includes the concept of the best position (Ybest) for each elephant within the herd. This best position is determined by considering the control parameter (β), which falls within the range of [0, 1]. The control parameter plays a role in updating and adjusting the best position of the elephant, ensuring that it reflects the optimal solution obtained during the optimization process.

By considering both the best and worst solutions, the EHO algorithm ensures a more comprehensive exploration of the solution space, leading to improved optimization performance.

- Dragonfly Optimization Algorithm (DOA)

The Dragonfly Algorithm (DA) is an optimization technique based on swarm intelligence, taking inspiration from the collective behaviors of dragonflies. Introduced by Mirjalili in 2016 [38], this algorithm mimics both static and dynamic swarming behaviors observed in nature. Figure 11 shows the flowchart of DOA. During the dynamic or exploitation phase, it forms large swarms and travels in a specific direction to confuse potential threats. In the static or exploration phase, the swarms form smaller groups, moving within a limited area to hunt and attract prey [40]. The DA is guided by five fundamental principles: separation, alignment, cohesiveness, attraction, and diversion. These principles dictate the behavior of individual dragonflies and their interactions within the swarm. In the equations that follow, K and Ki denote the current position and the ith position of a dragonfly, respectively, while N represents the total number of neighboring flies.

Figure 11.

Flowchart of the Dragonfly Optimization algorithm.

Separation: This implies that the static phase of the algorithm focuses on preventing dragonflies from colliding with each other in their vicinity. This calculation aims to ensure the avoidance of collisions among flies.

where represents the motion of the ith individual aimed at maintaining separation from other dragonflies.

Alignment: This denotes the synchronization of velocities among dragonflies belonging to the same group. It is represented as

This is represented by , which is called the velocity of the ith individual.

Cohesiveness: This represents the inclination of individual flies to converge towards the center of swarms. The calculation is

Attraction: The quantification of the attraction towards the food source is characterized by

Here, is the attraction of the food source, and represents the position of the food source.

Diversion: The diversion from the enemy is determined by the outward distance, which is calculated as

The calculation of the outward distance determines the diversion from the enemy, and it is expressed in terms of the step vector (∆K) and the current position vector (K) are used to update the locations of artificial dragonflies within the search space. The step vector (∆K) can be calculated using the direction of movement of the dragonfly:

The behavior of the dragonfly algorithm is influenced by factors such as separation weight (s), alignment weight (a), cohesion weight (c), attraction weight (h), and enemy weight (d). The inertia weight is represented by “ω”, and “t” represents the iteration number.

Through the manipulation of these weights, the algorithm can attain both exploration and exploitation phases. The position of the ith dragonfly at t + 1 iterations is determined by the following equation:

The evaluation of this method’s outcomes is conducted by assessing the consequence of the p-value using the t-test. Table 3 demonstrates the significance of the p-values associated with the EHO and Dragonfly Algorithm methods across the four DR techniques. The data presented in Table 3 reveal that both the EHO and Dragonfly Algorithms’ feature selection methods do not exhibit significant p-values across classes for all four dimensionality reduction methods. This p-value serves as an initial indicator to quantify the existence of outliers, nonlinearity, and non-Gaussian nature among the classes after the implementation of feature selection techniques.

Table 3.

Significance of p-values for feature selection methods using t-test across various DR techniques.

7. Classification Techniques

- NLR—Nonlinear Regression

The behavior of a system is expressed through mathematical equations to facilitate representation and analysis, ultimately aiming to determine an exact best-fit line between classifier values. Nonlinear regression introduces nonlinear and random variables (a, b) to capture the complexity of the system. The primary objective of nonlinear regression is to reduce the sum of squares. This involves measuring values from the dataset and computing the difference between the mean and each data point, squaring these differences, and summing them. The minimum value of the sum of squared differences indicates a better fit to the dataset.

Nonlinear models require more attention due to their inherent complexity, and researchers have devised various methods to mitigate this difficulty, such as the Levenberg–Marquardt and Gauss–Newton methods. Estimating parameters for nonlinear systems is achieved through least squares methods, aiming to minimize the residual sum of squares. Iterative techniques, including the Taylor series, steepest descent method, and Levenberg–Marquardt method (Zhang et al. [41]), can be employed for nonlinear equations. The Levenberg–Marquardt technique is commonly used for assessing the nonlinear least squares, offering advantages and producing reliable results through an iterative process.

The authors assume a represented model:

Here, represent the individual and supported variables of the ith iteration, are the parameters, and are the error terms that follow ).

Let be the starting values; the successive estimates are obtained using

where and are a multiplier and is the identitymatrix.

The integrity of the model is assessed using the MSE, which quantifies the discrepancy between the experimental and estimated values. The MSE is computed as the average squared difference between the actual and predicted values. The overall experimental values are expressed in terms of N.

The steps for nonlinear regression are the initialization of the initial parameters and the generation of curves based on these values. The goal is to iteratively modify the parameters to minimize the MSE and bring the curve closer to the desired value. The process continues until the MSE value no longer changes compared to the previous iteration, indicating convergence.

- Linear Regression (LR)

In the investigation of gene expression data, linear regression is a suitable method for obtaining the best-fit curve, as the conveyance levels in the genes exhibit only minor variations. To identify the most informative genes, a feature selection process is performed by comparing the training dataset with the gene expression data within different levels of diversity. In this linear regression model, the dependent variable, denoted as x, is associated with the independent variable, y. The model aims [42] to predict values using the x variable, optimizing the regression fitness value based on the population in the y variable. The hypothesis function for a single variable is given by

where represents the parameters. The objective is to select the range of that ensures closely approximates y in the training dataset (x, y).

Here, “m” symbolizes the total count of samples within the training dataset. For LR models with n variables, the hypothesis function becomes

and the cost function is given by

where θ is a set of parameters {}. The gradient descent algorithm is employed to minimize the cost function, and the partial derivative of the cost function is computed as

To update the parameter value , the following equation is used:

where β represents the learning rate, and is continuously computed until convergence is reached. In this study, β is set to 0.01.

The algorithm for LR involves the following steps:

Feature selection parameters, obtained from algorithms such as the Bessel function, DCT, LSLR, and AAA, are used as input for the classifiers.

A line represented by is fitted to the data in a linear manner.

The cost function is formulated with the aim of minimizing the squared error existing between the observed data and the predictions.

The solutions are found by equating the derivatives of to zero.

To yield the coefficient of MSE, repeat steps 2, 3, and 4.

- Gaussian Mixture Model (GMM)

GMM is a well-known unsupervised learning technique in machine learning used for many applications like pattern recognition and signal classifications. It involves integrating related objects based on clustering techniques. By classifying the data, GMM [43] facilitates the prediction and computation of unrated items within the same category. Hard and soft clustering techniques are used by GMM, and it utilizes the distribution for data analysis. Each GMM consists of multiple Gaussian distributions (referred to as “g”). The PDF of GMM combines these distributed components linearly, enabling easier analysis of the generated data. When generating random values as a vector “a” within an n-dimensional sample space χ, if “a” adheres to a Gaussian distribution, the expression for its probability distribution function is as follows:

Here, represents the mean vector in the n-dimensional space, and is the covariance matrix of size . The determination of the covariance matrix and mean vector is essential for the Gaussian distribution. Multiple components are mixed in the Gaussian distribution function [44], with each component and the equation of mixture distribution given by

In this equation, represents the mixing coefficient corresponding to the Gaussian mixture, while denote the mean vector and covariance matrix of that Gaussian component, respectively.

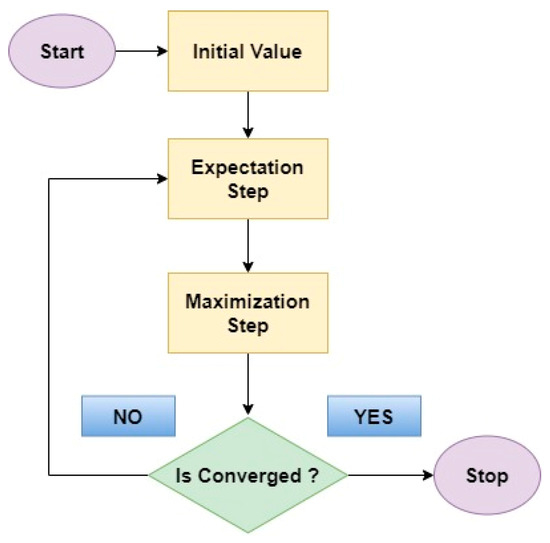

- Expectation Maximum (EM)

The EM algorithm [45] serves as a classifier in this context. Its primary objective is to estimate missing values within a dataset and subsequently predict those values to maximize the dataset’s order based on the application’s requirements. Consider two random variables, X and Y, involved in the prediction process and determining the order of the data in rows. Variable X is observable and known in the dataset, while the unknown variable Z needs to be predicted to set the value of Y.

The maximum likelihood estimation is obtained as

To estimate the expected value of the log-likelihood function, we calculate

The above quantity is maximized to compute the maximum value, resulting in

The expectation and maximization steps are iteratively repeated until a converged sequence of values is reached as mentioned in Figure 12 which is the flow diagram of expectation maximization.

Figure 12.

Flow diagram of Expectation Maximum.

- Bayesian Linear Discriminant Classifier (BLDC)

BLDC [46] is commonly employed to regularize high-dimensional signals, reduce noisy signals, and improve computational efficiency. Before conducting Bayesian linear discriminant analysis, an assumption is made that a target, denoted as b, is related to a vector x with the addition of white Gaussian noise, c.

This relationship can be expressed as . The function x is assigned weights, and its likelihood function is given by

where the pair represents G. The of prior distribution is expressed as

The regularization square matrix is given by

and is a hyperparameter obtained from data forecasting, while l represents the assigned vector number. By applying Bayes’ rule, can be calculated as

The mean vector υ and the covariance matrix must adhere to the specific norms outlined in Equations (50) and (52) for the posterior distribution. The predominant nature of the posterior distribution is Gaussian.

When predicting the input vector , the probability distribution for regression can be expressed as

Again, the nature of this prediction analysis is predominantly Gaussian, with the mean expressed as and variance expressed as .

- Logistic Regression (LoR)

Logistic Regression (LoR) has proven to be effective in classifying diseases such as diabetes, types of cancer, and epilepsy. In this context, function y that represents the disease level is considered, ranging from 0 to 1 to indicate non-diabetic and diabetic patients, respectively. Gene expressions are represented by a vector , where each element corresponds to the expression level of the jth gene. Using a model-based approach for a dataset , the aim is to identify informative genes for diabetic patients based on the likelihood of y being 1 given x. To achieve dimensionality reduction, logistic regression is utilized to select the most relevant “q” genes. The gene expression representation * corresponds to the gene expression, with j ranging from 1 to q, while the binary disease status is denoted by , where i ranges from 1 to n. The logistic regression model can be expressed as

The objective is to maximize the fitness and log-likelihood, which can be achieved by obtaining the following function

where τ is a parameter that limits the reduction in υ near 0, as defined by the model [47,48], and denotes the Euclidean length of . The selection of are determined using the parametric bootstrap method, which imposes constraints on accurate error prediction. Initially, = 0, for the purpose of calculating the cost function. It is then varied with different parameters to minimize the cost function. The sigmoid function is applied to restrict values between 0 and 1, serving as an attenuation mechanism. The threshold cut-off value of 0.5 is used to classify patients as either diabetic or non-diabetic. Any probability below the threshold is considered indicative of non-diabetic patients, while values above the threshold indicate diabetic patients.

- SDC—Softmax Discriminant Classifier

The SDC is used to verify and detect the group to which a particular test sample belongs [49]. It weighs the distance between the training samples and the test sample within a particular class or group of data. Z is represented as

consisting of samples from distinct classes named q, . Each class, represented by , contains samples from the class, where The sum of the sample sizes, given a test sample , is passed through the classifiers to obtain minimal construction errors, thereby assigning it to the class q. The transformation of class samples and test samples in SDC involves nonlinear enhancement values. This is achieved through the following equations:

In these equations, represents the differentiate of the class and approaches zero, resulting in the maximization of . This asymptotic behavior leads to the maximum likelihood of the test sample belonging to a particular class.

- Support Vector Machines

The SVM classifier is a significant machine learning approach widely used for classification problems, particularly in the phase of nonlinear regression [50]. In this study, three distinct methods are explored for data classification:

SVM-Linear: this method utilizes a linear kernel to classify the data.

SVM-Polynomial: this approach involves the use of a polynomial kernel for data classification.

SVM-Radial Basis Function (RBF): the RBF kernel is used here to classify the data.

These three SVM methods offer different strategies for effectively classifying datasets, allowing researchers to choose the most suitable approach based on their specific classification requirements.

The training time and computational complexity of the SVM depend on the data and classifiers used. When the number of supports in the SVM increases, it results in higher computational requirements due to the calculation of floating-point multiplications and additions. To address this issue, K-means clustering techniques have been introduced to reduce the number of supports in the SVM. In the linear case, Lagrange multipliers can be employed, and the data points on the borders are expressed as . Here, m represents the number of supports, represents the target labels for y, and the linear discriminant function is used.

The process of implementing the Support Vector Machine (SVM) involves several key steps.

Step 1: The first step is to use quadratic optimization to linearize and converge the problem. By transforming the primal minimization problem into a dual optimization problem, the objective is to maximize the dual Lagrangian LD with respect to :

subject to , where .

Step 2: The next step involves solving the quadratic polynomial programming to obtain the optimal separating hyperplane. The data points with non-zero Lagrangian multipliers () are identified as the support vectors.

Step 3: The optimal hyperplane is determined based on the support vectors, which are the data points closest to the decision boundary in the trained data.

Step 4: K-means clustering is applied to the dataset, grouping the data into clusters according to the conditions from Steps 2 and 3. Three points are randomly chosen from each cluster as the center points, which are representative points from the dataset. Each center point acquires the points around them.

Step 5: When there are six central points, each representing an individual cluster, the SVM training data are acquired through the utilization of kernel methods.

Polynomial Function: K (X, Z) = (XT Z + 1) d

7.1. Training and Testing of Classifiers

Due to the limited availability of training data, we employed k-fold cross-validation, a widely used technique for evaluating machine learning models. The methodology described by Fushiki et al. [51] was followed to conduct the k-fold cross-validation. Initially, the dataset was divided into k equally sized subsets or “folds”. This process was repeated for all k-folds, ensuring that each fold was used once for testing. Consequently, k performance estimates (one for each fold) were obtained. To obtain an overall estimate of the model’s performance, the average of these k performance estimates was calculated. After training and validating the model using k-fold cross-validation, it was retrained on the complete dataset to make predictions on new, unseen data. The significant advantage of utilizing this method is the more reliable model performance compared to other test split methods, as the technique maximizes the utilization of the available data. Here, we adopted a k-value of 10-fold cross-validation. Furthermore, the research incorporated 2870 dimensionally reduced features per patient, focusing on a cohort of 20 patients with diabetes and 50 non-diabetic patients. The utilization of cross-validation eliminates any reliance on a specific pattern for the test set, enhancing the robustness of our findings. The training process is regulated in the MSE proposed by Wang et al. [52], which is defined as follows:

where Oj is the observed value at time j, and Tj is the target value at model j.

Table 4 represents confusion matrix for detecting diabetes. The following terms in Table 4 can be defined as:

TP—true positive: a patient is accurately classified into the diabetic class.

TN—true negative: a patient is accurately recognized as belonging to the non-diabetic class.

FP—false positive: a patient is inaccurately classified as belonging to the diabetic class when they actually belong to the non-diabetic class.

FN—false negative: a patient is inaccurately classified as being in the non-diabetic class when they should be categorized as belonging to the diabetic class.

Table 4.

Confusion matrix for detecting diabetes.

Table 4.

Confusion matrix for detecting diabetes.

| Clinical Situation | Predicted Values | ||

|---|---|---|---|

| Diabetic | Non-Diabetic | ||

| Real Values | Diabetic class | TP | FN |

| Non-diabetic class | FP | TN | |

Table 5 provides insight into the performance of the classifiers without the feature selection method, focusing on the training and testing Mean Squared Error (MSE) across various DR techniques. The training MSE values consistently range between 10−4 and 10−10, while the testing MSE varies from 10−4 to 10−8. Among the classifiers, the SVM (RBF) classifier using the AAA DR technique without feature selection achieves the lowest training and testing MSE, specifically 1.93 × 10−10 and 1.77 × 10−8, respectively. Notably, a lower testing MSE indicates superior classifier performance. It is evident from Table 5 that higher testing MSE values correspond to lower classifier performance, regardless of the DR techniques used.

Table 5.

Analysis of MSE in for different DR techniques without feature selection.

Table 6 exhibits the training and testing of MSE in the classifiers with EHO feature selection method across all four DR techniques. The training MSE varies from 10−5 to 10−10, while the testing MSE varies between 10−5 and 10−8. The SVM (RBF) classifier in the AAA DR method with PSO feature selection achieved a minimum training and testing MSE of 1.99 × 10−10 and 2.5 × 10−8, respectively. The Bessel function DR method indicates slightly lower training and testing MSE values for the classifiers when compared to the other three DR techniques. All of the classifiers had slightly enhanced testing performance when compared to methods without feature selection. This indicates the enhancement in classifier performance irrespective of the DR technique.

Table 6.

Analysis of MSE performance for classifiers using EHO feature selection methods across different DR techniques.

Table 7 demonstrates the training and testing Mean Squared Error (MSE) performance of classifiers utilizing the Dragonfly Algorithm-based feature selection method across various dimensionality reduction techniques. The training MSE values range from 10−6 to 10−9, while the testing MSE varies between 10−5 and 10−8. The SVM (RBF) classifier, when combined with the Dragonfly feature selection method, achieved a minimal training MSE of 1.66 × 10−9 and a testing MSE of 3.25 × 10−8. Notably, this feature selection method led to improvements in the training and testing performance of all classifiers. This enhancement is reflected in improved accuracy, MCC, and Kappa parameters, regardless of the specific dimensionality reduction technique employed.

Table 7.

Analysis of MSE in classifiers for various DR techniques with Dragonfly feature selection methods.

7.2. Selection of Target

The non-diabetic class target value is taken at the lower side from 0→1, and this is mapped according to the following constraint:

Here, represents the mean value of the input feature vectors for the N number of non-diabetic features considered for classification. Similarly, for the diabetic class ()), the target value is mapped to the upper end of the zero-to-one (0→1) scale. This mapping is established based on the following:

Here, signifies the average value of input feature vectors for the M number of diabetic cases used for classification. It is important to highlight that the target value is set to be higher than the average values of and . This selection of target values requires the discrepancy between them to be at least 0.5, as expressed by the following:

The targets for non-diabetic and diabetic are chosen as 0.1 and 0.85, respectively. Once the target is fixed, MSE is used for evaluating the performance of the classifiers. Table 8 shows the selection of optimal parameters for the classifiers after training and testing process.

Table 8.

Selection of optimal parametric values for classifiers.

8. Results and Findings

The study employs the conventional tenfold testing and training approach, where 10% of the input is dedicated to testing, while the remaining 90% is utilized for training. The selection of performance metrics is pivotal for assessing the efficacy of classifiers. The assessment of classifier performance, especially in binary classification scenarios like distinguishing between diabetic and non-diabetic cases from pancreatic microarray gene data, relies on the utilization of a confusion matrix. This matrix facilitates the computation of performance metrics including accuracy, F1 score, MCC, error rate, FM metrics, and Kappa, which are commonly utilized to gauge the comprehensive performance of the model. The relevant parameters associated with the classifiers for performance analysis are illustrated in Table 9.

Table 9.

Performance metrics.

The performance of the classifier was evaluated using several metrics, including Acc, F1 score, MCC, ER, FM, and Kappa. Accuracy is the fraction of predictions that are correct, and it is a measure of the overall performance of the classifier. F1 score is the harmonic mean of precision and recall, and it is a measure of the classifier’s ability to both correctly identify positive instances and to correctly identify negative instances. MCC is a measure of the correlation between the observed and predicted classifications, and it is a more sensitive metric than accuracy or F1 score. Error rate is the fraction of predictions that are incorrect, and it is the complement of accuracy. The FM metric is a generalization of the F-measure that adds a beta parameter, and it is a measure of the classifier’s ability to both correctly identify the values of positive and negative instances, with a weighting that can be adjusted to favor one or the other. Kappa is a statistic that measures agreement between observed and predicted classifications, adjusted for chance. The results are tabulated in Table 10.

Table 10.

Parametric analysis of different classifiers through various DM techniques.

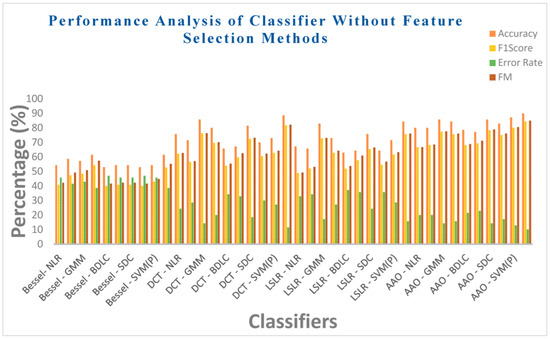

Table 10 illustrates the performance analysis of ten classifiers, considering metrics such as Acc, F1 score, MCC, ER, F-measure, and Kappa values. This analysis is conducted for four DR methods without the incorporation of two feature selection methods. Table 9 reveals that the EM classifier in the Bessel function DR technique achieves a moderate accuracy of 61.42%, an F1 score of 54.23%, a moderate error rate of 38.57%, and an F-measure of 57.28%. However, the EM classifier exhibits a lower MCC value of 0.3092 and a Kappa value of 0.2645. On the other hand, the SVM (linear) classifier in the Bessel function DR method demonstrates a low accuracy of 52.85% along with a high error rate of 47.15%. Additionally, it exhibits an F1 score of 40% and an F-measure of 41.57%. The MCC and Kappa values for the SVM (linear) classifier are notably low, at 0.06324 and 0.05714, respectively. Across the Bessel function DR techniques, all classifiers exhibit poor performance in the various metrics. This trend can be attributed to the intrinsic properties of the Bessel function, which is evident from the non-negative values of the statistical parameters. Equally, the SVM (RBF) classifier in the context of the DCT DR technique achieves a respectable accuracy of 88.57%, complemented by a low error rate of 11.42%. Furthermore, it attains an F1 score of 81.81% and an F-measure of 82.15%. The MCC and Kappa values of the SVM (RBF) classifier reach 0.7423 and 0.7358, respectively. Within the SVM (RBF) classifier, the AAA DR technique exhibits a remarkable accuracy score of 90%, coupled with a low error rate of 10%. This is accompanied by an F1 score of 84.44% and an F-measure of 84.97%. The MCC and Kappa values of the SVM (RBF) classifier are noteworthy, totaling 0.7825 and 0.772, respectively. Remarkably, regardless of the DR technique employed, all classifiers manage to maintain accuracy within the range of 52% to 85%. This is primarily due to the inherent limitations of the DR techniques. Therefore, incorporating feature selection methods is highly recommended to enhance the performance of these classifiers.

Figure 13 provides an overview of the performance analysis of ten classifiers concerning the metrics of accuracy, F1 score, error rate, and F-measure values. This analysis is carried out within the context of four dimensionality reduction methods, specifically without feature selection methods. Table 10 shows that the EM classifier in the Bessel function DR technique achieves a modest accuracy of 61.42%, along with an F1 score of 54.23%. Moreover, it exhibits a moderate error rate of 38.57% and an F-measure of 57.28%. On the other hand, the SVM (linear) classifier in the Bessel function DR method demonstrates a lower accuracy of 52.85%. This classifier is accompanied by a higher error rate of 47.15%, an F1 score of 40%, and an F-measure of 41.57%. Across the performance metrics, all classifiers exhibit suboptimal performance within the Bessel function DR technique. This trend is observed consistently across various measures. However, the SVM (RBF) classifier within the DCT DR technique maintains an impressive accuracy level of 88.57%. Furthermore, it exhibits a commendably low error rate of 11.42%, an F1 score of 81.81%, and an F-measure of 82.15%. Employing the AAA DR technique in the SVM (RBF) classifier results in achieving an elevated accuracy rate of 90%. Additionally, this combination yields a notably low ER of 10% and an F1 score of 84.44%, accompanied by an F-measure of 84.97%.

Figure 13.

Different classifiers without feature selection methods.

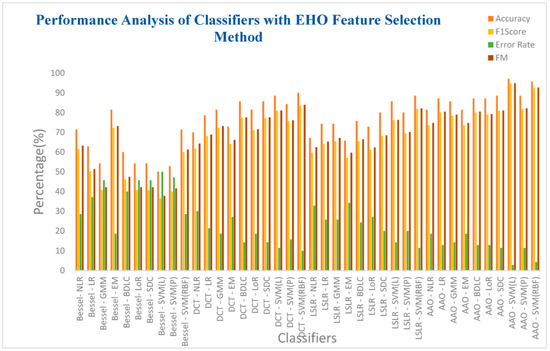

Table 11 presents an in-depth analysis of the performance of ten classifiers concerning four DR methods integrated with the EHO feature selection technique. Notably, the SVM (RBF) classifier within the AAA DR technique achieves an exceptional accuracy of 95.71%. This classifier further demonstrates a commendable F1 score of 92.68%, accompanied by a notably low error rate of 4.28% and an impressive F-measure of 92.71%. Additionally, the SVM (RBF) has a high MCC value of 0.897 and a Kappa value of 0.8965. However, a contrasting performance is observed with the SVM(Linear) classifier within the Bessel function DR technique. Once again, this classifier registers a relatively low accuracy of 50%, coupled with a high error rate of 50%. Further metrics include an F1 score of 36.36% and an F-measure of 37.79%. Intriguingly, the SVM (Linear) classifier achieves null values for both MCC and Kappa, marking a unique and distinctive characteristic of its performance. All classifiers exhibit improved accuracy within the DCT, LSLR, and AAA DR techniques. However, the impact of the EHO feature selection method does not translate into substantial enhancements for classifiers employing the Bessel function DR method.

Table 11.

Performance metrics with Elephant Herding Optimization (EHO) feature selection method for different DR techniques.

Figure 14 presents the analysis of the ten classifiers concerning the four DR methods combined with the EHO feature selection techniques. Furthermore, it is evident from the insights presented in Table 11 that the SVM (RBF) classifier, operating within the AAA DR technique, achieves an impressively high accuracy of 95.71%. Additionally, this classifier demonstrates a notable F1 score of 92.68%, accompanied by a commendably low error rate of 4.29% and an impressive F-measure of 92.71%. Equally, the SVM(Linear) classifier used within the Bessel function DR technique reflects a lower accuracy of 50%, coupled with a higher error rate of 50%. Correspondingly, the F1 score is registered at 36.36%, and the F-measure reaches 37.79%. Overall, the classifiers exhibit relatively low performance within the context of the Bessel function DR technique.

Figure 14.

Different classifiers with EHO feature selection methods.

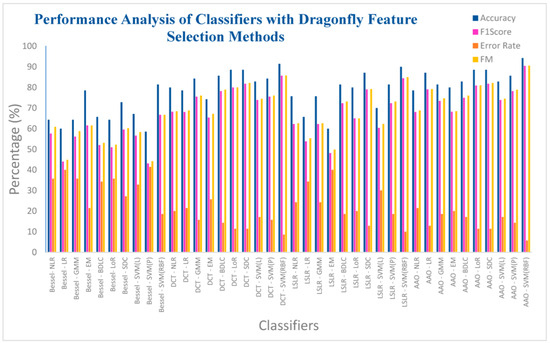

Table 12 presents the analysis of the ten classifiers concerning the four DR methods combined with the Dragonfly method. As depicted in Table 12, it is evident that the SVM (RBF), operating within the AAA DR technique, achieves an impressively high accuracy rate of 94.28%. Moreover, this classifier demonstrates a commendable F1 score of 90.47%, accompanied by a relatively low error rate of 5.72% and an appreciable F-measure of 90.57%. Furthermore, the SVM (RBF) classifier exhibits notable values of MCC and Kappa, standing at 0.866 and 0.864, respectively. On the other hand, the SVM (Polynomial) classifier, applied within the context of the Bessel function DR technique, achieves a lower accuracy rate of 58.57%. Correspondingly, it registers a higher error rate of 41.43%, along with an F1 score of 43.13% and an F-measure of 44.17%. However, the MCC and Kappa values for the SVM (Polynomial) classifier are notably lower, reaching 0.1364 and 0.1287, respectively. Among the classifiers utilized in the Bessel function DR method, only the SVM (RBF) classifier achieves an accuracy above 78%. Additionally, the SVM (RBF) classifier attains high accuracy in the DCT DR and LSLR DR methods, reaching 91% and 90%, respectively.

Table 12.

Performance metric of different classifiers with four DR techniques with the Dragonfly feature selection method.

Figure 15 illustrates the performance assessment of the ten classifiers concerning the four DR methods, paired with the Dragonfly feature selection technique. It is observed from Table 12 that the SVM (RBF) classifier, within the AAA DR technique, attains a notably high accuracy rate of 94.28%. This classifier also demonstrates a commendable F1 score of 90.47%, coupled with a comparatively low ER of 5.72%, and a noteworthy F-measure of 90.57%. Conversely, the SVM (Polynomial) classifier, employed in the context of the Bessel function DR technique, registers a relatively low accuracy of 58.57%. Correspondingly, it records a higher error rate of 41.43%, accompanied by an F1 score of 43.13%, and an F-measure of 44.17%. Among the four dimensionality reduction methods, the SVM (RBF) classifier consistently achieves individual accuracy levels exceeding 81%. However, it is important to note that the classifier’s performance in the Bessel function DR method, when paired with the Dragonfly feature selection, remains in the lower performance category.

Figure 15.

Different classifiers with Dragonfly feature selection method.

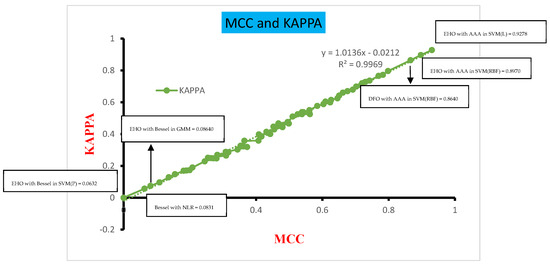

Figure 16 presents the comparative analysis of the MCC and Kappa parameters across the various classifiers concerning the four different DR techniques. This analysis was conducted for measuring the MCC and Kappa that serve as benchmarks, shedding light on the performance outcomes of the classifiers across diverse inputs. In this study, the inputs are categorized into three groups: dimensionally reduced without feature selection, with EHO feature selection, and with Dragonfly feature selection methods. These classifiers’ performance is evaluated based on the MCC and Kappa values derived from these inputs. The average MCC and Kappa values across the classifiers are calculated to be 0.2984 and 0.2849, respectively. A systematic approach is formulated to assess the classifiers’ performance, drawing insights from Figure 14. The MCC values are categorized into three ranges: 0.0–0.25, 0.251–0.54, and 0.55–0.9. Notably, the classifiers exhibit poor performance within the first range, while the MCC vs. Kappa slope demonstrates a significant upsurge within the second range of MCC values. In contrast, the third range of MCC values corresponds to a higher level of classifier performance, devoid of any substantial anomalies.

Figure 16.

Classifier performance in terms of MCC and Kappa.

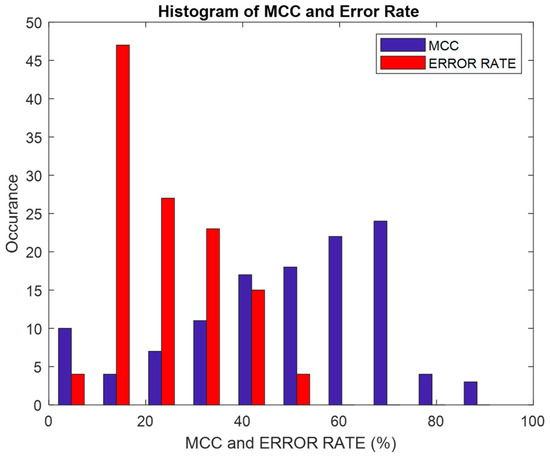

Figure 17 shows a histogram of the error rate and MCC (%) parameters that were analyzed. It can be seen that the maximum error rate is 50% and the maximum MCC is 90%. The histogram of the error rate is skewed at the right side of the graph, which indicates that for any of the DR methods, and irrespective of the feature selection method, the classifier’s error rate does not go beyond 50%. The histogram of MCC depicts the classifier as being sparser at the edges and covering more points in the middle area.

Figure 17.

Performance of error rate and MCC (%).

8.1. Computational Complexity (CC)

The analysis of the classifiers in this study considers their CC, which is determined based on the size of the input (denoted as O(n)). A lower CC, indicated by O(1), is desirable as it indicates that the complexity remains constant regardless of the input size. However, it is directly proportional to the number of inputs and computational complexity. It is notable that CC is independent of the size of the input, which is a favorable characteristic for any type of algorithm. If it increases logarithmically with the increase in ‘n’, it is represented as O(logn). Additionally, hybrid models of classifiers are used that incorporate DR techniques and feature selection methods in their classification process.

Table 13 presents the CC of the classifiers without incorporating feature selection methods. A noteworthy observation from the table is that the CC of all of the classifiers is relatively similar. However, their performance in terms of accuracy is relatively low. Among the classifiers, the Bessel function classifier demonstrates a moderate CC of O(n3logn), while the Discrete Cosine Transform, Least Squares Linear Regression, and Artificial Algae Algorithm exhibit higher CC with improved accuracy, represented by O(2n4log2n), O(2n5log4n), and O(2n5log8n), respectively, when compared to the other classifiers. Additionally, when considering the values of MCC and Kappa, the DST, LSLR, and AAA classifiers exhibit similar performance.

Table 13.

CC of the classifiers without feature selection methods.

Table 14 illustrates the CC of the classifiers utilizing the EHO feature selection method. The table reveals that the CC of all of the classifiers is relatively similar, while their performance demonstrates significant accuracy. Similar to the case without feature selection, the Expectation Maximum classifier exhibits a higher computational complexity of O(n5logn) along with remarkable accuracy. Regarding the DCT, LSLR, and AAA classifiers, they achieve similar CC to the SVM (RBF) classifier with O(2n6log2n), O(2n7log4n), and O(2n7log8n), respectively. Notably, the SVM (RBF) classifier in combination with the EHO feature selection technique for DCT, LSLR, and AAA achieves the highest accuracy among all classifiers, with accuracies of 90%, 88.57%, and 95.71%, respectively. Furthermore, the corresponding Kappa values for these classifiers are 0.7655, 0.65, and 0.8965, indicating their strong performance.

Table 14.

CC of the classifiers with EHO feature selection method.

Table 15 provides insights into the CC of the classifiers using the Dragonfly method. From the table, it can be seen that the CC of all of the classifiers is relatively similar, while their performance exhibits a significant level of accuracy. Notably, all four dimensionality reduction techniques demonstrate the highest CC compared to their counterparts. Specifically, the Bessel function, DCT, LSLR, and AAA classifiers achieve a computational complexity of O(8n5log2n), O(8n5log2n), O(8n6log4n), and O(8n6log8n), respectively. Regarding accuracy, the Bessel function, DCT, LSLR, and AAA classifiers achieve the highest accuracy values of 81.42%, 91.42%, 90%, and 95.71%, respectively. Moreover, the corresponding Kappa values for these classifiers are 0.538, 0.796, 0.772, and 0.864, indicating their robust performance. A comparison with previous work is provided in Table 16.

Table 15.

CC of the classifiers with Dragonfly feature selection method.

Table 16.

Comparison with previous work.

As observed in Table 16, it is evident that a variety of machine learning classifiers, including SVM (RBF), NB, LoR, DT, NLR, RF, multilayer perceptron, and DNN, have been employed for diabetic classification using clinical databases. The accuracies of these classifiers span the range of 67% to 95%. However, the present investigation focuses on diabetes detection using microarray gene data, where SVM (RBF) stands out with an accuracy of 95%.

8.2. Limitations and Major Outcomes

The findings of this study may be limited to the specific population of type II diabetes mellitus patients and may not be applicable to other populations or different types of diabetes. The analysis in this study relies on microarray gene data, which may not be readily available or accessible in all healthcare settings. The methods proposed in this study, such as microarray gene arrays, may involve complex and expensive procedures that are not feasible for routine clinical practice. The performance of the classifiers in this study may be influenced by the presence of outliers in the data. Outliers can have a significant impact on the accuracy and reliability of the classification results. The developed classification approach, which utilizes various dimensionality reduction techniques and feature selection methods, has demonstrated its potential in effectively screening and predicting diabetic markers, while also identifying associated diseases such as strokes, kidney failure, and neuropathy. An outcome of this study is the establishment of a comprehensive database for the mass screening and sequencing of diabetic genomes. By incorporating microarray gene data and leveraging the proposed classification techniques, this database enables the identification of patterns and trends in diabetes outbreaks associated with different lifestyles.

The ability to detect diabetes in its early stages and predict associated diseases is of utmost importance for chronic diabetic patients. This will facilitate timely interventions, improve disease management, and, ultimately, lead to better patient outcomes. Overall, this study contributes valuable insights to the field and lays the foundation for further investigations into the early detection and management of type II diabetes mellitus patients.

9. Conclusions