ClinSegNet: Towards Reliable and Enhanced Histopathology Screening

Abstract

1. Introduction

- Lack of tissue awareness: Most methods do not consider the tissue source of the input images. The model cannot distinguish structural patterns from different organs during training. This “tissue insensitivity” leads to unstable performance under multi-organ mixed training and limits generalisability.

- Low recall of lesion regions: Most objectives are dominated by pixel accuracy or the Dice coefficient, neglecting recall. In clinical screening, missing a potential lesion is more harmful than false detection. Improving recall is, therefore, critical, even at the expense of some precision.

- Organ embedding mechanism: Organ label information was integrated into the model, enabling conditional modulation of different tissues during feature extraction and improving cross-organ generalisation.

- Edge-assisted branch: Edge supervision was introduced to strengthen boundary localisation, reducing lesion blurring and breakage, and improving contour consistency.

- Recall-prioritised loss function (HistoLoss): A composite loss combining Tversky and binary cross entropy (BCE) was formulated to bias optimisation towards recall while maintaining training stability.

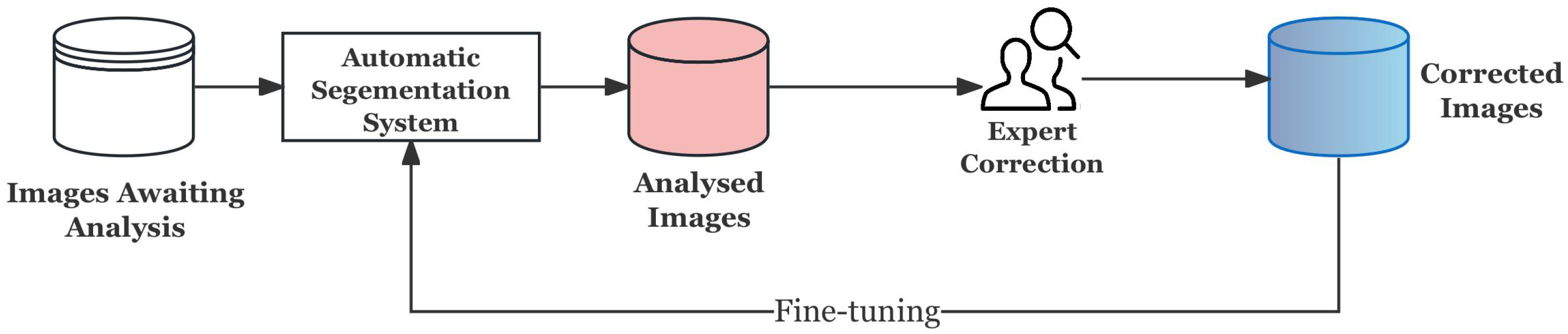

- Expert-guided refinement: An uncertainty-driven Human-in-the-Loop (HITL) strategy was adopted to selectively fine-tune high-uncertainty cases, simulating expert correction in clinical workflows.

- Proposed ClinSegNet, a clinically oriented, recall-first segmentation framework for histopathology images.

- Designed HistoLoss, a composite loss function combining BCE and Tversky, to ensure stable optimisation while prioritising recall.

- Incorporated organ-aware embedding and edge-assisted supervision into a SEU-Net backbone, enhancing cross-organ discrimination and boundary localisation.

- Introduced a human-centred refinement strategy based on uncertainty-driven HITL, demonstrating that even limited recall improvements hold clinical value.

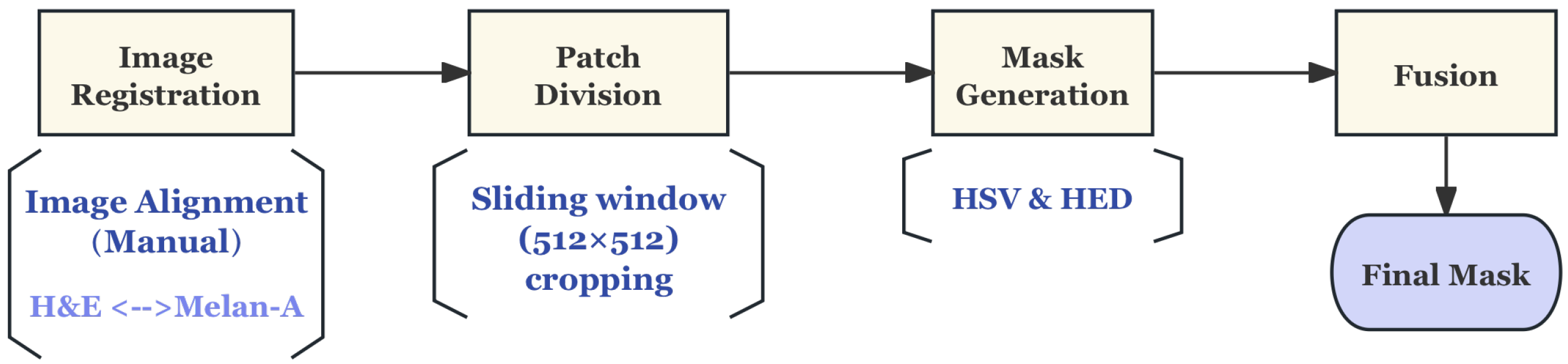

- Established a practical clinical data processing pipeline, where pixel-level annotations were automatically derived from IHC-to-H&E mapping and then integrated with public datasets, enabling effective training under limited clinical data conditions.

2. Literature Review

2.1. Medical Image Segmentation

- Radiological imaging, such as computed tomography (CT), magnetic resonance imaging (MRI), and ultrasound, is commonly used to visualise anatomical structures and detect lesions at a macroscopic scale. Such images have relatively stable imaging parameters and little variation in resolution, but may be affected by noise, motion artefacts, and signal loss caused by metal implants [6].

- Microscopy-based imaging, including histopathology and cytology imaging, reveals cellular structure and tissue morphology at the microscopic level. Such images have extremely high resolution and rich detail, accompanied by significant colour differences, complex textures, and morphologically diverse nuclear and tissue structures. Different modalities place different demands on the input scale, feature extraction strategy, context modelling, and generalisation ability of the segmentation model [7].

2.2. Histopathology Image Segmentation Based on Deep Learning

2.3. Human-in-the-Loop (HITL) Approaches in Medical AI

2.4. Research Gap and Motivation

- Ignoring recall: Most existing studies use Dice or IoU as the main evaluation metric, while recall is often de-emphasised or not reported at all, although it matters more for clinical safety.

- Risk of missed detection: Due to excessive focus on overall accuracy, current methods tend to miss lesions that are small in size or difficult to detect, thus undermining their utility in early screening tasks.

- Limited HITL focus: Current Human-in-the-Loop (HITL) methods are mainly applied to classification tasks, annotation optimisation, or diagnostic assistance, and pay little attention to pixel-level segmentation tasks.

- Lack of recall-oriented HITL strategies: Existing HITL frameworks rarely introduce recall optimisation explicitly, although it is precisely what ensures the reliability of diagnostic segmentation of pathological images.

3. Methods

3.1. Datasets and Pre-Processing

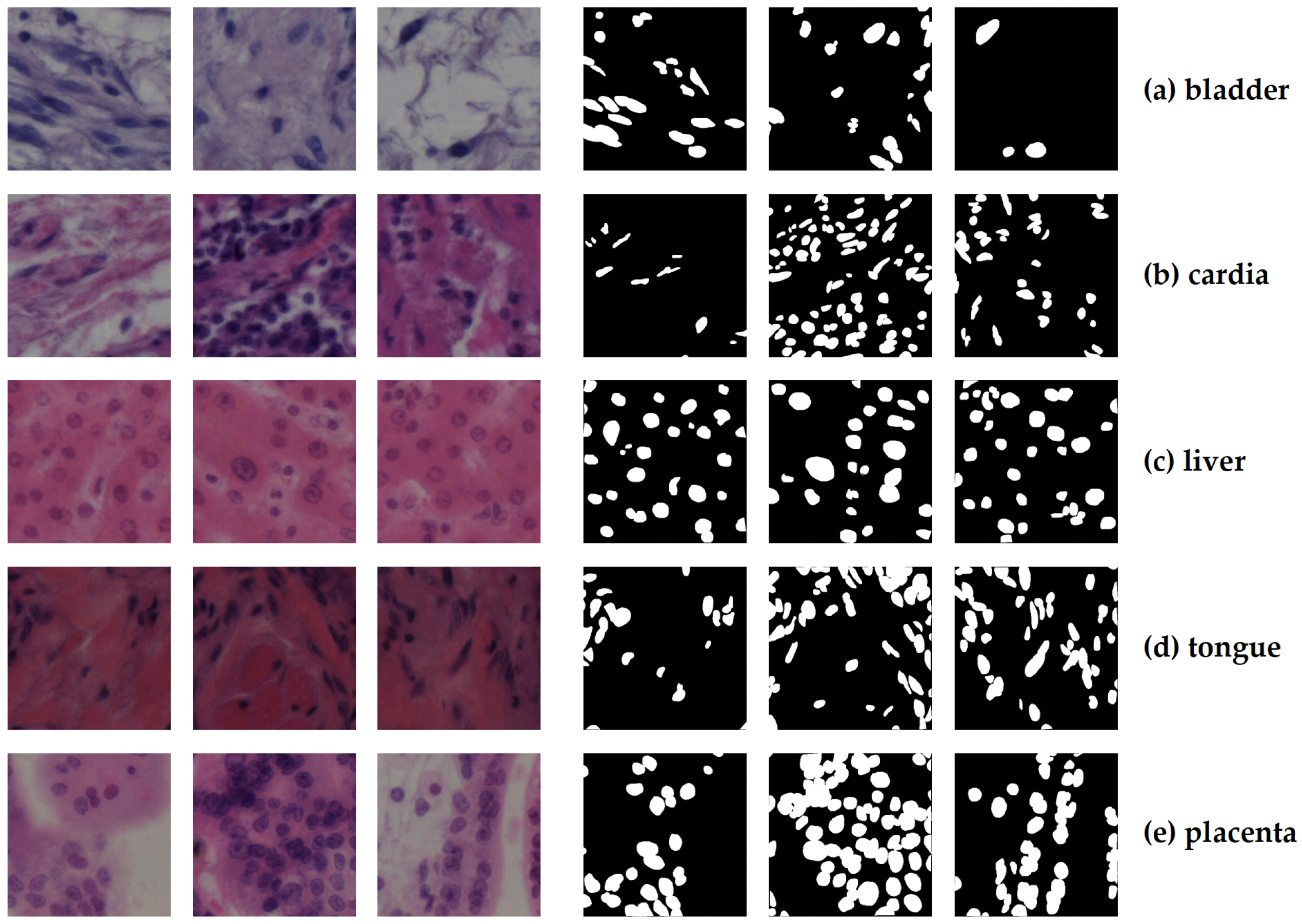

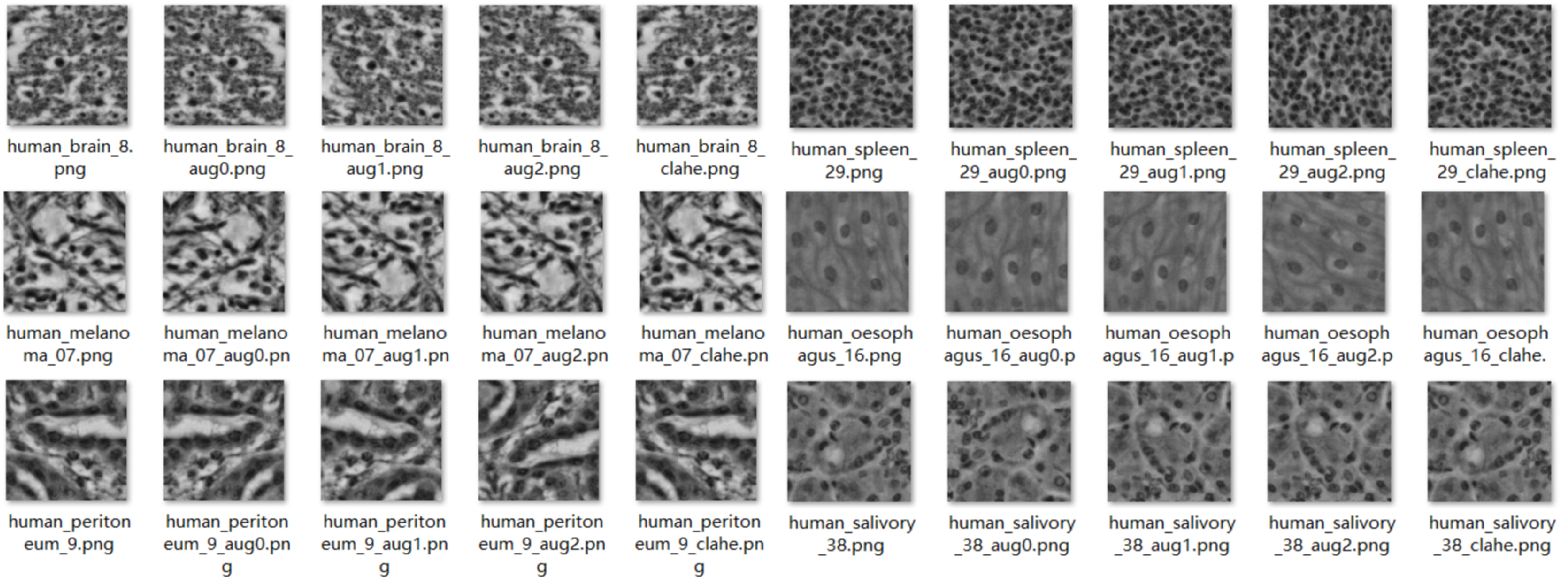

3.1.1. NuInsSeg Dataset

Pre-Processing

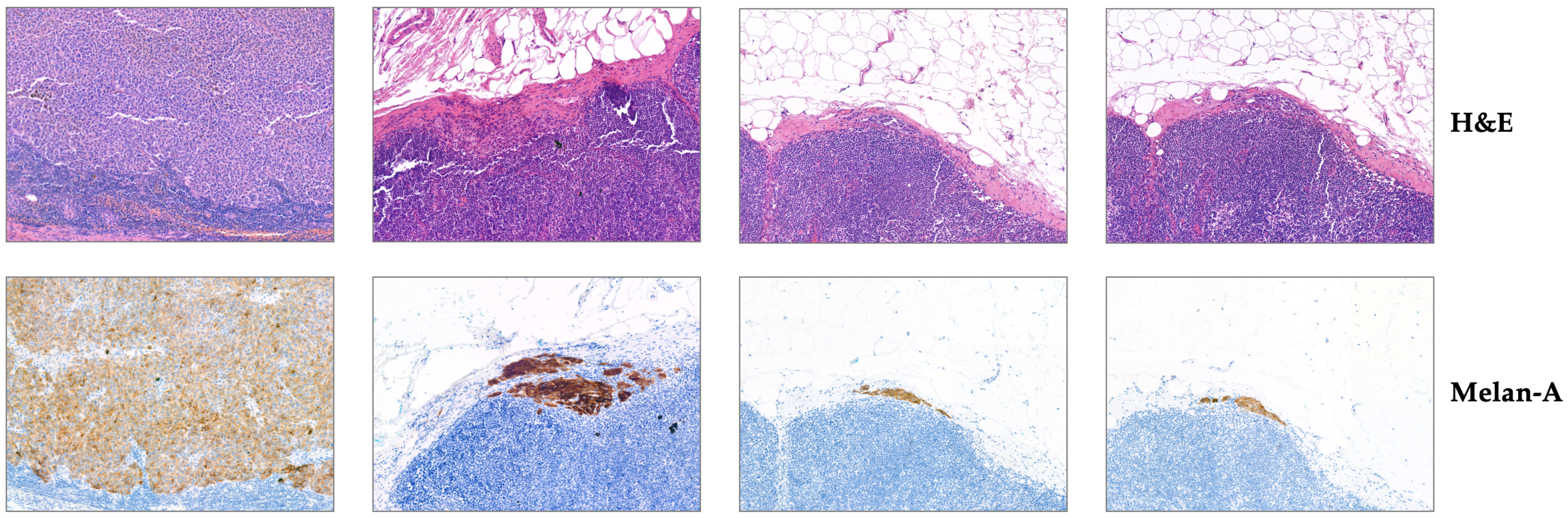

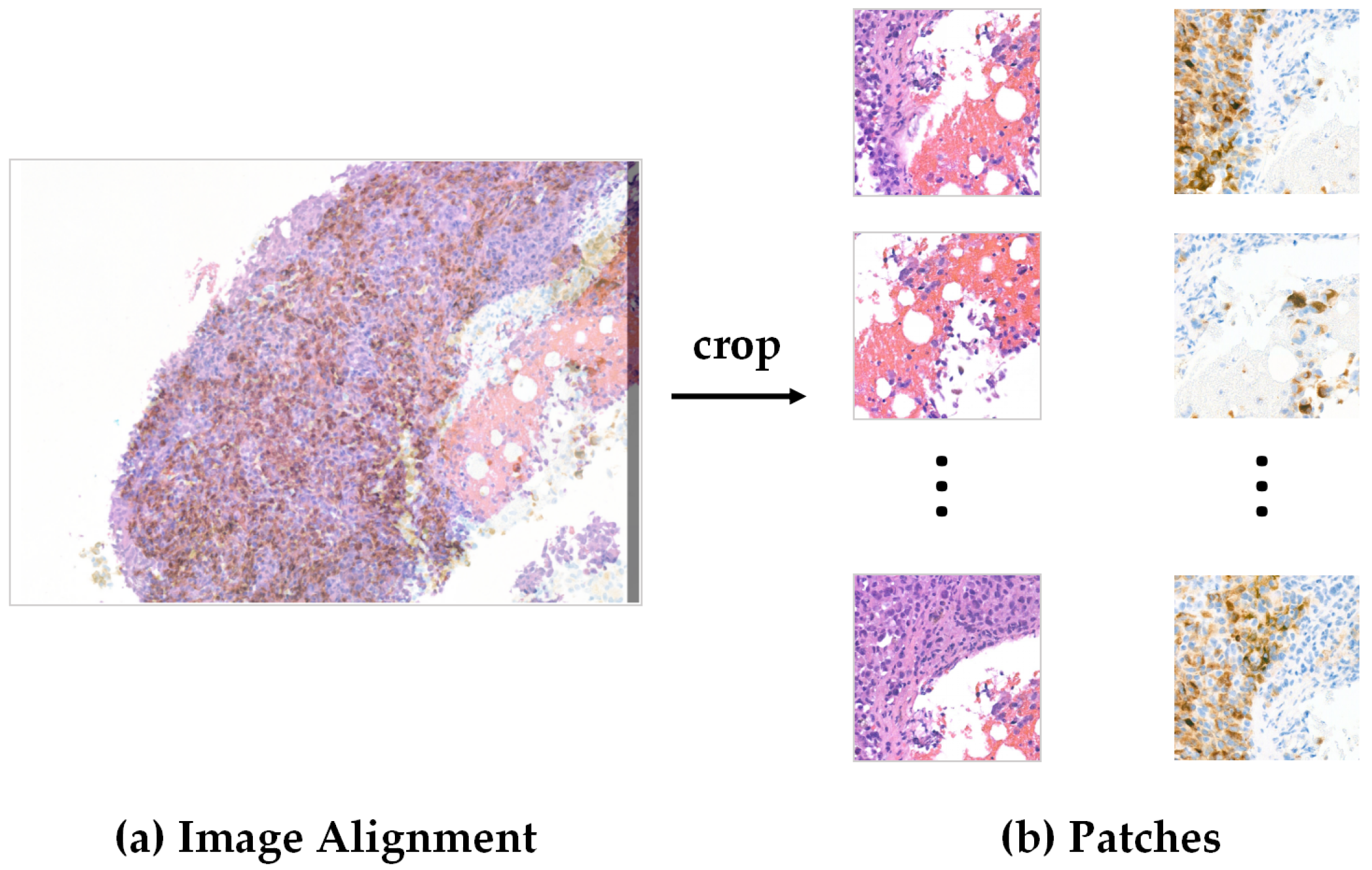

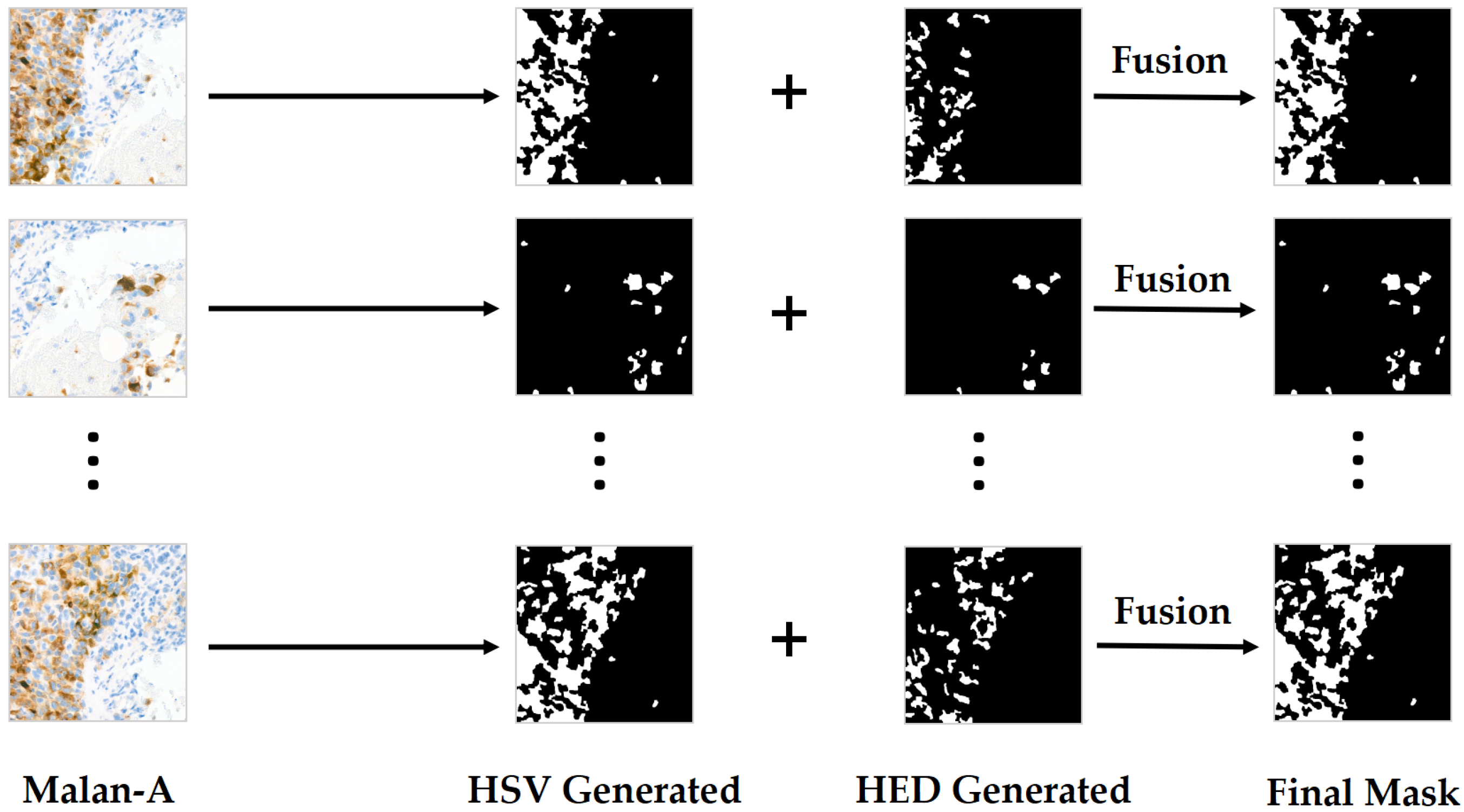

3.1.2. UHS-MelHist Dataset

Pre-Processing

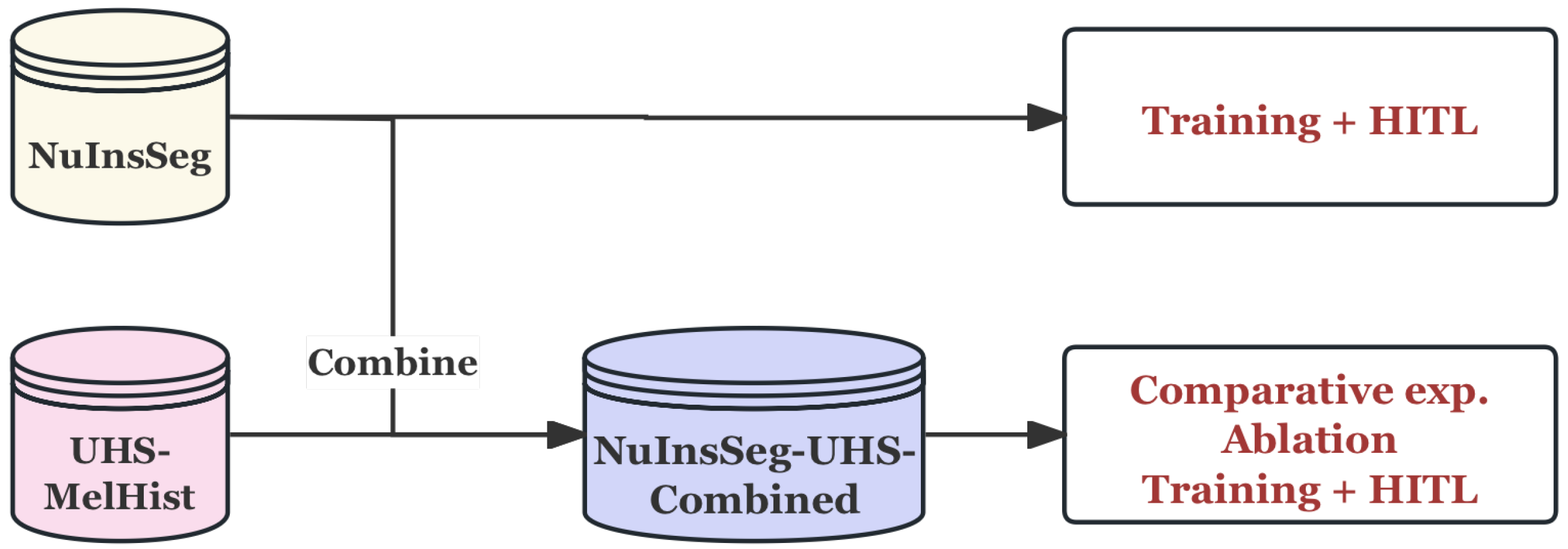

3.1.3. Data Usage Strategy

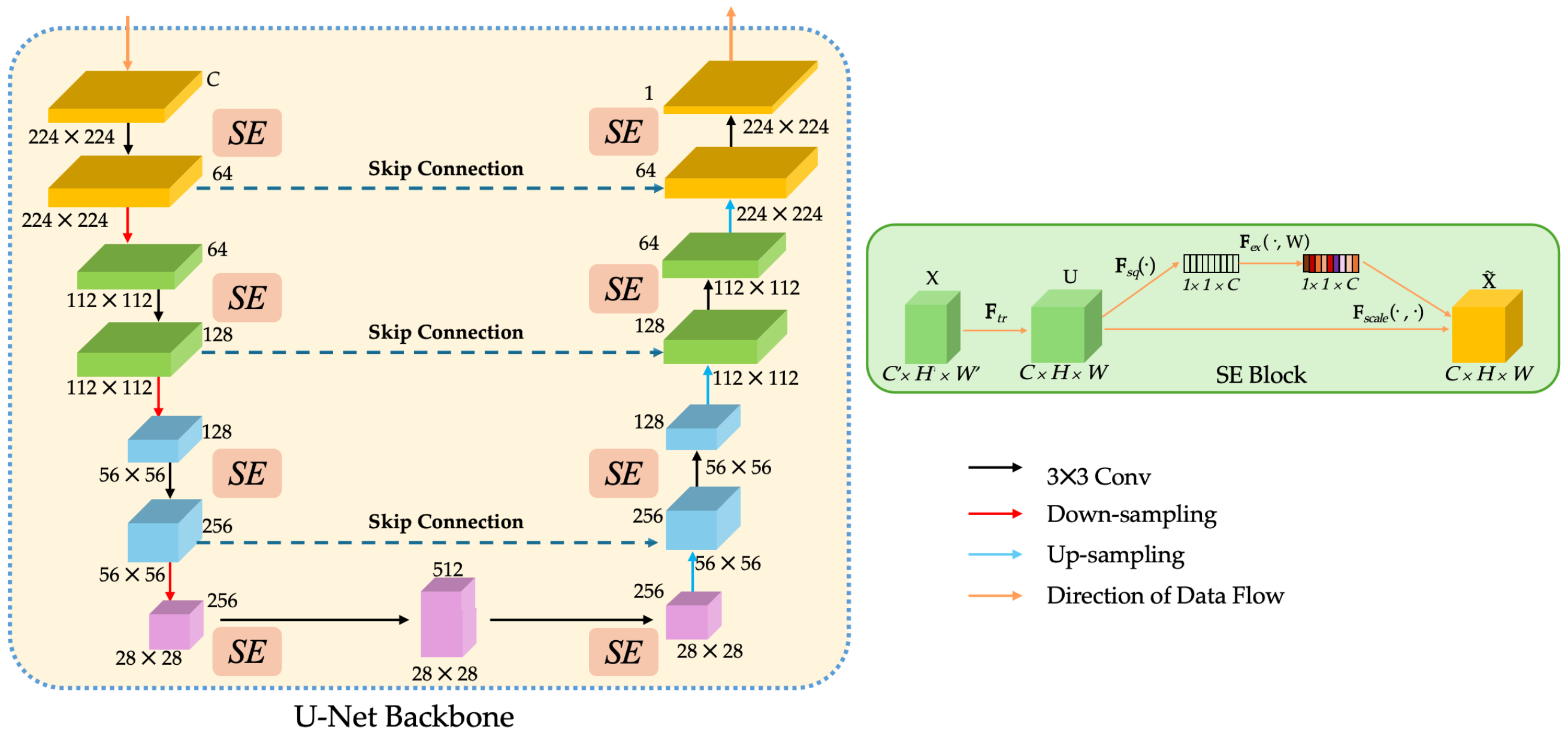

3.2. Network Architecture

3.2.1. SEU-Net Backbone

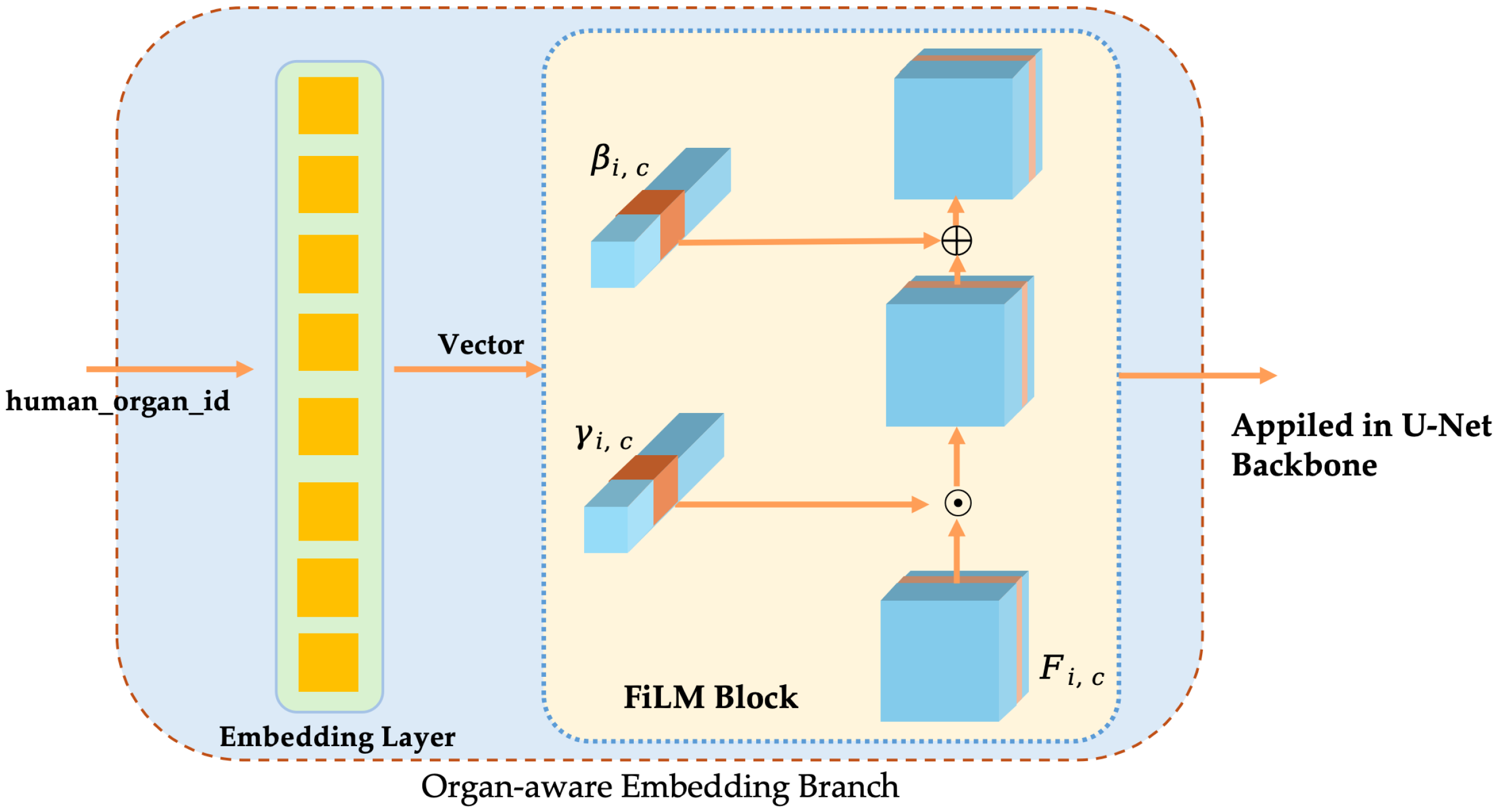

3.2.2. Organ-Aware Embedding Branch

- Organ/tissue labels and embedding vectors: Let the total number of organ categories be $K$. The organ label $o \in \{1, \dots, K\}$ of each sample is mapped to a low-dimensional vector by a learnable embedding layer: $\mathbf{e} = \mathrm{Embed}(o) \in \mathbb{R}^{d}$, where $d$ is the embedding dimension. This vector carries the category prior of the organ/tissue.

- FiLM conditional modulation: At the $s$-th stage of the encoder, the feature map is $\mathbf{F}_s \in \mathbb{R}^{C_s \times H_s \times W_s}$. To inject conditional information, the embedding vector is mapped through independent linear layers to a scaling factor and a bias whose dimension matches the number of channels: $\boldsymbol{\gamma}_s = \sigma(\mathbf{W}_s^{\gamma}\mathbf{e} + \mathbf{b}_s^{\gamma})$ and $\boldsymbol{\beta}_s = \mathbf{W}_s^{\beta}\mathbf{e} + \mathbf{b}_s^{\beta}$, where $\sigma$ is the Sigmoid activation used to constrain the scaling range. The modulated feature maps are then obtained by a channel-wise affine transformation: $\tilde{\mathbf{F}}_s = \boldsymbol{\gamma}_s \odot \mathbf{F}_s + \boldsymbol{\beta}_s$. This modulation changes neither the spatial dimensions nor the number of channels of the feature map; recalibration is performed only along the channel dimension.

- Multi-scale application: To inject conditional information at different receptive fields, FiLM is applied at the encoder and decoder stages ➀–➆ (with 64, 128, 256, 512, 256, 128, and 64 channels, respectively; see Figure 9). Separate linear-layer parameters $\mathbf{W}_s^{\gamma}$ and $\mathbf{W}_s^{\beta}$ are used at each stage so that the output dimension matches the number of channels. The computational and parameter overhead is $\mathcal{O}\big(d \sum_s C_s\big)$, which is negligible compared with the backbone network.
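
The FiLM conditioning described above can be illustrated with a minimal, dependency-free sketch. It assumes a sigmoid-bounded scale and an unbounded bias, each produced by one linear layer per stage; the helper names (`linear`, `film`, `film_param_count`) and the toy sizes are hypothetical and not taken from the authors' code. The parameter count assumes each of the two linear layers has a weight matrix and a bias vector.

```python
import math
import random

# Channel widths of stages 1-7 as listed in the text.
STAGE_CHANNELS = [64, 128, 256, 512, 256, 128, 64]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def linear(weights, bias, vec):
    """Dense layer: weights is [out][in], bias is [out], vec is [in]."""
    return [sum(w * v for w, v in zip(row, vec)) + b
            for row, b in zip(weights, bias)]


def film(feature_map, embedding, w_gamma, b_gamma, w_beta, b_beta):
    """Channel-wise affine modulation of a C x H x W feature map (nested lists)."""
    gamma = [sigmoid(g) for g in linear(w_gamma, b_gamma, embedding)]  # in (0, 1)
    beta = linear(w_beta, b_beta, embedding)
    # Every pixel of channel c is scaled by gamma[c] and shifted by beta[c].
    return [[[gamma[c] * x + beta[c] for x in row] for row in channel]
            for c, channel in enumerate(feature_map)]


def film_param_count(d, channels=STAGE_CHANNELS):
    """Assumed overhead: two linear layers (weights + biases) per stage."""
    return sum(2 * (d + 1) * c for c in channels)


# Toy usage (hypothetical sizes): K = 3 organs, d = 4, one stage with C = 2.
rng = random.Random(0)
K, d, C = 3, 4, 2
embedding_table = [[rng.uniform(-1, 1) for _ in range(d)] for _ in range(K)]
w_gamma = [[rng.uniform(-1, 1) for _ in range(d)] for _ in range(C)]
w_beta = [[rng.uniform(-1, 1) for _ in range(d)] for _ in range(C)]
b_gamma, b_beta = [0.0] * C, [0.0] * C

features = [[[1.0, 2.0], [3.0, 4.0]], [[0.5, 0.5], [0.5, 0.5]]]
modulated = film(features, embedding_table[1], w_gamma, b_gamma, w_beta, b_beta)
```

Under these assumptions the output keeps the input's C x H x W shape, and the FiLM layers add on the order of `d * sum(STAGE_CHANNELS)` parameters, which is small next to a convolutional backbone.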

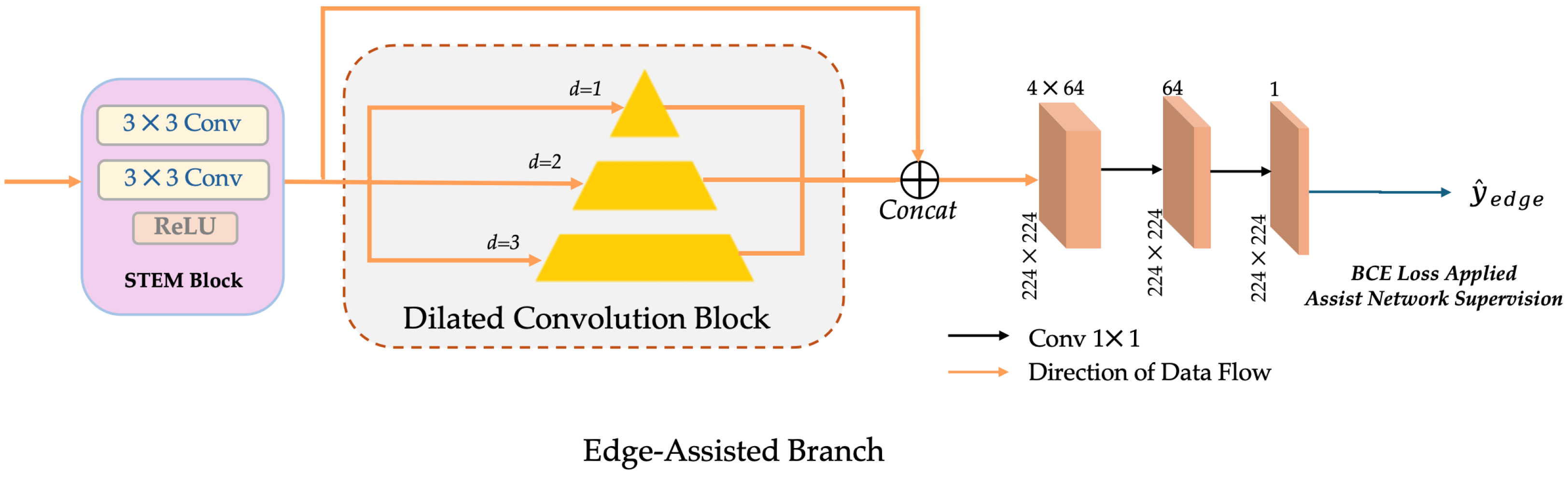

3.2.3. Edge-Assisted Branch
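
How the edge targets are derived is not stated in this section. As one plausible sketch, assuming binary ground-truth masks, an edge label can be taken as every foreground pixel that has at least one background 4-neighbour. The function name `edge_target` and the 4-neighbour rule are illustrative assumptions, not the authors' procedure.

```python
def edge_target(mask):
    """Return a binary edge map from a binary mask (list of rows of 0/1).

    A foreground pixel is marked as edge if any 4-neighbour is background.
    Out-of-bounds neighbours are treated as background, so foreground pixels
    on the image border are also marked.
    """
    h, w = len(mask), len(mask[0])
    edges = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            if not mask[y][x]:
                continue
            for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                ny, nx = y + dy, x + dx
                outside = not (0 <= ny < h and 0 <= nx < w)
                if outside or not mask[ny][nx]:
                    edges[y][x] = 1
                    break
    return edges


# Toy usage: a 3 x 3 square inside a 5 x 5 image yields a one-pixel ring.
square = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
ring = edge_target(square)
```

A map of this kind could serve as the supervision signal for an auxiliary edge head; thicker edge bands are a common variation.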

3.3. Loss Functions

- Class imbalance problem: The lesion area is often smaller than the background. If only pixel-wise binary cross entropy (BCE) [52] is used, the model is easily biased towards predicting background, which lowers recall.

- Small lesion and fuzzy boundary problem: Relying on Tversky loss [53] alone alleviates the imbalance, but it constrains the predicted probability distribution only weakly, so training is unstable and prone to oscillation, especially in small lesion areas.

- Missing boundary problem: The boundary between lesion and normal tissue is fuzzy, and the main branch is not sensitive enough to edges, so it readily produces false negatives or broken boundaries.

3.3.1. HistoLoss: A Recall-Prioritised Composite Loss for the Main Segmentation Branch
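
A composite of BCE and Tversky loss, as motivated above, can be sketched as follows. This is a minimal illustration on flattened probability lists, not the authors' implementation: the mixing weights `w_bce` and `w_tversky` and the Tversky weights `alpha` (false positives) and `beta` (false negatives) are placeholder values chosen only so that `beta > alpha`, which is what biases the loss towards recall.

```python
import math

EPS = 1e-7


def bce_loss(probs, targets):
    """Mean binary cross entropy over flattened pixels."""
    total = 0.0
    for p, t in zip(probs, targets):
        p = min(max(p, EPS), 1.0 - EPS)  # clamp to avoid log(0)
        total -= t * math.log(p) + (1 - t) * math.log(1.0 - p)
    return total / len(probs)


def tversky_loss(probs, targets, alpha=0.3, beta=0.7):
    """1 - Tversky index; alpha weights soft FPs, beta weights soft FNs."""
    tp = sum(p * t for p, t in zip(probs, targets))
    fp = sum(p * (1 - t) for p, t in zip(probs, targets))
    fn = sum((1 - p) * t for p, t in zip(probs, targets))
    return 1.0 - (tp + EPS) / (tp + alpha * fp + beta * fn + EPS)


def histo_loss(probs, targets, w_bce=0.3, w_tversky=0.7, alpha=0.3, beta=0.7):
    """Weighted sum of BCE and Tversky (all four weights are assumptions)."""
    return (w_bce * bce_loss(probs, targets)
            + w_tversky * tversky_loss(probs, targets, alpha, beta))


# Toy comparison: one missed lesion pixel versus one false alarm.
targets = [1, 1, 0, 0]
missed = [0.9, 0.1, 0.1, 0.1]       # second positive pixel is missed
false_alarm = [0.9, 0.9, 0.9, 0.1]  # third (negative) pixel is over-called
loss_missed = histo_loss(missed, targets)
loss_false_alarm = histo_loss(false_alarm, targets)
```

With `beta > alpha`, the missed-pixel prediction receives the larger loss even though both predictions contain a single error of the same magnitude, which is the behaviour a recall-prioritised objective is meant to have.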

3.3.2. BCE Loss for Edge-Assisted Branch

3.3.3. Total Loss Function
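
The exact form of the total objective is not recoverable from this section. A common way to combine a main segmentation loss with an auxiliary edge loss, shown here purely as an assumption, is a weighted sum in which $\lambda_{\text{edge}}$ is a hypothetical balancing coefficient, $e_i$ is the edge label of pixel $i$, and $\hat{e}_i$ is the predicted edge probability:

```latex
\mathcal{L}_{\text{edge}}
  = -\frac{1}{N}\sum_{i=1}^{N}
    \left[ e_i \log \hat{e}_i + (1 - e_i)\log\left(1 - \hat{e}_i\right) \right],
\qquad
\mathcal{L}_{\text{total}}
  = \mathcal{L}_{\text{Histo}} + \lambda_{\text{edge}}\,\mathcal{L}_{\text{edge}}
```

Keeping $\lambda_{\text{edge}}$ below 1 would let the recall-oriented main loss dominate while the edge term acts as a regulariser on contours.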

3.4. Human-in-the-Loop (HITL) Strategy

3.4.1. Why HITL?

- Requirements of real scenarios: Metastatic tumour lesions often have fuzzy boundaries and highly heterogeneous morphology, so relying solely on automatic segmentation models is prone to false negatives. HITL allows experts to supplement and correct the key difficult cases, improving clinical reliability.

- Limited annotation resources: Medical image annotation is extremely expensive. HITL can maximise the value of limited annotation information by using a small number of expert corrections in the uncertainty sampling set.

- Model interpretability: The HITL framework not only outputs the segmentation results but also indicates which samples are the most uncertain, so that experts can understand the shortcomings of the model, thereby improving the transparency of human-machine collaboration.

- Experimental feasibility: Compared with building an online system, the offline fine-tuning scheme is simpler to implement, yet it can still simulate the HITL idea at the experimental level and allows its impact on model performance to be evaluated quantitatively.

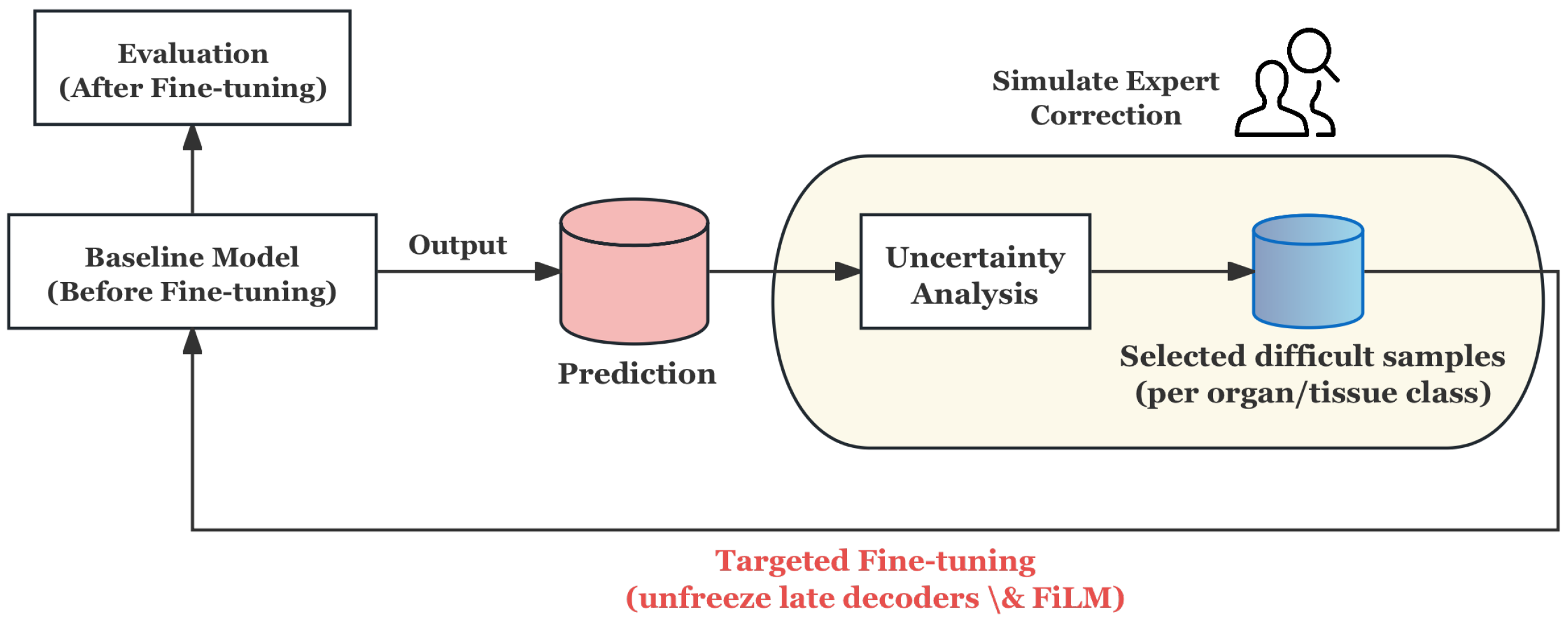

3.4.2. Offline HITL for Proposed Architecture

- Prediction and Uncertainty Evaluation: The baseline model generates probability maps for training samples, and the prediction uncertainty is quantified by calculating the entropy of the probability distribution, thereby identifying the samples with the lowest model confidence.

- Stratified Uncertainty Sampling: The most uncertain samples in each organ category are selected to form the HITL subset. This ensures that difficult cases in every category are covered and approximates how experts prioritise ambiguous or error-prone cases in the clinic.

- Targeted Fine-tuning: Instead of updating the whole model, only the decoder and its conditioning module are unfrozen for refinement, and the rest of the network remains frozen. This design can reduce the risk of overfitting and enable the model to focus corrections on segmentation boundaries and organ-specific features.

- Evaluation and Feedback Loop: After fine-tuning on the HITL subset, the model is evaluated on an independent test set. The process simulates the feedback loop of “prediction → expert correction → model update”, and indicates that the model can improve segmentation reliability in fuzzy-boundary and heterogeneous regions, even with limited correction.
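
The first two steps above, entropy-based uncertainty scoring and per-organ selection, can be sketched as follows. This is a minimal illustration under stated assumptions: uncertainty is taken as the mean per-pixel binary entropy of the foreground probability map, and `k` (the number of samples kept per organ) is a hypothetical parameter; neither detail is confirmed by the text.

```python
import math
from collections import defaultdict


def binary_entropy(p, eps=1e-7):
    """Entropy (in nats) of a Bernoulli distribution with probability p."""
    p = min(max(p, eps), 1.0 - eps)
    return -(p * math.log(p) + (1.0 - p) * math.log(1.0 - p))


def sample_uncertainty(prob_map):
    """Mean per-pixel entropy of a flattened foreground-probability map."""
    return sum(binary_entropy(p) for p in prob_map) / len(prob_map)


def stratified_top_k(samples, k):
    """Pick the k most uncertain samples within each organ category.

    samples: iterable of (sample_id, organ, prob_map) tuples.
    Returns sample ids, grouped by organ in alphabetical order.
    """
    by_organ = defaultdict(list)
    for sample_id, organ, prob_map in samples:
        by_organ[organ].append((sample_uncertainty(prob_map), sample_id))
    selected = []
    for organ in sorted(by_organ):
        ranked = sorted(by_organ[organ], reverse=True)  # most uncertain first
        selected.extend(sample_id for _, sample_id in ranked[:k])
    return selected


# Toy usage with two organs and two samples each (made-up probabilities).
samples = [
    ("a", "liver", [0.99, 0.01]),
    ("b", "liver", [0.50, 0.60]),
    ("c", "lung", [0.90, 0.90]),
    ("d", "lung", [0.45, 0.55]),
]
hitl_subset = stratified_top_k(samples, k=1)
```

Stratifying by organ keeps a single hard tissue type from dominating the subset, which matches the stated goal of covering difficult cases in every category.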

3.5. Recall-Oriented Design Rationale

- SEU-Net serves as the fundamental architecture. The U-shaped encoder–decoder captures multi-scale context, while SE (Squeeze-and-Excitation) recalibrates channel responses. This combination enhances sensitivity to subtle lesion patterns and reduces false negatives (FNs) in heterogeneous tissue regions.

- HistoLoss (main branch) addresses FNs from small lesions and long-tail positive samples that are often overwhelmed by the background. As a combination of BCE and Tversky, with the Tversky weights set so that false negatives are penalised more strongly than false positives, HistoLoss biases optimisation towards recall, while the BCE term ensures training stability. This loss encourages the model to prioritise sensitivity to positive regions.

- Edge loss (edge supervision) alleviates FNs caused by broken or blurred boundaries. The auxiliary edge prediction head is trained with a BCE-based loss, which provides explicit contour supervision and reduces the likelihood of missing boundary pixels.

- Conditional guidance (FiLM + organ embeddings) mitigates FNs due to cross-organ morphological variation. By injecting organ embeddings through FiLM layers and recalibrating channels with SE blocks, the network adapts to organ-specific structures and reduces domain-shift-induced FNs.

- Colour processing handles FNs introduced by staining variability across tissues or batches. By suppressing colour and emphasising morphological cues, the model becomes less sensitive to stain shifts that obscure positive regions.

- Offline HITL (uncertainty-driven fine-tuning) targets FNs in difficult cases that persist after initial training. By selecting high-uncertainty samples and fine-tuning only the decoder’s final layers and conditional embeddings, the system converts critical FNs into true positives at low cost.

4. Experimental Settings

4.1. Environment Settings

4.2. Main Model Training Settings

4.3. HITL Settings

4.4. Evaluation Metrics

- Recall reflects the detection sensitivity of the model and is defined as $\mathrm{Recall} = \frac{TP}{TP + FN}$.

- The Dice coefficient evaluates the overlap between the prediction and the true mask: $\mathrm{Dice} = \frac{2\,TP}{2\,TP + FP + FN}$.

- Intersection over Union (IoU) measures the ratio of the intersection to the union of the predicted region and the true region: $\mathrm{IoU} = \frac{TP}{TP + FP + FN}$.

- Precision measures the reliability of the predicted positive samples: $\mathrm{Precision} = \frac{TP}{TP + FP}$.
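
The four metrics listed above follow directly from pixel-level confusion counts. The sketch below computes them for flattened binary masks; the function names and the small `eps` guard against division by zero are illustrative choices rather than part of the paper.

```python
def confusion_counts(pred, truth):
    """Count TP, FP and FN over two flattened binary masks."""
    tp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 1)
    fp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 0)
    fn = sum(1 for p, t in zip(pred, truth) if p == 0 and t == 1)
    return tp, fp, fn


def segmentation_metrics(pred, truth, eps=1e-7):
    """Recall, precision, Dice and IoU from pixel-level counts."""
    tp, fp, fn = confusion_counts(pred, truth)
    return {
        "recall": tp / (tp + fn + eps),
        "precision": tp / (tp + fp + eps),
        "dice": 2 * tp / (2 * tp + fp + fn + eps),
        "iou": tp / (tp + fp + fn + eps),
    }


# Toy usage: 2 true positives, 1 false positive, 1 false negative.
pred = [1, 1, 1, 0, 0, 0]
truth = [1, 1, 0, 1, 0, 0]
metrics = segmentation_metrics(pred, truth)
```

In this toy case recall, precision and Dice all come out near 2/3 while IoU is 0.5, which illustrates that IoU penalises the same errors more heavily than Dice. True negatives do not enter any of the four metrics.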

5. Results

5.1. Quantitative Results

5.1.1. NuInsSeg: Training vs. HITL

5.1.2. NuInsSeg-UHS-Combined: Training vs. HITL

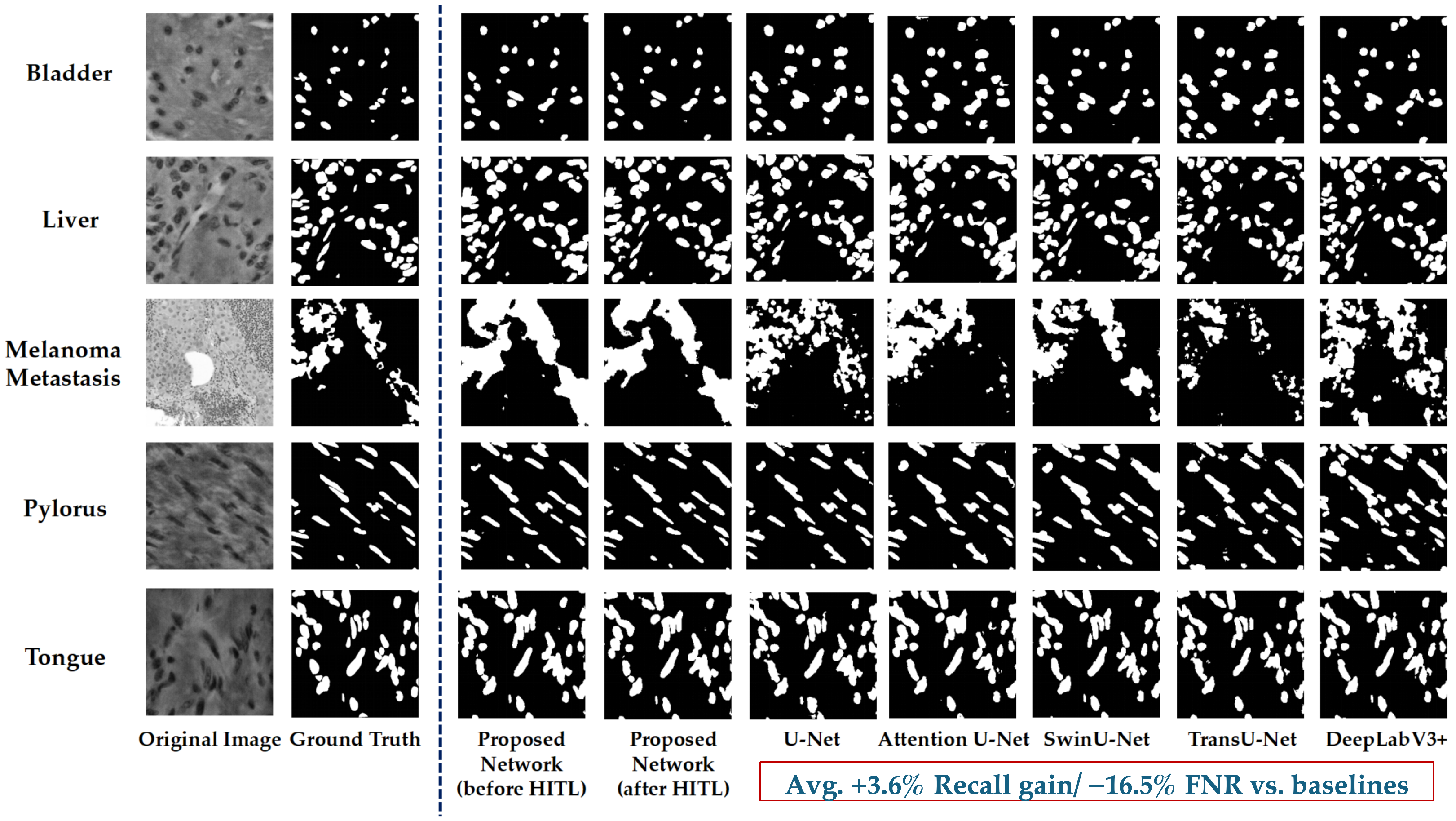

5.1.3. Comparison Experiment

5.1.4. Ablation Study

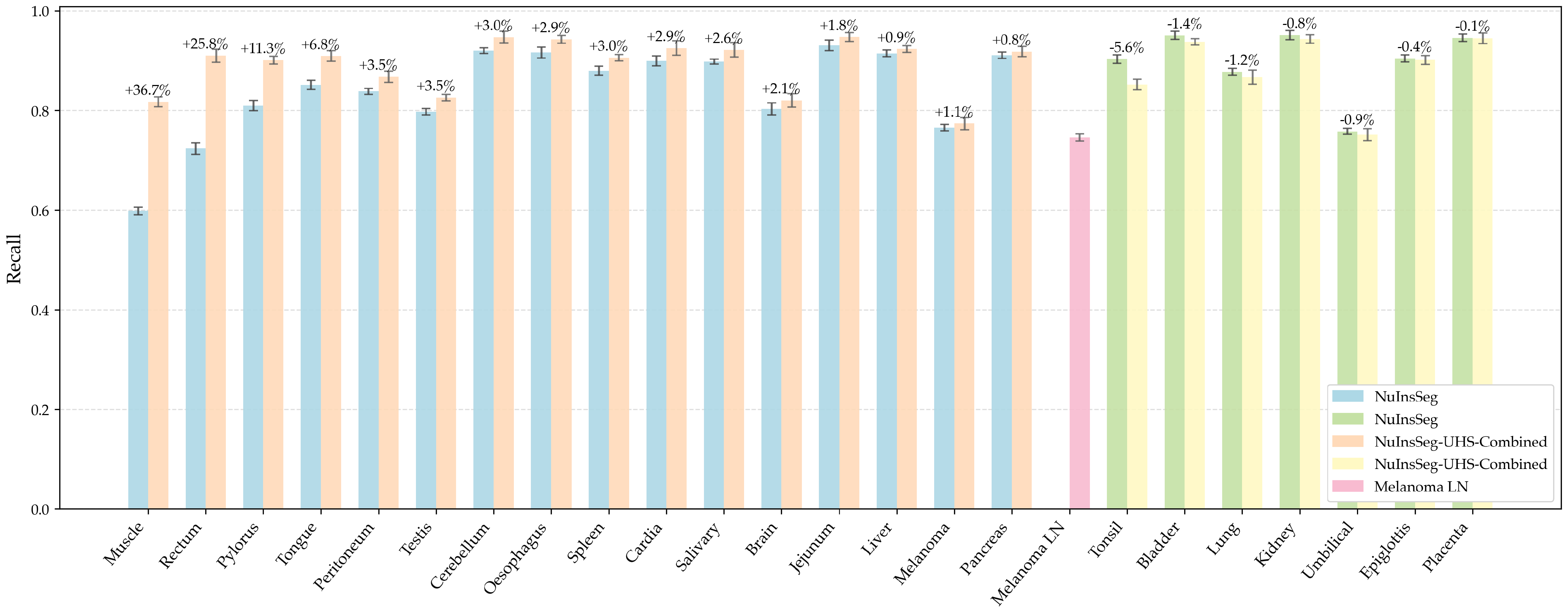

5.1.5. Per-Organ Recall Analysis

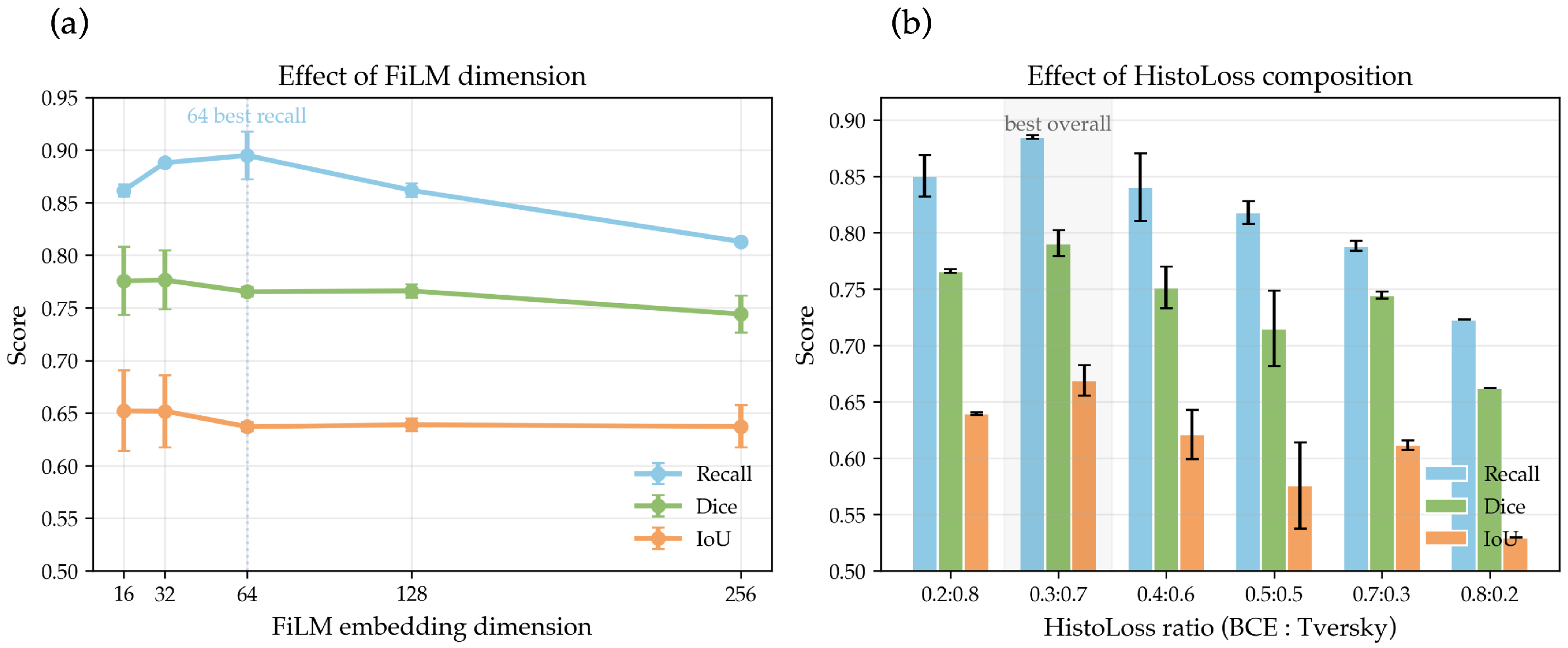

5.1.6. Hyperparameter Sensitivity Analysis

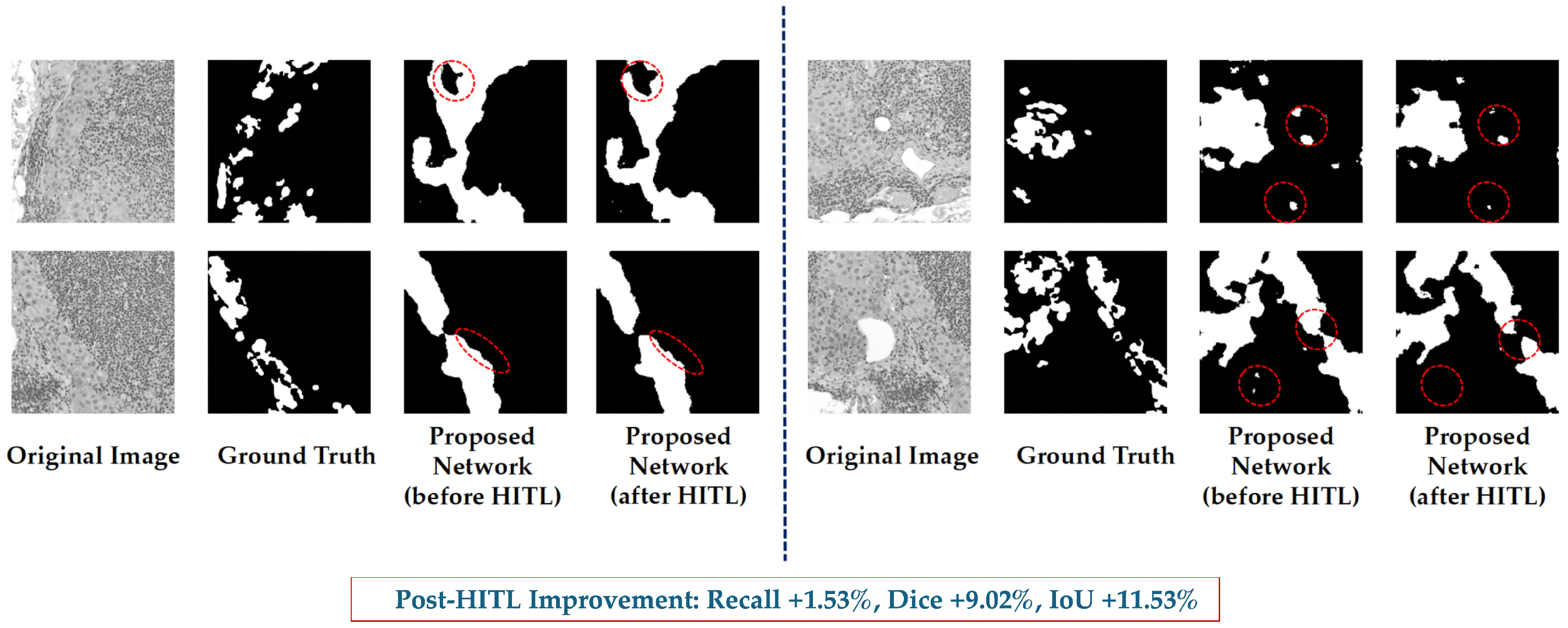

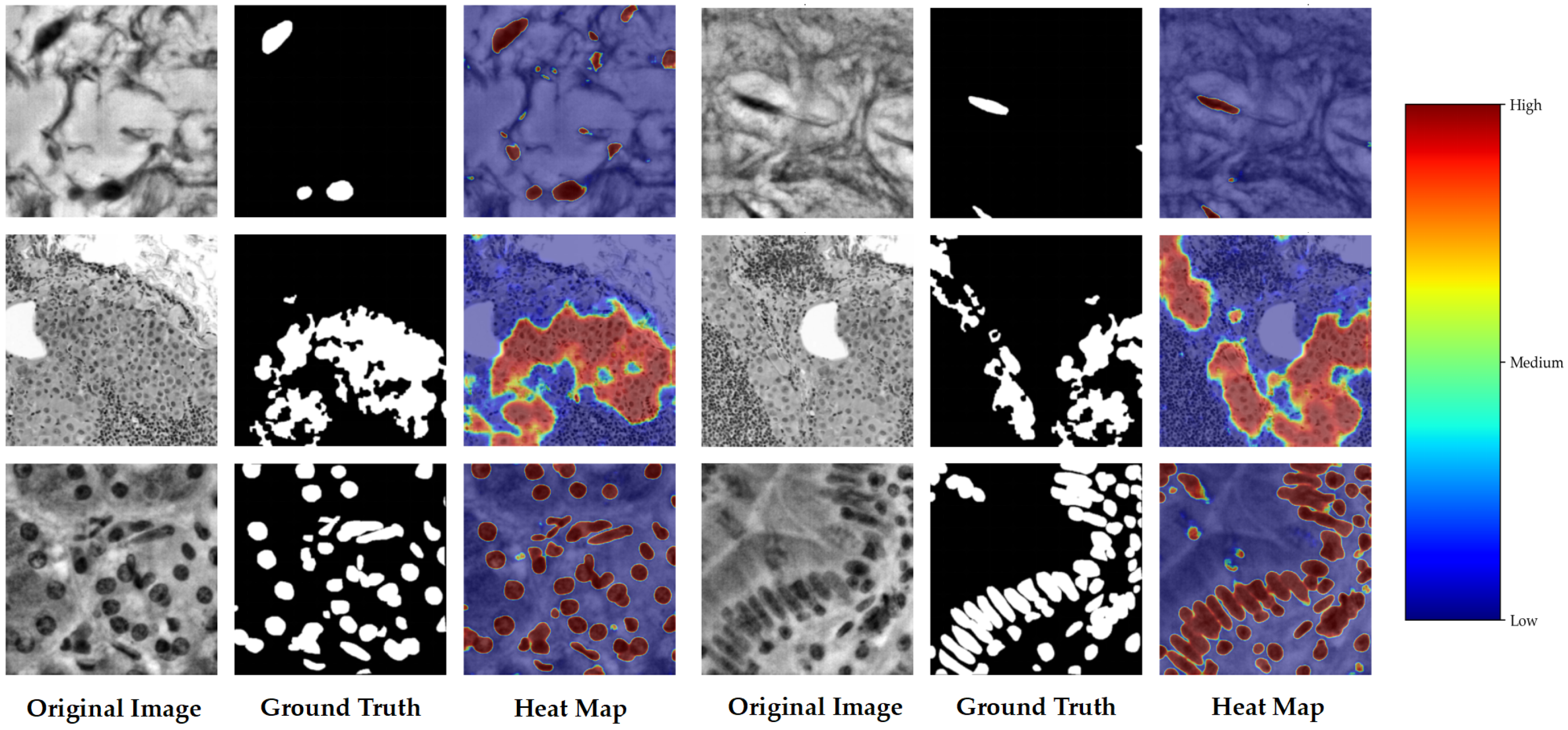

5.2. Qualitative Results

5.2.1. Segmentation Results Visualisation

5.2.2. Heatmap Visualisation

6. Discussion

6.1. Overall Performance

6.2. Effectiveness of HITL Mechanism

6.3. Comparison with Representative Baseline Models

6.4. Dataset Constraints and Generalisation Potential

6.5. Clinical Relevance

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Litjens, G.; Kooi, T.; Bejnordi, B.E.; Setio, A.A.A.; Ciompi, F.; Ghafoorian, M.; Van Der Laak, J.A.; Van Ginneken, B.; Sánchez, C.I. A survey on deep learning in medical image analysis. Med. Image Anal. 2017, 42, 60–88.

- Xu, Y.; Quan, R.; Xu, W.; Huang, Y.; Chen, X.; Liu, F. Advances in medical image segmentation: A comprehensive review of traditional, deep learning and hybrid approaches. Bioengineering 2024, 11, 1034.

- Kalet, I.J.; Austin-Seymour, M.M. The use of medical images in planning and delivery of radiation therapy. J. Am. Med. Inform. Assoc. 1997, 4, 327–339.

- van Diepen, P.R.; Smithuis, F.F.; Hollander, J.J.; Dahmen, J.; Emanuel, K.S.; Stufkens, S.A.; Kerkhoffs, G.M. Reporting of morphology, location, and size in the treatment of osteochondral lesions of the talus in 11,785 patients: A systematic review and meta-analysis. Cartilage, 2024; in press.

- Wang, R.; Lei, T.; Cui, R.; Zhang, B.; Meng, H.; Nandi, A.K. Medical image segmentation using deep learning: A survey. IET Image Process. 2022, 16, 1243–1267.

- Barrett, J.F.; Keat, N. Artifacts in CT: Recognition and avoidance. Radiographics 2004, 24, 1679–1691.

- Vu, Q.D.; Graham, S.; Kurc, T.; To, M.N.N.; Shaban, M.; Qaiser, T.; Koohbanani, N.A.; Khurram, S.A.; Kalpathy-Cramer, J.; Zhao, T.; et al. Methods for segmentation and classification of digital microscopy tissue images. Front. Bioeng. Biotechnol. 2019, 7, 53.

- Senthilkumaran, N.; Vaithegi, S. Image segmentation by using thresholding techniques for medical images. Comput. Sci. Eng. Int. J. 2016, 6, 1–13.

- Goh, T.Y.; Basah, S.N.; Yazid, H.; Safar, M.J.A.; Saad, F.S.A. Performance analysis of image thresholding: Otsu technique. Measurement 2018, 114, 298–307.

- Lu, Y.; Jiang, T.; Zang, Y. A split–merge-based region-growing method for fMRI activation detection. Hum. Brain Mapp. 2004, 22, 271–279.

- Choi, S.; Kim, C. Automatic initialization active contour model for the segmentation of the chest wall on chest CT. Healthc. Inform. Res. 2010, 16, 36–45.

- Ng, H.; Huang, S.; Ong, S.; Foong, K.; Goh, P.; Nowinski, W. Medical image segmentation using watershed segmentation with texture-based region merging. In Proceedings of the 2008 30th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Vancouver, BC, Canada, 20–24 August 2008; pp. 4039–4042.

- Chen, X.; Pan, L. A survey of graph cuts/graph search based medical image segmentation. IEEE Rev. Biomed. Eng. 2018, 11, 112–124.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; pp. 234–241.

- Zhou, Z.; Rahman Siddiquee, M.M.; Tajbakhsh, N.; Liang, J. Unet++: A nested u-net architecture for medical image segmentation. In Proceedings of the International Workshop on Deep Learning in Medical Image Analysis, Granada, Spain, 20 September 2018; pp. 3–11.

- Oktay, O.; Schlemper, J.; Folgoc, L.L.; Lee, M.; Heinrich, M.; Misawa, K.; Mori, K.; McDonagh, S.; Hammerla, N.Y.; Kainz, B.; et al. Attention u-net: Learning where to look for the pancreas. arXiv 2018, arXiv:1804.03999.

- Chen, J.; Lu, Y.; Yu, Q.; Luo, X.; Adeli, E.; Wang, Y.; Lu, L.; Yuille, A.L.; Zhou, Y. Transunet: Transformers make strong encoders for medical image segmentation. arXiv 2021, arXiv:2102.04306.

- Isensee, F.; Jaeger, P.F.; Kohl, S.A.; Petersen, J.; Maier-Hein, K.H. nnU-Net: A self-configuring method for deep learning-based biomedical image segmentation. Nat. Methods 2021, 18, 203–211.

- Valanarasu, J.M.J.; Patel, V.M. Unext: Mlp-based rapid medical image segmentation network. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Singapore, 18–22 September 2022; pp. 23–33.

- Valanarasu, J.M.J.; Oza, P.; Hacihaliloglu, I.; Patel, V.M. Medical transformer: Gated axial-attention for medical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Strasbourg, France, 27 September–1 October 2021; pp. 36–46.

- Cao, H.; Wang, Y.; Chen, J.; Jiang, D.; Zhang, X.; Tian, Q.; Wang, M. Swin-unet: Unet-like pure transformer for medical image segmentation. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 205–218.

- Hatamizadeh, A.; Tang, Y.; Nath, V.; Yang, D.; Myronenko, A.; Landman, B.; Roth, H.R.; Xu, D. Unetr: Transformers for 3d medical image segmentation. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2022; pp. 574–584.

- Zhang, Y.; Liu, H.; Hu, Q. Transfuse: Fusing transformers and cnns for medical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Strasbourg, France, 27 September–1 October 2021; pp. 14–24.

- Graham, S.; Vu, Q.D.; Raza, S.E.A.; Azam, A.; Tsang, Y.W.; Kwak, J.T.; Rajpoot, N. Hover-net: Simultaneous segmentation and classification of nuclei in multi-tissue histology images. Med. Image Anal. 2019, 58, 101563.

- Gamper, J.; Koohbanani, N.A.; Benes, K.; Graham, S.; Jahanifar, M.; Khurram, S.A.; Azam, A.; Hewitt, K.; Rajpoot, N. Pannuke dataset extension, insights and baselines. arXiv 2020, arXiv:2003.10778.

- Khened, M.; Kori, A.; Rajkumar, H.; Krishnamurthi, G.; Srinivasan, B. A generalized deep learning framework for whole-slide image segmentation and analysis. Sci. Rep. 2021, 11, 11579.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

- Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A. Inception-v4, inception-resnet and the impact of residual connections on learning. In Proceedings of the AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; Volume 31.

- Chen, L.C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 801–818.

- Kim, H.; Yoon, H.; Thakur, N.; Hwang, G.; Lee, E.J.; Kim, C.; Chong, Y. Deep learning-based histopathological segmentation for whole slide images of colorectal cancer in a compressed domain. Sci. Rep. 2021, 11, 22520.

- Chen, Y.; Jia, Y.; Zhang, X.; Bai, J.; Li, X.; Ma, M.; Sun, Z.; Pei, Z. TSHVNet: Simultaneous nuclear instance segmentation and classification in histopathological images based on multiattention mechanisms. BioMed Res. Int. 2022, 2022, 7921922.

- Vo, V.T.T.; Kim, S.H. Mulvernet: Nucleus segmentation and classification of pathology images using the hover-net and multiple filter units. Electronics 2023, 12, 355.

- Li, J.; Li, X. MIU-Net: MIX-attention and inception U-Net for histopathology image nuclei segmentation. Appl. Sci. 2023, 13, 4842.

- Liakopoulos, P.; Massonnet, J.; Bonjour, J.; Mizrakli, M.T.; Graham, S.; Cuendet, M.A.; Seipel, A.H.; Michielin, O.; Merkler, D.; Janowczyk, A. HoverFast: An accurate, high-throughput, clinically deployable nuclear segmentation tool for brightfield digital pathology images. arXiv 2024, arXiv:2405.14028.

- Huang, X.; Chen, J.; Chen, M.; Wan, Y.; Chen, L. FRE-Net: Full-region enhanced network for nuclei segmentation in histopathology images. Biocybern. Biomed. Eng. 2023, 43, 386–401.

- Chen, J.; Wang, R.; Dong, W.; He, H.; Wang, S. HistoNeXt: Dual-mechanism feature pyramid network for cell nuclear segmentation and classification. BMC Med. Imaging 2025, 25, 9.

- Zidan, U.; Gaber, M.M.; Abdelsamea, M.M. SwinCup: Cascaded swin transformer for histopathological structures segmentation in colorectal cancer. Expert Syst. Appl. 2023, 216, 119452.

- Du, Y.; Chen, X.; Fu, Y. Multiscale transformers and multi-attention mechanism networks for pathological nuclei segmentation. Sci. Rep. 2025, 15, 12549.

- Imran, M.; Islam Tiwana, M.; Mohsan, M.M.; Alghamdi, N.S.; Akram, M.U. Transformer-based framework for multi-class segmentation of skin cancer from histopathology images. Front. Med. 2024, 11, 1380405.

- Atabansi, C.C.; Nie, J.; Liu, H.; Song, Q.; Yan, L.; Zhou, X. A survey of Transformer applications for histopathological image analysis: New developments and future directions. Biomed. Eng. Online 2023, 22, 96.

- Budd, S.; Robinson, E.C.; Kainz, B. A survey on active learning and human-in-the-loop deep learning for medical image analysis. Med. Image Anal. 2021, 71, 102062.

- Greenwald, N.F.; Miller, G.; Moen, E.; Kong, A.; Kagel, A.; Dougherty, T.; Fullaway, C.C.; McIntosh, B.J.; Leow, K.X.; Schwartz, M.S.; et al. Whole-cell segmentation of tissue images with human-level performance using large-scale data annotation and deep learning. Nat. Biotechnol. 2022, 40, 555–565.

- Stacke, K.; Eilertsen, G.; Unger, J.; Lundström, C. A closer look at domain shift for deep learning in histopathology. arXiv 2019, arXiv:1909.11575.

- Faryna, K.; van der Laak, J.; Litjens, G. Automatic data augmentation to improve generalization of deep learning in H&E stained histopathology. Comput. Biol. Med. 2024, 170, 108018.

- Wang, H.; Jin, Q.; Li, S.; Liu, S.; Wang, M.; Song, Z. A comprehensive survey on deep active learning in medical image analysis. Med. Image Anal. 2024, 95, 103201.

- Holzinger, A.; Langs, G.; Denk, H.; Zatloukal, K.; Müller, H. Causability and explainability of artificial intelligence in medicine. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2019, 9, e1312.

- Gu, H.; Huang, J.; Hung, L.; Chen, X. Lessons learned from designing an AI-enabled diagnosis tool for pathologists. Proc. ACM Hum.-Comput. Interact. 2021, 5, 1–25.

- Zhang, S.; Yu, J.; Xu, X.; Yin, C.; Lu, Y.; Yao, B.; Tory, M.; Padilla, L.M.; Caterino, J.; Zhang, P.; et al. Rethinking human-AI collaboration in complex medical decision making: A case study in sepsis diagnosis. In Proceedings of the 2024 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 11–16 May 2024; pp. 1–18.

- Slany, E.; Ott, Y.; Scheele, S.; Paulus, J.; Schmid, U. CAIPI in practice: Towards explainable interactive medical image classification. In Proceedings of the IFIP International Conference on Artificial Intelligence Applications and Innovations, Hersonissos, Greece, 17–20 June 2022; pp. 389–400.

- Gómez-Carmona, O.; Casado-Mansilla, D.; Lopez-de Ipina, D.; García-Zubia, J. Human-in-the-loop machine learning: Reconceptualizing the role of the user in interactive approaches. Internet Things 2024, 25, 101048.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016.

- Salehi, S.S.M.; Erdogmus, D.; Gholipour, A. Tversky loss function for image segmentation using 3D fully convolutional deep networks. In Proceedings of the International Workshop on Machine Learning in Medical Imaging, Quebec City, QC, Canada, 10 September 2017; pp. 379–387.

- Milletari, F.; Navab, N.; Ahmadi, S.A. V-net: Fully convolutional neural networks for volumetric medical image segmentation. In Proceedings of the 2016 Fourth International Conference on 3D Vision (3DV), Stanford, CA, USA, 25–28 October 2016; pp. 565–571.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Micikevicius, P.; Narang, S.; Alben, J.; Diamos, G.; Elsen, E.; Garcia, D.; Ginsburg, B.; Houston, M.; Kuchaiev, O.; Venkatesh, G.; et al. Mixed precision training. arXiv 2017, arXiv:1710.03740.

- Tarvainen, A.; Valpola, H. Mean teachers are better role models: Weight-averaged consistency targets improve semi-supervised deep learning results. Adv. Neural Inf. Process. Syst. 2017, 30, 1195–1204.

- Maxim, L.D.; Niebo, R.; Utell, M.J. Screening tests: A review with examples. Inhal. Toxicol. 2014, 26, 811–828.

- Mazer, B.L.; Homer, R.J.; Rimm, D.L. False-positive pathology: Improving reproducibility with the next generation of pathologists. Lab. Investig. 2019, 99, 1260–1265.

- Trevethan, R. Sensitivity, specificity, and predictive values: Foundations, pliabilities, and pitfalls in research and practice. Front. Public Health 2017, 5, 307.

| Organ/Tissue | Organ/Tissue | Organ/Tissue |

|---|---|---|

| Human bladder | Human pancreas | Human brain |

| Human peritoneum | Human cardia | Human placenta |

| Human cerebellum | Human pylorus | Human epiglottis |

| Human rectum | Human jejunum | Human salivary gland |

| Human kidney | Human spleen | Human liver |

| Human testis | Human lung | Human tongue |

| Human melanoma | Human tonsil | Human muscle |

| Human umbilical cord | Human oesophagus | |

| Dataset | Original Images | After Augmentation | Final Used |

|---|---|---|---|

| NuInsSeg | 472 | 1888 | Train: 1510; Test: 378 |

| Source ROIs (SLN) | Derived ROI Pairs | ROI Size (Pixels) |

|---|---|---|

| SLN_01 | 6 | 2100 × 1500 |

| SLN_02 | 7 | 2100 × 1500 |

| SLN_03 | 5 | 2100 × 1500 |

| SLN_04 | 7 | 2100 × 1500 |

| SLN_05 | 6 | 2100 × 1500 |

| SLN_06 | 6 | 2100 × 1500 |

| Total | 37 | — |

| Dataset | Original Images | After Augmentation | Final Used |

|---|---|---|---|

| UHS-MelHist | 37 | 189 | Train: 144; Test: 45 |

| Component | Specification |

|---|---|

| CPU | Intel Core i7-11800H @ 2.30 GHz |

| RAM | 16 GB |

| GPU | NVIDIA GeForce RTX 4090 (24 GB) |

| Storage | 1.38 TB SSD |

| System | Windows 11, 64-bit |

| Python | 3.9 |

| PyTorch | 1.12.1 |

| | Predicted Positive | Predicted Negative |

|---|---|---|

| Actual Positive | True Positive (TP) | False Negative (FN) |

| Actual Negative | False Positive (FP) | True Negative (TN) |

| Metric | Baseline | Post-HITL | Improvement (%) |

|---|---|---|---|

| Recall | 0.8803 | 0.8983 | +2.05% |

| Precision | N/A | 0.6885 | N/A |

| Dice | N/A | 0.7796 | N/A |

| IoU | N/A | 0.6387 | N/A |

| Metric | Baseline | Post-HITL | Improvement (%) |

|---|---|---|---|

| Recall | 0.8917 | 0.9053 ± 0.0898 | +1.53% |

| Precision | 0.6869 | 0.7759 ± 0.1023 | +12.96% |

| Dice | 0.7664 | 0.8356 ± 0.0916 | +9.02% |

| IoU | 0.6433 | 0.7176 ± 0.1170 | +11.53% |

| Model | Recall | Prec | Dice | IoU | FNR (%) | Params (M) | GFLOPs | Mem (MB) | Steps/s | Epoch (s) | Imgs/s | Latency (ms) |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| U-Net [14] | 0.8701 | 0.8416 | 0.8556 | 0.7476 | 18.6 | 31.04 | 83.70 | 592.1 | 29.68 | 7.70 | 995.7 | 1.00 |

| AttentionU-Net [16] | 0.8842 | 0.8454 | 0.8644 | 0.7611 | 7.3 | 31.39 | 85.35 | 598.7 | 27.53 | 8.30 | 904.0 | 1.11 |

| SwinU-Net [21] | 0.8380 | 0.8463 | 0.8421 | 0.7273 | 37.2 | 31.04 | 83.70 | 592.1 | 29.73 | 7.70 | 993.9 | 1.01 |

| TransU-Net [17] | 0.8873 | 0.7750 | 0.8273 | 0.7055 | 4.4 | 31.08 | 83.78 | 592.8 | 29.62 | 7.70 | 988.9 | 1.01 |

| DeepLabV3+ [29] | 0.8421 | 0.8268 | 0.8344 | 0.7159 | 35.2 | 3.02 | 36.47 | 57.5 | 51.83 | 4.40 | 1767.2 | 0.57 |

| MedT [20] | 0.9562 | 0.4291 | 0.5924 | 0.4208 | −165.1 | 2.63 | 16.00 | 50.1 | 12.88 | 17.70 | 323.2 | 3.09 |

| ClinSegNet (Ours) | 0.8917 | 0.6869 | 0.7664 | 0.6433 | – | 9.79 | 83.90 | 185.3 | 29.70 | 7.70 | 724.3 | 1.38 |

| BM | SE | FiLM | Edge | Recall (±SD) | Precision | Dice | IoU | p-value | p-value |

|---|---|---|---|---|---|---|---|---|---|

| 1 | – | – | – | 0.8545 ± 0.012 | 0.8491 | 0.8518 | 0.7418 | – | – |

| 2 | ✓ | – | – | 0.8565 ± 0.015 | 0.7879 | 0.8208 | 0.6960 | p = 0.0000003 | p = 0.0000016 |

| 3 | – | ✓ | – | 0.8356 ± 0.014 | 0.7000 | 0.7618 | 0.6153 | p = 0.4219 | p = 0.0000045 |

| 4 | – | – | ✓ | 0.8615 ± 0.018 | 0.8484 | 0.8549 | 0.7466 | p < 0.000001 | p < 0.000001 |

| 5 | ✓ | ✓ | – | 0.8703 ± 0.011 | 0.8312 | 0.8503 | 0.7396 | p < 0.000001 | p < 0.000001 |

| 6 | ✓ | – | ✓ | 0.8600 ± 0.010 | 0.8509 | 0.8554 | 0.7473 | p = 0.1158 | p = 0.8766 |

| 7 | – | ✓ | ✓ | 0.8781 ± 0.013 | 0.7975 | 0.8358 | 0.7180 | p = 0.0518 | p = 0.0000002 |

| 8 | ✓ | ✓ | ✓ | 0.8917 ± 0.009 | 0.6869 | 0.7664 | 0.6433 | p = 0.2583 | p = 0.000587 |

| Organ | NuInsSeg | Combined | Organ | NuInsSeg | Combined |

|---|---|---|---|---|---|

| Bladder | 0.9510 | 0.9377 | Liver | 0.9148 | 0.9234 |

| Brain | 0.8032 | 0.8202 | Lung | 0.8778 | 0.8671 |

| Cardia | 0.8995 | 0.9253 | Melanoma | 0.7655 | 0.7738 |

| Cerebellum | 0.9202 | 0.9474 | Melanoma LN | — | 0.7460 |

| Epiglottis | 0.9049 | 0.9016 | Muscle | 0.5981 | 0.8175 |

| Jejunum | 0.9307 | 0.9475 | Oesophagus | 0.9165 | 0.9429 |

| Kidney | 0.9514 | 0.9437 | Pancreas | 0.9110 | 0.9184 |

| Peritoneum | 0.8385 | 0.8678 | Placenta | 0.9460 | 0.9453 |

| Pylorus | 0.8098 | 0.9013 | Rectum | 0.7236 | 0.9102 |

| Salivary | 0.8983 | 0.9219 | Spleen | 0.8796 | 0.9060 |

| Testis | 0.7975 | 0.8258 | Tongue | 0.8513 | 0.9093 |

| Tonsil | 0.9031 | 0.8522 | Umbilical | 0.7579 | 0.7512 |

| FiLM dim | Params (M) | Recall | Dice | IoU |

|---|---|---|---|---|

| 16 | 9.66 | 0.8616 ± 0.0057 | 0.7756 ± 0.0325 | 0.6522 ± 0.0382 |

| 32 | 9.79 | 0.8879 ± 0.0004 | 0.7763 ± 0.0280 | 0.6517 ± 0.0343 |

| 64 | 9.88 | 0.8948 ± 0.0227 | 0.7653 ± 0.0047 | 0.6370 ± 0.0042 |

| 128 | 10.06 | 0.8616 ± 0.0065 | 0.7661 ± 0.0063 | 0.6388 ± 0.0062 |

| 256 | 10.42 | 0.8129 ± 0.0013 | 0.7440 ± 0.0176 | 0.6372 ± 0.0199 |

| BCE:Tversky | Recall | Dice | IoU |

|---|---|---|---|

| 0.2:0.8 | 0.8506 ± 0.0185 | 0.7658 ± 0.0016 | 0.6395 ± 0.0012 |

| 0.3:0.7 | 0.8849 ± 0.0016 | 0.7906 ± 0.0115 | 0.6689 ± 0.0137 |

| 0.4:0.6 | 0.8403 ± 0.0300 | 0.7514 ± 0.0184 | 0.6210 ± 0.0218 |

| 0.5:0.5 | 0.8178 ± 0.0101 | 0.7150 ± 0.0337 | 0.5757 ± 0.0381 |

| 0.7:0.3 | 0.7882 ± 0.0046 | 0.7446 ± 0.0033 | 0.6116 ± 0.0042 |

| 0.8:0.2 | 0.7230 ± 0.0000 | 0.6620 ± 0.0000 | 0.5296 ± 0.0000 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yu, B.; Markham, H.; Moutasim, K.; Foria, V.; Liu, H. ClinSegNet: Towards Reliable and Enhanced Histopathology Screening. Bioengineering 2025, 12, 1156. https://doi.org/10.3390/bioengineering12111156
