A Scoping Review of AI-Based Approaches for Detecting Autism Traits Using Voice and Behavioral Data

Abstract

1. Introduction

1.1. Background

- Social communication and social interaction deficits.

- Restrictive or repetitive behaviors (RRBs), interests, or activities.

1.2. Objective of the Review

- What is the performance of the models applied in the research to detect autism traits and what modalities have been explored?

- How are voice and behavioral features extracted and integrated into existing models?

- What are the challenges and limitations of the approach using voice and behavioral data?

- What are the ethical constraints for research on people with ASD?

1.3. ASD Discrimination Criteria from a Clinical Point of View

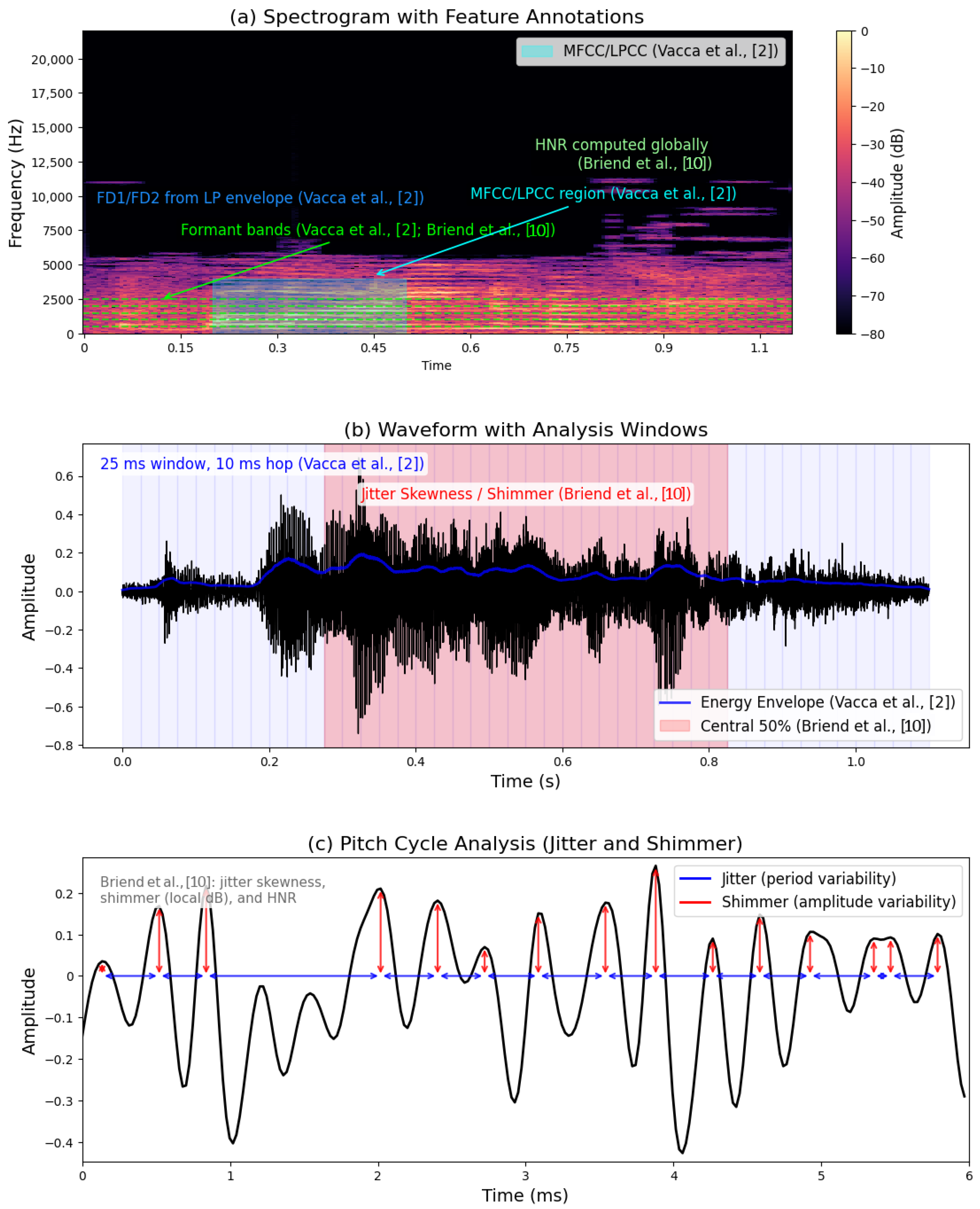

1.3.1. Voice Biomarkers

- Atypical Prosody: Measured as reduced pitch (fundamental frequency F0) variation, leading to a monotonic or flat-sounding voice that lacks the melodic contours used for emotional emphasis and questioning.

- Vocal Instability: Increased jitter (frequency instability) and shimmer (amplitude instability) can manifest as a shaky, rough, or strained voice quality, reflecting poor neuromotor control of the larynx.

- Abnormal Resonance and Timbre: Deviations in formant frequencies and spectral energy distribution can make the voice sound atypically nasal, hoarse, or strained.
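The perturbation measures named above can be illustrated with a minimal sketch. It assumes that glottal cycle periods and per-cycle peak amplitudes have already been extracted by a pitch tracker; the function names and the sample values are hypothetical and are not taken from any reviewed study. Local jitter and shimmer are computed here as the mean absolute difference between consecutive cycles divided by the mean value, which is one common definition among several.

```python
from statistics import mean, pstdev


def local_perturbation(values):
    """Mean absolute cycle-to-cycle difference divided by the mean value."""
    if len(values) < 2:
        raise ValueError("need at least two cycles")
    diffs = [abs(b - a) for a, b in zip(values, values[1:])]
    return mean(diffs) / mean(values)


def voice_summary(periods_s, amplitudes):
    """Summarise pitch variation, jitter, and shimmer for one voiced segment."""
    f0 = [1.0 / p for p in periods_s]  # instantaneous F0 in Hz
    return {
        "f0_mean_hz": mean(f0),
        "f0_sd_hz": pstdev(f0),  # low values suggest flat prosody
        "jitter_local": local_perturbation(periods_s),
        "shimmer_local": local_perturbation(amplitudes),
    }


# Hypothetical cycle periods (seconds) and peak amplitudes (arbitrary units).
periods = [0.0100, 0.0102, 0.0098, 0.0101, 0.0099]
amps = [0.80, 0.78, 0.82, 0.79, 0.81]
summary = voice_summary(periods, amps)
print(summary)
```

In practice these values would be computed per utterance and compared between groups; thresholds are study-specific and are not implied by this sketch.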

1.3.2. Conversational/Interactional Dynamics

- Atypical Turn-Taking: Characterized by either excessively long response latencies, suggesting difficulty with rapid social processing, or frequent turn-taking overlaps and interruptions, indicating challenges with social timing and reciprocity.

- Lack of Prosodic Entrainment: Failure to subconsciously synchronize pitch, intensity, or speaking rate with a conversation partner. This absence of “vocal mirroring” is quantifiable evidence of deficits in social-emotional reciprocity.

- Dysfluent Pause Structure: Irregular and unpredictable pausing patterns disrupt the natural flow of conversation.

1.3.3. Linguistic and Content-Level Language Analysis

- Pragmatic Language Deficits: Inferred from a lack of cohesive devices, tangential storytelling, or a failure to provide context for a listener, making narratives hard to follow.

- Semantic and Lexical Differences: These can manifest as an overly formal or pedantic (“professorial”) speaking style or, conversely, as a limited and concrete vocabulary. A strong focus on a specific topic can be detected through repetitive lexical choices.

- Pronoun Reversal: A historically noted but variable feature where the individual might refer to themselves as “you” or by their own name.

1.3.4. Movement Analysis

- Atypical Gait and Posture: Quantifiable as increased body sway, unusual trunk posture, or asymmetrical, irregular gait.

- Impoverished or Atypical Gestures: A reduced rate and range of communicative gestures (e.g., pointing and waving) can be measured spatially and temporally. Gestures that are present may be poorly integrated with speech or appear stiff and mechanical.

- Motor Incoordination: Measured as increased movement irregularity, high variability, and reduced smoothness in reaching and other goal-directed movements.
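As an illustration of how movement irregularity and smoothness might be quantified, the sketch below derives velocity, acceleration, and jerk from a one-dimensional position trace by finite differences. Mean squared jerk and the coefficient of variation of speed are assumed stand-ins for "reduced smoothness" and "high variability"; the reviewed studies may use other metrics, and the function names and trajectories are hypothetical.

```python
from statistics import mean, pstdev


def finite_diff(samples, dt):
    """First-order finite difference of a uniformly sampled signal."""
    return [(b - a) / dt for a, b in zip(samples, samples[1:])]


def movement_irregularity(positions, dt):
    """Return mean squared jerk and speed variability for one trajectory."""
    if len(positions) < 4:
        raise ValueError("need at least four samples to estimate jerk")
    velocity = finite_diff(positions, dt)
    accel = finite_diff(velocity, dt)
    jerk = finite_diff(accel, dt)
    speed = [abs(v) for v in velocity]
    mean_speed = mean(speed)
    return {
        # Higher values indicate a less smooth movement.
        "mean_squared_jerk": mean([j * j for j in jerk]),
        # Coefficient of variation of speed; undefined for a stationary trace.
        "speed_cv": pstdev(speed) / mean_speed if mean_speed > 0 else None,
    }


# Hypothetical reaching trajectories sampled once per time unit.
smooth = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
jerky = [0.0, 2.0, 1.0, 4.0, 2.0, 5.0]
smooth_result = movement_irregularity(smooth, dt=1.0)
jerky_result = movement_irregularity(jerky, dt=1.0)
print(smooth_result, jerky_result)
```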

1.3.5. Activity Recognition

- Motor Stereotypies: The ability to automatically classify whole-body repetitive behaviors such as body rocking, hand flapping, or spinning.

- Atypical Activity Patterns: Recognizing periods of unusually high or low activity levels, or a lack of variation in play and exploration.

- Context-Inappropriate Behaviors: Identifying episodes such as elopement (running off) or meltdowns characterized by intense motor agitation.

1.3.6. Facial Gesture Analysis

- Reduced Facial Expressivity: A lower frequency and intensity of facial Action Unit (AU) activation, leading to a “flat affect” or neutral face, even in emotionally charged situations.

- Atypical Expression Dynamics: Facial expressions may be fleeting, slow to develop, or poorly timed with social cues and speech.

- Incoherent Expression: Incongruence between different parts of the face (e.g., a smiling mouth without the accompanying eye creases of a genuine “Duchenne smile”).

1.3.7. Visual Attention

- Reduced Attention to Social Stimuli: Quantifiably less time spent fixating on the eyes and faces of others in images, videos, or real-life interactions.

- Atypical Scanpaths: Visual exploration of a scene that is more disorganized, with more fixations on background objects and fewer on socially relevant information.

- Impaired Joint Attention: A failure to spontaneously follow another person’s gaze or pointing gesture, a key early social milestone.

1.3.8. Multimodal Approaches

- Capturing Behavioral Incongruence: For example, detecting when a flat vocal prosody (Voice Biomarker) co-occurs with a lack of facial expressivity (Facial Gesture Analysis) during a supposedly happy conversation.

- Compensatory Analysis: Identifying when a strength in one modality (e.g., complex linguistic content) masks a deficit in another (e.g., poor interactional dynamics).

- Holistic Phenotyping: Creating a composite profile that more accurately reflects the complex, multi-faceted nature of ASD than any single modality can. A model might fuse visual attention (to eyes), vocal prosody, and head movement to generate a more robust estimate of social engagement.
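The fusion idea in the last item can be sketched as a simple late-fusion step. The example assumes that each modality has already produced a score in [0, 1] and combines the available scores with a weighted mean; the modality names, weights, and scores are hypothetical, and real systems may instead learn the fusion jointly with the feature extractors.

```python
def late_fusion(scores, weights):
    """Weighted mean of the per-modality scores that are available.

    A modality whose score is None (e.g., no usable audio) is skipped and
    the remaining weights are renormalised.
    """
    available = {m: s for m, s in scores.items() if s is not None}
    if not available:
        raise ValueError("no modality scores available")
    total_weight = sum(weights[m] for m in available)
    return sum(weights[m] * s for m, s in available.items()) / total_weight


# Hypothetical per-modality social engagement scores for one session.
weights = {"visual_attention": 1.0, "vocal_prosody": 1.0, "head_movement": 1.0}
session = {"visual_attention": 0.2, "vocal_prosody": 0.4, "head_movement": 0.6}
engagement = late_fusion(session, weights)

# The same session with the audio channel missing.
no_audio = {"visual_attention": 0.2, "vocal_prosody": None, "head_movement": 0.6}
engagement_no_audio = late_fusion(no_audio, weights)
print(engagement, engagement_no_audio)
```

Late fusion keeps each modality's model independent, which makes missing channels easy to handle, at the cost of ignoring cross-modal timing.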

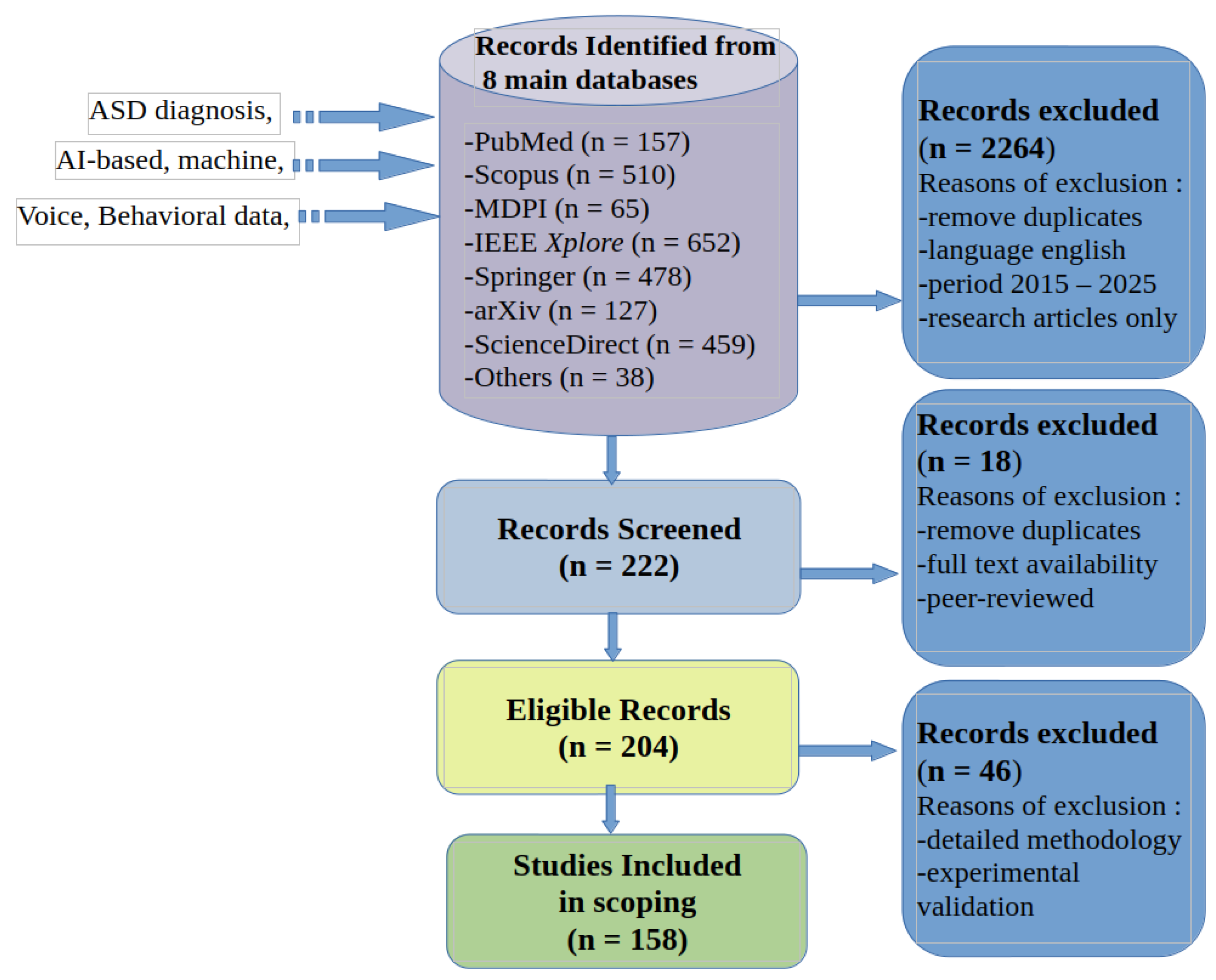

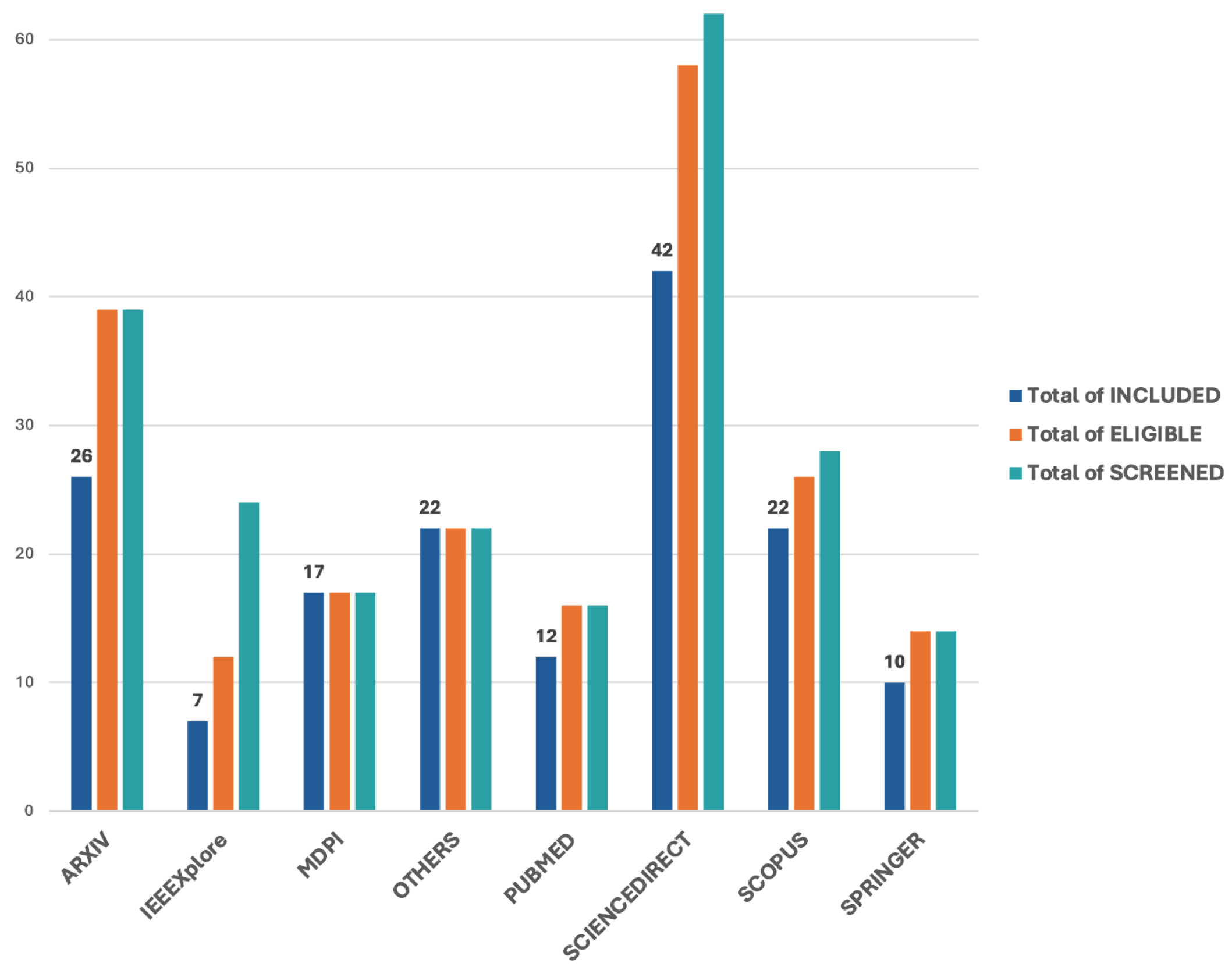

2. Materials and Methods

2.1. Identification

- PubMed was searched for its extensive coverage of biomedical and healthcare research, particularly studies on neurodevelopmental disorders and clinical applications of AI.

- IEEE Xplore was consulted for research on machine learning algorithms, signal processing, and computational models applied to ASD detection.

- ScienceDirect provided access to literature spanning psychology, neuroscience, and computational approaches in healthcare.

- Scopus and ArXiv ensured multidisciplinary coverage, including peer-reviewed and preprint studies across medical, engineering, and behavioral sciences.

2.2. Selection of Sources of Evidence

- Elimination of duplicates to maintain a unique and non-redundant dataset.

- Inclusion of English-language publications to ensure accessibility and consistency in analysis.

- Restriction to a 10-year publication range to encompass the rapid advances and emerging trends in AI-driven methodologies.

- Restriction to research articles in this screening phase to ensure a focused and high-quality analysis.
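The screening criteria above can be expressed as a short filter over exported database records. This is a sketch under stated assumptions: the record fields (`title`, `language`, `year`, `type`), the example records, and the year bounds passed to the function are hypothetical placeholders, since the exact export format and window endpoints are not given here.

```python
def screen(records, first_year, last_year):
    """Apply duplicate removal, language, date-range, and article-type filters."""
    seen_titles = set()
    kept = []
    for rec in records:
        # Duplicates are matched on a case- and whitespace-normalised title.
        key = " ".join(rec["title"].lower().split())
        if key in seen_titles:
            continue
        seen_titles.add(key)
        if rec["language"] != "en":
            continue
        if not (first_year <= rec["year"] <= last_year):
            continue
        if rec["type"] != "research-article":
            continue
        kept.append(rec)
    return kept


# Hypothetical records standing in for a merged database export.
records = [
    {"title": "Voice features in ASD", "language": "en", "year": 2022, "type": "research-article"},
    {"title": "voice  features in ASD", "language": "en", "year": 2022, "type": "research-article"},
    {"title": "Gaze patterns in ASD", "language": "fr", "year": 2021, "type": "research-article"},
    {"title": "Early motor signs", "language": "en", "year": 2009, "type": "research-article"},
    {"title": "A survey of methods", "language": "en", "year": 2023, "type": "review"},
]
screened = screen(records, first_year=2015, last_year=2025)
print([r["title"] for r in screened])
```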

2.3. Eligibility

- Full-text availability, ensuring comprehensive access to research findings.

- Peer-reviewed journal publications, guaranteeing scientific rigor and credibility.

2.4. Studies Included in Scoping

3. Results

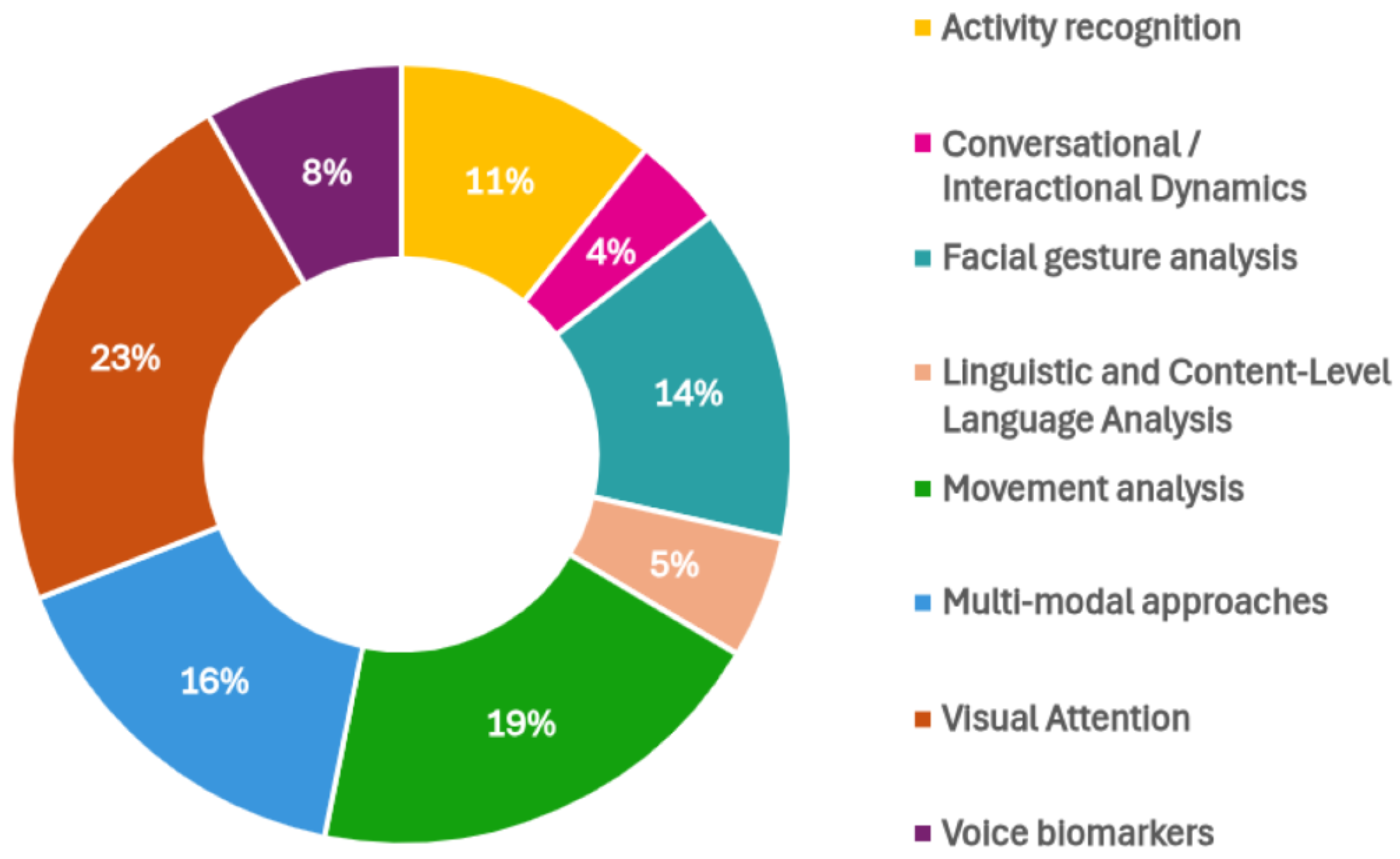

3.1. Overview of Included Studies

3.2. Voice Biomarkers (Acoustic/Prosodic Features)

3.2.1. Data Preprocessing Approaches

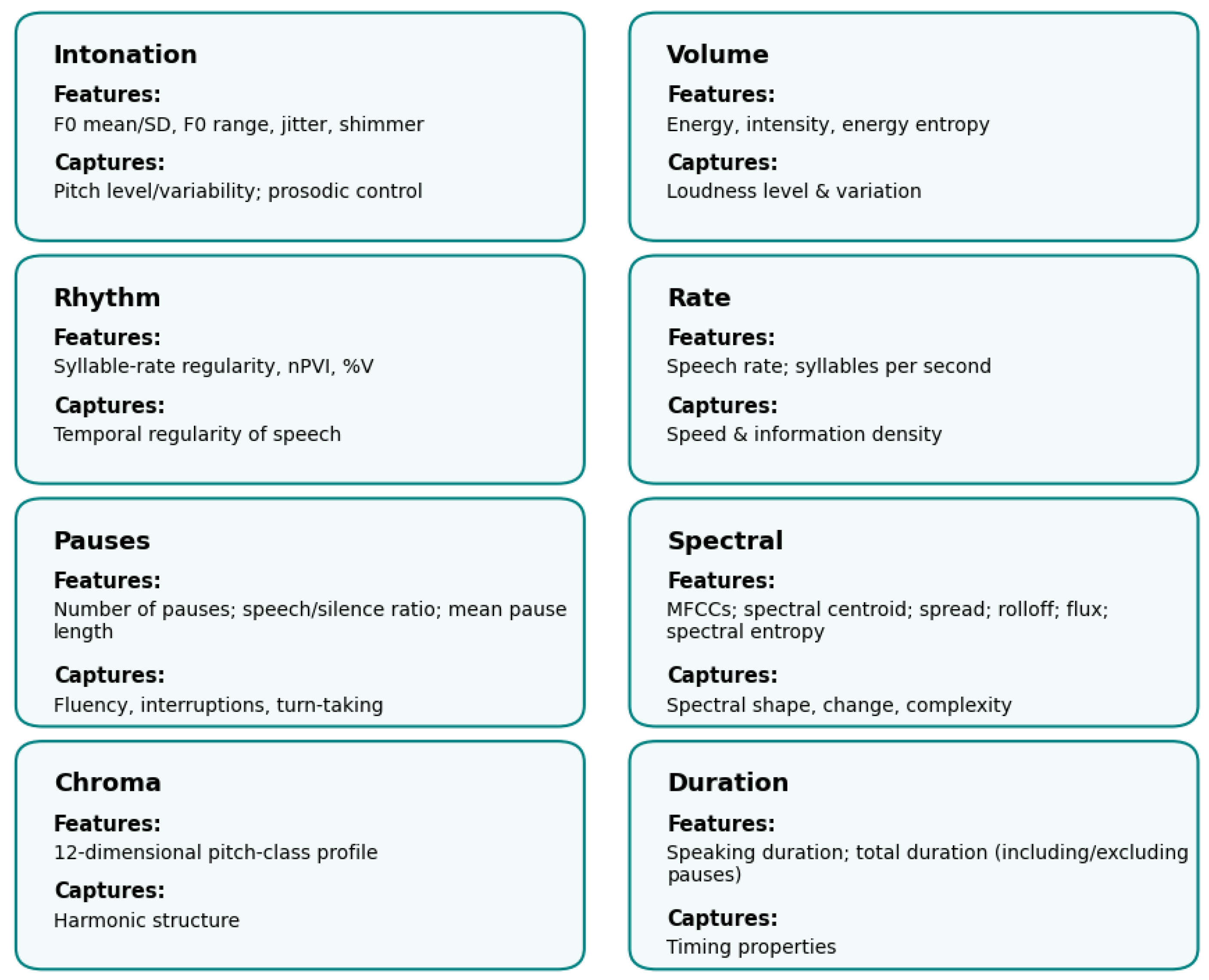

3.2.2. Feature Extraction

- Mohanta & Mittal (2022) [13]: formants, MFCCs, and jitter.

- Jayasree & Shia (2021) [14]: MFCCs, jitter, and shimmer.

- Asgari et al. (2021) [15]: pitch, jitter, shimmer, MFCCs, and prosodic cues.

- Lee et al. (2020) [19]: prosody, MFCC, and spectral measures.

- Guo et al. (2022) [17]: jitter, shimmer, HNR, and MFCCs.

- Li et al. (2019) [20]: acoustic features using OpenSMILE and a spectrogram.

- Rosales-Pérez et al. (2015) [21]: acoustic features derived from Mel-frequency cepstral coefficients (MFCCs) and linear predictive coding (LPC).

- Chi et al. (2022) [22]: audio features (including Mel-frequency cepstral coefficients), spectrograms, and speech representations (wav2vec).

3.2.3. AI Techniques Used

- Mohanta & Mittal (2022) [13]: GMM, SVM, RF, KNN, and PNN.

- Jayasree & Shia (2021) [14]: FFNN.

- Asgari et al. (2021) [15]: SVM and RF.

- Godel et al. (2023) [16]: statistical models and ML classifiers.

- Lau et al. (2022) [18]: SVM.

- Lee et al. (2020) [19]: SVM, RF, and CNN.

- Guo et al. (2022) [17]: RF.

- Li et al. (2019) [20]: SVM and CNN.

- Chi et al. (2022) [22]: RF, CNN, and Transformer-based ASR model.

3.2.4. Performance, Constraints, and Challenges

3.2.5. Comparative Overview of Top Studies

3.3. Conversational/Interactional Dynamics

- Turn-gap duration and variability.

- Overlap proportion and pause ratios.

- Prosodic entrainment (synchrony of F0, intensity, and speech rate) across turns.

- Conversational balance (reciprocity, turn counts, and initiations vs. responses).

- Blockwise dynamics of pitch and intensity during dialogue.

3.3.1. Data Preprocessing Approaches

- Speaker diarization and voice activity detection (VAD) to separate interlocutors and silence.

- Optional automatic speech recognition (ASR) for transcript alignment when lexical information is required.

- Time alignment of interlocutor streams to compute dyadic timing measures (e.g., turn boundaries, gap and overlap durations) and prosodic entrainment metrics (cross-correlations of F0, intensity, and speaking rate).
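Once diarization has produced time-aligned turns, the dyadic timing measures mentioned above reduce to simple interval arithmetic. The following sketch assumes turns are given as `(speaker, start, end)` tuples in seconds; this data layout and the function names are hypothetical. At each change of speaker, a positive offset is a silent gap and a negative offset is an overlap.

```python
from statistics import mean


def floor_transfer_offsets(turns):
    """Offset between the end of one turn and the start of the next speaker's turn."""
    ordered = sorted(turns, key=lambda t: t[1])
    offsets = []
    for (spk_a, _, end_a), (spk_b, start_b, _) in zip(ordered, ordered[1:]):
        if spk_a != spk_b:  # only speaker changes count as floor transfers
            offsets.append(start_b - end_a)
    return offsets


def timing_summary(turns):
    """Mean gap, mean overlap, and overlap proportion over all floor transfers."""
    offsets = floor_transfer_offsets(turns)
    gaps = [o for o in offsets if o > 0]
    overlaps = [-o for o in offsets if o < 0]
    return {
        "n_transfers": len(offsets),
        "mean_gap_s": mean(gaps) if gaps else None,
        "mean_overlap_s": mean(overlaps) if overlaps else None,
        "overlap_proportion": len(overlaps) / len(offsets) if offsets else None,
    }


# Hypothetical diarized turns: (speaker, start time, end time) in seconds.
turns = [("A", 0.0, 2.0), ("B", 2.5, 4.0), ("A", 3.75, 6.0), ("A", 6.5, 7.0)]
timing = timing_summary(turns)
print(timing)
```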

3.3.2. Feature Extraction

- Turn-taking timing: Distribution of silence gaps between turns; overlap proportion/frequency; mean/variance of response latency; and overall pause ratio.

- Prosodic synchrony (entrainment): Correlation or convergence of F0, intensity, and speaking rate across turns, computed as global or local entrainment indices.

- Participation balance: Relative number and length of turns per speaker; reciprocity of exchanges; and ratio of initiations to responses.

- Blockwise dynamics: Short-window (e.g., 3–5 s) changes in intensity and pitch aligned across speakers to capture interactional synchrony.
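One way to compute a turn-level entrainment index of the kind listed above is a Pearson correlation between a prosodic feature in each partner turn and the same feature in the speaker's following turn. The sketch below assumes mean F0 per turn has already been extracted and paired; the variable names and values are hypothetical, and other indices (e.g., convergence over time) are equally plausible.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return sxy / sqrt(sxx * syy)


def local_entrainment(partner_feature, speaker_feature):
    """Synchrony index: partner turn i paired with the speaker's reply i."""
    return pearson(partner_feature, speaker_feature)


# Hypothetical mean F0 (Hz) per turn for adjacent partner/child turn pairs.
partner_f0 = [210.0, 190.0, 230.0, 200.0]
child_f0 = [250.0, 235.0, 262.0, 240.0]
synchrony = local_entrainment(partner_f0, child_f0)
print(round(synchrony, 3))
```

Values near 1 indicate that the speaker's pitch tracks the partner's from turn to turn; values near 0 indicate little synchrony. The same function applies to intensity or speaking rate.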

3.3.3. AI Techniques Used

3.3.4. Performance, Constraints, and Challenges

3.3.5. Comparative Overview of Representative Studies

3.4. Language- and Content-Level Analysis

3.4.1. Methods of Data Preprocessing

3.4.2. Feature Extraction

- Lexical: Vocabulary richness, type-token ratio, and word frequency.

- Syntactic: Sentence length, parse tree depth, and part-of-speech tags.

- Semantic: Embeddings from BERT, GPT, or word2vec.

- Narrative: Cohesion, coherence, and temporal structure.
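The lexical and simple syntactic features above can be computed directly from a transcript. The sketch below is a minimal illustration using a regular-expression tokenizer, which is an assumption; the reviewed studies typically rely on dedicated NLP toolkits, and the example sentence is invented.

```python
import re
from collections import Counter


def lexical_features(text):
    """Type-token ratio, mean sentence length, and most frequent words."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    tokens = re.findall(r"[a-z']+", text.lower())
    if not tokens or not sentences:
        raise ValueError("text contains no tokens")
    counts = Counter(tokens)
    return {
        "n_tokens": len(tokens),
        "n_types": len(counts),
        "type_token_ratio": len(counts) / len(tokens),
        "mean_sentence_length": len(tokens) / len(sentences),
        "top_words": counts.most_common(3),
    }


# Invented transcript fragment.
features = lexical_features("The dog ran. The dog sat.")
print(features)
```

Because the type-token ratio falls as transcripts get longer, comparisons across speakers are usually made on samples of equal length.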

3.4.3. AI Techniques Used

3.4.4. Performance, Constraints, and Challenges

3.4.5. Comparison of Autism Language Analysis Classification Studies

3.5. Movement Analysis

3.5.1. Data Preprocessing Approaches

3.5.2. Feature Extraction

3.5.3. AI Techniques Used

3.5.4. Performance, Constraints, and Challenges

3.5.5. Comparative Overview of Representative Studies

3.6. Activity Recognition

3.6.1. Data Preprocessing Approaches

3.6.2. Feature Extraction

3.6.3. AI Techniques Used

3.6.4. Performance, Constraints, and Challenges

3.6.5. Comparative Overview of Representative Studies

3.7. Facial Gesture Analysis

3.7.1. Data Preprocessing Approaches

3.7.2. Feature Extraction

3.7.3. AI Techniques Used

3.7.4. Performance, Constraints, and Challenges

3.7.5. Comparison of Autism Facial Gesture Analysis Classification Studies

3.8. Visual Attention Analysis

3.8.1. Data Preprocessing Approaches

3.8.2. Feature Extraction

- Fixation-based metrics: Proportion of looking time to areas of interest (AOIs), such as eyes, mouth, or faces versus nonsocial content. Pierce et al. [120] quantified attention to “motherese” speech, while Chang et al. [119] measured gaze allocation to social versus nonsocial scenes using percent-looking and silhouette scores. Yang et al. (2024) extended this approach by modeling transitions between AOIs as graph structures, highlighting reduced face-directed transitions as robust ASD markers.

- Scanpath dynamics: Sequences of fixations and saccades can be represented using methods such as string-edit distances, Markov models, or temporal statistics. Minissi et al. [146] further summarized clustering-based encodings, histogram representations, and dimensionality reduction pipelines for scanpath analysis.

- Deep representations: Recent work bypasses handcrafted features by learning directly from raw gaze traces. Vasant et al. [115] trained deep neural networks on gaze trajectories and saliency maps, demonstrating higher classification accuracy and scalability in tablet-based screening.

- Egocentric/real-world cues: Wearable eye trackers enable measures of naturalistic attention such as eye contact detection [123] or social partner engagement, often requiring privacy-preserving preprocessing.
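Three of the handcrafted gaze features described above can be sketched in a few lines: percent-looking per AOI, AOI-to-AOI transition counts, and a string-edit distance between scanpaths. The sketch assumes fixations are already labeled as `(aoi, duration_ms)` pairs; that layout, the AOI names, and the example data are hypothetical and do not reproduce any cited pipeline.

```python
from collections import Counter


def looking_proportions(fixations):
    """Share of total fixation time spent on each AOI."""
    total = sum(duration for _, duration in fixations)
    time_per_aoi = Counter()
    for aoi, duration in fixations:
        time_per_aoi[aoi] += duration
    return {aoi: t / total for aoi, t in time_per_aoi.items()}


def aoi_transitions(fixations):
    """Counts of moves between consecutive fixations on different AOIs."""
    labels = [aoi for aoi, _ in fixations]
    return Counter((a, b) for a, b in zip(labels, labels[1:]) if a != b)


def edit_distance(a, b):
    """Levenshtein distance between two scanpaths encoded as AOI strings."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, 1):
        cur = [i]
        for j, y in enumerate(b, 1):
            cur.append(min(prev[j] + 1, cur[j - 1] + 1, prev[j - 1] + (x != y)))
        prev = cur
    return prev[-1]


# Hypothetical fixation sequence: (AOI label, duration in ms).
fixations = [("face", 300), ("object", 100), ("face", 200), ("background", 200)]
proportions = looking_proportions(fixations)
transitions = aoi_transitions(fixations)
# Scanpaths encoded with one letter per AOI (F = face, O = object, B = background).
distance = edit_distance("FOFB", "FFB")
print(proportions, dict(transitions), distance)
```

The transition counts can be read as edge weights of a directed graph over AOIs, which is one simple way to approach graph-based representations of gaze.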

3.8.3. AI Techniques Used

- Sequential models: CNN-LSTM hybrids capture temporal dependencies in scanpaths [131,135]. Ahmed et al. [128] proposed an end-to-end pipeline for gaze-driven ASD detection, and Thanarajan et al. [140] used deep optimization frameworks. Recent work also explored involutional convolutions for temporal gaze encoding [141].

- Fusion models: Semantic and multimodal integration strategies enrich gaze-based representations. Fang et al. [118] introduced hierarchical semantic fusion, Alcañiz et al. [122] and Minissi et al. [133] combined gaze with VR contexts and biosignals, and Varma et al. [139] leveraged mobile game-based gaze indicators. Vasant et al. [115] advanced transfer learning on raw gaze trajectories for scalable tablet-based screening.

- Explainable ML: Colonnese et al. [147], Antolí et al. [129], and Almadhor et al. [134] emphasized interpretability, applying techniques such as influence tracing, SHAP-based feature attribution, and task-level explanations to enhance clinical trust. Keehn et al. [121] further highlighted the importance of clinical translation by demonstrating the feasibility of eye-tracking biomarkers for ASD diagnosis in primary care.

3.8.4. Performance, Constraints, and Challenges

3.8.5. Comparative Overview of Representative Studies

4. Discussion

- A critical synthesis of the literature, prioritizing the dual criteria of early detection potential and predictive accuracy, reveals a distinct hierarchy among the AI modalities investigated. The voice biomarker modality emerges as the most compelling avenue for future research. Its superiority is grounded in its unique capacity for extremely early signal acquisition, from infancy, coupled with a non-invasive nature and, as evidenced by this review, consistently high predictive performance. Following this, facial analysis and visual attention modalities also demonstrate significant promise, offering complementary behavioral data that align well with our core criteria.

- To transcend the limitations of any single modality and develop tools with the generalizability required for clinical deployment, a multimodal approach is paramount. We propose two strategic pathways. The first integrates the highly promising voice biomarker modality with a visual modality, such as visual attention, to capture concurrent vocal and social engagement cues. The second fuses the closely related auditory-linguistic modalities (voice biomarkers, conversational dynamics, and language analysis) and combines this composite with one of the other modalities. Such integrative strategies are likely to yield the robust, generalizable, and clinically viable diagnostic tools necessary to improve outcomes through timely intervention.

- Diagnostic Accuracy: Machine learning (ML) and deep learning (DL) models consistently demonstrated high performance in controlled settings, with accuracies ranging from 85% to 99%. For instance, STFT-CNN models in voice analysis achieved 99.1% accuracy with augmented data, while gait analysis using 3DCNNs and MLPs classified ASD with 95% precision. These results suggest that AI can augment traditional tools like ADOS by offering objective, quantifiable metrics.

- Modality-Specific Insights:

- Voice Biomarkers: Acoustic features (pitch, jitter, and shimmer) and prosodic patterns emerged as robust indicators, though performance varied with linguistic and demographic diversity.

- Movement Analysis: Motor abnormalities (e.g., gait irregularities and repetitive movements) were effectively captured via wearable sensors and pose estimation tools, enabling low-cost, scalable screening.

- Facial Gesture Analysis: Vision Transformers and CNNs excelled in decoding atypical gaze patterns and micro-expressions, though dataset imbalances hindered subgroup differentiation.

- Multimodal Fusion: Integrating audio-visual, kinematic, and linguistic data (e.g., the LLaVA-ASD framework of Deng et al. [30]) improved robustness, addressing the heterogeneity of ASD presentations.

- Innovations in Explainability: Post hoc interpretability methods, such as attention maps in transformer models and feature importance scoring in RF/SVM, bridged the gap between “black-box” AI and clinical trust. For example, Briend et al. (2023) [10] linked shimmer and F1 frequencies to vocal instability in ASD, aligning computational outputs with clinical observations.

- Dataset Limitations: Small, homogeneous samples (e.g., gender imbalances and limited age ranges) and lack of multicultural/multilingual datasets restrict generalizability. For instance, the voice analysis of Vacca et al. [2] relied on 84 subjects, raising concerns about overfitting.

- Ethical and Practical Barriers: Privacy risks in video/audio data collection and algorithmic bias (e.g., underrepresentation of female and non-Western cohorts) remain barriers to deployment.

- Clinical Translation: Most studies operated in controlled environments, with limited validation in real-world clinical workflows. For example, the MMASD dataset of Li et al. [82], while promising, lacks benchmarking against gold-standard diagnostic tools.

- Mandatory Bias Auditing and Transparent Reporting: Future studies should conduct bias audits across diverse demographic subgroups and report the results. Model performance metrics (accuracy, sensitivity, etc.) should be disaggregated by sex, ethnicity, and socioeconomic background as a standard requirement for publication, moving beyond aggregate performance to ensure equitable efficacy.

- Development of Standardized Data Handling Protocols: To mitigate privacy risks, the field should develop and adopt standardized deidentification protocols for behavioral data. This includes technical standards for removing personally identifiable information from audio and video files, as well as clear guidelines for secure data storage, access, and sharing, similar to those used in genomic research.

- Promoting Explainability and Co-Design for Trust: To address the “black box” problem, a dual approach is essential. First, the development of explainable AI (XAI) techniques must be prioritized to provide clinicians with interpretable rationales for a model’s output (e.g., highlighting which specific vocal patterns or behaviors drove the assessment). Second, the development process must transition to a participatory design model, actively involving autistic individuals, their families, and clinicians. This collaboration is crucial for validating model explanations, ensuring they align with human experience, and building the foundational trust required for clinical integration.

- Scalability Through Multimodal Integration: Combining voice, movement, and gaze data could capture the dynamic interplay of ASD traits, as seen in hybrid frameworks like 2sGCN-AxLSTM. Federated learning (Shamseddine et al., 2022 [148]) offers a pathway to pooling diverse datasets while preserving privacy.

- Longitudinal and Real-World Studies: Tracking developmental trajectories via wearable sensors or mobile apps (e.g., the gaze-tracking glasses of Wall et al. [164]) could enhance early detection and personalize interventions.

- Ethical AI Frameworks: Developing standardized benchmarks, inclusive datasets, and guidelines for consent/transparency is critical. Innovations like privacy-preserving optical flow (Li et al., 2023 [65]) and synthetic data generation may mitigate biases.
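The bias-auditing recommendation above, reporting metrics disaggregated by demographic subgroup, can be illustrated with a short sketch. It assumes binary labels (1 = ASD, 0 = non-ASD) and one subgroup label per sample; the function name, group labels, and data are hypothetical.

```python
from collections import defaultdict


def disaggregated_metrics(y_true, y_pred, groups):
    """Accuracy, sensitivity, and specificity computed separately per subgroup."""
    cells = defaultdict(lambda: {"tp": 0, "tn": 0, "fp": 0, "fn": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        # "t"/"f" marks a correct/incorrect prediction, "p"/"n" the predicted class.
        key = ("t" if truth == pred else "f") + ("p" if pred == 1 else "n")
        cells[group][key] += 1
    report = {}
    for group, c in cells.items():
        n = sum(c.values())
        positives = c["tp"] + c["fn"]
        negatives = c["tn"] + c["fp"]
        report[group] = {
            "n": n,
            "accuracy": (c["tp"] + c["tn"]) / n,
            "sensitivity": c["tp"] / positives if positives else None,
            "specificity": c["tn"] / negatives if negatives else None,
        }
    return report


# Hypothetical labels, predictions, and subgroup membership.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 1, 0]
groups = ["group_a"] * 4 + ["group_b"] * 4
audit = disaggregated_metrics(y_true, y_pred, groups)
print(audit)
```

In this invented example the aggregate accuracy is 0.75, which hides the gap between the two subgroups; this is the kind of disparity that disaggregated reporting is meant to expose.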

5. Conclusions

- Expansion of datasets that are multilingual, diverse in age/gender, and inclusive of varying ASD severities.

- Development of standardized pipelines and benchmarks for feature extraction, model training, and evaluation.

- Stronger emphasis on model interpretability and clinical usability.

- Rigorous ethical oversight and privacy protection, particularly in the use of video and audio recordings.

Funding

Conflicts of Interest

References

- Canadian Health Survey on Children and Youth (CHSCY). Autism Spectrum Disorder: Highlights from the 2019 Canadian Health Survey on Children and Youth. Available online: https://www.canada.ca/en/public-health/services/publications/diseases-conditions/autism-spectrum-disorder-canadian-health-survey-children-youth-2019.html#a7.1 (accessed on 15 April 2025).

- Vacca, J.; Brondino, N.; Dell’Acqua, F.; Vizziello, A.; Savazzi, P. Automatic Voice Classification of Autistic Subjects. arXiv 2024, arXiv:2406.13470.

- Xie, J.; Wang, L.; Webster, P.; Yao, Y.; Sun, J.; Wang, S.; Zhou, H. Identifying visual attention features accurately discerning between autism and typically developing: A deep learning framework. Interdiscip. Sci. Comput. Life Sci. 2022, 14, 639–651.

- Ibadi, H.; Lakizadeh, A. ASDvit: Enhancing autism spectrum disorder classification using vision transformer models based on static features of facial images. Intell.-Based Med. 2025, 11, 100226.

- Themistocleous, C.; Andreou, M.; Peristeri, E. Autism detection in children: Integrating machine learning and natural language processing in narrative analysis. Behav. Sci. 2024, 14, 459.

- Ji, Y.; Zhang-Lea, J.; Tran, J. Automated ADHD detection using dual-modal sensory data and machine learning. Med. Eng. Phys. 2025, 139, 104328.

- Kim, Y.K.; Visscher, R.M.S.; Viehweger, E.; Singh, N.B.; Taylor, W.R.; Vogl, F. A deep-learning approach for automatically detecting gait-events based on foot-marker kinematics in children with cerebral palsy—Which markers work best for which gait patterns? PLoS ONE 2022, 17, e0275878.

- Lai, J.W.; Ang, C.K.E.; Acharya, U.R.; Cheong, K.H. Schizophrenia: A Survey of Artificial Intelligence Techniques Applied to Detection and Classification. Int. J. Environ. Res. Public Health 2021, 18, 6099.

- PRISMA-Scoping Reviews. Available online: https://www.prisma-statement.org/scoping (accessed on 5 April 2025).

- Briend, F.; David, C.; Silleresi, S.; Malvy, J.; Ferré, S.; Latinus, M. Voice acoustics allow classifying autism spectrum disorder with high accuracy. Transl. Psychiatry 2023, 13, 250.

- Hu, C.; Thrasher, J.; Li, W.; Ruan, M.; Yu, X.; Paul, L.; Wang, S.; Li, X. Exploring speech pattern disorders in autism using machine learning. arXiv 2024, arXiv:2405.05126.

- Sai, K.; Krishna, R.; Radha, K.; Rao, D.; Muneera, A. Automated ASD detection in children from raw speech using customized STFT–CNN model. Int. J. Speech Technol. 2024, 27, 701–716.

- Mohanta, A.; Mittal, V. Analysis and classification of speech sounds of children with autism spectrum disorder using acoustic features. Comput. Speech Lang. 2022, 72, 101287.

- Jayasree, T.; Shia, S. Combined signal processing–based techniques and feed-forward neural networks for pathological voice detection and classification. Sound Vib. 2021, 55, 141–161.

- Asgari, M.; Chen, L.; Fombonne, E. Quantifying voice characteristics for detecting autism. Front. Psychol. 2021, 12, 665096.

- Godel, M.; Robain, F.; Journal, F.; Kojovic, N.; Latrèche, K.; Dehaene-Lambertz, G.; Schaer, M. Prosodic signatures of ASD severity and developmental delay in preschoolers. npj Digit. Med. 2023, 6, 99.

- Guo, C.; Chen, F.; Chang, Y.; Yan, J. Applying Random Forest classification to diagnose autism using acoustical voice-quality parameters during lexical tone production. Biomed. Signal Process. Control 2022, 77, 103811.

- Lau, J.; Patel, S.; Kang, X.; Nayar, K.; Martin, G.; Choy, J.; Wong, P.; Losh, M. Cross-linguistic patterns of speech prosodic differences in autism: A machine learning study. PLoS ONE 2022, 17, e0269637.

- Lee, J.; Lee, G.; Bong, G.; Yoo, H.; Kim, H. Deep-learning–based detection of infants with autism spectrum disorder using autoencoder feature representation. Sensors 2020, 20, 6762.

- Li, M.; Tang, D.; Zeng, J.; Zhou, T.; Zhu, H.; Chen, B.; Zou, X. An automated assessment framework for atypical prosody and stereotyped idiosyncratic phrases related to autism spectrum disorder. Comput. Speech Lang. 2019, 56, 80–94.

- Rosales-Pérez, A.; Reyes-García, C.; Gonzalez, J.; Reyes-Galaviz, O.; Escalante, H.; Orlandi, S. Classifying infant cry patterns by the genetic selection of a fuzzy model. Biomed. Signal Process. Control 2015, 17, 38–46.

- Chi, N.; Washington, P.; Kline, A.; Husic, A.; Hou, C.; He, C.; Dunlap, K.; Wall, D. Classifying autism from crowdsourced semi-structured speech recordings: A machine learning approach. arXiv 2022.

- Ochi, K.; Ono, N.; Owada, K.; Kojima, M.; Kuroda, M.; Sagayama, S.; Yamasue, H. Quantification of speech and synchrony in the conversation of adults with autism spectrum disorder. PLoS ONE 2019, 14, e0225377.

- Lehnert-LeHouillier, H.; Terrazas, S.; Sandoval, S. Prosodic Entrainment in Conversations of Verbal Children and Teens on the Autism Spectrum. Front. Psychol. 2020, 11, 582221.

- Wehrle, S.; Cangemi, F.; Janz, A.; Vogeley, K.; Grice, M. Turn-timing in conversations between autistic adults: Typical short-gap transitions are preferred, but not achieved instantly. PLoS ONE 2023, 18, e0284029.

- Chowdhury, T.; Romero, V.; Stent, A. Parameter Selection for Analyzing Conversations with Autism Spectrum Disorder. arXiv 2024, arXiv:2401.09717v1.

- Yang, Y.; Cho, S.; Covello, M.; Knox, A.; Bastani, O.; Weimer, J.; Dobriban, E.; Schultz, R.; Lee, I.; Parish, J. Automatically Predicting Perceived Conversation Quality in a Pediatric Sample Enriched for Autism. Interspeech 2023, 2023, 4603–4607.

- Mukherjee, P.; Gokul, S.; Sadhukhan, S.; Godse, M.; Chakraborty, B. Detection of ASD from natural language text using BERT and GPT models. Int. J. Adv. Comput. Sci. Appl. 2023, 14, 0141041.

- Rubio-Martín, S.; García-Ordás, M.; Bayón-Gutiérrez, M.; Prieto-Fernández, N.; Benítez-Andrades, J. Enhancing ASD detection accuracy: A combined approach of machine learning and deep learning models with natural language processing. Health Inf. Sci. Syst. 2024, 12, 20.

- Deng, S.; Kosloski, E.; Patel, S.; Barnett, Z.; Nan, Y.; Kaplan, A.; Aarukapalli, S.; Doan, W.T.; Wang, M.; Singh, H.; et al. Hear Me, See Me, Understand Me: Audio–Visual Autism Behavior Recognition. arXiv 2024, arXiv:2406.02554.

- Angelopoulou, G.; Kasselimis, D.; Goutsos, D.; Potagas, C. A Methodological Approach to Quantifying Silent Pauses, Speech Rate, and Articulation Rate across Distinct Narrative Tasks: Introducing the Connected Speech Analysis Protocol (CSAP). Brain Sci. 2024, 14, 466.

- Hansen, L.; Rocca, R.; Simonsen, A.; Parola, A.; Bliksted, V.; Ladegaard, N.; Bang, D.; Tylén, K.; Weed, E.; Østergaard, S.D.; et al. Automated Voice- and Text-Based Classification of Neuropsychiatric Conditions in a Multidiagnostic Setting. arXiv 2023, arXiv:2301.06916.

- Murugaiyan, S.; Uyyala, S. Aspect-based sentiment analysis of customer speech data using deep CNN and BiLSTM. Cogn. Comput. 2023, 15, 914–931.

- Ramesh, V.; Assaf, R. Detecting autism spectrum disorders with machine learning models using speech transcripts. arXiv 2021, arXiv:2110.03281.

- Jaiswal, A.; Washington, P. Using #ActuallyAutistic on Twitter for precision diagnosis of autism spectrum disorder: Machine learning study. JMIR Form. Res. 2024, 8, 52660.

- Airaksinen, M.; Räsänen, O.; Ilén, E.; Häyrinen, T.; Kivi, A.; Marchi, V.; Gallen, A.; Blom, S.; Varhe, A.; Kaartinen, N.; et al. Automatic posture and movement tracking of infants with wearable movement sensors. Sci. Rep. 2020, 10, 169.

- Rad, N.M.; Bizzego, A.; Kia, S.M.; Jurman, G.; Venuti, P.; Furlanello, C. Convolutional Neural Network for Stereotypical Motor Movement Detection in Autism. arXiv 2016, arXiv:1511.01865.

- Zahan, S.; Gilani, Z.; Hassan, G.; Mian, A. Human gesture and gait analysis for autism detection. arXiv 2023, arXiv:2304.08368.

- Serna-Aguilera, M.; Nguyen, X.; Singh, A.; Rockers, L.; Park, S.; Neely, L.; Seo, H.; Luu, K. Video-Based Autism Detection with Deep Learning. arXiv 2024, arXiv:2402.16774.

- Zhao, Z.; Zhang, X.; Li, W.; Hu, X.; Qu, X.; Cao, X.; Liu, Y.; Lu, J. Applying machine learning to identify autism with restricted kinematic features. IEEE Access 2019, 7, 157614–157622.

- Caruso, A.; Gila, L.; Fulceri, F.; Salvitti, T.; Micai, M.; Baccinelli, W.; Bulgheroni, M.; Scattoni, M.L.; on behalf of the NIDA Network Group. Early Motor Development Predicts Clinical Outcomes of Siblings at High-Risk for Autism: Insight from an Innovative Motion-Tracking Technology. Brain Sci. 2020, 10, 379.

- Alcañiz, M.; Marín-Morales, J.; Minissi, M.; Teruel, G.; Abad, L.; Chicchi, I. Machine learning and virtual reality on body movements’ behaviors to classify children with autism spectrum disorder. J. Clin. Med. 2020, 9, 1260.

- Bruschetta, R.; Caruso, A.; Micai, M.; Campisi, S.; Tartarisco, G.; Pioggia, G.; Scattoni, M. Marker-less video analysis of infant movements for early identification of neurodevelopmental disorders. Diagnostics 2025, 15, 136.

- Sadouk, L.; Gadi, T.; Essoufi, E. A novel deep learning approach for recognizing stereotypical motor movements within and across subjects on the autism spectrum disorder. Comput. Intell. Neurosci. 2018, 2018, 7186762.

- Großekathöfer, U.; Manyakov, N.; Mihajlović, V.; Pandina, G.; Skalkin, A.; Ness, S.; Bangerter, A.; Goodwin, M. Automated detection of stereotypical motor movements in autism spectrum disorder using recurrence quantification analysis. Front. Neuroinform. 2017, 11, 9.

- Jin, X.; Zhu, H.; Cao, W.; Zou, X.; Chen, J. Identifying activity level related movement features of children with ASD based on ADOS videos. Sci. Rep. 2023, 13, 3471.

- Doi, H.; Iijima, N.; Soh, Z.; Yonei, R.; Shinohara, K.; Iriguchi, M.; Shimatani, K.; Tsuji, T. Prediction of autistic tendencies at 18 months of age via markerless video analysis of spontaneous body movements in 4-month-old infants. Sci. Rep. 2022, 12, 18045.

- Wedyan, M.; Al-Jumaily, A.; Crippa, A. Using machine learning to perform early diagnosis of autism spectrum disorder based on simple upper limb movements. Int. J. Hybrid Intell. Syst. 2019, 15, 195–206.

- Tunçgenç, B.; Pacheco, C.; Rochowiak, R.; Nicholas, R.; Rengarajan, S.; Zou, E.; Messenger, B.; Vidal, R.; Mostofsky, S.; Qu, X. Computerized assessment of motor imitation as a scalable method for distinguishing children with autism. Biol. Psychiatry Cogn. Neurosci. Neuroimaging 2021, 6, 321–328.

- Luongo, M.; Simeoli, R.; Marocco, D.; Milano, N.; Ponticorvo, M. Enhancing early autism diagnosis through machine learning: Exploring raw motion data for classification. PLoS ONE 2024, 19, e0302238.

- Martin, K.; Hammal, Z.; Ren, G.; Cohn, J.; Cassell, J.; Ogihara, M.; Britton, J.; Gutierrez, A.; Messinger, D. Objective measurement of head movement differences in children with and without autism spectrum disorder. Mol. Autism 2018, 9, 14.

- Kojovic, N.; Natraj, S.; Mohanty, S.; Maillart, T.; Schaer, M. Using 2D video-based pose estimation for automated prediction of autism spectrum disorders in young children. Sci. Rep. 2021, 11, 15069.

- Mohd, S.; Hamdan, M. Analyzing lower body movements using machine learning to classify autistic children. Biomed. Signal Process. Control 2024, 94, 106288.

- Li, Y.; Mache, M.; Todd, T. Automated identification of postural control for children with autism spectrum disorder using a machine learning approach. J. Biomech. 2020, 113, 110073.

- Rad, N.M.; Kia, S.M.; Zarbo, C.; van Laarhoven, T.; Jurman, G.; Venuti, P.; Marchiori, E.; Furlanello, C. Deep learning for automatic stereotypical motor movement detection using wearable sensors in autism spectrum disorders. arXiv 2017, arXiv:1709.05956.

- Altozano, A.; Minissi, M.; Alcañiz, M.; Marín-Morales, J. Introducing 3DCNN ResNets for ASD full-body kinematic assessment: A comparison with hand-crafted features. arXiv 2024, arXiv:2311.14533.

- Emanuele, M.; Nazzaro, G.; Marini, M.; Veronesi, C.; Boni, S.; Polletta, G.; D’Ausilio, A.; Fadiga, L. Motor synergies: Evidence for a novel motor signature in autism spectrum disorder. Cognition 2021, 213, 104652.

- Lu, B.; Zhang, D.; Zhou, D.; Shankar, A.; Alasim, F.; Abidi, M. SBoCF: A deep learning–based sequential bag of convolutional features for human behavior quantification. Pattern Recognit. Lett. 2025, 165, 108534.

- Sun, Z.; Yuan, Y.; Dong, X.; Liu, Z.; Cai, K.; Cheng, W.; Wu, J.; Qiao, Z.; Chen, A. Supervised machine learning: A new method to predict the outcomes following exercise intervention in children with autism spectrum disorder. Int. J. Clin. Health Psychol. 2023, 23, 100409. [Google Scholar] [CrossRef] [PubMed]

- Ullah, F.; AbuAli, N.; Ullah, A.; Ullah, R.; Siddiqui, U.A.; Siddiqui, A. Fusion-based body-worn IoT sensor platform for gesture recognition of autism spectrum disorder children. Sensors 2023, 23, 1672. [Google Scholar] [CrossRef]

- Georgescu, A.; Koehler, J.; Weiske, J.; Vogeley, K.; Koutsouleris, N.; Falter-Wagner, C. Machine learning to study social interaction difficulties in ASD. Front. Robot. AI 2019, 6, 132. [Google Scholar] [CrossRef]

- Siddiqui, U.; Ullah, F.; Iqbal, A.; Khan, A.; Ullah, R.; Paracha, S.; Shahzad, H.; Kwak, K. Wearable-sensors–based platform for gesture recognition of autism spectrum disorder children using machine learning algorithms. Sensors 2021, 21, 3319.

- Ganai, U.; Ratne, A.; Bhushan, B.; Venkatesh, K. Early detection of autism spectrum disorder: Gait deviations and machine learning. Sci. Rep. 2025, 15, 873.

- Zhao, Z.; Zhu, Z.; Zhang, X.; Tang, H.; Xing, J.; Hu, X.; Lu, J.; Qu, X. Identifying autism with head movement features by implementing machine learning algorithms. J. Autism Dev. Disord. 2022, 52, 3038–3049.

- Li, J.; Chheang, V.; Kullu, P.; Brignac, E.; Guo, Z.; Barner, K.; Bhat, A.; Barmaki, R. MMASD: A Multimodal Dataset for Autism Intervention Analysis. arXiv 2023, arXiv:2306.08243.

- Al-Jubouri, A.; Hadi, I.; Rajihy, Y. Three dimensional dataset combining gait and full body movement of children with autism spectrum disorders collected by Kinect v2 camera. Dryad 2020.

- Zhang, Y.; Tian, Y.; Wu, P.; Chen, D. Application of skeleton data and long short–term memory in action recognition of children with autism spectrum disorder. Sensors 2021, 21, 411.

- Sadek, E.; Seada, N.; Ghoniemy, S. Neural network–based method for early diagnosis of autism spectrum disorder head-banging behavior from recorded videos. Int. J. Pattern Recognit. Artif. Intell. 2023, 37, 2356003.

- Sadek, E.; Seada, N.; Ghoniemy, S. Computer vision–based approach for detecting arm-flapping as autism suspect behaviour. Int. J. Med. Eng. Inform. 2023, 15, 129350.

- Cook, A.; Mandal, B.; Berry, D.; Johnson, M. Towards automatic screening of typical and atypical behaviors in children with autism. arXiv 2019, arXiv:1907.12537.

- Wei, P.; Ahmedt-Aristizabal, D.; Gammulle, H.; Denman, S.; Armin, M. Vision-based activity recognition in children with autism-related behaviors. Heliyon 2023, 9, e16763.

- Alam, S.; Raja, P.; Gulzar, Y.; Mir, M. Enhancing autism severity classification: Integrating LSTM into CNNs for multisite meltdown grading. Int. J. Adv. Comput. Sci. Appl. 2023, 14, 0141269.

- Singh, A.; Shrivastava, T.; Singh, V. Advanced gesture recognition in autism: Integrating YOLOv7, video augmentation and VideoMAE for video analysis. arXiv 2024, arXiv:2410.09339.

- Lakkapragada, A.; Kline, A.; Mutlu, O.; Paskov, K.; Chrisman, B.; Stockham, N.; Washington, P.; Wall, D. Classification of abnormal hand movement for aiding in autism detection: Machine learning study. JMIR Biomed. Eng. 2022, 7, 33771.

- Asmetha, J.; Senthilkumar, R. Detection of stereotypical behaviors in individuals with autism spectrum disorder using a dual self-supervised video transformer network. Neurocomputing 2025, 624, 129397.

- Stevens, E.; Dixon, D.; Novack, M.; Granpeesheh, D.; Smith, T.; Linstead, E. Identification and analysis of behavioral phenotypes in autism spectrum disorder via unsupervised machine learning. Int. J. Med. Inform. 2019, 129, 29–36.

- Jayaprakash, D.; Kanimozhiselvi, C. Multinomial logistic regression method for early detection of autism spectrum disorders. Meas. Sens. 2024, 33, 101125.

- Gardner-Hoag, J.; Novack, M.; Parlett-Pelleriti, C.; Stevens, E.; Dixon, D.; Linstead, E. Unsupervised machine learning for identifying challenging behavior profiles to explore cluster-based treatment efficacy in children with autism spectrum disorder: Retrospective data analysis study. JMIR Med. Inform. 2021, 9, 27793.

- Gomez-Donoso, F.; Cazorla, M.; Garcia-Garcia, A.; Garcia-Rodriguez, J. Automatic Schaeffer’s gestures recognition system. Expert Syst. 2016, 33, 480–488.

- Zhang, N.; Ruan, M.; Wang, S.; Paul, L.; Li, X. Discriminative few-shot learning of facial dynamics in interview videos for autism trait classification. IEEE Trans. Affect. Comput. 2023, 14, 1110–1124.

- Zhao, Z.; Chung, E.; Chung, K.; Park, C. AV–FOS: A transformer-based audio–visual multi-modal interaction style recognition for children with autism based on the Family Observation Schedule (FOS-II). IEEE J. Biomed. Health Inform. 2025, 29, 6238–6250.

- Li, X.; Fan, L.; Wu, H.; Chen, K.; Yu, X.; Che, C.; Cai, Z.; Niu, X.; Cao, A.; Ma, X. Enhancing autism spectrum disorder early detection with the parent–child dyads block-play protocol and an attention-enhanced GCN–xLSTM hybrid deep learning framework. arXiv 2024, arXiv:2408.16924.

- Liu, R.; He, S. Proposing a system-level machine learning hybrid architecture and approach for a comprehensive autism spectrum disorder diagnosis. arXiv 2021, arXiv:2110.03775.

- Beno, J.; Muthukkumar, R. Enhancing the identification of autism spectrum disorder in facial expressions using DenseResNet-based transfer learning. Biomed. Signal Process. Control 2025, 103, 107433.

- Shahzad, I.; Khan, S.; Waseem, A.; Abideen, Z.; Liu, J. Enhancing ASD classification through hybrid attention-based learning of facial features. Signal Image Video Process. 2024, 18, 475–488.

- Ruan, M.; Zhang, N.; Yu, X.; Li, W.; Hu, C.; Webster, P.; Paul, K.; Wang, S.; Li, X. Can micro-expressions be used as a biomarker for autism spectrum disorder? Front. Neuroinform. 2024, 18, 1435091.

- Sellamuthu, S.; Rose, S. Enhanced special needs assessment: A multimodal approach for autism prediction. IEEE Access 2025, 12, 121688–121699.

- Muhathir, M.; Maqfirah, D.; El, M.; Ula, M.; Sahputra, I. Facial-based autism classification using support vector machine method. Int. J. Comput. Digital Syst. 2024, 15, 160163.

- Sarwani, I.; Bhaskari, D.; Bhamidipati, S. Emotion-based autism spectrum disorder detection by leveraging transfer learning and machine learning algorithms. Int. J. Adv. Comput. Sci. Appl. 2024, 15, 0150556.

- Alhakbani, N. Facial emotion recognition–based engagement detection in autism spectrum disorder. Int. J. Adv. Comput. Sci. Appl. 2024, 15, 0150395.

- ElMouatasim, A.; Ikermane, M. Control learning rate for autism facial detection via deep transfer learning. Signal Image Video Process. 2023, 17, 3713–3720.

- Gaddala, L.; Kodepogu, K.; Surekha, Y.; Tejaswi, M.; Ameesha, K.; Kollapalli, L.; Kotha, S.; Manjeti, V. Autism spectrum disorder detection using facial images and deep convolutional neural networks. Rev. d’Intelligence Artif. 2023, 37, 801–806.

- Alkahtani, H.; Aldhyani, T.; Alzahrani, M. Deep learning algorithms to identify autism spectrum disorder in children based on facial landmarks. Appl. Sci. 2023, 13, 4855.

- Dedgaonkar, S.; Sachdeo, R.; Godbole, S. Machine learning for developmental screening based on facial expressions and health parameters. Int. J. Comput. Digital Syst. 2023, 14, 140134.

- Iwauchi, K.; Tanaka, H.; Okazaki, K.; Matsuda, Y.; Uratani, M.; Morimoto, T.; Nakamura, S. Eye-movement analysis on facial expression for identifying children and adults with neurodevelopmental disorders. Front. Digit. Health 2023, 5, 952433.

- Derbali, M.; Jarrah, M.; Randhawa, P. Autism spectrum disorder detection: Video games–based facial expression diagnosis using deep learning. Int. J. Adv. Comput. Sci. Appl. 2023, 14, 0140112.

- Saranya, A.; Anandan, R. Facial action coding and hybrid deep learning architectures for autism detection. Intell. Autom. Soft Comput. 2022, 33, 1167–1182.

- Banire, B.; AlThani, D.; Qaraqe, M.; Mansoor, B. Face-based attention recognition model for children with autism spectrum disorder. J. Healthc. Inform. Res. 2021, 5, 420–445.

- Akter, T.; Ali, M.; Khan, M.; Satu, M.; Uddin, M.; Alyami, S.; Ali, S.; Azad, A.; Moni, M. Improved transfer-learning–based facial recognition framework to detect autistic children at an early stage. Brain Sci. 2021, 11, 734.

- Lu, A.; Perkowski, M. Deep learning approach for screening autism spectrum disorder in children with facial images and analysis of ethnoracial factors in model development and application. Brain Sci. 2021, 11, 1446.

- Awaji, B.; Senan, E.; Olayah, F.; Alshari, E.; Alsulami, M.; Abosaq, H.; Alqahtani, J.; Janrao, P. Hybrid techniques of facial feature image analysis for early detection of autism spectrum disorder based on combined CNN features. Diagnostics 2023, 13, 2948.

- Alvari, G.; Furlanello, C.; Venuti, P. Is smiling the key? Machine learning analytics detect subtle patterns in micro-expressions of infants with ASD. J. Clin. Med. 2021, 10, 1776.

- Chen, J.; Chen, C.; Xu, R.; Liu, L. Autism identification based on the intelligent analysis of facial behaviors: An approach combining coarse- and fine-grained analysis. Children 2024, 11, 1306.

- Li, J.; Zhong, Y.; Han, J.; Ouyang, G.; Li, X.; Liu, H. Classifying ASD children with LSTM based on raw videos. Neurocomputing 2020, 390, 226–238.

- Sapiro, G.; Hashemi, J.; Dawson, G. Computer vision and behavioral phenotyping: An autism case study. Curr. Opin. Biomed. Eng. 2019, 9, 14–20.

- Kang, J.; Han, X.; Hu, J.; Feng, H.; Li, X. The study of the differences between low-functioning autistic children and typically developing children in processing own-race and other-race faces by a machine learning approach. J. Clin. Neurosci. 2020, 81, 54–60.

- Huynh, M.; Kline, A.; Surabhi, S.; Dunlap, K.; Mutlu, O.; Honarmand, M.; Azizian, P.; Washington, P.; Wall, D. Ensemble modeling of multiple physical indicators to dynamically phenotype autism spectrum disorder. arXiv 2024, arXiv:2408.13255.

- Ji, Y.; Wang, S.; Xu, R.; Chen, J.; Jiang, X.; Deng, Z.; Quan, Y.; Liu, J. Hugging Rain Man: A novel facial action units dataset for analyzing atypical facial expressions in children with autism spectrum disorder. arXiv 2024, arXiv:2411.13797.

- Gautam, S.; Sharma, P.; Thapa, K.; Upadhaya, M.; Thapa, D.; Khanal, S.; Filipe, V. Screening autism spectrum disorder in children using a deep learning approach: Evaluating YOLOv8 by comparison with other models. arXiv 2023, arXiv:2306.14300.

- Beary, M.; Hadsell, A.; Messersmith, R.; Hosseini, M. Diagnosis of autism in children using facial analysis and deep learning. arXiv 2020, arXiv:2008.02890.

- Jaiswal, S.; Valstar, M.; Gillott, A.; Daley, D. Automatic detection of ADHD and ASD from expressive behaviour in RGBD data. arXiv 2016, arXiv:1612.02374.

- Alam, S.; Rashid, M.; Roy, R.; Faizabadi, A.; Gupta, K.; Ahsan, M. Empirical study of autism spectrum disorder diagnosis using facial images by improved transfer learning approach. Bioengineering 2022, 9, 710.

- Farhat, T.; Akram, S.; AlSagri, H.; Ali, Z.; Ahmad, A.; Jaffar, A. Facial image–based autism detection: A comparative study of deep neural network classifiers. Comput. Mater. Contin. 2024, 78, 105–126.

- Almars, A.; Badawy, M.; Elhosseini, M. ASD2-TL* GTO: Autism spectrum disorders detection via transfer learning with Gorilla Troops Optimizer framework. Heliyon 2023, 9, e21530.

- Vasant, R.; Mishra, S.; Kamini, S.; Kotecha, K. Attention-focused eye gaze analysis to predict autistic traits using transfer learning. Int. J. Comput. Intell. Syst. 2024, 17, 120.

- Klin, A.; Lin, D.J.; Gorrindo, P.; Ramsay, G.; Jones, W. Two-year-olds with autism orient to non-social contingencies rather than biological motion. Nature 2009, 459, 257–261.

- Frazier, T.W.; Klingemier, E.W.; Parikh, S.; Speer, L.; Strauss, M.S.; Eng, C. Development and validation of objective and quantitative eye tracking-based measures of autism risk and symptom levels. J. Am. Acad. Child Adolesc. Psychiatry 2018, 57, 858–866.

- Fang, Y.; Zhang, H.; Zuo, Y.; Jiang, W.; Huang, H.; Yan, J. Visual attention prediction for autism spectrum disorder with hierarchical semantic fusion. Signal Process. Image Commun. 2021, 93, 116186.

- Chang, Z.; Di Martino, J.M.; Aiello, R.; Baker, J.; Carpenter, K.; Compton, S.; Davis, N.; Eichner, B.; Espinosa, S.; Flowers, J.; et al. Computational methods to measure patterns of gaze in toddlers with autism spectrum disorder. JAMA Pediatr. 2021, 175, 827–836.

- Pierce, K.; Wen, T.H.; Zahiri, J.; Andreason, C.; Courchesne, E.; Barnes, C.C.; Lopez, L.; Arias, S.J.; Esquivel, A.; Cheng, A. Level of attention to motherese speech as an early marker of autism spectrum disorder. JAMA Netw. Open 2023, 6, e2255125.

- Keehn, B.; Monahan, P.; Enneking, B.; Ryan, T.; Swigonski, N.; McNally Keehn, R. Eye-tracking biomarkers and autism diagnosis in primary care. JAMA Netw. Open 2024, 7, e2411190.

- Alcañiz, M.; Chicchi-Giglioli, I.A.; Carrasco-Ribelles, L.A.; Marín-Morales, J.; Minissi, M.E.; Teruel-García, G.; Sirera, M.; Abad, L. Eye gaze as a biomarker in the recognition of autism spectrum disorder using virtual reality and machine learning: A proof of concept for diagnosis. Autism Res. 2022, 15, 131–145.

- Chong, E.; Clark-Whitney, E.; Southerland, A.; Stubbs, E.; Miller, C.; Ajodan, E.; Silverman, M.; Lord, C.; Rozga, A.; Jones, R.; et al. Detection of Eye Contact with Deep Neural Networks Is as Accurate as Human Experts. Nat. Commun. 2020, 11, 2322.

- Zhao, Z.; Tang, H.; Zhang, X.; Qu, X.; Hu, X.; Lu, J. Classification of children with autism and typical development using eye-tracking data from face-to-face conversations: Machine learning model development and performance evaluation. J. Med. Internet Res. 2021, 23, e29328.

- Wei, Q.; Dong, W.; Yu, D.; Wang, K.; Yang, T.; Xiao, Y.; Long, D.; Xiong, H.; Chen, J.; Xu, X.; et al. Early Identification of Autism Spectrum Disorder Based on Machine Learning with Eye-Tracking Data. J. Affect. Disord. 2024, 358, 326–334.

- Cilia, F.; Carette, R.; Elbattah, M.; Dequen, G.; Guérin, J.-L.; Bosche, J.; Vandromme, L.; Le Driant, B. Computer-Aided Screening of Autism Spectrum Disorder: Eye-Tracking Study Using Data Visualization and Deep Learning. JMIR Hum. Factors 2021, 8, e26451.

- Liaqat, S.; Wu, C.; Duggirala, P.R.; Cheung, S.S.; Chuah, C.; Ozonoff, S.; Young, G. Predicting ASD Diagnosis in Children with Synthetic and Image-Based Eye Gaze Data. Signal Process. Image Commun. 2021, 94, 116198.

- Ahmed, I.A.; Senan, E.M.; Rassem, T.H.; Ali, M.A.; Shatnawi, H.S.; Alwazer, S.M.; Alshahrani, M. Eye Tracking-Based Diagnosis and Early Detection of Autism Spectrum Disorder Using Machine Learning and Deep Learning Techniques. Electronics 2022, 11, 530.

- Antolí, A.; Rodriguez-Lozano, F.J.; Cañas, J.J.; Vacas, J.; Cuadrado, F.; Sánchez-Raya, A.; Pérez-Dueñas, C.; Gámez-Granados, J.C. Using explainable machine learning and eye-tracking for diagnosing autism spectrum and developmental language disorders in social attention tasks. Front. Psychiatry 2025, 19, 1558621.

- Jiang, M.; Zhao, Q. Learning visual attention to Identify People with Autism Spectrum Disorder. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 3287–3296.

- Tao, F.; Shyu, M. SP-ASDNet: CNN-LSTM on scanpaths for ASD vs. TD classification. In Proceedings of the IEEE International Conference on Multimedia & Expo Workshops (ICMEW), Shanghai, China, 8–12 July 2019; pp. 306–311.

- Mazumdar, P.; Arru, G.; Battisti, F. Early Detection of Children with Autism Spectrum Disorder Based on Visual Exploration of Images. Signal Process. Image Commun. 2021, 94, 116184.

- Minissi, M.E.; Altozano, A.; Marín-Morales, J.; Chicchi Giglioli, I.A.; Mantovani, F.; Alcañiz, M. Biosignal comparison for autism assessment using machine learning models and virtual reality. Comput. Biol. Med. 2024, 174, 108194.

- Almadhor, A.; Alasiry, A.; Alsubai, S.; AlHejaili, A.; Kovac, U.; Abbas, S. Explainable and secure framework for autism prediction using multimodal eye tracking and kinematic data. Complex Intell. Syst. 2025, 11, 173.

- Alsaidi, M.; Obeid, N.; Al-Madi, N.; Hiary, H.; Aljarah, I. A convolutional deep neural network approach to predict autism spectrum disorder based on eye-tracking scan paths. Information 2024, 15, 133.

- Kanhirakadavath, M.; Chandran, M. Investigation of eye-tracking scan path as a biomarker for autism screening using machine learning algorithms. Diagnostics 2022, 12, 518.

- Sá, R.; Michelassi, G.; Butrico, D.; Franco, F.; Sumiya, F.; Portolese, J.; Brentani, H.; Nunes, F.; Machado-Lima, A. Enhancing ensemble classifiers utilizing gaze tracking data for autism spectrum disorder diagnosis. Comput. Biol. Med. 2024, 182, 109184.

- Ozdemir, S.; Akin-Bulbul, I.; Kok, I.; Ozdemir, S. Development of a visual attention–based decision support system for autism spectrum disorder screening. Int. J. Psychophysiol. 2022, 173, 69–81.

- Varma, M.; Washington, P.; Chrisman, B.; Kline, A.; Leblanc, E.; Paskov, K.; Stockham, N.; Jung, J.; Sun, M.; Wall, D. Identification of social engagement indicators associated with autism spectrum disorder using a game-based mobile app: Comparative study of gaze fixation and visual scanning methods. J. Med. Internet Res. 2022, 24, e31830.

- Thanarajan, T.; Alotaibi, Y.; Rajendran, S.; Nagappan, K. Eye-tracking–based autism spectrum disorder diagnosis using chaotic butterfly optimization with deep learning model. Comput. Mater. Contin. 2023, 76, 1995–2013.

- Islam, F.; Manab, M.; Mondal, J.; Zabeen, S.; Rahman, F.; Hasan, Z.; Sadeque, F.; Noor, J. Involution fused convolution for classifying eye-tracking patterns of children with autism spectrum disorder. Eng. Appl. Artif. Intell. 2025, 139, 109475.

- Solovyova, A.; Danylov, S.; Oleksii, S.; Kravchenko, A. Early autism spectrum disorders diagnosis using eye-tracking technology. arXiv 2020, arXiv:2008.09670.

- Fernández, D.; Porras, F.; Gilman, R.; Mondonedo, M.; Sheen, P.; Zimic, M. A convolutional neural network for gaze preference detection: A potential tool for diagnostics of autism spectrum disorder in children. arXiv 2020, arXiv:2007.14432.

- Shi, W.; Zhang, H.; Ding, R.; Zhu, Y.; Wang, W.; Choo, K. Exploring Gaze Pattern Differences Between Autistic and Neurotypical Children: Clustering, Visualisation, and Prediction. arXiv 2025, arXiv:2409.11744.

- Yu, X.; Ruan, M.; Hu, C.; Li, W.; Paul, L.; Li, X.; Wang, S. Video-based analysis reveals atypical social gaze in people with autism spectrum disorder. arXiv 2024, arXiv:2409.00664.

- Minissi, M.E.; Chicchi Giglioli, I.A.; Mantovani, F.; Alcañiz Raya, M. Assessment of the Autism Spectrum Disorder Based on Machine Learning and Social Visual Attention: A Systematic Review. J. Autism Dev. Disord. 2022, 52, 2187–2202.

- Colonnese, F.; Di Luzio, F.; Rosato, A.; Panella, M. Enhancing Autism Detection Through Gaze Analysis Using Eye Tracking Sensors and Data Attribution with Distillation in Deep Neural Networks. Sensors 2024, 24, 7792.

- Shamseddine, H.; Otoum, S.; Mourad, A. A Federated Learning Scheme for Neuro-developmental Disorders: Multi-Aspect ASD Detection. arXiv 2025, arXiv:2211.00643.

- Hassan, I.; Nahid, N.; Islam, M.; Hossain, S.; Schuller, B.; Ahad, A. Automated Autism Assessment with Multimodal Data and Ensemble Learning: A Scalable and Consistent Robot-Enhanced Therapy Framework. IEEE Trans. Neural Syst. Rehabil. Eng. 2025, 33, 1191–1201.

- Wang, H.; Jing, H.; Yang, J. Identifying autism spectrum disorder from multi-modal data with privacy-preserving. npj Ment. Health Res. 2024, 3, 15.

- Toki, E.; Tatsis, G.; Tatsis, A.; Plachouras, K.; Pange, J.; Tsoulos, G. Employing classification techniques on SmartSpeech biometric data towards identification of neurodevelopmental disorders. Signals 2023, 4, 21.

- Rouzbahani, H.; Karimipour, H. Application of Artificial Intelligence in Supporting Healthcare Professionals and Caregivers in Treatment of Autistic Children. arXiv 2024, arXiv:2407.08902.

- Ouss, L.; Palestra, G.; Saint-Georges, C.; Leitgel, M.; Afshar, M.; Pellerin, H.; Bailly, K.; Chetouani, M.; Robel, L.; Golse, B.; et al. Behavior and interaction imaging at 9 months of age predict autism/intellectual disability in high-risk infants with West syndrome. Transl. Psychiatry 2020, 10, 54.

- Pouw, W.; Trujillo, P.; Dixon, A. The quantification of gesture–speech synchrony: A tutorial and validation of multimodal data acquisition using device-based and video-based motion tracking. Behav. Res. Methods 2020, 52, 723–740.

- Vabalas, A.; Gowen, E.; Poliakoff, E.; Casson, A. Applying machine learning to kinematic and eye movement features of a movement imitation task to predict autism diagnosis. Sci. Rep. 2020, 10, 8346.

- Wei, Q.; Xu, X.; Xu, X.; Cheng, Q. Early identification of autism spectrum disorder by multi-instrument fusion: A clinically applicable machine learning approach. Psychiatry Res. 2023, 320, 115050.

- Paolucci, C.; Giorgini, F.; Scheda, R.; Alessi, F.; Diciotti, S. Early prediction of autism spectrum disorders through interaction analysis in home videos and explainable artificial intelligence. Comput. Human Behav. 2023, 148, 107877.

- Deng, A.; Yang, T.; Chen, C.; Chen, Q.; Neely, L.; Oyama, S. Language-assisted deep learning for autistic behaviors recognition. Smart Health 2024, 32, 100444.

- Vidivelli, S.; Padmakumari, P.; Shanthi, P. Multimodal autism detection: Deep hybrid model with improved feature level fusion. Comput. Methods Programs Biomed. 2025, 260, 108492.

- Alam, S.; Rashid, M.; Roy, R.; Faizabadi, A.; Gupta, K.; Ahsan, M. Survey on deep neural networks in speech and vision systems. Neurocomputing 2020, 417, 302–321.

- Drimalla, H.; Scheffer, T.; Landwehr, N.; Baskow, I.; Roepke, S.; Behnia, B.; Dziobek, I. Towards the automatic detection of social biomarkers in autism spectrum disorder: Introducing the simulated interaction task (SIT). npj Digit. Med. 2020, 3, 25.

- Drougkas, G.; Bakker, E.; Spruit, M. Multimodal machine learning for language and speech markers identification in mental health. BMC Med. Inform. Decis. Mak. 2024, 24, 354.

- Natarajan, J.; Bajaj, U.; Shahi, D.; Soni, R.; Anand, T. Speech and gesture analysis: A new approach. Multimed. Tools Appl. 2022, 81, 20763–20779.

- Nag, A.; Haber, N.; Voss, C.; Tamura, S.; Daniels, J.; Ma, J.; Chiang, B.; Ramachandran, S.; Schwartz, J.; Winograd, T.; et al. Toward Continuous Social Phenotyping: Analyzing Gaze Patterns in an Emotion Recognition Task for Children With Autism Through Wearable Smart Glasses. J. Med. Internet Res. 2020, 22, e13810.

| Modality | Articles Included | Common Algorithms | Avg. Accuracy/Performance | Sample Size of the Best Accuracy |
|---|---|---|---|---|
| Voice Biomarkers | 13 | SVM, RF, k-Means | 85–99% | 30 children |
| Linguistic and Content-Level Language Analysis | 8 | BERT, LSTM, Transformer-based NLP, XGBoost | 80–97% | 120 children |
| Conversational/Interactional Dynamics | 6 | HMM, Dialogue models, Graph-based models | 76–90% | 79 children |
| Movement Analysis | 31 | 3DCNN, LSTM, RQA | 90–95% | 118 children |
| Activity Recognition 1 | 17 | SVM, RF, k-Means | 87–93% | 120 children |
| Facial Gesture Analysis 2 | 22 | Vision Transformers, CNNs | 83–96% | 2926 facial images |
| Visual Attention | 36 | Eye-tracking, Attention models, CNN-RNN | 88–97% | 547 facial images (59 children) |
| Multimodal Approaches | 25 | Fusion models, Ensemble deep learning, Transformer hybrids | 70–99% | 44 samples of kinematic and eye movement features |

| Authors | Dataset Size | Age (years) | Data Preprocessing | Feature Extraction | AI Model/Tool |
|---|---|---|---|---|---|
| Mohanta & Mittal (2022) [13] | 33 children | 3–9 | Cleaning, segmentation, normalization | Formants, MFCCs, jitter | GMM, SVM, RF, KNN, PNN (98%) |
| Briend et al. (2023) [10] | 84 children | 8–9 | Voice segmentation | Prosodic, MFCCs, pitch, intensity | SVM, RF, XGBoost (90%) |
| Vacca et al. (2024) [2] | 84 children | 6–12 | Noise reduction, normalization | Acoustic (pitch, formants) | SVM, RF (98.8%) |
| Jayasree & Shia (2021) [14] | 19 children | 5–14 | Denoising, feature scaling | MFCCs, jitter, shimmer | FFNN (87%) |
| Asgari & Chen (2021) [15] | 118 children | 7–15 | Cleaning, segmentation | Pitch, jitter, shimmer, MFCCs, prosody | SVM, RF (86%) |
| Godel et al. (2023) [16] | 74 children | 2–6 | Segmentation, normalization, annotation | Intonation, rhythm, stress | ML classifiers (85%) |
| Keerthana Sai et al. (2024) [12] | 30 children | 3.5–9 | STFT, normalization | STFT spectrograms, pitch | Custom CNN (99.1%) |
| Chi et al. (2023) [22] | 58 children | 3–12 | Cleaning, segmentation | MFCC, spectral, raw audio | RF, wav2vec 2.0, CNN (79%) |
| Guo et al. (2022) [17] | 40 children | 4–9 | Cleaning, tone segmentation | Jitter, shimmer, HNR, MFCCs | RF (78%) |
| Hu et al. (2024) [11] | 44 children | 4–16 | Cleaning, segmentation, normalization | Prosody, pitch, duration, rhythm | SVM, RF, CNN (89%) |

| Authors | Dataset Size | Age (years) | Data Preprocessing | Feature Extraction | AI Model/Tool |
|---|---|---|---|---|---|
| Ochi et al. (2019) [23] | 79 adults (HF-ASD, TD) | 19–36 | Audio segmentation; speaker diarization; pause detection | Turn-gap duration; pause ratio; blockwise intensity synchrony | Statistical modeling; SVM (89.9%) |
| Lehnert-LeHouillier et al. (2020) [24] | 24 children/teens | 9–15 | Segmentation of natural conversation; prosody extraction (Praat) | Prosodic entrainment (F0, intensity, rate) | Linear regression models |
| Lau et al. (2022) [18] | 146 children and adults | 6–35 | Phonetic alignment, segmentation | Intonation, stress, duration | SVM (83%) |
| Wehrle et al. (2023) [25] | 40 adults | 18–45 | Free-dialogue segmentation; pause detection | Turn-timing distributions; gap preferences | Distributional analysis |
| Yang et al. (2023) [27] | 72 children (therapy sessions) | 3–11 | Therapist–child session segmentation; diarization | Turn/response timing; prosodic cues for conversation-quality prediction | Supervised ML and DL |
| Chowdhury et al. (2024) [26] | 29 children (ADOS-style) | 10–15 | Examiner–child dialogue segmentation; prosody + lexical tags | Turn counts; latency; basic prosody | RF (76%) |

| Authors | Dataset Size | Age | Preprocessing | Feature Extraction | AI Model/Tool |

|---|---|---|---|---|---|

| Mukherjee et al. (2023a) [28] | 1200 text samples | 5–16 | Cleaning, tokenization | BERT, GPT-3.5 embeddings | BERT, GPT-3.5, SVM (92%) |

| Rubio-Martín et al. (2024) [29] | 2500 text samples | 6–18 | Normalization, lemmatization | TF-IDF, embeddings, sentiment | SVM, CNN, LSTM (90%) |

| Murugaiyan & Uyyala (2023) [33] | 2000 customer speech (text) | — | Speech-to-text, cleaning | Sentiment, embeddings | Deep CNN + BiLSTM (90%) |

| Deng et al. (2024) [30] | 800 children | 4–14 | Speech-to-text, alignment | Semantics, syntax, behavior cues | Hybrid DL (89%) |

| Angelopoulou et al. (2024) [31] | 600 narrative samples | 5–12 | Transcription, cleaning | Lexical diversity, cohesion | RF, SVM (89%) |

| Ramesh et al. (2023) [34] | 86 children | 1–6 | Cleaning, encoding, SMOTE | Mean Length of Utterance and Turn (MLU, MLT ratio), POS | LR, SVM, KNN, NB, RF (80%) |

| Jaiswal et al. (2024) [35] | 17,323 ASD and 171,273 users | – | Cleaning, tokenization, stemming, lemmatization, encryption | Bag-of-words Term Frequency (TF-IDF), Word2vec | Logistic regression, Bi-LSTM (F1 80%) |

| Themistocleous et al. (2024) [5] | 120 children | 4–10 | Transcription, Random over-sampling, cleaning | Grammatical, semantic, syntactic and text complexity features | Gradient Boosting, Decision trees, Hist GB, XGBoost (97.5%) |
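Two of the simpler language features in the table above, mean length of utterance (MLU) and mean length of turn (MLT), can be approximated from transcripts as shown below. This sketch counts whitespace-separated words, whereas MLU is often computed in morphemes; the function names and input layout are assumptions made for illustration.

```python
def mean_length_of_utterance(utterances):
    """Average number of words per non-empty utterance."""
    lengths = [len(u.split()) for u in utterances if u.strip()]
    return sum(lengths) / len(lengths) if lengths else 0.0


def mean_length_of_turn(turns):
    """Average number of words per turn; each turn is a list of utterances."""
    lengths = [sum(len(u.split()) for u in turn) for turn in turns if turn]
    return sum(lengths) / len(lengths) if lengths else 0.0


# Hypothetical child transcript: two turns, three utterances
child_turns = [["I want juice", "more"], ["the dog is big"]]
child_utterances = [u for turn in child_turns for u in turn]

print(mean_length_of_utterance(child_utterances))
print(mean_length_of_turn(child_turns))
```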

| Authors | Dataset Size | Age | Data Preprocessing | Feature Extraction | AI Model/Tool |

|---|---|---|---|---|---|

| Al-Jubouri et al. (2020) [66] | Kinect 3D gait clips for 118 children | 4–12 years | Gait-cycle isolation; MICE imputation; face blurring; augmentation | 3D kinematics; PCA reduction | MLP (acc. 95%) |

| Li et al. (2023) (MMASD) [65] | Therapy videos (>100 h) for 32 children | 5–12 | Clip segmentation; noisy clip removal; privacy streams | Optical flow; 2D/3D skeletons; clinical scores | Benchmark dataset |

| Zhang et al. (2021) [67] | ASD action videos | 5–10 years | Pose extraction; sequence curation | Skeleton sequences; temporal features | LSTM (acc. 93.55%) |

| Serna-Aguilera et al. (2024) [39] | Video responses to stimuli of 66 subjects | Children | Frame/clip selection; normalization | Frame/flow deep features | CNN (acc. 81.48%) |

| Sadek et al. (2023) (Head-banging) [68] | SSBD + collected videos | 4–12 | Pose estimation; clip curation | Skeleton + movement patterns | NN/CNN (acc. 85.5% to 93%) |

| Sadek et al. (2023) (Arm-flapping) [69] | Recorded videos | Children | Video preprocessing; pose estimation | Skeleton-based stereotypy cues | CNN/LSTM |

| Li et al. (2020) (Postural control) [54] | Lab postural videos for 50 children | 5–12 years | Trial segmentation; normalization | Postural sway/kinematics | Six ML models (Naïve Bayes best, acc. 90%) |

| Wei et al. (2023) [71] | SSBD dataset (61 videos) | 61 subjects | Activity segmentation; normalization | Vision-based activity cues | RGB I3D + MS-TCN (acc. 83%) |

| Ganai et al. (2025) [63] | Multi-site gait data (61 children) | Mean age 4–6 years | Gait trial segmentation; QC | Spatiotemporal gait deviations | SVM/RF/Logit (best acc. 82%) |

| Zhao et al. (2022) (Head movement) [64] | Clinical videos of 43 children | 6–13 years | Head-track preprocessing | Head-movement features | ML (DT/SVM/RF; acc. 92.11%) |

| Doi et al. (2022) (Infant) [47] | 62 mother-infant videos | 4–18 months | Markerless capture; QC | Spontaneous movement metrics | LDA, MLP |

| Bruschetta et al. (2025) (Infant) [43] | 74 infants (home/clinic videos) | 10 days to 24 weeks | Standardized capture; normalization | Markerless infant kinematics | SVM (85%) |

| Authors | Dataset Size | Age | Data Preprocessing | Feature Extraction | AI Model/Tool |

|---|---|---|---|---|---|

| Zhao et al. (2025) [81] | 120 children (ASD/control), AV–FOS audio–video | 3–12 | A/V sync; segmentation; normalization | Multimodal interaction cues (prosody; gesture; facial; engagement) | Transformer-based AV model (acc. 93%) |

| Singh et al. (2024) [73] | 80 children (ASD/control), gesture videos | 4–12 | Video augmentation; YOLOv7 hand detection; frame normalization | Gesture pose/motion; temporal patterns | YOLOv7 + VideoMAE (acc. 92%) |

| Deng et al. (2024) [30] | 200 children (ASD/control), A/V recordings | 3–12 | A/V sync; denoising; segmentation; normalization | Audio: prosody, MFCC; Visual: pose, gesture, facial | Multimodal DL (CNN video, RNN audio, fusion) (acc. 91%) |

| Liu & He (2021) [83] | 3000+ samples, multimodal | 2–16 | Data fusion; normalization; feature selection | Behavioral; imaging; genetic; clinical | Ensemble (SVM; RF; DNN) (acc. 91%) |

| Asmetha & Senthilkumar (2025) [75] | 80 children, stereotypy videos | 4–14 | Video segmentation; augmentation; normalization | Spatiotemporal stereotypy features | Dual self-supervised Video Transformer (acc. 91%) |

| Alam et al. (2023) [72] | 800 meltdown episodes | 5–16 | A/V segmentation; normalization; augmentation | Audio; video; physiological meltdown cues | CNN–LSTM hybrid (acc. 90%) |

| Li et al. (2024) [82] | 120 dyads, block-play protocol (video) | 2–6 | Segmentation; pose estimation; normalization | Dyadic interaction features | 2sGCN–AxLSTM hybrid (acc. 89%) |

| Jayaprakash & Kanimozhiselvi (2024) [77] | 182 clinical/behavioral records | 2–12 | Cleaning; normalization; encoding | Clinical/behavioral items | Multinomial logistic regression (acc. 88%) |

| Jin et al. (2023) [46] | 80 children (ADOS videos) | 3–8 | Video segmentation; pose estimation; normalization | Activity level metrics | SVM; RF; Gradient Boosting (RF best acc. 87%) |

| Lakkapragada et al. (2022) [74] | 50 children, hand-movement videos | 4–10 | Video augmentation; hand extraction; normalization | Abnormal hand movement metrics | RF; SVM (best acc. 87%) |

| Authors | Dataset Size | Age | Data Preprocessing | Feature Extraction | AI Model/Tool |

|---|---|---|---|---|---|

| Ibadi et al. (2025) [4] | 2926 facial images | Children | Normalization, augmentation | Static features by using Vision Transformer (ViT) model and Squeeze-and-Excitation (SE) blocks | Xception, ResNet50, VGG-19, MobileNetV3, EfficientNet-B4, ASDvit model (AUC 97.7%) |

| Beno et al. (2025) [84] | 2726 facial images | 2–14 years | Normalization with magnitude stretching, augmentation | Static facial features by using DenseNet and ResNet architectures | DenseResNet model (AUC 97.07%) |

| Ruan et al. (2024) [86] | 42 ADOS-2 interview videos | 16–37 years | Frame extraction, Face detection, Motion magnification | Micro-expression features by using SOFTNet and Micron-BERT model | MLP, SVM, ResNet (acc. 94.8%) |

| Almars et al. (2023) [114] | 1100 facial images | — | Face detection, normalization, augmentation | Deep features via transfer learning | CNN with Gorilla Troops Optimizer (acc. 94.2%) |

| Shahzad et al. (2024) [85] | 2000 facial images | 3–16 years | Face detection, normalization, augmentation | Landmarks, emotion, attention maps | Hybrid attention deep model (acc. 94%) |

| Farhat et al. (2024) [113] | 1200 facial images | 3–15 years | Face detection, normalization, augmentation | Deep CNN embeddings | CNN, ResNet, VGG (best acc. 93%) |

| Gaddala et al. (2023) [92] | 2000 facial images | 4–16 years | Face detection, normalization, augmentation | Deep facial conv features | Deep CNN (acc. 93%) |

| Gautam et al. (2023) [109] | 1000 facial images | 3–14 years | Face detection, normalization, augmentation | Landmarks, geometric, texture | YOLOv8 vs. CNN/SVM (YOLOv8 best acc. 93%) |

| Akter et al. (2021) [99] | 2000 facial images | 3–14 years | Face detection, normalization | Deep facial embeddings | Transfer learning (VGG, ResNet, best acc. 93%) |

| ElMouatasim & Ikermane (2023) [91] | 1200 facial images | 3–15 years | Face cropping, normalization | Deep CNN embeddings | ResNet, VGG (best acc. 92%) |

| Authors | Dataset Size | Age | Data Preprocessing | Feature Extraction | AI Model/Tool |

|---|---|---|---|---|---|

| Pierce et al. (2023) [120] | toddlers | 12–48 months | ROI segmentation; task standardization; calibration QC | % gaze to motherese vs. geometric patterns | Threshold/ML rule |

| Chang et al. (2021) [119] | (ASD = 40, TD = 936, DDLD = 17) | 16–30 months | Mobile eye-tracking calibration; synchronization | Social vs. nonsocial fixation; speech–gaze coordination | ML classifiers (AUC ≈ 0.88–0.90) |

| Fang et al. (2021) [118] | Saliency4ASD (300 images for training, 200 for benchmark) | 5–12 years | Images expanded via rotation and flipping; Pre-training on other datasets and fine-tuning | Spatial Feature Module (SFM) with FCN; Pseudo Sequential Feature Module (PSFM) with two ConvLSTMs | Two-stream model (ASD-HSF) |

| Xie et al. (2022) [3] | (20 ASD, 19 TD) | mean age ∼31 years | Fixation maps; Gaussian smoothing; normalization; augmentation | Two-stream integration of images and fixation maps; discriminative feature analysis | VGG-16 two-stream CNN (ASDNet); acc. up to 0.95 (LOO CV) |

| Chong et al. (2020) [123] | (ASD = 66, TD = 55; 18 to 60 mo; plus 15 ASD, 5–13 y) | 18 months–13 years | Egocentric video capture; stabilization; alignment | Eye-contact episode detection | CNN (ResNet-50; wearable PoV) |

| Zhao et al. (2021) [124] | (ASD = 19, TD = 20) | — | Eye-tracking (face-to-face conversation) | Fixation time on AOIs (eyes, mouth, face, body); session length; visual attention during structured conversation | SVM, LDA, DT, RF (SVM best, 92.3%) |

| Alcañiz et al. (2022) [122] | (ASD = 35, TD = 20) | 4–7 years | VR eye-tracker calibration; AOI feature extraction | VR-based fixation metrics (social vs. nonsocial; faces vs. bodies) | ML classifiers (SVM, RF, kNN, NB, XGBoost) |

| Minissi et al. (2024) [133] | (ASD = 39, TD = 42) | 3–7 years | VR calibration; biosignal recording (CAVE) | Motor skills, eye movements, behavioral responses | Linear SVM (RFE, nested CV) |

| Wei et al. (2024) [125] | (ASD = 290, TD = 239) | 1.5–6 years | Eye-tracking paradigms (social + non-social tasks; calibration; Z-score normalization) | Fixation, saccade, pursuit, joint attention features | RF (best), SVM/LR/ANN/XGB (comparisons) |

| Cilia et al. (2021) [126] | (ASD = 29, TD = 30) | mean age ∼7–8 years | Scanpath visualization; data augmentation | Eye-tracking scanpath images with dynamics | CNN (4 conv + pooling, 2 FC) |

| Ahmed et al. (2022) [128] | (ASD = 29, TD = 30) | Children | Image enhancement (average + Laplacian filters); ROI segmentation | LBP + GLCM features; deep feature maps | ANN/FFNN; GoogLeNet; ResNet-18; CNN+SVM hybrids |

| Liaqat et al. (2021) [127] | (ASD = 14, TD = 14) | 6–12 years | Scanpath normalization; statistical features | Synthetic saccades + fixation maps | MLP/BrMLP, CNN, LSTM |

| Keehn et al. (2024) [121] | (146 usable) | Children and toddlers | AOI-based calibration QC; blink removal; interpolation; artifact filtering | Multiple eye-tracking biomarkers (GeoPref, PLR, gap-overlap, fixation metrics) | Composite biomarker + logistic regression |

| Antolí et al. (2025) [129] | (ASD = 24, DLD = 25, TD = 44) | 32–74 months | AOI-based preprocessing; eye-tracking metrics extraction | Social vs. non-social fixation features | Naive Bayes, LMT (XAI) |

| Jiang & Zhao (2017) [130] | (20 ASD, 19 TD) | Adults | Eye-tracking with natural-scene free viewing; Fisher-score image selection | DoF maps + DNN features (VGG-16) | DNN + SVM |

| Almadhor et al. (2025) [134] | multimodal | Children | Multimodal calibration; sync | Gaze + kinematic features | Explainable ML (XAI) |

| Tao & Shyu (2019) [131] | Saliency4ASD (300 images; 14 ASD + 14 TD) | Children | Saliency map patch extraction; duration integration | Scanpath-based saliency patches + durations | CNN–LSTM |

| Mazumdar et al. (2021) [132] | Saliency4ASD (28 children) | Children | Object/non-object detection; fixation | Visual behavior features | Ensemble (TreeBagger) |

| Vasant et al. (2024) [115] | (ASD = 26, TD = 27) | 3–6 years | Tablet-based calibration; preprocessing of gaze trajectories | Saliency maps + trajectory-based features | Transfer learning CNN models |

| Alsaidi et al. (2024) [135] | Public dataset (59 children; 547 images) | Children (∼8 years) | Image resizing; grayscale conversion; dimensionality reduction | Eye-tracking scan path images | CNN (T-CNN-ASD) |

| Sá et al. (2024) [137] | — | 3–18 y (Ch./Adol.) | Joint-attention paradigm; floating ROI + resampling | Anticipation + gaze fixation features | Heterogeneous stacking ensemble (F1 = 95.5%) |

| Ozdemir et al. (2022) [138] | (ASD = 61, TD = 72) | 26–36 mo | Eye-tracking preprocessing; feature selection (ReliefF, IG, Wrapper) | Fixation/dwell/diversion duration features (social vs. non-social AOIs) | ML classifiers (DT, NB, RF, SVM) for DSS |

| Varma et al. (2022) [139] | (ASD = 68, NT = 27) | 2–15 y | Automated gaze annotation; AOI discretization | Gaze fixation + visual scanning patterns | LSTM (mild predictive power) |

| Thanarajan et al. (2023) [140] | (ASD = 219, TD = 328) | Ch. | U-Net segmentation; CBO-based hyperparameter tuning | Inception v3 features + LSTM | DL framework (ETASD-CBODL) |

| Islam et al. (2025) [141] | Public datasets (TD = 628 images, ASD = 519 images) | Ch. | Data augmentation; fixation map preprocessing | Hybrid involution–convolution gaze features | Lightweight deep model (3 Involution + 3 Convolution layers) |

| Solovyova et al. (2020) [142] | (ASD = 50, TD = 50) | Ch. (3–10 y) | Calibration; Gaussian noise simulation | Time distribution across face ROIs (eyes, mouth, periphery) | Fully connected NN classifier |

| Fernández et al. (2020) [143] | (ASD = 8, TD = 23) | 2–6 y | Face/eye detection; gaze labelling; augmentation | Gaze direction (social vs. abstract vs. undetermined) | CNN (LeNet-5) |

| Shi et al. (2025) [144] | Qiao (74: 50 ASD, 24 TD, 5 y), Cilia (59: 29 ASD, 30 TD, 3–12 y), Saliency4ASD (28: 14 ASD, 14 TD, 5–12 y) | Ch. | Invalid gaze removal; clustering with KMeans, KMedoids, AC, BIRCH, DBSCAN, OPTICS, GMM | Internal cluster validity indices (SC, CH, DB, newDB, DI, CSL, GD33, PBM, STR) | ML models (LR, SVM, KNN, Decision Tree, RF, XGBoost, MLP) for ASD prediction (AUC up to 0.834) |

| Yu et al. (2024) [145] | (ASD = 43, TD = 9) | Ad. (age 18–28 y) | Video preprocessing; speech–visual sync | Gaze engagement, variance, density, diversion | Random Forest (video-based features) |
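Many of the eye-tracking studies in the table above rely on the share of fixation time spent in social versus non-social areas of interest (AOIs). The sketch below shows one way such a feature could be computed, assuming fixations are given as screen coordinates with durations and AOIs as axis-aligned rectangles; the `Fixation` and `AOI` types and the coordinate values are hypothetical.

```python
from typing import NamedTuple


class Fixation(NamedTuple):
    x: float
    y: float
    duration_ms: float


class AOI(NamedTuple):
    name: str
    left: float
    top: float
    right: float
    bottom: float


def dwell_proportions(fixations, aois):
    """Share of total fixation time falling inside each AOI (first match wins)."""
    total = sum(f.duration_ms for f in fixations)
    dwell = {a.name: 0.0 for a in aois}
    if total == 0:
        return dwell
    for f in fixations:
        for a in aois:
            if a.left <= f.x <= a.right and a.top <= f.y <= a.bottom:
                dwell[a.name] += f.duration_ms
                break  # assign each fixation to at most one AOI
    return {name: ms / total for name, ms in dwell.items()}


# Hypothetical trial: two AOIs and three fixations (one outside both AOIs)
aois = [AOI("social", 0, 0, 400, 400), AOI("nonsocial", 401, 0, 800, 400)]
fixations = [Fixation(100, 100, 300), Fixation(500, 100, 100), Fixation(900, 900, 100)]
print(dwell_proportions(fixations, aois))
```

The resulting proportions can then be passed as features to any of the classifiers listed in the table, such as an SVM or random forest.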