Improving Gait Analysis Techniques with Markerless Pose Estimation Based on Smartphone Location

Abstract

1. Introduction

2. Methods

2.1. Participants

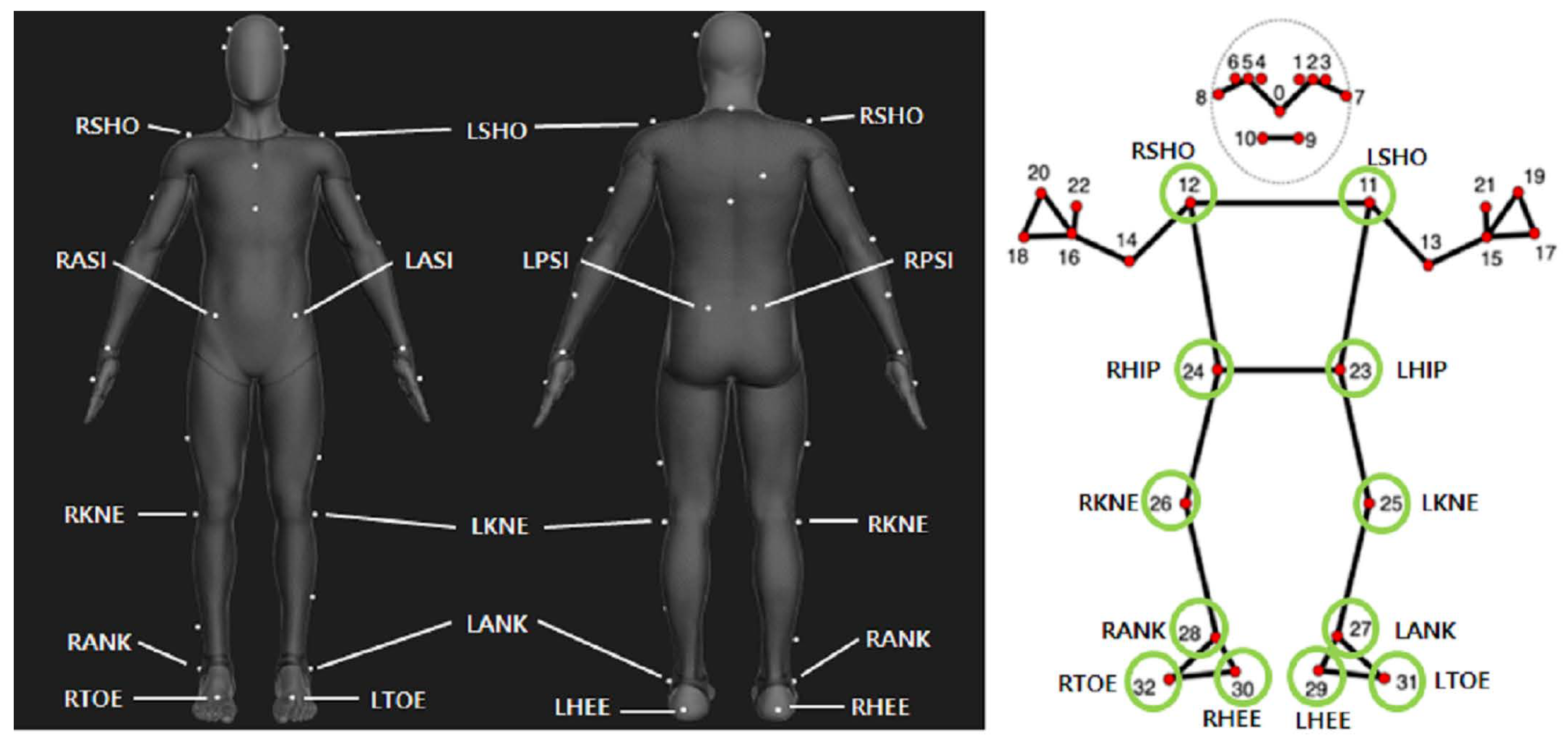

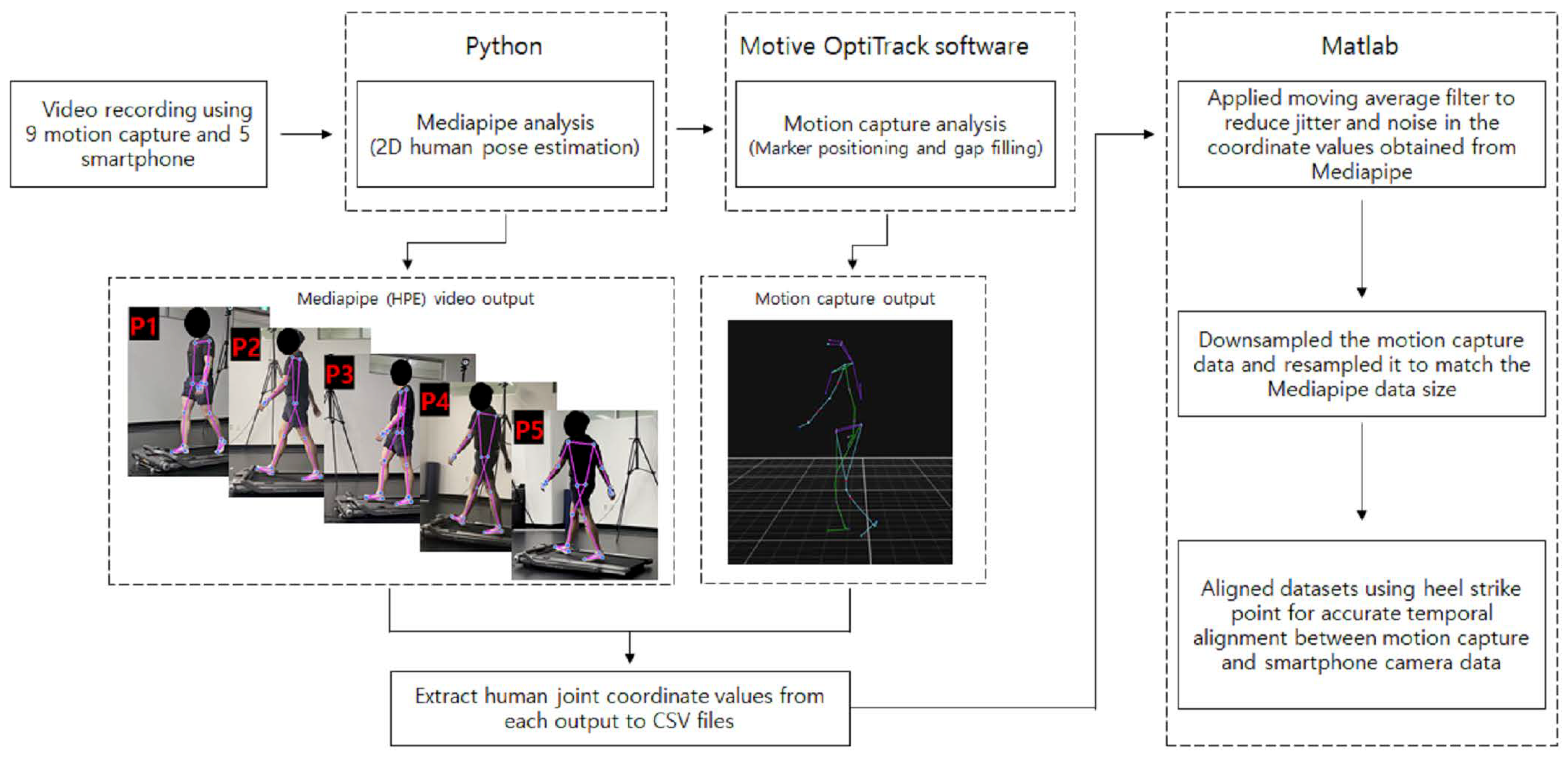

2.2. Data Collection

2.3. Human Pose Estimation (HPE)

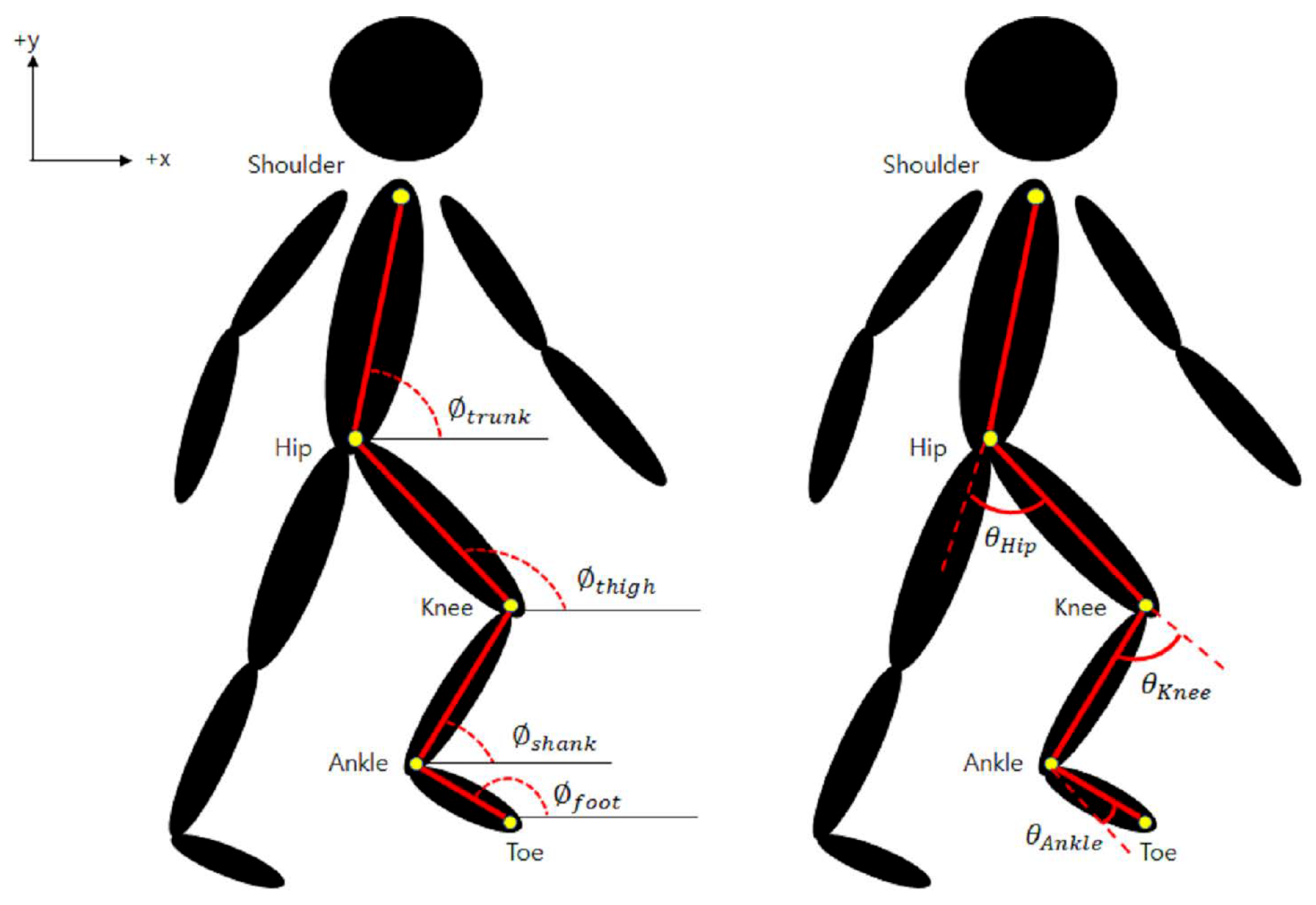

2.4. Data Processing

2.5. Error Calculation and Statistics

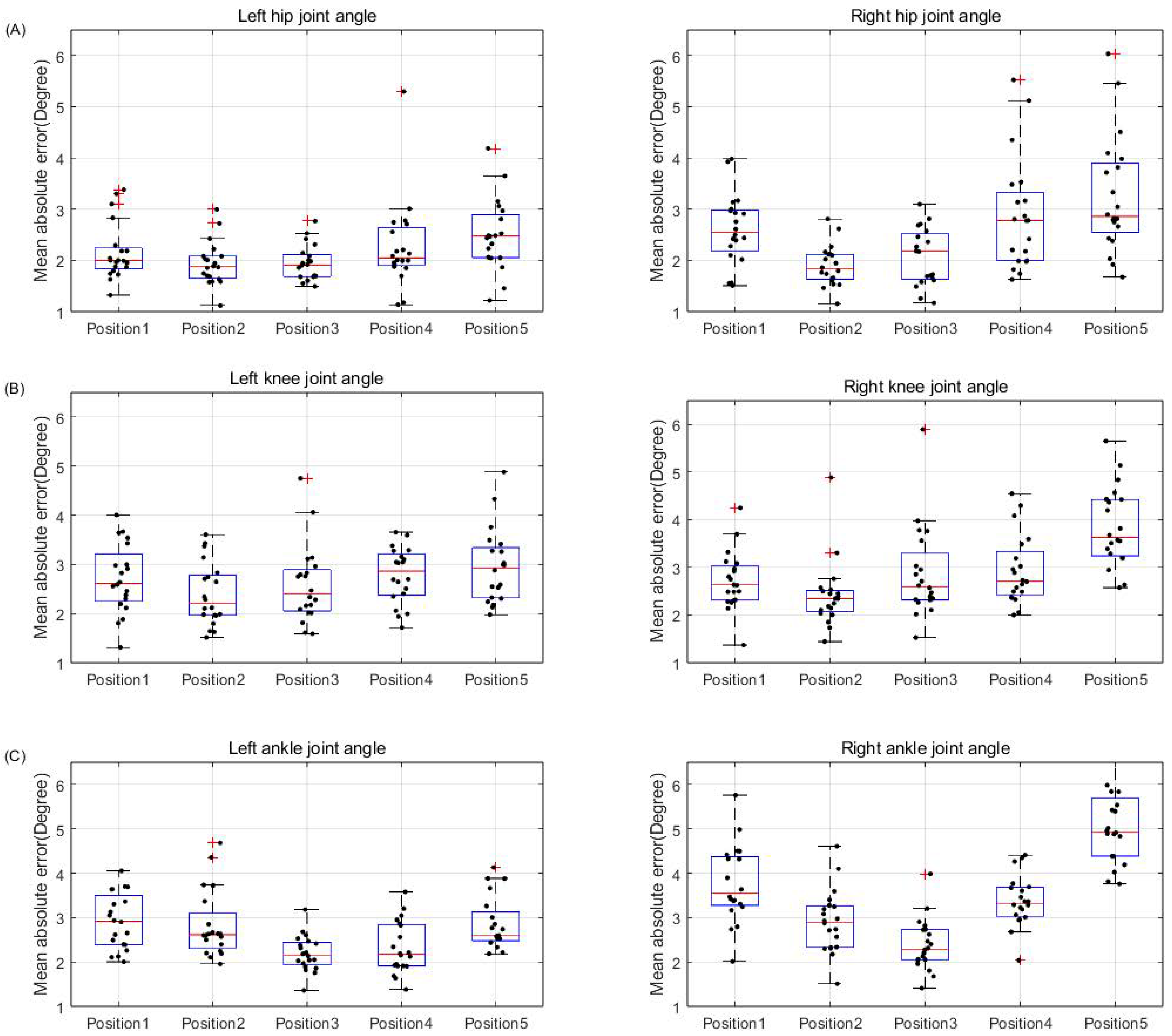

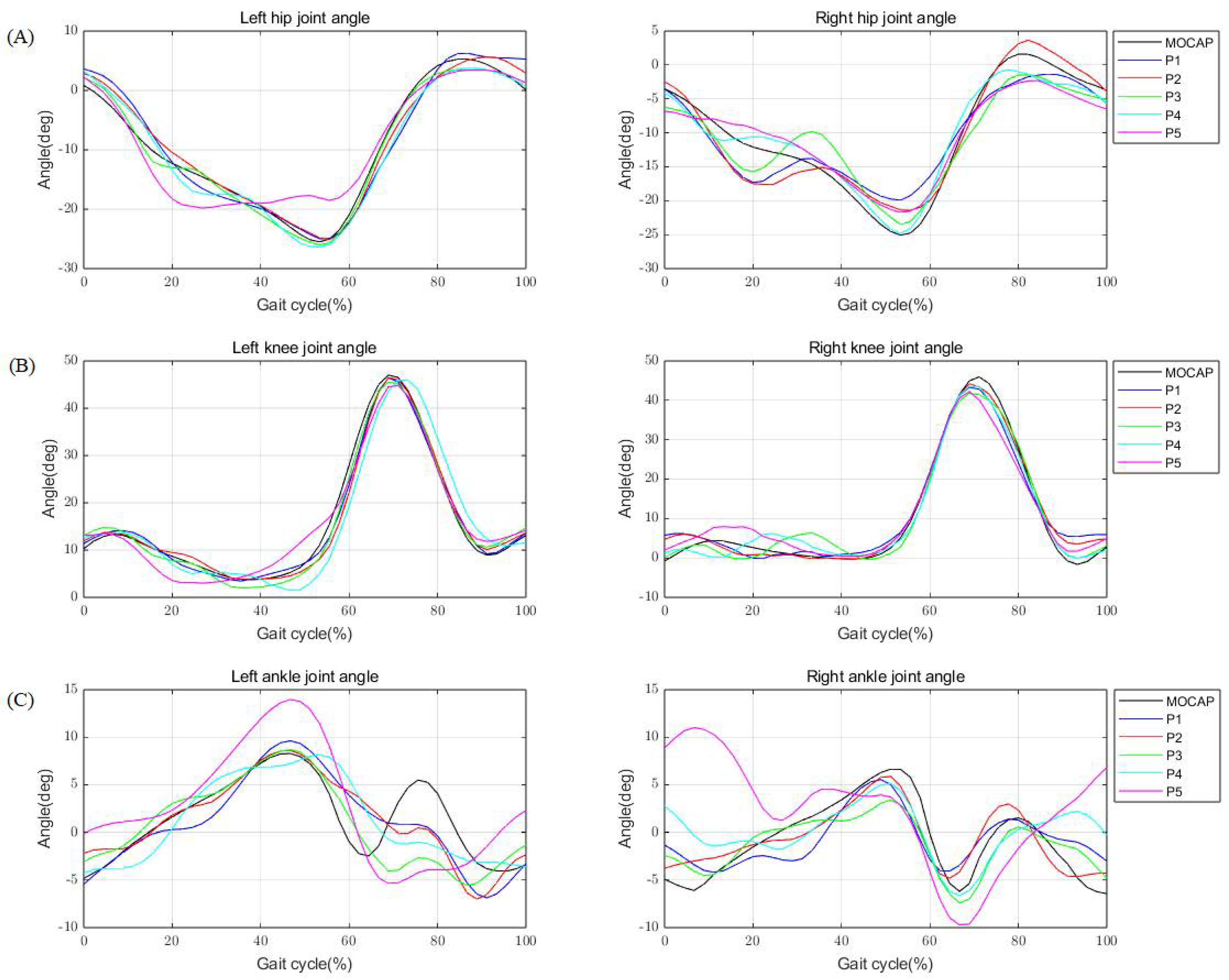

3. Results

4. Discussion

5. Limitations

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Joint Angle | Side | Number of Subjects | P1 (Mean ± SD) | P2 (Mean ± SD) | P3 (Mean ± SD) | P4 (Mean ± SD) | P5 (Mean ± SD) | p |
|---|---|---|---|---|---|---|---|---|
| Hip (°) | Left | 20 | 2.16 ± 0.56 | 1.94 ± 0.42 | 1.95 ± 0.34 | 2.26 ± 0.86 | 2.51 ± 0.69 | 0.018 |
| Hip (°) | Right | 20 | 2.61 ± 0.69 | 1.9 ± 0.4 | 2.07 ± 0.55 | 2.87 ± 1.09 | 3.26 ± 1.14 | <0.001 |
| Knee (°) | Left | 20 | 2.71 ± 0.7 | 2.39 ± 0.64 | 2.58 ± 0.79 | 2.78 ± 0.57 | 2.96 ± 0.76 | 0.042 |
| Knee (°) | Right | 20 | 2.72 ± 0.61 | 2.41 ± 0.7 | 2.87 ± 0.96 | 2.94 ± 0.72 | 3.85 ± 0.83 | <0.001 |
| Ankle (°) | Left | 20 | 2.92 ± 0.62 | 2.83 ± 0.75 | 2.19 ± 0.4 | 2.32 ± 0.59 | 2.85 ± 0.59 | <0.001 |
| Ankle (°) | Right | 20 | 3.74 ± 0.86 | 2.91 ± 0.71 | 2.39 ± 0.58 | 3.38 ± 0.57 | 5.09 ± 0.89 | <0.001 |

| Joint Angle | Side | Number of Subjects | P1 (Mean ± SD) | P2 (Mean ± SD) | P3 (Mean ± SD) | P4 (Mean ± SD) | P5 (Mean ± SD) |
|---|---|---|---|---|---|---|---|
| Hip | Left | 20 | 0.96 ± 0.017 | 0.97 ± 0.014 | 0.97 ± 0.023 | 0.94 ± 0.077 | 0.94 ± 0.044 |
| Hip | Right | 20 | 0.95 ± 0.03 | 0.96 ± 0.016 | 0.96 ± 0.026 | 0.91 ± 0.114 | 0.92 ± 0.04 |
| Knee | Left | 20 | 0.98 ± 0.014 | 0.98 ± 0.017 | 0.98 ± 0.018 | 0.98 ± 0.011 | 0.98 ± 0.011 |
| Knee | Right | 20 | 0.98 ± 0.01 | 0.98 ± 0.012 | 0.97 ± 0.025 | 0.97 ± 0.02 | 0.96 ± 0.021 |
| Ankle | Left | 20 | 0.73 ± 0.124 | 0.75 ± 0.113 | 0.84 ± 0.074 | 0.84 ± 0.095 | 0.81 ± 0.124 |
| Ankle | Right | 20 | 0.53 ± 0.18 | 0.7 ± 0.194 | 0.83 ± 0.087 | 0.67 ± 0.1 | 0.45 ± 0.149 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yang, J.; Park, K. Improving Gait Analysis Techniques with Markerless Pose Estimation Based on Smartphone Location. Bioengineering 2024, 11, 141. https://doi.org/10.3390/bioengineering11020141