1. Introduction

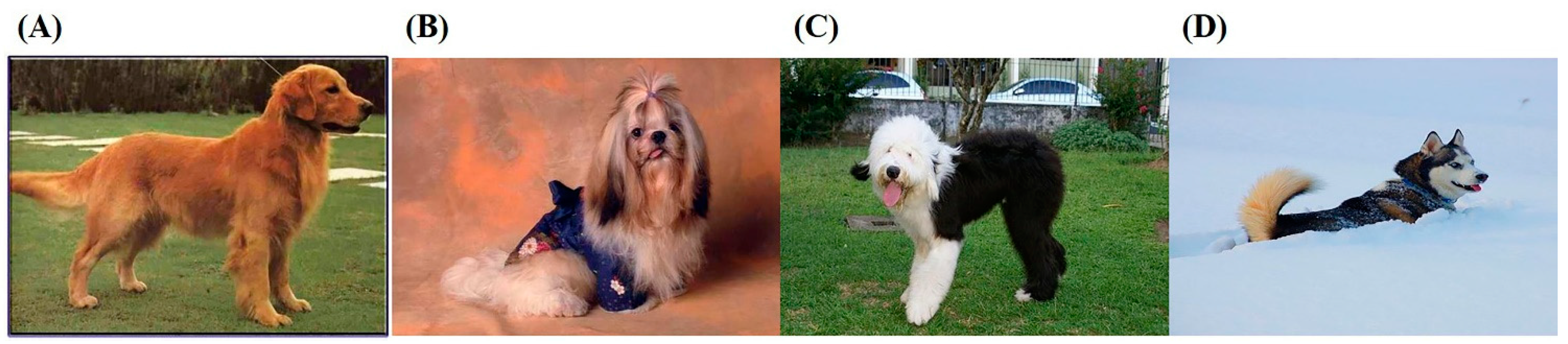

Dogs were among the earliest domesticated animals and exhibit a wide diversity of phenotypes. Currently, there are more than 400 dog breeds worldwide [1], 283 of which are registered with the American Kennel Club (AKC; https://www.akc.org (accessed on 22 October 2024)). Accurate classification of dog breeds is crucial in various fields, including veterinary diagnosis, genetic disease research and pet care [2]. However, classification is becoming increasingly difficult because of the diversity of and similarities among dog breeds [3,4], and it relies heavily on expert experience. Therefore, identifying dog breeds easily, accurately and cost-effectively is a fascinating challenge for dog breeders, managers and fanciers.

To address the challenge of dog image identification, several methods have been proposed, which can be categorized into three main groups.

The first group consists of machine learning methods that focus on geometric features. These methods extract geometric features from dog face images and classify them using machine learning techniques such as principal component analysis (PCA) [5,6,7]. However, some of these methods were tested on a limited number of dog breeds (35 breeds) [5,7], while another covered more breeds but achieved insufficient accuracy (67% for 133 breeds) [6]. PCA is a dimensionality reduction technique that highlights patterns in high-dimensional data by projecting them onto the directions of greatest variance. Its effectiveness rests on the assumption that the data can be embedded in a globally linear, or approximately linear, low-dimensional space. Moreover, PCA considers only the total variance of the explanatory variables, which does not fully reflect the amount of information, so the classification information in the original data is not fully utilized. The compressed data may even be detrimental to pattern classification.
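The limitation described above can be illustrated with a minimal sketch in Python with NumPy. The toy data set and the helper name `pca_fit` are assumptions made for illustration only and are not taken from the cited studies. Two classes are spread widely along the first axis but differ only along the second, low-variance axis, so the first principal component carries almost all of the variance and none of the class information.

```python
import numpy as np

def pca_fit(X, n_components):
    """Return the mean, the top principal directions and all explained variances."""
    mean = X.mean(axis=0)
    Xc = X - mean
    # Rows of Vt are principal directions, ordered by decreasing singular value.
    _, s, Vt = np.linalg.svd(Xc, full_matrices=False)
    explained_variance = s ** 2 / (X.shape[0] - 1)
    return mean, Vt[:n_components], explained_variance

# Hypothetical toy data: both classes share the same x values (high variance)
# and differ only in y (low variance).
xs = np.array([-4.0, -2.0, 0.0, 2.0, 4.0])
class0 = np.column_stack([xs, np.full(5, -0.5)])
class1 = np.column_stack([xs, np.full(5, 0.5)])
X = np.vstack([class0, class1])
y = np.array([0] * 5 + [1] * 5)

mean, components, variances = pca_fit(X, n_components=2)
pc1_scores = (X - mean) @ components[0]  # direction of greatest variance
pc2_scores = (X - mean) @ components[1]  # direction of least variance

print("explained variance per component:", variances)
print("PC1 scores, class 0:", np.sort(pc1_scores[y == 0]))
print("PC1 scores, class 1:", np.sort(pc1_scores[y == 1]))
print("PC2 scores, class 0:", pc2_scores[y == 0])
print("PC2 scores, class 1:", pc2_scores[y == 1])
```

In this constructed case, keeping only the first component makes the two classes indistinguishable, whereas the discarded second component separates them perfectly. Real image features are rarely this extreme, but the example shows why variance alone is not a reliable proxy for discriminative information.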

The second group is based on convolutional neural networks (CNNs) [8,9,10,11,12,13]. These studies typically apply a single CNN model to the Stanford Dog Dataset, with most achieving accuracy rates of up to 80%. For instance, VGGNet increases network depth by stacking multiple 3 × 3 convolutional filters while reducing the number of model parameters. The Network in Network (NIN) model combines multilayer perceptrons (MLPs) with convolution, using more complex micro neural network structures in place of traditional convolutional layers. These models extract high-level semantic information to improve classification performance but may overlook contextual information around convolution and pooling kernels, leading to the loss of some feature information.
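The statement that stacked 3 × 3 filters add depth while reducing parameters can be checked with simple arithmetic. The sketch below, in plain Python, compares three stacked 3 × 3 convolutions with a single 7 × 7 convolution covering the same receptive field. The channel width of 256 and the function names are illustrative assumptions, and biases are omitted for simplicity.

```python
def conv_params(kernel_size, in_channels, out_channels, bias=False):
    """Number of learnable parameters in one 2D convolutional layer."""
    weights = kernel_size * kernel_size * in_channels * out_channels
    return weights + (out_channels if bias else 0)

def stacked_receptive_field(kernel_size, num_layers):
    """Receptive field of stacked stride-1 convolutions with equal kernel size."""
    return 1 + num_layers * (kernel_size - 1)

C = 256  # assumed channel width, identical for input and output

three_3x3 = 3 * conv_params(3, C, C)  # 3 * 9 * C^2 = 27 * C^2
one_7x7 = conv_params(7, C, C)        # 49 * C^2

print("receptive field of three 3x3 layers:", stacked_receptive_field(3, 3))
print("parameters, three 3x3 layers:", three_3x3)
print("parameters, one 7x7 layer:", one_7x7)
print("ratio:", three_3x3 / one_7x7)
```

Under these assumptions, the stacked design covers a 7 × 7 receptive field with 27C² weights instead of 49C², while also inserting two additional non-linearities between the layers.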

The third group combines CNNs with machine learning. Several studies that focus on improving CNN models [14,15,16,17] have delivered better accuracy rates (up to 90%) than single CNN models. However, classification accuracy is still hindered by the diversity of dog breeds. When applied to birds, cats and sheep, such models have achieved classification accuracies of 95% [18,19], 80% [20,21,22] and 85% [23,24,25], respectively. Notably, combining a CNN with a support vector machine (SVM) for flower identification achieved a satisfactory accuracy of 97% [26,27]. Whether similar improvements can be achieved in dog image classification remains to be explored.

To address this issue, we comprehensively investigated the integration of multiple CNNs with machine learning methods, with the aim of improving dog image classification accuracy. Additionally, the model incorporates dimensionality reduction and feature selection to optimize the extraction and fusion of image features. The experimental results demonstrate that our proposed model outperforms existing methods and achieves better identification efficiency.

The key contributions of this paper are as follows: (1) we propose a new method that combines CNN models with a machine learning model for dog image classification; (2) we increase the accuracy of dog image classification to over 95%; and (3) we use transfer learning in the CNN training process, which improves the efficiency and accuracy of this task. The rest of this paper is structured as follows: Section 2 introduces the materials and methods, including data sources, data preprocessing and model architecture; Section 3 introduces the experimental setup, including parameter details; Section 4 analyzes the experimental results and discusses the selection of model combinations; and finally, Section 5 discusses the findings and limitations of the study, concludes the research and explores its significance and potential applications in related fields.

5. Discussion

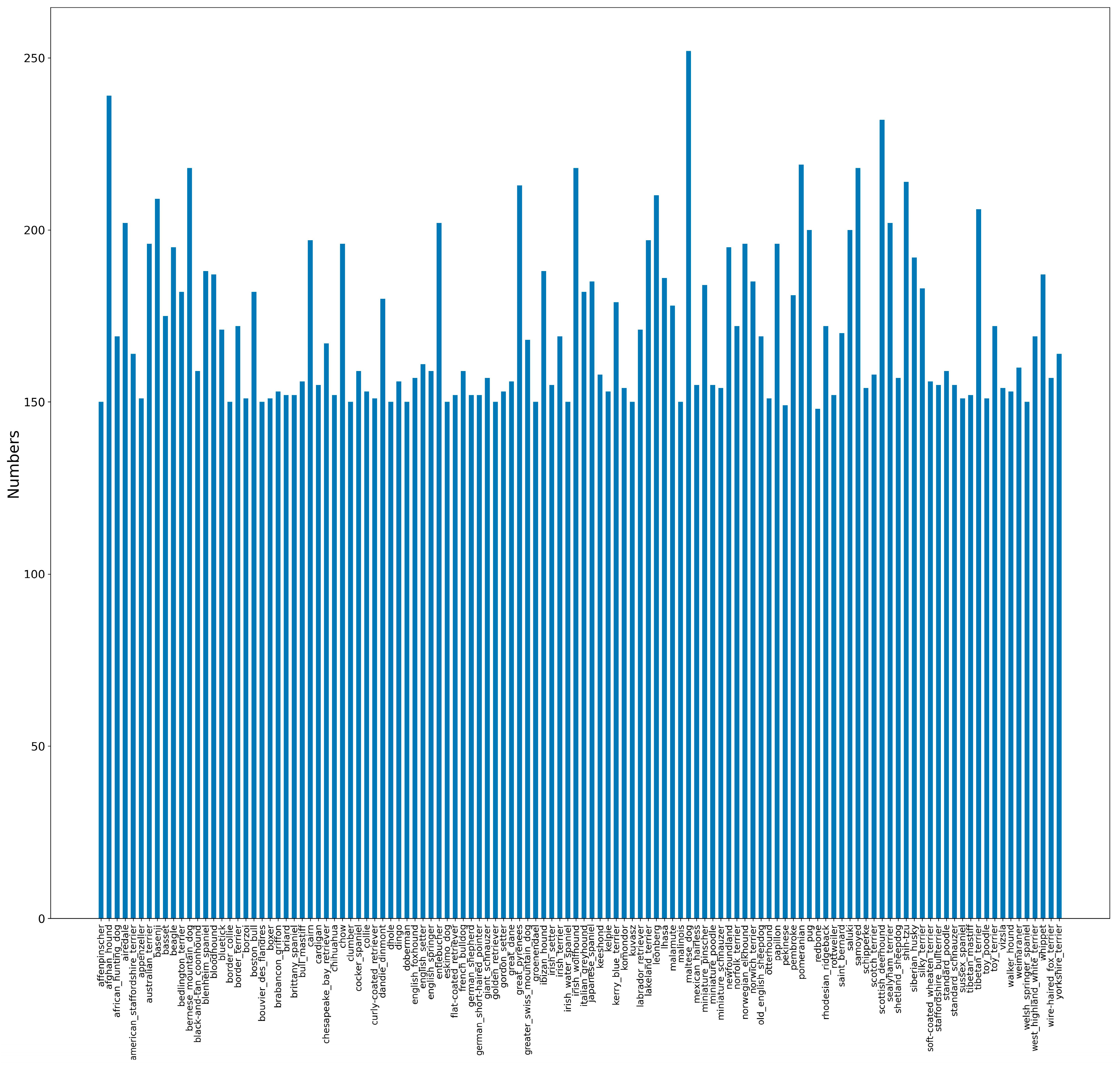

The classification of dog breed images is a type of fine-grained image classification. The proposed model has the advantages of comprehensive feature coverage, high-quality feature output and fully automatic implementation. A distinguishing feature of this study is that we proposed a comprehensive multi-CNN model architecture and experimentally examined the factors that affect accuracy, including model combination, feature size and classification method. We achieved an accuracy of 95.24%, which is better than that of other reported deep learning methods on the same Stanford Dog Dataset of 120 breeds, regardless of the hyperparameters used (Table 6). Three improvements contribute to this result: (1) Compared with existing methods that use only a single CNN model as the backbone, we combined four CNN models and concatenated the extracted features. Our results show that the fusion of high-performance CNN models achieves higher accuracy than a single CNN model. (2) Existing methods directly use the extracted features for classification, whereas we use two feature selection methods, PCA and GWO, to obtain improved features. Our results show that PCA combined with GWO improves accuracy more than PCA or GWO alone. (3) Most existing methods directly use the SoftMax function to classify dog breeds, whereas we use an SVM. Our results show that the combination of CNNs and an SVM can improve accuracy. Taken together, these results show the feasibility and effectiveness of our proposed model. Meanwhile, the number of images may have no direct effect on breed accuracy (Figure 2).
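For readers less familiar with GWO (taken here to be the grey wolf optimizer), the following minimal Python sketch shows the canonical continuous update rule, in which each candidate solution moves toward the average of positions suggested by the three best solutions found so far. It is an illustration under stated assumptions and not the configuration used in this study: the toy objective `sphere`, the function name `grey_wolf_optimizer`, and all parameter values are hypothetical. A feature selection variant would typically convert positions into a binary feature mask and use validation accuracy as the fitness.

```python
import random

def sphere(x):
    """Toy objective (assumption for illustration): minimum of 0 at the origin."""
    return sum(v * v for v in x)

def grey_wolf_optimizer(fitness, dim, n_wolves=20, n_iters=200,
                        lower=-10.0, upper=10.0, seed=0):
    """Minimize `fitness` with a basic continuous GWO; needs n_wolves >= 3."""
    rng = random.Random(seed)
    wolves = [[rng.uniform(lower, upper) for _ in range(dim)]
              for _ in range(n_wolves)]
    leaders = []   # best three (score, position) pairs found so far
    history = []   # best score after each evaluation round
    for t in range(n_iters):
        scored = [(fitness(w), list(w)) for w in wolves]
        leaders = sorted(leaders + scored, key=lambda p: p[0])[:3]
        history.append(leaders[0][0])
        a = 2.0 * (1.0 - t / n_iters)  # decreases linearly from 2 toward 0
        for w in wolves:
            for d in range(dim):
                estimate = 0.0
                for _, leader in leaders:  # alpha, beta and delta wolves
                    A = 2.0 * a * rng.random() - a
                    C = 2.0 * rng.random()
                    D = abs(C * leader[d] - w[d])
                    estimate += leader[d] - A * D
                # Average the three suggestions and clip to the search bounds.
                w[d] = min(upper, max(lower, estimate / 3.0))
    scored = [(fitness(w), list(w)) for w in wolves]
    leaders = sorted(leaders + scored, key=lambda p: p[0])[:3]
    return leaders[0][1], leaders[0][0], history

best_position, best_score, history = grey_wolf_optimizer(sphere, dim=5)
print("best score:", best_score)
```

The coefficient `a` controls the trade-off between exploration (early iterations, where `|A|` may exceed 1) and exploitation (late iterations, where the wolves contract around the leaders).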

Historically, dog image classification focused on geometric features of the dog face, such as the face profile, or on local facial features, such as ear shape. Owing to the complexity of localized features, classifiers were trained on only a limited number of dog breeds (35 breeds) and/or images (fewer than 1000 images) using classical machine learning methods such as PCA. Since 2012, deep learning methods have shown best-in-class performance in several application tasks, which has facilitated their application to dog image classification. Using the Stanford Dog Dataset, single CNN models can classify 120 breeds with state-of-the-art accuracy.

6. Conclusions

We started with the raw image data and manually filtered the images by background size and dog coat color so that Dataset2 contained the same number of images for each breed; classification performance was slightly better on Dataset2 than on the raw data. The performance of multiple CNNs combined with an SVM is superior to that of a single CNN combined with an SVM. The two feature selection methods, PCA and GWO, also improved dog breed classification accuracy. The choice of CNN models for feature extraction also affects the results.

Despite these positive outcomes, the study has some limitations. First, the model’s accuracy may be somewhat compromised because some breeds, such as the English foxhound, Beagle and Walker, differ so subtly that even the human eye finds it challenging to distinguish among them. Second, the model’s accuracy is further affected by breeds with significant internal variation, like the Collie, which exhibits a diverse range of coat colors. Additionally, the complexity of the model needs to be addressed in future work.

Future research could be carried out in the following directions. First, we will continue to monitor and update our proposed model in three respects: (1) We will incorporate the Tsinghua Dogs Dataset (https://cg.cs.tsinghua.edu.cn/ThuDogs/ (accessed on 22 October 2024)), which contains 70,428 images of 130 breeds, to increase the variety and quantity of image data, thereby enhancing the model’s generalization ability and robustness. (2) We will explore the use of modern architectures, such as autoencoders or large computer vision models like Vision Transformers (ViT) [17], which, after training on large-scale image data, can perform various vision tasks such as image classification, object detection, image segmentation, pose estimation and face recognition. (3) We will extend our model to images of other animals, such as cats, sheep and birds, to assess its scalability and versatility for classification tasks. Second, to facilitate the download and use of our model, we have released all code on BioCode (https://ngdc.cncb.ac.cn/biocode/tool/BT7319 (accessed on 22 October 2024)), and we plan to establish an email group accompanied by discussion workshops to gather feedback and suggestions. At present, our proposed model has been integrated into the iDog database (https://ngdc.cncb.ac.cn/dogvc/ (accessed on 22 October 2024)) for dog image classification, and developing further applications, such as a mobile app, will promote wider use of our model. The model’s explanatory ability also needs to be improved to increase the effectiveness of our applications. Moreover, we advocate collaboration across the global canine research community, including researchers, veterinarians, dog owners, dog breed experts and data scientists, to advance our proposed model. This would involve dog breed experts validating the image classification results using their domain knowledge, data scientists annotating and outlining the images to improve classification accuracy, and dog owners and veterinarians collecting images to enlarge the image collection.