HyMNet: A Multimodal Deep Learning System for Hypertension Prediction Using Fundus Images and Cardiometabolic Risk Factors

Abstract

1. Introduction

- We developed HyMNet, a multimodal deep learning system that combines fundus images with demographic features to improve hypertension detection.

- We investigated the effect of underlying diabetes on HyMNet’s predictive ability and concluded that HyMNet relies strongly on diabetes as a confounding factor when making its predictions.
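As a rough illustration of the fusion idea in the first contribution, the sketch below concatenates a fundus-image embedding with a short tabular feature vector and passes the result through a small fully connected head (one ReLU hidden layer, sigmoid output). It is a minimal sketch under stated assumptions, not HyMNet's actual architecture: the function names, the two tabular inputs (scaled age and sex), the layer sizes, and all weights are hypothetical. A real system would take the embedding from a trained image encoder and learn the weights in a framework such as PyTorch.

```python
import math
from typing import List, Sequence


def dense(x: Sequence[float], weights: Sequence[Sequence[float]], bias: Sequence[float]) -> List[float]:
    """Fully connected layer: returns one output per row of `weights`."""
    if len(weights) != len(bias):
        raise ValueError("need one bias term per weight row")
    out = []
    for row, b in zip(weights, bias):
        if len(row) != len(x):
            raise ValueError("weight row length must match input length")
        out.append(sum(w * xi for w, xi in zip(row, x)) + b)
    return out


def relu(values: Sequence[float]) -> List[float]:
    return [max(0.0, v) for v in values]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def fused_htn_probability(
    image_embedding: Sequence[float],
    tabular_features: Sequence[float],
    hidden_w: Sequence[Sequence[float]],
    hidden_b: Sequence[float],
    out_w: Sequence[float],
    out_b: float,
) -> float:
    """Hypothetical intermediate fusion: concatenate both modalities, then classify."""
    fused = list(image_embedding) + list(tabular_features)
    hidden = relu(dense(fused, hidden_w, hidden_b))
    logit = dense(hidden, [out_w], [out_b])[0]
    return sigmoid(logit)


# Toy example: a 3-dim stand-in image embedding plus [scaled age, sex].
embedding = [0.2, -0.1, 0.4]
tabular = [0.6, 1.0]
hidden_w = [[0.5, -0.3, 0.8, 1.2, 0.1],
            [-0.4, 0.2, 0.3, 0.9, -0.2]]
hidden_b = [0.0, 0.1]
out_w = [1.0, -0.5]
p = fused_htn_probability(embedding, tabular, hidden_w, hidden_b, out_w, out_b=-0.2)
print(f"predicted HTN probability: {p:.3f}")
```

Concatenating modality features before a shared classifier is one common way to let the head learn interactions between image and tabular inputs; combining the separate outputs of unimodal models is the usual alternative.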

2. Materials and Methods

2.1. Dataset and Label Distribution

2.1.1. Descriptive Analysis

2.1.2. Data Preprocessing and Augmentation

2.2. Classification Models

2.2.1. MMDL Systems

IntermediateFusion and PredictionFusion

LateFusion

VotingFusion

2.2.2. Unimodal Systems

2.3. Training Configurations

2.4. Model Selection and Hyperparameter Tuning

2.5. Statistical Analysis

2.6. Experimental Environment

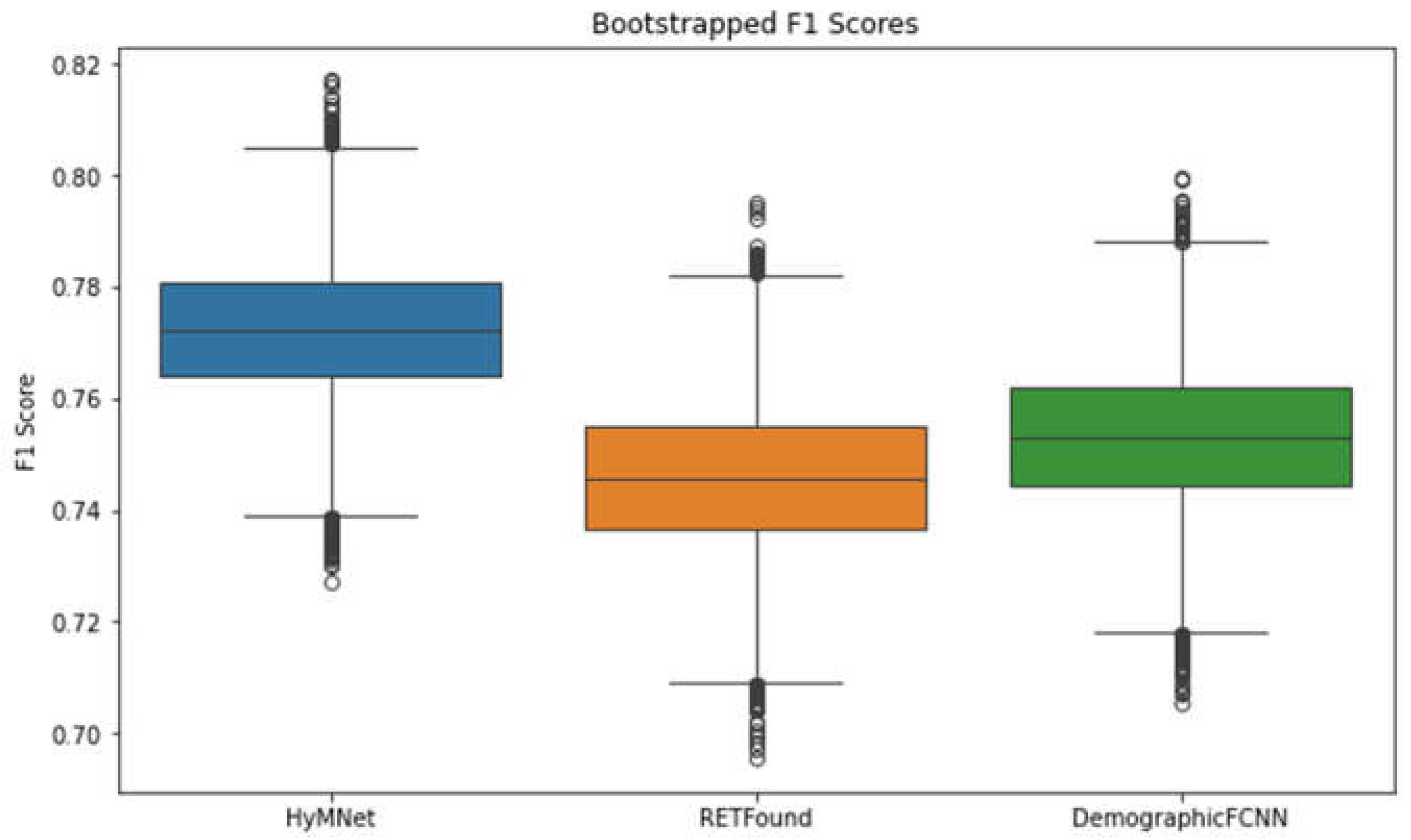

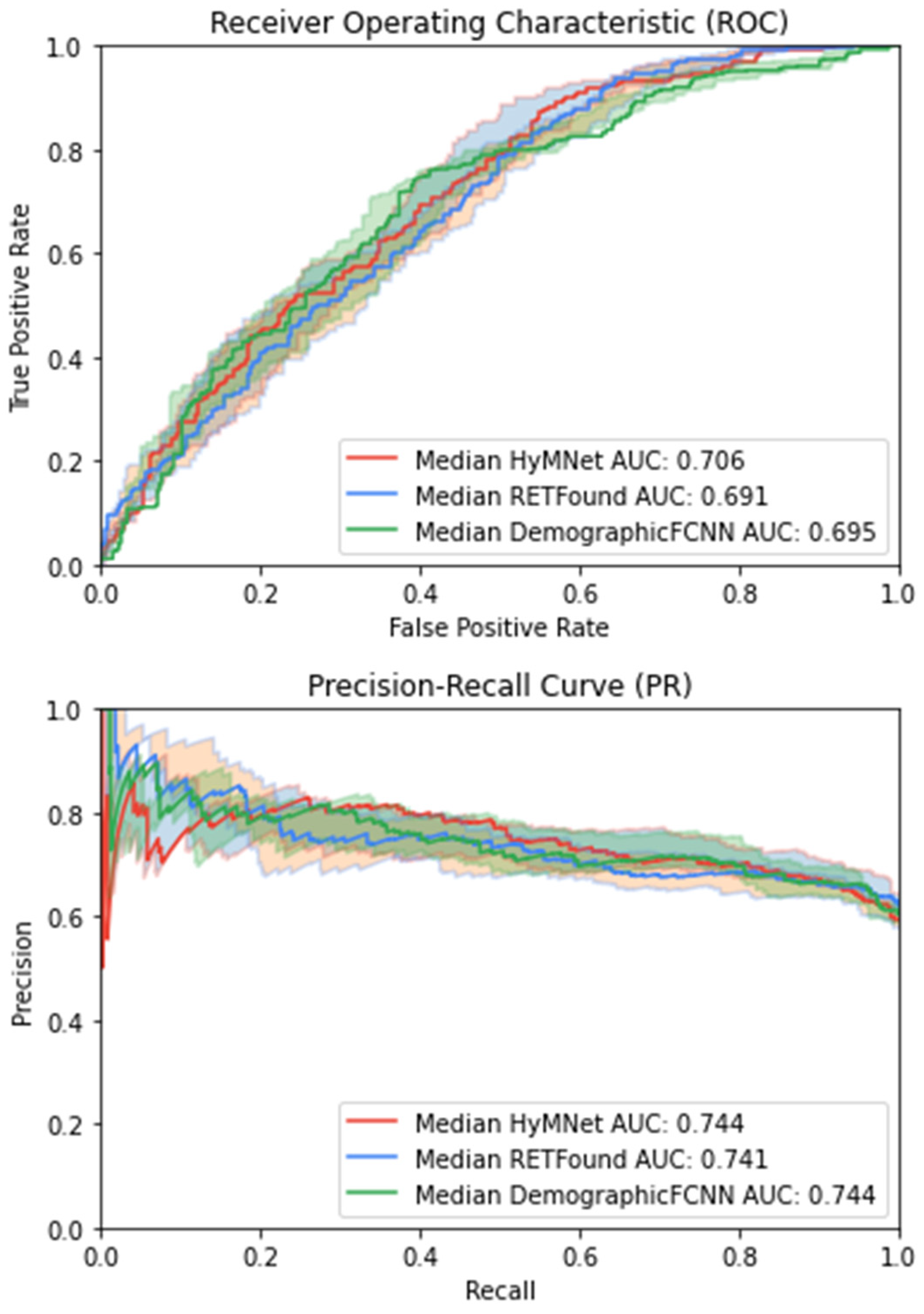

3. Results

3.1. Influence of Diabetes on HTN Detection

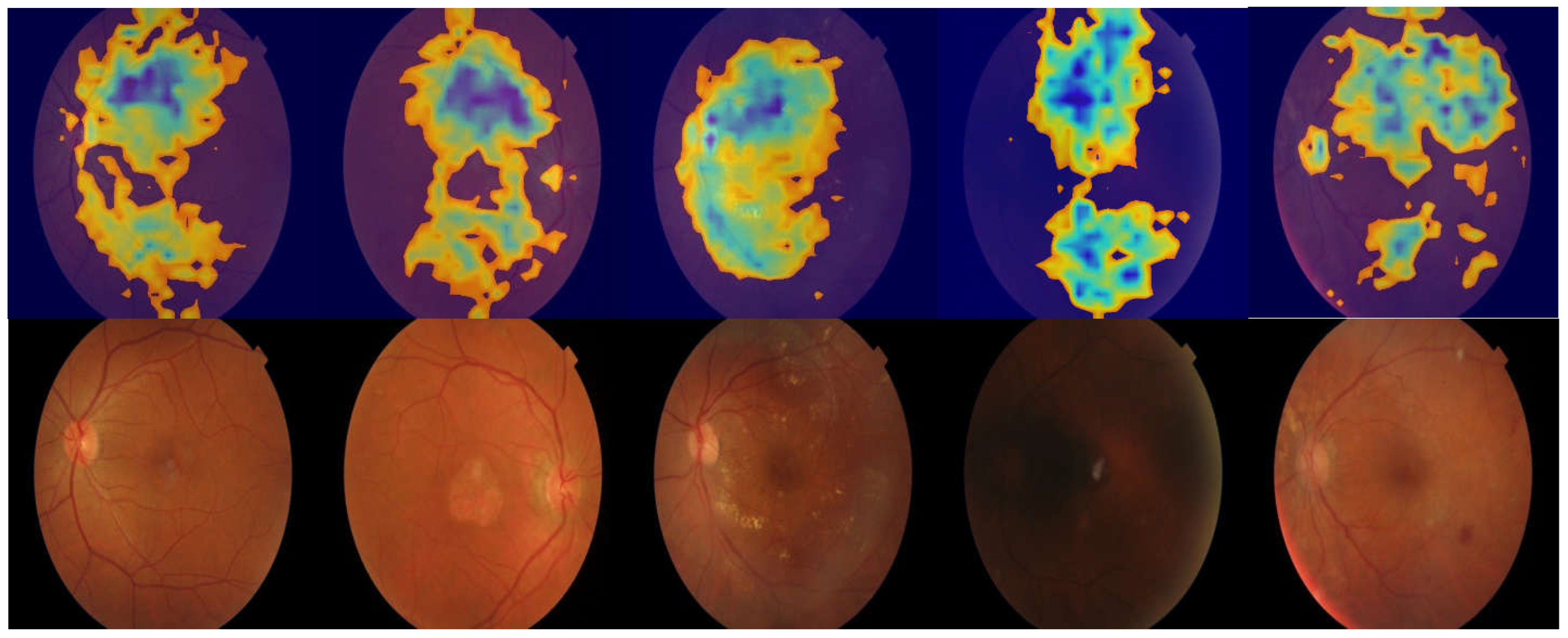

3.2. Region of Interest Visualization

4. Discussion

Limitations and Future Research Directions

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial intelligence |
| AUC | Area under the ROC curve |
| AUPRC | Area under the precision–recall curve |
| BP | Blood pressure |
| CVD | Cardiovascular disease |
| DL | Deep learning |
| FCNN | Fully connected neural network |
| HTN | Hypertension |
| KAIMRC | King Abdullah International Medical Research Center |
| MAE | Mean absolute error |
| MMDL | Multimodal deep learning |
| OCT | Optical coherence tomography |
| ReLU | Rectified linear unit |
| ROC | Receiver operating characteristic |
| SVM | Support vector machine |
| XGBoost | Extreme gradient boosting |

References

- Lindstrom, M.; DeCleene, N.; Dorsey, H.; Fuster, V.; Johnson, C.O.; LeGrand, K.E.; Mensah, G.A.; Razo, C.; Stark, B.; Turco, J.V.; et al. Global Burden of Cardiovascular Diseases and Risks Collaboration, 1990–2021. J. Am. Coll. Cardiol. 2022, 80, 2372–2425.

- Fuchs, F.D.; Whelton, P.K. High Blood Pressure and Cardiovascular Disease. Hypertension 2020, 75, 285–292.

- Roth, G.A.; Abate, D.; Abate, K.H.; Abay, S.M.; Abbafati, C.; Abbasi, N.; Abbastabar, H.; Abd-Allah, F.; Abdela, J.; Abdelalim, A.; et al. Global, regional, and national age-sex-specific mortality for 282 causes of death in 195 countries and territories, 1980–2017: A systematic analysis for the Global Burden of Disease Study 2017. Lancet 2018, 392, 1736–1788.

- Lawes, C.M.M.; Vander Hoorn, S.; Rodgers, A.; International Society of Hypertension. Global burden of blood-pressure-related disease, 2001. Lancet 2008, 371, 1513–1518.

- Vasan, R.S.; Song, R.J.; Xanthakis, V.; Beiser, A.; DeCarli, C.; Mitchell, G.F.; Seshadri, S. Hypertension-Mediated Organ Damage: Prevalence, Correlates, and Prognosis in the Community. Hypertension 2022, 79, 505–515.

- Oh, J.S.; Lee, C.H.; Park, J.I.; Park, H.K.; Hwang, J.K. Hypertension-Mediated Organ Damage and Long-term Cardiovascular Outcomes in Asian Hypertensive Patients without Prior Cardiovascular Disease. J. Korean Med. Sci. 2020, 35, e400. Available online: https://pubmed.ncbi.nlm.nih.gov/33316856/ (accessed on 15 October 2024).

- Zhou, B.; Carrillo-Larco, R.M.; Danaei, G.; Riley, L.M.; Paciorek, C.J.; Stevens, G.A.; Gregg, E.W.; Bennett, J.E.; Solomon, B.; Singleton, R.K.; et al. Worldwide trends in hypertension prevalence and progress in treatment and control from 1990 to 2019: A pooled analysis of 1201 population-representative studies with 104 million participants. Lancet 2021, 398, 957–980.

- Carey, R.M.; Muntner, P.; Bosworth, H.B.; Whelton, P.K. Prevention and Control of Hypertension: JACC Health Promotion Series. J. Am. Coll. Cardiol. 2018, 72, 1278–1293.

- US Preventive Services Task Force; Krist, A.H.; Davidson, K.W.; Mangione, C.M.; Cabana, M.; Caughey, A.B.; Davis, E.M.; Donahue, K.E.; Doubeni, C.A.; Kubik, M.; et al. Screening for Hypertension in Adults: US Preventive Services Task Force Reaffirmation Recommendation Statement. JAMA 2021, 325, 1650–1656.

- Pickering, T.G.; Hall, J.E.; Appel, L.J.; Falkner, B.E.; Graves, J.; Hill, M.N.; Jones, D.W.; Kurtz, T.; Sheps, S.G.; Roccella, E.J. Recommendations for blood pressure measurement in humans and experimental animals: Part 1: Blood pressure measurement in humans: A statement for professionals from the Subcommittee of Professional and Public Education of the American Heart Association Council on High Blood Pressure Research. Circulation 2005, 111, 697–716.

- Powers, B.J.; Olsen, M.K.; Smith, V.A.; Woolson, R.F.; Bosworth, H.B.; Oddone, E.Z. Measuring blood pressure for decision making and quality reporting: Where and how many measures? Ann. Intern. Med. 2011, 154, 781–788.

- Muntner, P.; Einhorn, P.T.; Cushman, W.C.; Whelton, P.K.; Bello, N.A.; Drawz, P.E.; Green, B.B.; Jones, D.W.; Juraschek, S.P.; Margolis, K.L.; et al. Blood Pressure Assessment in Adults in Clinical Practice and Clinic-Based Research: JACC Scientific Expert Panel. J. Am. Coll. Cardiol. 2019, 73, 317–335.

- Gustavsen, P.H.; Høegholm, A.; Bang, L.E.; Kristensen, K.S. White coat hypertension is a cardiovascular risk factor: A 10-year follow-up study. J. Hum. Hypertens. 2003, 17, 811–817.

- Shimbo, D.; Muntner, P. Should Out-of-Office Monitoring Be Performed for Detecting White Coat Hypertension? Ann. Intern. Med. 2019, 170, 890–892.

- Mancia, G.; Bombelli, M.; Facchetti, R.; Madotto, F.; Quarti-Trevano, F.; Polo Friz, H.; Grassi, G.; Sega, R. Long-term risk of sustained hypertension in white-coat or masked hypertension. Hypertension 2009, 54, 226–232.

- Schwartz, J.E.; Burg, M.M.; Shimbo, D.; Broderick, J.E.; Stone, A.A.; Ishikawa, J.; Sloan, R.; Yurgel, T.; Grossman, S.; Pickering, T.G. Clinic Blood Pressure Underestimates Ambulatory Blood Pressure in an Untreated Employer-Based US Population: Results From the Masked Hypertension Study. Circulation 2016, 134, 1794–1807.

- Palla, M.; Saber, H.; Konda, S.; Briasoulis, A. Masked hypertension and cardiovascular outcomes: An updated systematic review and meta-analysis. Integr. Blood Press. Control 2018, 11, 11–24. Available online: https://pubmed.ncbi.nlm.nih.gov/29379316/ (accessed on 15 October 2024).

- Wong, T.Y.; Mitchell, P. The eye in hypertension. Lancet 2007, 369, 425–435.

- Wong, T.Y.; Klein, R.; Klein, B.E.; Tielsch, J.M.; Hubbard, L.; Nieto, F.J. Retinal microvascular abnormalities and their relationship with hypertension, cardiovascular disease, and mortality. Surv. Ophthalmol. 2001, 46, 59–80.

- Laurent, S.; Boutouyrie, P. The structural factor of hypertension: Large and small artery alterations. Circ. Res. 2015, 116, 1007–1021.

- Burns, S.A.; Elsner, A.E.; Gast, T.J. Imaging the Retinal Vasculature. Annu. Rev. Vis. Sci. 2021, 7, 129–153.

- MacGillivray, T.J.; Trucco, E.; Cameron, J.R.; Dhillon, B.; Houston, J.G.; van Beek, E.J.R. Retinal imaging as a source of biomarkers for diagnosis, characterization and prognosis of chronic illness or long-term conditions. Br. J. Radiol. 2014, 87, 20130832.

- Robertson, G.; Fleming, A.; Williams, M.C.; Trucco, E.; Quinn, N.; Hogg, R.; McKay, G.J.; Kee, F.; Young, I.; Pellegrini, E.; et al. Association between hypertension and retinal vascular features in ultra-widefield fundus imaging. Open Heart 2020, 7, e001124.

- Cheung, C.Y.; Ikram, M.K.; Sabanayagam, C.; Wong, T.Y. Retinal microvasculature as a model to study the manifestations of hypertension. Hypertension 2012, 60, 1094–1103.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Goutam, B.; Hashmi, M.F.; Geem, Z.W.; Bokde, N.D. A Comprehensive Review of Deep Learning Strategies in Retinal Disease Diagnosis Using Fundus Images. IEEE Access 2022, 10, 57796–57823.

- Gulshan, V.; Peng, L.; Coram, M.; Stumpe, M.C.; Wu, D.; Narayanaswamy, A.; Venugopalan, S.; Widner, K.; Madams, T.; Cuadros, J.; et al. Development and Validation of a Deep Learning Algorithm for Detection of Diabetic Retinopathy in Retinal Fundus Photographs. JAMA 2016, 316, 2402.

- Grzybowski, A.; Brona, P.; Lim, G.; Ruamviboonsuk, P.; Tan, G.S.W.; Abramoff, M.; Ting, D.S.W. Artificial intelligence for diabetic retinopathy screening: A review. Eye 2020, 34, 451–460.

- Mursch-Edlmayr, A.S.; Ng, W.S.; Diniz-Filho, A.; Sousa, D.C.; Arnold, L.; Schlenker, M.B.; Duenas-Angeles, K.; Keane, P.A.; Crowston, J.G.; Jayaram, H. Artificial Intelligence Algorithms to Diagnose Glaucoma and Detect Glaucoma Progression: Translation to Clinical Practice. Transl. Vis. Sci. Technol. 2020, 9, 55.

- Li, H.; Wang, X.; Liu, C.; Li, P.; Jiao, Y. Integrating multi-domain deep features of electrocardiogram and phonocardiogram for coronary artery disease detection. Comput. Biol. Med. 2021, 138, 104914.

- Li, H.; Wang, X.; Liu, C.; Zeng, Q.; Zheng, Y.; Chu, X.; Yao, L.; Wang, J.; Jiao, Y.; Karmakar, C. A fusion framework based on multi-domain features and deep learning features of phonocardiogram for coronary artery disease detection. Comput. Biol. Med. 2020, 120, 103733.

- Zhao, J.; Feng, Q.; Wu, P.; Lupu, R.A.; Wilke, R.A.; Wells, Q.S.; Denny, J.C.; Wei, W.-Q. Learning from Longitudinal Data in Electronic Health Record and Genetic Data to Improve Cardiovascular Event Prediction. Sci. Rep. 2019, 9, 717.

- Bhimavarapu, U.; Chintalapudi, N.; Battineni, G. Automatic Detection and Classification of Hypertensive Retinopathy with Improved Convolution Neural Network and Improved SVM. Bioengineering 2024, 11, 56.

- Nagpal, D.; Panda, S.N.; Malarvel, M. Hypertensive retinopathy screening through fundus images-a review. In Proceedings of the 2021 6th International Conference on Inventive Computation Technologies (ICICT), Coimbatore, India, 20–22 January 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 924–929.

- Triwijoyo, B.K.; Pradipto, Y.D. Detection of hypertension retinopathy using deep learning and Boltzmann machines. In Proceedings of the Journal of Physics: Conference Series, Medan, Indonesia, 14–15 December 2016; IOP Publishing: Bristol, UK, 2017; Volume 801, p. 012039.

- Triwijoyo, B.K.; Budiharto, W.; Abdurachman, E. The classification of hypertensive retinopathy using convolutional neural network. Procedia Comput. Sci. 2017, 116, 166–173.

- Arsalan, M.; Haider, A.; Choi, J.; Park, K.R. Diabetic and hypertensive retinopathy screening in fundus images using artificially intelligent shallow architectures. J. Pers. Med. 2021, 12, 7.

- Cen, L.P.; Ji, J.; Lin, J.W.; Ju, S.T.; Lin, H.J.; Li, T.P.; Wang, Y.; Yang, J.F.; Liu, Y.F.; Tan, S.; et al. Automatic detection of 39 fundus diseases and conditions in retinal photographs using deep neural networks. Nat. Commun. 2021, 12, 4828.

- Qureshi, I.; Abbas, Q.; Yan, J.; Hussain, A.; Shaheed, K.; Baig, A.R. Computer-aided detection of hypertensive retinopathy using depth-wise separable CNN. Appl. Sci. 2022, 12, 12086.

- Zhang, L.; Yuan, M.; An, Z.; Zhao, X.; Wu, H.; Li, H.; Wang, Y.; Sun, B.; Li, H.; Ding, S.; et al. Prediction of hypertension, hyperglycemia and dyslipidemia from retinal fundus photographs via deep learning: A cross-sectional study of chronic diseases in central China. PLoS ONE 2020, 15, e0233166.

- Poplin, R.; Varadarajan, A.V.; Blumer, K.; Liu, Y.; McConnell, M.V.; Corrado, G.S.; Peng, L.; Webster, D.R. Prediction of cardiovascular risk factors from retinal fundus photographs via deep learning. Nat. Biomed. Eng. 2018, 2, 158–164.

- Gerrits, N.; Elen, B.; Craenendonck, T.V.; Triantafyllidou, D.; Petropoulos, I.N.; Malik, R.A.; De Boever, P. Age and sex affect deep learning prediction of cardiometabolic risk factors from retinal images. Sci. Rep. 2020, 10, 9432.

- Almazroa, A.; Alawad, M.; Aljouie, A.; Alkanhal, N.; Almatar, H.; Algoblan, M. King Abdullah International Medical Research Center (KAIMRC)’s big ocular images data set. In Medical Imaging 2022: Imaging Informatics for Healthcare, Research, and Applications; SPIE: New York, NY, USA, 2022; pp. 84–89. Available online: https://www.spiedigitallibrary.org/conference-proceedings-of-spie/12037/120370D/King-Abdullah-International-Medical-Research-Center-KAIMRCs-big-ocular-images/10.1117/12.2612529.full (accessed on 15 October 2024).

- Zhou, Y.; Chia, M.A.; Wagner, S.K.; Ayhan, M.S.; Williamson, D.J.; Struyven, R.R.; Liu, T.; Xu, M.; Lozano, M.G.; Woodward-Court, P.; et al. A foundation model for generalizable disease detection from retinal images. Nature 2023, 622, 156–163.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification. arXiv 2015, arXiv:1502.01852.

- Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A Simple Way to Prevent Neural Networks from Overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.

- Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An imperative style, high-performance deep learning library. In Proceedings of the 33rd International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019; Curran Associates Inc.: Red Hook, NY, USA, 2019; pp. 8026–8037.

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

- McKinney, W. Data Structures for Statistical Computing in Python. In Proceedings of the 9th Annual SciPy Conference, Austin, TX, USA, 28 June–3 July 2010; pp. 56–61.

- Harris, C.R.; Millman, K.J.; van der Walt, S.J.; Gommers, R.; Virtanen, P.; Cournapeau, D.; Wieser, E.; Taylor, J.; Berg, S.; Smith, N.J.; et al. Array programming with NumPy. Nature 2020, 585, 357–362.

- Chen, T.; Guestrin, C. XGBoost: A Scalable Tree Boosting System. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; ACM: New York, NY, USA, 2016; pp. 785–794.

- Loshchilov, I.; Hutter, F. Decoupled Weight Decay Regularization. arXiv 2019, arXiv:1711.05101.

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2015, arXiv:1409.1556.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. arXiv 2015, arXiv:1512.03385.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; IEEE: New York, NY, USA, 2016; pp. 2818–2826. Available online: http://ieeexplore.ieee.org/document/7780677/ (accessed on 15 October 2024).

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted Residuals and Linear Bottlenecks. arXiv 2019, arXiv:1801.04381.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. arXiv 2021, arXiv:2010.11929.

- Oquab, M.; Darcet, T.; Moutakanni, T.; Vo, H.; Szafraniec, M.; Khalidov, V.; Fernandez, P.; Haziza, D.; Massa, F.; El-Nouby, A.; et al. DINOv2: Learning Robust Visual Features without Supervision. arXiv 2024, arXiv:2304.07193.

- Rajpurkar, P.; Irvin, J.; Zhu, K.; Yang, B.; Mehta, H.; Duan, T.; Ding, D.; Bagul, A.; Langlotz, C.; Shpanskaya, K.; et al. CheXNet: Radiologist-Level Pneumonia Detection on Chest X-Rays with Deep Learning. arXiv 2017, arXiv:1711.05225.

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 618–626. Available online: https://ieeexplore.ieee.org/document/8237336 (accessed on 15 October 2024).

- AlKaabi, L.A.; Ahmed, L.S.; Attiyah, M.F.A.; Abdel-Rahman, M.E. Predicting hypertension using machine learning: Findings from Qatar Biobank Study. PLoS ONE 2020, 15, e0240370.

- Reckelhoff, J.F. Gender Differences in the Regulation of Blood Pressure. Hypertension 2001, 37, 1199–1208.

- Kline, A.; Wang, H.; Li, Y.; Dennis, S.; Hutch, M.; Xu, Z.; Wang, F.; Cheng, F.; Luo, Y. Multimodal machine learning in precision health: A scoping review. npj Digit. Med. 2022, 5, 171.

- Qiu, S.; Miller, M.I.; Joshi, P.S.; Lee, J.C.; Xue, C.; Ni, Y.; Wang, Y.; De Anda-Duran, I.; Hwang, P.H.; Cramer, J.A.; et al. Multimodal deep learning for Alzheimer’s disease dementia assessment. Nat. Commun. 2022, 13, 3404.

- Lee, Y.C.; Cha, J.; Shim, I.; Park, W.Y.; Kang, S.W.; Lim, D.H.; Won, H.-H. Multimodal deep learning of fundus abnormalities and traditional risk factors for cardiovascular risk prediction. npj Digit. Med. 2023, 6, 14.

- Dai, G.; He, W.; Xu, L.; Pazo, E.E.; Lin, T.; Liu, S.; Zhang, C. Exploring the effect of hypertension on retinal microvasculature using deep learning on East Asian population. PLoS ONE 2020, 15, e0230111.

| Characteristic | Total (n = 5016) | HTN (n = 2937) | Non-HTN (n = 2079) |
|---|---|---|---|
| Age (years), mean ± SD | 58.65 ± 22.37 | 62.48 ± 10.23 | 53.25 ± 31.77 |
| Gender, n (%) | | | |
| Male | 2224 (44%) | 1294 (44%) | 930 (45%) |
| Female | 2792 (56%) | 1643 (56%) | 1149 (55%) |
| Diabetes status, n (%) | | | |
| Positive | 4145 (83%) | 2817 (96%) | 1328 (64%) |
| Negative | 871 (17%) | 120 (4%) | 751 (36%) |
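The Total column of the demographics table above can be checked against the two group columns: counts add, and the overall mean age is the size-weighted average of the group means rather than their simple average. A short check using only the numbers reported in the table (the variable names are illustrative):

```python
# Counts and mean ages as reported in the demographics table.
n_htn, n_non_htn = 2937, 2079
mean_age_htn, mean_age_non_htn = 62.48, 53.25
n_total = n_htn + n_non_htn

# Weighted mean: each group contributes in proportion to its size.
mean_age_total = (n_htn * mean_age_htn + n_non_htn * mean_age_non_htn) / n_total

# Unweighted mean of the two group means, shown only for contrast.
naive_mean = (mean_age_htn + mean_age_non_htn) / 2

# Diabetes-positive share of the whole cohort, in percent.
diabetes_pos_pct = 100 * (2817 + 1328) / n_total

print(n_total, round(mean_age_total, 2), naive_mean, round(diabetes_pos_pct))
```

The weighted mean reproduces the reported total of 58.65 years, whereas the simple average of the group means (57.865) does not, because the HTN group is larger. Standard deviations cannot be pooled this way; the spread between the group means must also be added to the within-group variances.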

| Model | F1 Score | AUC | AUPRC | Accuracy | Precision | Recall |
|---|---|---|---|---|---|---|
| HyMNet | 0.771 [0.747, 0.796] | 0.705 [0.672, 0.738] | 0.743 [0.703, 0.784] | 0.690 [0.662, 0.719] | 0.683 [0.650, 0.716] | 0.887 [0.862, 0.912] |
| RETFound | 0.745 [0.719, 0.772] | 0.690 [0.657, 0.724] | 0.740 [0.701, 0.780] | 0.682 [0.647, 0.717] | 0.668 [0.639, 0.698] | 0.821 [0.791, 0.852] |
| DemographicFCNN | 0.752 [0.727, 0.778] | 0.694 [0.661, 0.727] | 0.742 [0.703, 0.782] | 0.661 [0.632, 0.690] | 0.662 [0.630, 0.695] | 0.871 [0.845, 0.898] |
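F1 is the harmonic mean of precision and recall, F1 = 2PR/(P + R), so each row's F1 can be roughly recomputed from its Precision and Recall columns. The sketch below does this for the three models in the table above. It uses only the rounded point estimates shown, so small differences from the reported F1 are expected; how the reported point estimates and bracketed intervals were obtained is not stated in this section.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0 when both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) copied from the model comparison table.
rows = {
    "HyMNet": (0.683, 0.887, 0.771),
    "RETFound": (0.668, 0.821, 0.745),
    "DemographicFCNN": (0.662, 0.871, 0.752),
}

for name, (p, r, reported) in rows.items():
    recomputed = f1_score(p, r)
    print(f"{name:16s} recomputed={recomputed:.3f} reported={reported:.3f}")
```

For HyMNet and DemographicFCNN the recomputed values agree with the reported F1 to within 0.001. For RETFound the recomputed value is about 0.737 against a reported 0.745, a gap larger than rounding of the table values alone would explain.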

| Diabetes Status | F1 | AUC | AUPRC | Accuracy | Precision | Recall |
|---|---|---|---|---|---|---|
| Positive | 0.796 [0.772, 0.821] | 0.680 [0.642, 0.717] | 0.788 [0.748, 0.828] | 0.696 [0.665, 0.727] | 0.716 [0.684, 0.749] | 0.895 [0.869, 0.921] |
| Negative | 0.466 [0.352, 0.581] | 0.704 [0.617, 0.790] | 0.306 [0.202, 0.411] | 0.642 [0.570, 0.715] | 0.344 [0.237, 0.451] | 0.780 [0.636, 0.923] |
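Unlike AUC, AUPRC depends on class prevalence: an uninformative scorer has an expected AUPRC equal to the fraction of positives. The sketch below computes that baseline for each diabetes stratum from the counts in the demographics table. It assumes, which this section does not state, that the evaluation data have roughly the same class balance as the full cohort.

```python
# (HTN count, stratum size) within each diabetes stratum, from the demographics table.
strata = {
    "Positive": (2817, 2817 + 1328),
    "Negative": (120, 120 + 751),
}

# AUPRC values reported in the diabetes-stratified table.
reported_auprc = {"Positive": 0.788, "Negative": 0.306}

for status, (n_htn, n_all) in strata.items():
    baseline = n_htn / n_all  # expected AUPRC of a random (uninformative) scorer
    ratio = reported_auprc[status] / baseline
    print(f"{status}: baseline AUPRC ~ {baseline:.3f}, "
          f"reported {reported_auprc[status]:.3f}, ratio {ratio:.2f}")
```

Under that assumption the baselines are about 0.680 (diabetes-positive) and 0.138 (diabetes-negative), so AUPRC values from the two strata are best compared relative to their own baselines rather than to each other directly.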

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Baharoon, M.; Almatar, H.; Alduhayan, R.; Aldebasi, T.; Alahmadi, B.; Bokhari, Y.; Alawad, M.; Almazroa, A.; Aljouie, A. HyMNet: A Multimodal Deep Learning System for Hypertension Prediction Using Fundus Images and Cardiometabolic Risk Factors. Bioengineering 2024, 11, 1080. https://doi.org/10.3390/bioengineering11111080