1. Introduction

A recent systematic review by Bengtsson suggests several drivers for the adoption of “Take Home Examinations (THEs)”, including the desire to assess higher-order cognitive skills, the massification of higher education with accompanying changes in student learning habits, and the need for a more comprehensive assessment of learning outcomes [1]. Bengtsson’s review considers THEs to be an extension of proctored Open Book Examinations (OBEs); a THE is “an exam that the students can do at any location of their choice, it is non-proctored and the time limit is extended to days (rather than hours…)” [1]. A hallmark of open-book assessments is that they may permit, and in some cases are designed to encourage, learners to consult resources of their choice. More recently, the exigencies of COVID-19 could be added to this list of drivers [2], as the pandemic led to the rapid development of a range of alternative assessment methods [3], including OBEs. Educators aim to adopt research-informed approaches in developing curricula [4]; however, the literature comparing OBE approaches with conventional assessment methods in medical and dental education is somewhat limited [5]. The requirement to assure a range of stakeholders of assessment validity encourages caution when changes in assessment type are considered, which often favours maintaining the status quo. That said, research offers tentative support for change, as comparable outcomes have been reported for OBEs and traditional methods [5]. Nevertheless, these findings were not established against the backdrop of a global pandemic [1,5].

In response to COVID-19, and in common with educators in allied health professions, the School of Dentistry at The University of Liverpool was forced in 2020 to redesign and deliver an online finals assessment at pace. There has been global recognition that this driver favoured expedience over good design [3]. A marked and largely untested change in assessment method, introduced at short notice, was an unanticipated shift for learners. Change of this kind carries a real possibility of raising student anxiety and destabilising learners’ feelings of competence [6], which could, in turn, adversely affect academic outcomes [7,8]. This risk may be exacerbated when the window to introduce the change and prepare learners for it is limited.

The school’s existing assessment blueprints aim to ensure that competency is evaluated holistically, through triangulation across a range of assessments. These include a substantial body of longitudinal work-based data for each student, supplemented by data from simulated-environment assessments such as an OSCE. The assessment of the applied knowledge that underpins these competencies relies on data from traditionally proctored single best answer (SBA) examinations. In the circumstances of the pandemic, a pragmatic solution was required to manage the loss of non-work-based, face-to-face assessments. Therefore, a replacement was designed that could:

be delivered online and unproctored (the timeline was considered too short to obtain and quality-assure a method of remote proctoring), without compromising the existing, limited item bank.

test the same attributes, such as the ability to synthesise clinical information and exercise clinical judgement for diagnostic, treatment planning and patient management purposes.

An OBE comprising multiple short-answer questions was designed, based on clinical vignettes with appropriate clinical images. The OBE permitted learners to consult resources, but the question design anticipated that, within the time constraints, students would not be able to do so extensively.

Drawing on the literature examined, we aimed to elicit and explore relationships between final examination marks and student views on:

Self-reported (a) anxieties associated with OBE, (b) time preparing for the OBE, and (c) time spent consulting resources during the OBE

Student perceptions (a) about the authenticity of the assessment method as a test of their competence and (b) of support derived from the learning environment

Whether learners endorsed the continuation of (a) online assessment and (b) online delivery of learning.

Our decision to make this change was driven by necessity; however, the situation also presented an opportunity to rethink how assessment, in this case OBE, contributes to our assessment blueprint. Importantly, it also allowed us to explore the impact of introducing this novel method on learners. In this latter regard, we note a recent call by Zagury-Orly and colleagues to do just this [2], and the need to remain agile and adapt assessment strategies to maintain an optimal approach [4]. The current paper presents data from a post hoc evaluation of student perceptions of the open-book approach.

3. Results

3.1. Quantitative Data Analysis

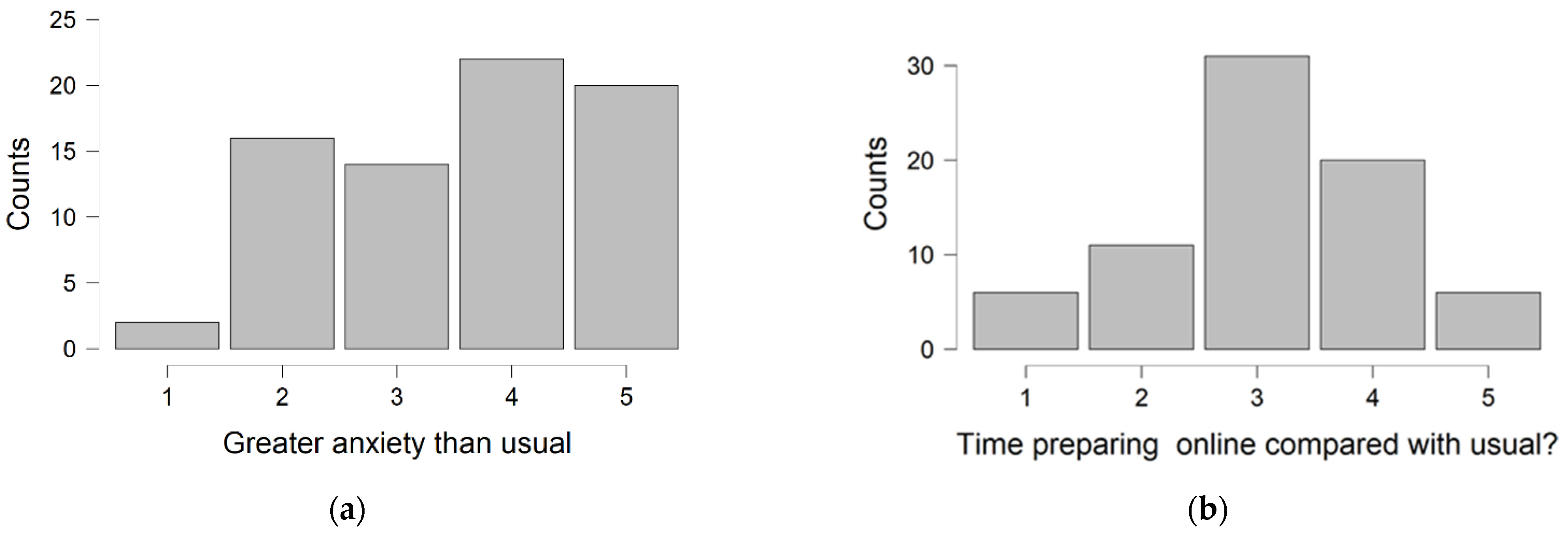

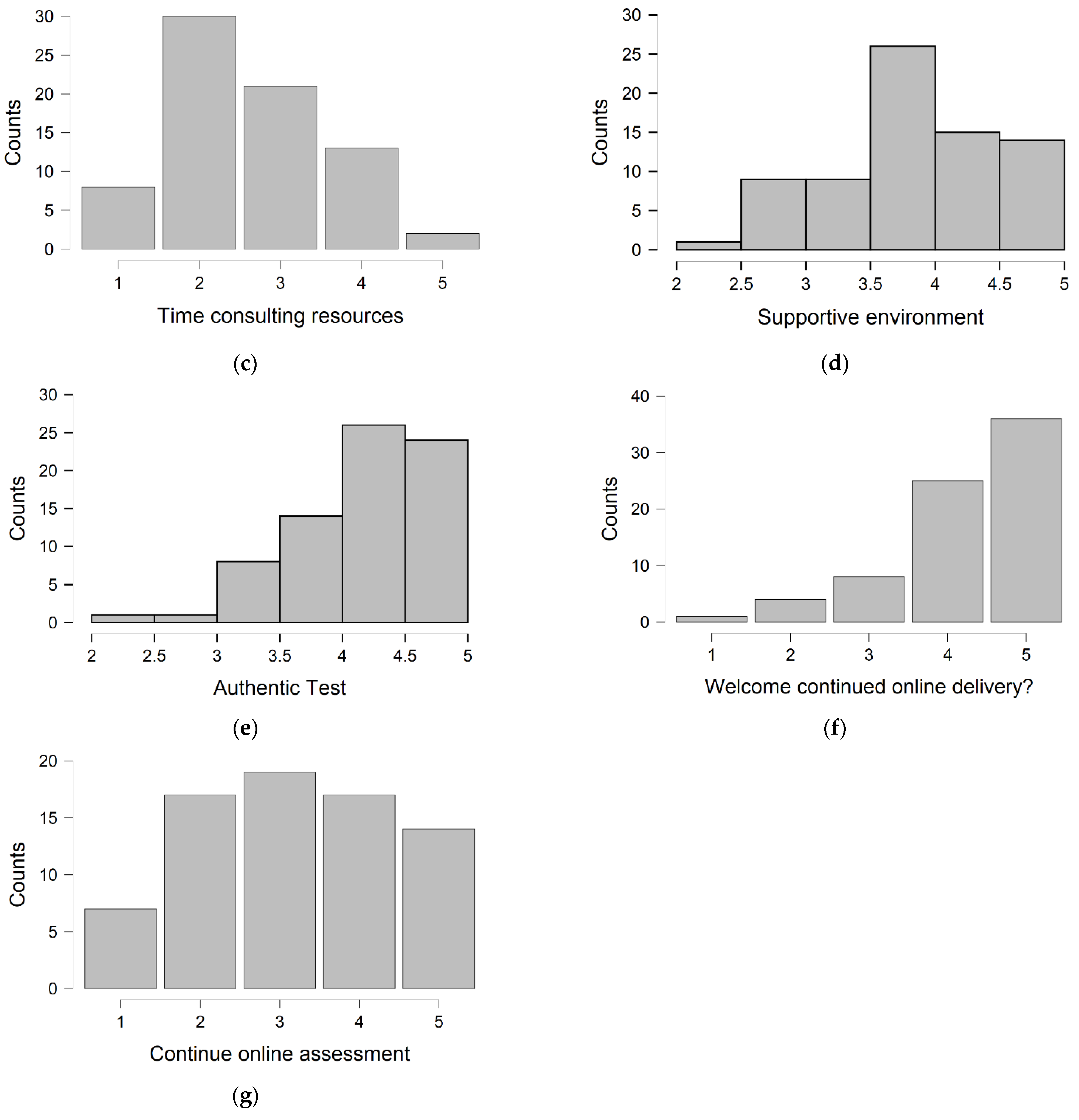

After the preparatory descriptive analyses had been run, the data were used to address the research aims. Both descriptive data and correlation effect estimates are reported (Table 1). A narrative description of the results follows. This largely summarises the significant relationships depicted in Table 1, except where non-significant relationships aid understanding; all relationships are shown in Table 1 for completeness.

Exploratory correlation analysis indicated that learners who reported heightened anxiety about OBEs tended to report increased assessment preparation (rs = 0.55, p < 0.001). Heightened anxiety was also inversely related to learners’ perceptions that the OBE was an authentic test allowing them to demonstrate competence (rs = −0.43, p < 0.001) and to their endorsement of the continuation of online assessment (rs = −0.27, p < 0.05). In other words, learners who reported lower anxiety were more likely to endorse the authenticity of the assessment and the continuation of OBEs. Consistent with this, learners who considered OBEs an authentic test of competence were more likely to welcome OBEs in future (rs = 0.42, p < 0.001). Potentially reinforcing these perceptions, learners who reported that the learning environment was supportive also tended to endorse the authenticity of the OBE (rs = 0.23, p = 0.052), although this result marginally exceeded the 0.05 alpha threshold conventionally used to indicate significance. Finally, learners who supported online delivery of learning also endorsed the continuation of online assessment (rs = 0.39, p < 0.001).
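The coefficients above are Spearman rank correlations (rs), which suit ordinal, Likert-type survey responses. As a minimal sketch of how such a coefficient is obtained, assuming responses that contain ties, the Python below ranks each variable (averaging tied ranks) and takes the Pearson correlation of those ranks. The helper names, the example responses and the permutation p-value are illustrative assumptions: the paper does not state which software or p-value method was used, and a permutation test is swapped in here only because it needs nothing beyond the standard library.

```python
import random
from statistics import mean


def average_ranks(values):
    """Return 1-based ranks, giving tied values the mean of the positions they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j across a run of tied values (common with Likert items).
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared_rank = (i + j) / 2 + 1  # mean of positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant variable")
    return sxy / (sxx * syy) ** 0.5


def spearman_rho(x, y):
    """Spearman's rs: the Pearson correlation of the tie-averaged ranks."""
    return pearson(average_ranks(x), average_ranks(y))


def permutation_p_value(x, y, n_perm=10_000, seed=0):
    """Two-sided permutation p-value for rs (illustrative, not the study's method)."""
    rng = random.Random(seed)
    rx, ry = average_ranks(x), average_ranks(y)
    observed = abs(pearson(rx, ry))
    shuffled = list(ry)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)  # break any x-y association
        if abs(pearson(rx, shuffled)) >= observed:
            hits += 1
    return (hits + 1) / (n_perm + 1)  # add-one avoids reporting p = 0


# Hypothetical 5-point responses for eight learners (not study data).
anxiety = [1, 2, 2, 3, 4, 4, 5, 5]
preparation = [1, 1, 2, 3, 3, 5, 4, 5]
print(spearman_rho(anxiety, preparation), permutation_p_value(anxiety, preparation))
```

Ranking first is what makes the coefficient insensitive to the unequal spacing of Likert categories; with samples as small as this cohort, an exact or permutation p-value is a reasonable alternative to the large-sample t approximation used by most statistics packages.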

The marks achieved in the examination ranged from 59% to 94% (Figure 1), and the Hofstee pass mark was 59%. In the authors’ experience, this cohort’s performance in the examination did not appear particularly unusual, although no detailed comparisons with other cohorts or assessments have been undertaken.
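The Hofstee pass mark is a compromise standard-setting method, and the paper reports only the resulting cut score, not the judges’ parameters. As a rough sketch of the general idea, assuming integer percentage marks and hypothetical judge-set bounds (k_min and k_max for the acceptable cut score, f_min and f_max for the acceptable fail rate), the function below scans candidate cut scores and returns the first at which the cohort’s observed fail rate meets the line running from (k_min, f_max) to (k_max, f_min). The function name, parameter names, example marks and fallback behaviour are illustrative assumptions, not the procedure used in this study.

```python
def hofstee_cut_score(scores, k_min, k_max, f_min, f_max):
    """Approximate Hofstee compromise cut score over integer candidate cuts.

    scores      -- candidate marks (%); a candidate fails if mark < cut
    k_min/k_max -- judges' lowest/highest acceptable cut score (integers, %)
    f_min/f_max -- judges' lowest/highest acceptable fail rate (0-1)
    """
    n = len(scores)
    for cut in range(k_min, k_max + 1):
        # Observed fail rate can only rise (or stay level) as the cut rises.
        fail_rate = sum(s < cut for s in scores) / n
        # The judges' line falls from f_max at k_min to f_min at k_max.
        line = f_max - (f_max - f_min) * (cut - k_min) / (k_max - k_min)
        if fail_rate >= line:
            return cut  # first cut where the fail-rate curve meets the line
    # Assumed fallback: the curve never reaches the line within the bounds.
    return k_max


# Hypothetical marks and judge bounds (not study data).
marks = [40, 45, 50, 55, 60, 65, 70, 75, 80, 85]
print(hofstee_cut_score(marks, 50, 70, 0.1, 0.5))  # prints 56
```

Scanning whole percentages keeps the sketch simple; implementations differ in how finely they search and in how they handle cohorts whose fail-rate curve lies entirely above or below the judges’ line.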

Turning to final examination marks, an inverse relationship was seen between learners’ OBE performance and self-reported consulting of resources during the examination (rs = −0.25, p = 0.035), and in relation to continuing with online OBEs (rs = −0.27, p = 0.019). This suggests that students achieving marks towards the higher end of the range tended to report consulting resources less often during the assessment than peers who performed less well. In addition, higher-performing students were also more comfortable with continuing online OBE as an assessment method. Notably, examination marks were not significantly related to endorsement of continued online delivery of learning, time spent preparing for this assessment compared with other types of assessment, greater anxiety than usual, perceptions of test authenticity, or experiencing a more supportive test environment than usual. Visual inspection indicated largely non-normal distributions of participant responses to the survey items, confirmed in each case by a significant Shapiro-Wilk test (p < 0.05; Figure 2).

3.2. Qualitative Data Analysis

Three inter-related themes emerged from participants’ responses to the open-ended question ‘Do you have any suggestions to help us improve online assessment?’: ‘assessment completion time’, ‘practice opportunities’ and ‘acceptance’. Learners’ views on assessment completion time diverged, with some calling for more time during the assessment to allay practical concerns:

“Submit via plagiarism software instead or with online invigilation like the medical school and give a longer time to do it. Lots of anxiety worrying about internet connection dropping!”

This response tacitly acknowledges that the security of the test is crucial to assuring test validity and acceptance. By contrast, another student, also addressing assessment completion time, called for shorter but more numerous assessments because of fatigue:

“I found the exam very long (took me almost 4 hours) and although I thought the exam was relevant and tested my knowledge thoroughly, my eyes were starting to strain by the end of it and I was feeling very tired. Perhaps having shorter exams but more of them may help resolve this problem.”

Regarding the theme of acceptance, this split in views may indicate that the assessment length struck a reasonable balance between the needs of individual learners. In practice, however, acceptance for some was paired with a suggestion for greater variety in question format:

“I feel providing mixed formats of questions in future exams rather than 100% one type will allow students a nice middle ground.”

This may also indicate that attention to question design, together with pragmatic issues such as assessment length and practice opportunities, could lead to greater acceptance, perhaps by supporting learners’ perceptions of competence when faced with a novel situation. Despite these suggestions, learners reported that, in this situation:

“the online assessment was a fair assessment using clinical scenarios similar to those we would have been given in an OSCE—given the circumstances I think it was the best option.”

Although learners offered suggestions to improve the practical aspects of the OBE experience, they appear to have accepted the novel method. Learners also recognised the need for assurance that the assessment remains a valid test of ability, and made suggestions to provide it. Additional support in the learning environment, for example more practice opportunities including a greater variety of questions, may increase acceptance of OBEs. This may be particularly important for learners who were less sure that OBEs were a fair test of competence, as highlighted in the quantitative analysis. Taken together, these results emphasise that preparing learners for such a test is crucial in enabling them to feel competent to face the OBE, regardless of the prevailing circumstances.

4. Discussion

This mixed methods investigation examined the relationships among (1) learners’ self-reported preparations and behaviours in approaching the OBE, (2) their acceptance of the OBE as an authentic and valid test of competence, (3) their perceptions of support from the learning environment, and (4) final examination marks. The quantitative and qualitative results indicate high levels of learner acceptance of the OBE as implemented, with learners recognising it as a valid test of their competence and largely supporting the continuation of OBEs in future assessment rounds. These findings were, however, nuanced.

We first consider the relationships between anxiety, preparation, consulting resources and examination performance within this self-reported data set. Anxiety appears to have driven increased time spent preparing for the examination. However, this additional time did not correlate with better performance. This calls into question the nature and objective of the preparation undertaken, perhaps suggesting a surface learning approach that was ultimately of no benefit in an examination designed to test clinical problem solving. Given that neither preparation time nor anxiety appeared to be correlated with time spent consulting resources during the examination, it is interesting to speculate on what factors might drive such behaviour, especially in the face of pre-examination warnings about its likely counter-productive effects. It is possible that a subset of learners adopted a highly strategic approach to assessment, believing that “gaming” is an effective policy, seemingly encouraged by the unproctored nature of the examination. Furthermore, because responses were not anonymous, participants may have reported more preparation than they actually undertook. Although our understanding is incomplete, some important messages do emerge that coincide with an intuitive understanding of student behaviours, and these can be used to reinforce guidance to students on how to approach this style of assessment.

Learners endorsed the continuation of the OBE in future assessment rounds. This endorsement increased with higher examination marks and was stronger still among learners who endorsed the OBE as a valid test of competence, indicating learner acceptance of the OBE as a suitable alternative assessment method. This echoed the support expressed in the qualitative responses. Nevertheless, more anxious learners were less likely than colleagues reporting lower anxiety to endorse the OBE as a valid test of competence and its continuation in future assessment rounds. This may reflect mindset, and it would be of interest to establish whether unmeasured personality factors influenced levels of self-efficacy and perceived competence. A number of other studies have reported that learners may prefer open-book to closed-book examinations because they report lower anxiety and less effort in preparation [11,12,13,14]. Furthermore, increased anxiety may lead to discrepancies between students’ self-assessment of their performance and their actual performance [15]. Competence perceptions in learning are thought to be associated with a range of global, contextual and situational factors [16]. However, these same perceptions are well understood to be bolstered by a supportive educational environment that enhances learners’ feelings of autonomy [7,17]. Therefore, optimising the learning environment surrounding assessment so that learners feel supported is a key design consideration in reassuring learners and sustaining their feelings of competence, so that they can successfully manage a new situation, in this case the revised assessment approach. It would have been entirely possible for these considerations to be relegated in favour of expedience during the pandemic, undermining these motivational perspectives and potentially harming performance. Learner perceptions of the environment may be related to acceptance of the assessment method and may support feelings of competence, which could not be taken for granted given the introduction of the OBE.

In the qualitative analysis, we found calls for additional practice opportunities which, within an appropriate preparatory framework, might help learners to understand the nature of the OBE and accept it more readily as a valid test of competence. Greater practice opportunities, as learners suggested, might go some way towards alleviating anxieties in a way that supports acceptance of the OBE and its continuation as part of a suite of assessments. That said, learners were unaware of their assessment marks at the time they participated in the research, so their responses may accurately reflect their evaluations of the assessment itself, uninfluenced by their results. It should be noted that, despite the opportunity for pre-examination familiarisation and practice, the adopted format was new to the students. This study cannot determine whether this lack of familiarity, rather than some intrinsic aspect of the assessment format, was the cause of anxiety. Finally, learners who were more likely to endorse online delivery of teaching also endorsed continued online assessment. Endorsement of continued online delivery was unrelated to the other factors measured.

The small sample surveyed here was a limiting factor; nevertheless, the sample was the total population of interest for this investigation. A similar study, carried out anonymously, invited undergraduate students in all years to participate, and the response rate for final-year students was 25% [14]. Arguably, final-year students are best placed to report their perceptions of OBEs in comparison with closed-book examinations, and the 100% response rate in this study is a positive factor, although it is accepted that response rate and validity may not be directly correlated. Low statistical power raises the real possibility that the reported effect estimates are under- or over-estimated within this single-centre study, and a larger sample might have yielded a different understanding. However, extending the sample could also have introduced unanticipated confounds. This limitation is acknowledged, as is the exploratory nature of the analysis, and the results should consequently be interpreted with a degree of caution. We attempted to address the limits of the quantitative understanding by supplementing it with qualitative data collection and analysis, seeking learners’ views on improvements so that future approaches can take account of learner perspectives. The present report combines both strands. These challenges aside, we consider that this study contributes, albeit in a limited manner, to understanding of learner perspectives on the introduction of OBEs, which may, in turn, suggest future research directions. Prior to the examination, strong messages were communicated to the students about the importance of preparation and the inadvisability of relying on the freedom to spend a lot of time consulting resources; the examination’s design, which promoted the application of knowledge rather than resource-searching skills, was also reiterated. Most students appear to have taken this on board, but the inter-relationships between perceptions of difficulty, use of resources, anxiety and performance are worthy of further study.