1. Introduction

It is an undoubted fact that the design of more reliable and accurate algorithms to compute the approximate values to the sine and cosine functions is one of the most fascinating topics of the constructive approximation of functions. This is clearly motivated by numerous applications of these trigonometric functions ranging from mathematics, physics to engineering.

The use of polynomial approximations plays a key role in computing values of the sine and cosine functions, as well as other elementary functions. The most commonly used polynomials are Taylor, Chebyshev, and Remez. Let us briefly mention an early historical fact related to approximating the trigonometric functions. That is the formula proposed by Bhaskara in the 7th century:

which is now explained clearly and logically (see [

1]). The explanation is based on the view of interpolation by polynomials and rational functions.

For these days, with a rapid development of technology and computer science, there has been great effort to build hardware and software algorithms for evaluating values of the elementary functions, especially the trigonometric functions, to meet an essential need in scientific computations, signal processing, telecommunication and computer graphics [

2]. Among these algorithms is the most important and fundamental one called the CORDIC algorithm. The name CORDIC is an acronym for Coordinate Rotation Digital Computer. This is a computing technique for solving the trigonometric relations that consist of plane coordinate rotation, Cartesian and polar coordinates. Details on CORDIC and developments on algorithms for evaluating elementary functions can be found in the excellent monograph by Muller [

3] Chapter 7, and also [

4,

5,

6,

7,

8].

It is worth mentioning that the desirable accuracy of computed transcendental functions mainly depends on the demand of users and/or practical scientific use. On the one hand, it would usually suffice to numerically solve real-world problems with an accuracy to machine precision. This is the reason that the majority of present computing software programs such as MATLAB, and its Chebfun software package, developed by Trefethen and his team [

9], aims to support. On the other hand, there has always been an imperative need to seek for simpler and more efficient algorithms with higher accuracy as the interest in improving scientific calculation method itself or from other sources of practical demand. This is purely our intention when writing the present article. The design and development of our algorithms stem from the fact that we are able to easily work with higher-degree polynomials (even degrees of thousands or millions [

10] Chapter 1) due to the increasing complexity of computer applications.

The goals of this paper are twofold. Firstly, we present an algorithm that gives pointwise approximate values of the sine and cosine functions at any rational angle with a desired precision. Secondly, our biggest contribution to novelty is the piecewise approximation procedure with an arbitrary small absolute error applied to these trigonometric functions. To this end, we introduce a special partition that can take the advantage of dividing an interval into appropriate subintervals for the approximation process. Our construction is merely based on the lookup table-like storage of approximate values of Pi (the number ). In some computer algebra systems, we can do this with built-in commands such as evalf[n](Pi) from MAPLE, vpa(pi,n) from MATLAB and N[Pi,n] from MATHEMATICA.

In principle, approximating the value

by the Taylor polynomial of order

n at a point

as

is meaningful when the point

satisfies

and all the coefficients

can be explicitly calculated. Such a point can be chosen as

, where

and

. We will choose

k to get the inequality even better

. Because we only have rational approximate values of

p, this challenges us with several practical questions to be solved: how to evaluate errors arising from replacing

p with its approximate value

and how to keep

unchanged with this change of value of

p? In addition, we also need an efficient mechanism to access rational approximate values of

p more enough to reach an acceptable or desired precision. Fortunately, more and more precise values of Pi have been updated from the projects of computation of special numbers (see [

11], for instance), and these values are now supplied conveniently and efficiently for use by present computer algebra systems. The current record of the longest decimal number computed for Pi has been 13.3 trillion digits (date announced: 8 October 2014), held by an anonymous programmer known online as “houkouonch”, and will be certainly broken later.

The paper is organized as follows. In

Section 2, we define the steps to obtain Taylor polynomials for approximating values of the sine function with an absolute error less than a given tolerance. We then, in

Section 3, provide appropriate regulations to show that it is possible to keep coefficients of the approximate Taylor polynomials unchanged when

is replaced with its approximate value

. The error analysis is presented in the same section in which the properties of special functions Gamma and Modified Bessel are used. We arrive at our algorithms for pointwise approximating values of the sine and cosine functions, and for piecewise approximating on any interval

in

Section 4 and

Section 5. To demonstrate the performance of our methods,

Section 6 is devoted to an application of numerical integration. Finally, some concluding remarks are discussed in the last section. For our purpose, we use MAPLE to implement our algorithms, and demonstrate numerical and graphical results.

3. Regulation

In practice, we are only provided with approximate values of

. Assume that such a value is

and let

. If

, we use the formula (

5) with

and we have the approximation

with the accuracy of

, where

n is determined from (

2). Therefore, we consider from this point on any rational number

x such that

and we then have that

For an initial setting, we assume that

satisfies

for a given

.

Because the formulas (

4) and (

5) depend on the distance between

and

according to the choice of

, we need to choose

so that

will not change its values. This can be attained when

or equivalently

Since

(

7) is satisfied if and only if

Since

, we then have (

8) if

simultaneously satisfies the following inequalities

Before proceeding any further, we emphasize here that we can set as the approximate value of p with the accuracy up to significant digits when to prevent inexactitude from rounding-off rules. Such an approximate value of p can be declared by, for instance, evalf[m + 3](Pi/2) in MAPLE. As a convention, when the output m has been found for to satisfy , we then set with evalf[m + 3](p).

Finally, we recall here the cases of choosing

and assume some supplementary requirements for

to make

unchanged when replacing

p with

. If

and due to

we also have

when

and we then choose

in this case. Similarly, if

, then we also have

when

and we choose

. In particular, if

, then we also choose

and note that (

9) and (

10) are obviously satisfied in this case.

Let us take an important notice that: (

11) implies (

10), and (

12) implies (

9). Thus, to find an appropriate value of

and then choose

, we first require

by determining a number

such that

Then,

can be chosen to satisfy simultaneously (

9) and (

11), which is

or (

10) and (

12), which is

depending on

or

, respectively. To prove the existence of such a

, we need some results from the notion of sequence limit. This result is an important basis of our approximation algorithm.

Supposing that we have found

satisfying (

13), then we choose

=

evalf[m0 + 3](p) and let

. Letting an index

i take its initial value

. If

, then we choose

; otherwise, we consider the following process. To choose

that satisfies simultaneously (

6), (

9), (

11) or (

6), (

10), (

12), from (

14) or (

15), we check

or

If both (

16) and (

17) could not occur, we go to the next step by setting

,

=

evalf[mi+1 + 3](p) and

. Then, we check again (

16) and (

17), but with

and

for this time. Continuing this process, we go to the following conclusion:

One of either (

16) and (

17) first occurs at some step

k, and assume it is (

16). Hence, we have

(and also

). Then we choose

(

). Similarly, we have

(and also

) and we choose

if (

17) occurs.

Both of (

16) and (

17) could not occur at any step. Then, we obtain the infinite sequences

,

=

evalf[m0 + 3 + i](p) and

,

, such that

and

Now, we show the second item of the conclusion cannot be true. Because

, then

,

. Since

, there exists an integer

such that

for all

, hence

for all

. Therefore,

If

, then

hence the left side of the inequality (

18) leads to ∞ as

, and this is a contradiction. If

, by a similar argument, we also derive a contradiction from (

19).

There is one more question that should be considered explicitly. What is the maximum value of

k that we may access in the calling sequence

evalf[m0 + 3 + k](p)? We provide here an analysis of this possibility. We set on the intervals

and

the functions

and

, respectively, such that

and

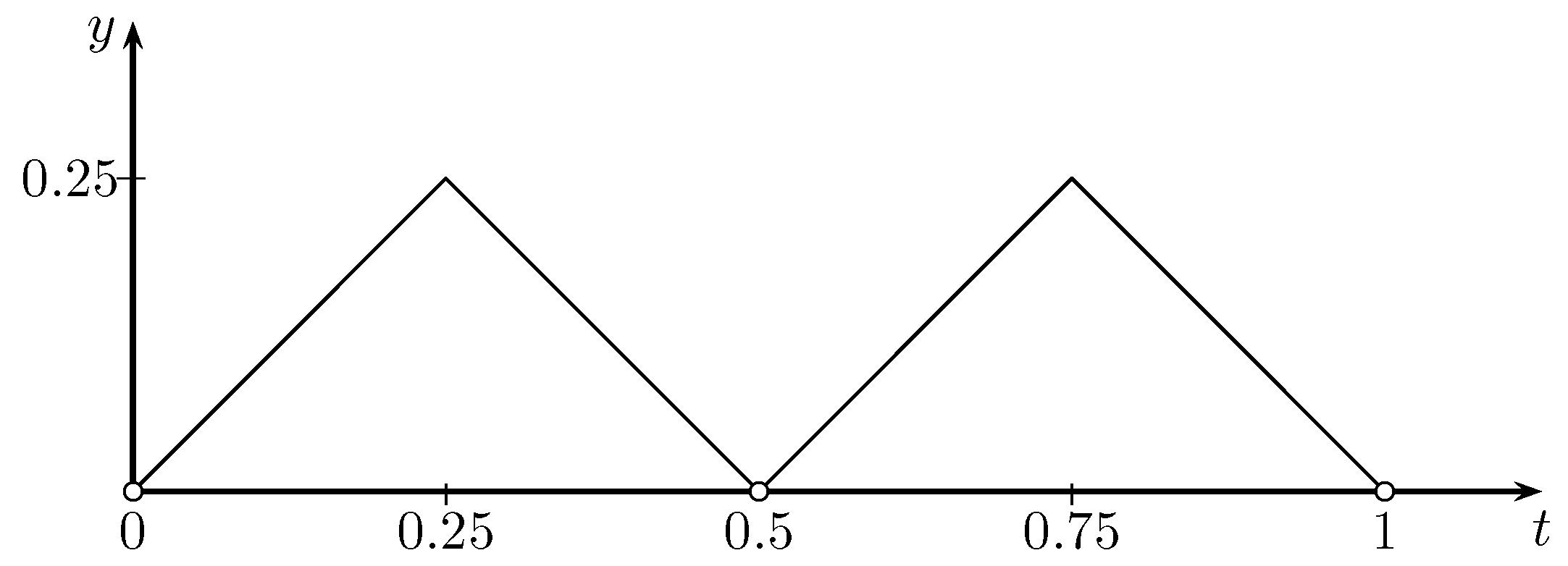

Then, we define

and give its graph in

Figure 1.

Now, reaching (

16) or (

17) at the step

k can be expressed as

Because

, we can attain this when

Taking integral values from

, the variable

k makes the value of the right side of (

20) increase very fast, whereas the positive values of

slightly change when

k increases. We also have the estimate

for all

. Thus, the minimum value of

satisfying (

16) or (

17) is governed by

. In practice, since

and

r are not too large, we may always determine such a value of

.

On the other hand, if we find a sequence of rational numbers

converging to

and a strictly increasing sequence of natural numbers

such that

we can determine

, following the above steps without using the command

evalf (or other equivalent commands).

According to the choice of

, we always have

. Now, we check that

for all choices of

. In the cases of

or

, we also have

or

and easily check that

or

. In the case

, since (

6), (

9) and (

10) are all satisfied, we have

and need to check (

21) directly. Indeed, we can write

hence

We formalize the above discussion by the following lemma.

Lemma 1. For every rational number x, there exists an integer and a rational approximation of such that , and or , depending on or , respectively. Moreover, the Taylor polynomial of the sine function at can give the approximation with the accuracy up to , where r is a given positive integer and the degree of is determined by(

2)

. In practice, since we cannot use

from (

4) or (

5) to approximate the value of the sine function at

x, we replace

with

instead. Such a changed polynomial can be either

depending on

odd or even, respectively. Because

, we derive the estimate

from (

2). Now, we need to estimate

, which we shall call the

practical error.

Firstly, we choose the polynomial (

22) and try to make an estimate for

or

Since

(

24) can be written as

hence we obtain the estimate

Letting

, from (

21) and (

25), we derive

To estimate the sum in (

26), we will use inequalities related to factorial numbers, so we need some properties of the generalized factorial function, the Gamma function:

has been proven to be a log-convex function on the interval

; thus, for

we have

(see [

12] Section 2). In addition, for a non-negative integer

n, we have

, and for

,

with integers

satisfying

, we derive the following inequality from (

27)

From (

26) and (

28), we can write

due to

.

To obtain the desired estimate of

, we use an approximate value of the Modified Bessel function of the First kind

which is

according to the approximation formula in ([

13] Section 3). Moreover, we have the estimate

that we now check only for

. Indeed, if

, then

, hence

; if

, we have

or

. These estimates for

lead to

Finally, from (

28), we derive

Similarly, if the polynomial (

23) has been chosen, we are led to the estimate

In this case, applying (

27), we obtain the inequality

To estimate the right-hand side of (

31), we use an appropriate inequality of Lazarević and Lupaş in ([

12] p. 95–96), stated in the form of

where

and

. Applying (

32) for

(

) and

, we easily obtain the inequality

Combining (

30), (

31) and (

33), we finally get the desired estimate

Thus, both of approximate polynomials (

22) and (

23) satisfy the same inequality for the practical error; that is

Finally, for a real number

x and its rational approximation

, we have the following estimation

where

is obtained from

, as indicated in Lemma 1, but for

instead. If

r is replaced with

in (

2) and (

6), and

, then

.

To sum up, we state our main result in this section by the following theorem.

Theorem 1. Let x be a real number and be a rational approximation of x such that , where r is a given positive integer. Then, from Lemma1 applied to , there exists a polynomial having the form of (

22)

or (

23)

that gives the approximation . 4. Algorithm for Pointwise Polynomial Approximation

Given a rational number

x and an integer

, we will construct an algorithm to evaluate the approximate value

a of

so that

is less than an arbitrarily small tolerance, and to display the result in the correct number

h of significant digits. The first task can be completed by the steps in Algorithm 1, following the steps as indicated in

Section 3, above Lemma 1. This algorithm can be converted into a MAPLE procedure called

ApproxSine that takes the two arguments

x and

r. The output of

ApproxSine(x,r) is

a with

,

. This estimation for

is obtained when

r is replaced with

in (

2) and (

6). Before completing the second task, we note that the accuracy up to the

r-th decimal digit after decimal point is in general quite different from

r significant digits. For instance, if

and

, then

and

evalf[4](a) = evalf[4](b), but, if

and

, then

evalf[6](a) ≠

evalf[6](b), whereas

. Therefore, we should first count how many zero consecutive digits there are right after decimal point contained in the number

a =

ApproxSine(x,r), and assume that the number of such digits is

k, which is

(

). In fact, the number

k can be determined easily, for instance, by a simple

while-do loop in MAPLE as follows:

| > a:=ApproxSine(x,h): |

| > k:=0: |

| > while (abs(a)*10^k-1<0) do |

| > k:=k+1: |

| > end do: |

Then, the second task can be completed by displaying the output of

ApproxSine(x,k + h + 1) in

h significant digits, which is the calling

evalf[h](ApproxSine(x,k + h + 1)). Now, we can make another MAPLE procedure named

Sine to perform the second task when using

ApproxSine as its local variable. The calling sequence

Sine(x,h) returns an approximate value

a of

such that the correct number of significant digits of

a is equal to

h. In the following, we present Algorithm 1 as a pseudo-code algorithm as

| Algorithm 1 |

| Input:x (rational number), r (positive integer) |

| Output: with |

![Mca 23 00037 i001 Mca 23 00037 i001]() |

Similarly, for approximating values of the cosine function, we use the Taylor polynomial of

:

where

and

The integer

is determined by the same way as the one in the previous sections and the index

k satisfies

due to

. Hence, depending on

even or odd,

is approximated by

or

respectively. Then, we have a similar algorithm to evaluate approximate values of

and a corresponding MAPLE procedure

Cosine taking the same arguments

x and

h. In the body of

Cosine,

ApproxSine becomes its local variable

ApproxCosine when the variable

a in

ApproxSine (as

a in Algorithm 1) is assigned to these corresponding values of

.

Cosine also returns approximate values of the cosine function in a given correct number of significant digits. In practice,

becomes

when

takes the position of

p, and again we can check the estimate

. As a convention, we will present all algorithms afterwards in a pseudo–code form and use corresponding MAPLE procedures with names to make calculations. Now, we implement our algorithms with some values of

x and

h, and the results of the calling sequences

Sine(x,r) and

Cosine(x,r) are given in

Table 1.

5. Algorithm for Piecewise Polynomial Approximation

We recall here what is needed for initial settings to the piecewise approximation process. For a given number

, we find an integer

such that the Taylor polynomial

at

,

, can be used to approximate

with the accuracy of

, where

r is a given positive integer. Because we only get rational approximate values of

p, we have found a way (as presented in previous sections) to determine such a value

, remaining the approximation

with the accuracy of

, where

is obtained from

by replacing

p with

. In addition, we have proved that

, or likewise

and

where

. In fact, more exactly, we have

and

for such a chosen

. Moreover, for every

, we can use

to approximate

because of

(note that

). Indeed, we have the estimate

and get the desired inequality when

or more strictly,

. However, this is obviously satisfied because

Therefore, we have the approximation

with the accuracy of

if we replace

r with

. This extension of our pointwise approximating result can be a starting point to construct a piecewise function

F that approximates the sine function on an interval

.

From most parts of Algorithm 1 (only without Step 2), we can derive a simpler algorithm that results in three objects k, and (respectively, , p and a in Algorithm 1) from an input number . For convenience, we will use the name FindPoint of a MAPLE procedure performing such an algorithm with one real argument, so its usage can be given in one of the forms: FindPoint(α) = [k,,], FindPoint(α)[1] = k, FindPoint(α)[2] = and FindPoint(α)[1] = .

Because the sine function is odd, we only need to find the function

F on

with

. In the cases of

and

, we may set

on

and

on

, respectively, where

and

n is determined by Algorithm 1. Therefore, it is sufficient to construct a piecewise approximate function

F on

when

.

On the other hand, from the choice of

in Algorithm 1 to approximate

, we now change it to

This choice is to guarantee the precision for our later construction of

F. Moreover, it is easy to check that if

are numbers such that

=

FindPoint()[2],

=

FindPoint()[2] then we have |α −

| < 0.8 and |β −

| < 0.8.

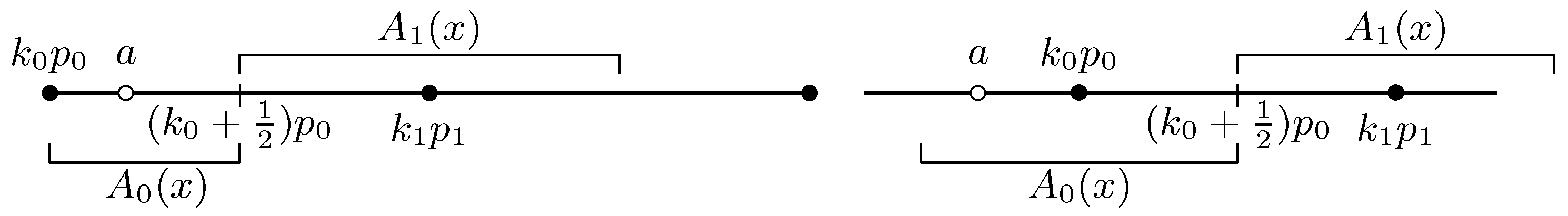

Firstly, we put

and similarly,

The cases of

and

are illustrated in

Figure 2.

Now, we consider the case

(or even

); then, we choose

on

because

as shown above for

. Next, we consider the case when

. We extend the approximation process to determine the next integer by setting

(

k0 +1 )

p0, and also

(

k0 + 1)

p0,

(

k0 +1 )

p0. Because

, by the choice of

, we must have

. Note that, from (

36), we also have

. If

, we then have cases that are illustrated in

Figure 3. It is obvious that we can set

on

, regardless of

or

. However, we will take different settings on the other intervals, depending on where

b is from

. These settings can be given in the following:

If

, we continue to put

In the case of

, we have the following choice for

F according to the different positions of

b from

:

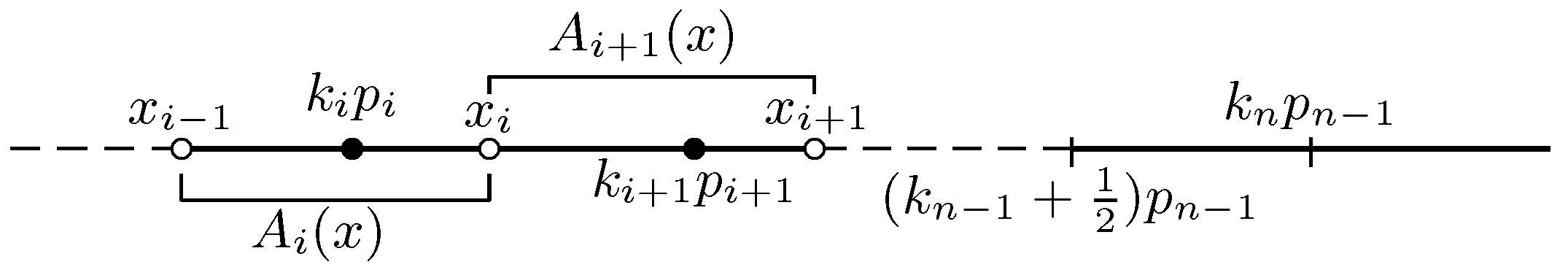

Finally, continuing the above process, we obtain a finite sequence

,

, …,

such that

(

ki−1 + 1)

pi−1,

, and

. Then, we also gain two sequences

(

ki−1 + 1)

pi−1 and

(

ki−1 + 1)

pi−1,

. Before giving our settings for this general case, let us consider ending points of the approximate intervals by taking two consecutive nodes

,

. If we let

, then we have

, so that

To show the assertion of (

39), we refer to (

36) again and take the same arguments as above. The inequality (

39) explains why we can choose

F on

to guarantee the accuracy of

.

In short, by the setting (

36), when

with

(see

Figure 4), we have constructed the piecewise function

F that approximates the sine function on

(

) with the accuracy of

. After getting the finite sequences

,

,

and the function

defined as above, we choose

F as follows:

If

:

If

:

Although steps for construction of F have been presented, we give here an abbreviated algorithm, Algorithm 2, to specifically determine this function.

In Algorithm 2, the approximate Taylor polynomials

are derived from Algorithm 1 and given here as

or

depending on

odd or even. The polynomial

takes a similar expression, where

is replaced with

.

In

Figure 5, the graphs of

F on intervals

with different values of

r are depicted by a MAPLE procedure corresponding to Algorithm 2.

| Algorithm 2 |

| Input:FindPoint (the MAPLE procedure, mentioned above); (real numbers); r (positive integer) |

| Output: on with |

![Mca 23 00037 i002 Mca 23 00037 i002]() |

To obtain a similar algorithm to approximate the cosine function, we just replace the functions

with

or

depending on

even or odd, and

with

Note that the cosine function is even, so we should regulate appropriately the function

F in Algorithm 2.

6. An Application of Numerical Integration

Suppose

is approximated by a polynomial

with the accuracy up to

on an interval

. Then, we will find an estimation of the absolute error for

and

with some natural number

s. One of the possibilities to compute

via

is given in ([

14] Lemma 1.3), but here we use our previously established results as follows. Firstly, we can write

where

and we also have

for all

. Hence, we have that

From this relation, we easily derive the estimate

Now, applying (

28), we obtain

and relying on the inequality ([

15] p. 93), we imply

Using (

44), (

45) and the formulas in [

15], such as

we attain the estimate

Finally, from (

43), (

46) and the formula for the sum of a geometric progression, we derive the following estimate

also due to

.

Thus, we have proven the result stated in the following lemma.

Lemma 2. Assume that is approximated by the polynomial on an interval with the accuracy up to , derived from the Taylor polynomial about , and , by replacing p with its rational approximation , as constructed in the previous sections. Then, ifis approximated bythe error has the following upper bound Because the upper bound of

in (

47) only depends on

r,

s, except the integral on the right side, we then easily imply the following theorem.

Theorem 2. Suppose an interval can be expressed as a finite union and is approximated by the polynomials on the intervals , , with the accuracy up to , where and . Then, if Q is an arbitrary polynomial andis approximated bythe error has the following upper bound Based on Algorithm 2, we have established a procedure, say

AppIntSin, to approximately compute the integral given in Theorem 2 with the desired accuracy.

AppIntSin’s arguments may take input values in order as for

a,

b,

r,

s and

Q, and we can even choose the value for

r to get the result satisfying a given tolerance. For instance, to have the estimate

, a positive integral value for

r may be chosen as to satisf

due to

and

.

Let us consider the following example. Find the approximate value of

with the accuracy up to

. We first derive

from (

49) with the settings

,

,

and

. Performing Algorithm 2 with

,

and

, we determine

,

,

and find the appropriate case (

41). Then, the calling

AppIntSin() gives us the desired approximation to

I, first put in symbolic form as

and then in numerical value

7. Conclusions

Motivated by the powerfulness and popular use of modern computer algebra systems in terms of its ease in programming and accessibility to the value of with a very high accuracy, simple and easily-implemented algorithms have been designed to approximate the trigonometric functions with an arbitrary accuracy by a means of Taylor expansion. We have provided a careful analysis of the proposed approach with numerical illustrations. Nevertheless, our initial intention is not to compare or surpass other well-developed and well-established existing algorithms in the research literature; rather, we emphasize on the simplification of our methods as a computational application of CAS for which we highlight below:

Approximating values of the trigonometric functions by Taylor polynomials with an arbitrary accuracy, taking the form of , .

Using a so-called spreading technique to switch the process of pointwise approximating to that of piecewise approximating all over an arbitrary interval.

Performing only arithmetics on very small values of finite rational numbers.

Only using one command of CAS to access approximate values of .

Our approximation method in Algorithm 2, utilizing the achievements of computing values of Pi with more and more exact significant digits and the power of current computer algebra systems, presents the special partition of an interval with nodes ’s, where and is an approximate value of . This is a great advantage for local approximation to values of the sine (or cosine) function f on each definite interval because ’s are all finite rational numbers. These nodes also play a role as adjoining centers of approximation, whereas the functional coefficients still take their normal values at .

Our procedure, corresponding to Algorithm 1, gives not only numerical results of great precision but also a remedy for mistakes in the use of some existing mathematical software programs when displaying wrong results with a small number n of significant digits (e.g., ). In such cases, the argument x’s to approximate are commonly close to ’s, .

In addition, Theorem 2 can be applied to look for desired estimates to the best -approximation of the sine function in the vector space of polynomials of degree at most ℓ, a subspace of .