Moving Multiscale Modelling to the Edge: Benchmarking and Load Optimization for Cellular Automata on Low Power Microcomputers

Abstract

1. Introduction

2. State of the Art

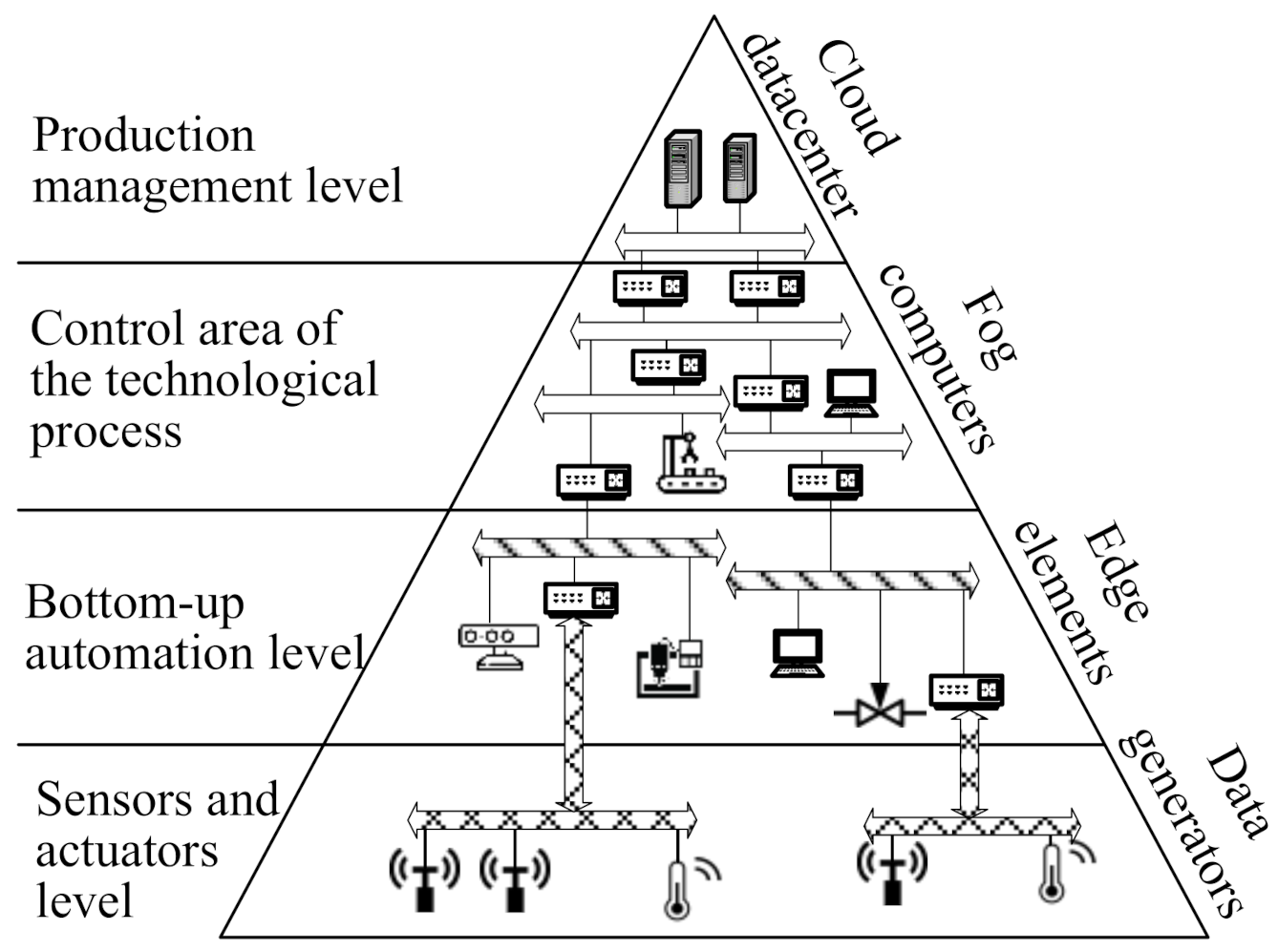

2.1. Computational Problems in Edge Architectures

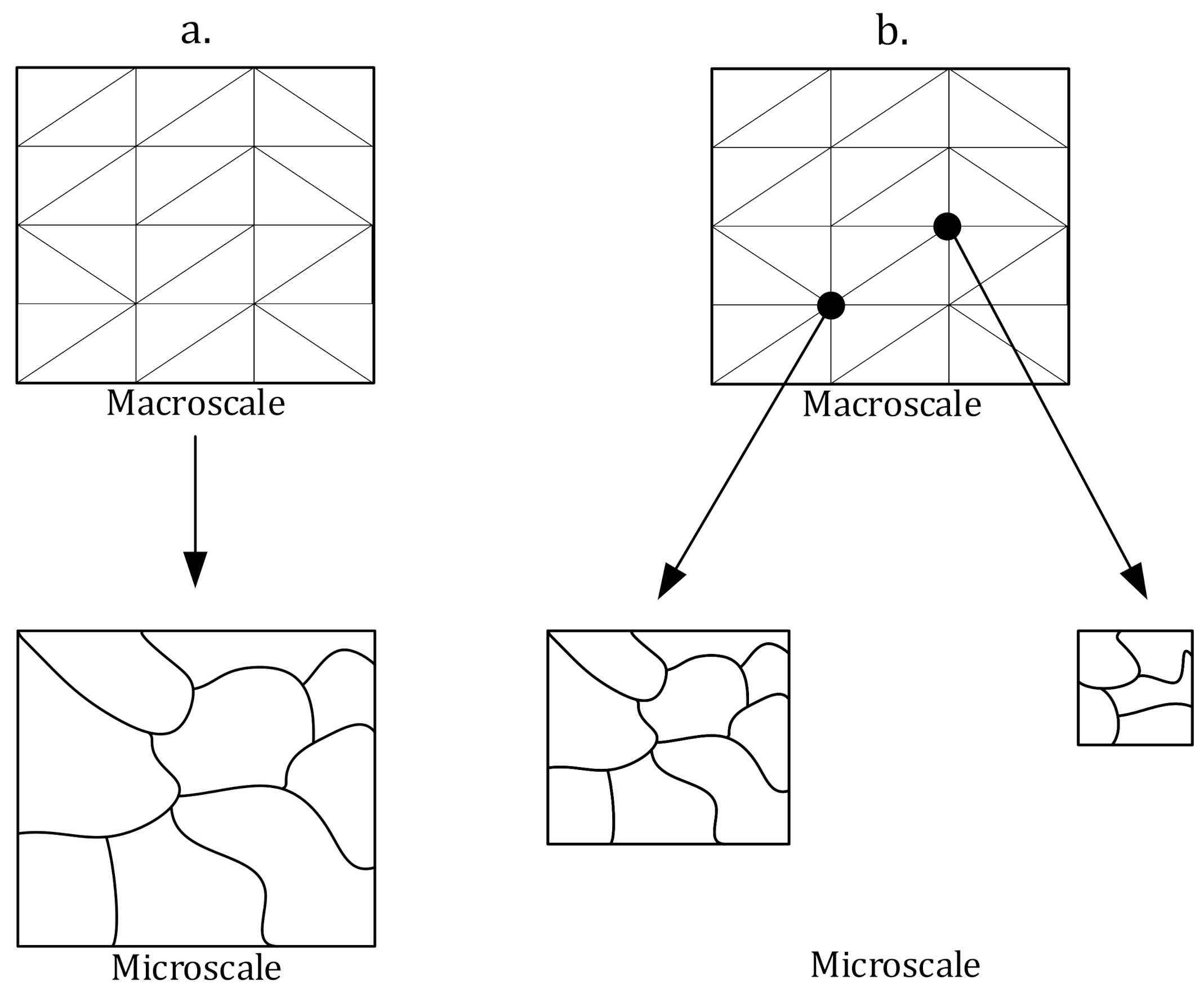

2.2. Multiscale Modelling vs. Edge Architectures

- Full-field models, in which the same computational domain is used at both the macro and the micro scale;

- Mean-field models, in which a single integration point at the macro scale provides the input for micro-scale computations.

2.3. Our Contribution

3. Benchmarking

3.1. Definition of the Tasks Being Solved

- Periodic: cells on the opposite edge of the grid are treated as neighbors of a cell located at the grid boundary;

- Absorbing: all cells outside the grid are assumed to be empty (dead).

Algorithm 1. Cell’s state transition rule using the von Neumann neighborhood and the absorbing boundary condition.

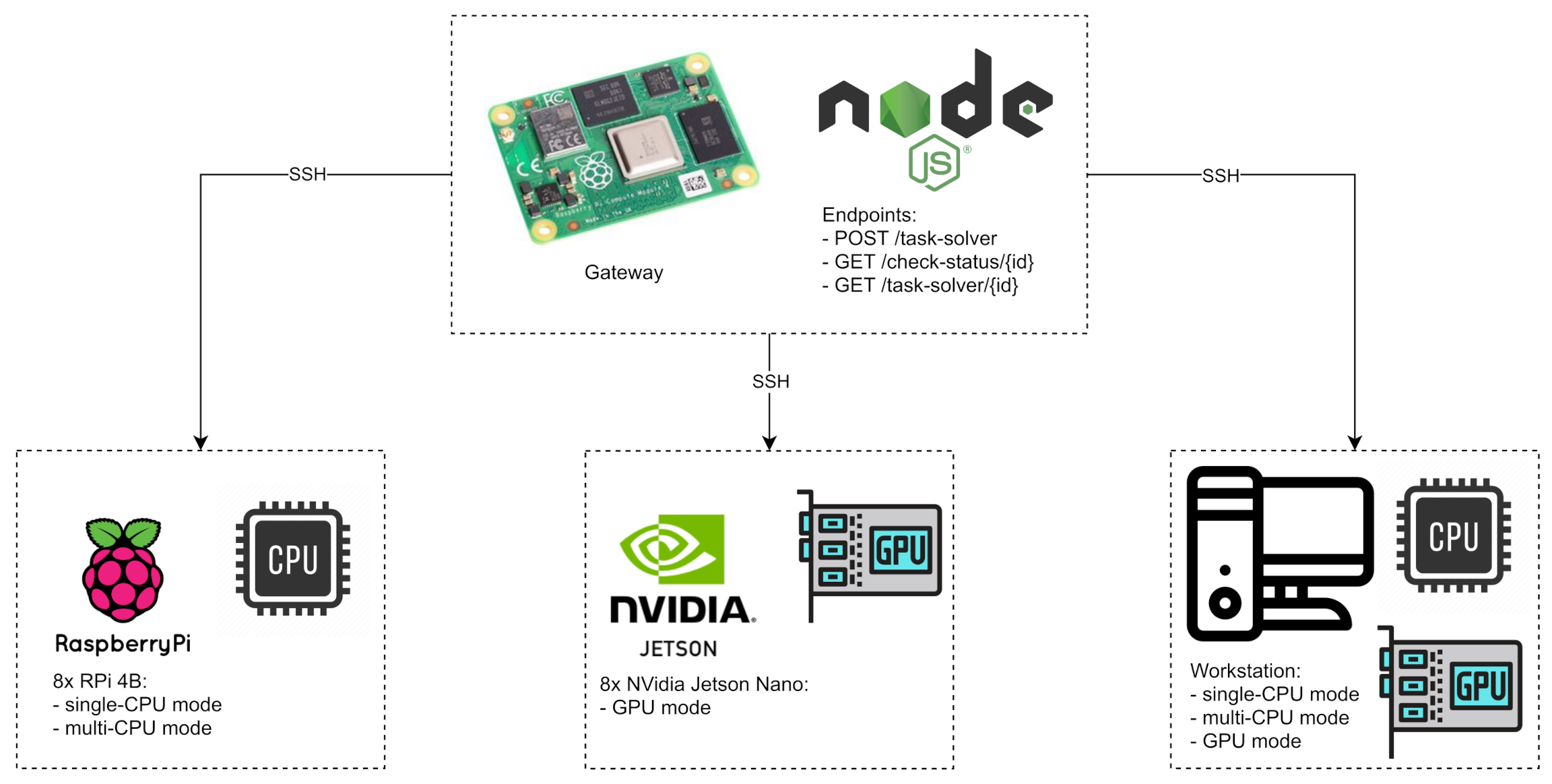

3.2. Architecture of the Edge System

- RPi4B has no CUDA-capable GPU, so GPU mode is not available on this device. A combination of single-CPU and multi-CPU modes could be considered, but no significant benefit should be expected, since only four cores are available;

- Jetson’s memory is shared between the CPU and the GPU. Both units can be used simultaneously, but this has two drawbacks. First, GPU computing pays off only for large amounts of data. In that case, the 2 GB of memory becomes the limiting factor, and a similar task cannot be executed on the CPU at the same time. Second, regardless of the task class, CUDA computations require at least one CPU core to run the main application (host operations). Only three cores then remain for CPU computations, which makes the simultaneous use of both units inefficient.

- POST /task-solver: schedules computations on the infrastructure. The CA domain size is given as an argument, and the response contains the unique id of the requested task;

- GET /check-status/{id}: checks the status of computations. It returns one of four states: does-not-exist, in-queue, computing, completed;

- GET /task-solver/{id}: returns the results of computations if they have been completed; otherwise, it returns an error.

- Sequential: written in plain C only;

- Parallel on CPU: implemented with the OpenMP library, which allows the program to run on multiple cores by means of #pragma preprocessor directives;

- Parallel on GPU: implemented with CUDA 10.1, as higher versions are not supported on the Jetson platform. The main execution unit is a kernel, a single launch of which computes the state transition for one full iteration of the CA.

3.3. Benchmarking Methodology

- —computation time for parallel processing (multiple cores involved);

- —computation time for sequential processing (single core involved);

- —two dimensional domain size.

3.3.1. Single-CPU Mode

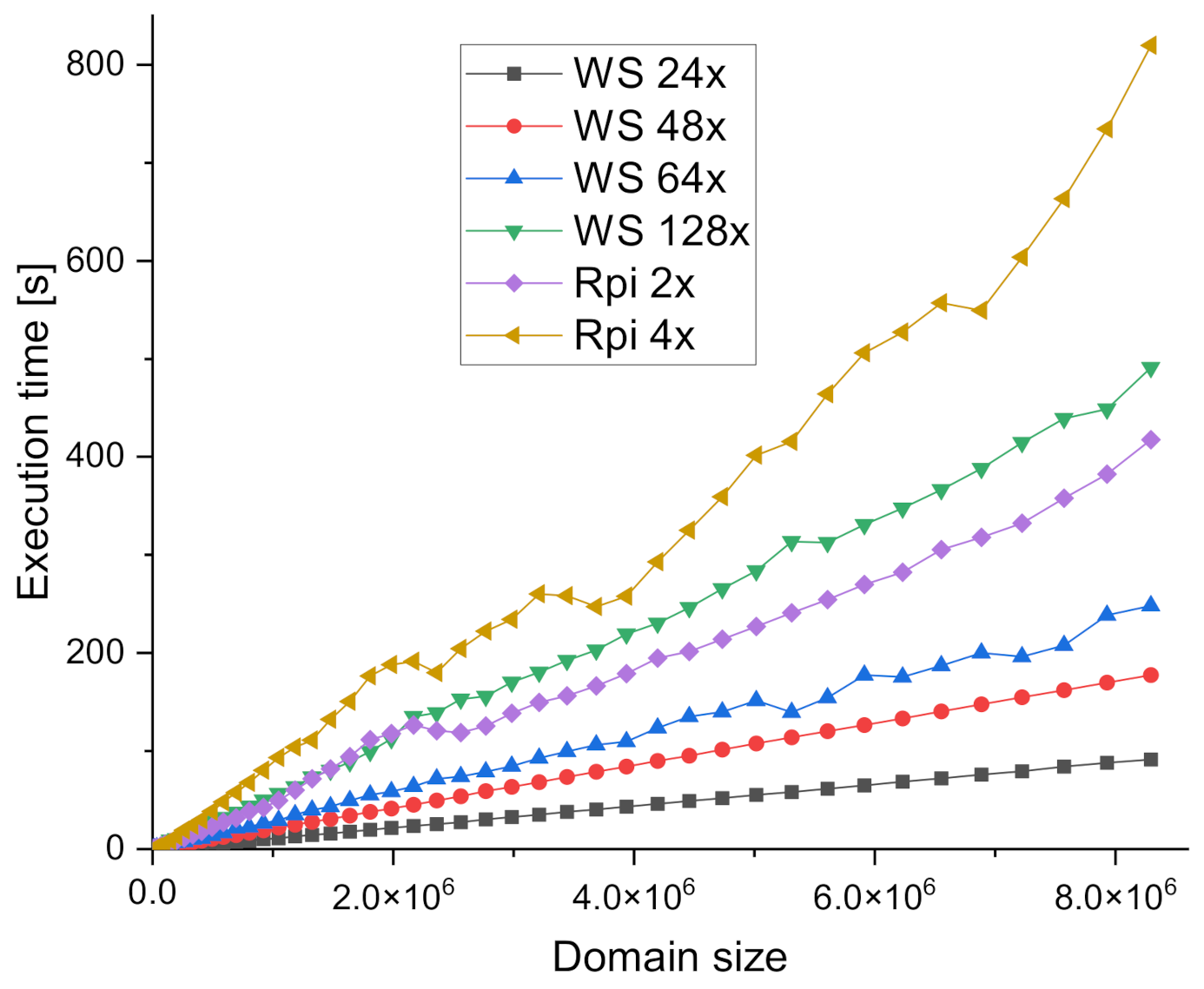

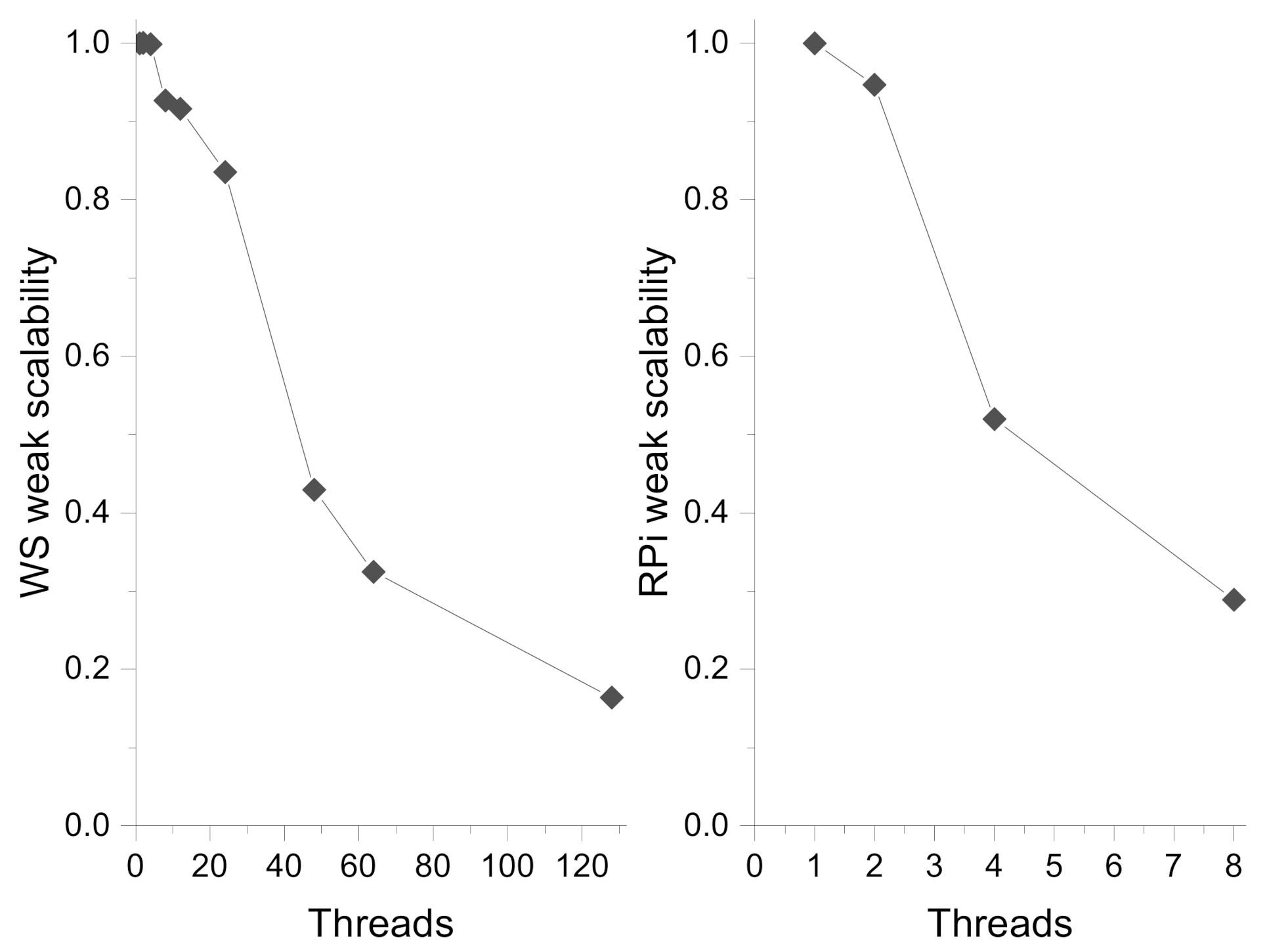

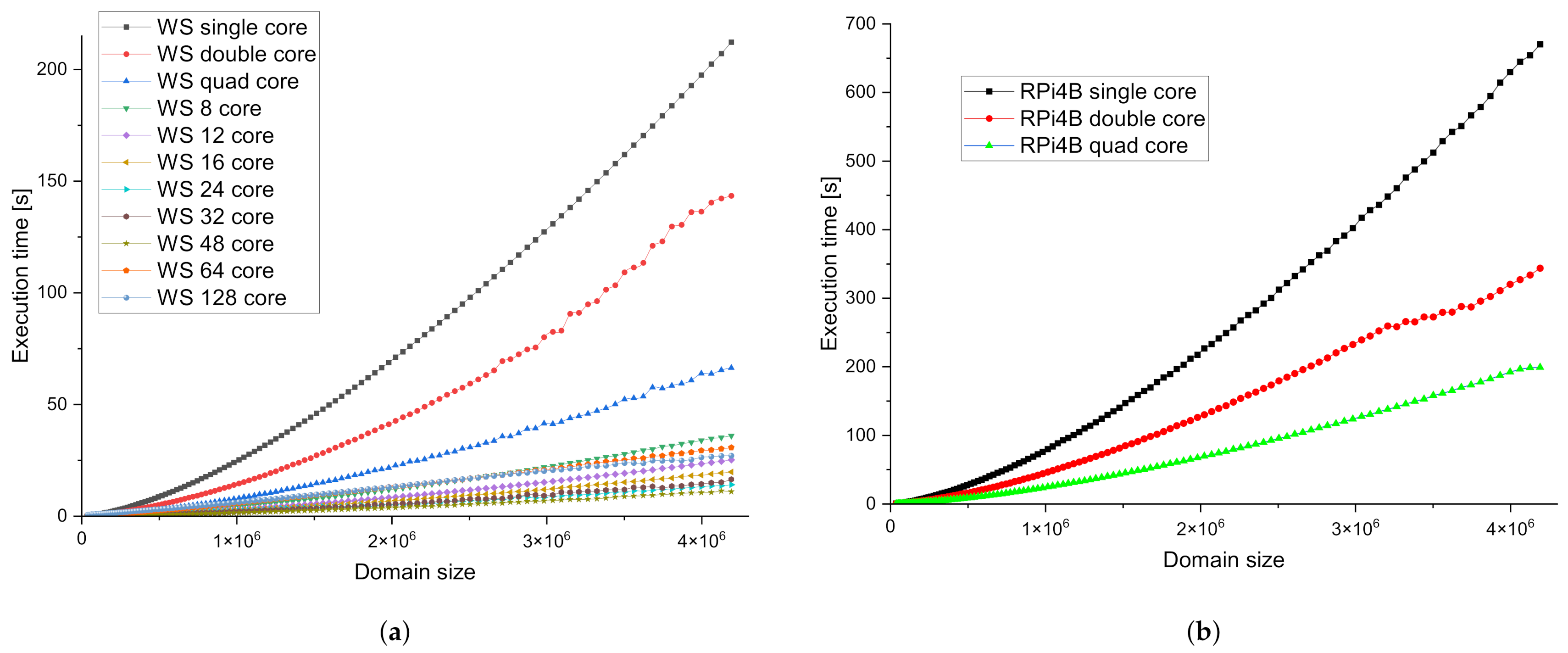

3.3.2. Multi-CPU Mode

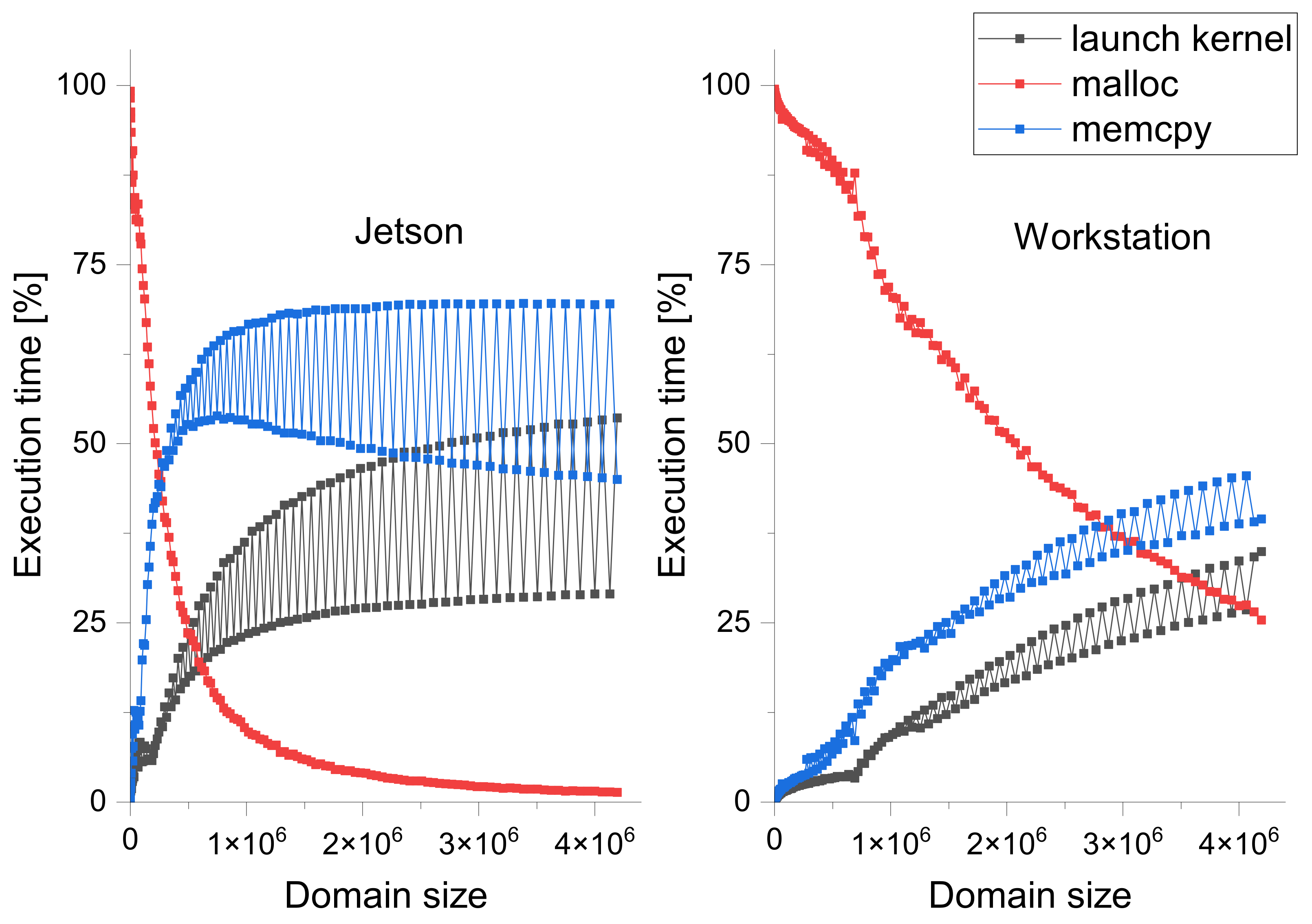

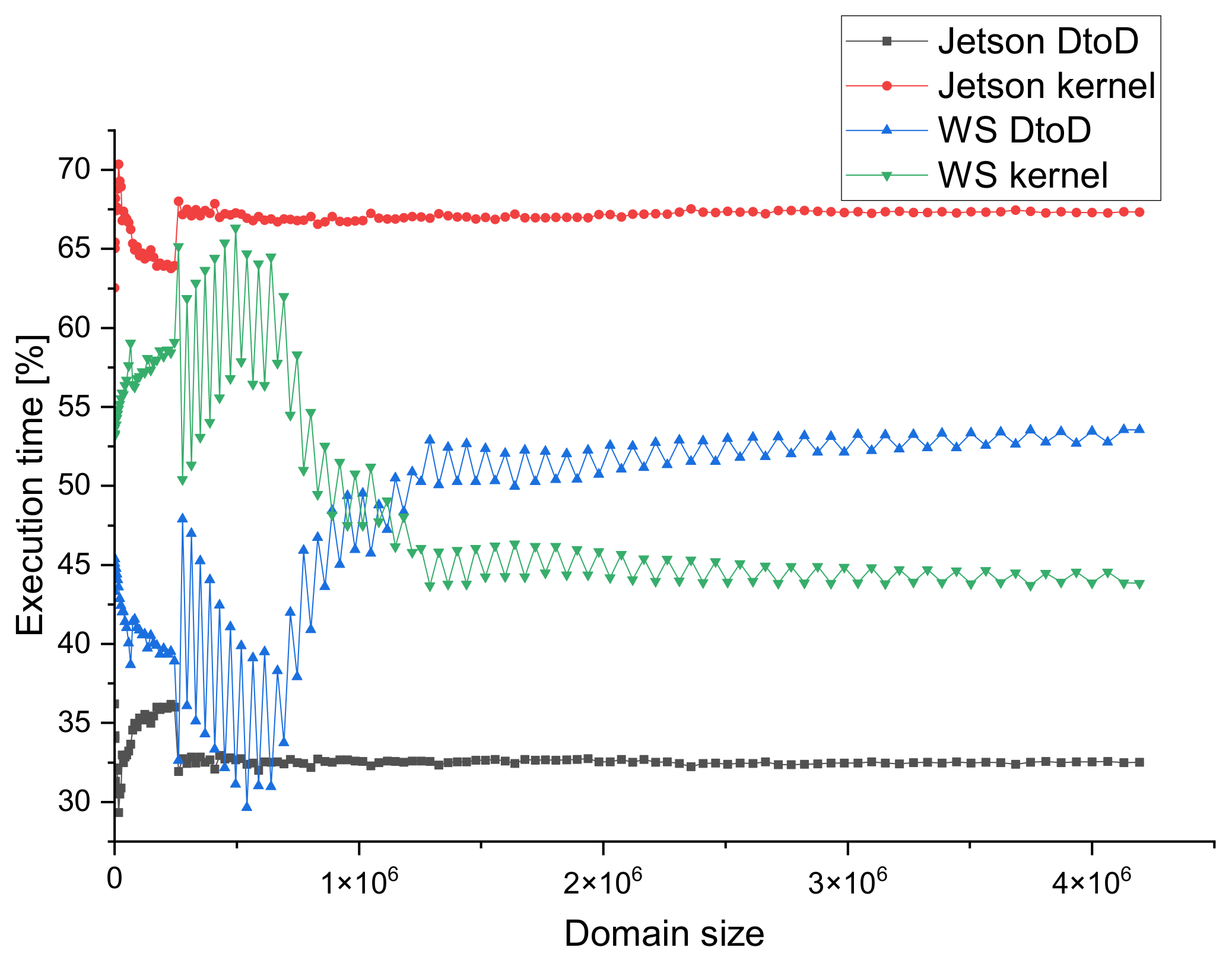

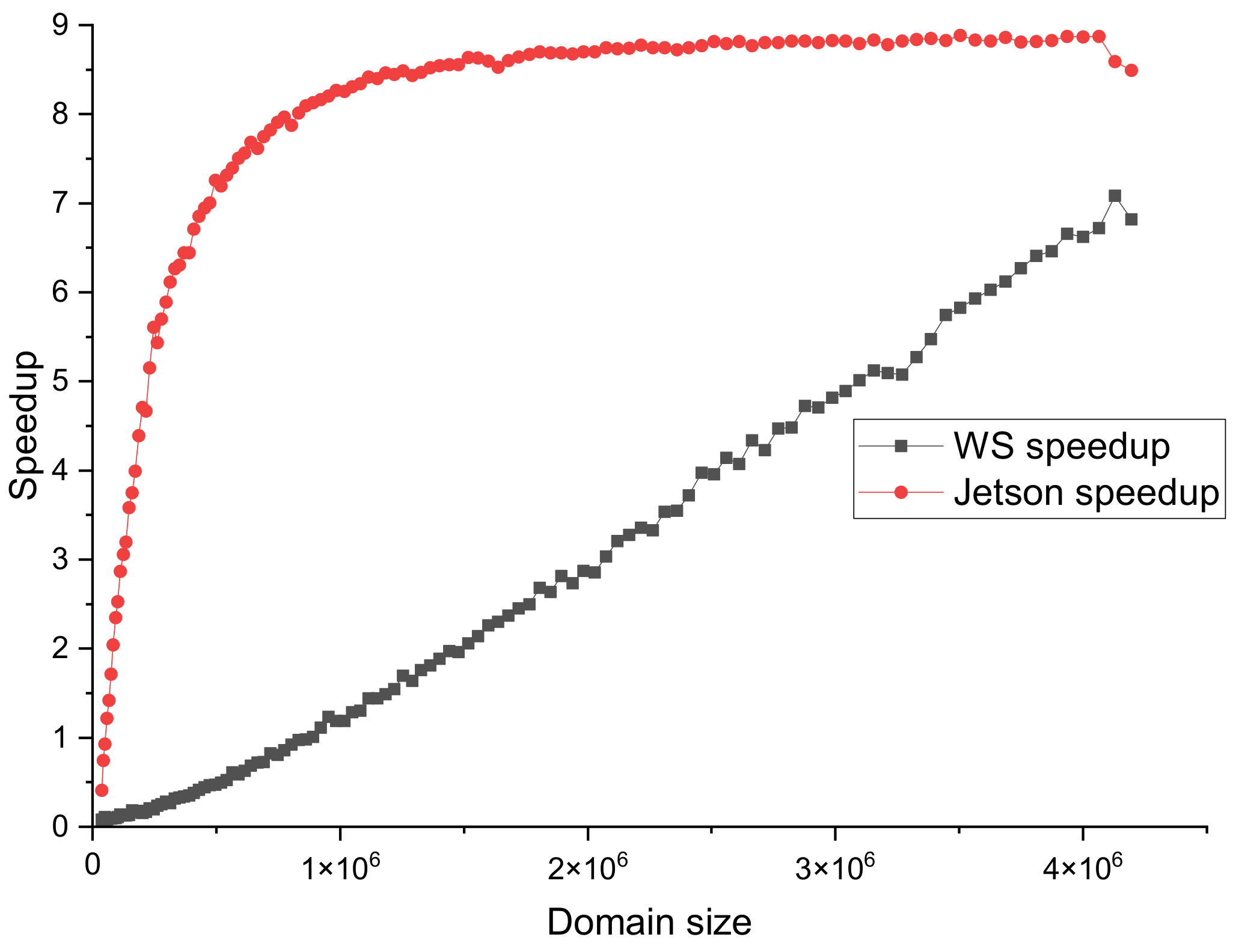

3.3.3. GPU Mode

3.3.4. Energy Consumption

4. Load Distribution Metamodel

5. Results, Tests, and Validation

- Multi-CPU mode with WS and RPi4B;

- GPU mode with WS and Jetson;

- Multi-CPU + GPU modes with RPi4B and Jetson;

- Multi-CPU + GPU modes with all devices.

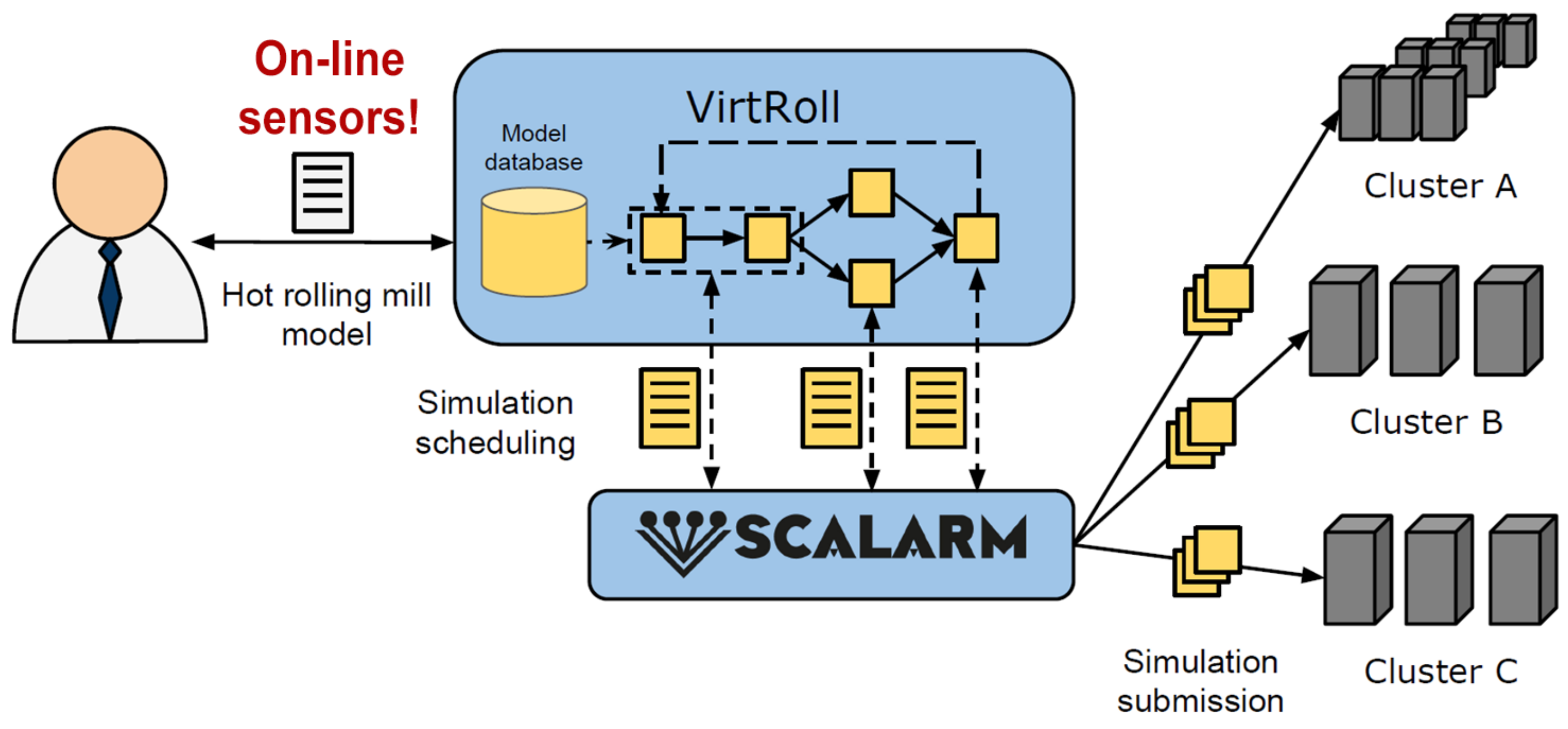

6. Proposition of Industrial Application

7. Conclusions and Further Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Parameter | Workstation | RaspberryPi 4B | NVidia Jetson Nano 2 GB | RaspberryPi CM4 |
|---|---|---|---|---|
| CPU | x86, 2× Intel Xeon Silver 4214 CPU 2.20 GHz | ARM v8, Broadcom BCM2711, Cortex-A72 1.5 GHz | ARM Cortex-A57 1.43 GHz | ARM v8, Broadcom BCM2711, Cortex-A72 1.5 GHz |
| No. cores | 12 physical, 24 logical per socket | 4 physical | 4 physical | 4 physical |
| RAM | 12 × 16 GB (192 GB total) DDR4 with ECC | 4 GB LPDDR4 | 2 GB LPDDR4 | 4 GB LPDDR4 with on-die ECC |
| GPU | NVidia Turing, RTX2080ti 11 GB, 4352 CUDA cores, clock: 1350 MHz | None | NVidia Maxwell, 128 CUDA cores, clock: 640 MHz | None |
| NIC | 2 × 10 Gb Ethernet | 1 Gb Ethernet | 1 Gb Ethernet | 2 × 1 Gb Ethernet |
| OS | Ubuntu Server 20.04 x64 | Ubuntu Server 20.04 aarch64 | Ubuntu 18.04 aarch64, dedicated | Ubuntu Server 20.04 aarch64 |
| Other | | | | |

| Device | Idle [W] | Min Load [W] | Medium Load [W] | Max Load [W] | Peak [W] |
|---|---|---|---|---|---|
| RPi4B | 3.5 | 4.4 | 5.0 | 6.2 | 6.4 |
| Jetson | 1.1 | 2.4 | 4.5 | 7.6 | 8.4 |
| RTX2080ti | 29 | 65 | 92 | 186 | 210 |
| WS CPU | 137.0 | 182.0 | 248.5 | 291.3 | 304.2 |
| WS CPU + GPU | 137.0 | 258.1 | 366.0 | 488.6 | 532.3 |

| Scenario No. | Time [s] | Power [W] |
|---|---|---|
| 1 | 507.5 | 431 |
| 2 | 76.8 | 306 |
| 3 | 158.0 | 108 |
| 4 | 36.2 | 482 |


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hajder, P.; Rauch, Ł. Moving Multiscale Modelling to the Edge: Benchmarking and Load Optimization for Cellular Automata on Low Power Microcomputers. Processes 2021, 9, 2225. https://doi.org/10.3390/pr9122225
