R-CNN-Based Large-Scale Object-Defect Inspection System for Laser Cutting in the Automotive Industry

Abstract

1. Introduction

2. Theoretical Background

- Extract features from the image with a Feature Extraction Network (FEN) consisting of convolutional layers, Batch Normalization (BN) layers, and pooling layers.

- Generate high-quality region proposals with the Region Proposal Network (RPN).

- Create a feature map for each proposal.

- Apply RoI pooling to bring each proposal to a fixed size.

- Classify each proposal and refine its boundaries with the classification layers and the bounding-box regression layers.

- Map the results back onto the original image.

- Resize the image with bilinear interpolation.

- Pad the image to 1024 × 1024 so that it matches the input size of the backbone network.

- Generate feature maps at each layer with ResNet-101.

- Generate additional feature maps from the previously generated feature maps with the Feature Pyramid Network (FPN).

- Apply the RPN to each final feature map to obtain the classification and bounding-box regression outputs.

- Produce anchor boxes by projecting the bounding-box regression values from the output onto the original image.

- Apply non-maximum suppression (NMS) to the generated anchor boxes, deleting all overlapping boxes except the one with the highest score.

- Bring anchor boxes of different sizes to a common size with RoI alignment.

- Pass the aligned anchor boxes to the mask branch as well as to the classification and bounding-box regression branches of Fast R-CNN.
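
The non-maximum suppression step in the list above can be illustrated with a minimal pure-Python sketch. It is not the implementation used in this work: the `(x1, y1, x2, y2)` box format, the function names, and the 0.5 IoU threshold are assumptions chosen for illustration. The sketch shows the common greedy variant, which keeps the highest-scoring box within each group of overlapping boxes.

```python
from typing import List, Sequence, Tuple

# Assumed box format: (x1, y1, x2, y2) in pixels, with x2 > x1 and y2 > y1.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(
    boxes: Sequence[Box],
    scores: Sequence[float],
    iou_threshold: float = 0.5,  # hypothetical value, not taken from the paper
) -> List[int]:
    """Return indices of the boxes that survive greedy NMS, best score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap too much with a better one.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


if __name__ == "__main__":
    candidate_boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
    candidate_scores = [0.9, 0.8, 0.7]
    print(non_max_suppression(candidate_boxes, candidate_scores))
```

In the usage example, the second box overlaps the first with an IoU of about 0.68, so only the first and third boxes are kept.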

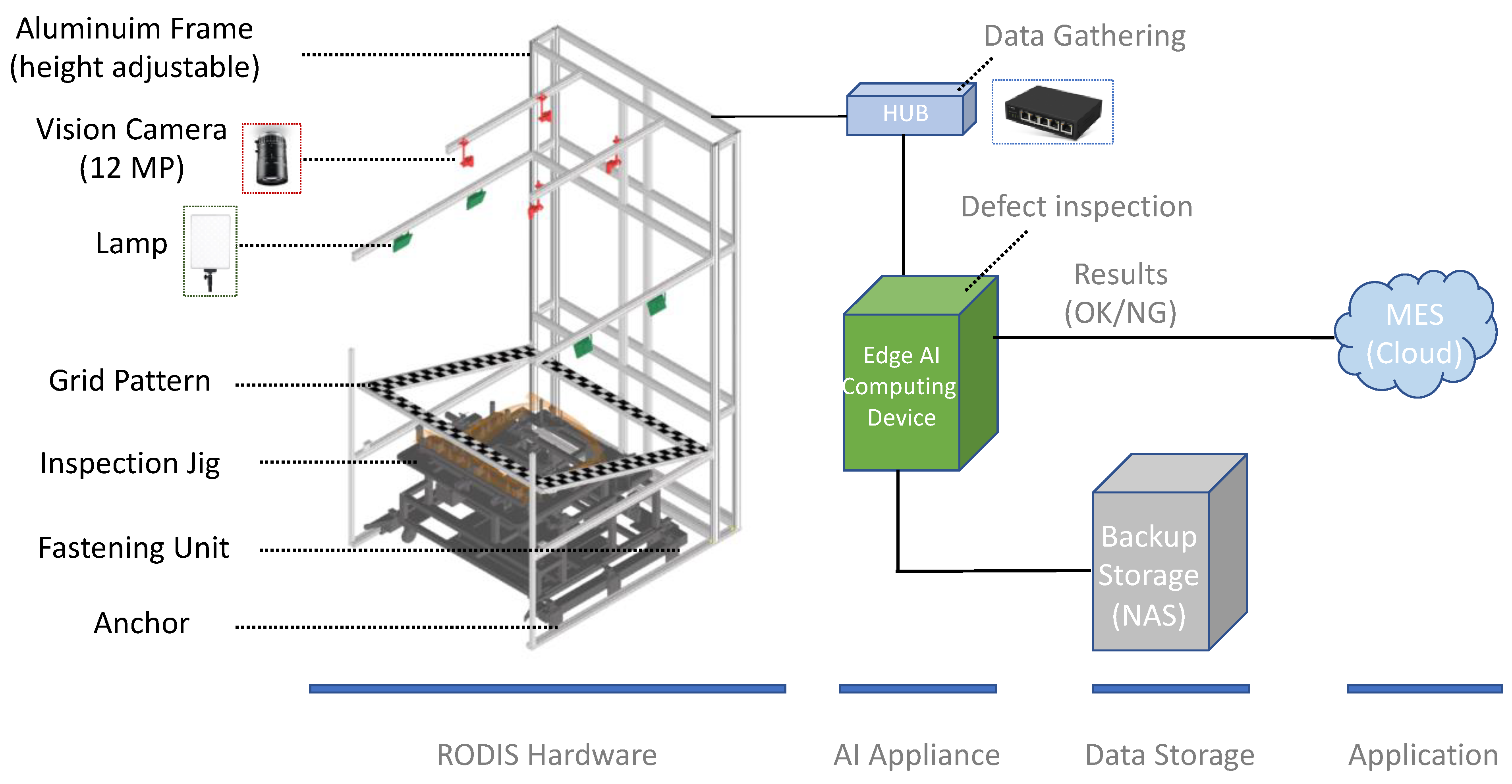

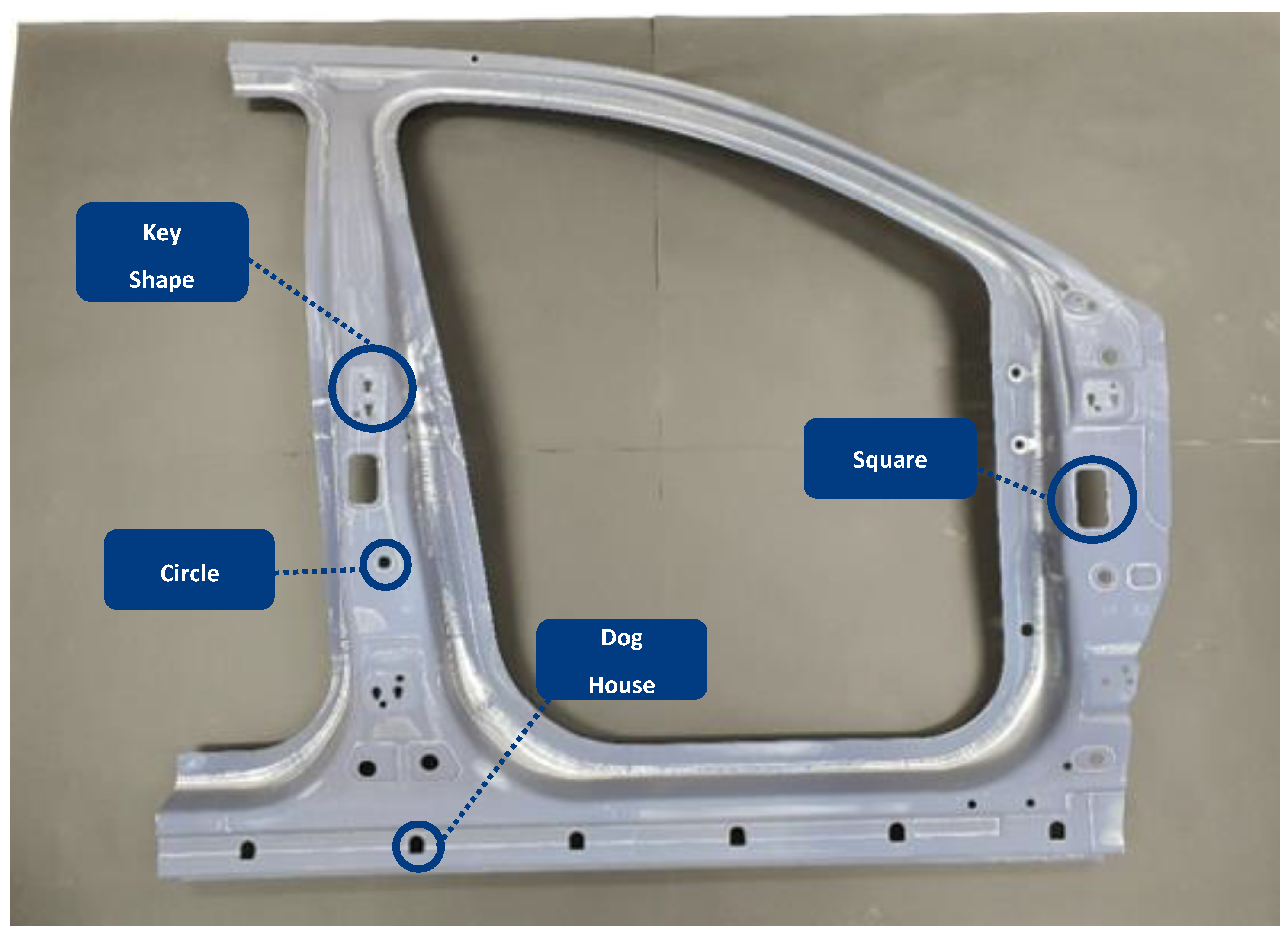

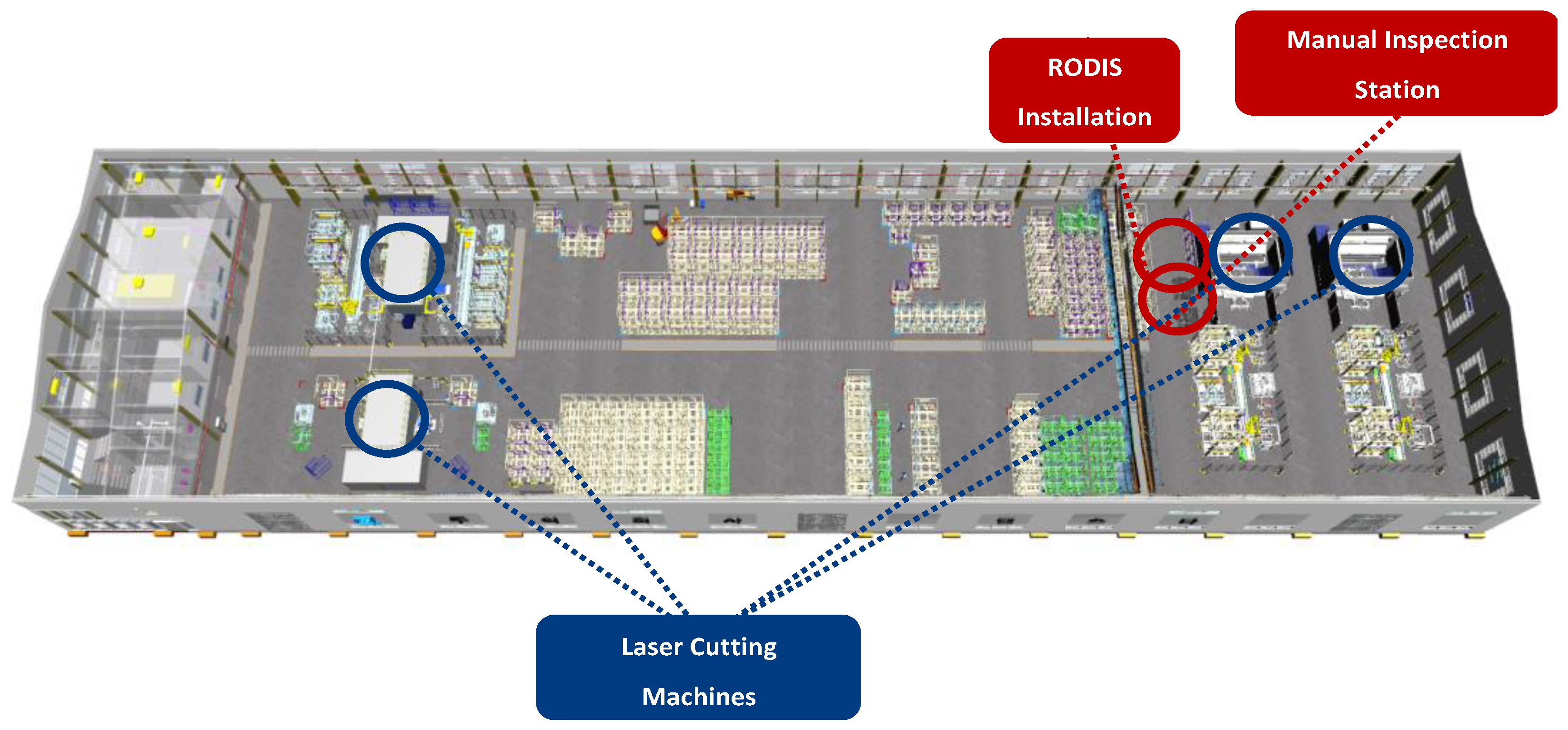

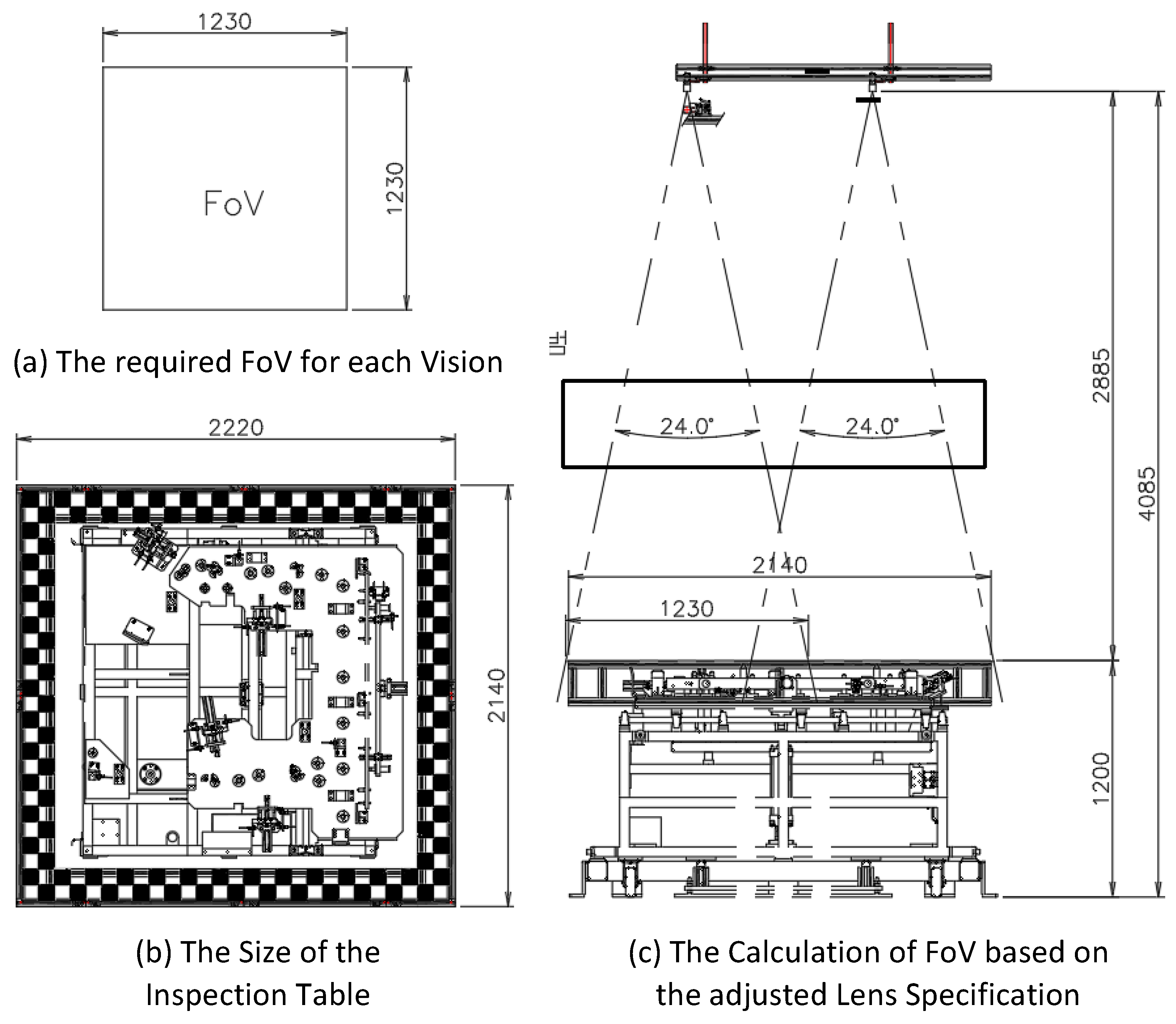

3. RODIS: R-CNN-Based Large-Scale Object-Defect Inspection System

3.1. System Framework

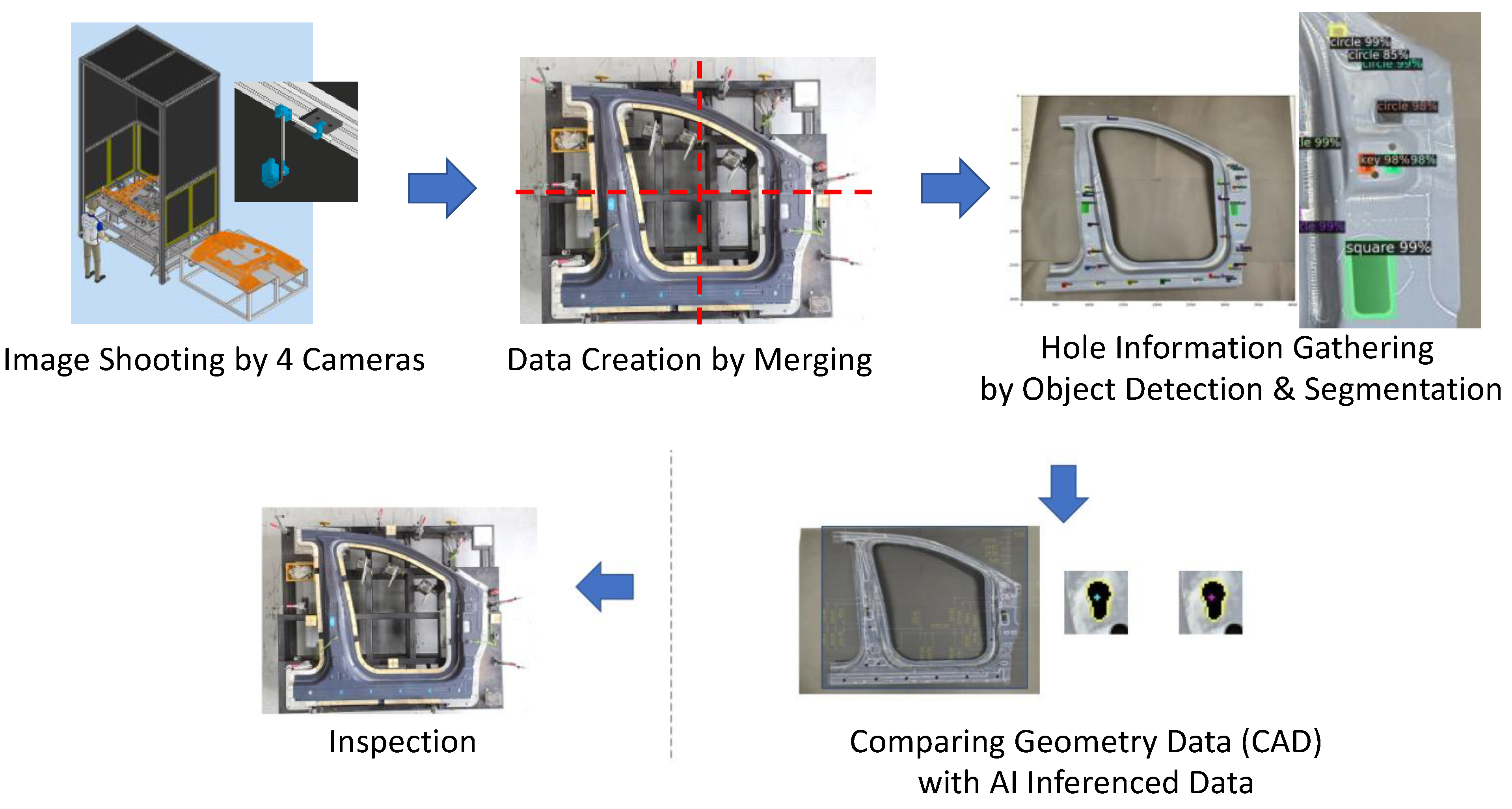

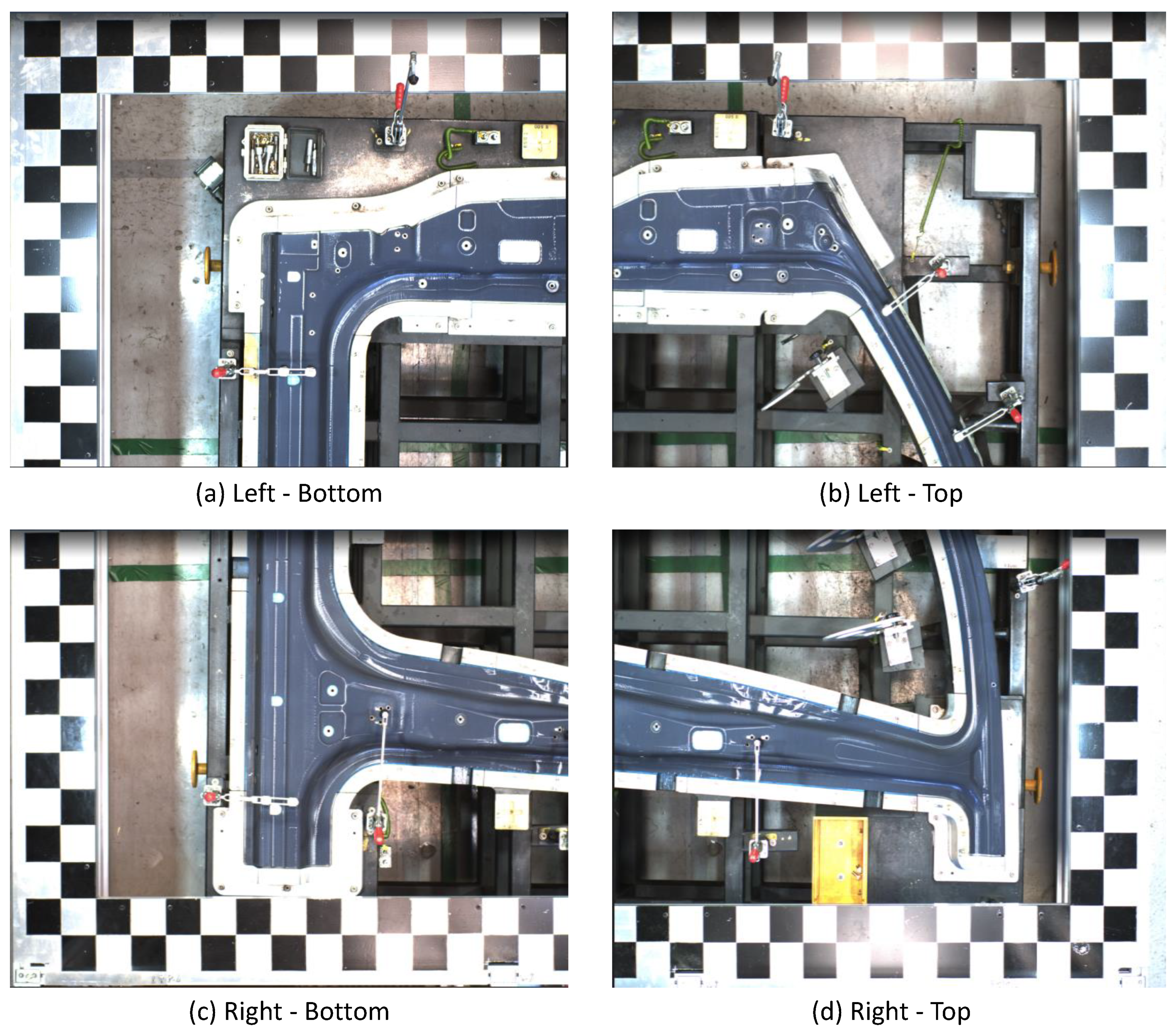

3.2. Inspection Workflow

- Capture the side-outer with the four high-resolution cameras;

- Generate the data by merging the split images;

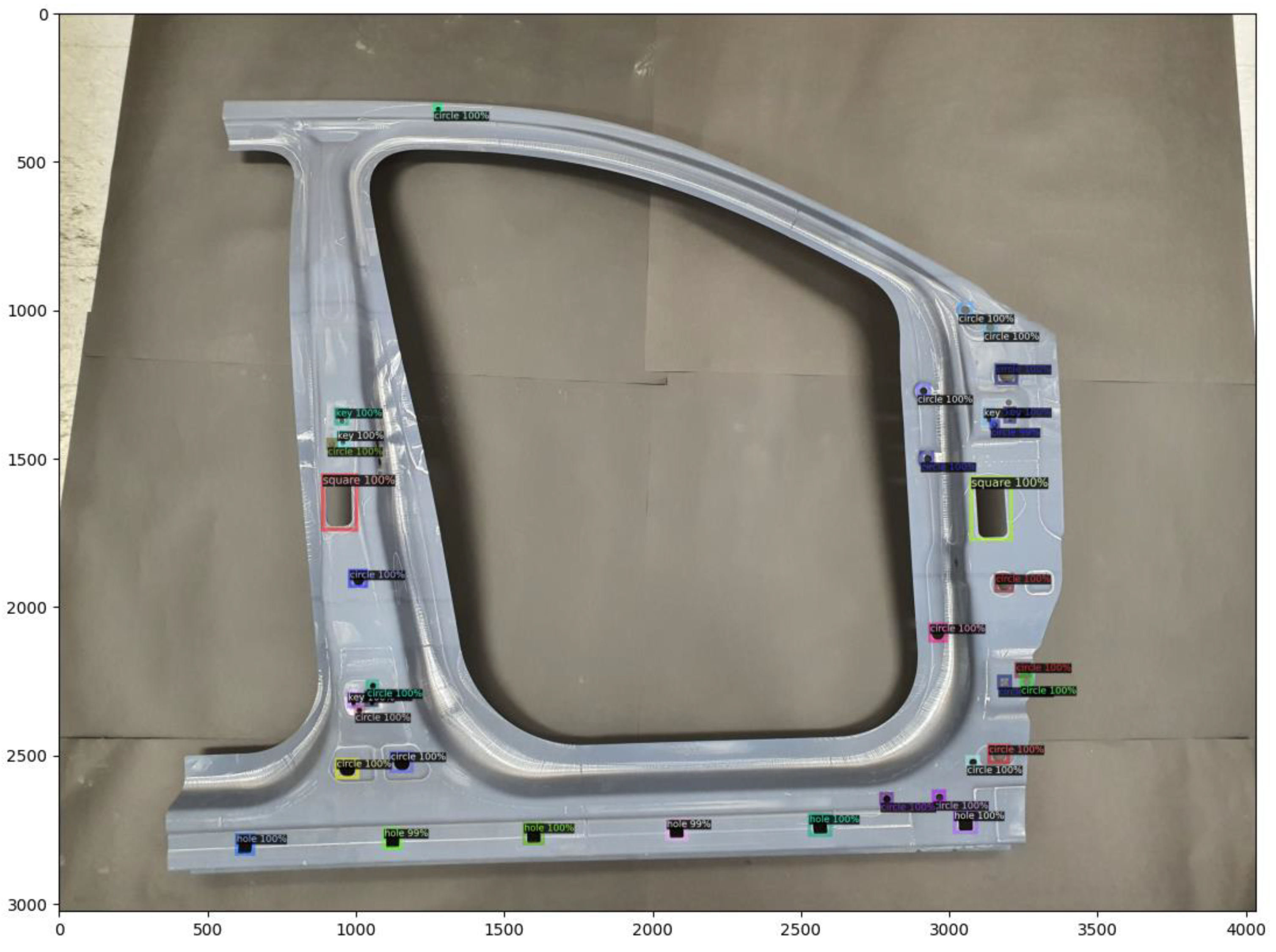

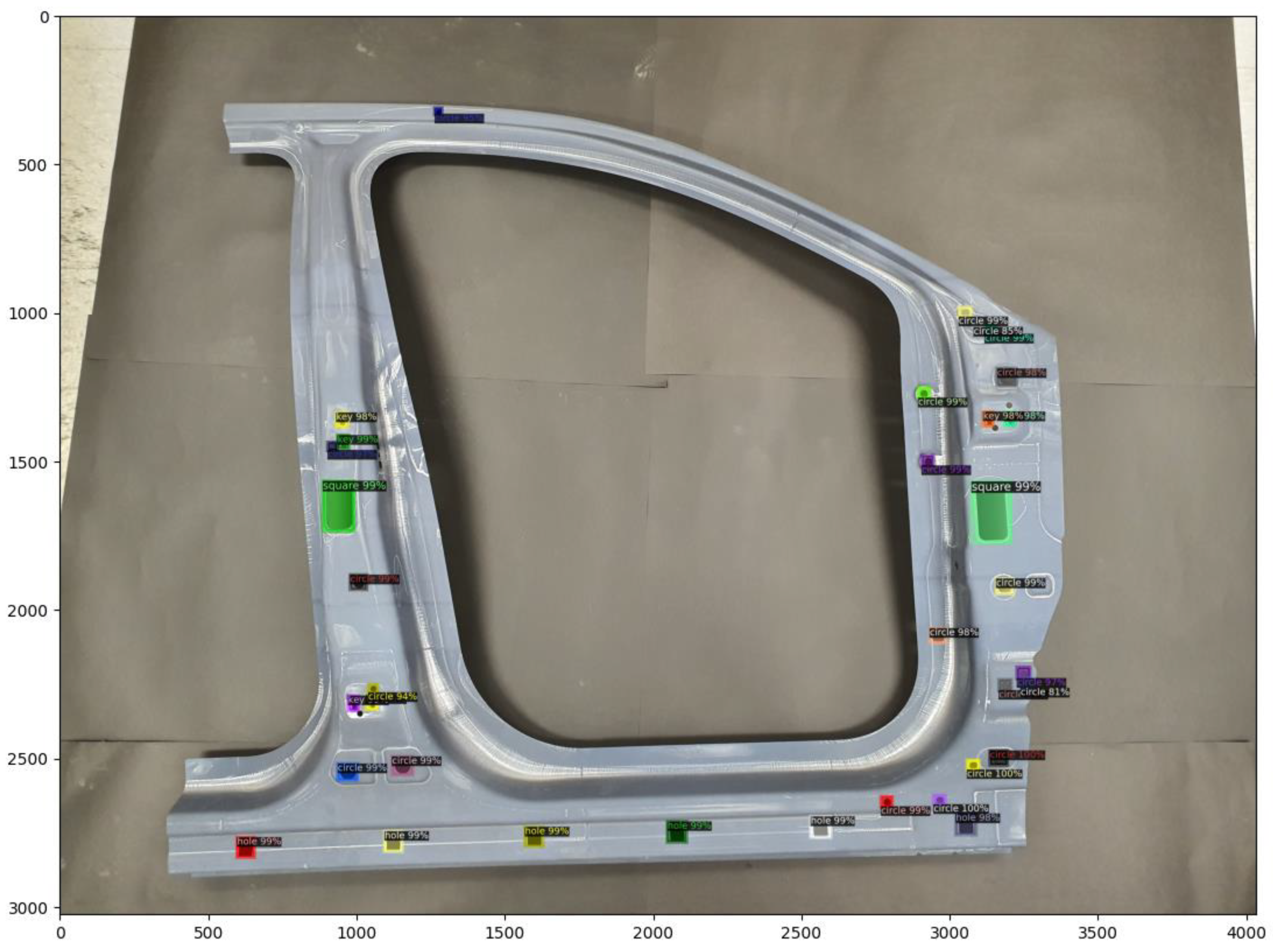

- Detect objects (processed holes) in the acquired images by means of AI technology to obtain detailed geometric information;

- Compare the information obtained by the object detection with the 3D CAD data and calculate the error;

- Inspect the part based on the error range.
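
The last two steps of the workflow above (error calculation against the CAD data, then inspection based on the error range) can be sketched as follows. This is an illustrative sketch only: the `Hole` record, the millimetre units, the choice of position and diameter as the compared quantities, and the tolerance values are all assumptions and are not taken from the paper.

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class Hole:
    """Hypothetical geometric description of one processed hole."""
    x_mm: float
    y_mm: float
    diameter_mm: float


def inspect_hole(
    measured: Hole,
    nominal: Hole,
    position_tolerance_mm: float,
    diameter_tolerance_mm: float,
) -> dict:
    """Compare a detected hole with its CAD nominal and apply the error range."""
    position_error = hypot(measured.x_mm - nominal.x_mm,
                           measured.y_mm - nominal.y_mm)
    diameter_error = abs(measured.diameter_mm - nominal.diameter_mm)
    return {
        "position_error_mm": position_error,
        "diameter_error_mm": diameter_error,
        "ok": (position_error <= position_tolerance_mm
               and diameter_error <= diameter_tolerance_mm),
    }


if __name__ == "__main__":
    cad_hole = Hole(x_mm=100.0, y_mm=50.0, diameter_mm=10.0)
    detected_hole = Hole(x_mm=100.3, y_mm=50.4, diameter_mm=10.2)
    # Tolerances below are placeholders for illustration.
    print(inspect_hole(detected_hole, cad_hole,
                       position_tolerance_mm=1.0,
                       diameter_tolerance_mm=0.5))
```

Keeping the two error values in the result, rather than only the pass/fail flag, makes it possible to log how close each hole is to the edge of its error range.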

4. Experimental Setup

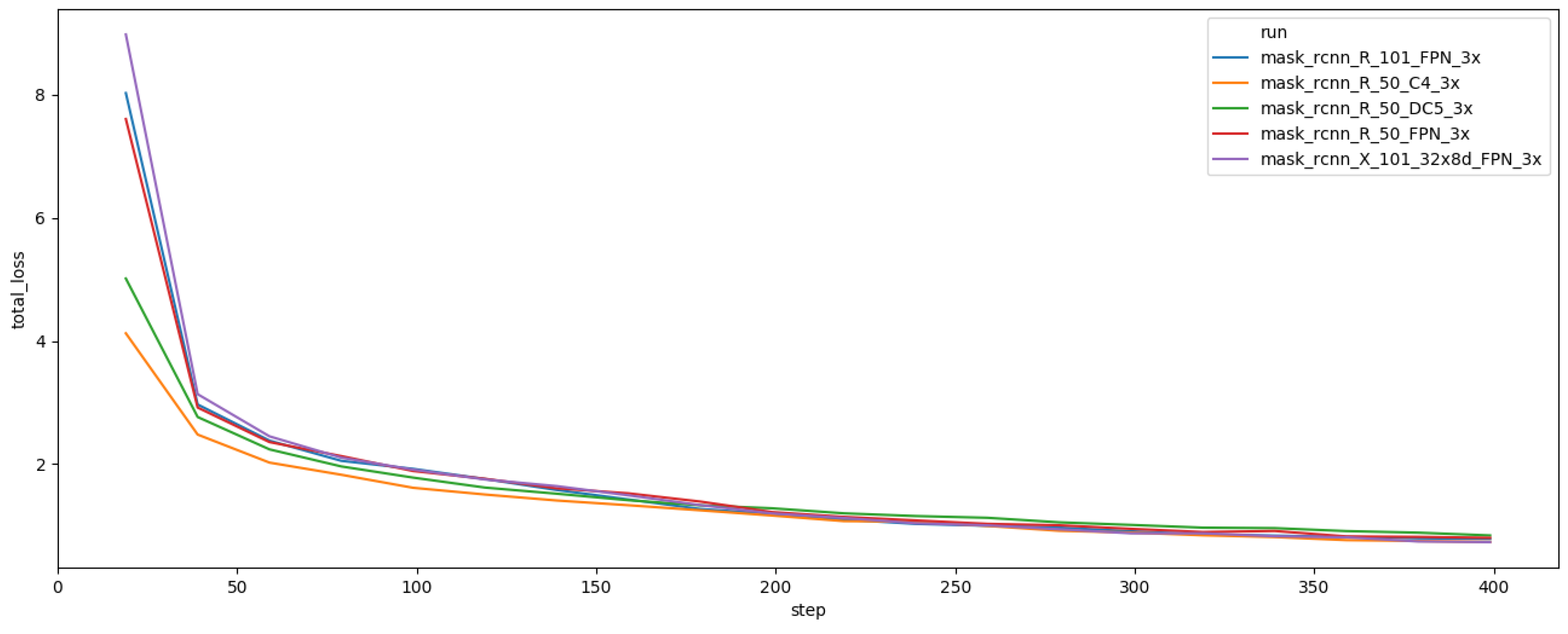

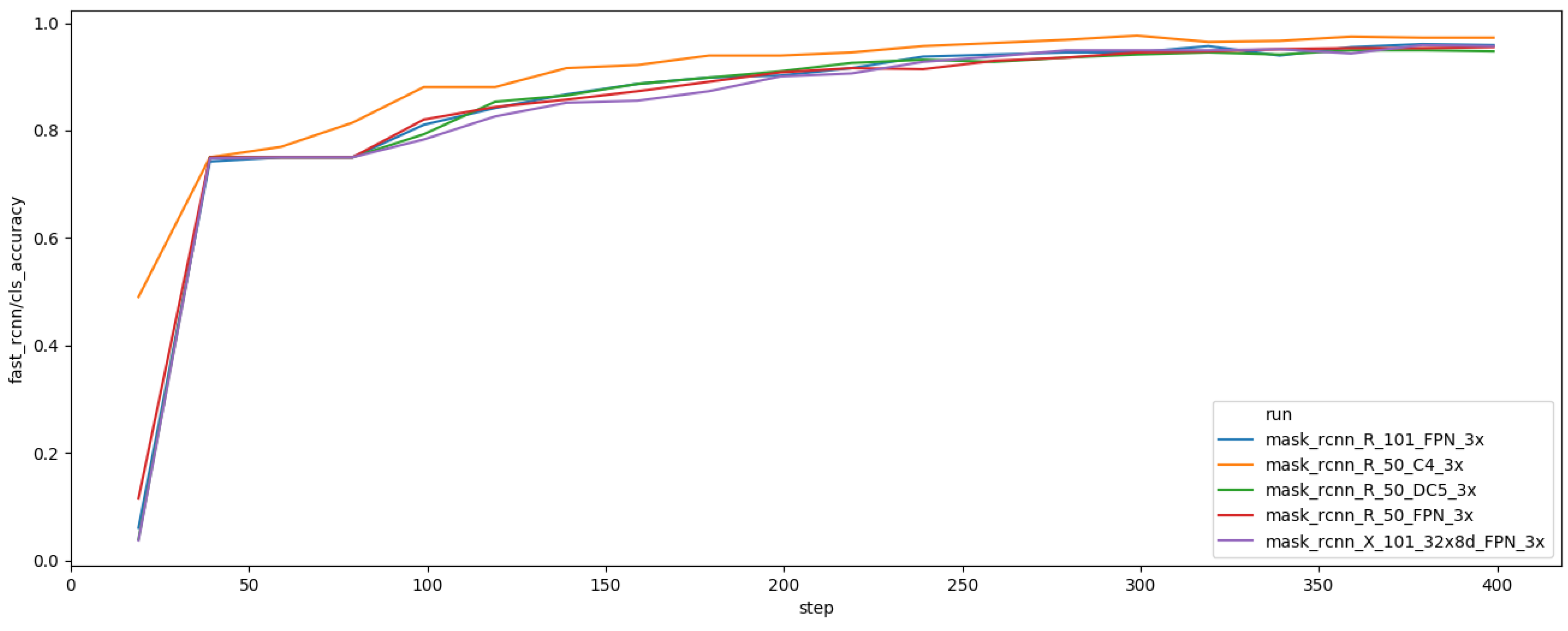

5. Experiment and Results

5.1. Implementation

5.2. Results

6. Discussions

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Information | Data Type |
|---|---|
| Area | Int [Scalar] |
| Coordinate | Int [Vector] |
| Category_ID | Int [Scalar] |
| Color | String [Scalar] |
| Height | Int [Scalar] |
| ID | Int [Scalar] |
| Image_ID | Int [Scalar] |
| Bounding Box | Boolean [Scalar] |
| Width | Int [Scalar] |
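
As a rough illustration of the annotation fields in the table above, one record could be represented as a typed structure. The class name, the lowercase field names, and the example values are hypothetical; only the field list and data types follow the table.

```python
from dataclasses import asdict, dataclass
from typing import List


@dataclass
class HoleAnnotation:
    """One annotation record; fields and types mirror the table above."""
    area: int
    coordinate: List[int]   # Int [Vector]
    category_id: int
    color: str
    height: int
    id: int
    image_id: int
    bounding_box: bool      # listed as Boolean [Scalar] in the table
    width: int


if __name__ == "__main__":
    # All values below are made up for illustration.
    example = HoleAnnotation(
        area=400,
        coordinate=[10, 10, 30, 10, 30, 30, 10, 30],
        category_id=1,
        color="#ff0000",
        height=3000,
        id=1,
        image_id=1,
        bounding_box=True,
        width=4096,
    )
    print(asdict(example))
```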

| Method | Data Type | Input |
|---|---|---|
| ResizeShortestEdge | Int [Vector] | [640, 672, 704, 736, 768, 800] |
| RandomFlip | Boolean | True |
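
The effect of the two augmentation methods in the table above can be sketched in plain Python without any deep-learning framework. This is a simplified illustration, not the library code: it only computes the output size and a flip decision, the 0.5 flip probability is an assumption, and any cap on the longer edge is omitted.

```python
import random
from typing import Tuple

# Candidate short-edge lengths, taken from the table above.
SHORT_EDGE_CHOICES = (640, 672, 704, 736, 768, 800)


def resize_shortest_edge(width: int, height: int, short_edge: int) -> Tuple[int, int]:
    """Scale (width, height) so the shorter side equals short_edge, keeping the aspect ratio."""
    scale = short_edge / min(width, height)
    return round(width * scale), round(height * scale)


def sample_augmentation(width: int, height: int, rng: random.Random) -> Tuple[int, int, bool]:
    """Pick a random short-edge length and decide whether to flip the image."""
    short_edge = rng.choice(SHORT_EDGE_CHOICES)
    new_width, new_height = resize_shortest_edge(width, height, short_edge)
    flipped = rng.random() < 0.5  # assumed flip probability
    return new_width, new_height, flipped


if __name__ == "__main__":
    rng = random.Random(0)
    print(resize_shortest_edge(4096, 3000, 800))
    print(sample_augmentation(4096, 3000, rng))
```

Sampling the short edge from several values exposes the network to the same holes at slightly different scales during training.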

| Specification | Tri120s | Tri122s |
|---|---|---|
| Frame rate | 10 FPS | 9.7 FPS |
| Shutter Type | Global | Rolling |
| Resolution (h × v) | 4096 × 3000 px | 4024 × 3036 px |
| Resolution | 12.3 MP | 12.2 MP |
| Pixel Size (h × v) | 3.45 μm × 3.45 μm | 1.85 μm × 1.85 μm |
| Sensor Size | 17.6 mm | 9.33 mm |
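
The resolution and sensor-size rows in the table above are related to the pixel counts and pixel size by simple arithmetic, which the following sketch reproduces. It assumes that the "Sensor Size" row refers to the sensor diagonal; the function names are illustrative.

```python
from math import hypot


def megapixels(width_px: int, height_px: int) -> float:
    """Total pixel count in megapixels."""
    return width_px * height_px / 1e6


def sensor_diagonal_mm(width_px: int, height_px: int, pixel_size_um: float) -> float:
    """Sensor diagonal in mm, assuming square pixels of the given size."""
    return hypot(width_px, height_px) * pixel_size_um / 1000.0


if __name__ == "__main__":
    cameras = {
        "Tri120s": (4096, 3000, 3.45),
        "Tri122s": (4024, 3036, 1.85),
    }
    for name, (w, h, pixel_um) in cameras.items():
        print(f"{name}: {megapixels(w, h):.1f} MP, "
              f"diagonal {sensor_diagonal_mm(w, h, pixel_um):.2f} mm")
```

Under this assumption the computed values are about 12.3 MP and 17.5 mm for the Tri120s and about 12.2 MP and 9.33 mm for the Tri122s, which is close to the tabulated figures.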

| Specification | V1228-MPY2 | AZURE-1623ML12M |
|---|---|---|
| Focal Length | 12 mm | 16 mm |
| Angle of View (1.1 type, d × h × v) | 71.1° × 60.5° × 46.2° | 54.39° × 44.56° × 34.45° |
| Angle of View (2/3 type, d × h × v) | 48.6° × 39.6° × 30.1° | 35.67° × 28.85° × 21.83° |
| Distortion | <0.5% | <0.4% |

| Labeled Name | Baseline | Feature Extraction | Dataset Size |
|---|---|---|---|
| mask_rcnn_R_101_FPN_3x | ResNet-101 | FPN | 37 epochs |
| mask_rcnn_R_50_C4_3x | ResNet-50 | Conv4 * | 37 epochs |
| mask_rcnn_R_50_DC5_3x | ResNet-50 | Conv5 ** | 37 epochs |
| mask_rcnn_R_50_FPN_3x | ResNet-50 | FPN | 37 epochs |
| mask_rcnn_X_101_32x8d_FPN_3x | ResNeXt-101 | FPN | 37 epochs |

| Backbone Network | AP(BBox) 1 | AP(Mask) 2 |
|---|---|---|
| ResNet-50-FPN | 71.6339 | 86.2131 |
| ResNet-50-C4 | 58.9034 | 64.0677 |
| ResNet-50-DC5 | 64.3613 | 66.3074 |
| ResNet-101-FPN | 71.8172 | 84.8889 |
| ResNeXt-101-FPN | 70.4178 | 85.4494 |


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Im, D.; Jeong, J. R-CNN-Based Large-Scale Object-Defect Inspection System for Laser Cutting in the Automotive Industry. Processes 2021, 9, 2043. https://doi.org/10.3390/pr9112043